Abstract

Although numerous observational studies have associated underfeeding with poor outcome, recent randomized controlled trials (RCTs) have shown that early full nutritional support does not benefit critically ill patients and may induce dose-dependent harm. Some researchers have suggested that the absence of benefit in RCTs may be attributed to overrepresentation of patients deemed at low nutritional risk, or to an amino acid dose that was too low relative to the non-protein energy dose in the nutritional formula. However, these hypotheses have not been confirmed by strong evidence. RCTs have not revealed any subgroup benefiting from early full nutritional support, nor any benefit from increased amino acid doses or from indirect calorimetry-based energy dosing targeted at 100% of energy expenditure. Mechanistic studies have attributed the absence of benefit of early feeding to anabolic resistance and futile catabolism of the extra amino acids provided, and to feeding-induced suppression of recovery-enhancing pathways such as autophagy and ketogenesis, which has opened perspectives for fasting-mimicking diets and ketone supplementation. Yet, the presence or absence of an anabolic response to feeding cannot be predicted or monitored and likely differs over time and among patients. In the absence of such a monitor, the value of indirect calorimetry remains unclear, especially in the acute phase of illness. Until now, large feeding RCTs have focused on interventions that were initiated in the first week of critical illness. No large RCTs have investigated the impact of different feeding strategies initiated after the acute phase and continued after discharge from the intensive care unit in patients recovering from critical illness.

Background

In critically ill patients, severe physical stress induces a catabolic response, leading to muscle wasting and weakness [1]. The longer the stay in the intensive care unit (ICU), the higher the risk of weakness, and the poorer the outcome [1, 2]. Indeed, severe weakness may preclude weaning from mechanical ventilation and may cause life-threatening complications through difficulty coughing up secretions and swallowing dysfunction, among others [1]. In patients surviving critical illness, persistent weakness is also considered part of the post-intensive care syndrome [3,4,5]. Apart from weakness, increased bone resorption may also occur, with increased fracture risk after intensive care [5,6,7].

To counteract catabolism, nutrition has been advocated, since prolonged underfeeding could contribute to catabolism [8]. Moreover, some patients already have sarcopenia and a prolonged period of low nutritional intake before ICU admission. Numerous observational studies have associated increased nutritional intake with improved outcome of critically ill patients [9, 10]. Yet, a causal relationship cannot be derived from such associations, since feeding tolerance is closely associated with illness severity, with feeding generally being better tolerated by patients who are less ill. Hence, in observational studies, there is an inherent risk of residual confounding. Until a decade ago, in the absence of large randomized controlled trials (RCTs), European experts advocated avoiding any caloric or protein deficit in critically ill patients and starting artificial feeding early, especially in patients considered to be at high nutritional risk [8]. Since then, however, several large RCTs have shown that early full feeding did not benefit adult and pediatric critically ill patients, and some even showed harm [11,12,13,14,15]. These results, counterintuitive at first sight, indicate that critical illness-associated catabolism is much more complex than merely a consequence of underfeeding, and that anorexia and temporary starvation may to some extent be an adaptive component of the stress response to severe illness. Here, we summarize the RCT evidence and review potential mechanisms explaining the negative results of recent feeding RCTs, which will hopefully guide future research and may ultimately lead to individualization of feeding.

The impact of early full feeding in critical illness: evidence from recent feeding RCTs

As shown in a recent meta-analysis, no large-scale RCT in critically ill patients found benefit from early full feeding as compared to more restrictive feeding regimens [16]. Two RCTs, one in adults and one in children, even found significant harm from early supplementation of insufficient or contraindicated enteral nutrition with parenteral nutrition. Indeed, in both the adult EPaNIC (N = 4640) and pediatric PEPaNIC (N = 1440) RCTs, providing early supplemental parenteral nutrition prolonged ICU dependency, with increased dependency on vital organ support and a higher incidence of new infections as compared to withholding supplemental parenteral nutrition until one week after ICU admission [11, 12]. In adults, early supplemental parenteral nutrition further increased the incidence of ICU-acquired weakness and hampered recovery from it [17]. In theory, harm from early supplemental parenteral nutrition could be explained by an increased nutritional dose, or by an inferior feeding route. However, 2 large RCTs in adults, CALORIES (N = 2400) and Nutrirea-2 (N = 2410), showed no harm from parenteral nutrition when provided at doses isocaloric to enteral nutrition [18, 19], suggesting that harm from early supplemental parenteral nutrition in the EPaNIC and PEPaNIC RCTs is explained by the higher nutritional dose, rather than by the intravenous route. Moreover, the large-scale EDEN (N = 1000), PermiT (N = 894) and TARGET (N = 3957) RCTs, which compared early full enteral nutrition with lower-dose enteral nutrition in critically ill adults for 6, 14 and 28 days in ICU, respectively, did not find benefit with higher nutritional doses [13,14,15]. Two of these RCTs found more gastrointestinal intolerance with early full enteral nutrition [13, 15]. Also in long-term follow-up, providing early enhanced nutrition was not beneficial with regard to functional outcome [20,21,22,23,24].
Of note, the EPaNIC and PEPaNIC RCTs showing harm by early enhanced feeding had the highest relative difference in caloric intake between the 2 study groups, which enhances the statistical power to detect a treatment effect [25].

Based on this recent high-level evidence, the most recent European feeding guidelines for adult critically ill patients shifted from promoting early full feeding to less aggressive artificial feeding in the first week of critical illness [26]. However, it is important to note that the shift toward providing less feeding in the acute phase should not increase the risk of refeeding syndrome, which is caused by a deficiency in micronutrients and electrolytes, including vitamin B1, potassium and phosphate [27]. Indeed, when artificial feeding is restarted after a prolonged period of starvation, the metabolic need for and intracellular transport of several micronutrients and electrolytes increase, which may unmask preexisting deficiencies and lead to life-threatening symptoms [27]. A biochemical hallmark of this condition is refeeding hypophosphatemia, which has been defined as a drop in phosphate levels below 0.65 mmol/l within 72 h after institution of artificial feeding [28, 29], explained by intracellular uptake of phosphate and its incorporation in energy-rich phosphate bonds. When refeeding hypophosphatemia occurs early in critical illness, temporarily limiting nutritional intake while correcting existing vitamin and electrolyte deficiencies is likely beneficial, as shown in the Refeeding RCT (N = 339) [28]. To prevent refeeding syndrome, it seems prudent to ensure sufficient micronutrient intake in all patients, which may, especially in the acute phase of illness, require parenteral administration of micronutrients and electrolytes [11, 26, 30].
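The biochemical definition of refeeding hypophosphatemia given above can be written down as a simple rule. The following is a minimal sketch, assuming that serial phosphate measurements are available with their time relative to the start of artificial feeding. The threshold (0.65 mmol/l) and the window (72 h) are taken from the text; the data structure and function names are hypothetical, and the sketch only checks the threshold, not the size of the drop from baseline.

```python
from dataclasses import dataclass

# Threshold and time window as stated in the text.
PHOSPHATE_THRESHOLD_MMOL_L = 0.65
WINDOW_HOURS = 72.0


@dataclass
class PhosphateSample:
    """Hypothetical container for one serum phosphate measurement."""
    hours_since_feeding_start: float
    phosphate_mmol_l: float


def has_refeeding_hypophosphatemia(samples):
    """Return True if any sample taken within 72 h after the start of
    artificial feeding shows a phosphate level below 0.65 mmol/l."""
    return any(
        0.0 <= s.hours_since_feeding_start <= WINDOW_HOURS
        and s.phosphate_mmol_l < PHOSPHATE_THRESHOLD_MMOL_L
        for s in samples
    )


# Illustrative values only, not patient data.
example = [PhosphateSample(12, 0.90), PhosphateSample(48, 0.60)]
print(has_refeeding_hypophosphatemia(example))
```

A low value measured after the 72 h window, or before feeding was started, is deliberately not flagged by this rule, which mirrors the time-bound definition quoted above.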

Critiques on recent feeding RCTs

The neutral or negative effect of early enhanced feeding in recent RCTs has been attributed to overrepresentation of patients presumed to carry a low risk of malnutrition, to administration of too low amino acid doses, and to the use of calculated energy targets [31,32,33]. However, these critiques have not been supported by high-level evidence, and several lines of evidence contradict them, as outlined below. The Nutrirea-3 RCT, which randomized 3044 adult patients with shock requiring mechanical ventilation and vasopressor support to early full feeding versus one week of calorie-protein restriction irrespective of the feeding route, recently finalized recruitment and will provide more insight (NCT03573739) [34].

Inclusion of patients presumed to be at low risk of malnutrition

Researchers have suggested that the absence of benefit in recent RCTs may be explained by the inclusion of too many patients considered to be at low risk of malnutrition, whereby any potential benefit in perceived high-risk patients may have been obscured by the absence of impact or even harm in hypothesized low-risk patients [32]. However, this hypothesis is not confirmed by subgroup analyses of RCTs. Indeed, in large RCTs, no subgroup of patients benefiting from early enhanced feeding could be identified, whether defined by age, the nutritional risk screening (NRS) score, the modified Nutrition Risk in Critically Ill (NUTRIC) score, or body mass index (BMI) upon admission [11,12,13, 15, 35]. In a secondary analysis of the PermiT RCT, the studied biomarkers did not discriminate adult patients who would benefit from early full enteral nutrition as compared to lower-dose enteral nutrition [35]. If anything, there was a signal in the opposite direction. Indeed, patients with low prealbumin levels, who would be considered at highest nutritional risk, had an increased risk of mortality associated with early full enteral nutrition [35]. Also in the large EPaNIC subgroup of patients for whom enteral nutrition was contraindicated (N = 517), early total parenteral nutrition was harmful as compared to virtual starvation for one week in ICU [11].

Low amino acid doses

It has been suggested that several feeding RCTs did not show benefit because of imbalanced feeding solutions, whereby the doses of amino acids would have been too low [31]. However, the largest RCT on amino acid supplements in adult critically ill patients, the Nephroprotective RCT (N = 474), did not find benefit from early amino acid supplements provided at doses of approximately 1.75 g/kg per day throughout ICU stay, while they significantly increased ureagenesis [36]. Also, in other RCTs in both adults and children, early full feeding significantly increased ureagenesis [37,38,39]. In a secondary analysis of the EPaNIC RCT, it was estimated that approximately two thirds of the extra amino acids provided through early parenteral nutrition were net wasted in ureagenesis, even with amino acid doses that are considered relatively low (approximately 0.8 g/kg per day) [37]. Concomitantly, neither microscopic nor macroscopic muscle loss was prevented by providing early full feeding [17, 40]. On the contrary, early supplementation of insufficient enteral nutrition by parenteral nutrition increased muscular fat content, aggravated muscle weakness, and hampered recovery from weakness [17]. In secondary analyses of both the EPaNIC and PEPaNIC RCTs, harm from early parenteral nutrition was statistically explained by the amino acid doses, and not by the glucose or lipid doses [38, 41]. Evidently, these findings are observational and require confirmation in RCTs. Of note, in the absence of solid evidence supporting a clear protein target, the most recent European ESPEN guidelines for adult critically ill patients do not make a strong recommendation [26]. Instead, there is a grade 0 recommendation suggesting that 1.3 g/kg protein can be delivered progressively [26].
The EFFORT RCT (N = 4000; study completed December 3, 2021, with 1329 patients included according to clinicaltrials.gov) will fill this evidence gap, as it investigates whether or not a higher dose of proteins improves outcome of adult critically ill patients [42].

Calculated energy targets

The absence of benefit of early full feeding has also been attributed to the absence of indirect calorimetry to guide the energy target [43]. In acute illness, indirect calorimetry is the gold standard to measure energy expenditure, which is derived from measurement of VO2 and VCO2, and the obtained value has been proposed as energy target after the first days in ICU [44]. In most recent large feeding RCTs, indirect calorimetry was not routinely used, reflecting daily practice in most centers [11,12,13,14,15]. Instead, the energy target was determined by predictive equations that only provide an estimation of energy expenditure that may considerably deviate from the measured energy expenditure [11,12,13,14,15, 44]. However, there is no solid evidence that the feeding target should equal energy expenditure at all times, since the largest RCTs comparing indirect calorimetry-based feeding versus predictive equation-based feeding in adult critically ill patients did not show clear benefit [45, 46]. The EAT-ICU RCT (N = 199) even found harm, with an increased ICU stay in patients randomized to the intervention group in which early full feeding was guided by indirect calorimetry and by nitrogen balances as compared with the control group in which early enteral nutrition was delivered up to a fixed energy target [39]. Interestingly, the EAT-ICU intervention resulted in higher protein and energy intake in the first week than the control group, further supporting harm by a higher nutritional dose early during critical illness [39].
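To illustrate how energy expenditure is derived from VO2 and VCO2, the sketch below uses the abbreviated Weir equation, a commonly used conversion that is not named in the text and is assumed here for illustration. Gas exchange is expressed in mL/min and the result in kcal/day; the function name and the example values are hypothetical.

```python
def weir_energy_expenditure_kcal_per_day(vo2_ml_min, vco2_ml_min):
    """Abbreviated Weir equation (urinary nitrogen term omitted).

    vo2_ml_min:  oxygen consumption in mL/min
    vco2_ml_min: carbon dioxide production in mL/min
    Returns the estimated energy expenditure in kcal/day.
    """
    if vo2_ml_min <= 0 or vco2_ml_min <= 0:
        raise ValueError("VO2 and VCO2 must be positive")
    # 1.44 converts mL/min to L/day (1440 min per day / 1000 mL per L).
    return 1.44 * (3.941 * vo2_ml_min + 1.106 * vco2_ml_min)


# Illustrative values only: VO2 250 mL/min, VCO2 200 mL/min.
ee = weir_energy_expenditure_kcal_per_day(250, 200)
print(round(ee))  # about 1737 kcal/day
```

Such a measurement quantifies what the patient expends at that moment; as discussed in this section, whether the feeding target should equal this value is a separate question.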

Despite the absence of benefit from using indirect calorimetry to target 100% of energy expenditure by feeding in large RCTs, proponents of its use in the first week of critical illness have referred to a recent meta-analysis that suggested a potential mortality benefit of indirect calorimetry-based feeding initiated in the first week in critically ill adults as compared with calculated energy target-based feeding [47, 48]. The potential mechanisms of mortality benefit remain unclear, however, since morbidity outcomes did not differ [47]. Although this meta-analysis may seem encouraging, the results should be interpreted with great caution, for several reasons. First, none of the included studies had a low risk of bias, and the mortality difference was barely significant [47]. It is highly likely that statistical significance would be lost if a small number of patients (close to 1, the fragility index of the study) had had a different outcome. Moreover, there are concerns with regard to the reported mortality data in the largest RCT, the TICACOS-International RCT (N = 417) [46, 49]. Indeed, the reported mortality at consecutive time points decreased over time in this RCT, which is obviously impossible, and the numbers reported in the abstract and full text do not match [46], as pointed out in a letter to the editor [49]. Moreover, according to the reported numbers in TICACOS-International, there was only a numerical, statistically non-significant difference in mortality at 90 days, which was the mortality rate used in the meta-analysis [46, 47]. In contrast, reported ICU mortality and mortality at 6 months, not used in the meta-analysis, were virtually identical [46, 47]. In view of these important concerns and unresolved issues, the level of evidence put forward by the meta-analysis remains low.
Moreover, the TICACOS-International RCT may indirectly question the feasibility of widespread implementation of indirect calorimetry, since the authors, who are experts in the field, only included 417 patients over 6 years in 7 centers, whereby slow recruitment led to premature stopping of the RCT [46].
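The fragility index referred to above can be made concrete with a short calculation. The sketch below follows one common definition, which is an assumption here and not taken from the meta-analysis itself: in the group with fewer events, non-events are switched to events one patient at a time until the two-sided Fisher exact test is no longer significant at the chosen alpha. The function names and the example counts are hypothetical and do not reproduce any trial data.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x events in the first row.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum all tables that are at most as probable as the observed one.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))


def fragility_index(events_1, n_1, events_2, n_2, alpha=0.05):
    """Number of outcome switches needed to lose statistical significance.

    Returns 0 when the original comparison is not significant.
    """
    # Modify the group with fewer events.
    if events_1 <= events_2:
        ev_a, n_a, ev_b, n_b = events_1, n_1, events_2, n_2
    else:
        ev_a, n_a, ev_b, n_b = events_2, n_2, events_1, n_1
    switches = 0
    while ev_a < n_a and fisher_exact_two_sided(ev_a, n_a - ev_a, ev_b, n_b - ev_b) < alpha:
        ev_a += 1  # one patient without the event is counted as having it
        switches += 1
    return switches


# Hypothetical counts for illustration: deaths / patients in each arm.
print(fragility_index(20, 200, 36, 200))
```

A fragility index close to 1 means that a single patient with a different outcome would have been enough to move the result across the significance threshold, which is why such a finding calls for cautious interpretation.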

Apart from the absence of benefit from full feeding guided by indirect calorimetry in the largest RCTs, there are also pathophysiological concerns with regard to its early use to guide nutritional energy dosing. Indeed, if the ideal energy target were to equal energy expenditure at all times, one would implicitly assume that all endogenous energy production can be suppressed by providing calories through feeding, which is not the case (Fig. 1). Indeed, acute critical illness is characterized by feeding-resistant catabolism and severe insulin resistance, especially in the liver, whereby endogenous glucose production cannot be suppressed by providing nutrients and insulin [50]. Hence, providing extra calories on top of unsuppressible gluconeogenesis may aggravate hyperglycemia and hypertriglyceridemia, and may only pose an additional burden on the liver [51]. Unfortunately, there is no monitor of endogenous glucose production available at the bedside, so the duration and extent of unsuppressible endogenous substrate production in individual patients remain unclear. No RCTs have investigated the impact of reducing feeding intake to a fixed percentage of the measured energy expenditure to compensate for endogenous glucose production [52]. Also, no large RCTs have investigated the impact of indirect calorimetry-based feeding that is initiated in prolonged critically ill patients and continued after ICU discharge. Of note, the current ESPEN guidelines and recent expert opinion do not recommend matching the feeding target to the energy expenditure measured by indirect calorimetry at all times in adult critically ill patients [26, 53].

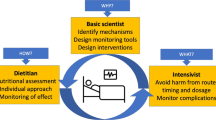

Fig. 1 Selected mechanisms explaining the lack of benefit by early full feeding in critical illness. Evoked by the stress response to severe illness, anabolic resistance occurs, whereby muscle catabolism and hepatic gluconeogenesis cannot be counteracted by providing macronutrients, unlike in normal health. Providing extra macronutrients in such condition increases the risk of overfeeding, manifested as hyperglycemia, hypertriglyceridemia, liver dysfunction and hyperuremia by catabolism of extra provided amino acids. In addition, continuous artificial nutrition continuously suppresses autophagy and ketogenesis as potentially important repair pathways. The time when anabolic resistance ceases and the condition reverses into metabolic feeding responsiveness cannot be predicted or monitored at the bedside. Theoretically, feeding responsiveness may undergo dynamic changes over time, and the timing of such changes likely differs between patients.

Mechanisms explaining lack of benefit of early full feeding

Suppression of fasting-induced recovery pathways

The lack of benefit from early full nutrition in RCTs may be explained by a continuous suppression of the fasting response. Although fasting has traditionally been considered a negative process in critical illness [8], a normal diet involves alternation of feeding periods with fasting intervals, and health-promoting effects have been attributed to fasting. Indeed, diets that induce a prolonged fasting response, such as fasting-mimicking diets or caloric restriction diets, protected against age-related disease and improved longevity in animal models, and ameliorated risk factors of age-related disease in humans [54, 55]. This suggests that fasting-activated pathways are important to maintain normal cellular integrity and function. In large feeding RCTs in critically ill patients, however, artificial feeding has always been provided in a continuous manner [11,12,13,14,15], thereby continuously suppressing any fasting response.

Part of the beneficial effects of fasting in normal health is mediated by activation of macroautophagy [54]. Macroautophagy, hereafter referred to as autophagy, is a cellular process whereby cytoplasmic content is digested in the lysosome after its delivery to the lysosome in an intermediate vesicle that is called an autophagosome [56]. Autophagy is activated by fasting and by a variety of stress signals [56]. Deprivation of amino acids in particular is a strong stimulus of autophagy [56]. Autophagy is the only process able to remove macromolecular damage, including damaged organelles, potentially toxic protein aggregates and intracellular microorganisms, and as such it is crucial for maintaining homeostasis [57]. Aging is accompanied by a gradual decline in autophagic activity, and activation of autophagy has been shown to protect against age-related disease and to improve life span in animals [57, 58]. Increasing evidence also implicates autophagy as a crucial repair process in recovery from critical illness [59,60,61]. Moreover, mechanistic studies have implicated autophagy suppression as a potential mechanism explaining harm from early full nutrition in critical illness [62]. In a critically ill animal model, early parenteral nutrition, especially with higher amino acid doses, increased liver damage and signs of muscle degeneration as compared to relative fasting, while it suppressed autophagy [63]. In this model, administration of the autophagy activator rapamycin protected against kidney injury in fed critically ill animals [64]. Likewise, in critically ill patients, early parenteral nutrition suppressed autophagy in muscle, which was associated with more weakness [17]. Altogether, this evidence puts forward autophagy as a potential therapeutic target in critical illness.
However, pharmacological autophagy activation is complicated, since there are no specific pharmacological autophagy inducers available [65], and excessive autophagy stimulation may also be detrimental [66].

A second process that may explain the negative impact of early full feeding in critically ill patients is suppression of ketogenesis. Apart from being an alternative energy substrate during fasting, ketones serve signaling roles, and may stimulate autophagy and enhance muscle regeneration [67, 68]. In a mouse model of sepsis-induced critical illness, administration of ketones improved muscle force, an effect that appeared to be related not to their use as energy substrate but to activation of muscle regeneration pathways [68]. A recent study showed that ketones increase the resilience of muscle stem cells to cellular stress via signaling effects [69]. Secondary analyses of the EPaNIC and PEPaNIC RCTs showed that withholding early parenteral nutrition activated ketogenesis, most robustly in critically ill children, in whom it statistically mediated part of the outcome benefit of the intervention [70, 71].

Anabolic resistance

One of the main aims of providing nutrients to critically ill patients is to inhibit or limit critical illness-associated catabolism, which would attenuate muscle wasting and weakness, and improve long-term functional outcome. However, recent nutritional RCTs have shown that early full feeding is unable to counteract catabolism. Indeed, neither muscle wasting nor weakness was prevented, and long-term functional outcome was not improved [17, 20,21,22, 40]. Instead, providing higher doses of amino acids in the acute phase significantly increased ureagenesis in several RCTs [36,37,38,39]. Currently, there are no bedside monitors or biomarkers that predict or document feeding responsiveness. The failure of artificial feeding to suppress catabolism may to some extent be explained by the so-called muscle-full effect [72, 73]. Indeed, in healthy adults, muscle protein synthesis only rises temporarily in response to continuous amino acid infusion [72]. However, whether bolus feeding or intermittent feeding would indeed lead to more anabolism in critically ill patients remains to be studied. A relatively small RCT in critically ill adults (N = 121) found a smaller rise in the urea-to-creatinine ratio, used as a marker of catabolism, with intermittent feeding than with continuous feeding, while there was no impact on ultrasound-assessed muscle wasting [74, 75]. Moreover, there was already a baseline difference in the urea-to-creatinine ratio, precluding a strong conclusion [75]. Regardless of the mode of delivering nutrition, the degree of anabolic resistance likely varies over time and among patients, since critical illness-associated catabolism has been related to the stress response and the accompanying inflammatory and endocrine alterations (Fig. 1) [1]. Apart from the muscle-full effect, another potential mechanism contributing to anabolic resistance is the relative immobilization of the patient.
Outside critical illness, protein supplementation is most effective in achieving anabolism when combined with exercise [76]. There are no data regarding early mobilization in large feeding RCTs in critical illness, and large RCTs investigating the interaction between early mobilization and feeding in critically ill patients are lacking [77]. However, as for early feeding, early enhanced, active mobilization is not beneficial for critically ill patients and increases the risk of adverse events [78].

Perspectives for future research

These mechanistic insights provide a base for novel feeding regimens, to be developed and to be tested ultimately in RCTs powered for clinical endpoints. Although fasting may activate beneficial cellular pathways that are also essential in normal health, prolonged starvation will likely come at a price. Novel feeding strategies that may exploit these fasting-associated benefits while avoiding prolonged starvation include intermittent feeding, ketogenic diets and ketone supplementation.

Intermittent feeding diets, which alternate feeding with fasting intervals, would theoretically allow feeding to be provided while intermittently activating the fasting response and its associated benefits [79]. In animal models of aging, so-called fasting-mimicking diets could replicate the benefits observed with caloric restriction [80]. Apart from activating fasting responses, intermittent feeding strategies could theoretically be beneficial by preventing the muscle-full effect and by better preserving the circadian rhythm [79]. However, it remains unclear how long critically ill patients should fast before a metabolic fasting response that includes autophagy stimulation develops [81]. In a pilot crossover RCT, 12 h of fasting activated ketogenesis and other components of the fasting response, while it had no impact on autophagy assessed in peripheral blood cells [82]. Yet, it remains unclear whether 12 h of fasting was able to activate autophagy in vital tissues, or whether it was simply insufficient to initiate autophagy stimulation at all [82]. RCTs investigating the impact of intermittent versus continuous feeding strategies in critical illness did not show consistent benefit of intermittent feeding [74, 83]. Yet, these RCTs were relatively small and likely underpowered to detect or exclude a meaningful clinical benefit, and the fasting interval was relatively short (in general 4–6 h), which may have been too short to induce a fasting response and its associated benefits [79]. Intermittent feeding may also be challenging, since the daily nutritional intake has to be given over a shorter time, which may increase the risk of complications due to enteral feeding intolerance and large glucose variability, among others [84]. Hence, efficacy and safety remain to be studied. Apart from intermittent feeding strategies, ketogenic diets or ketone supplementation could be beneficial [85].
Although ketogenic diets have been used in selected patients including patients with refractory status epilepticus, the efficacy and safety of ketogenic diets or ketone supplements for general critically ill patients remain to be studied [85].

Apart from the ideal feeding regimen or the ideal feeding mode, there is a need for validated markers of feeding tolerance and responsiveness [86]. Indeed, although enteral nutrition is usually favored over parenteral nutrition, patients on enteral nutrition may suffer feeding intolerance and, in severe cases, non-occlusive mesenteric ischemia, especially when enteral nutrition is delivered at higher doses in patients with shock [19, 86]. Currently, there are no validated biomarkers or bedside monitoring devices that can predict enteral feeding tolerance and that could thus help avoid complications of too early enteral feeding, such as aspiration pneumonia [87, 88]. At present, gastric residual volumes are still widely used and recommended by guidelines [89], although an RCT (N = 449) did not show benefit of measuring gastric residual volumes in adult patients receiving mechanical ventilation [90]. Also, metabolic responsiveness to feeding cannot be predicted or monitored at the bedside, which requires further investigation (Fig. 1) [87]. In the past, experts have recommended using nutritional risk scores to identify patients who would benefit most from early enhanced nutrition [91, 92]. However, RCT data have shown that no biomarker was able to discern subpopulations of patients benefiting from early full nutrition [35]. Future research in metabolomics could help to identify which patients may benefit from enhanced or more restricted feeding and at what time [93, 94]. Currently used signs of energy or protein overload, including hyperglycemia, hypertriglyceridemia, elevated liver enzymes, hyperbilirubinemia, hyperuremia and hyperammonemia, are nonspecific and frequently occur outside the context of overfeeding [95]. A potential sign that may assist in determining readiness for feeding is the degree of insulin resistance, as can be derived from the amount of insulin required to maintain blood glucose at a predefined level [95, 96].
One important biomarker, however, is phosphate, which allows early detection and treatment of refeeding syndrome [95].

The target population and outcomes studied in RCTs on ICU nutrition also need to be considered. As responsiveness to feeding likely changes over time, there is a need for RCTs that investigate the impact of optimized nutrition started after the acute phase and continued throughout the recovery phase [97], since anabolic resistance is expected to cease at a particular time. In this regard, a large RCT in hospitalized non-critically ill adults at risk of malnutrition (N = 2088) showed that intensified nutritional support, achieved predominantly through increased oral intake, improved short-term mortality [98]. Nevertheless, the mortality difference was only transient [99], there was no impact on functional outcome after 6 months [99], and less than 2% of patients in the intervention group received enteral or parenteral nutrition [98]. Hence, it is not clear to what extent these findings can be extrapolated to patients who are still in need of artificial nutrition while recovering from critical illness. Theoretically, indirect calorimetry could be a useful adjunct in patients who are responsive to feeding, to prevent over- and underfeeding. Yet, feeding responsiveness cannot be monitored at the bedside at this time.

In future nutritional RCTs, the use of uniform endpoints would facilitate comparisons and meta-analyses, although there is only limited agreement on essential outcomes [100]. There has been considerable variability in the primary and secondary outcomes of RCTs [101, 102]. For large efficacy RCTs, the primary endpoint should be a patient-centered outcome that is likely affected by feeding [101]. The anticipated effect size should be realistic with regard to the nature and the duration of the intervention, avoiding an underpowered study. Evidently, potential confounders should be taken into account, including competing risks [101, 103].
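The link between a realistic anticipated effect size and study power can be illustrated with a standard sample size approximation for comparing two proportions. This is a minimal sketch under stated assumptions: a two-sided test, equal group sizes, a normal approximation, and hypothetical event rates chosen only for illustration. The function name and default values are not taken from any of the cited trials.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_control, p_intervention, alpha=0.05, power=0.80):
    """Approximate number of patients per group needed to detect a
    difference between two event proportions (two-sided test)."""
    if not (0 < p_control < 1 and 0 < p_intervention < 1):
        raise ValueError("proportions must lie strictly between 0 and 1")
    if p_control == p_intervention:
        raise ValueError("the two proportions must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_intervention) / 2
    term_null = z_alpha * sqrt(2 * p_bar * (1 - p_bar))
    term_alt = z_beta * sqrt(
        p_control * (1 - p_control) + p_intervention * (1 - p_intervention)
    )
    return ceil((term_null + term_alt) ** 2 / (p_control - p_intervention) ** 2)


# Hypothetical scenario: 30% event rate in controls.
print(sample_size_per_group(0.30, 0.25))   # 5 percentage point reduction
print(sample_size_per_group(0.30, 0.275))  # 2.5 percentage point reduction
```

Halving the anticipated absolute difference roughly quadruples the required sample size, which shows how an overly optimistic effect size assumption leads to an underpowered study.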

Conclusion

Recent RCTs have not confirmed the hypothesized benefit of early full feeding, and several RCTs even showed harm from early parenteral nutrition supplementing insufficient enteral nutrition. Harm from early parenteral nutrition appeared to be explained by a higher nutritional dose in the acute phase, and not by the parenteral route per se, since a short period of parenteral nutrition did not cause harm as compared to an isocaloric dose of enteral nutrition. There are no large RCTs favoring indirect calorimetry-guided full feeding over calculation-based feeding. The absence of benefit of early full feeding has been attributed to suppression of autophagy and ketogenesis, and to feeding-resistant muscle catabolism. Hence, intermittent feeding, ketone supplementation and ketogenic diets emerge as potential novel feeding strategies that may allow continuation of nutrition while avoiding prolonged suppression of beneficial fasting responses, which needs further study. Despite many large-scale RCTs in the last decade, it remains unclear how to optimally administer feeding, since the ideal timing and dose are still unknown. Since recent feeding practices have shifted toward lower-dose artificial nutrition and avoidance of early parenteral nutrition, sufficient micronutrient intake should be ensured to prevent deficiencies. To allow individualization of feeding, novel biomarkers, predictive models or monitoring devices that predict and indicate the response to feeding are needed, since the presence or absence of feeding resistance and unsuppressible gluconeogenesis is likely dynamic and time-dependent, depending on the stress response to severe illness and the recovery from it. Until that time, it will remain unclear for whom, when and how to optimally use indirect calorimetry.
Evidently, any feeding strategy, even in case of a solid pathophysiological rationale, requires confirmation of efficacy and safety in a large-scale RCT powered for clinical endpoints before it can be strongly recommended in clinical practice.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Abbreviations

- BMI: Body mass index
- ESPEN: European Society for Clinical Nutrition and Metabolism
- ICU: Intensive care unit
- NRS: Nutritional risk screening
- NUTRIC: Nutrition risk in critically ill
- RCT: Randomized controlled trial

References

Vanhorebeek I, Latronico N, Van den Berghe G. ICU-acquired weakness. Intensive Care Med. 2020;46(4):637–53.

Hermans G, Van Mechelen H, Clerckx B, Vanhullebusch T, Mesotten D, Wilmer A, et al. Acute outcomes and 1-year mortality of intensive care unit-acquired weakness: a cohort study and propensity-matched analysis. Am J Respir Crit Care Med. 2014;190(4):410–20.

Herridge MS, Tansey CM, Matte A, Tomlinson G, Diaz-Granados N, Cooper A, et al. Functional disability 5 years after acute respiratory distress syndrome. N Engl J Med. 2011;364(14):1293–304.

Van Aerde N, Meersseman P, Debaveye Y, Wilmer A, Gunst J, Casaer MP, et al. Five-year impact of ICU-acquired neuromuscular complications: a prospective, observational study. Intensive Care Med. 2020;46(6):1184–93.

Rousseau AF, Prescott HC, Brett SJ, Weiss B, Azoulay E, Creteur J, et al. Long-term outcomes after critical illness: recent insights. Crit Care. 2021;25(1):108.

Orford NR, Saunders K, Merriman E, Henry M, Pasco J, Stow P, et al. Skeletal morbidity among survivors of critical illness. Crit Care Med. 2011;39(6):1295–300.

Orford NR, Lane SE, Bailey M, Pasco JA, Cattigan C, Elderkin T, et al. Changes in bone mineral density in the year after critical illness. Am J Respir Crit Care Med. 2016;193(7):736–44.

Singer P, Berger MM, Van den Berghe G, Biolo G, Calder P, Forbes A, et al. ESPEN guidelines on parenteral nutrition: intensive care. Clin Nutr. 2009;28(4):387–400.

Alberda C, Gramlich L, Jones N, Jeejeebhoy K, Day AG, Dhaliwal R, et al. The relationship between nutritional intake and clinical outcomes in critically ill patients: results of an international multicenter observational study. Intensive Care Med. 2009;35(10):1728–37.

Dvir D, Cohen J, Singer P. Computerized energy balance and complications in critically ill patients: an observational study. Clin Nutr. 2006;25(1):37–44.

Casaer MP, Mesotten D, Hermans G, Wouters PJ, Schetz M, Meyfroidt G, et al. Early versus late parenteral nutrition in critically ill adults. N Engl J Med. 2011;365(6):506–17.

Fivez T, Kerklaan D, Mesotten D, Verbruggen S, Wouters PJ, Vanhorebeek I, et al. Early versus late parenteral nutrition in critically ill children. N Engl J Med. 2016;374(12):1111–22.

Rice TW, Wheeler AP, Thompson BT, Steingrub J, Hite RD, Moss M, et al. Initial trophic vs full enteral feeding in patients with acute lung injury: the EDEN randomized trial. JAMA. 2012;307(8):795–803.

Arabi YM, Aldawood AS, Haddad SH, Al-Dorzi HM, Tamim HM, Jones G, et al. Permissive underfeeding or standard enteral feeding in critically ill adults. N Engl J Med. 2015;372(25):2398–408.

Chapman M, Peake SL, Bellomo R, Davies A, Deane A, Horowitz M, et al. Energy-dense versus routine enteral nutrition in the critically ill. N Engl J Med. 2018;379(19):1823–34.

Compher C, Bingham AL, McCall M, Patel J, Rice TW, Braunschweig C, et al. Guidelines for the provision of nutrition support therapy in the adult critically ill patient: The American Society for Parenteral and Enteral Nutrition. JPEN J Parenter Enteral Nutr. 2022;46(1):12–41.

Hermans G, Casaer MP, Clerckx B, Guiza F, Vanhullebusch T, Derde S, et al. Effect of tolerating macronutrient deficit on the development of intensive-care unit acquired weakness: a subanalysis of the EPaNIC trial. Lancet Respir Med. 2013;1(8):621–9.

Harvey SE, Parrott F, Harrison DA, Bear DE, Segaran E, Beale R, et al. Trial of the route of early nutritional support in critically ill adults. N Engl J Med. 2014;371(18):1673–84.

Reignier J, Boisramé-Helms J, Brisard L, Lascarrou JB, Ait Hssain A, Anguel N, et al. Enteral versus parenteral early nutrition in ventilated adults with shock: a randomised, controlled, multicentre, open-label, parallel-group study (NUTRIREA-2). Lancet. 2018;391(10116):133–43.

Needham DM, Dinglas VD, Bienvenu OJ, Colantuoni E, Wozniak AW, Rice TW, et al. One year outcomes in patients with acute lung injury randomised to initial trophic or full enteral feeding: prospective follow-up of EDEN randomised trial. BMJ. 2013;346:f1532.

Needham DM, Dinglas VD, Morris PE, Jackson JC, Hough CL, Mendez-Tellez PA, et al. Physical and cognitive performance of patients with acute lung injury 1 year after initial trophic versus full enteral feeding: EDEN trial follow-up. Am J Respir Crit Care Med. 2013;188(5):567–76.

Deane AM, Little L, Bellomo R, Chapman MJ, Davies AR, Ferrie S, et al. Outcomes six months after delivering 100% or 70% of enteral calorie requirements during critical illness (TARGET): a randomized controlled trial. Am J Respir Crit Care Med. 2020;201(7):814–22.

Verstraete S, Verbruggen SC, Hordijk JA, Vanhorebeek I, Dulfer K, Güiza F, et al. Long-term developmental effects of withholding parenteral nutrition for 1 week in the paediatric intensive care unit: a 2-year follow-up of the PEPaNIC international, randomised, controlled trial. Lancet Respir Med. 2019;7(2):141–53.

Jacobs A, Dulfer K, Eveleens RD, Hordijk J, Van Cleemput H, Verlinden I, et al. Long-term developmental effect of withholding parenteral nutrition in paediatric intensive care units: a 4-year follow-up of the PEPaNIC randomised controlled trial. Lancet Child Adolesc Health. 2020;4(7):503–14.

Casaer MP, Van den Berghe G. Nutrition in the acute phase of critical illness. N Engl J Med. 2014;370(13):1227–36.

Singer P, Blaser AR, Berger MM, Alhazzani W, Calder PC, Casaer MP, et al. ESPEN guideline on clinical nutrition in the intensive care unit. Clin Nutr. 2019;38(1):48–79.

Vankrunkelsven W, Gunst J, Amrein K, Bear DE, Berger MM, Christopher KB, et al. Monitoring and parenteral administration of micronutrients, phosphate and magnesium in critically ill patients: the VITA-TRACE survey. Clin Nutr. 2021;40(2):590–9.

Doig GS, Simpson F, Heighes PT, Bellomo R, Chesher D, Caterson ID, et al. Restricted versus continued standard caloric intake during the management of refeeding syndrome in critically ill adults: a randomised, parallel-group, multicentre, single-blind controlled trial. Lancet Respir Med. 2015;3(12):943–52.

Olthof LE, Koekkoek WACK, van Setten C, Kars JCN, van Blokland D, van Zanten ARH. Impact of caloric intake in critically ill patients with, and without, refeeding syndrome: a retrospective study. Clin Nutr. 2018;37(5):1609–17.

Eveleens RD, Witjes BCM, Casaer MP, Vanhorebeek I, Guerra GG, Veldscholte K, et al. Supplementation of vitamins, trace elements and electrolytes in the PEPaNIC randomised controlled trial: composition and preparation of the prescription. Clin Nutr ESPEN. 2021;42:244–51.

Hoffer LJ, Bistrian BR. Nutrition in critical illness: a current conundrum. F1000Res. 2016;5:2531.

Felbinger TW, Weigand MA, Mayer K. Early or late parenteral nutrition in critically ill adults. N Engl J Med. 2011;365(19):1839.

O’Leary MJ, Ferrie S. Early or late parenteral nutrition in critically ill adults. N Engl J Med. 2011;365(19):1839–40.

Reignier J, Le Gouge A, Lascarrou JB, Annane D, Argaud L, Hourmant Y, et al. Impact of early low-calorie low-protein versus standard-calorie standard-protein feeding on outcomes of ventilated adults with shock: design and conduct of a randomised, controlled, multicentre, open-label, parallel-group trial (NUTRIREA-3). BMJ Open. 2021;11(5):e045041.

Arabi YM, Aldawood AS, Al-Dorzi HM, Tamim HM, Haddad SH, Jones G, et al. Permissive underfeeding or standard enteral feeding in high and low nutritional risk critically ill adults: post-hoc analysis of the PermiT trial. Am J Respir Crit Care Med. 2017;195(5):652–62.

Doig GS, Simpson F, Bellomo R, Heighes PT, Sweetman EA, Chesher D, et al. Intravenous amino acid therapy for kidney function in critically ill patients: a randomized controlled trial. Intensive Care Med. 2015;41(7):1197–208.

Gunst J, Vanhorebeek I, Casaer MP, Hermans G, Wouters PJ, Dubois J, et al. Impact of early parenteral nutrition on metabolism and kidney injury. J Am Soc Nephrol. 2013;24(6):995–1005.

Vanhorebeek I, Verbruggen S, Casaer MP, Gunst J, Wouters PJ, Hanot J, et al. Effect of early supplemental parenteral nutrition in the paediatric ICU: a preplanned observational study of post-randomisation treatments in the PEPaNIC trial. Lancet Respir Med. 2017;5(6):475–83.

Allingstrup MJ, Kondrup J, Wiis J, Claudius C, Pedersen UG, Hein-Rasmussen R, et al. Early goal-directed nutrition versus standard of care in adult intensive care patients: the single-centre, randomised, outcome assessor-blinded EAT-ICU trial. Intensive Care Med. 2017;43(11):1637–47.

Casaer MP, Langouche L, Coudyzer W, Vanbeckevoort D, De Dobbelaer B, Guiza FG, et al. Impact of early parenteral nutrition on muscle and adipose tissue compartments during critical illness. Crit Care Med. 2013;41(10):2298–309.

Casaer MP, Wilmer A, Hermans G, Wouters PJ, Mesotten D, Van den Berghe G. Role of disease and macronutrient dose in the randomized controlled EPaNIC trial: a post hoc analysis. Am J Respir Crit Care Med. 2013;187(3):247–55.

Heyland DK, Patel J, Bear D, Sacks G, Nixdorf H, Dolan J, et al. The effect of higher protein dosing in critically ill patients: a multicenter registry-based randomized trial: the EFFORT Trial. JPEN J Parenter Enteral Nutr. 2019;43(3):326–34.

Oshima T, Berger MM, De Waele E, Guttormsen AB, Heidegger CP, Hiesmayr M, et al. Indirect calorimetry in nutritional therapy: a position paper by the ICALIC study group. Clin Nutr. 2017;36(3):651–62.

De Waele E, van Zanten ARH. Routine use of indirect calorimetry in critically ill patients: pros and cons. Crit Care. 2022;26(1):123.

Singer P, Anbar R, Cohen J, Shapiro H, Shalita-Chesner M, Lev S, et al. The tight calorie control study (TICACOS): a prospective, randomized, controlled pilot study of nutritional support in critically ill patients. Intensive Care Med. 2011;37(4):601–9.

Singer P, De Waele E, Sanchez C, Ruiz Santana S, Montejo JC, Laterre PF, et al. TICACOS international: a multi-center, randomized, prospective controlled study comparing tight calorie control versus Liberal calorie administration study. Clin Nutr. 2021;40(2):380–7.

Duan JY, Zheng WH, Zhou H, Xu Y, Huang HB. Energy delivery guided by indirect calorimetry in critically ill patients: a systematic review and meta-analysis. Crit Care. 2021;25(1):88.

De Waele E, Jonckheer J, Wischmeyer PE. Indirect calorimetry in critical illness: a new standard of care? Curr Opin Crit Care. 2021;27(4):334–43.

Casaer MP, Van den Berghe G, Gunst J. Indirect calorimetry: a faithful guide for nutrition therapy, or a fascinating research tool? Clin Nutr. 2021;40(2):651.

Langouche L, Vander Perre S, Wouters PJ, D’Hoore A, Hansen TK, Van den Berghe G. Effect of intensive insulin therapy on insulin sensitivity in the critically ill. J Clin Endocrinol Metab. 2007;92(10):3890–7.

Berger MM, Pichard C. Feeding should be individualized in the critically ill patients. Curr Opin Crit Care. 2019;25(4):307–13.

Fraipont V, Preiser JC. Energy estimation and measurement in critically ill patients. JPEN J Parenter Enteral Nutr. 2013;37(6):705–13.

Preiser JC, Arabi YM, Berger MM, Casaer M, McClave S, Montejo-González JC, et al. A guide to enteral nutrition in intensive care units: 10 expert tips for the daily practice. Crit Care. 2021;25(1):424.

de Cabo R, Mattson MP. Effects of intermittent fasting on health, aging, and disease. N Engl J Med. 2019;381(26):2541–51.

Flanagan EW, Most J, Mey JT, Redman LM. Calorie restriction and aging in humans. Annu Rev Nutr. 2020;40:105–33.

Kroemer G, Marino G, Levine B. Autophagy and the integrated stress response. Mol Cell. 2010;40(2):280–93.

Mizushima N, Levine B. Autophagy in human diseases. N Engl J Med. 2020;383(16):1564–76.

Eisenberg T, Knauer H, Schauer A, Büttner S, Ruckenstuhl C, Carmona-Gutierrez D, et al. Induction of autophagy by spermidine promotes longevity. Nat Cell Biol. 2009;11(11):1305–14.

Gunst J. Recovery from critical illness-induced organ failure: the role of autophagy. Crit Care. 2017;21(1):209.

Thiessen SE, Derese I, Derde S, Dufour T, Pauwels L, Bekhuis Y, et al. The role of autophagy in critical illness-induced liver damage. Sci Rep. 2017;7(1):14150.

Vanhorebeek I, Gunst J, Derde S, Derese I, Boussemaere M, Guiza F, et al. Insufficient activation of autophagy allows cellular damage to accumulate in critically ill patients. J Clin Endocrinol Metab. 2011;96(4):E633–45.

Van Dyck L, Casaer MP, Gunst J. Autophagy and its implications against early full nutrition support in critical illness. Nutr Clin Pract. 2018;33(3):339–47.

Derde S, Vanhorebeek I, Guiza F, Derese I, Gunst J, Fahrenkrog B, et al. Early parenteral nutrition evokes a phenotype of autophagy deficiency in liver and skeletal muscle of critically ill rabbits. Endocrinology. 2012;153(5):2267–76.

Gunst J, Derese I, Aertgeerts A, Ververs E-J, Wauters A, Van den Berghe G, et al. Insufficient autophagy contributes to mitochondrial dysfunction, organ failure, and adverse outcome in an animal model of critical illness. Crit Care Med. 2013;41(1):182–94.

Levine B, Packer M, Codogno P. Development of autophagy inducers in clinical medicine. J Clin Invest. 2015;125(1):14–24.

Nah J, Zhai P, Huang CY, Fernández Á, Mareedu S, Levine B, et al. Upregulation of Rubicon promotes autosis during myocardial ischemia/reperfusion injury. J Clin Invest. 2020;130(6):2978–91.

Newman JC, Verdin E. β-hydroxybutyrate: a signaling metabolite. Annu Rev Nutr. 2017;37:51–76.

Goossens C, Weckx R, Derde S, Dufour T, Vander Perre S, Pauwels L, et al. Adipose tissue protects against sepsis-induced muscle weakness in mice: from lipolysis to ketones. Crit Care. 2019;23(1):236.

Benjamin DI, Both P, Benjamin JS, Nutter CW, Tan JH, Kang J, et al. Fasting induces a highly resilient deep quiescent state in muscle stem cells via ketone body signaling. Cell Metab. 2022;34(6):902-918.e906.

De Bruyn A, Gunst J, Goossens C, Vander Perre S, Guerra GG, Verbruggen S, et al. Effect of withholding early parenteral nutrition in PICU on ketogenesis as potential mediator of its outcome benefit. Crit Care. 2020;24(1):536.

De Bruyn A, Langouche L, Vander Perre S, Gunst J, Van den Berghe G. Impact of withholding early parenteral nutrition in adult critically ill patients on ketogenesis in relation to outcome. Crit Care. 2021;25(1):102.

Bohé J, Low JF, Wolfe RR, Rennie MJ. Latency and duration of stimulation of human muscle protein synthesis during continuous infusion of amino acids. J Physiol. 2001;532(Pt 2):575–9.

Atherton PJ, Etheridge T, Watt PW, Wilkinson D, Selby A, Rankin D, et al. Muscle full effect after oral protein: time-dependent concordance and discordance between human muscle protein synthesis and mTORC1 signaling. Am J Clin Nutr. 2010;92(5):1080–8.

McNelly AS, Bear DE, Connolly BA, Arbane G, Allum L, Tarbhai A, et al. Effect of intermittent or continuous feed on muscle wasting in critical illness: a phase 2 clinical trial. Chest. 2020;158(1):183–94.

Flower L, Haines RW, McNelly A, Bear DE, Koelfat K, Damink SO, et al. Effect of intermittent or continuous feeding and amino acid concentration on urea-to-creatinine ratio in critical illness. JPEN J Parenter Enteral Nutr. 2022;46(4):789–97.

Morton RW, Traylor DA, Weijs PJM, Phillips SM. Defining anabolic resistance: implications for delivery of clinical care nutrition. Curr Opin Crit Care. 2018;24(2):124–30.

Kagan I, Cohen J, Bendavid I, Kramer S, Mesilati-Stahy R, Glass Y, et al. Effect of combined protein-enriched enteral nutrition and early cycle ergometry in mechanically ventilated critically ill patients-a pilot study. Nutrients. 2022;14(8):1.

Hodgson CL, Bailey M, Bellomo R, Brickell K, Broadley T, Buhr H, et al. Early active mobilization during mechanical ventilation in the ICU. N Engl J Med. 2022;387(19):1747–58.

Puthucheary Z, Gunst J. Are periods of feeding and fasting protective during critical illness? Curr Opin Clin Nutr Metab Care. 2021;24(2):183–8.

Di Francesco A, Di Germanio C, Bernier M, de Cabo R. A time to fast. Science. 2018;362(6416):770–5.

Pletschette Z, Preiser JC. Continuous versus intermittent feeding of the critically ill: have we made progress? Curr Opin Crit Care. 2020;26(4):341–5.

Van Dyck L, Vanhorebeek I, Wilmer A, Schrijvers A, Derese I, Mebis L, et al. Towards a fasting-mimicking diet for critically ill patients: the pilot randomized crossover ICU-FM-1 study. Crit Care. 2020;24(1):249.

Van Dyck L, Casaer MP. Intermittent or continuous feeding: any difference during the first week? Curr Opin Crit Care. 2019;25(4):356–62.

Bear DE, Hart N, Puthucheary Z. Continuous or intermittent feeding: pros and cons. Curr Opin Crit Care. 2018;24(4):256–61.

Gunst J, Casaer MP, Langouche L, Van den Berghe G. Role of ketones, ketogenic diets and intermittent fasting in ICU. Curr Opin Crit Care. 2021;27(4):385–9.

Reintam Blaser A, Preiser JC, Fruhwald S, Wilmer A, Wernerman J, Benstoem C, et al. Gastrointestinal dysfunction in the critically ill: a systematic scoping review and research agenda proposed by the section of metabolism, endocrinology and nutrition of the European Society of Intensive Care Medicine. Crit Care. 2020;24(1):224.

Van Dyck L, Gunst J, Casaer MP, Peeters B, Derese I, Wouters PJ, et al. The clinical potential of GDF15 as a “ready-to-feed indicator” for critically ill adults. Crit Care. 2020;24(1):557.

Deane AM, Chapman MJ. Technology to inform the delivery of enteral nutrition in the intensive care unit. JPEN J Parenter Enteral Nutr. 2022;46(4):754–6.

Reintam Blaser A, Starkopf J, Alhazzani W, Berger MM, Casaer MP, Deane AM, et al. Early enteral nutrition in critically ill patients: ESICM clinical practice guidelines. Intensive Care Med. 2017;43(3):380–98.

Reignier J, Mercier E, Le Gouge A, Boulain T, Desachy A, Bellec F, et al. Effect of not monitoring residual gastric volume on risk of ventilator-associated pneumonia in adults receiving mechanical ventilation and early enteral feeding: a randomized controlled trial. JAMA. 2013;309(3):249–56.

Kondrup J, Allison SP, Elia M, Vellas B, Plauth M; Educational and Clinical Practice Committee, European Society of Parenteral and Enteral Nutrition (ESPEN). ESPEN guidelines for nutrition screening 2002. Clin Nutr. 2003;22(4):415–21.

Heyland DK, Dhaliwal R, Jiang X, Day AG. Identifying critically ill patients who benefit the most from nutrition therapy: the development and initial validation of a novel risk assessment tool. Crit Care. 2011;15(6):R268.

Wernerman J, Christopher KB, Annane D, Casaer MP, Coopersmith CM, Deane AM, et al. Metabolic support in the critically ill: a consensus of 19. Crit Care. 2019;23(1):318.

Christopher KB. Nutritional metabolomics in critical illness. Curr Opin Clin Nutr Metab Care. 2018;21(2):121–5.

Berger MM, Reintam-Blaser A, Calder PC, Casaer M, Hiesmayr MJ, Mayer K, et al. Monitoring nutrition in the ICU. Clin Nutr. 2019;38(2):584–93.

Uyttendaele V, Dickson JL, Shaw GM, Desaive T, Chase JG. Untangling glycaemia and mortality in critical care. Crit Care. 2017;21(1):152.

Ridley EJ, Bailey M, Chapman M, Chapple LS, Deane AM, Hodgson C, et al. Protocol summary and statistical analysis plan for Intensive nutrition therapy comparEd to usual care iN criTically ill adults (INTENT): a phase II randomised controlled trial. BMJ Open. 2022;12(3):e050153.

Schuetz P, Fehr R, Baechli V, Geiser M, Deiss M, Gomes F, et al. Individualised nutritional support in medical inpatients at nutritional risk: a randomised clinical trial. Lancet. 2019;393(10188):2312–21.

Kaegi-Braun N, Tribolet P, Gomes F, Fehr R, Baechli V, Geiser M, et al. Six-month outcomes after individualized nutritional support during the hospital stay in medical patients at nutritional risk: secondary analysis of a prospective randomized trial. Clin Nutr. 2021;40(3):812–9.

Davies TW, van Gassel RJJ, van de Poll M, Gunst J, Casaer MP, Christopher KB, et al. Core outcome measures for clinical effectiveness trials of nutritional and metabolic interventions in critical illness: an international modified Delphi consensus study evaluation (CONCISE). Crit Care. 2022;26(1):240.

Fetterplace K, Ridley EJ, Beach L, Abdelhamid YA, Presneill JJ, MacIsaac CM, et al. Quantifying response to nutrition therapy during critical illness: implications for clinical practice and research? A narrative review. JPEN J Parenter Enteral Nutr. 2021;45(2):251–66.

Chapple LS, Summers MJ, Weinel LM, Deane AM. Outcome measures in critical care nutrition interventional trials: a systematic review. Nutr Clin Pract. 2020;35(3):506–13.

Schetz M, Casaer MP, Van den Berghe G. Does artificial nutrition improve outcome of critical illness? Crit Care. 2013;17(1):302.

Acknowledgements

Not applicable.

Funding

JG holds a postdoctoral research fellowship granted by the University Hospitals Leuven. MPC holds a postdoctoral research fellowship granted by the Research Foundation—Flanders. JG and MPC receive C2 project funding by KU Leuven. JR received grants from the French Ministry of Health (Programme Hospitalier de Recherche Clinique National 2012 and 2017: #PHRC-12-0184; #PHRC-17-0213). GVdB receives structural research financing by the Methusalem programme of the Flemish Government through the University of Leuven (METH14/06), and a European Research Council (ERC) Advanced Grant from the Horizon 2020 Program of the EU (AdvG-2017-785809).

Author information

Contributions

JG wrote the first draft, which was subsequently reviewed by all authors. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Gunst, J., Casaer, M.P., Preiser, JC. et al. Toward nutrition improving outcome of critically ill patients: How to interpret recent feeding RCTs? Crit Care 27, 43 (2023). https://doi.org/10.1186/s13054-023-04317-9
