Abstract

Background

Prognostic models—used in critical care medicine for mortality predictions, for benchmarking and for illness stratification in clinical trials—have been validated predominantly in high-income countries. These results may not be reproducible in low or middle-income countries (LMICs), not only because of different case-mix characteristics but also because of missing predictor variables. The study objective was to systematically review literature on the use of critical care prognostic models in LMICs and assess their ability to discriminate between survivors and non-survivors at hospital discharge of those admitted to intensive care units (ICUs), their calibration, their accuracy, and the manner in which missing values were handled.

Methods

The PubMed database was searched in March 2017 to identify research articles reporting the use and performance of prognostic models in the evaluation of mortality in ICUs in LMICs. Studies were excluded if they were carried out in ICUs in high-income countries or in paediatric ICUs; evaluated disease-specific scoring systems; were limited to a specific disease or a single prognostic factor; were published only as abstracts, editorials, letters or systematic or narrative reviews; or were not in English.

Results

Of the 2233 studies retrieved, 473 were searched and 50 articles reporting 119 models were included. Five of these models were newly developed and evaluated, whereas the remaining 114 were evaluations of the Acute Physiology and Chronic Health Evaluation, the Simplified Acute Physiology Score and the Mortality Probability Models or versions thereof. Missing values were described in only 34% of studies; exclusion and/or imputation by normal values were used. Discrimination, calibration and accuracy were reported in 94.0%, 72.4% and 25% respectively. Good discrimination and calibration were reported in 88.9% and 58.3% respectively. However, only 10 evaluations that reported excellent discrimination also reported good calibration. Generalisability of the findings was limited by variability of inclusion and exclusion criteria, unavailability of post-ICU outcomes and missing value handling.

Conclusions

Robust interpretations regarding the applicability of prognostic models are currently hampered by poor adherence to reporting guidelines, especially when reporting missing value handling. Performance of mortality risk prediction models in LMIC ICUs is at best moderate, especially with limitations in calibration. This necessitates continued efforts to develop and validate LMIC models with readily available prognostic variables, perhaps aided by medical registries.

Background

Prognostic models used in critical care medicine for mortality predictions, for benchmarking and for illness stratification in clinical trials need to be validated for the relevant setting. An ideal model should have good discrimination (the ability to differentiate between high-risk and low-risk patients) and good calibration (generate risk estimates close to actual mortality) [1]. Acute Physiology and Chronic Health Evaluation (APACHE) or the Simplified Acute Physiology Score (SAPS) and the Mortality Probability Models (MPM) are some common prognostic systems used to predict the outcome of critically ill patients admitted to the intensive care unit (ICU) [2, 3].

The performance of these models has been extensively validated, predominantly in high-income countries (HICs) [4,5,6]. These results may not be reproducible in low or middle-income countries (LMICs), not only because of different case-mix characteristics but also because of missing predictor variables. Predictor variables that are routinely available in HIC ICUs (e.g. arterial oxygenation) are often not obtainable or reliable where resources are limited [7, 8]. Furthermore, data collection and recording may not be as robust in these settings as in HICs; paper-based recording systems, limited availability of staff and lack of staff training regarding data collection are frequent challenges [9]. The presence of missing values, if imputed as normal as per convention [3, 4, 10,11,12,13], will lead to underestimation of the scores and mortality. As part of quality improvement initiatives within ICUs, severity-adjusted mortality rates, which are calculated based on these prognostic systems, are increasingly used as tools for evaluating the impact of new therapies or organisational changes and for benchmarking; therefore, underestimating the risk could result in erroneous admission policies and an underestimation of the quality of care, performance and effectiveness when used for benchmarking [14]. Additionally, the diagnostic categories in these prognostic models may not be suited to capture diagnoses more common in these countries, such as dengue, malaria, snakebite and organophosphate poisoning. Furthermore, hospital discharge outcomes may not be readily accessible [15,16,17]. 
These and other factors influence the performance of the models, which may then require adjustment in the form of recalibration (adjustment of the intercept of the model and overall adjustment of the associations (relative weights) of the predictors with the outcome) and/or model revision (adjustment of individual predictor-outcome associations and addition of new predictors or removal of existing ones) [18,19,20].

The objective of this article is to systematically review literature on the use of critical care prognostic models in LMICs and assess their ability to discriminate between survivors and non-survivors at hospital discharge of those admitted to ICUs, their calibration and accuracy, and the manner in which missing values are handled.

Methods

Literature search and eligibility criteria

The PubMed database was searched in March 2017 for research articles using the following search strategy: (critical OR intensive) AND (mortality OR survival OR prognostic OR predictive) AND (scoring system OR rating system OR APACHE OR SAPS OR MPM) in the title, abstract and keywords (Additional file 1).

No restrictions were placed on date of publication. Titles and abstracts returned were analysed for eligibility (RH, II). Abstracts reporting the performance of prognostic models were hand searched to identify studies carried out in ICUs in LMICs (as classified by the World Bank [21]) and full-text copies retrieved. Full-text articles were also retrieved when the title or abstract did not provide the country setting. The references of all selected reports were thereafter cross-checked for other potentially relevant articles.

Studies were included in this review if they were carried out in ICUs in LMICs and evaluated or developed prognostic models designed to predict mortality (whether ICU or hospital mortality) in adult ICU patients.

The exclusion criteria for this review were: studies carried out only in ICUs in HICs or in paediatric ICUs; organ failure scoring systems such as SOFA that are not designed for predicting mortality; studies evaluating models in relation to a specific disease (e.g. liver cirrhosis) or limited to trauma patients; those assessing a single prognostic factor (e.g. microalbuminuria); studies published in languages other than English; studies published only as abstracts, editorials, letters and systematic or narrative reviews; and duplicate publications.

Where ICUs in both HICs and LMICs were included in a study, only data from the low/middle-income country were to be extracted. Likewise, where a single-factor or disease-specific scoring system and a non-specialty-specific scoring system were evaluated, only the data pertaining to the latter were extracted. Studies where both adult and paediatric patients were admitted to the same ICU and studies where the age limits of patients were not specified were to be included in this review.

Data extraction and critical appraisal

The full-text articles were reviewed to assess eligibility for inclusion in the report. Disagreements between the two reviewers were resolved by discussion. The list of extracted items was based on the guidance issued by Cochrane for data extraction [22] and critical appraisal for systematic reviews of prediction models (the CHARMS checklist [23]). A second reviewer checked extracted items classed as “not reported” or “unclear”, or unexpected findings. If an article described multiple models, separate data extraction was carried out for each model.

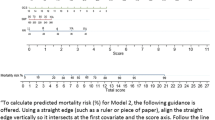

Descriptive analyses

Results were summarised using descriptive statistics. A formal meta-analysis was not planned as it was envisaged that the studies would be too heterogeneous, and a narrative synthesis was undertaken. Discrimination was assessed by the area under the receiver operating characteristic curve (AUROC) when reported [24]. Discrimination was considered excellent, very good, good, moderate or poor with AUROC values of 0.9–0.99, 0.8–0.89, 0.7–0.79, 0.6–0.69 and < 0.6, respectively [25, 26]. Calibration was assessed by the Hosmer–Lemeshow C statistic (significant departures from perfect calibration were inferred when p values were less than 0.05 [24, 26]). Accuracy (the proportion of true positives and true negatives among all evaluated cases [27]) was also considered.
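As an illustration of the measures defined above, the following minimal sketch computes an AUROC from predicted risks and observed outcomes, maps it to the discrimination bands used in this review, and computes accuracy. The function names, the 0.5 risk cut-off for accuracy and the example data are assumptions made for illustration; they are not taken from any of the included studies.

```python
from typing import Sequence


def auroc(risks: Sequence[float], died: Sequence[int]) -> float:
    """Probability that a randomly chosen non-survivor has a higher predicted
    risk than a randomly chosen survivor (ties count as one half)."""
    pos = [r for r, d in zip(risks, died) if d == 1]
    neg = [r for r, d in zip(risks, died) if d == 0]
    if not pos or not neg:
        raise ValueError("need at least one survivor and one non-survivor")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def discrimination_band(auc: float) -> str:
    """Map an AUROC to the bands used in this review.
    Assumption: a value of exactly 0.6 is treated as moderate."""
    if auc >= 0.9:
        return "excellent"
    if auc >= 0.8:
        return "very good"
    if auc >= 0.7:
        return "good"
    if auc >= 0.6:
        return "moderate"
    return "poor"


def accuracy(risks: Sequence[float], died: Sequence[int], cutoff: float = 0.5) -> float:
    """Proportion of true positives and true negatives among all cases.
    The cutoff of 0.5 is an illustrative assumption."""
    correct = sum((r >= cutoff) == bool(d) for r, d in zip(risks, died))
    return correct / len(died)


# Hypothetical predicted risks and outcomes (1 = died, 0 = survived).
example_risks = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2, 0.6, 0.1]
example_died = [1, 1, 0, 1, 0, 0, 0, 0]
example_auc = auroc(example_risks, example_died)
print(round(example_auc, 3), discrimination_band(example_auc))
print(accuracy(example_risks, example_died))
```

Note that accuracy depends on the chosen cut-off, whereas the AUROC summarises performance across all possible cut-offs.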

Results

Study characteristics

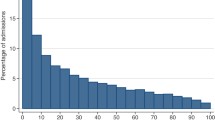

Of the 2233 studies obtained from PubMed searches, 473 were searched and 43 met the inclusion criteria. Seven further studies were included after cross-checking the reference lists of the selected studies (Fig. 1). Fifty studies met the review criteria and were selected for analysis.

Quality assessment

Study quality was assessed in accordance with the CHARMS guidelines [23] and is presented as Additional file 2. Variations existed in the conduct and reporting of the studies, especially with regard to inclusion and exclusion criteria, missing value handling, and performance and outcome measures.

Forty-three of the studies were carried out prospectively. The studies were carried out in 19 different LMICs, with the largest number carried out in India (studies = 11, models evaluated = 22), Thailand (studies = 6, models evaluated = 17) and Brazil (studies = 6, models evaluated = 17) (Table 1). Model adjustment was most frequent in India (n = 4 models). Settings, hospital and ICU characteristics are presented in Additional file 2.

Sample sizes ranged from 48 to 5780, and participant ages ranged from 1 month to 100 years (Table 1). Of the 33 studies reporting a lower age limit, 17 reported participants under the age of 18 years (Table 1).

Missing value handling was explicitly mentioned in 17 studies (Table 2). One study reported incomplete data for 26.4% of its patients but did not provide details on how this was handled [28]. Patients were excluded in nine of the studies [28,29,30,31,32,33,34,35,36], normal physiological values were imputed in five studies [37,38,39,40,41] and both exclusion (for missing variables such as chronic health status) and imputation by normal (for missing physiological values) occurred in two studies [42, 43]. No other methods of imputation were described. For the most commonly assessed models (APACHE II, SAPS II and SAPS 3) missing values were mentioned only 34.1%, 31.0% and 42.9% of the time respectively.
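To illustrate why imputing a missing measurement as normal tends to lower a severity score, the following is a minimal sketch of the two approaches described above (normal value imputation and exclusion of incomplete records). The point bands, variable names and normal values are hypothetical and are not the published APACHE, SAPS or MPM weights.

```python
from typing import Callable, Dict, Optional

Record = Dict[str, Optional[float]]


def heart_rate_points(hr: float) -> int:
    # Hypothetical bands, for illustration only.
    if hr < 40 or hr >= 180:
        return 4
    if hr < 55 or hr >= 140:
        return 3
    if hr < 70 or hr >= 110:
        return 2
    return 0


def systolic_bp_points(sbp: float) -> int:
    # Hypothetical bands, for illustration only.
    if sbp < 70:
        return 4
    if sbp >= 200:
        return 3
    if sbp < 90:
        return 2
    return 0


SCORERS: Dict[str, Callable[[float], int]] = {
    "heart_rate": heart_rate_points,
    "systolic_bp": systolic_bp_points,
}
# Assumed "normal" values; each one scores zero points.
NORMAL: Dict[str, float] = {"heart_rate": 80.0, "systolic_bp": 120.0}


def score_with_normal_imputation(record: Record) -> int:
    """Replace each missing value with its normal value before scoring."""
    total = 0
    for name, scorer in SCORERS.items():
        value = record.get(name)
        total += scorer(NORMAL[name] if value is None else value)
    return total


def score_complete_case(record: Record) -> Optional[int]:
    """Return None (record excluded) when any predictor is missing."""
    if any(record.get(name) is None for name in SCORERS):
        return None
    return sum(scorer(record[name]) for name, scorer in SCORERS.items())


fully_recorded = {"heart_rate": 150.0, "systolic_bp": 65.0}
bp_not_recorded = {"heart_rate": 150.0, "systolic_bp": None}
print(score_with_normal_imputation(fully_recorded))   # both abnormal values contribute
print(score_with_normal_imputation(bp_not_recorded))  # the missing value contributes 0 points
print(score_complete_case(bp_not_recorded))           # None: the record would be excluded
```

In this sketch the same patient scores lower when the abnormal blood pressure is simply not recorded, which is the mechanism by which normal value imputation can underestimate severity; exclusion avoids this but discards the record entirely.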

Model performance

The 50 studies reported a total of 114 model performance evaluations for nine versions of APACHE, SAPS and MPM as described in the subsection ‘Evaluation of the performance of existing models’. Three of the analysed studies [29, 35, 43] also described the development of five new prediction models in LMIC settings. These five new models are presented separately.

Evaluation of the performance of existing models

Model performance is described in the following in terms of the performance of the individual model evaluations carried out (n = 114).

External evaluation of models (model performance evaluation on a related but different population than the population on which the model has originally been developed [44]) was carried out 108 times as follows: performance of APACHE II was evaluated 36 times, of APACHE III five times, of APACHE IV seven times, of SAPS I twice, of SAPS II 26 times, of SAPS 3 13 times, of MPM I twice, of MPM II 12 times and of MPM III five times (Table 1).

Model adjustment was carried out six times (Table 3): three models were recalibrated using first-level customisation (computing a new logistic coefficient, while maintaining the same variables with the same weights as in the original model); two models were revised by the exclusion and/or substitution of variables; and one evaluation altered the way in which APACHE II was calculated—from the usual manual method to automatic calculation using custom-built software.
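One common way to carry out a first-level customisation is to keep the original score untouched and refit only the logistic equation that converts it to a risk. The sketch below does this by fitting a new intercept and slope on the logit of the original predicted risk using Newton-Raphson. This is an illustrative assumption about the general technique and not necessarily the procedure used in the included study; the function names and example data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def expit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def first_level_customisation(
    orig_risk: Sequence[float], died: Sequence[int], iterations: int = 25
) -> Tuple[float, float]:
    """Fit died ~ expit(a + b * logit(orig_risk)) by Newton-Raphson.
    The variables and weights inside the original score are left unchanged."""
    x: List[float] = [logit(p) for p in orig_risk]
    a, b = 0.0, 1.0  # starting point reproduces the original model
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, died):
            mu = expit(a + b * xi)
            w = mu * (1.0 - mu)
            g0 += yi - mu          # gradient with respect to a
            g1 += (yi - mu) * xi   # gradient with respect to b
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b


# Hypothetical validation data in which the original model underestimates risk.
orig = [0.05, 0.10, 0.10, 0.20, 0.20, 0.30, 0.30, 0.40, 0.50, 0.60]
died = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
a, b = first_level_customisation(orig, died)
recalibrated = [expit(a + b * logit(p)) for p in orig]
print(sum(orig) / len(orig), sum(recalibrated) / len(recalibrated), sum(died) / len(died))
```

Because the recalibrated risk is a monotone transformation of the original risk whenever the fitted slope is positive, the rank order of patients, and hence the AUROC, is unchanged; only calibration is affected. In practice the new coefficients should be estimated on one dataset and checked on an independent one.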

The mortality endpoint assessed for 60 (52.6%) of the performance evaluations was hospital or post-hospital mortality; for 47 (41.2%) evaluations it was ICU mortality and for seven (6.1%) the mortality endpoint was not specified (Table 1).

Ten (6%) model performance evaluations did not report either discrimination or calibration. The methods used for evaluation are presented in Table 4.

Tables 5, 6 and 7 describe the model performance of all versions of APACHE, SAPS and MPM respectively in terms of discrimination, calibration and accuracy.

Discriminatory ability of models

Discrimination was reported for 104 (91.2%) of the evaluated models (Tables 5, 6 and 7). In three evaluations (two studies [45, 46]) it was reported as sensitivity and specificity only. In 101 model performance evaluations, discrimination was reported as the AUROC; in four of these evaluations AUROC was presented as a figure and a numerical value could not be ascertained [47, 48]. Where the AUROC was reported in numerical form (97 model performance evaluations) a confidence interval was only reported in 63 evaluations.

Where the AUROC was reported as a numerical value, 21 evaluations (21.7%) reported excellent discrimination. For all versions of APACHE II, SAPS II, SAPS 3 and MPM II, excellent discrimination was reported in 16.1%, 11.5%, 41.7% and 36.4% of the model evaluations respectively.

Sixty-six (68.0%) model evaluations reported very good or good discrimination; for all versions of APACHE II this was 67.7%, for SAPS II was 80.8%, for SAPS 3 was 58.3% and for MPM II it was 45.5%. Poor discrimination was reported on one occasion only, for an evaluation of SAPS II [49].

Excellent discrimination was reported more frequently when hospital mortality (n = 15, 25%) was the outcome in comparison to when it was ICU mortality (n = 6, 10%). Better discrimination was reported with normal value imputation (n = 4, 25% excellent and n = 9, 56.25% very good) than with exclusion (n = 1, 8.33% excellent and n = 3, 25.0% very good) or where missing values were not reported (n = 16, 19.0% excellent and n = 32, 38.1% very good). Discrimination was better for all models with scores calculated further into the ICU stay when compared with those calculated earlier on [32, 48, 50].

Four (n = 2 studies) of the six evaluations with model adjustments compared them to the original model (Table 3). However, an independent validation set was employed in only one study (three validations), where the models were recalibrated [51]. For all three models (APACHE II, SAPS II and SAPS 3), recalibration resulted in the improvement of previously poor calibration, and discrimination, which was already excellent, remained the same.

Ability of models to calibrate

Only 82 (71.9%) evaluations reported calibration (Tables 5, 6 and 7). The Hosmer–Lemeshow test was reported for both C and H statistics 17 (20.7%) times, for C statistic only 21 (25.6%) times, for H statistic only nine (10.9%) times and without further detail 35 (42.7%) times.
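For readers less familiar with the test, the following minimal sketch computes the Hosmer–Lemeshow C statistic, which groups patients into equal-sized groups ordered by predicted risk (the H statistic instead uses fixed risk intervals and is not shown). The function names and example data are hypothetical, and the use of groups minus 2 degrees of freedom is an assumption; some authors use a different number of degrees of freedom for external validation.

```python
import math
from typing import Sequence, Tuple


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable.
    Closed form that is valid for positive even df only."""
    if df <= 0 or df % 2:
        raise ValueError("closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def hosmer_lemeshow_c(
    risks: Sequence[float], died: Sequence[int], groups: int = 10
) -> Tuple[float, float]:
    """Return (C statistic, p value) using equal-sized groups ordered by risk."""
    order = sorted(range(len(risks)), key=lambda i: risks[i])
    n = len(order)
    stat = 0.0
    for g in range(groups):
        idx = order[g * n // groups:(g + 1) * n // groups]
        size = len(idx)
        expected = sum(risks[i] for i in idx)
        observed = sum(died[i] for i in idx)
        stat += (observed - expected) ** 2 / (expected * (1.0 - expected / size))
    return stat, chi2_sf_even_df(stat, groups - 2)


# Hypothetical cohort: ten groups of ten patients whose observed deaths
# match the predicted risk exactly.
levels = [0.1, 0.2, 0.3, 0.4, 0.5, 0.5, 0.6, 0.7, 0.8, 0.9]
risks = [r for r in levels for _ in range(10)]
died = [1 if j < round(r * 10) else 0 for r in levels for j in range(10)]

stat_ok, p_ok = hosmer_lemeshow_c(risks, died)
# Same outcomes, but every predicted risk halved (systematic underestimation).
stat_low, p_low = hosmer_lemeshow_c([r / 2 for r in risks], died)
print(stat_ok, p_ok)
print(stat_low, p_low)
```

A p value above 0.05 means only that a departure from perfect calibration was not detected; with few patients per group the test has limited power to detect one.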

A p value greater than 0.05 for the Hosmer–Lemeshow statistic was reported by 49 (59.8%) evaluations that reported calibration. For all versions of APACHE II, SAPS II, SAPS 3 and MPM II, p > 0.05 was reported in 60.9%, 59%, 66.7% and 50% of model performance evaluations respectively.

Ten evaluations that reported excellent discrimination also reported good calibration. Of these, three were for first-level customisations of APACHE II, SAPS II and SAPS 3 (calibration resulted in p < 0.05 for the Hosmer–Lemeshow statistic when the non-customised model was used) [51]. The other evaluations that reported excellent discrimination and good calibration were carried out in three studies; Juneja et al. (APACHE III, APACHE IV, MPM II (initial), MPM III (initial) and SAPS 3) [1], Sekulic et al. (MPM II at 7 days) [48] and Xing et al. (SAPS 3) [52].

A p value greater than 0.05 was reported more frequently when ICU mortality was the outcome (n = 27, 77.1%) than when hospital mortality was the outcome (n = 13, 27.7%). A p value greater than 0.05 for the Hosmer–Lemeshow statistic was reported in 100% of evaluations in which patients with missing values were excluded (n = 3), in 40.9% of those using normal value imputation (n = 9) and in 54.7% of those in which missing value handling was not reported (n = 29).

Accuracy of models

Accuracy was reported for 29 evaluations (25.0%) and ranged from 55.20 to 89.7% (Tables 5, 6 and 7).

New model development

Three studies reported five new model developments [35, 36, 43]. These are described in Table 8. For all five new models, the AUROC was higher than that obtained with the original prognostic scoring system on which it was based. A good calibration was reported for both R-MPM and Simplified R-MPM; a poor calibration was reported for MPM-III. A poor calibration was reported for both ANN 22 and ANN 15 as well as for the original APACHE II on which they were based.

Discussion

This systematic review of critical care prognostic models in LMICs reports good to excellent discrimination between survivors and non-survivors of ICU admission in 88.9% of evaluations and good calibration in 58.3% of those reporting calibration. In keeping with findings in HICs [3, 53], this review found good discrimination to be more frequently reported than good calibration; excellent discrimination and good calibration were rarely (11.9%) reported together in the same evaluation [1, 48, 51, 52]. Three of the 10 evaluations reporting both excellent discrimination and good calibration were from recalibrated models [51], and in two [48] the sample size was small (n = 60). It is known that a calibration measure such as the Hosmer–Lemeshow goodness-of-fit test might demonstrate high p values in these circumstances, simply as a consequence of the test having lower power and not necessarily as an indication of a good fit [53].

Differences in predictors in the different models (e.g. acute diagnosis is a variable in APACHE II but not SAPS II) and the differences in the datasets used in the various studies may have contributed to the discrepancies seen in performances of the models. Three major findings, with special relevance to the LMIC settings, limit generalisability and can affect performance: post-ICU outcomes were not available for 40.5% where ICU mortality was the outcome; only 44.8% reported a lower age limit, with 55.8% of these including patients who were aged younger than 18 years; and missing values and their handling were infrequently reported. The original models being evaluated were developed to assess hospital mortality. Therefore, the lack of post-ICU outcome may impact on their performance, particularly as discharge from the ICU (especially in these settings) may be influenced by non-clinical discharge decisions such as shortage of ICU beds. However, post-ICU follow-up may not always be feasible in these settings due to the lack of established follow-up systems (e.g. medical registries, electronic records). Patient age may affect model performance and could be another cause for the heterogeneity seen between studies. The lower age limit for admission to adult ICUs varies between settings, perhaps resulting in the admission of paediatric patients into adult ICUs (and their subsequent inclusion in the datasets for the validation of adult prognostic models). Twenty-three studies did not report a lower age limit for patient admission and 17 studies included patients younger than the age of 18 years; the variation in both the age criteria for inclusion and their reporting makes a complete exclusion of paediatric patients from this review of adult prognostic models unfeasible. Missing value handling, which can lead to bias and thus influence model performance especially in LMIC settings [53], was reported only infrequently.
Where reported, imputation by normal values (which is less justifiable in LMIC settings [9]) and exclusion of incomplete records (leading to inefficient use of the dataset) were the methods frequently utilised. Research into the utility of other techniques of imputation (e.g. multiple imputation) for missing values may reduce bias and increase the interpretability of model performance. However, missing values in prognostic models in LMIC settings are likely to be a persistent problem. Some of these difficulties may be alleviated by increasing efforts to improve the availability and recording of measures such as GCS and saturations or by effecting substitutions for the measurements that are more inaccessible in LMIC settings (e.g. urea for creatinine and saturations for PaO2). Although two studies in this review reported the exclusion of variables [30, 50], the effect of the modifications could not be ascertained: in one case, no comparison was made with the original APACHE II model [30]; and in the second, discrimination was not reported for the simplified version of SAPS II [50]; calibration was not reported for either of these models.

Validation studies of prognostic models in LMIC settings are becoming more common; 16 of the 50 studies included were published in 2015, 2016 or 2017 and additional studies, for example Moralez et al. in Brazil [54] and Haniffa et al. [9] in Sri Lanka, have been published or are awaiting publication subsequent to the literature search for this review. Consequently, it is important for investigators to adhere to reporting standards such as CHARMS, especially with regard to performance measures, outcomes and missing values, to enable better interpretation.

For a critical care prognostic model to be effective it needs to be calibrated to the target setting and have an acceptable data collection burden. However, in this review, first-level customisation was carried out in only one study [51]; the calibration of APACHE II, SAPS II and SAPS 3 models improved from poor to good and the discrimination remained excellent before and after recalibration. In HICs, medical registries enable standardised, centralised, often automated, electronic data gathering, which can then be validated, thus reducing the burden of data collection. These registries include mechanisms for providing feedback on critical care unit performance and also enable regular recalibration of prognostic models, thus minimising the incorrect estimation of predicted mortalities due to changes in case mix and treatment. The absence of such registries in LMIC settings, with important exceptions (e.g. in Brazil, Malaysia and Sri Lanka), is a significant barrier to the validation and recalibration of existing models, and to the development of models tailored to these settings. Accordingly, none of the validation studies included in this review is an output from a medical registry, no studies reported on model performance from different time points in the same setting and only three studies were conducted in two or more hospitals [41, 43, 55].

The use of prognostic models in practice is thought to be influenced by the complexity of the model, the format of the model, the ease of use and the perceived relevance of the model to the user [56]. The development of models with fewer and more commonly available measures perhaps in conjunction with medical registries promoting research may also be effective in improving mortality prediction in these settings; for example, the simplified Rwanda MPM [43] and TropICS [57]. Introducing simple prognostic models like those already mentioned and emphasising their usefulness by providing output that is relevant to clinicians, administrators and patients is therefore more likely to result in the collection of required data and their application in a clinical context.

ICU risk prediction models need to exhibit good calibration before they can be used for quality improvement initiatives [58, 59]. Setting-relevant models such as TropICS [57], which are well calibrated, can be used for stratification of critically ill patients according to severity, which is a pre-requisite for impact assessment of training and other quality improvement initiatives. However, models that show poor calibration but have a good discriminatory ability may still be of benefit if their intended use is for identifying high-risk patients for diagnostic testing or therapy and/or for inclusion criteria or covariate adjustment in a randomised controlled trial [58, 59].

Limitations

This review was limited to a single database (PubMed). There is no MeSH term for LMIC (non-HIC) and hence a hand search strategy was deployed. No attempt was made to distinguish between upper and lower middle-income countries, which are very heterogeneous in terms of provision, resources and access to healthcare. The review was intended to be for adult prognostic models used only in adult patients; however, due to the manner in which the studies were reported it was not possible to exclude paediatric patients.

Conclusion

Performance of mortality risk prediction models for ICU patients in LMICs is at most moderate, especially with limitations in calibration. This necessitates continued efforts to develop and validate LMIC models with readily available prognostic variables, perhaps aided by medical registries. Robust interpretations of their applicability are currently hampered by poor adherence to reporting guidelines, especially when reporting missing value handling.

References

Juneja D, Singh O, Nasa P, et al. Comparison of newer scoring systems with the conventional scoring systems in general intensive care population. Minerva Anestesiol. 2012;78(2):194–200. https://www.minervamedica.it/en/journals/minerva-anestesiologica/article.php?cod=R02Y2012N02A0194. Accessed 4 Oct 2016.

Rapsang AG, Shyam DC. Scoring systems in the intensive care unit: a compendium. Indian J Crit Care Med. 2014;18(4):220–8. https://doi.org/10.4103/0972-5229.130573.

Vincent J-L, Moreno R. Clinical review: scoring systems in the critically ill. Crit Care. 2010;14(2):207. https://doi.org/10.1186/cc8204.

Knaus WA, Zimmerman JE, Wagner DP, et al. APACHE-acute physiology and chronic health evaluation: a physiologically based classification system. Crit Care Med. 1981;9(8):591–7. http://journals.lww.com/ccmjournal/Abstract/1981/08000/APACHE_acute_physiology_and_chronic_health.8.aspx. Accessed 4 Oct 2016.

Grissom CK, Brown SM, Kuttler KG, et al. A modified sequential organ failure assessment score for critical care triage. Disaster Med Public Health Prep. 2010;4(4):277–84. https://doi.org/10.1001/dmp.2010.40.

Le Gall J-R, Lemeshow S, Saulnier F, et al. A New Simplified Acute Physiology Score (SAPS II) based on a European/North American Multicenter Study. JAMA J Am Med Assoc. 1993;270(24):2957. https://doi.org/10.1001/jama.1993.03510240069035.

Aggarwal AN, Sarkar P, Gupta D, et al. Performance of standard severity scoring systems for outcome prediction in patients admitted to a respiratory intensive care unit in North India. Respirology. 2006;11(2):196–204. https://doi.org/10.1111/j.1440-1843.2006.00828.x.

Namendys-Silva SA, Silva-Medina MA, Vásquez-Barahona GM, et al. Application of a modified sequential organ failure assessment score to critically ill patients. Braz J Med Biol Res. 2013;46(2):186–93. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3854366/. Accessed 10 Aug 2016.

Haniffa R, De Silva AP, Weerathunga P, et al. Applicability of the APACHE II model to a lower middle income country. J Crit Care. 2017;42:178–83. http://www.jccjournal.org/article/S0883-9441(17)31025-0/fulltext. Accessed 13 July 2017.

Knaus WA, Draper EA, Wagner DP, et al. Prognosis in acute organ-system failure. Ann Surg. 1985;202(6):685–93. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1250999/. Accessed 27 Sept 2016.

Cullen DJ, Civetta JM, Briggs BA, et al. Therapeutic intervention scoring system: a method for quantitative comparison of patient care. Crit Care Med. 1974;2(2):57–60. http://journals.lww.com/ccmjournal/Abstract/1974/03000/Therapeutic_intervention_scoring_system__a_method.1.aspx. Accessed 12 Dec 2016.

Lemeshow S, Teres D, Klar J, et al. Mortality Probability Models (MPM II) based on an international cohort of intensive care unit patients. JAMA. 1993;270(20):2478–86. https://jamanetwork.com/journals/jama/article-abstract/409377?redirect=true. Accessed 12 Dec 2016.

Vincent J-L, Moreno R, Takala J, et al. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. On behalf of the Working Group on Sepsis-Related Problems of the European Society of Intensive Care Medicine. Intensive Care Med. 1996;22(7):707–10. https://doi.org/10.1007/BF01709751.

Steyerberg EW, Vickers AJ, Cook NR, et al. Assessing the performance of prediction models: a framework for some traditional and novel measures. Epidemiology. 2010;21(1):128–38. https://doi.org/10.1097/EDE.0b013e3181c30fb2.

Haniffa R, De Silva AP, Iddagoda S, et al. A cross-sectional survey of critical care services in Sri Lanka: a lower middle-income country. J Crit Care. 2014;29(5):764–8. https://doi.org/10.1016/j.jcrc.2014.04.021.

Haniffa R, De Silva AP. National Intensive Care Surveillance. A Survey Report on Intensive Care Units of the Government Hospitals in Sri Lanka. Colombo: National Intensive Care Surveillance Unit Division of Deputy Director General (Medical Services); 2012. ISBN 978-955-0505-25-8.

Adhikari NKJ, Rubenfeld GD. Worldwide demand for critical care. Curr Opin Crit Care. 2011;17(6):620–5. https://doi.org/10.1097/MCC.0b013e32834cd39c.

Moons KGM, Altman DG, Vergouwe Y, et al. Prognosis and prognostic research: application and impact of prognostic models in clinical practice. BMJ. 2009;338:b606.

Rivera-Fernández R, Vázquez-Mata G, Bravo M, et al. The Apache III prognostic system: customized mortality predictions for Spanish ICU patients. Intensive Care Med. 1998;24(6):574–81. https://link.springer.com/article/10.1007/s001340050618. Accessed 5 Oct 2016.

Sakr Y, Krauss C, Amaral ACKB, et al. Comparison of the performance of SAPS II, SAPS 3, APACHE II, and their customized prognostic models in a surgical intensive care unit. Br J Anaesth. 2008;101(6):798–803. https://doi.org/10.1093/bja/aen291.

World Bank. Low and middle income data. 2017. http://data.worldbank.org/income-level/low-and-middle-income?view=chart. Accessed 13 July 2017.

Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Available from http://handbook-5-1.cochrane.org/.

Moons KGM, de Groot JAH, Bouwmeester W, et al. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS Checklist. PLoS Med. 2014;11(10):e1001744. https://doi.org/10.1371/journal.pmed.1001744.

Ridley S. Severity of illness scoring systems and performance appraisal. Anaesthesia. 1998;53(12):1185–94. https://doi.org/10.1046/j.1365-2044.1998.00615.x.

Bouch DC, Thompson JP. Severity scoring systems in the critically ill. Contin Educ Anaesthesia, Crit Care Pain. 2008;8(5):181–5. https://doi.org/10.1093/bjaceaccp/mkn033.

Vincent JL. Severity of illness scoring system. In: Roberts PR, editor. Comprehensive Critical Care: Adult. 2012. p. 875–84. Retrieved from https://med.uth.edu/anesthesiology/files/2015/05/Chapter-47-Severity-of-Illness-Scoring-Systems.pdf.

Baratloo A, Hosseini M, Negida A, El Ashal G. Part 1: Simple definition and calculation of accuracy. Sensitivity and specificity. Emergency. 2015;3(2):48–9.

Yamin S, Vaswani AK, Afreedi M. Predictive efficasy of APACHE IV at ICUs of CHK. Pakistan J Chest Med. 2011;17(1):1–14. http://www.pjcm.net/index.php/pjcm/article/view/132/125. Accessed 18 May 2016.

Ahluwalia G, Pande JN, Sharma SK. Prognostic scoring for critically ill hospitalized patients. Indian J Chest Dis Allied Sci. 1999;41(4):201–6. http://www.ncbi.nlm.nih.gov/pubmed/10661007. Accessed 7 Nov 2015.

Eapen CE, Thomas K, Cherian AM, et al. Predictors of mortality in a medical intensive care unit. Natl Med J India. 1997;10(6):270–2. http://archive.nmji.in/approval/archive/Volume-10/issue-6/original-articles-2.pdf.

Godinjak AG, Iglica A, Rama A, et al. Predictive value of SAPS II and APACHE II scoring systems for patient outcome in medical intensive care unit. Acta Med Acad. 2016;45(2):89–95. https://doi.org/10.5644/ama2006-124.165.

Khan M, Maitree P, Radhika A. Evaluation and comparison of the three scoring systems at 24 and 48 h of admission for prediction of mortality in an Indian ICU: a prospective cohort study. Ain-Shams J Anaesthesiol. 2015;8(3):294–300. https://doi.org/10.4103/1687-7934.159003.

Naqvi IH, Mahmood K, Ziaullaha S, et al. Better prognostic marker in ICU—APACHE II, SOFA or SAP II! Pak J Med Sci. 2016;32(5):PMC5103123. https://doi.org/10.12669/pjms.325.10080.

Naved SA, Siddiqui S, Khan FH. APACHE-II score correlation with mortality and length of stay in an intensive care unit. J Coll Physicians Surg Pakistan. 2011;21(1):4–8. https://doi.org/01.2011/JCPSP.0408.

Nimgaonkar A, Karnad DR, Sudarshan S, et al. Prediction of mortality in an Indian intensive care unit. Comparison between APACHE II. Intensive Care Med. 2004;30(2):248–53. https://doi.org/10.1007/s00134-003-2105-4.

Turner JS, Potgieter PD, Linton DM. Systems for scoring severity of illness in intensive care. S Afr Med J. 1989;76(1):17–20. http://archive.samj.org.za/1989%20VOL%20LXXVI%20Jul-Dec/Articles/07%20July/1.7%20SYSTEMS%20FOR%20SCORING%20SEVERITY%20OF%20ILLNESS%20IN%20THE%20RSA.%20J.A.%20Frean,%20W.F.%20Carman,%20H.H.%20Crewe-Brown.pdf. Accessed 7 Nov 2015.

Faruq MO, Mahmud MR, Begum T, et al. Comparison of severity systems APACHE II and SAPS II in critically ill patients. Bangladesh Crit Care J. 2013;1(1):27–32. http://dx.doi.org/10.3329/bccj.v1i1.14362.

Khwannimit B, Geater A. A comparison of APACHE II and SAPS II scoring systems in predicting hospital mortality in Thai adult intensive care units. J Med Assoc Thai. 2007;90(4):643–52. http://www.jmatonline.com/index.php/jmat/article/view/8591. Accessed 7 Nov 2015.

Soares M, Salluh JIF. Validation of the SAPS 3 admission prognostic model in patients with cancer in need of intensive care. Intensive Care Med. 2006;32(11):1839–44. https://doi.org/10.1007/s00134-006-0374-4.

Soares M, Fontes F, Dantas J, et al. Performance of six severity-of-illness scores in cancer patients requiring admission to the intensive care unit: a prospective observational study. Crit Care. 2004;8(4):R194–203. https://doi.org/10.1186/cc2870.

Soares M, Silva UVA, Teles JMM, et al. Validation of four prognostic scores in patients with cancer admitted to Brazilian intensive care units: results from a prospective multicenter study. Intensive Care Med. 2010;36(7):1188–95. https://doi.org/10.1007/s00134-010-1807-7.

Nassar AP, Mocelin AO, Nunes ALB, et al. Caution when using prognostic models: a prospective comparison of 3 recent prognostic models. J Crit Care. 2012;27(4):423.e1–7. https://doi.org/10.1016/j.jcrc.2011.08.016.

Riviello ED, Kiviri W, Fowler RA, et al. Predicting mortality in low-income country ICUs: The Rwanda Mortality Probability Model (R-MPM). Lazzeri C, ed. PLoS One. 2016;11(5):e0155858. https://doi.org/10.1371/journal.pone.0155858.

Steyerberg EW, Bleeker SA, Moll HA, et al. Internal and external validation of predictive models: a simulation study of bias and precision in small samples. J Clin Epidemiol. 2003;56(5):441–7. http://www.jclinepi.com/article/S0895-4356(03)00047-7/fulltext. Accessed 25 Oct 2017.

Kiatboonsri S, Charoenpan P. The severity of disease measurements among Thai medical intensive care unit patients. Southeast Asian J Trop Med Public Health. 1995;26(1):57–65. http://www.tm.mahidol.ac.th/seameo/1995-26-1/1995-26-1-57.pdf. Accessed 6 Mar 2016.

Mohan A, Shrestha P, Guleria R, et al. Development of a mortality prediction formula due to sepsis/severe sepsis in a medical intensive care unit. Lung India. 2015;32(4):313–19. https://doi.org/10.4103/0970-2113.159533.

Evran T, Serin S, Gürses E, et al. Various scoring systems for predicting mortality in Intensive Care Unit. Niger J Clin Pract. 2016;19(4):530–4. https://doi.org/10.4103/1119-3077.183307.

Sekulic AD, Trpkovic SV, Pavlovic AP, et al. Scoring systems in assessing survival of critically ill ICU patients. Med Sci Monit. 2015;21:2621–9. https://doi.org/10.12659/MSM.894153.

Galal I, Kassem E, Mansour M. Study of the role of different severity scores in respiratory ICU. Egypt J Bronchol. 2013;7(2):55. https://doi.org/10.4103/1687-8426.123995.

Zhao X-X, Su Y-Y, Wang M, et al. Evaluation of neuro-intensive care unit performance in China: predicting outcomes of Simplified Acute Physiology Score II or Glasgow Coma Scale. Chin Med J (Engl). 2013;126(6):1132–7. http://124.205.33.103:81/ch/reader/view_abstract.aspx?file_no=12-2886&flag=1. Accessed 7 Nov 2015.

Khwannimit B, Bhurayanontachai R. A comparison of the performance of Simplified Acute Physiology Score 3 with old standard severity scores and customized scores in a mixed medical-coronary care unit. Minerva Anestesiol. 2011;77(3):305–12. https://www.minervamedica.it/en/journals/minerva-anestesiologica/article.php?cod=R02Y2011N03A0305. Accessed 6 Mar 2016.

Xing X, Gao Y, Wang H, et al. Performance of three prognostic models in patients with cancer in need of intensive care in a medical center in China. PLoS One. 2015;10(6):e0131329. https://doi.org/10.1371/journal.pone.0131329.

Zhu BP, Lemeshow S, Hosmer DW, et al. Factors affecting the performance of the models in the Mortality Probability Model II system and strategies of customization: a simulation study. Crit Care Med. 1996;24(1):57–63.

Moralez GM, Rabello LSCF, Lisboa TC, et al. External validation of SAPS 3 and MPM0-III scores in 48,816 patients from 72 Brazilian ICUs. Ann Intensive Care. 2017;7:53. https://doi.org/10.1186/s13613-017-0276-3.

Silva Junior JM, Malbouisson LMS, Nuevo HL, et al. Aplicabilidade do escore fisiológico agudo simplificado (SAPS 3) em hospitais brasileiros. Rev Bras Anestesiol. 2010;60(1):20–31. https://doi.org/10.1590/S0034-70942010000100003.

Hemingway H, Croft P, Perel P, et al. Prognosis research strategy (PROGRESS) 1: a framework for researching clinical outcomes. BMJ. 2013;346:e5595. https://doi.org/10.1136/bmj.e5595.

Haniffa R, Mukaka M, Munasinghe SB, et al. Simplified prognostic model for critically ill patients in resource limited settings in South Asia. Crit Care. 2017;21:250. https://doi.org/10.1186/s13054-017-1843-6.

Nassar AP, Malbouisson LMS, Moreno R. Evaluation of Simplified Acute Physiology Score 3 performance: a systematic review of external validation studies. Crit Care. 2014;18(3):R117. https://doi.org/10.1186/cc13911.

Steyerberg EW, Moons KGM, van der Windt DA, et al. Prognosis Research Strategy (PROGRESS) 3: prognostic model research. PLoS Med. 2013;10(2):e1001381. https://doi.org/10.1371/journal.pmed.1001381.

Abhinandan KS, Vedavathi R. Usefulness of Sequential Organ Failure Assessment (SOFA) and Acute Physiological and Chronic Health Evaluation II (APACHE II) score in analysing patients with multiple organ dysfunction syndrome in sepsis. J Evol Med Dent Sci. 2013;2(49):9591–605. https://jemds.com/data_pdf/dr%20abhinandan%20-.pdf. Accessed 1 Apr 2017.

Liu X, Shen Y, Li Z, et al. Prognostic significance of APACHE II score and plasma suPAR in Chinese patients with sepsis: a prospective observational study. BMC Anesthesiol. 2016;16:46. https://doi.org/10.1186/s12871-016-0212-3.

Nair R, Bhandary NM, D’Souza AD. Initial Sequential Organ Failure Assessment score versus Simplified Acute Physiology score to analyze multiple organ dysfunction in infectious diseases in intensive care unit. Indian J Crit Care Med. 2016;20(4):210–5. https://doi.org/10.4103/0972-5229.180041.

Celik S, Sahin D, Korkmaz C, et al. Potential risk factors for patient mortality during admission to the intensive care units. Saudi Med J. 2014;35(2):159–64. https://www.smj.org.sa/index.php/smj/article/view/2805. Accessed 6 Mar 2016.

Chang L, Horng C-F, Huang Y-CT, et al. Prognostic accuracy of Acute Physiology and Chronic Health Evaluation II scores in critically ill cancer patients. Am J Crit Care. 2006;15(1):47–53. http://ajcc.aacnjournals.org/content/15/1/47.long. Accessed 1 Apr 2017.

Chiavone PA, Rasslan S. Influence of time elapsed from end of emergency surgery until admission to intensive care unit, on Acute Physiology and Chronic Health Evaluation II (APACHE II) prediction and patient mortality rate. Sao Paulo Med J. 2005;123(4):167–74. https://doi.org//S1516-31802005000400003.

Nouira S, Belghith M, Elatrous S, et al. Predictive value of severity scoring systems: comparison of four models in Tunisian adult intensive care units. Crit Care Med. 1998;26(5):852–9. http://journals.lww.com/ccmjournal/Abstract/1998/05000/Predictive_value_of_severity_scoring_systems_.16.aspx. Accessed 6 Mar 2016.

Fadaizadeh L, Tamadon R, Saeedfar K, et al. Performance assessment of Acute Physiology and Chronic Health Evaluation II and Simplified Acute Physiology Score II in a referral respiratory intensive care unit in Iran. Acta Anaesthesiol Taiwanica. 2012;50(2):59–62. https://doi.org/10.1016/j.aat.2012.05.004.

Ratanarat R, Thanakittiwirun M, Vilaichone W, et al. Prediction of mortality by using the standard scoring systems in a medical intensive care unit in Thailand. J Med Assoc Thai. 2005;88(7):949–55. https://pdfs.semanticscholar.org/426c/15599cf5b85adcb291dbae9e60408dbe743a.pdf. Accessed 7 Nov 2015.

Sathe PM, Bapat SN. Assessment of performance and utility of mortality prediction models in a single Indian mixed tertiary intensive care unit. Int J Crit Illn Inj Sci. 2014;4(1):29–34. https://doi.org/10.4103/2229-5151.128010.

Gilani MT, Razavi M, Azad A. A comparison of Simplified Acute Physiology Score II, Acute Physiology and Chronic Health Evaluation II and Acute Physiology and Chronic Health Evaluation III scoring system in predicting mortality and length of stay at surgical intensive care unit. Niger Med J. 2014;55(2):144–7. https://doi.org/10.4103/0300-1652.129651.

Shoukat H, Muhammad Y, Gondal KM, et al. Mortality prediction in patients admitted in surgical intensive care unit by using APACHE IV. J Coll Physicians Surg Pak. 2016;26(11):877–80. https://doi.org/2468.

Gupta R, Arora VK. Performance evaluation of APACHE II score for an Indian patient with respiratory problems. Indian J Med Res. 2004;119(6):273–82. http://www.ijmr.in/CurrentTopicView.aspx?year=Indian%20J%20Med%20Res%20119,%20June%202004,%20pp%20273-282$Original%20Article. Accessed 7 Nov 2015.

Shrestha GS, Gurung R, Amatya R. Comparison of Acute Physiology, Age, Chronic Health Evaluation III score with initial Sequential Organ Failure Assessment score to predict ICU mortality. Nepal Med Coll J. 2011;13(1):50–4. http://nmcth.edu/images/gallery/Editorial/3EFLlgs_shrestha.pdf. Accessed 6 Mar 2016.

Haidri FR, Rizvi N, Motiani B. Role of APACHE score in predicting mortality in chest ICU. J Pak Med Assoc. 2011;61(6):589–92. http://jpma.org.pk/full_article_text.php?article_id=2828. Accessed 6 Mar 2016.

Halim DA, Murni TW, Redjeki IS. Comparison of APACHE II, SOFA, and Modified SOFA Scores in Predicting Mortality of Surgical Patients in Intensive Care Unit at Dr. Hasan Sadikin General Hospital. Crit Care Shock. 2009;12(4):157–69. http://criticalcareshock.org/files/Original-Comparison-of-Apache-II-SOFA-and-Modified-SOFA-Scores-in-Predicting-Mortality-of-Surgical-Patients-in-Intensive-Care-Unit-at-Dr.-Hasan-Sadikin-General-Hospital1.pdf.

Hamza A, Hammed L, Abulmagd M, et al. Evaluation of general ICU outcome prediction using different scoring systems. Med J Cairo Univ. 2009;77(1):27–35. http://medicaljournalofcairouniversity.net/Home/images/pdf/2009/march/35.pdf. Accessed 8 Nov 2016.

Hashmi M, Asghar A, Shamim F, et al. Validation of acute physiologic and chronic health evaluation II scoring system software developed at The Aga Khan University, Pakistan. Saudi J Anaesth. 2016;10(1):45. https://doi.org/10.4103/1658-354X.169474.

Hernandez AMR, Palo JEM, Sakr Y, et al. Performance of the SAPS 3 admission score as a predictor of ICU mortality in a Philippine private tertiary medical center intensive care unit. J Intensive Care. 2014;2(1):29. https://doi.org/10.1186/2052-0492-2-29.

Sutheechet N. Assessment and comparison of the performance of SAPS II and MPM 24 II scoring systems in predicting hospital mortality in intensive care units. Bull Dep Med Serv Thail. 2009;34(11):641–50. http://www.dms.moph.go.th/dmsweb/dmsweb_v2_2/content/org/journal/data/2009-11_p641-650.pdf. Accessed 11 Aug 2016.

Hosseini M, Ramazani J. Comparison of acute physiology and chronic health evaluation II and Glasgow Coma Score in predicting the outcomes of Post Anesthesia Care Unit’s patients. Saudi J Anaesth. 2015;9(2):136–41. https://doi.org/10.4103/1658-354X.152839.

Teoh GS, Mah KK, Abd Majid S, et al. APACHE II: preliminary report on 100 intensive care unit cases in University Hospital, Kuala Lumpur. Med J Malaysia. 1991;46(1):72–81. http://www.e-mjm.org/1991/v46n1/APACHE_II.pdf. Accessed 6 Mar 2016.

Wilairatana P, Noan NS, Chinprasatsak S, et al. Scoring systems for predicting outcomes of critically ill patients in northeastern Thailand. Southeast Asian J Trop Med Public Health. 1995;26(1):66–72. http://www.tm.mahidol.ac.th/seameo/1995-26-1/1995-26-1-66.pdf. Accessed 7 Nov 2015.

Author information

Contributions

RH and AMD conceived the study and developed the study design. RH and II performed the primary study search, extracted data, carried out statistical analysis, drafted the manuscript, and revised the manuscript. NFDK, AMD and APDS improved the idea and design, and revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that there are no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

A table presenting the search terms used. (XLSX 27 kb)

Additional file 2:

A table presenting the checklist for critical appraisal and data extraction for systematic reviews of prediction modelling studies. (XLSX 41 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Haniffa, R., Isaam, I., De Silva, A.P. et al. Performance of critical care prognostic scoring systems in low and middle-income countries: a systematic review. Crit Care 22, 18 (2018). https://doi.org/10.1186/s13054-017-1930-8

DOI: https://doi.org/10.1186/s13054-017-1930-8