Abstract

Background

Adequate training and preparation of medical first responders (MFRs) are essential for optimal performance in highly demanding situations like disasters (e.g., mass accidents, natural catastrophes). The training needs to be as effective as possible, because precise and effective behavior of MFRs under stress is central to ensuring patients’ survival and recovery. This systematic review offers an overview of scientifically evaluated training methods used to prepare MFRs for disasters. It identifies different effectiveness indicators and provides an additional analysis of how and to what extent the innovative training technologies virtual reality (VR) and mixed reality (MR) are included in disaster training research.

Methods

The systematic review was conducted according to the PRISMA guidelines and focused specifically on (quasi-)experimental studies published between January 2010 and September 2021. The literature search was conducted via Web of Science and PubMed and led to the inclusion of 55 articles.

Results

The search identified several types of training, including traditional (e.g., lectures, real-life scenario training) and technology-based training (e.g., computer-based learning, educational videos). Most trainings consisted of more than one method. The effectiveness of the trainings was mainly assessed through pre-post comparisons of knowledge tests or self-reported measures, although some studies also used behavioral performance measures (e.g., triage accuracy). While all methods demonstrated effectiveness, the literature indicates that technology-based methods often lead to similar or greater training outcomes than traditional trainings. Currently, few studies have systematically evaluated immersive VR and MR training.

Conclusion

To determine the success of a training, proper and scientifically sound evaluation is necessary. Of the effectiveness indicators found, performance assessments in simulated scenarios are closest to the target behavior during real disasters. For valid yet inexpensive evaluations, objectively assessable performance measures, such as accuracy, time, and order of actions, could be used. However, performance assessments have not been applied often. Furthermore, we found that technology-based training methods represent a promising approach to train many MFRs repeatedly and efficiently. These technologies offer great potential to supplement or partially replace traditional training. Further research is needed on those methods that have been underrepresented, especially serious gaming, immersive VR, and MR.

Background

Natural and man-made disasters such as floods, mass-accidents, and terrorist attacks are ubiquitous and cause loss of life, human suffering, and infrastructural damage [1, 2]. They create particularly demanding situations for emergency services, as they are unforeseen and usually sudden events that exceed local capacity and resources to rescue and care [1]. During such disasters, medical first responders (MFRs), who are responsible for the initial prehospital care in medical emergencies, play a key role [3, 4]. However, numerous healthcare professionals, including MFRs, perceive their preparedness for the response to disasters as inadequate [5]. As previous research indicates, a higher training frequency and better training quality are associated with increased disaster preparedness [5]. To enhance the overall quality of MFR training, the aim of this review is to provide an overview of scientifically evaluated training methods and to examine whether certain methods seem to be particularly effective. Furthermore, indicators used to evaluate the training effectiveness will be identified so that future research can be guided by existing training evaluation methods. Finally, the emergence of new, immersive technologies, including virtual (VR) and mixed reality [MR; 6], has led to the development of new training programs which are becoming increasingly accessible to educators in the medical sector [7]. Therefore, we will draw particular attention to the role of immersive technologies by providing an additional analysis of how and to what extent VR and MR specifically are included in current disaster training research.

MFRs typically include paramedics and emergency medical technicians [3], but the term may also refer to physicians, ambulance specialist nurses, and trained volunteers depending on a country’s emergency medical service systems [8, 9]. During disasters, MFRs take on a variety of tasks such as the initial scene evaluation, triage, medical care, and the transport of patients [3]. They have to perform those tasks under stressful and challenging conditions, such as difficult access to the disaster site, multiple injured people and disruption in communication systems [10]. In order for MFRs to adapt to these unusual conditions, they require specifically tailored training.

Effective training involves a systematic and goal-oriented execution of exercises for the acquisition or increase of specific competences and skills [11]. The general idea of training is to challenge the current level of performance (e.g., higher intensity, higher difficulty, new content) without being too overwhelming, so that the trainee can adapt and reach a higher performance level [11,12,13,14,15]. However, training resources, including time, budget, and facilities, are usually limited. Therefore, training methods must be not only effective, but also match the resources of the rescue organization.

Despite the necessity of adequately preparing MFRs for disasters, no systematic and up-to-date overview of scientifically evaluated training methods and their effectiveness exists. Ingrassia and colleagues conducted an internet-based search via Google and Bing and identified several disaster management curricula at a postgraduate level with a large variety of methods, e.g., lectures and discussion-based exercises [16]. The trainings’ effectiveness, however, was not evaluated. Assessing studies published between 2000 and 2005, Williams and colleagues [17] concluded that the available evidence had not been sufficient to determine whether disaster training can effectively increase the knowledge and skills of MFRs and in-hospital staff. Because these findings are derived from studies conducted more than 15 years ago, new insights have most likely emerged and new training methods may have been added following recent technological advances.

Two of those new methods are VR and MR. In VR training, users are placed inside a simulated, artificial, three-dimensional environment in which they can interact with their digital surroundings [6]. VR can either be screen-based using computer monitors or experienced in more immersive forms: through head-mounted displays or dedicated rooms equipped with several large screens or projections on several walls (i.e., CAVE system) [6, 18]. In contrast, MR combines the real and virtual worlds and refers to the whole spectrum between reality and VR. MR, for example, includes augmented reality (AR) in which users see their real surroundings supplemented with virtual objects [6]. A specific application in the medical field may be the visual insertion of patient information during practice. Given the rapid development of immersive technology, this review provides an additional analysis of the role of VR and MR training.

Altogether, the following research questions are addressed:

1. Which current disaster training methods for MFRs have already been scientifically evaluated?
2. Which effectiveness indicators are used to evaluate MFR disaster training methods?
3. Based on the findings of the reviewed studies, which methods for MFR disaster training seem to be effective?
4. How and to what extent are VR and MR used to prepare MFRs for disasters?

Methods

The preregistered (osf.io/yn5v3) systematic literature search was conducted in accordance with the PRISMA guidelines [19].

Search strategy

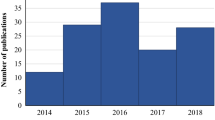

The search strategy was prepared with support of a medical information specialist to ensure the appropriateness of the search terms. Using the search engines Web of Science and PubMed, we applied search terms such as health personnel, training, and disaster (see Additional file 1 for the search string). To ensure that the results reflect current training methods, the electronic search was limited to studies published between January 2010 and September 2021. A filter limited our results to studies with a full text in English.

Inclusion and exclusion criteria

We included articles that described a training or training session (e.g., drill, lectures, mixed methods training, etc.) conducted to improve the participants’ prehospital disaster response. The training had to address prehospital content, but was allowed to also contain in-hospital topics. Participants had to be MFRs, regardless of whether they were still in training or already had work experience. In addition, to ensure adequate assessments of the effectiveness, we only considered (quasi-)experimental designs in which outcomes were compared to a control or comparison group [i.e., randomized controlled trials (RCTs), non-RCTs and at minimum pre-post testing of the same group; [20, 21]].

We excluded studies that a) did not test the effectiveness of a disaster training for MFRs, b) contained other occupational groups or not sufficiently specified groups (e.g., “others”) in addition to MFRs without reporting separate analyses for the MFRs, c) were not primary studies published in peer-reviewed journals, and d) had no full-text available.

Selection process

The search was conducted on 28th October 2021 and led to 4533 hits (Fig. 1). Duplicates were identified with the software EndNote™ (Version 20.1) and additional visual screening. Two raters (ASB and RW) independently screened the remaining hits and performed the study selection using the web application Rayyan [22]. Discrepancies in the study selection process were resolved by consensus or, if necessary, together with a third rater (YH). Fifty-five studies were included in the review.

Data collection and analysis

Two raters (ASB and RW) extracted the relevant information for each article. Again, discrepancies were resolved by consensus, and when necessary together with the third rater (YH). Whenever studies used multiple methods at different time points we only considered those applied between the pre- and post-measurement. For trainings evaluated in (non-)RCTs without a pre-test, methods must have been applied before the post-test comparison with control groups. Similarly, only effectiveness indicators with sufficient informative value about training success or failure were considered (i.e., indicators used for pre-post comparisons or for comparisons with control groups). To assess the studies’ quality and risk of bias, we used the Joanna Briggs Institute (JBI) critical appraisal checklists for RCTs and quasi‐experimental studies [23]. The JBI tool for quasi-experimental studies contains nine questions and the JBI tool for experimental studies consists of 13 questions (e.g., “Were outcomes measured in a reliable way?”). There are four possible answer options: yes, no, unclear, not applicable. The answer yes indicates quality while no indicates a risk of bias.
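The tallying of checklist answers described above could be sketched as follows. This is a minimal illustration, not the procedure used in this review: the data layout, the function names, and the two example studies are hypothetical and do not reproduce any appraisal result reported here.

```python
from collections import Counter

# The four answer options of the checklists, as described in the text.
ANSWER_OPTIONS = {"yes", "no", "unclear", "not applicable"}


def tally_answers(answers):
    """Count how often each answer option occurs for one study."""
    unknown = set(answers) - ANSWER_OPTIONS
    if unknown:
        raise ValueError(f"unexpected answer option(s): {sorted(unknown)}")
    return Counter(answers)


def count_no(answers):
    """Number of questions answered 'no', i.e., indicating a risk of bias."""
    return tally_answers(answers)["no"]


# Hypothetical appraisals (illustrative values only, not data from this review).
example_appraisals = {
    "study_a": ["yes"] * 8 + ["no"],              # nine-question checklist
    "study_b": ["yes"] * 11 + ["unclear", "no"],  # 13-question checklist
}

no_counts = {study: count_no(a) for study, a in example_appraisals.items()}
```

Counting only the "no" answers mirrors the summary style used in the quality assessment, where studies are grouped by how many questions were answered in the negative.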

Results

The majority of studies used a single group pre-post design (k = 35). Other study designs included non-RCTs (k = 6) and RCTs (k = 14) with 15 out of 20 containing pre-post testing (see Table 1 for a full overview and Additional file 1: Table 1 for further information). The sample sizes varied widely between studies (range 6–524). Trainings took place on several continents with the majority of trainings conducted in North America (k = 24), followed by Asia (k = 18), Europe (k = 8), Australia and Africa (both k = 2), and unclear (k = 1). The majority of tested trainings addressed general disaster management or several disaster-related topics (k = 31), followed by triage (k = 14), trauma management/sonography (k = 3) etc. Furthermore, the time spans ranged from one day or less (k = 22) to up to eight months (k = 33).

Research question 1: overview of training methods

The majority of studies reported trainings that contained a combination of several methods, either in the intervention group, control group, or in both (k = 42). Training methods could be categorized into traditional and technology-based methods (Fig. 2). The traditional category comprises lectures, real-life scenario training (e.g., mass casualty incident simulations with actors or manikins), discussion-based training (including seminars, workshops, in-class games, tabletop exercises), practical skills training (e.g., regional anesthesia), field visits (e.g., the visit of disaster-affected sites or riding with the prehospital physician vehicle), and debriefings. In contrast, the technology-based category is composed of computer-based learning (i.e., online learning, educational computer programs), screen-based serious gaming, educational videos, and VR/MR. The term serious gaming refers to computer-based learning that additionally contains game elements, such as cooperation, competition, and stories [24].

Research question 2: effectiveness indicators

The trainings were evaluated with several effectiveness indicators, including knowledge and performance, but also self-reported measures (Fig. 3). Most frequently, knowledge gain was used as an indicator. Knowledge was mainly assessed with a basic knowledge test on the training content, often in a multiple-choice format. Some studies used an applied knowledge test that consisted of a written test with several patient descriptions which had to be classified into triage categories. Fewer than one third of the studies used performance as an indicator. Performance assessments were frequently conducted in triage simulations [25,26,27,28,29,30,31,32,33] but also in other contexts, e.g., the management of patients affected by chemical, biological, radiological, nuclear and/or explosive events [CBRNE; 34, 35] and the execution of specific medical procedures [36, 37]. Several of those studies focused on measures that could be determined easily and relatively objectively, including accuracy of triage or treatment decisions [26,27,28,29,30,31,32,33, 38], time needed [28,29,30, 33, 38] or compliance with the correct procedure [30]. In ten studies, raters composed an overall performance score based on several criteria [25, 28, 32, 34,35,36,37,38,39,40], e.g., the evaluation of safety on site [25, 32] and airway/breathing interventions [25, 28]. Three of those studies used already existing assessment instruments, either for treating CBRNE patients [34, 35] or for single patient care in low-resource countries [40]. Only one study used team performance as a measure of effectiveness by letting raters compose an overall team performance score for the management of simulated disaster scenes [32]. All other studies measured individual performance only. Furthermore, several studies used self-reported measures, including preparedness/readiness [39, 41,42,43,44] and (self-)confidence [45,46,47,48,49,50]. In addition to knowledge, performance and self-reported measures, one study compared the level of immersion in VR to real-life scenario training [31].
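To illustrate how the objective performance indicators named above (accuracy of triage decisions, time needed, and compliance with the correct procedure) might be computed from a simulation log, the following minimal sketch may help. It is not taken from any of the reviewed studies: the function names, triage category labels, and procedure steps are assumptions chosen for illustration only.

```python
def triage_accuracy(assigned, reference):
    """Share of patients whose assigned category matches the reference category."""
    if len(assigned) != len(reference) or not reference:
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(a == r for a, r in zip(assigned, reference))
    return hits / len(reference)


def mean_time_per_patient(total_seconds, n_patients):
    """Average time needed per patient, in seconds."""
    if n_patients <= 0:
        raise ValueError("n_patients must be positive")
    return total_seconds / n_patients


def followed_procedure(performed_steps, required_order):
    """True if all required steps occur in the required order.

    Additional steps in between are tolerated (subsequence check).
    """
    remaining = iter(performed_steps)
    # 'step in remaining' advances the iterator, so order is enforced.
    return all(step in remaining for step in required_order)


# Hypothetical trainee log (illustrative labels, not data from this review).
assigned = ["red", "yellow", "green", "red"]
reference = ["red", "green", "green", "red"]

accuracy = triage_accuracy(assigned, reference)
seconds_each = mean_time_per_patient(120, len(reference))
in_order = followed_procedure(
    ["scene safety", "airway", "breathing", "pulse"],
    ["airway", "breathing", "pulse"],
)
```

Measures of this kind require no rater judgment once a reference solution exists, which is one reason they can be relatively inexpensive to collect in both virtual and real-life scenario training.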

Research question 3: effectiveness of training methods

All training methods demonstrated a certain effectiveness, as most studies reported positive or at least partially positive effects of the different methods (see Table 2 for an overview of the methods’ effectiveness).

Lectures were mostly used in combination with other methods and often served as the initial theoretical knowledge transfer [46, 51,52,53,54,55,56]. There were three studies in which only lectures occurred between the pre-test and post-test. Two of these evaluated educational refresher sessions and reported a positive impact on knowledge [57] and performance [28]. The third one concluded that lectures led to similar performance but lower knowledge gain and partially lower training satisfaction than the combination of lectures and discussion-based training [33]. Multimethod trainings with lectures showed mixed results regarding knowledge and performance but positive effects on self-reports of preparedness, knowledge, competence, confidence, and self-efficacy.

Real-life scenario training was often similarly or less effective compared to technology-based training. Studies that compared real-life scenario training to either educational videos [58] or VR [31] reported a partially lower impact of real-life practice on knowledge [58] and similar impacts on performance [31] and training satisfaction [31]. In combination with other methods, the training also resulted in similar [29] or slightly lower [25] performance but greater knowledge gain [25] than VR training and lower self-reported competence than serious gaming [24].

Discussion-based learning was often combined with other methods and resulted in mixed knowledge outcomes but at least partially positive effects on performance and self-reports of preparedness, competence, confidence, and self-efficacy. However, two studies reported smaller performance improvements [30] and self-reported competence gain [24] than trainings that contained serious gaming.

Practical skills training was never tested as a sole method. Compared to technology-based training, multimethod training with practical skills exercises always resulted in similar or smaller effects. Trainings containing practical skills exercises led to similar [37] or lower [59] knowledge gain as well as similar performance levels [37] and self-reported learning gains [59] than trainings that contained computer-based learning instead. Furthermore, multimethod training with practical skills exercises resulted in lower performance, self-reported preparedness, and self-reported competence than screen-based VR [39] and lower self-reported competence than serious gaming [24].

Field visits were part of five trainings and varied considerably in their content and length. Evidence suggests positive effects on knowledge, performance, and self-reports of knowledge and competence. One paper compared a visit of a large ambulance bus to VR and MR training and concluded that the visit was less effective in increasing performance [38]. However, trainees only had one hour in the ambulance bus to practice finding essential objects while the VR and MR group could practice as many times as they wanted within one week (at least three times).

Debriefings were only explicitly tested once. The study used drone videos from a real-life scenario training that the trainees had previously undergone [60] and partially confirmed a positive effect on (self-)perception. In combination with other methods, debriefings led to positive outcomes on performance as well as on self-reports of knowledge, confidence, and preparedness. There were mixed findings regarding objectively measured knowledge. Furthermore, multimethod training with debriefings led to lower knowledge scores and similar self-reported learning gains than computer-based learning [59] as well as lower self-reported competence than serious gaming [24].

Computer-based learning as a stand-alone method or in combination with other methods led to improvement or partial improvement in knowledge, performance, and self-reports of preparedness, competence, and self-efficacy. Computer-based training resulted in greater knowledge gain and similar self-reported learning gains compared to traditional training [59]. Computer-based learning also led to similar knowledge and performance improvements as practical skills training, both combined with videos [37].

Educational videos usually led to at least partial knowledge gain and performance improvements as well as a partially greater knowledge gain than real-life scenario training [58]. Only one multimethod study did not find an effect on knowledge. Studies also reported positive outcomes on self-reported preparedness and competence.

Serious gaming was only evaluated in two studies [24, 30]. Ma and colleagues reported that game-based teaching resulted in significantly higher self-reported disaster nursing competence than traditional training [24]. Knight and colleagues tested a multimethod training including a lecture and serious gaming within VR [30]. Compared to traditional training, it fostered better triage accuracy and partially better step accuracy. The time needed to triage did not differ between groups.

Research questions 3 and 4: current role and effectiveness of VR and MR

The VR/MR training systems were mostly used for MFR groups with little or no work experience, including students [29, 31, 61,62,63,64], cadets [38] or job starters [25]. Seven studies tested trainings that contained PC-screen-based VR (Fig. 4), although always in combination with other methods [29, 30, 39, 61,62,63,64]. Five of them covered the topic of triage [29, 30, 61,62,63], two decontamination [61, 64], one the management of COVID-19 patients [39], and one general disaster scene management [63]. The virtual scenarios mainly included manmade disasters such as traffic accidents [29], explosions in busy areas [30, 61], building collapse and fire on boats at a seaport [63] while one simulated a major earthquake [62]. Two studies that tested pre- and in-hospital trainings used either scenarios in both settings [39] or only an in-hospital scenario [64].

During the VR exercises, trainees were able to move their avatar around and perform a variety of interventions, e.g., breathing/airway checks [29, 30, 62]. While the participants usually used a mouse, keyboard and/or a joystick, one screen-based VR system tracked the trainees’ movements with a webcam as they performed decontamination exercises [64]. Training that contained screen-based VR led to mixed findings regarding knowledge but to positive performance and self-efficacy outcomes. Compared to exclusively traditional trainings, training with screen-based VR led to greater knowledge gain [39] and self-reported preparedness [39] as well as partially greater [30, 39] or similar performance levels [29]. Furthermore, the combination with computer-based learning led to greater knowledge gain than computer-based learning alone [61].

Three studies evaluated immersive VR technology [25, 31, 38]. The first one evaluated triage training in a VR CAVE [25]. The scenario was an explosion in an office building. To perform the triage, trainees observed virtual patients to assess their respiratory rate and verbally requested pulse rates. In terms of training effectiveness, the VR exercise resulted in slightly better performance but poorer knowledge scores than real-life scenario training, both in combination with lectures. Two studies evaluated VR training with head-mounted displays in which trainees used controllers to interact with their virtual surroundings [31, 38]. The first one tested a triage training with a car chase and shooting scenario [31]. Participants could click on icons attached to each casualty to gather basic clinical information and allocate triage cards. The other VR training was designed to help MFRs orient themselves in a large ambulance bus by practicing finding essential medical equipment [38]. Both VR systems provided feedback regarding the correctness and time of task execution. Overall, these two VR trainings with head-mounted displays led to similar [31] or greater [38] performance than traditional training and to a similar learning satisfaction [31]. One of those studies, however, indicated a higher immersion level during real-life simulations, which seemed to be caused by the subscale physical demand [31]. The only study using MR compared AR training to VR with head-mounted displays and traditional education [38]. The AR training was identical to the VR ambulance bus training except for the use of an AR headset with transparent lenses. The device projected holograms in the trainees’ field of view. With click gestures, they were able to interact with their environment, for example by opening and closing drawers. The AR training resulted in better performance than traditional training, although the improvement was smaller than with the VR training.

Quality assessment

Overall, the study quality was satisfactory (for a detailed overview see Additional file 1: Tables 2 and 3). For the experimental studies, either none (k = 9) or one question (k = 5) out of 13 were answered with no. For the quasi-experimental studies, usually none (k = 4), one question (k = 23) or two questions (k = 13) were answered with no. There was only one paper for which four out of nine questions were answered in the negative [46]. The higher risk of bias in the quasi-experimental studies was mainly based on question 4, which assesses the control group, because a large part of the studies had a single group pre-post design (k = 35). Furthermore, some studies did not have a complete follow-up or a detailed explanation or analysis for the dropout (k = 9).

Discussion

Well-trained MFRs are essential for managing disaster situations with multiple casualties [3, 4]. To ensure that future disaster training is as effective as possible, we conducted this review on scientifically evaluated trainings which comprised both traditional and technology-based methods. The trainings were evaluated with several different effectiveness indicators, including knowledge, performance, self-reported measures, and immersion. Despite the heterogeneity of methods and outcome measures, some conclusions could be synthesized. While all methods demonstrated effectiveness, the results of this review suggest that technology-based methods often lead to similar or greater training outcomes than exclusively traditional training. Furthermore, we found ten studies that used VR, although usually combined with other methods and often PC-screen-based. Only one study evaluated MR training [38].

Although trends in effectiveness could be identified, the data basis was not sufficient to declare some methods as unequivocally more effective than others. Training methods were often tested in combination, which impaired drawing unbiased conclusions about individual methods. Furthermore, the various effectiveness indicators that were used had only limited comparability. Fewer than one-third of the included studies used performance observation as an evaluation tool. Instead, several studies used knowledge tests or self-assessments (e.g., confidence) although these have limited predictive value for actual performance [65,66,67]. Despite the great variety in studies, the data basis strongly suggests the strength of technology-based methods. Several studies compared technology-based training to training with real-life scenario exercises, which are usually considered the gold standard of disaster training [68]. While these studies suggest the great potential of technology-based methods, there may be a certain degree of bias. Real-life scenario training often served as (part of) the exclusively traditional training for control groups. Therefore, studies that did not find at least an equivalent effect of their newly developed technological methods may not have been published. Instead, the training technology might have been improved and retested until it was similarly or more effective, leading to a publication bias. The same might apply to practical skills training, which was always used in combination with other methods and resulted in similar or lower training effectiveness than trainings that contained technology-based methods.

Generally, the current literature indicates that technology-based methods are well suited to train MFRs for disasters. Given the usually limited resources of MFR organizations, these methods promise to be particularly beneficial. Although initial investment in the technology is required, it can then be used flexibly and repeatedly. Thus, a higher, more individually adapted training frequency can be created than with many traditional methods, especially real-life scenario training.

Current use of VR/MR and its future potential

Seven out of ten studies that tested VR training focused on non-immersive, screen-based VR. The advantage of screen-based VR is that usually no hardware other than normal computer accessories is required. However, more immersive trainings offer greater similarity to experiencing real disaster situations and could therefore be even more useful for preparing MFRs for stressful and unfamiliar situations. Given that high stress can affect the performance of MFRs, training should explicitly address stress responses [69, 70]. Although some of the reviewed trainings contained in-class teaching about dealing with emotions or stress (e.g., [39, 55, 71]), we found no studies that explicitly conducted scenario-based training while assessing and controlling for stress responses. To provide more insight into behavioral changes under stress, future studies should conduct and evaluate explicit disaster training with (continuous) stress measurements to investigate its potential for MFRs. The ongoing improvement of immersive VR and MR technology [72] seems quite promising as it can provide increasingly realistic immersive training scenarios with fewer organizational demands than real-life simulations regarding time and space. Users can experience and practice an almost unlimited number of scenarios in which demands and difficulty levels can be designed as needed [73]. Our results indicate that practical exercises with immersive technology can be conducted nearly everywhere, at any time, and with relatively little preparation, i.e., without setting up a real disaster scene. Furthermore, technical progress in recent years now allows several people to interact within the same virtual environment [74] and treat patients together as in realistic rescue operations.

Future research

Given the heterogeneity of the current literature, future research should further investigate the effectiveness of individual training methods but also systematically assess whether certain combinations work particularly well. Furthermore, training methods and validated training evaluation tools should be developed not only in terms of effectiveness, but also in terms of efficiency as (financial) resources are often limited. The results of this review suggest, for example, that technological methods such as serious gaming and VR are similarly good or better than traditional methods so that complex real-life scenario trainings with actors could be at least partially replaced. There is also initial evidence that lectures, as an easily implemented method, are well suited for refresher sessions. Future research still needs to clarify the usefulness of immersive VR and especially MR as we only found one MR experimental study that matched our inclusion criteria.

The effectiveness-efficiency trade-off also applies to training evaluation. While knowledge tests offer the advantage of being very easy to conduct and evaluate, the transferability of training success to actual operations is unclear. Performance evaluations during (virtual or real-life) scenario training may be more suitable as they are closer to the target behavior of MFRs during disasters. This review has already identified some indicators, including accuracy of decisions, time needed and compliance with the correct procedure. Future research should focus on finding the appropriate performance measures for diverse disaster training contents in terms of resource efficiency, usability, and relevance. New training technologies could also provide further opportunities for performance assessment, e.g., eye-tracking to gain insights into attentional processes. Furthermore, the assessment of team performance has hardly been considered in disaster training research, although MFRs mainly work in teams. Disaster management is a team effort and is often done in ad-hoc teams similar to other domains of acute care medicine [75]. Improved and trained teamwork improves medical performance [76]. Future studies should also assess long-term benefits of the different training methods and their combination as most of the studies we found only conducted pre-post testing within a few days or weeks.

Limitations

Our review has three main limitations. First, we only included studies published in English, so we might have missed relevant studies published in other languages. Second, we only kept studies in which it was either evident that the sample consisted solely of MFRs or in which separate analyses for MFRs were provided. This led to the exclusion of some studies with insufficiently specified sample categories such as “others”, even though participants in these categories may also have been MFRs. Third, we decided to include only quasi-experimental and experimental studies. We consider this a strength of this systematic review, as it allowed us to create a better overview of the trainings’ effectiveness. Nevertheless, we cannot draw conclusions about what training methods are generally used in disaster training research and whether new methods have been added without being tested in (quasi-)experiments.

Conclusion

We found several traditional and technology-based training methods. The trainings were mainly evaluated with knowledge tests and self-reported measures, while fewer than one third also used actual performance measures. For valid yet inexpensive evaluations, objectively assessable performance measures, such as accuracy, time, and order of certain actions, can be used. In this review, we found that technology-based methods were often similarly or more effective than traditional training. They therefore offer great potential to supplement or at least partially replace traditional training, especially because organizing the gold standard, real-life scenario training, can be costly and time-consuming. Two training technologies that have become increasingly popular and affordable are VR and MR. This review suggests that they have great potential, which is why further assessments of these technologies are required.

Availability of data and materials

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Abbreviations

- AR: Augmented reality
- CBNRE: Chemical, biological, radiological, nuclear and/or explosive events
- JBI: Joanna Briggs Institute
- MFR: Medical first responder
- MR: Mixed reality
- RCT: Randomized controlled trial
- VR: Virtual reality

References

Centre for Research on the Epidemiology of Disasters. Natural disasters 2019. [Internet]. 2020 [cited 2022 Feb 19]. https://cred.be/sites/default/files/adsr_2019.pdf.

Srivastava K. Disaster: challenges and perspectives. Ind Psychiatry J. 2010;19(1):2. https://doi.org/10.4103/0972-6748.77623.

Chaput CJ, Deluhery MR, Stake CE, Martens KA, Cichon ME. Disaster training for prehospital providers. Prehosp Emerg Care. 2007;11(4):458–65. https://doi.org/10.1080/00207450701537076.

Alharbi AA. Effect of mass casualty training program on prehospital care staff in Kuwait. Egypt J Hosp Med. 2018;71(6):3393–7. https://doi.org/10.12816/0047293.

Almukhlifi Y, Crowfoot G, Wilson A, Hutton A. Emergency healthcare workers’ preparedness for disaster management: an integrative review. J Clin Nurs. 2021. https://doi.org/10.1111/jocn.15965.

Milgram P, Kishino F. A taxonomy of mixed reality visual displays. IEICE Trans Inf Syst. 1994;77(12):1321–9.

Barrie M, Socha JJ, Mansour L, Patterson ES. Mixed reality in medical education: a narrative literature review. Proc Int Symp Hum Factors Ergon Health Care. 2019;8(1):28–32. https://doi.org/10.1177/2327857919081006.

Beyramijam M, Farrokhi M, Ebadi A, Masoumi G, Khankeh HR. Disaster preparedness in emergency medical service agencies: a systematic review. J Educ Health Promot. 2021;10:25. https://doi.org/10.4103/jehp.jehp_1280_20.

Yafe E, Walker BB, Amram O, Schuurman N, Randall E, Friger M, et al. Volunteer first responders for optimizing management of mass casualty incidents. Disaster Med Public Health Prep. 2019;13(2):287–94. https://doi.org/10.1017/dmp.2018.56.

Sorani M, Tourani S, Khankeh HR, Panahi S. Prehospital emergency medical services challenges in disaster; a qualitative study. Emergency. 2018;6(1).

Altmann T. Training. 2019. In: Dorsch Lexikon der Psychologie [Internet]. Hogrefe. https://dorsch.hogrefe.com/stichwort/training.

Kiefer AW, Silva PL, Harrison HS, Araújo D. Antifragility in sport: Leveraging adversity to enhance performance. Sport Exerc Perform Psychol. 2018;7(4):342–50. https://doi.org/10.1037/spy0000130.

Hill Y, Kiefer AW, Silva PL, Van Yperen NW, Meijer RR, Fischer N, et al. Antifragility in climbing: determining optimal stress loads for athletic performance training. Front Psychol. 2020;11:10. https://doi.org/10.3389/fpsyg.2020.00272.

Giessing L, Frenkel MO. Virtuelle Realität als vielversprechende Ergänzung im polizeilichen Einsatztraining – Chancen, Grenzen und Implementierungsmöglichkeiten. In: Staller MS, Körner S, editors. Handbuch polizeiliches Einsatztraining: Professionelles Konfliktmanagement - Theorie, Trainingskonzepte und Praxiserfahrungen. Wiesbaden: Springer Gabler; 2022. p. 677–92.

Hill Y, Van Yperen NW, Den Hartigh RJ. Facing repeated stressors in a motor task: does it enhance or diminish resilience? J Mot Behav. 2021;53(6):717–26. https://doi.org/10.1080/00222895.2020.1852155.

Ingrassia PL, Foletti M, Djalali A, Scarone P, Ragazzoni L, Della Corte F, et al. Education and training initiatives for crisis management in the European Union: a web-based analysis of available programs. Prehosp Disaster Med. 2014;29(2):115–26. https://doi.org/10.1017/s1049023x14000235.

Williams J, Nocera M, Casteel C. The effectiveness of disaster training for health care workers: a systematic review. Ann Emerg Med. 2008;52(3):211–22. https://doi.org/10.1016/j.annemergmed.2007.09.030.

Giessing L. The potential of virtual reality for police training under stress: a SWOT analysis. In: Arble E, Arnetz B, editors. Interventions, training, and technologies for improved police well-being and performance. Pennsylvania: IGI Global; 2021. p. 102–24. https://doi.org/10.4018/978-1-7998-6820-0.ch006.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015. https://doi.org/10.1186/2046-4053-4-1.

Evans D. Hierarchy of evidence: a framework for ranking evidence evaluating healthcare interventions. J Clin Nurs. 2003;12(1):77–84. https://doi.org/10.1046/j.1365-2702.2003.00662.x.

Stratton SJ. Quasi-experimental design (pre-test and post-test studies) in prehospital and disaster research. Prehosp Disaster Med. 2019;34(6):573–4. https://doi.org/10.1017/S1049023X19005053.

Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan—a web and mobile app for systematic reviews. Syst Rev. 2016;5(1):25. https://doi.org/10.1186/s13643-016-0384-4.

Tufanaru C, Munn Z, Aromataris E, Campbell J, Hopp L. Chapter 3: systematic reviews of effectiveness. 2020. In: JBI manual for evidence synthesis. JBI. https://doi.org/10.46658/JBIMES-20-04.

Ma DH, Shi YX, Zhang G, Zhang J. Does theme game-based teaching promote better learning about disaster nursing than scenario simulation: a randomized controlled trial. Nurse Educ Today. 2021. https://doi.org/10.1016/j.nedt.2021.104923.

Andreatta PB, Maslowski E, Petty S, Shim W, Marsh M, Hall T, et al. Virtual reality triage training provides a viable solution for disaster-preparedness. Acad Emerg Med. 2010;17(8):870–6. https://doi.org/10.1111/j.1553-2712.2010.00728.x.

Cicero MX, Auerbach MA, Zigmont J, Riera A, Ching K, Baum CR. Simulation training with structured debriefing improves residents’ pediatric disaster triage performance. Prehosp Disaster Med. 2012;27(3):239–44. https://doi.org/10.1017/s1049023x12000775.

Cicero MX, Whitfill T, Overly F, Baird J, Walsh B, Yarzebski J, et al. Pediatric disaster triage: multiple simulation curriculum improves prehospital care providers’ assessment skills. Prehosp Emerg Care. 2017;21(2):201–8. https://doi.org/10.1080/10903127.2016.1235239.

Dittmar MS, Wolf P, Bigalke M, Graf BM, Birkholz T. Primary mass casualty incident triage: evidence for the benefit of yearly brief re-training from a simulation study. Scand J Trauma Resusc Emerg Med. 2018;26(1):35. https://doi.org/10.1186/s13049-018-0501-6.

Ingrassia PL, Ragazzoni L, Carenzo L, Colombo D, Gallardo AR, Della Corte F. Virtual reality and live simulation: a comparison between two simulation tools for assessing mass casualty triage skills. Eur J Emerg Med. 2015;22(2):121–7. https://doi.org/10.1097/mej.0000000000000132.

Knight JF, Carley S, Tregunna B, Jarvis S, Smithies R, de Freitas S, et al. Serious gaming technology in major incident triage training: a pragmatic controlled trial. Resuscitation. 2010;81(9):1175–9. https://doi.org/10.1016/j.resuscitation.2010.03.042.

Mills B, Dyks P, Hansen S, Miles A, Rankin T, Hopper L, et al. Virtual reality triage training can provide comparable simulation efficacy for paramedicine students compared to live simulation-based scenarios. Prehosp Emerg Care. 2020;24(4):525–36. https://doi.org/10.1080/10903127.2019.1676345.

Yanagawa Y, Omori K, Ishikawa K, Takeuchi I, Jitsuiki K, Yoshizawa T, et al. Difference in first aid activity during mass casualty training based on having taken an educational course. Disaster Med Public Health Prep. 2018;12(4):437–40. https://doi.org/10.1017/dmp.2017.99.

Zheng Z, Yuan S, Huang M, Liao J, Cai R, Zhan H, et al. Flipped classroom approach used in the training of mass casualty triage for medical undergraduate students. Disaster Med Public Health Prep. 2020;16(1):94–101. https://doi.org/10.1017/dmp.2020.162.

Andreatta P, Klotz JJ, Madsen JM, Hurst CG, Talbot TB. Outcomes from two forms of training for first-responder competency in cholinergic crisis management. Mil Med. 2015;180(4):468–74. https://doi.org/10.7205/milmed-d-14-00290.

Motola I, Burns WA, Brotons AA, Withum KF, Rodriguez RD, Hernandez S, et al. Just-in-time learning is effective in helping first responders manage weapons of mass destruction events. J Trauma Acute Care Surg. 2015;79(4 Suppl 2):152–6. https://doi.org/10.1097/ta.0000000000000570.

Pouraghaei M, Sadegh Tabrizi J, Moharamzadeh P, Rajaei Ghafori R, Rahmani F, Najafi MB. The effect of start triage education on knowledge and practice of emergency medical technicians in disasters. J Caring Sci. 2017;6(2):119–25. https://doi.org/10.15171/jcs.2017.012.

Paddock MT, Bailitz J, Horowitz R, Khishfe B, Cosby K, Sergel MJ. Disaster response team FAST skills training with a portable ultrasound simulator compared to traditional training: pilot study. West J Emerg Med. 2015;16(2):325–30. https://doi.org/10.5811/westjem.2015.1.23720.

Koutitas G, Smith S, Lawrence G. Performance evaluation of AR/VR training technologies for EMS first responders. Virtual Real. 2021;25(1):83–94. https://doi.org/10.1007/s10055-020-00436-8.

Zhang D, Liao H, Jia Y, Yang W, He P, Wang D, et al. Effect of virtual reality simulation training on the response capability of public health emergency reserve nurses in China: a quasiexperimental study. BMJ Open. 2021;11(9):e048611. https://doi.org/10.1136/bmjopen-2021-048611.

Ripoll-Gallardo A, Ragazzoni L, Mazzanti E, Meneghetti G, Franc JM, Costa A, et al. Residents working with Medecins Sans Frontieres: training and pilot evaluation. Scand J Trauma Resusc Emerg Med. 2020. https://doi.org/10.1186/s13049-020-00778-x.

Huh SS, Kang HY. Effects of an educational program on disaster nursing competency. Public Health Nurs. 2019;36(1):28–35. https://doi.org/10.1111/phn.12557.

Koca B, Arkan G. The effect of the disaster management training program among nursing students. Public Health Nurs. 2020;37(5):769–77. https://doi.org/10.1111/phn.12760.

Jones J, Kue R, Mitchell P, Eblan G, Dyer KS. Emergency medical services response to active shooter incidents: provider comfort level and attitudes before and after participation in a focused response training program. Prehosp Disaster Med. 2014;29(4):350–7. https://doi.org/10.1017/s1049023x14000648.

Unver V, Basak T, Tastan S, Kok G, Guvenc G, Demirtas A, et al. Analysis of the effects of high-fidelity simulation on nursing students’ perceptions of their preparedness for disasters. Int Emerg Nurs. 2018;38:3–9. https://doi.org/10.1016/j.ienj.2018.03.002.

Cowling L, Swartzberg K, Groenewald A. Knowledge retention and usefulness of simulation exercises for disaster medicine-what do specialty trainees know and think? Afr J Emerg Med. 2021;11(3):356–60. https://doi.org/10.1016/j.afjem.2021.05.001.

Sena A, Forde F, Yu C, Sule H, Masters MM. Disaster preparedness training for emergency medicine residents using a tabletop exercise. MedEdPORTAL. 2021;17:11119. https://doi.org/10.15766/mep_2374-8265.11119.

Greco S, Lewis EJ, Sanford J, Sawin EM, Ames A. Ethical reasoning debriefing in disaster simulations. J Prof Nurs. 2019;35(2):124–32. https://doi.org/10.1016/j.profnurs.2018.09.004.

Kuhls DA, Chestovich PJ, Coule P, Carrison DM, Chua CM, Wora-Urai N, et al. Basic disaster life support (BDLS) training improves first responder confidence to face mass-casualty incidents in Thailand. Prehosp Disaster Med. 2017;32(5):492–500. https://doi.org/10.1017/s1049023x17006550.

Betka AA, Bergren MD, Rowen JL. Improving rural disaster response preparedness. Public Health Nurs. 2021;38(5):856–61. https://doi.org/10.1111/phn.12924.

Furseth PA, Taylor B, Kim SC. Impact of interprofessional education among nursing and paramedic students. Nurse Educ. 2016;41(2):75–9. https://doi.org/10.1097/nne.0000000000000219.

Scott LA, Carson DS, Greenwell IB. Disaster 101: a novel approach to disaster medicine training for health professionals. J Emerg Med. 2010;39(2):220–6. https://doi.org/10.1016/j.jemermed.2009.08.064.

Chandra A, Kim J, Pieters HC, Tang J, McCreary M, Schreiber M, et al. Implementing psychological first-aid training for medical reserve corps volunteers. Disaster Med Public Health Prep. 2014;8(1):95–100. https://doi.org/10.1017/dmp.2013.112.

Chou WK, Cheng MT, Lin CH, Shih FY. The effectiveness of functional exercises for teaching method disaster medicine to medical students. Cureus. 2021;13(5):e15151. https://doi.org/10.7759/cureus.15151.

Merlin MA, Moon J, Krimmel J, Liu J, Marques-Baptista A. Improving medical students’ understanding of prehospital care through a fourth year emergency medicine clerkship. Emerg Med J. 2010;27(2):147–50. https://doi.org/10.1136/emj.2008.066654.

Kim J, Lee O. Effects of a simulation-based education program for nursing students responding to mass casualty incidents: a pre-post intervention study. Nurse Educ Today. 2020;85:25. https://doi.org/10.1016/j.nedt.2019.104297.

Xia R, Li SJ, Chen BB, Jin Q, Zhang ZP. Evaluating the effectiveness of a disaster preparedness nursing education program in Chengdu, China. Public Health Nurs. 2020;37(2):287–94. https://doi.org/10.1111/phn.12685.

Cuttance G, Dansie K, Rayner T. Paramedic application of a triage sieve: a paper-based exercise. Prehosp Disaster Med. 2017;32(1):3–13. https://doi.org/10.1017/s1049023x16001163.

Aghababaeian H, Sedaghat S, Tahery N, Moghaddam AS, Maniei M, Bahrami N, et al. A comparative study of the effect of triage training by role-playing and educational video on the knowledge and performance of emergency medical service staffs in Iran. Prehosp Disaster Med. 2013;28(6):605–9. https://doi.org/10.1017/s1049023x13008911.

Wiese LK, Love T, Goodman R. Responding to a simulated disaster in the virtual or live classroom: is there a difference in BSN student learning? Nurse Educ Pract. 2021;55:103170. https://doi.org/10.1016/j.nepr.2021.103170.

Fernandez-Pacheco AN, Rodriguez LJ, Price MF, Perez ABG, Alonso NP, Rios MP. Drones at the service for training on mass casualty incident: a simulation study. Medicine. 2017;96(26):e7159. https://doi.org/10.1097/md.0000000000007159.

Farra S, Miller E, Timm N, Schafer J. Improved training for disasters using 3-D virtual reality simulation. West J Nurs Res. 2013;35(5):655–71. https://doi.org/10.1177/0193945912471735.

Foronda CL, Shubeck K, Swoboda SM, Hudson KW, Budhathoki C, Sullivan N, et al. Impact of virtual simulation to teach concepts of disaster triage. Clin Simul Nurs. 2016;12(4):137–44. https://doi.org/10.1016/j.ecns.2016.02.004.

Bajow N, Djalali A, Ingrassia PL, Ragazzoni L, Ageely H, Bani I, et al. Evaluation of a new community-based curriculum in disaster medicine for undergraduates. BMC Med Educ. 2016;16(1):225. https://doi.org/10.1186/s12909-016-0746-6.

Smith S, Farra S, Dempsey A, Arms D. Preparing nursing students for leadership using a disaster-related simulation. Nurse Educ. 2015;40(4):212–6. https://doi.org/10.1097/nne.0000000000000143.

Liaw SY, Scherpbier A, Rethans J-J, Klainin-Yobas P. Assessment for simulation learning outcomes: a comparison of knowledge and self-reported confidence with observed clinical performance. Nurse Educ Today. 2012;32(6):e35–9. https://doi.org/10.1016/j.nedt.2011.10.006.

Rodgers DL, Bhanji F, McKee BR. Written evaluation is not a predictor for skills performance in an Advanced Cardiovascular Life Support course. Resuscitation. 2010;81(4):453–6. https://doi.org/10.1016/j.resuscitation.2009.12.018.

Barnsley L, Lyon PM, Ralston SJ, Hibbert EJ, Cunningham I, Gordon FC, et al. Clinical skills in junior medical officers: a comparison of self-reported confidence and observed competence. Med Educ. 2004;38(4):358–67. https://doi.org/10.1046/j.1365-2923.2004.01773.x.

Tin D, Hertelendy AJ, Ciottone GR. Disaster medicine training: the case for virtual reality. Am J Emerg Med. 2021;48:370–1. https://doi.org/10.1016/j.ajem.2021.01.085.

LeBlanc VR. The effects of acute stress on performance: implications for health professions education. Acad Med. 2009;84(10):25–33. https://doi.org/10.1097/ACM.0b013e3181b37b8f.

Ignacio J, Dolmans D, Scherpbier A, Rethans J-J, Chan S, Liaw SY. Stress and anxiety management strategies in health professions’ simulation training: a review of the literature. BMJ Simul Technol Enhanc Learn. 2016;2(2):42–6. https://doi.org/10.1136/bmjstel-2015-000097.

Chan SSS, Chan WS, Cheng YJ, Fung OWM, Lai TKH, Leung AWK, et al. Development and evaluation of an undergraduate training course for developing International Council of Nurses disaster nursing competencies in China. J Nurs Scholarsh. 2010;42(4):405–13. https://doi.org/10.1111/j.1547-5069.2010.01363.x.

Anthes C, García-Hernández RJ, Wiedemann M, Kranzlmüller D (eds) State of the art of virtual reality technology. In: 2016 IEEE aerospace conference; 2016 March 5–12; USA: IEEE.

Düking P, Holmberg H-C, Sperlich B. The potential usefulness of virtual reality systems for athletes: a short SWOT analysis. Front Physiol. 2018. https://doi.org/10.3389/fphys.2018.00128.

Nguyen H, Bednarz T (eds) User experience in collaborative extended reality: overview study. In: 17th EuroVR international conference; 2020 Nov 25–27; Valencia, Spain. Cham: Springer.

Manser T. Teamwork and patient safety in dynamic domains of healthcare: a review of the literature. Acta Anaesthesiol Scand. 2009;53(2):143–51. https://doi.org/10.1111/j.1399-6576.2008.01717.x.

McEwan D, Ruissen GR, Eys MA, Zumbo BD, Beauchamp MR. The effectiveness of teamwork training on teamwork behaviors and team performance: a systematic review and meta-analysis of controlled interventions. PLoS ONE. 2017;12(1):e0169604. https://doi.org/10.1371/journal.pone.0169604.

Alenyo AN, Smith WP, McCaul M, Van Hoving DJ. A comparison between differently skilled prehospital emergency care providers in major-incident triage in South Africa. Prehosp Disaster Med. 2018;33(6):575–80. https://doi.org/10.1017/s1049023x18000699.

Alim S, Kawabata M, Nakazawa M. Evaluation of disaster preparedness training and disaster drill for nursing students. Nurse Educ Today. 2015;35(1):25–31. https://doi.org/10.1016/j.nedt.2014.04.016.

Aluisio AR, Teicher C, Wiskel T, Guy A, Levine A. Focused training for humanitarian responders in regional anesthesia techniques for a planned randomized controlled trial in a disaster setting. PLoS Curr. 2016. https://doi.org/10.1371/currents.dis.e75f9f9d977ac8adededb381e3948a04.

Andreatta P, Klotz J, Madsen J, Hurst C, Talbot T (eds) Assessment instrument validation for critical clinical competencies: pediatric-neonatal intubation and cholinergic crisis management. In: Interservice/industry training, simulation, and education conference (I/ITSEC); 2014; Orlando, FL.

Edinger ZS, Powers KA, Jordan KS, Callaway DW. Evaluation of an online educational intervention to increase knowledge and self-efficacy in disaster responders and critical care transporters caring for individuals with developmental disabilities. Disaster Med Public Health Prep. 2019;13(4):677–81. https://doi.org/10.1017/dmp.2018.129.

Hutchinson SW, Haynes S, Parker P, Dennis B, McLin C, Welldaregay W. Implementing a multidisciplinary disaster simulation for undergraduate nursing students. Nurs Educ Perspect. 2011;32(4):240–3. https://doi.org/10.5480/1536-5026-32.4.240.

Ingrassia PL, Ragazzoni L, Tengattini M, Carenzo L, Della Corte F. Nationwide program of education for undergraduates in the field of disaster medicine: development of a core curriculum centered on blended learning and simulation tools. Prehosp Disaster Med. 2014;29(5):508–15. https://doi.org/10.1017/s1049023x14000831.

James LS, Williams ML, Camel SP, Slagle P. Nursing student’s attitudes toward teams in an undergraduate interprofessional mass casualty simulation. Nurs Forum. 2021;56(3):500–12. https://doi.org/10.1111/nuf.12570.

Lampi M, Vikstrom T, Jonson CO. Triage performance of Swedish physicians using the ATLS algorithm in a simulated mass casualty incident: a prospective cross-sectional survey. Scand J Trauma Resusc Emerg Med. 2013. https://doi.org/10.1186/1757-7241-21-90.

Montán KL, Örtenwall P, Lennquist S. Assessment of the accuracy of the Medical Response to Major Incidents (MRMI) course for interactive training of the response to major incidents and disasters. Am J Disaster Med. 2015;10(2):93–107. https://doi.org/10.5055/ajdm.2015.0194.

Scott JA, Miller GT, Barry Issenberg S, Brotons AA, Gordon DL, Gordon MS, et al. Skill improvement during emergency response to terrorism training. Prehosp Emerg Care. 2006;10(4):507–14. https://doi.org/10.1080/10903120600887072.

Phattharapornjaroen P, Glantz V, Carlstrom E, Holmqvist LD, Khorram-Manesh A. Alternative leadership in flexible surge capacity-the perceived impact of tabletop simulation exercises on Thai emergency physicians capability to manage a major incident. Sustainability. 2020;12(15):5. https://doi.org/10.3390/su12156216.

Pollard KA, Bachmann DJ, Greer M, Way DP, Kman NE. Development of a disaster preparedness curriculum for medical students: a pilot study of incorporating local events into training opportunities. Am J Disaster Med. 2015;10(1):51–9. https://doi.org/10.5055/ajdm.2015.0188.

Franc JM, Verde M, Gallardo AR, Carenzo L, Ingrassia PL. An Italian version of the Ottawa Crisis Resource Management Global Rating Scale: a reliable and valid tool for assessment of simulation performance. Intern Emerg Med. 2017;12(5):651–6. https://doi.org/10.1007/s11739-016-1486-7.

Rivkind AI, Faroja M, Mintz Y, Pikarsky AJ, Zamir G, Elazary R, et al. Combating terror: a new paradigm in student trauma education. J Trauma Acute Care Surg. 2015;78(2):415–21. https://doi.org/10.1097/ta.0000000000000508.

Saiboon IM, Zahari F, Isa HM, Sabardin DM, Robertson CE. E-Learning in teaching emergency disaster response among undergraduate medical students in Malaysia. Front Public Health. 2021;9:628178. https://doi.org/10.3389/fpubh.2021.628178.

Acknowledgements

We thank Tanya Karrer, medical information specialist at the medical library of the University of Bern, for her support with the search string. We also thank Solène Gerwann for her valuable comments.

Funding

Open Access funding enabled and organized by Projekt DEAL. This project is part of the European Union’s Horizon 2020 project Medical First Responder Training using a Mixed Reality Approach featuring haptic feedback for enhanced realism (MED1stMR; grant agreement No 101021775).

Author information

Contributions

ASB, YH, LG, BS, CW and MOF were involved in the conception and design of the work. ASB, RW and YH contributed to the screening process, data extraction and quality assessment of papers; ASB interpreted the data and drafted the manuscript. The manuscript was critically reviewed and enriched with medical expertise by LG, TCS, BS and SM; with psychological expertise by RW, YH, CW, MOF; with technological expertise by RW and GR. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Search string, additional study information and risk of bias.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Baetzner, A.S., Wespi, R., Hill, Y. et al. Preparing medical first responders for crises: a systematic literature review of disaster training programs and their effectiveness. Scand J Trauma Resusc Emerg Med 30, 76 (2022). https://doi.org/10.1186/s13049-022-01056-8

DOI: https://doi.org/10.1186/s13049-022-01056-8