Abstract

Background

Placing a peripheral vein catheter can be challenging due to several factors, but using ultrasound as guidance increases the success rate. The purpose of this review is to investigate the knowledge already existing within the field of education in ultrasound-guided peripheral vein catheter placement and explore the efficacy and clinical impact of different types of education.

Methods

In accordance with the PRISMA guidelines, a systematic search was performed using three databases (PubMed, EMBASE, CINAHL). Two reviewers screened titles and abstracts and subsequently the full text of the relevant articles. The risk of bias was assessed using the Cochrane Collaboration risk of bias assessment tool and the Newcastle-Ottawa Scale.

Results

Of 3409 identified publications, 64 were included. The studies differed in target learners, study design, assessment tools, and outcome measures, which made direct comparison difficult. The studies addressed a possible effect of mastery learning and found e-learning and didactic classroom teaching to be equally effective.

Conclusion

Current studies suggest a potential benefit of USG-PVC training on success rate, procedure time, cannulation attempts, and reducing the need for subsequent CVC or PICC in adult patients. An assessment tool with explored validity evidence exists to ensure competence, and educational strategies like mastery learning, e-learning, and the use of color Doppler show promising results, but an evidence-based USG-PVC placement training program combining these strategies is still warranted.

Introduction

Peripheral vein catheters (PVC) play a crucial role in the treatment of hospitalized patients. The number of difficult intravenous access (DIVA) patients is substantial because problems occur when obesity, dehydration or hematologic diseases make traditional PVC placement difficult [1]. In these cases the clinicians can be forced to resort to a less optimal alternative.

The use of ultrasound guidance could be a solution for ensuring a PVC placement in DIVA patients. Ultrasound-guided PVC (USG-PVC) placement is a complex procedure that requires confidence in using equipment and understanding of complex imaging with the transfer of 2D pictures to a 3D world. Since ultrasound procedures, in general, are shown to be highly user-dependent it is questionable whether a novice user would be able to perform USG-PVC with no previous training [2].

An educational program using phantoms and simulation training could improve health professionals’ knowledge and confidence and thereby provide a solution for PVC placement in DIVA patients. An improvement is seen for other technical procedures like central vein catheter (CVC) placement or lumbar puncture [3, 4]. For CVC, a meta-analysis showed that trainees going through a simulation program had a significantly larger proportion of successfully placed CVCs [5]. It is not clear which type of training is better for USG-PVC, or what clinical impact the implementation of an educational program will have. A systematic review might be able to clarify this, as seen in the cases of educational programs for other procedures [6, 7]. To our knowledge, no systematic review providing an overview of the existing research on USG-PVC training has been carried out.

Therefore, we have conducted this systematic review with the aim to 1) investigate the already existing knowledge within the field, 2) explore which type of education seems to be the most effective, and 3) assess the potential clinical impact of an education program.

Material and method

This systematic review was performed in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [8].

Search and study selection

In collaboration with a research librarian, a systematic literature search of three databases (CINAHL, PubMed, EMBASE) was conducted using relevant search terms related to USG-PVC placement. Full search strategies can be found in Additional file 1. The first search was conducted on December 10, 2017, and a final search was conducted on February 13, 2021, to ensure that the results were up to date. Two authors (RJ, PIP) independently screened all articles for eligibility based on titles and abstracts. Relevant studies were then reviewed independently in full text for final inclusion. A third investigator (CBL) resolved any inclusion disagreements. Finally, a hand search through the reference lists of included articles was conducted, and expert recommendations were screened for inclusion as well.

Eligibility criteria

Original research articles were included if both of the following were present:

- Assessment of educational process or simulation training in context of USG-PVC placement
- Scandinavian, English, or German language

Exclusion criteria

Articles were excluded if one or more of the following were present:

- Conference abstracts, explanatory articles, teaching books and expert opinions
- Articles not involving USG-PVC placement and only involving CVC or peripherally inserted central catheter (PICC)

No restrictions were placed on either the patient population or the type of health personnel performing the USG-PVC procedure.

Data extraction

The following data were extracted from each article: Study type, characteristics of the study population, characteristics of the patient population, educational program, technique of USG-PVC placement, and results.

Risk of bias assessment

A modified risk of bias evaluation based on the criteria of the Cochrane Collaboration risk of bias assessment tool was performed on all included studies [9]. Non-randomized studies were assessed using the Newcastle-Ottawa Scale bias tool [10].

Data synthesis

Due to the wide aim of the research question and significant heterogeneity in study designs, a meta-analysis could not be performed, and a descriptive synthesis was therefore applied.

Results

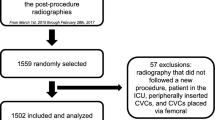

The initial search performed on December 10th, 2017, generated a total of 2207 publications, and the additional search on February 13th, 2021, brought the total to 3352. An additional 57 articles were full-text screened after being identified through hand search of reference lists. Of the total of 3409 articles, 170 were full-text screened. The full-text screening resulted in a total of 64 included articles. The study flow of article identification and selection is shown in Fig. 1.

Sixty-one studies included an educational program, two were Delphi studies, and one was a prospective validity study of an assessment tool.
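The counts reported above can be cross-checked with simple arithmetic. The following is a minimal sketch in Python using only the numbers stated in the text; the variable and function names are illustrative and not part of the review's methods.

```python
# Counts as reported in the Results text; names are illustrative assumptions.
database_records = 3352      # after the final database search
hand_search_records = 57     # identified through reference lists
full_text_screened = 170
included = 64

# Breakdown of the included studies by type, as reported.
included_by_type = {
    "educational program": 61,
    "Delphi study": 2,
    "validity study of an assessment tool": 1,
}


def flow_summary():
    """Return derived totals for the study flow."""
    return {
        "total_identified": database_records + hand_search_records,
        "not_included_after_full_text": full_text_screened - included,
        "included_by_type_total": sum(included_by_type.values()),
    }


print(flow_summary())
```

The derived total of identified articles and the sum over study types should match the 3409 identified and 64 included articles reported in the text.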

Assessment of need

No needs-assessment studies were found, but a few studies evaluated participants’ impression of skills and the self-defined benefits from participation after completing the education [11,12,13,14,15]. Participants found the education program helpful and felt able to place PVCs in patients where it would not have been possible before the education. The participants estimated the need for USG-PVC to be approximately six to seven patient cases every week. They felt an overall improvement of personal skills and considered it possible to acquire the necessary skills for ultrasound-guided PVC placement through the utilized educational program [12, 14, 15].

Curriculum

In most of the included studies the curriculum was poorly described but generally included ultrasound physics, knobology, probe selection, ultrasonic vascular and nerve recognition, preparation for USG-PVC placement, and complications (Additional file 3).

Educational methods and technique

In six studies the USG-PVC education was part of a general ultrasound curriculum [16,17,18,19,20,21]. These educational programs included other ultrasound examinations like echocardiography and focused lung ultrasound, among other techniques. The lengths of these general educational programs were between 15 and 20 h or a full rotation at an emergency ultrasound department. Five studies included scheduled follow-up with either more than 100 scans (n = 3) or at least 2 weeks of emergency medicine rotation to ensure competency (n = 5) [16, 18,19,20,21].

In studies training only USG-PVC, the training consisted of a combination of didactic and hands-on sessions, including education on live models for normal vascular anatomy and cannulation on phantoms (Additional file 3). Fourteen educational programs included video material of the procedure [12,13,14, 22,23,24,25,26,27,28,29,30,31,32] and five included live demonstrations of the procedure [22, 23, 29, 33, 34]. The duration of the training sessions varied between studies, from the shortest of 5 min to the longest of 9 h [35, 36]. In general, the duration of the training was between 2 and 4 h (Additional file 3).

Three studies were based on the mastery learning approach and two other studies used a similar approach [15, 28,29,30,31]. In two studies, participants needed less than 1 h of training to meet the requirement for passing [29, 31]. A fixed curriculum time limit was used in two of the five studies, and in these two studies participants failing the first assessment attempt only required an additional 15–60 min of training to pass in a second attempt [28, 30]. One study showed that extra training after meeting mastery criteria did not improve the participants’ performance [31].

E-learning, test-enhanced learning, and the composition of phantoms for training were evaluated [37,38,39]. None of these were found to have a positive or negative effect on learning competencies. Teaching participants to use colour Doppler to identify the so-called “twinkle artifact” showed an improvement compared with only using the cross-section view [40]. Two studies found a relatively steep learning curve after initial training, and that after four to nine real-world attempts a nurse’s probability of success was over 70% on average [41, 42]. Similar results were seen in paediatric patients, with a success rate of 67% after ten supervised attempts and an increase to 83% after ten additional unsupervised attempts [27]. Ault et al. showed that the participants’ learning curve flattened after ten successful attempts [43].

Participants and training objects

The participants in the included studies varied: doctors/physicians (n = 12), nurses (n = 24), emergency department technicians (ED-technicians) (n = 3), nursing students (n = 2), and medical students (n = 7); in one study it was unclear. An additional 13 studies included more than one of the groups mentioned above. In-depth information about education participants can be found in Additional file 3.

USG-PVC methods and education were evaluated through different parameters like success rate, time, attempts, and participant rating, mainly through Likert scales. Sixty-one studies assessed the learners using mainly two types of objects: either phantoms (n = 17) or living subjects (n = 38). The remaining studies did not use any evaluation objects and only evaluated through participant feedback (n = 6). The living subjects could be patients (n = 36), and some of the studies specified the type of patient. Adult patients were used in 20 studies, whereas children were used in five studies. Fifteen studies included only patients specified as DIVA patients. In other studies, the living subjects were healthy persons (n = 2). The phantoms used in studies were commercially available (n = 10) [13, 23, 34, 35, 38, 40, 44,45,46,47], homemade (n = 5) [26, 37, 39, 48, 49], or a mixture of both types of phantoms [50]. One study did not specify the type of phantom used [51]. In-depth information about test subject and phantom distribution can be found in Additional file 3.
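The subgroup counts in the paragraph above can be checked for internal consistency. The sketch below, in Python, assumes that the "mixture" and "unspecified" phantom categories each correspond to a single study, since each is supported by one citation; the names are illustrative only.

```python
# Subgroup counts as reported in the text; names are illustrative assumptions.
assessed_studies = 61

evaluation_objects = {
    "phantoms": 17,
    "living subjects": 38,
    "participant feedback only": 6,
}

living_subjects = {"patients": 36, "healthy persons": 2}

# Assumption: the mixed and unspecified categories are one study each,
# because each is supported by a single citation ([50] and [51]).
phantom_types = {"commercial": 10, "homemade": 5, "mixed": 1, "unspecified": 1}


def is_consistent(parts, expected_total):
    """Check that the subgroup counts add up to the reported total."""
    return sum(parts.values()) == expected_total


print(is_consistent(evaluation_objects, assessed_studies))
print(is_consistent(living_subjects, evaluation_objects["living subjects"]))
print(is_consistent(phantom_types, evaluation_objects["phantoms"]))
```

Under these assumptions each subgroup breakdown adds up to its reported parent total.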

Assessment of competence

Competence was assessed through assessment tools in seven studies [22, 28, 30, 31, 47, 52, 53]. In all seven studies the assessment tool used was a checklist. Good et al. evaluated whether it is meaningful to use motion analysis as a tool for assessing competency in USG-PVC placement [47]. They did this by comparing a group of nurses’ motion analyses before and after USG-PVC education and comparing their improvement to the results of experts’ motion analyses. Good et al. found that 17 out of 21 nurses obtained expert proficiency in at least one of six motion-analysis metrics after education.

Two competence assessment tools were identified: one as a checklist of competence and one as a rating scale for competencies [54, 55]. Validity evidence was only explored for the rating scale [56].

USG-PVC techniques

Different types of USG-PVC techniques were evaluated in studies carried out on patients, phantoms, or both [12, 13, 18, 23, 25, 34, 35, 44,45,46,47,48, 57,58,59]. When evaluating the effect of long-axis view or short-axis view, most studies found no difference. Both the long-axis and the short-axis method were found to be better than the oblique method [35]. The difference between using the dominant or non-dominant hand for probe handling was evaluated in one study, which found the dominant hand to be superior [23]. Results comparing one- versus two-person techniques were inconclusive [13, 58], and the implementation of guidance markers on the screen only showed an effect when used by nurses [48].

Success rate for cannulation, number of attempts or time were the most frequently used variables for evaluating the clinical effects of education and implementation of USG-PVC placement. Likert-scales for pain and participant satisfaction were used as well. The most relevant outcomes are listed in the data-extraction sheet (Additional file 3).

Clinical impact

Traditional PVC placement without ultrasound guidance was primarily used as control. In some studies the traditional technique was specified as being anatomically guided. Nine out of ten studies found a significant difference in time, attempts, or cannulation success rate favouring ultrasound guidance compared to conventional methods on patients [16, 19, 32, 52, 60,61,62,63,64], whereas the remaining one did not find any significant difference [65]. The effect in these studies was only seen on DIVA patients. A decrease in the need for PICC or CVC was seen in several studies after the implementation of education in ultrasound guidance for PVC placement [21, 29, 62, 66, 67].

Lastly, two studies compared different health-professional groups and found no significant difference in success rate between nurses, physicians, or ED-technicians [25, 68].

Only outcomes assessed as relevant to the aim of this study are included in this results section. For a full overview of outcomes see the extended data-extraction sheet in Additional file 3.

Risk of bias

The Cochrane risk-of-bias tool was used to evaluate all studies included and 33 studies were also evaluated through the Newcastle-Ottawa scale, but the heterogeneity of the included studies made the risk of bias hard to address and compare. The risk-of-bias assessments are shown in Tables 1 and 2.

The Newcastle-Ottawa Scale risk assessment is based on three different areas: selection, comparability and outcome. In each area a certain number of stars can be given. These stars represent the quality in that focus area. The maximum number of stars a study can get is 9, spread over the three categories as 4/2/3 (selection/comparability/outcome). For more information, visit the Newcastle-Ottawa Scale website.
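The star allocation described above can be illustrated with a short sketch. The Python example below encodes only the 4/2/3 maxima stated in the text; the function name and the example ratings are hypothetical and do not correspond to any included study.

```python
# Maximum stars per Newcastle-Ottawa Scale area, as stated in the text.
NOS_MAX_STARS = {"selection": 4, "comparability": 2, "outcome": 3}


def nos_total(stars):
    """Validate per-area star counts and return the total (0 to 9)."""
    unknown = set(stars) - set(NOS_MAX_STARS)
    if unknown:
        raise ValueError(f"unknown area(s): {sorted(unknown)}")
    total = 0
    for area, cap in NOS_MAX_STARS.items():
        value = stars.get(area, 0)  # a missing area counts as zero stars
        if not 0 <= value <= cap:
            raise ValueError(f"{area} must be between 0 and {cap}")
        total += value
    return total


# Hypothetical rating, for illustration only.
example = {"selection": 3, "comparability": 1, "outcome": 2}
print(nos_total(example), "of", sum(NOS_MAX_STARS.values()), "stars")
```

A rating that exceeds the maximum for an area, for example three stars for comparability, is rejected rather than silently capped.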

Discussion

In this systematic review of education in USG-PVC, we found that the implementation of an educational program resulted in better patient outcomes, especially in patient groups categorized as DIVA patients. This effect also seems to be present in children, but additional evidence is needed to confirm and clarify this. A large heterogeneity in the educational programs makes it hard to draw clear conclusions on how to construct the best curriculum for USG-PVC. Additionally, only a few studies compared different educational methods and strategies. However, a few points can be drawn from this review. The primary focus of many of the included studies was the clinical impact and evaluation of different techniques, which restricted the possibility of recommending educational approaches other than which technique to train.

Developing an educational program

The aim of medical educational programs is to provide their participants with sufficient knowledge or competences in specific areas or procedures defined through a curriculum. Ideally, the educational program should lead to a clinical improvement and ensure a minimum of competence for all participants.

Kern’s six-step model for curriculum development can be used to ensure that an education program fulfils the aim mentioned above [76, 77]. The six steps are: problem identification and general needs-assessment, targeted needs-assessment, setting goals and measurable objectives, educational strategies, implementation, and evaluation and feedback. In this review, we use Kern’s model to evaluate and discuss the current knowledge in USG-PVC education. The first two steps, general and targeted needs-assessment, help to define a demand for a given education and explore the current knowledge limitations within the field [78]. To clarify the extent of the curriculum, broad goals and objectives are determined (step three). Assessment tools, preferably based on solid validity evidence, should be used to ensure that these goals are met. The educational program is then implemented in a clinical setting, where it is tested for its effect and impact, and lastly the whole process is evaluated in order to change or refine some of the five previous steps. Throughout each step, the previous steps should be evaluated and changes considered.

Needs assessment

When assessing medical education, it is recommended to carry out a general needs-assessment on a national or international level [76, 78]. A national general needs-assessment in anaesthesiology identified the technical procedures that key opinion leaders thought should be trained using simulation [79]. USG-PVC placement was mentioned as one of 30 important procedures.

Edwards et al. and Adhikari et al. investigated nurses’ perceived need for an educational program through questionnaires after completion of USG-PVC training [12, 14]. Nurses expected approximately three DIVA patients per shift and thought that ultrasound guidance might be an aid. More than half the nurses felt that the biggest barrier for them to use USG-PVC was lack of experience, and almost everyone agreed that focused training was adequate to learn this. The two studies addressed a local perception of need for education, but in reverse order, since the need was assessed only after the training had been completed. A needs-assessment could be put in place to clarify the need for education, preferably with the possibility for generalisation.

Goals and objectives

General goals and specific measurable objectives are important because they help define the curriculum and direct content and participant focus [76]. Through assessment tools such as checklists or global rating scales, benchmarks can ensure participants’ competence during and after education programs.

A lack of a defined curriculum and a rare use of any tools for ensuring competencies are seen in the included articles. Furthermore, seven of the included articles use a general ultrasound education [16,17,18,19,20,21, 71], which makes it harder to address the effect of the USG-PVC training and opens up the possibility of transfer of skills. Ahern et al. investigated general ultrasound education programs in America and found a lack of assessment tools and a lack of specific curricula [80]. However, there seems to be a general consensus in the included articles regarding which topics are important, e.g. ultrasound and machine understanding, knobology, probe handling, ultrasound picture recognition and ultrasound cannulation technique.

The Delphi method can help define the extent of a curriculum together with clearly defined goals and sub-goals [81]. Messick’s framework can then be used to ensure the assessment tool’s capability to measure what it is supposed to measure [82]. The framework describes five sources of validity evidence: content, response process, internal structure, relationship to other variables, and consequences. Each source of validity can demand varying attention depending on the curriculum assessed [83].

Two assessment tools were found: Jung’s 16-item checklist and Primdahl’s rating scale, both developed through the Delphi method. Only Primdahl et al. explored the validity evidence of the assessment tool using Messick’s framework [54,55,56]. The items in these assessment tools could serve as inspiration for the items in a curriculum. All seven studies using an assessment tool used checklists, and the validity evidence was not explored for any of these checklists [22, 28, 30, 31, 47, 52, 53].

Educational methods

A key element of curriculum development is addressing different educational strategies or adding educational components to improve the learning process. The mastery learning approach builds on the concept that acquired competencies, not time, define the educational process [84]. Usually, this is done by introducing new steps of procedures or topics once a previous step has been mastered. This could be an introduction, then machine settings, and then ultrasound scanning of a forearm followed by training in cannulation technique on phantoms. The approach can differ, and some suggest an effect of letting participants self-evaluate when they are ready to move on based on fixed goals [85, 86]. Others prefer letting an external objective evaluation and feedback by a simulator or instructor decide when to move on [87]. Feedback is a central part of the mastery-learning concept because it makes it possible to improve performance by correction. On the other hand, too much feedback might also have its drawbacks, as explained by the guidance hypothesis [84, 88, 89].

Mastery learning has proven efficacy, but surprisingly only three studies used this approach [28, 30, 31]. A reason could be that it is easier to plan a traditional course using a fixed amount of time than a course that ensures that all trainees acquire the pre-defined proficiency level, because trainees learn at different paces. The three studies demonstrate that it is possible to plan a USG-PVC education based on mastery learning. Compared to the studies that did not use mastery learning, mastery learning did not take up more time, with an average of around 1 h and approximately 30 min of extra education time if the first assessment was not passed [28, 30, 31]. Additionally, Kule et al. showed that so-called overtraining did not increase the success rate [31].

Four studies addressed the learning curve of nurses after an education program [41,42,43, 52]. Three studies showed a steep learning curve but differed in the number of attempts needed to obtain a success rate of 70–80%, ranging from four to ten USG-PVC placements performed in the clinic [41, 52, 73]. This finding aligns with the superiority of the mastery learning approach; no fixed number can ensure the competency of all trainees.

Evaluation of different educational strategies and educational initiatives is as important as investigating the effect of education. Chenkin et al. found that a one-hour web-based learning program was as effective as a one-hour traditional classroom lecture [38]. Only three of the studies used an e-learning module as a part of their educational program [22, 36, 47]. This indicates that e-learning is not yet used to its full potential. The use of the color Doppler function to identify “twinkle artifacts” had an effect on cannulation time and a positive but non-significant impact on the success rate [40]. Future studies can help to clarify the full effect of color Doppler in USG-PVC education.

Neither test-enhanced learning nor using different types of phantoms seems to have a significant effect on the learning outcome, and therefore neither can be recommended [37, 39, 50].

Clinical impact

The program’s clinical impact is the highest level of evaluation in Kirkpatrick’s method for evaluating the effects of educational programs [90]. The measurable impact of an educational program on an institutional level defines the impact of the program and evaluates the potential benefits and cost-efficiency.

The majority of the included studies found that ultrasound guidance improved PVC placement in either attempts, time, or success rate compared to the traditional method when used on DIVA patients [19, 32, 52, 60,61,62,63], as is also reported in the meta-analysis by Van Loon et al. [91]. Furthermore, a decrease in the need for CVC or PICC was seen in several studies [21, 29, 62, 66, 67]. Carter et al. and Oliveira et al. found that the effectiveness did not depend on profession, and education should therefore be considered for a wide range of health care professions [25, 68].

Even though most studies found an effect on success rate, time, or number of attempts, the results varied considerably between studies and a few did not find an effect. The success rate varied from between 85 and 97.5% in the top group [17, 19, 22, 24, 42, 53, 59, 64, 68] down to only 63% in adult patients [58], and two studies found no difference between traditional and ultrasound-guided placement [65, 69]. These large differences are surprising, and even though some are explained by differences in equipment, patients and practitioners, some might also be explained by different educational strategies and the lack of evidence-based curricula to ensure competencies in ultrasound-guided PVC placement. This shows the importance of more studies and easy access to information about proper educational strategies for USG-PVC.

Limitations

This systematic review was conducted on the premise of the available published articles and their quantity and quality. Only including full-text published articles could have resulted in the exclusion of possibly relevant results. Ideally, a meta-analysis of the presented data should have been included as a part of this review, but this was deemed not clinically meaningful due to the large heterogeneity of the reported methods and results.

Implications

In summary, there seems to be a clinical effect of using USG-PVC in specific patient groups such as DIVA patients, and maybe also children. An educational program could very well be structured around the mastery-learning approach, with an e-learning pre-course introducing the participants to the principles of ultrasound, anatomy and USG-PVC technique. This e-learning could be followed by a hands-on session structured around mastery learning and ending with an assessment test, at the moment preferably the one from Primdahl et al., since it is the only one for which validity evidence has been explored.

Conclusion

Current studies suggest a potential benefit of USG-PVC training on success rate, procedure time, cannulation attempts, and reducing the need for subsequent CVC or PICC in adult patients. An assessment tool with explored validity evidence exists to ensure competence, and educational strategies like mastery learning, e-learning and the use of color Doppler show promising results, but an evidence-based USG-PVC placement training program combining these strategies is still warranted.

Availability of data and materials

All extracted data not included in the article text is available in the additional file.

References

Fields JM, Piela NE, Au AK, Ku BS. Risk factors associated with difficult venous access in adult ED patients. Am J Emerg Med. 2014;32(10):1179–82. https://doi.org/10.1016/j.ajem.2014.07.008.

Pinto A, Pinto F, Faggian A, et al. Sources of error in emergency ultrasonography. Crit Ultrasound J. 2013;5(Suppl 1):S1–S.

McMillan HJ, Writer H, Moreau KA, et al. Lumbar puncture simulation in pediatric residency training: improving procedural competence and decreasing anxiety. BMC Med Educ. 2016;16(1):198. https://doi.org/10.1186/s12909-016-0722-1.

Evans LV, Dodge KL, Shah TD, Kaplan LJ, Siegel MD, Moore CL, et al. Simulation training in central venous catheter insertion: improved performance in clinical practice. Acad Med. 2010;85(9):1462–9. https://doi.org/10.1097/ACM.0b013e3181eac9a3.

Madenci AL, Solis CV, de Moya MA. Central venous access by trainees: a systematic review and meta-analysis of the use of simulation to improve success rate on patients. Simul Healthc. 2014;9(1):7–14. https://doi.org/10.1097/SIH.0b013e3182a3df26.

Pietersen PI, Madsen KR, Graumann O, Konge L, Nielsen BU, Laursen CB. Lung ultrasound training: a systematic review of published literature in clinical lung ultrasound training. Crit Ultrasound J. 2018;10(1):23. https://doi.org/10.1186/s13089-018-0103-6.

Kahr Rasmussen N, Andersen TT, Carlsen J, et al. Simulation-based training of ultrasound-guided procedures in radiology - a systematic review. Ultraschall Med. 2019;40(5):584–602. https://doi.org/10.1055/a-0896-2714.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol. 2009;62(10):1006–12. https://doi.org/10.1016/j.jclinepi.2009.06.005.

Higgins JPT, Altman DG, Gøtzsche PC, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343(oct18 2):d5928. https://doi.org/10.1136/bmj.d5928.

Wells GA, Shea B, O’Connell D, Peterson J, Welch V, Losos M, Tugwell P. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Available from: http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp. Accessed 13 Mar 2018.

Osborn SR, Borhart J, Antonis MS. Medical students benefit from the use of ultrasound when learning peripheral IV techniques. Crit Ultrasound J. 2012;4(1):2. https://doi.org/10.1186/2036-7902-4-2.

Edwards C, Jones J. Development and implementation of an ultrasound-guided peripheral intravenous catheter program for emergency nurses. J Emerg Nurs. 2018;44(1):33–6. https://doi.org/10.1016/j.jen.2017.07.009.

Ng C, Ng L, Kessler DO. Attitudes towards three ultrasound-guided vascular access techniques in a paediatric emergency department. Br J Nurs. 2017;26(19):S26–S31. https://doi.org/10.12968/bjon.2017.26.19.S26.

Adhikari S, Schmier C, Marx J. Focused simulation training: emergency department nurses’ confidence and comfort level in performing ultrasound-guided vascular access. J Vasc Access. 2015;16(6):515–20. https://doi.org/10.5301/jva.5000436.

Breslin R, Collins K, Cupitt J. The use of ultrasound as an adjunct to peripheral venous cannulation by junior doctors in clinical practice. Med Teach. 2018;40(12):1275–80. https://doi.org/10.1080/0142159X.2018.1428737.

Costantino TG, Kirtz JF, Satz WA. Ultrasound-guided peripheral venous access vs. the external jugular vein as the initial approach to the patient with difficult vascular access. J Emerg Med. 2010;39(4):462–7. https://doi.org/10.1016/j.jemermed.2009.02.004.

Salleras-Duran L, Fuentes-Pumarola C, Bosch-Borras N, et al. Ultrasound-guided peripheral venous catheterization in emergency services. J Emerg Nurs. 2016;42(4):338–43. https://doi.org/10.1016/j.jen.2015.11.005.

Panebianco NL, Fredette JM, Szyld D, Sagalyn EB, Pines JM, Dean AJ. What you see (sonographically) is what you get: vein and patient characteristics associated with successful ultrasound-guided peripheral intravenous placement in patients with difficult access. Acad Emerg Med. 2009;16(12):1298–303. https://doi.org/10.1111/j.1553-2712.2009.00520.x.

Costantino TG, Parikh AK, Satz WA, Fojtik JP. Ultrasonography-guided peripheral intravenous access versus traditional approaches in patients with difficult intravenous access. Ann Emerg Med. 2005;46(5):456–61. https://doi.org/10.1016/j.annemergmed.2004.12.026.

Desai K, Vinograd AM, Abbadessa MKF, Chen AE. Longevity and complication rates of ultrasound guided versus traditional peripheral intravenous catheters in a pediatric emergency department. J Assoc Vasc Access. 2018;23(3):149–54. https://doi.org/10.1016/j.java.2018.06.002.

Shokoohi H, Boniface K, McCarthy M, Khedir al-tiae T, Sattarian M, Ding R, et al. Ultrasound-guided peripheral intravenous access program is associated with a marked reduction in central venous catheter use in noncritically ill emergency department patients. Ann Emerg Med. 2013;61(2):198–203. https://doi.org/10.1016/j.annemergmed.2012.09.016.

Duran-Gehring P, Bryant L, Reynolds JA, Aldridge P, Kalynych CJ, Guirgis FW. Ultrasound-guided peripheral intravenous catheter training results in physician-level success for emergency department technicians. J Ultrasound Med. 2016;35(11):2343–52. https://doi.org/10.7863/ultra.15.11059.

Durand-Bailloud L, Aho LS, Savoldelli G, Ecarnot F, Girard C, Benkhadra M. Non-dominant hand quicker to insert peripheral venous catheters under echographic guidance: a randomised trial. Anaesth Crit Care Pain Med. 2017;36(5):291–6. https://doi.org/10.1016/j.accpm.2016.08.008.

Brannam L, Blaivas M, Lyon M, Flake M. Emergency nurses’ utilization of ultrasound guidance for placement of peripheral intravenous lines in difficult-access patients. Acad Emerg Med. 2004;11(12):1361–3. https://doi.org/10.1197/j.aem.2004.08.027.

Oliveira L, Lawrence M. Ultrasound-guided peripheral intravenous access program for emergency physicians, nurses, and corpsmen (technicians) at a military hospital. Mil Med. 2016;181(3):272–6. https://doi.org/10.7205/MILMED-D-15-00056.

Blaivas M, Lyon M. The effect of ultrasound guidance on the perceived difficulty of emergency nurse-obtained peripheral IV access. J Emerg Med. 2006;31(4):407–10. https://doi.org/10.1016/j.jemermed.2006.04.014.

Blick C, Vinograd A, Chung J, Nguyen E, Abbadessa MKF, Gaines S, et al. Procedural competency for ultrasound-guided peripheral intravenous catheter insertion for nurses in a pediatric emergency department. J Vasc Access. 2021;22(2):232–7. https://doi.org/10.1177/1129729820937131.

Ballard HA, Tsao M, Robles A, et al. Use of a simulation-based mastery learning curriculum to improve ultrasound-guided vascular access skills of pediatric anesthesiologists. Paediatr Anaesth. 2020;30(11):1204–10. https://doi.org/10.1111/pan.13953.

Galen B, Baron S, Young S, Hall A, Berger-Spivack L, Southern W. Reducing peripherally inserted central catheters and midline catheters by training nurses in ultrasound-guided peripheral intravenous catheter placement. BMJ Qual Saf. 2020;29(3):245–9. https://doi.org/10.1136/bmjqs-2019-009923.

Amick AE, Feinsmith SE, Davis EM, et al. Simulation-based mastery learning improves ultrasound-guided peripheral intravenous catheter insertion skills of practicing nurses. Simul Healthc. 2021; Publish Ahead of Print.

Kule A, Richards RA, Vazquez HM, et al. Medical student ultrasound-guided intravenous catheter education: a randomized controlled trial of overtraining in a simulation-based mastery learning setting. Simul Healthc. 2021. https://doi.org/10.1097/SIH.0000000000000554. Epub ahead of print.

Bahl A, Pandurangadu AV, Tucker J, Bagan M. A randomized controlled trial assessing the use of ultrasound for nurse-performed IV placement in difficult access ED patients. Am J Emerg Med. 2016;34(10):1950–4. https://doi.org/10.1016/j.ajem.2016.06.098.

Batten S, Pazdernik V, Schneider R, Kondrashova T. Interprofessional approach to learning vascular access with ultrasonography by medical students and nurses. Mo Med. 2020;117(5):450–6.

Clemmesen L, Knudsen L, Sloth E, et al. Dynamic needle tip positioning - ultrasound guidance for peripheral vascular access. A randomized, controlled and blinded study in phantoms performed by ultrasound novices. Ultraschall Med. 2012;33(7):E321–e5.

Tassone HM, Tayal VS, Weekes AJ, et al. Ultrasound-guided oblique approach for peripheral venous access in a phantom model. Crit Ultrasound J. 2012;4(1):14.

Reeves T, Morrison D, Altmiller G. A nurse-led ultrasound-enhanced vascular access preservation program. Am J Nurs. 2017;117(12):56–64. https://doi.org/10.1097/01.NAJ.0000527490.24610.51.

Slomer A, Chenkin J. Does test-enhanced learning improve success rates of ultrasound-guided peripheral intravenous insertion? A randomized controlled trial. AEM Educ Train. 2017;1(4):310–5. https://doi.org/10.1002/aet2.10044.

Chenkin J, Lee S, Huynh T, Bandiera G. Procedures can be learned on the web: a randomized study of ultrasound-guided vascular access training. Acad Emerg Med. 2008;15(10):949–54. https://doi.org/10.1111/j.1553-2712.2008.00231.x.

Davis J, Faust T, Tajani A, Bates A, Jarriel J, Au A, et al. A randomized study of training with large versus small vessel size on successful ultrasound-guided peripheral venous access. J Vasc Access. 2017;18(2):163–6. https://doi.org/10.5301/jva.5000645.

Gardecki J, Hughes LP, Zakaria S, et al. Use of the color Doppler twinkle artifact for teaching ultrasound guided peripheral vascular access. J Vasc Access. 2020;22:1129729820959907. https://doi.org/10.1177/1129729820959907. Epub ahead of print.

Anderson AP, Taroc AM, Wang X, Beardsley E, Solari P, Klein EJ. Ultrasound guided peripheral IV placement: an observational study of the learning curve in pediatric patients. J Vasc Access. 2021:112972982098795. https://doi.org/10.1177/1129729820987958.

Stolz LA, Cappa AR, Minckler MR, Stolz U, Wyatt RG, Binger CW, et al. Prospective evaluation of the learning curve for ultrasound-guided peripheral intravenous catheter placement. J Vasc Access. 2016;17(4):366–70. https://doi.org/10.5301/jva.5000574.

Ault MJ, Tanabe R, Rosen BT. Peripheral intravenous access using ultrasound guidance: defining the learning curve. J Assoc Vasc Access. 2015;20(1):32–6. https://doi.org/10.1016/j.java.2014.10.012.

Griffiths J, Carnegie A, Kendall R, Madan R. A randomised crossover study to compare the cross-sectional and longitudinal approaches to ultrasound-guided peripheral venepuncture in a model. Crit Ultrasound J. 2017;9(1):9. https://doi.org/10.1186/s13089-017-0064-1.

Erickson CS, Liao MM, Haukoos JS, Douglass E, DiGeronimo M, Christensen E, et al. Ultrasound-guided small vessel cannulation: long-axis approach is equivalent to short-axis in novice sonographers experienced with landmark-based cannulation. West J Emerg Med. 2014;15(7):824–30. https://doi.org/10.5811/westjem.2014.9.22404.

Stone MB, Moon C, Sutijono D, Blaivas M. Needle tip visualization during ultrasound-guided vascular access: short-axis vs long-axis approach. Am J Emerg Med. 2010;28(3):343–7. https://doi.org/10.1016/j.ajem.2008.11.022.

Good RJ, Rothman KK, Ackil DJ, Kim JS, Orsborn J, Kendall JL. Hand motion analysis for assessment of nursing competence in ultrasound-guided peripheral intravenous catheter placement. J Vasc Access. 2019;20(3):301–6. https://doi.org/10.1177/1129729818804997.

Thorn S, Aagaard Hansen M, Sloth E, Knudsen L. Guidance markers increase the accuracy of simulated ultrasound-guided vascular access: an observational cohort study in a phantom. J Vasc Access. 2017;18(1):73–8. https://doi.org/10.5301/jva.5000614.

Fürst RV, Polimanti AC, Galego SJ, et al. Ultrasound-guided vascular access simulator for medical training: proposal of a simple, economic and effective model. World J Surg. 2017;41(3):681–6. https://doi.org/10.1007/s00268-016-3757-x.

Leung KY, Mok KL, Yuen CK, Wong YT, Kan PG. Evaluation of a commercial phantom and pork meat model for simulation based training of ultrasound guided intravenous catheterisation. Hong Kong J Emerg Med. 2016;23(3):153–8. https://doi.org/10.1177/102490791602300304.

Vitto MJ, Myers M, Vitto CM, Evans DP. Perceived difficulty and success rate of standard versus ultrasound-guided peripheral intravenous cannulation in a novice study group: a randomized crossover trial. J Ultrasound Med. 2016;35(5):895–8. https://doi.org/10.7863/ultra.15.06057.

Feinsmith S, Huebinger R, Pitts M, et al. Outcomes of a simplified ultrasound-guided intravenous training course for emergency nurses. J Emerg Nurs. 2018;44(2):169–75.e2.

Moore C. An emergency department nurse-driven ultrasound-guided peripheral intravenous line program. J Assoc Vasc Access. 2013;18(1):45–51. https://doi.org/10.1016/j.java.2012.12.001.

Primdahl SC, Todsen T, Clemmesen L, Knudsen L, Weile J. Rating scale for the assessment of competence in ultrasound-guided peripheral vascular access - a Delphi consensus study. J Vasc Access. 2016;17(5):440–5. https://doi.org/10.5301/jva.5000581.

Jung CF, Breaud AH, Sheng AY, Byrne MW, Muruganandan KM, Dhanani M, et al. Delphi method validation of a procedural performance checklist for insertion of an ultrasound-guided peripheral intravenous catheter. Am J Emerg Med. 2016;34(11):2227–30. https://doi.org/10.1016/j.ajem.2016.08.006.

Primdahl SC, Weile J, Clemmesen L, Madsen KR, Subhi Y, Petersen P, et al. Validation of the peripheral ultrasound-guided vascular access rating scale. Medicine. 2018;97(2):e9576. https://doi.org/10.1097/MD.0000000000009576.

Blaivas M, Brannam L, Fernandez E. Short-axis versus long-axis approaches for teaching ultrasound-guided vascular access on a new inanimate model. Acad Emerg Med. 2003;10(12):1307–11. https://doi.org/10.1197/S1069-6563(03)00534-7.

Chinnock B, Thornton S, Hendey GW. Predictors of success in nurse-performed ultrasound-guided cannulation. J Emerg Med. 2007;33(4):401–5. https://doi.org/10.1016/j.jemermed.2007.02.027.

Mahler SA, Wang H, Lester C, Skinner J, Arnold TC, Conrad SA. Short- vs long-axis approach to ultrasound-guided peripheral intravenous access: a prospective randomized study. Am J Emerg Med. 2011;29(9):1194–7. https://doi.org/10.1016/j.ajem.2010.07.015.

Oakley E, Wong AM. Ultrasound-assisted peripheral vascular access in a paediatric ED. Emerg Med Aust. 2010;22(2):166–70. https://doi.org/10.1111/j.1742-6723.2010.01281.x.

Bauman M, Braude D, Crandall C. Ultrasound-guidance vs. standard technique in difficult vascular access patients by ED technicians. Am J Emerg Med. 2009;27(2):135–40. https://doi.org/10.1016/j.ajem.2008.02.005.

Partovi-Deilami K, Nielsen JK, Moller AM, Nesheim SS, Jorgensen VL. Effect of ultrasound-guided placement of difficult-to-place peripheral venous catheters: a prospective study of a training program for nurse anesthetists. AANA J. 2016;84(2):86–92.

Doniger SJ, Ishimine P, Fox JC, Kanegaye JT. Randomized controlled trial of ultrasound-guided peripheral intravenous catheter placement versus traditional techniques in difficult-access pediatric patients. Pediatr Emerg Care. 2009;25(3):154–9. https://doi.org/10.1097/PEC.0b013e31819a8946.

Sou V, McManus C, Mifflin N, Frost SA, Ale J, Alexandrou E. A clinical pathway for the management of difficult venous access. BMC Nurs. 2017;16(1):64. https://doi.org/10.1186/s12912-017-0261-z.

Bridey C, Thilly N, Lefevre T, Maire-Richard A, Morel M, Levy B, et al. Ultrasound-guided versus landmark approach for peripheral intravenous access by critical care nurses: a randomised controlled study. BMJ Open. 2018;8(6):e020220. https://doi.org/10.1136/bmjopen-2017-020220.

Maiocco G, Coole C. Use of ultrasound guidance for peripheral intravenous placement in difficult-to-access patients: advancing practice with evidence. J Nurs Care Qual. 2012;27(1):51–5. https://doi.org/10.1097/NCQ.0b013e31822b4537.

Gopalasingam N, Thomsen AE, Folkersen L, et al. A successful model to learn and implement ultrasound-guided venous catheterization in apheresis. J Clin Apher. 2017;32(6):437–43. https://doi.org/10.1002/jca.21533.

Carter T, Conrad C, Wilson JL, et al. Ultrasound guided intravenous access by nursing versus resident staff in a community based teaching hospital: a “noninferiority” trial. Emerg Med Int. 2015;2015:563139.

Bair AE, Rose JS, Vance CW, Andrada-Brown E, Kuppermann N. Ultrasound-assisted peripheral venous access in young children: a randomized controlled trial and pilot feasibility study. West J Emerg Med. 2008;9(4):219–24.

Schoenfeld E, Shokoohi H, Boniface K. Ultrasound-guided peripheral intravenous access in the emergency department: patient-centered survey. West J Emerg Med. 2011;12(4):475–7. https://doi.org/10.5811/westjem.2011.3.1920.

Vinograd AM, Zorc JJ, Dean AJ, Abbadessa MKF, Chen AE. First-attempt success, longevity, and complication rates of ultrasound-guided peripheral intravenous catheters in children. Pediatr Emerg Care. 2018;34(6):376–80. https://doi.org/10.1097/PEC.0000000000001063.

Dargin JM, Rebholz CM, Lowenstein RA, Mitchell PM, Feldman JA. Ultrasonography-guided peripheral intravenous catheter survival in ED patients with difficult access. Am J Emerg Med. 2010;28(1):1–7. https://doi.org/10.1016/j.ajem.2008.09.001.

Blick C, Vinograd A, Chung J, et al. Procedural competency for ultrasound-guided peripheral intravenous catheter insertion for nurses in a pediatric emergency department. J Vasc Access. 2021;22(2):232–7. https://doi.org/10.1177/1129729820937131. Epub 2020 Jun 27.

Bortman J, Mahmood F, Mitchell J, Feng R, Baribeau Y, Wong V, et al. Ultrasound-guided intravenous line placement course for certified registered nurse anesthetists: a necessary next step. AANA J. 2019;87(4):269–75.

Kaganovskaya M, Wuerz L. Development of an educational program using ultrasonography in vascular access for nurse practitioner students. Br J Nurs (Mark Allen Publishing). 2021;30(2):S34–S42. https://doi.org/10.12968/bjon.2021.30.2.S34.

Khamis NN, Satava RM, Alnassar SA, Kern DE. A stepwise model for simulation-based curriculum development for clinical skills, a modification of the six-step approach. Surg Endosc. 2016;30(1):279–87. https://doi.org/10.1007/s00464-015-4206-x.

Bjerrum F, Thomsen ASS, Nayahangan LJ, Konge L. Surgical simulation: current practices and future perspectives for technical skills training. Med Teach. 2018;40(7):668–75. https://doi.org/10.1080/0142159X.2018.1472754.

Nayahangan LJ, Stefanidis D, Kern DE, Konge L. How to identify and prioritize procedures suitable for simulation-based training: experiences from general needs assessments using a modified Delphi method and a needs assessment formula. Med Teach. 2018;40(7):676–83. https://doi.org/10.1080/0142159X.2018.1472756.

Bessmann EL, Ostergaard HT, Nielsen BU, et al. Consensus on technical procedures for simulation-based training in anaesthesiology: a Delphi-based general needs assessment. Acta Anaesthesiol Scand. 2019;63(6):720–9. https://doi.org/10.1111/aas.13344.

Ahern M, Mallin MP, Weitzel S, Madsen T, Hunt P. Variability in ultrasound education among emergency medicine residencies. West J Emerg Med. 2010;11(4):314–8.

Clayton MJ. Delphi: a technique to harness expert opinion for critical decision-making tasks in education. Educ Psychol. 1997;17(4):373–86. https://doi.org/10.1080/0144341970170401.

Messick S. Validity of psychological assessment: validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. Am Psychol. 1995;50(9):741–9. https://doi.org/10.1037/0003-066X.50.9.741.

Downing SM. Validity: on the meaningful interpretation of assessment data. Med Educ. 2003;37(9):830–7. https://doi.org/10.1046/j.1365-2923.2003.01594.x.

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med. 2013;88(8):1178–86. https://doi.org/10.1097/ACM.0b013e31829a365d.

Brydges R, Butler D. A reflective analysis of medical education research on self-regulation in learning and practice. Med Educ. 2012;46(1):71–9. https://doi.org/10.1111/j.1365-2923.2011.04100.x.

Brydges R, Carnahan H, Rose D, et al. Comparing self-guided learning and educator-guided learning formats for simulation-based clinical training. J Adv Nurs. 2010;66(8):1832–44. https://doi.org/10.1111/j.1365-2648.2010.05338.x.

Snyder CW, Vandromme MJ, Tyra SL, et al. Effects of virtual reality simulator training method and observational learning on surgical performance. World J Surg. 2011;35(2):245–52. https://doi.org/10.1007/s00268-010-0861-1.

Lee TD, White MA, Carnahan H. On the role of knowledge of results in motor learning. J Mot Behav. 1990;22(2):191–208. https://doi.org/10.1080/00222895.1990.10735510.

Stefanidis D, Korndorffer JR Jr, Heniford BT, Scott DJ. Limited feedback and video tutorials optimize learning and resource utilization during laparoscopic simulator training. Surgery. 2007;142(2):202–6. https://doi.org/10.1016/j.surg.2007.03.009.

Kirkpatrick D, Kirkpatrick J. Evaluating training programs: the four levels. Oakland: Berrett-Koehler Publishers; 2006.

van Loon FHJ, Buise MP, Claassen JJF, Dierick-van Daele ATM, Bouwman ARA. Comparison of ultrasound guidance with palpation and direct visualisation for peripheral vein cannulation in adult patients: a systematic review and meta-analysis. Br J Anaesth. 2018;121(2):358–66. https://doi.org/10.1016/j.bja.2018.04.047.

Acknowledgements

Ida Marie Krøyer Dahl: proofreading, support.

Funding

Funded by the University of Southern Denmark through a half-year research grant for medical students. Other than the grant mentioned above, this research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Author information

Contributions

Rasmus Jørgensen: Main author, conceptualization, construction of protocol, composing of search string, article screening, data extraction, bias assessment, writing, table and figure construction. Pia Pietersen: Conceptualization, composing of search string, article screening, bias assessment, supervision, proofreading, editing and reviewing. Christian Laursen: Main supervisor, conceptualization, proofreading, editing, reviewing and expert guidance. Lars Konge: Supervisor, conceptualization, proofreading, editing, reviewing and expert guidance. The author(s) read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

General search strategy.

Additional file 2.

Full search strategy.

Additional file 3.

Data extraction.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Jørgensen, R., Laursen, C.B., Konge, L. et al. Education in the placement of ultrasound-guided peripheral venous catheters: a systematic review. Scand J Trauma Resusc Emerg Med 29, 83 (2021). https://doi.org/10.1186/s13049-021-00897-z

DOI: https://doi.org/10.1186/s13049-021-00897-z