Abstract

Background

Access to empirically supported treatments for common mental disorders in children and adolescents is often limited. Mental health apps might expand the supply of services, as they are considered cost-efficient, scalable and appealing to youth. However, little is known about the quality of available apps. Therefore, we aimed to systematically evaluate current mobile-based interventions for pediatric anxiety, depression and posttraumatic stress disorder (PTSD).

Methods

Systematic searches were conducted in the Google Play Store and Apple App Store to identify relevant apps. To be eligible for inclusion, apps needed to be: (1) designed to target anxiety, depression or PTSD in youth (0–18 years); (2) developed for children, adolescents or caregivers; (3) provided in English or German; and (4) operative after download. Two reviewers independently assessed the quality of eligible apps with two standardized rating systems, the Mobile App Rating Scale (MARS) and ENLIGHT.

Results

Overall, the searches identified 3806 apps, of which 15 mental health apps (0.39%) fulfilled our inclusion criteria. The mean overall scores suggested moderate app quality (MARS: M = 3.59, SD = 0.50; ENLIGHT: M = 3.22, SD = 0.73). Moreover, only one app had been evaluated in an RCT. The correlation between the two rating scales was high (r = .936, p < .001), whereas no significant correlations were found between the rating scales and user ratings (p > .05).

Conclusions

Our results point to a rather poor overall app quality and indicate an absence of scientifically driven development and a lack of methodologically sound evaluation of apps. Thus, future high-quality research is required, both in terms of theoretically informed intervention development and the assessment of mental health apps in RCTs. Furthermore, institutionalized best practices that provide central information on different aspects of apps (e.g., effectiveness, safety, and data security) for patients, caregivers, stakeholders and mental health professionals are urgently needed.


Background

Mental disorders frequently originate early in life [1] and are associated with significant burden and personal suffering [2]. Anxiety, depression and posttraumatic stress disorder are prevalent in children and adolescents, with increasing incidence rates worldwide [3,4,5]. Without effective and timely treatment, these common mental disorders tend to persist into adulthood, with increased long-term risks of developing further mental disorders and other detrimental outcomes [e.g., 6].

Empirically supported treatment options for common mental disorders in youth (0–18 years) include face-to-face psychotherapy [e.g., 7] and pharmacotherapy [e.g., 8]. In particular, the effectiveness and acceptability of cognitive-behavioral therapy (CBT) have been comprehensively documented in the treatment of anxiety disorders [9], depression [10, 11] and PTSD in children and adolescents [12]. However, the availability and uptake of conventional evidence-based psychotherapies remain low [13], due to various individual and structural barriers, such as a shortage of mental healthcare services, long waiting times, limited mobility, high treatment costs or fear of stigmatization [14, 15]. Thus, innovative and scalable solutions to narrow this treatment gap are urgently needed, and these novel interventions have to demonstrate empirical support with respect to the various aspects of evidence-based medicine and psychotherapy, such as efficacy and effectiveness, quality and intervention safety.

Internet- and mobile-based interventions (IMIs) hold the potential to narrow existing gaps in mental healthcare [13, 16], as they offer several advantages that might help to overcome some of these barriers to treatment. First, structural barriers might be reduced, as IMIs can be used irrespective of constraints of space and time [17, 18]. Second, IMIs are considered cost-efficient, as they might be scalable to large numbers of patients [19, 20]. Third, IMIs might also diminish some individual barriers, because they can reduce the threat of stigma by preserving anonymity and allow patients to integrate them flexibly into their daily lives, at their own pace and according to their individual progress [13, 21]. Furthermore, the digital delivery mode of these novel interventions might be especially appealing to youth, who are often familiar with the Internet and mobile applications from an early age [13, 22].

The efficacy of internet-based psychotherapeutic interventions for some common mental disorders in children and adolescents has so far been documented by several meta-analyses [13]. The strongest empirical support exists for anxiety disorders in youth, with effect sizes ranging from 0.30 to 0.77 (standardized mean differences (SMD); 95% CI −0.53 to 1.45) across five meta-analyses [23,24,25,26,27]. Furthermore, the efficacy of internet-based interventions for depression in youth is documented by four meta-analyses [23, 24, 27, 28], with effect sizes ranging from 0.16 to 0.76 (SMD; 95% CI −0.12 to 1.12). Aside from this meta-analytic evidence, there are also preliminary indications that internet-based interventions might be acceptable [29] and cost-effective [30], and that they could extend service provision within a stepped mental healthcare framework [29, 31].

Given the efficacy of internet-based psychotherapeutic interventions and the worldwide spread of smartphones, mobile health applications (MHA) are becoming increasingly appealing as a way to complement or deliver psychotherapeutic support [19]. Recent meta-analyses indicate that app-based psychotherapeutic interventions are also efficacious [32, 33], for instance for depressive symptoms (SMD = 0.28, 95% CI 0.21 to 0.36), generalized anxiety symptoms (SMD = 0.30, 95% CI 0.20 to 0.40) [32] or smoking behavior (SMD = 0.39, 95% CI 0.21 to 0.57) [33]. However, this evidence stems mainly from studies in adults: of the k = 66 [32] and k = 19 [33] studies included in these meta-analyses on the efficacy of app-based psychotherapeutic interventions, only one focused on youth [34].

The limited evidence on mobile health apps for children and adolescents is all the more significant because a vast (evidence-free) commercial app market is already accessible in routine care: in an international cooperation study, an analysis of N = 1,299 commercially available MHA showed that 94.8% were not evidence-based [35]. Besides major limitations of the evidence base in the app markets, systematic evaluations of the quality of available MHA highlight high heterogeneity and weak to moderate quality, especially regarding user engagement and information quality [16, 35,36,37,38]. Moreover, the privacy and security features of MHA available in the app markets have been identified as crucial issues for patient safety [39, 40].

Hence, it is of utmost importance to evaluate the apps available on the commercial app market in order to identify the few high-quality, secure and scientifically evaluated MHA and to inform and guide users as well as healthcare professionals. Standardized and reliable instruments such as the Mobile App Rating Scale (MARS) [35, 41, 42] or the ENLIGHT instrument [43], which assess quality, privacy features and evidence base, enable such evaluations. Although previous reviews have systematically evaluated health apps for depression [16] and PTSD [36] in adults, to our knowledge no such study has yet been conducted on mobile-based interventions targeting common mental disorders in children and adolescents. Therefore, this study aimed to systematically evaluate the quality of existing apps that were explicitly designed to address symptoms of anxiety, depression and PTSD in youth, using two established psychometric app rating scales [41,42,43]. Specifically, we aimed to:

1) systematically evaluate the quality of eligible apps in the European Google Play Store and Apple App Store regarding user engagement, functionality, aesthetics, and information content;
2) assess privacy and security features;
3) identify theoretical foundations of apps and their respective intervention components;
4) assess the concordance of user ratings and expert ratings.

Methods

Search strategy and inclusion criteria

A systematic search for MHA targeting anxiety, depression and posttraumatic stress disorder (PTSD) in children and adolescents was conducted using an automatic search engine (webcrawler) developed within the mobile health app database project (MHAD; http://mhad.science/) [16, 36]. The webcrawler is a program that searches the app stores for given search terms and automatically extracts the information provided in the stores (e.g., app name, app description, user ratings). The functionality and validity of the program have been demonstrated in previous studies [44,45,46]. For further technical details, see [47].

In the present study, three sets of search terms were used to identify MHA for anxiety, depression and PTSD in youth (age range: 0–18 years; Additional file 1). The search terms were derived by a team of psychologists (ASE, MD, YT), including a licensed psychotherapist for children and adolescents (MD) and an expert in MHA quality (YT; [16, 35, 36]). The systematic searches (in both English and German) were conducted from April 24 to April 26, 2019.

After app identification, a two-step inclusion process was conducted. First, all identified apps were screened on the basis of the app title and description against the following inclusion criteria: (a) the app was developed to address at least one of the targeted mental disorders, (b) the app focused on children and/or their caregivers, (c) the app was available in a language spoken by the authors (German or English), and (d) the app was available for download. Second, all remaining apps were downloaded and tested again against criteria (a)–(c) and against criterion (e): the app was operative. All eligible MHA were included in the current analysis.

Assessment

The Mobile App Rating Scale (MARS; German version [42]) and ENLIGHT are two standardized and reliable scales for assessing the content and quality of MHA. In the present analysis, app characteristics (e.g., app name, price, user rating) were assessed using the classification section of the standardized MARS [41, 42]. In addition, the classification section was used to assess the methods offered (e.g., mindfulness exercises) and the therapeutic background. Based on previous categorizations [16, 48], the therapeutic background was rated as cognitive behavioral therapy (CBT), psychodynamic psychotherapy, behavioral therapy, systemic therapy, third-wave CBT, humanistic therapy, integrative therapy, other, not applicable (N.A.; e.g., mainly psychoeducational content), or mixed (e.g., elements from multiple therapeutic backgrounds and theoretical orientations).

The MARS offers several items to assess privacy and security features (e.g., password protection) on a descriptive level, and all included apps were rated on these items. In addition, the ENLIGHT instrument contains four checklists (i.e., privacy, security, credibility, and evidence-based score) that provide further in-depth assessment domains. For privacy and security, explicit requirements are specified, each of which is rated as either fulfilled or not fulfilled. Credibility and evidence base are rated from very poor to excellent. For further information, see [41].

Quality rating

The MARS [41, 42] was used for the quality assessment. With the MARS, MHA quality is rated on a 5-point scale ranging from 1 “inadequate” to 5 “excellent”. A total of 19 items on four sub-dimensions were included: (A) engagement (5 items: fun, interest, individual adaptability, interactivity, target group), (B) functionality (4 items: performance, usability, navigation, gestural design), (C) aesthetics (3 items: layout, graphics, visual appeal), and (D) information quality (7 items: accuracy of app description, goals, quality of information, quantity of information, quality of visual information, credibility, evidence base). The psychometric quality of the MARS is excellent [35, 41, 42]. To assess the evidence base of the included apps, each reviewer searched Google and Google Scholar (search terms: app name, efficacy, effectiveness, observation study, study, evaluation, usability) as well as the websites of the app and the app provider for information on conducted studies. Based on the search results, the evidence item was rated as NA (“The app has not been trialled/tested”), 1 (“The evidence suggests the app does not work”), 2 (“App has been trialled (e.g., acceptability, usability, satisfaction ratings) and has partially positive outcomes in studies that are not randomised controlled trials (RCTs), or there is little or no contradictory evidence”), 3 (“App has been trialled (e.g., acceptability, usability, satisfaction ratings) and has positive outcomes in studies that are not RCTs, and there is no contradictory evidence”), 4 (“App has been trialled and outcome tested in 1–2 RCTs indicating positive results”), or 5 (“App has been trialled and outcome tested in > 3 high quality RCTs indicating positive results”) [41].
In addition to the four objective quality scales, the MARS contains two subjective subscales: (E) subjective quality (4 items: recommendation, frequency of use, willingness to pay, overall star-rating) and (F) perceived impact (6 items: awareness, knowledge, attitudes, intention to change, help-seeking, behavioural change).
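To illustrate how item ratings could roll up into the subscale and overall scores reported in the Results, the following Python sketch assumes the standard MARS scoring: each subscale score is the mean of its rated items, an item rated NA (such as the evidence-base item for an app that has never been trialled) is left out of that mean, and the overall app quality score is the mean of the four objective subscales (A) to (D), with the subjective subscales (E) and (F) reported separately. The names `OBJECTIVE_SUBSCALES`, `subscale_score` and `mars_quality` are hypothetical and not part of any published MARS tooling; the example ratings are invented.

```python
from statistics import mean
from typing import Dict, List, Optional

# Objective MARS subscales (A)-(D) with the item counts listed in the text.
OBJECTIVE_SUBSCALES: Dict[str, int] = {
    "engagement": 5,
    "functionality": 4,
    "aesthetics": 3,
    "information_quality": 7,
}


def subscale_score(items: List[Optional[int]]) -> float:
    """Mean of the rated items; None marks an item rated NA and is skipped."""
    rated = [score for score in items if score is not None]
    if not rated:
        raise ValueError("subscale has no rated items")
    if any(not 1 <= score <= 5 for score in rated):
        raise ValueError("MARS items are rated from 1 to 5")
    return float(mean(rated))


def mars_quality(ratings: Dict[str, List[Optional[int]]]) -> Dict[str, float]:
    """Subscale scores plus the overall quality mean for one app and one rater."""
    scores: Dict[str, float] = {}
    for name, n_items in OBJECTIVE_SUBSCALES.items():
        items = ratings[name]
        if len(items) != n_items:
            raise ValueError(f"{name}: expected {n_items} items, got {len(items)}")
        scores[name] = subscale_score(items)
    # Overall app quality = mean of the four objective subscale scores.
    scores["overall"] = float(mean(scores[name] for name in OBJECTIVE_SUBSCALES))
    return scores


# Invented ratings for one hypothetical app (not study data).
example_app = {
    "engagement": [4, 3, 3, 4, 3],
    "functionality": [5, 4, 4, 4],
    "aesthetics": [4, 4, 3],
    # Last item (evidence base) rated NA because the app was never trialled.
    "information_quality": [4, 4, 3, 3, 4, 4, None],
}
print(mars_quality(example_app))
```

As a plausibility check under the same assumption, averaging the four subscale means reported in the Results (3.34, 3.81, 3.78, 3.42) gives 3.5875, consistent with the reported overall mean of 3.59.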

In addition to the MARS, the ENLIGHT instrument was applied, since it provides information on additional dimensions (e.g., therapeutic alliance). ENLIGHT items are rated on a 5-point scale ranging from 1 “very poor” to 5 “very good”. In total, ENLIGHT covers 28 items on seven sub-dimensions: (a) usability (3 items), (b) visual design (3 items), (c) user engagement (5 items), (d) content (4 items), (e) therapeutic persuasiveness (7 items), (f) therapeutic alliance (3 items), and (g) general subjective evaluation (3 items). The internal consistency of ENLIGHT is excellent [43].

Rater training

The quality rating was conducted by two independent reviewers (ASE, SE). Both reviewers completed the online MARS training before the quality assessment (https://www.youtube.com/watch?v=5vwMiCWC0Sc; last updated on September 6, 2021). For the ENLIGHT instrument, no free online training is available. Instead, the reviewers were trained in using ENLIGHT by a researcher with prior experience and expertise in app quality assessment (YT; [16, 35, 36]). After this instruction, both reviewers (ASE, SE) rated several test apps, and the ratings were discussed between the reviewers and the instructor (YT) to complete the training phase. The excellent inter-rater reliability between the trained reviewers for the MARS (ICC = 0.87, 95% CI 0.84 to 0.90) and the inter-rater reliability for ENLIGHT (ICC = 0.75, 95% CI 0.65 to 0.83) indicate that the training was successful.
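The text does not state which ICC form underlies these values. Purely as an illustration, the sketch below assumes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1) in the Shrout and Fleiss notation, computed from a complete matrix whose rows are the rated targets (for example, item scores across apps) and whose columns are the reviewers. The function name `icc_2_1` is hypothetical; the example matrix is the classic six-target, four-judge data set from Shrout and Fleiss (1979), not data from this study.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings[i][j] is the score that rater j gave to target i; the matrix
    must be complete (no missing cells).
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k matrix with n >= 2 and k >= 2")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: targets (rows), raters (columns), residual.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_error) / denominator


# Example data from Shrout and Fleiss (1979): 6 targets rated by 4 judges.
judges = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(judges), 2))  # 0.29
```

With two reviewers, each row would simply hold the pair of scores for one rated target, and the result could be compared with the 0.75 threshold given under Data analyses. Confidence intervals such as those reported above additionally require the F distribution and are more conveniently obtained from a statistics package.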

Data analyses

MHA characteristics, therapeutic backgrounds, methods, and privacy and security features were analyzed descriptively. As a measure of agreement between the trained reviewers, the intraclass correlation coefficient (ICC) was calculated for the dimensional quality assessment [49]. An ICC above 0.75 was defined as satisfactory agreement between the reviewers [50]. The ratings of both reviewers were pooled, and means and standard deviations were then calculated for all quality dimensions of the MARS and ENLIGHT. The associations between user ratings (star ratings in the stores: 5-point rating from 1 star to 5 stars) and the experts’ quality ratings with the MARS and ENLIGHT were investigated by correlation analysis, with an alpha level of 5%. The Holm correction for multiple testing was applied [51]. Missing values were excluded listwise.
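The analysis steps in this paragraph can be sketched in Python under explicit assumptions: "pooling" is taken to mean averaging the two reviewers' scores for each app, standard deviations use the n - 1 denominator, listwise exclusion drops any app lacking a value in either variable, and Pearson's r is used because the Results report r. The Holm step-down procedure then adjusts the resulting set of p-values; computing each p-value itself requires the t distribution (for example via `scipy.stats.pearsonr`) and is not reproduced here. All function names are hypothetical and the example data are invented.

```python
import math
from statistics import mean, stdev
from typing import List, Optional, Sequence, Tuple


def pool_reviewers(first: Sequence[float], second: Sequence[float]) -> List[float]:
    """Average the two reviewers' scores per app (both lists in the same app order)."""
    if len(first) != len(second):
        raise ValueError("both reviewers must rate the same apps")
    return [(a + b) / 2 for a, b in zip(first, second)]


def describe(scores: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation (n - 1 denominator)."""
    return float(mean(scores)), stdev(scores)


def pearson_r_listwise(
    x: Sequence[Optional[float]], y: Sequence[Optional[float]]
) -> Tuple[float, int]:
    """Pearson r after dropping every app with a missing value (None) in x or y."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 3:
        raise ValueError("need at least three complete pairs")
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    mx, my = mean(xs), mean(ys)
    sxy = sum((a - mx) * (b - my) for a, b in pairs)
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy), len(pairs)


def holm_adjust(pvalues: Sequence[float]) -> List[float]:
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on;
        # the running maximum keeps the adjusted values monotone.
        running_max = max(running_max, min(1.0, (m - rank) * pvalues[i]))
        adjusted[i] = running_max
    return adjusted


# Invented data for five apps (not the study's data).
mars_pooled = pool_reviewers([3.5, 4.0, 2.8, 3.9, 3.1], [3.7, 3.8, 3.0, 4.1, 3.3])
stars = [4.5, None, 3.0, 4.0, 2.5]  # None: app without user ratings
print(describe(mars_pooled))
print(pearson_r_listwise(mars_pooled, stars))
print(holm_adjust([0.01, 0.04, 0.03]))
```

Applied to this study, listwise exclusion would drop the three apps without user ratings, leaving 12 apps for the correlations with star ratings, assuming no other values were missing; each Holm-adjusted p-value is then compared with the 5% alpha level.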

Results

Search and app characteristics

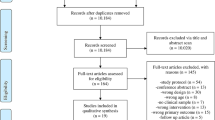

In total, 3806 MHA were identified (Apple App Store = 2658, Play Store = 1148). After the eligibility check, 15 MHA were included. All MHA were developed for Apple devices and n = 7 MHA were also available on Android. The inclusion process is summarized in Fig. 1.

Most MHA (n = 9, 60%) were in the category Health and Fitness, followed by Medicine (n = 6, 40%). Lifestyle (n = 3), Education (n = 2) and Social Networks (n = 1) were also represented. Of the 15 included MHA, two targeted depression, four anxiety and three PTSD; the remaining six targeted mixed symptoms of anxiety, depression and PTSD. Most MHA were free (n = 9, 60%). Prices of the six fee-based MHA (40%) ranged from 1.09 EUR to 10.99 EUR. On average, users rated the included MHA as moderate to good (M = 3.6, SD = 1.04); three MHA had no user ratings. MHA characteristics are summarized in Table 1.

The analysis of the methods provided by the included MHA identified 18 different features. Most frequently, MHA provided information (n = 7) and psychoeducational advice (n = 7). A total of n = 6 MHA (40%) included a monitoring function. MHA also included therapeutic functions. For an aggregated overview of all functions, see Fig. 2.

In four apps, the functions and exercises were drawn from multiple therapeutic backgrounds. A clear CBT focus was observed in two MHA, while at least some CBT elements (including third-wave CBT) were present in six MHA. Interestingly, three apps offered contact with a therapist or coach, which may result in different therapeutic backgrounds and techniques depending on the assigned therapist or coach. An app-specific summary of the functions provided and the therapeutic background of each MHA is given in Table 2.

Privacy, security and evidence

Of all 15 included MHA, n = 6 (40%) offered password protection, n = 4 (27%) required a log-in, n = 7 (47%) had a visible privacy policy, and n = 7 (47%) had an imprint. Active consent to data collection was required by n = 5 (33%) MHA and passive consent by n = 8 (53%); n = 1 (7%) provided information on conflicts of interest or financial background. An emergency function was provided by n = 2 (13%) MHA. The ENLIGHT checklist evaluation of privacy, security, credibility, and evidence base is summarized in Table 3.

In addition to the ENLIGHT checklist, the evidence base was assessed with the MARS item “Has the app been trialled/tested?”. This assessment revealed that only a single MHA (“iChill”) had been evaluated and outcome tested in an RCT.

Quality rating

MARS

The overall average quality of MHA was M = 3.59 (SD = 0.50). The four sub-dimensions were rated as follows: engagement M = 3.34 (SD = 0.76), functionality M = 3.81 (SD = 0.49), aesthetics M = 3.78 (SD = 0.63), and information quality M = 3.42 (SD = 0.68). The subjective quality was M = 3.13 (SD = 0.62) and the perceived impact M = 2.80 (SD = 0.75). Detailed information is provided in Table 4.

ENLIGHT

The overall quality was M = 3.22 (SD = 0.73). The sub-dimensions were rated as follows: usability M = 3.84 (SD = 0.39), visual design M = 3.72 (SD = 0.55), user engagement M = 3.27 (SD = 0.80), content M = 3.34 (SD = 0.72), therapeutic persuasiveness M = 2.34 (SD = 0.78), therapeutic alliance M = 2.56 (SD = 1.08), and general subjective evaluation M = 3.34 (SD = 0.83). For detailed information, see Table 1.

Associations between measures

The correlation between the overall MARS and ENLIGHT scores was high (r = .936, p < .001). No significant correlations were found between either the MARS or ENLIGHT and the user star ratings (p > .050).

Discussion

To the best of our knowledge, this is the first study to systematically evaluate the quality of available apps intended to target symptoms of anxiety, depression and PTSD in youth. Although our systematic searches yielded a high number of initial hits, only a very small fraction of apps (0.39%) fulfilled our eligibility criteria and were independently assessed with two established app rating scales. Overall, the results point to a mediocre quality of the 15 included apps and, most importantly, to a widespread absence of rigorous scientific evaluations of apps in RCTs.

Our quality ratings are in line with the results of previous systematic evaluations that applied the same rating scales to apps for various health conditions and application areas (e.g., depression, PTSD, pain, rheumatism, physical activity, weight management [16, 36, 38, 44,45,46, 52,53,54]), all of which indicate medium overall app quality on the MARS and ENLIGHT. In our study, the highest-rated categories were functionality (M = 3.81) and aesthetics (M = 3.78), followed by information quality (M = 3.42) and engagement (M = 3.34). Also consistent with previous studies [16, 36], the most frequently used therapeutic background was CBT, with various intervention components such as psychoeducation, relaxation techniques, mindfulness, exposure or monitoring/tracking.

However, and most importantly, a common deficiency of MHA is the absence of empirical investigations of their efficacy [16, 36, 55], which in our view represents a major limitation and constrains the relevance of other quality aspects. Considering this lack of empirical support, it seems a minor issue that youth, caregivers and mental health professionals are hardly able to navigate the overabundance of available apps and to distinguish therapeutically relevant apps from those that are irrelevant to their health-related goals [56]. Moreover, given the discrepancy between expert and user ratings, our results suggest that app users might not be able to appraise the quality of available apps sufficiently, which is consistent with findings from other studies [16]. Thus, it seems necessary to make objective and scientifically based expert ratings publicly available, in order to enable patients to make informed and guided decisions about MHA in particular and psychotherapeutic interventions in general, in support of evidence-based mental healthcare.

Besides several strengths (including the use of established, scientifically validated app rating scales, the focus on prevalent mental disorders and the comprehensive scope covering apps with divergent theoretical backgrounds), there are several limitations that should be considered when interpreting the findings of this study. First, the search strategy covered only apps available in the European Google Play and Apple App Stores, so the results might not be representative of other outlets and app stores. Second, owing to the rapidly evolving dynamics of the app market [16, 36, 57], novel apps as well as updates of included apps might have been missed, and some of the apps might no longer be accessible. Third, since the two rating scales were not designed for the specificities of child and adolescent psychotherapy (i.e., addressing relevant developmental issues), ratings of youth MHA should be interpreted with caution, especially regarding the domains of functionality and information content. Therefore, it would be a significant scientific advancement if specific MHA rating scales were available and psychometrically evaluated for mobile-based interventions for common mental disorders in youth. Fourth and foremost, quality ratings alone can never be sufficient to inform clinical practice and treatment approaches, since efficacy and effectiveness studies are the core and gold standard of evidence-based mental healthcare.

Accordingly, the most important future direction and an imperative necessity for the advancement of MHA is their methodologically sound and rigorous evaluation in randomized controlled trials (or in novel alternative evaluation frameworks for MHA), since only one app was subject to an efficacy study, with sobering non-significant findings [58]. Without comprehensive knowledge of their (non-)efficacy and intervention safety, these novel applications ought not to be part of routine mental healthcare. However, some stakeholders might compromise established standards of evidence-based medicine and advocate the evaluation of MHA in routine clinical care, without prior information on their efficacy and harmlessness. This debatable procedure can be observed in the German health sector, where clinicians and healthcare providers are currently entitled to prescribe apps, with the German health ministry and the certifying agency (i.e., BfArM) intending to evaluate their effectiveness post hoc (with app providers contributing these data themselves). This development might bring several risks for individual patients (e.g., undetected and unknown side effects, adverse events, or deterioration) and might contribute to an erosion of well-established standards of evidence-based medicine in general. This concern is further fueled by the massive commercialization of the app market and limited healthcare resources globally, raising further methodological and ethical questions [59, 60]. Thus, well-established standards of good clinical practice and scientifically based intervention evaluation should urgently be applied to MHA [61], as is largely the case for internet-based interventions and conventional face-to-face psychotherapies. Recommendations for quality criteria and standards for MHA are available and can complement efficacy and effectiveness studies of MHA in RCTs [62, 63].
Furthermore, the development of MHA needs to be theoretically and empirically informed, since dismantling and additive design studies are largely lacking for mobile-based interventions [18], and drawing on theoretically derived and evidence-based intervention components is a critical step in building efficacious interventions that engage essential mechanisms of therapeutic change and thereby improve outcomes [64].

Taken together, our study indicates that MHA for youth are of rather low quality and, more importantly, are insufficiently evaluated regarding their efficacy, which so far precludes general conclusions and recommendations for clinical practice and healthcare policy. Therefore, it is of utmost importance that patients and stakeholders are thoroughly and scientifically informed about this limited evidence base and the current deficiencies and limitations of MHA for youth. Information platforms offered by (non-profit) organizations, such as www.mhad.science (last updated September 6, 2021) or https://onemindpsyberguide.org/ (last updated September 6, 2021), that gather and provide scientific knowledge on central aspects of digital health interventions (e.g., effectiveness, acceptability, quality, safety, or data security) in a balanced and unbiased way, are one important contribution to the evidence-based information and empowerment of patients seeking advice and help for their mental health concerns.

Conclusions

This study systematically evaluated the quality of MHA for anxiety, depression and PTSD in youth. The results revealed a moderate overall app quality and point to a significant lack of empirically informed intervention development, as well as an absence of rigorous studies investigating the efficacy of apps currently offered in major marketplaces. Hence, future high-quality research is urgently needed to advance and evaluate MHA, contributing to the improvement and evidence-based use of digital health interventions. Furthermore, non-profit provision of scientifically based information on essential aspects of apps is urgently required to enable informed and balanced treatment choices for patients, caregivers and stakeholders in mental health.

Availability of data and materials

Data will be made available to researchers who provide a methodologically sound proposal, not already covered by other researchers. All requests should be directed to the corresponding author. Data requestors will need to sign a data access agreement. Provision of data is subject to data security regulations. Support depends on available resources.

References

Kessler RC, Amminger GP, Aguilar-Gaxiola S, Alonso J, Lee S, Üstün TB. Age of onset of mental disorders: a review of recent literature. Curr Opin Psychiatry. 2007;20:359–64.

Vos T, Allen C, Arora M, Barber RM, Bhutta ZA, Brown A, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 310 diseases and injuries, 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015. Lancet. 2016;388:1545–602.

Merikangas KR, He JP, Burstein M, Swanson SA, Avenevoli S, Cui L, et al. Lifetime prevalence of mental disorders in U.S. adolescents: results from the national comorbidity survey replication-adolescent supplement (NCS-A). J Am Acad Child Adolesc Psychiatry. 2010;49:980–9.

Polanczyk GV, Salum GA, Sugaya LS, Caye A, Rohde LA. Annual research review: a meta-analysis of the worldwide prevalence of mental disorders in children and adolescents. J Child Psychol Psychiatry Allied Discip. 2015;56:345–65.

Costello J, Erkanli A, Angold A. Is there an epidemic of child or adolescent depression? J Child Psychol Psychiatry. 2006;47:1263–71.

Lambert M, Bock T, Naber D, Löwe B, Schulte-Markwort M, Schäfer I, et al. Die psychische Gesundheit von Kindern, Jugendlichen und jungen Erwachsenen - Teil 1: Häufigkeit, Störungspersistenz, Belastungsfaktoren, Service-Inanspruchnahme und Behandlungsverzögerung mit Konsequenzen. Fortschritte der Neurol Psychiatr. 2013;81:614–27.

Weisz JR, Kuppens S, Eckshtain D, Ugueto AM, Hawley KM, Jensen-Doss A. Performance of evidence-based youth psychotherapies compared with usual clinical care: a multilevel meta-analysis. JAMA Psychiatry. 2013;70:750–61.

Cipriani A, Zhou X, Del Giovane C, Hetrick SE, Qin B, Whittington C, et al. Comparative efficacy and tolerability of antidepressants for major depressive disorder in children and adolescents: a network meta-analysis. Lancet. 2016;388:881–90.

Zhou X, Zhang Y, Furukawa TA, Cuijpers P, Pu J, Weisz JR, et al. Different types and acceptability of psychotherapies for acute anxiety disorders in children and adolescents: a network meta-analysis. JAMA Psychiatry. 2019;76:41–50.

Zhou X, Hetrick SE, Cuijpers P, Qin B, Barth J, Whittington CJ, et al. Comparative efficacy and acceptability of psychotherapies for depression in children and adolescents: a systematic review and network meta-analysis. World Psychiatry. 2015;14:207–22.

Cuijpers P, Karyotaki E, Eckshtain D, Ng MY, Corteselli KA, Noma H, et al. Psychotherapy for depression across different age groups: a systematic review and meta-analysis. JAMA Psychiatry. 2020;77:694–702.

Goldbeck L, Muche R, Sachser C, Tutus D, Rosner R. Effectiveness of trauma-focused cognitive behavioral therapy for children and adolescents: a randomized controlled trial in eight German mental health clinics. Psychother Psychosom. 2016;85:159–70.

Domhardt M, Steubl L, Baumeister H. Internet- and mobile-based interventions for mental and somatic conditions in children and adolescents: a systematic review of meta-analyses. Z Kinder Jugendpsychiatr Psychother. 2020;48:33–46.

Andrade LH, Alonso J, Mneimneh Z, Wells JE, Al-Hamzawi A, Borges G, et al. Barriers to mental health treatment: results from the WHO World Mental Health (WMH) Surveys. Psychol Med. 2014;44:1303–17.

Gulliver A, Griffiths KM, Christensen H. Perceived barriers and facilitators to mental health help-seeking in young people: a systematic review. BMC Psychiatry. 2010;10:113.

Terhorst Y, Rathner E-M, Baumeister H, Sander LB. «Hilfe aus dem App-Store»: Eine systematische Übersichtsarbeit und Evaluation von Apps zur Anwendung bei Depressionen. Verhaltenstherapie. 2018;28:101–12.

Domhardt M, Ebert DD, Baumeister H. Internet- und mobile-basierte Interventionen [Internet- and mobile-based interventions]. In: Psychologie der Gesundheitsförderung [Psychology of health promotion]. Bern; 2018. p. 397–410.

Domhardt M, Geßlein H, von Rezori RE, Baumeister H. Internet- and mobile-based interventions for anxiety disorders: a meta-analytic review of intervention components. Depress Anxiety. 2019;36:213–24.

Ebert DD, Van Daele T, Nordgreen T, Karekla M, Compare A, Zarbo C, et al. Internet- and mobile-based psychological interventions: applications, efficacy, and potential for improving mental health: a report of the EFPA E-Health Taskforce. Eur Psychol. 2018;23:167–87.

Steubl L, Sachser C, Baumeister H, Domhardt M. Intervention components, mediators, and mechanisms of change of Internet- and mobile-based interventions for post-traumatic stress disorder: protocol for a systematic review and meta-analysis. Syst Rev. 2019;8:1–10.

Lunkenheimer F, Domhardt M, Geirhos A, Kilian R, Mueller-Stierlin AS, Holl RW, et al. Effectiveness and cost-effectiveness of guided Internet- and mobile-based CBT for adolescents and young adults with chronic somatic conditions and comorbid depression and anxiety symptoms (youthCOACHCD): study protocol for a multicentre randomized controlled trial. Trials. 2020;21:1–15.

Stasiak K, Fleming T, Lucassen MFG, Shepherd MJ, Whittaker R, Merry SN. Computer-based and online therapy for depression and anxiety in children and adolescents. J Child Adolesc Psychopharmacol. 2016;26:235–45.

Ebert DD, Zarski A-C, Christensen H, Stikkelbroek Y, Cuijpers P, Berking M, et al. Internet and computer-based cognitive behavioral therapy for anxiety and depression in youth: a meta-analysis of randomized controlled outcome trials. PLoS One. 2015;10:e0119895.

Pennant ME, Loucas CE, Whittington C, Creswell C, Fonagy P, Fuggle P, et al. Computerised therapies for anxiety and depression in children and young people: a systematic review and meta-analysis. Behav Res Ther. 2015;67:1–18.

Podina IR, Mogoase C, David D, Szentagotai A, Dobrean A. A meta-analysis on the efficacy of technology mediated CBT for anxious children and adolescents. J Ration Emot Cogn Behav Ther. 2016;34:31–50.

Rooksby M, Elouafkaoui P, Humphris G, Clarkson J, Freeman R. Internet-assisted delivery of cognitive behavioural therapy (CBT) for childhood anxiety: systematic review and meta-analysis. J Anxiety Disord. 2015;29:83–92.

Ye X, Bapuji SB, Winters SE, Struthers A, Raynard M, Metge C, et al. Effectiveness of internet-based interventions for children, youth, and young adults with anxiety and/or depression: a systematic review and meta-analysis. BMC Health Serv Res. 2014;14:313.

Garrido S, Millington C, Cheers D, Boydell K, Schubert E, Meade T, et al. What works and what doesn’t work? A systematic review of digital mental health interventions for depression and anxiety in young people. Front Psychiatry. 2019;10:759.

Lenhard F, Andersson E, Mataix-Cols D, Rück C, Vigerland S, Högström J, et al. Therapist-guided, internet-delivered cognitive-behavioral therapy for adolescents with obsessive-compulsive disorder: a randomized controlled trial. J Am Acad Child Adolesc Psychiatry. 2017;56:10–9.e2.

Jolstedt M, Wahlund T, Lenhard F, Ljótsson B, Mataix-Cols D, Nord M, et al. Efficacy and cost-effectiveness of therapist-guided internet cognitive behavioural therapy for paediatric anxiety disorders: a single-centre, single-blind, randomised controlled trial. Lancet Child Adolesc Health. 2018;2:792–801.

Domhardt M, Baumeister H. Psychotherapy of adjustment disorders: current state and future directions. World J Biol Psychiatry. 2018;19:21–35.

Linardon J, Cuijpers P, Carlbring P, Messer M, Fuller-Tyszkiewicz M. The efficacy of app-supported smartphone interventions for mental health problems: a meta-analysis of randomized controlled trials. World Psychiatry. 2019;18:325–36.

Weisel KK, Fuhrmann LM, Berking M, Baumeister H, Cuijpers P, Ebert DD. Standalone smartphone apps for mental health—a systematic review and meta-analysis. npj Digit Med. 2019;2:118.

Tighe J, Shand F, Ridani R, Mackinnon A, De La Mata N, Christensen H. Ibobbly mobile health intervention for suicide prevention in Australian Indigenous youth: a pilot randomised controlled trial. BMJ Open. 2017;7:e013518.

Terhorst Y, Philippi P, Sander LB, Schultchen D, Paganini S, Bardus M, et al. Validation of the Mobile Application Rating Scale (MARS). PLoS One. 2020;15:e0241480.

Sander LB, Schorndanner J, Terhorst Y, Spanhel K, Pryss R, Baumeister H, et al. ‘Help for trauma from the app stores?’ A systematic review and standardised rating of apps for Post-Traumatic Stress Disorder (PTSD). Eur J Psychotraumatol. 2020;11:1701788.

Salazar A, de Sola H, Failde I, Moral-Munoz JA. Measuring the quality of mobile apps for the management of pain: systematic search and evaluation using the mobile app rating scale. JMIR mHealth uHealth. 2018;6:e10718.

Bardus M, van Beurden SB, Smith JR, Abraham C. A review and content analysis of engagement, functionality, aesthetics, information quality, and change techniques in the most popular commercial apps for weight management. Int J Behav Nutr Phys Act. 2016;13:35.

Grundy Q, Chiu K, Held F, Continella A, Bero L, Holz R. Data sharing practices of medicines related apps and the mobile ecosystem: traffic, content, and network analysis. BMJ. 2019;364:l920.

Huckvale K, Torous J, Larsen ME. Assessment of the data sharing and privacy practices of smartphone apps for depression and smoking cessation. JAMA Netw Open. 2019;2:e192542.

Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, Mani M. Mobile app rating scale: a new tool for assessing the quality of health mobile apps. JMIR mHealth uHealth. 2015;3:e27.

Messner EM, Terhorst Y, Barke A, Baumeister H, Stoyanov S, Hides L, et al. The German version of the mobile app rating scale (MARS-G): development and validation study. JMIR mHealth uHealth. 2020;8:e14479.

Baumel A, Faber K, Mathur N, Kane JM, Muench F. Enlight: a comprehensive quality and therapeutic potential evaluation tool for mobile and web-based eHealth interventions. J Med Internet Res. 2017;19:e82.

Portenhauser A, Terhorst Y, Schultchen D, Sander L, Denkinger M, Waldherr N, et al. Mobile apps for older adults: systematic review and evaluation within online stores. JMIR Aging. 2021;4:1–16.

Paganini S, Terhorst Y, Sander LB, Catic S, Balci S, Küchler A, et al. Quality of physical activity apps: systematic search in app stores and content analysis. JMIR mHealth uHealth. 2021;9:e22587.

Schultchen D, Terhorst Y, Holderied T, Stach M, Messner E-M, Baumeister H, et al. Stay present with your phone: a systematic review and standardized rating of mindfulness apps in European App Stores. Int J Behav Med. 2020;28:1–9.

Stach M, Kraft R, Probst T, Messner EM, Terhorst Y, Baumeister H, et al. Mobile health app database: a repository for quality ratings of mHealth apps. Proc IEEE Symp Comput Med Syst. 2020. p. 427–32.

Kampling H, Baumeister H, Jäckel WH, Mittag O. Prevention of depression in chronically physically ill adults. Cochrane Database Syst Rev. 2014. Art. No.: CD011246.

Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med. 2016;15:155–63.

Fleiss JL. The design and analysis of clinical experiments. New York: Wiley; 1999.

Holm S. A simple sequentially rejective multiple test procedure. Scand J Stat. 1979;6:65–70.

Schoeppe S, Alley S, Rebar AL, Hayman M, Bray NA, Van Lippevelde W, et al. Apps to improve diet, physical activity and sedentary behaviour in children and adolescents: a review of quality, features and behaviour change techniques. Int J Behav Nutr Phys Act. 2017;14:83.

Machado GC, Pinheiro MB, Lee H, Ahmed OH, Hendrick P, Williams C, et al. Smartphone apps for the self-management of low back pain: a systematic review. Best Pract Res Clin Rheumatol. 2016;30:1098–109.

Knitza J, Tascilar K, Messner E-M, Meyer M, Vossen D, Pulla A, et al. German mobile apps in rheumatology: review and analysis using the Mobile Application Rating Scale (MARS). JMIR mHealth uHealth. 2019;7:e14991.

Sucala M, Cuijpers P, Muench F, Cardos R, Soflau R, Dobrean A, et al. Anxiety: there is an app for that. A systematic review of anxiety apps. Depress Anxiety. 2017;34:518–25.

Shen N, Levitan MJ, Johnson A, Bender JL, Hamilton-Page M, Jadad AR, et al. Finding a depression app: a review and content analysis of the depression app marketplace. JMIR mHealth uHealth. 2015;3:e3713.

Larsen ME, Nicholas J, Christensen H. Quantifying app store dynamics: longitudinal tracking of mental health apps. JMIR mHealth uHealth. 2016;4:e6020.

Christensen H, Batterham P, Mackinnon A, Griffiths KM, Hehir KK, Kenardy J, et al. Prevention of generalized anxiety disorder using a web intervention, iChill: randomized controlled trial. J Med Internet Res. 2014;16:e199.

Singh K, Drouin K, Newmark LP, Lee J, Faxvaag A, Rozenblum R, et al. Many mobile health apps target high-need, high-cost populations, but gaps remain. Health Aff. 2016;35:2310–8.

Stoll J, Müller JA, Trachsel M. Ethical issues in online psychotherapy: a narrative review. Front Psychiatry. 2020;10:993.

Domhardt M, Cuijpers P, Ebert D, Baumeister H. More light? Opportunities and pitfalls in digitalised psychotherapy process research. Front Psychol. 2021;2:863.

Torous J, Andersson G, Bertagnoli A, Christensen H, Cuijpers P, Firth J, et al. Towards a consensus around standards for smartphone apps and digital mental health. World Psychiatry. 2019;18:97–8.

Henson P, David G, Albright K, Torous J. Deriving a practical framework for the evaluation of health apps. Lancet Digit Health. 2019;1:e52–4.

Domhardt M, Steubl L, Boettcher J, Buntrock C, Karyotaki E, Ebert DD, et al. Mediators and mechanisms of change in internet- and mobile-based interventions for depression: a systematic review. Clin Psychol Rev. 2021;83:101953.

Acknowledgements

The authors would like to thank Rüdiger Pryss, Michael Stach, Robin Kraft, Pascal Damasch and Philipp Dörzenbach for their support in developing the web crawler and for their IT expertise.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors. All costs were covered by the Department of Clinical Psychology and Psychotherapy and the Publication Fund of Ulm University.

Author information

Contributions

MD, YT, HB, EMM and LS designed and initiated this study. ASE and SE conducted the MARS ratings, supervised by MD and YT. MD and YT wrote the first draft of the manuscript. All authors revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors read and approved the present manuscript and gave their consent for publication.

Competing interests

No potential competing interests are reported by the authors.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Search terms.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Domhardt, M., Messner, EM., Eder, AS. et al. Mobile-based interventions for common mental disorders in youth: a systematic evaluation of pediatric health apps. Child Adolesc Psychiatry Ment Health 15, 49 (2021). https://doi.org/10.1186/s13034-021-00401-6

DOI: https://doi.org/10.1186/s13034-021-00401-6