Abstract

Background

Historically, the focus of cost-effectiveness analyses has been on the costs to operate and deliver interventions after their initial design and launch. The costs related to design and implementation of interventions have often been omitted. Ignoring these costs leads to underestimation of the true cost of interventions and biases economic analyses in favor of new interventions. This is especially true in low- and middle-income countries (LMICs), where implementation may require substantial up-front investment. This scoping review was conducted to explore the topics, depth, and availability of scientific literature on integrating implementation science into economic evaluations of health interventions in LMICs.

Methods

We searched Web of Science and PubMed for papers published between January 1, 2010, and December 31, 2021, that included components of both implementation science and economic evaluation. Studies from LMICs were prioritized for review, but papers from high-income countries were included if their methodology/findings were relevant to LMIC settings.

Results

Six thousand nine hundred eighty-six studies were screened, of which 55 were included in full-text review and 23 were selected for inclusion and data extraction. Most papers were theoretical, though some focused on a single disease or disease subset, including mental health (n = 5), HIV (n = 3), tuberculosis (n = 3), and diabetes (n = 2). Manuscripts included a mix of methodology papers, empirical studies, and reviews (e.g., narrative reviews). Authorship of the included literature was skewed toward high-income settings: 22 of the 23 papers had first and senior authors from high-income countries. Across the nine included empirical studies, no implementation cost outcome was measured consistently, and only four studies could be mapped to an existing costing or implementation framework. Studies also varied substantially in how they defined implementation costs and in the methods used to collect them.

Conclusion

A sparse but growing literature explores the intersection of implementation science and economic evaluation. Key needs include more research in LMICs, greater consensus on the definition of implementation costs, standardized methods to collect such costs, and identification of the outcomes of greatest relevance. Addressing these gaps will strengthen the links between implementation science and economic evaluation and yield more robust and accurate estimates of intervention costs.

Trial registration

The protocol for this manuscript was published on the Open Science Framework. It is available at: https://osf.io/ms5fa/ (DOI: 10.17605/OSF.IO/32EPJ).


Background

Economic evaluation is widely used to help decision-makers evaluate the value-for-money tradeoffs of a variety of public health interventions [1]. Only recently, however, have the fields of economic evaluation and implementation science begun to converge. Most existing guidelines for the conduct of economic evaluations (including cost-effectiveness analyses) pay little attention to program implementation and improvement, such as the costs of designing health interventions (e.g., focus groups to design the intervention, sensitization events), initially implementing them (e.g., developing infrastructure, hiring and training program staff), and sustaining them (e.g., annual re-training of personnel) [2, 3]. As a result, most cost-effectiveness analyses to date have focused on estimating the cost of delivering an intervention after it has already been designed and launched, thereby underestimating its total cost [4,5,6].

Although relevant in high-income countries, the costs of initial design, implementation, and improvement are particularly salient in low- and middle-income countries (LMICs), where resources are often constrained and initial implementation of health interventions may require new infrastructure (e.g., information technology, transportation systems) and/or technical assistance from consultants whose salaries often reflect prevailing wages in higher-income settings. In these settings, economic evaluations that consider only the costs of delivery are especially likely to underestimate the total cost of interventions. Such omissions can mislead decision-makers about program feasibility by glossing over the up-front investments required to design and launch a program and the recurring costs required to maintain it. They may also miss an opportunity to highlight infrastructural investments (e.g., development of health information systems) with transformative potential for other priorities; such economies of scope are a criterion that some decision-makers may weigh when making investments.

The growing field of implementation science represents an opportunity to help fill this knowledge gap [7]. Many conceptual frameworks for implementation science recommend collecting costs and economic data alongside other implementation outcomes [8,9,10]. Despite these general recommendations, specific guidance is lacking as to how economic data should be collected in the context of implementation or how to interpret such data to inform decision-making. There is a need for more practical exploration of how implementation frameworks can inform economic evaluations such as cost-effectiveness analyses and budget impact analyses.

To date, little is known about the scope of research that has been performed at the intersection of economic evaluation and implementation science, particularly in LMICs. We therefore conducted a scoping review to investigate the availability, breadth, and consistency of literature on the integration of economic evaluation and implementation science for health interventions in LMICs and to identify gaps in knowledge that should be filled as the fields of economic evaluation and implementation science are increasingly integrated.

Methods

The literature search strategy was created following guidelines from the Joanna Briggs Institute (JBI) Manual for Evidence Synthesis [11] and the PRISMA-ScR checklist [12]. The protocol for this scoping review was published online May 2, 2022 and is available from the Open Science Framework at: https://osf.io/ms5fa/ (DOI: 10.17605/OSF.IO/32EPJ). The protocol is also included as supplementary material for this manuscript.

The primary question/objective of this review was: “What is the scope of the existing scientific literature on integration of implementation science and economic evaluation for health interventions in LMICs?” (Fig. 1).

Fig. 1. Scope of review. We sought primarily to identify articles that evaluated the integration of implementation science and economic evaluation in the context of low- and middle-income countries (LMICs) [purple shaded area]. To better inform this space, we also included articles from high-income settings [red shaded area]. Both theoretical and empirical studies were included in this review.

Eligibility criteria

Eligible papers must have been written in English, Spanish, French, or Portuguese, and were required to focus on studies of programs or policies that emphasize targeting of health interventions. Included papers were required to address components of both implementation science and economic evaluation. We included methodology papers, review papers, peer-reviewed clinical research, grey literature, and conference abstracts, and allowed studies that used both empirical data and theoretical frameworks. Protocol papers were excluded. No restrictions were placed on settings or study populations, but priority was given to studies centered in LMICs. Studies from high-income countries (HICs) were eligible if their methodology and findings were deemed by both independent reviewers to be potentially relevant and applicable to LMICs. As an example of a method that was deemed to be potentially relevant to LMICs, Saldana et al. (2014) mapped implementation resources and costs in the implementation process for a Multidimensional Foster Care Intervention in the USA; this mapping process could be replicated for other diseases in the LMIC context [13].

Information sources

We searched Web of Science and PubMed for papers published from January 1, 2010, through December 31, 2021. We also consulted the Reference Case of the Global Health Costing Consortium and searched the grey literature using the methodology outlined by the Canadian Agency for Drugs and Technologies in Health [14, 15]. We used a mix of Medical Subject Headings (MeSH terms) and English-language search queries related to economic evaluation and implementation science, testing different combinations of phrases to capture as many relevant articles as possible. The full list of MeSH and search terms is available in Additional file 1. The PubMed and Web of Science search engines sort articles in order of relevance; because of limited human resources, we aimed to screen approximately the first 3,000 articles listed by relevance in each database. To identify additional papers for abstract screening after completing the database searches, we reviewed the references of all identified articles (“backward snowballing”) as well as lists of publications that cited the included articles (“forward snowballing”). We also reviewed all publications by any author who had two or more first- or senior-author papers in the final publication list. The snowballing and author searches were conducted using Google Scholar.

Selection of sources of evidence

After completion of the initial literature search, all articles were screened for eligibility and inclusion in two stages. First, two authors (AM and RRT) independently reviewed the titles and abstracts of all studies for eligibility. At title screening, we excluded papers that had no term related to economic evaluation or implementation science; at abstract screening, we excluded papers whose abstracts had no term related to implementation. Each reviewer voted independently on whether to include each study and provided a reason for any exclusion. Conflicts were resolved by discussion with a third reviewer (DWD).

After abstract screening, all studies that received “eligible/include” votes from both reviewers underwent full-text screening. The same two authors independently reviewed each full text for eligibility, with conflicts resolved by the same third reviewer. At this stage, we removed papers that did not directly discuss or explore implementation science and economic outcomes, or that did not otherwise meet the eligibility criteria described above. Studies remaining after both rounds of screening underwent data extraction (using a standardized abstraction tool), final analysis, and evaluation.

Data charting and data items

We created a data extraction tool in Microsoft Excel to guide capture of relevant details from included articles and piloted it before use to ensure that all fields of interest were captured. Two authors charted data for all papers independently, in duplicate. Extraction focused on metadata, study details, interventions used, frameworks considered, methodology, results and outcomes, and key takeaways; the full list of extracted items is available in Additional file 1. Results were synthesized using both quantitative and qualitative methods, including enumeration of key concepts, perspectives, populations, and themes.

Results

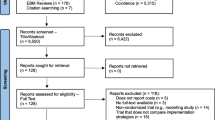

From an initial list of over 6,900 articles, we identified 23 unique articles for data extraction (Fig. 2).

Fig. 2. PRISMA flow diagram. Rectangular boxes outlined in green show the articles included at each step of the review; curved boxes outlined in red list the reasons for excluding articles at each step. Because the PubMed and Web of Science search engines list articles in order of relevance, articles ranked low in these lists (4571/6986, 65%) were excluded. Following this step, 2314 of 2415 (96%) remaining articles were excluded for not having terms related to economic evaluation or implementation science, 61 of 116 articles (53%) screened by abstract were excluded for not having implementation outcomes, and 32 of 55 articles (58%) screened by full text were excluded for not being relevant to LMICs and/or implementation science outcomes.

Characteristics of included studies are presented in Table 1. Despite our explicit focus on LMICs, only one of 23 papers (4%) had a first or senior author from an LMIC [16]. Of the nine studies that provided empirical results, only one (11%) focused on an LMIC [17], five (56%) focused on high-income settings [13, 18,19,20,21], one (11%) examined both LMICs and HICs [22], and two (22%) had a global focus [16, 23]. Six (26%) of the 23 papers focused on infectious diseases [17, 22, 24,25,26,27], five (22%) on mental health [13, 19, 28,29,30], and three (13%) on non-infectious diseases [18, 20, 21]. The remaining studies did not focus empirically on one specific disease area. For example, Cidav et al. (2020) advanced a theoretical framework for incorporating cost estimates into implementation evaluation [23]. Most research on this subject was recent: 12 of the 23 studies were published in the last 2 years of the review period (2020–2021) [17, 18, 22,23,24,25, 27,28,29,30,31,32].

Seven (30%) of the 23 studies were reviews [25, 30,31,32,33,34,35], nine (39%) were methodologically focused [16, 20, 23,24,25, 28, 29, 36, 37], and nine (39%) used empirical data [13, 16,17,18,19,20,21,22,23]. Because some papers combined more than one study design, these categories overlap and sum to more than 23.

Of the nine empirical studies, four (44%) could be mapped to an existing costing or implementation framework. Cidav et al. (2020) leveraged Proctor’s Outcomes for Implementation Research Framework to systematically estimate costs [23], Hoomans et al. (2011) provided details of a total net benefits approach [20], Saldana et al. (2014) leveraged the Stages of Implementation Completion (SIC) template for mapping costs [13], and da Silva Etges et al. (2019) presented a Time Driven Activity Based Costing (TDABC) framework [16]. A few other frameworks, such as the Consolidated Framework for Implementation Research [9], the Implementation Outcome Framework [8], the Policy Implementation Determinants Framework [38], and the RE-AIM Framework [39], were applied by other included studies.

Papers covered a variety of focus areas, ranging from costing methodologies [16, 20, 23,24,25, 28, 29, 36, 37] and determinants of implementation [31] to reviews highlighting the paucity of evidence from economic evaluation in implementation science [34]. As examples of topic areas, Hoomans and Severens (2014) noted the lack of a widely accepted mechanism for incorporating cost considerations into the implementation of programmatic guidelines [37]. Bozzani (2021) discussed integrating “real-world” considerations to estimate the true cost of an intervention more accurately [27]. Nichols et al. (2020) argued that many implementation costs are not one-off payments, even though they are typically treated as such in economic analyses [17]. Salomon (2019) discussed how the favorable results of most cost-effectiveness analyses may reflect a systematic bias toward underestimating costs or overestimating impact [26].

In terms of developing frameworks for costing the implementation of health interventions, Cidav et al. (2020) broke down costs by both implementation strategy and phase of implementation [23]. Krebs and Nosyk (2021) discussed mapping intervention costs to implementation outcomes such as maintenance [25]. Sohn et al. (2020) proposed partitioning an intervention into three phases (design, initiation, and maintenance) to measure costs at different points in the implementation process [24]. Among the few empirical studies included, outcomes centered on cost per patient, cost per participant, or the net and marginal costs of implementation. Measured implementation cost outcomes ranged from simple collection of training costs at study launch [22] to detailed collection of data on installation, maintenance, and personnel costs over several months or years of follow-up [17, 24]. Many studies neither offered an explicit definition of implementation costs nor specified which goods or services those costs included.

Discussion

This scoping review analyzed 23 articles evaluating implementation outcomes and implementation costs in the health economic literature. Our review showed a growing body of literature on implementation costs and on methodologies for evaluating them. However, the reviewed articles varied widely in what they meant by implementation costs, and the relevance of the current literature to LMICs is limited. Only 8 of the 23 articles were based in LMICs or directly alluded to the applicability of their techniques in low-resource settings, and only one had a first or senior author from an LMIC. This gap reflects broader trends in economic evaluation, in which only a small portion of all costing analyses are conducted in LMICs [26]. The broad methodologies and concepts for collecting costs may, in principle, be translatable from high- to low-income settings. However, because many studies and techniques from high-income settings rely on rich costing data and large budgets, they may not be applicable or realistic in low-income settings [18, 19, 21]. Since implementation and/or improvement costs may represent a larger portion of the total cost of interventions in LMICs, the paucity of both economic evaluations and implementation costing studies from LMICs represents an important area for future research.

An important component of this research is to evaluate the feasibility of sustaining these health interventions and bringing them to scale—and the “sunk” costs to the health system if such scaling-up does not succeed. The importance of costing the sustainability of interventions is especially pertinent in LMICs, where high personnel turnover and sub-optimal infrastructure can make maintenance/sustainability particularly expensive. To capture such costs, researchers should consider incorporating cost items such as equipment breakdown (e.g., parts and labor to repair equipment), hiring and training of replacement staff, quality assurance and control, and investments in underlying infrastructure (e.g., stable electrical supply) that may be required to sustain health interventions in LMIC contexts.

Cultural adaptation is another critical component of successful implementation in the LMIC context. Such adaptation, which may include formative qualitative research, application of contextual frameworks, and human-centered design (HCD), is often very expensive relative to delivery of the intervention itself. For example, implementing even apparently simple interventions such as umbilical chlorhexidine may require qualitative research, ethnographic inquiry, and community engagement, frequently at great cost [40, 41]. As another illustrative example, the estimated cost of HCD for a tuberculosis contact investigation strategy was $356,000, versus a delivery cost of $0.41 per client reached [42].
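To make the size of this contrast concrete, the minimal sketch below spreads a one-off design cost over the number of clients reached, using the $356,000 HCD cost and $0.41 per-client delivery cost reported for the tuberculosis example. The client counts are hypothetical, and the simple undiscounted, linear amortization (no annualization of the design investment, constant delivery cost at any scale) is an illustrative assumption, not the costing method of the cited study; the function name `average_cost_per_client` is likewise our own.

```python
def average_cost_per_client(design_cost: float,
                            delivery_cost_per_client: float,
                            clients_reached: int) -> float:
    """Average cost per client when a one-off design cost is spread evenly over all clients reached.

    Illustrative assumptions: no discounting, no annualization of the design
    investment, and a delivery cost per client that does not change with scale.
    """
    if clients_reached <= 0:
        raise ValueError("clients_reached must be a positive integer")
    return delivery_cost_per_client + design_cost / clients_reached


HCD_DESIGN_COST = 356_000.0  # reported cost of human-centered design (USD)
DELIVERY_COST = 0.41         # reported delivery cost per client reached (USD)

# Hypothetical numbers of clients reached over the life of the program.
for clients in (10_000, 100_000, 1_000_000):
    avg = average_cost_per_client(HCD_DESIGN_COST, DELIVERY_COST, clients)
    print(f"{clients:>9,} clients: ${avg:.2f} per client "
          f"(delivery-only estimate: ${DELIVERY_COST:.2f})")
```

Under these assumptions, a program reaching 100,000 clients would cost about $3.97 per client, nearly ten times the delivery-only figure, so an evaluation that omitted the design cost would understate the average cost accordingly.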

We note that “implementation” and “implementation science” are terms used in one specific field of research; other fields may use different terms, such as “improvement.” While this scoping review used “implementation” as a grounding term, use of “improvement” and “improvement science” may have resulted in different findings. For example, McDonald et al. [43] synthesized evidence to better explain the quality improvement field for practitioners and researchers, and Hannan et al. [44] studied the application of improvement science to the field of education. In evaluating the integration of costs, therefore, future studies in the field of implementation science may also wish to draw on literature that does not center on the term “implementation.”

To improve transparency and consistency in the definition and collection of implementation costs, at least four steps may be useful (Fig. 3). First, implementation costing studies could explicitly define the period of the implementation process during which costs are being collected. Both health economists and implementation scientists have highlighted the importance of defining the timing of the evaluation [24, 45]. Intuitively, one might assume that “implementation” refers mainly to the beginning (and, to a lesser extent, the middle) of a process. But implementation is “the process of making something active or effective”: a process that may have no end point, given the need for continuous acquisition or development of resources to facilitate program upkeep (e.g., training new cohorts of health professionals) and for monitoring and evaluation to ensure efficient use of program resources and to evaluate impact. One suggested approach to delineating the timing of implementation costs uses three phases: “design/pre-implementation”, “initiation/implementation”, and “maintenance/post-implementation” [24, 45]. Second, as argued by Nichols et al. (2020), authors should note whether implementation costs are incurred and collected at a single point in time or on a recurring basis [17]. Third, the activities and items included as “implementation costs” should be made more explicit. Researchers should state what types of activities, materials, and goods their implementation costs include, and work toward shared methods for capturing cost estimates. Doing so would help build consensus on a reasonable taxonomy of implementation costs in health interventions. Finally, consensus should be developed regarding what constitutes the implementation process itself. Many studies, for example, included routine operational and delivery costs among the costs of “implementing” an intervention. Although such costs are critical to the implementation of health interventions, counting operational expenses as “implementation” costs may have the unintended consequence of overlooking other costs required for the design, initiation, and sustainability of interventions. A similar distinction is needed to separate research costs, such as Institutional Review Board approval, from implementation expenses, as the two are often conflated. Clearer consensus, supported by more examples, on how to cost these activities separately from routine operation and delivery could lead to their more frequent inclusion in economic evaluations.
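One way to operationalize these steps, including the separation of research and routine delivery costs, is to tag every cost item with its phase, its category, and whether it recurs. The Python sketch below is a minimal illustration under stated assumptions: the phase labels follow the design/initiation/maintenance partition described in this article, the example items echo activities mentioned earlier (design focus groups, staff hiring and training, annual re-training, IRB approval), and all amounts, as well as the names `CostItem` and `implementation_costs_by_phase`, are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    DESIGN = "design/pre-implementation"
    INITIATION = "initiation/implementation"
    MAINTENANCE = "maintenance/post-implementation"


class Category(Enum):
    IMPLEMENTATION = "implementation"
    OPERATIONAL = "routine operation/delivery"
    RESEARCH = "research"


@dataclass(frozen=True)
class CostItem:
    description: str
    phase: Phase
    category: Category
    amount: float
    recurring: bool  # True if incurred repeatedly (e.g., annual re-training), False if one-off


def implementation_costs_by_phase(items: list[CostItem]) -> dict[Phase, dict[str, float]]:
    """Sum implementation costs per phase, split into one-off and recurring amounts.

    Operational (delivery) and research items are skipped so they can be reported separately.
    """
    totals = defaultdict(lambda: {"one_off": 0.0, "recurring": 0.0})
    for item in items:
        if item.category is not Category.IMPLEMENTATION:
            continue
        key = "recurring" if item.recurring else "one_off"
        totals[item.phase][key] += item.amount
    return dict(totals)


# Hypothetical ledger; amounts are invented for illustration.
ledger = [
    CostItem("focus groups to design the intervention", Phase.DESIGN, Category.IMPLEMENTATION, 12_000.0, False),
    CostItem("hiring and initial training of program staff", Phase.INITIATION, Category.IMPLEMENTATION, 30_000.0, False),
    CostItem("annual re-training of personnel", Phase.MAINTENANCE, Category.IMPLEMENTATION, 5_000.0, True),
    CostItem("routine service delivery", Phase.MAINTENANCE, Category.OPERATIONAL, 80_000.0, True),
    CostItem("Institutional Review Board approval", Phase.DESIGN, Category.RESEARCH, 2_000.0, False),
]

summary = implementation_costs_by_phase(ledger)
for phase, split in summary.items():
    print(f"{phase.value}: one-off {split['one_off']:,.0f}, recurring {split['recurring']:,.0f}")
```

Routine delivery and IRB costs remain in the ledger but are excluded from the implementation totals, so they can still be reported on their own; keeping the recurrence flag next to the phase makes explicit which implementation costs are one-off investments and which are ongoing.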

Fig. 3. Framework for improving implementation costing in LMICs. Each quadrant represents a key knowledge domain that, if addressed, will improve our understanding of implementation costs in LMICs. Arrows illustrate that these domains are cyclical and interdependent, such that addressing one domain will help to refine questions in the next.

A second priority for evaluating implementation costs is to strengthen the emerging linkage between the fields of economic evaluation and implementation science. Conceptual frameworks in the implementation science literature can be useful for informing economic evaluations, but they are rarely used for that purpose. One framework that does explicitly include costs is the 8-step framework of da Silva Etges et al. [16] for applying time-driven activity-based costing (TDABC) in micro-costing studies in healthcare organizations, in which the resources and costs of each department and activity are mapped to calculate per-patient costs and to perform other costing analyses. As another example, Cidav et al. [23] combine the TDABC method with the implementation science framework of Proctor et al. [8] to map the implementation process clearly, specifying the components of the implementation strategy and assigning costs to each action within it. Implementation science frameworks that explicitly include costs as an outcome should consider how these costs can and should be collected, and the inclusion of economic outcomes should be prioritized in the development of “next-generation” implementation science frameworks. As with the definition of implementation costs itself (discussed above), these frameworks should include guidance on the types of activities and costs that researchers should collect and on appropriate methods for collecting those economic data. Such linkages should also be bidirectional: implementation frameworks can draw on the wealth of empirical costing studies to inform such recommendations. Closer communication between experts in implementation science and experts in economic evaluation will be essential to this effort.
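The core TDABC calculation is simple: each resource's capacity cost rate (the cost of supplying the resource divided by its practical capacity, here in minutes) is multiplied by the time each mapped activity uses, and the products are summed. The sketch below applies this generic calculation to a hypothetical implementation strategy broken into discrete actions, in the spirit of the approaches described above. It is not a reproduction of the 8-step framework of da Silva Etges et al. or of Cidav et al.'s worked examples; the class and function names, resources, costs, capacities, and durations are all invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    cost_of_capacity: float        # cost of supplying the resource over a period (e.g., salary plus overhead)
    practical_capacity_min: float  # minutes the resource is realistically available for work in that period

    @property
    def capacity_cost_rate(self) -> float:
        """TDABC capacity cost rate: cost per minute of capacity supplied."""
        return self.cost_of_capacity / self.practical_capacity_min


@dataclass(frozen=True)
class Action:
    description: str
    resource: Resource
    minutes: float  # time the resource spends on this action


def tdabc_cost(actions: list[Action]) -> float:
    """Total cost of a mapped process: sum of capacity cost rate x minutes over all actions."""
    return sum(a.resource.capacity_cost_rate * a.minutes for a in actions)


# Hypothetical resources and implementation-strategy actions (illustrative values only).
nurse = Resource("nurse", cost_of_capacity=4_800.0, practical_capacity_min=8_000.0)      # 0.60 per minute
trainer = Resource("trainer", cost_of_capacity=9_000.0, practical_capacity_min=6_000.0)  # 1.50 per minute

strategy = [
    Action("trainer delivers initial training session", trainer, 240),
    Action("nurse attends initial training session", nurse, 240),
    Action("trainer provides follow-up coaching", trainer, 60),
]

total = tdabc_cost(strategy)
print(f"Implementation strategy cost: {total:.2f}")
```

With these hypothetical inputs the strategy costs 594 monetary units: 450 for trainer time (1.50 × 300 minutes) and 144 for nurse time (0.60 × 240 minutes). Mapping costs to individual actions in this way makes it straightforward to report them by strategy component or by implementation phase.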

Similarly, economic evaluations should increasingly seek to integrate implementation outcomes. Many economic evaluations assume perfect implementation or make overly optimistic assumptions about intervention uptake, without considering the real-world programmatic costs required to achieve that level of uptake. Several of the papers included in this review stressed the importance of acknowledging this disconnect, arguing it can bias studies toward favorable outcomes and unrealistic estimates of both impact and cost [26, 27, 32]. The need for incorporating implementation outcomes into economic studies extends to theory as well. Krebs and Nosyk (2021), for example, showed how the scale of delivery for an intervention can be estimated using reach and adoption, and that the “realistic” scale of delivery is much lower than the “ideal/perfect” situation usually assumed in economic analyses [25]. By better defining how costs should be collected within implementation science frameworks and by integrating implementation outcomes into economic analyses, researchers can perform more standardized, accurate, comparable, and programmatically viable economic evaluations.
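As a simplified illustration of why this matters, the sketch below assumes that the realistic number of people served equals the eligible population multiplied by the proportion of settings that adopt the intervention and by the proportion of eligible people in those settings who are reached. This multiplicative form, the function name `people_served`, and all input values are assumptions for illustration; Krebs and Nosyk's own specification may differ.

```python
def people_served(eligible: int, adoption: float, reach: float) -> float:
    """Expected number of people served under a simple multiplicative model (an assumption).

    adoption: proportion of settings that deliver the intervention.
    reach: proportion of eligible people in adopting settings who receive it.
    """
    for name, value in (("adoption", adoption), ("reach", reach)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a proportion between 0 and 1")
    return eligible * adoption * reach


ELIGIBLE = 50_000  # hypothetical eligible population

ideal = people_served(ELIGIBLE, adoption=1.0, reach=1.0)      # "perfect implementation"
realistic = people_served(ELIGIBLE, adoption=0.6, reach=0.5)  # hypothetical implementation estimates
print(f"ideal: {ideal:,.0f}  realistic: {realistic:,.0f}  ratio: {realistic / ideal:.2f}")
```

Under these hypothetical inputs, an analysis assuming perfect implementation would overstate the number of people served, and any population-level impact that scales with it, by a factor of about 3.3 (1 / 0.30).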

As with any study, our work has limitations. First, as a scoping (rather than systematic) review, our search was not as structured or comprehensive as a formal systematic review [46]. Because this was a scoping review, we also did not formally assess the quality of included manuscripts. Second, although this field of research is expanding, the literature on this topic was sparse and the extracted data were heterogeneous, making comparisons across individual manuscripts difficult in many cases. This heterogeneity, while a weakness of the underlying literature, is itself an important finding of this review and a key area of focus for future research. Third, we did not include studies published after 2021. Because this is a rapidly growing area of research, this review will need frequent updating. Finally, although we allowed grey literature to be included, we did not explicitly search any databases, repositories, or websites specific to the grey literature. This could lead to underrepresentation of such evidence in the review and discussion and limit our findings regarding policy implications.

Conclusion

In summary, this scoping review of 23 studies at the interface of economic evaluation and implementation science revealed that this literature is sparse (but rapidly growing), with poor representation of LMIC settings. This literature was characterized by heterogeneity in the considered scope of implementation costs—speaking to the importance of developing consensus on the activities and costs that should be considered as “implementation costs”, being explicit regarding the timing of those costs (both timing of incurring and evaluating costs), and more clearly distinguishing between implementation and operational costs (so as not to implicitly exclude implementation costs). These studies also highlighted the importance—and the opportunity—of forging closer linkages between the fields of implementation science and economic evaluation, including formal collaborations between experts in both fields. Closer integration of implementation science and economic evaluation will improve the relevance of economic studies of implementing health interventions, leading to more programmatically useful and robust estimates of the costs of interventions as implemented in real-world settings.

Availability of data and materials

The extraction tool and all abstracted data are available from the authors upon reasonable request.

Abbreviations

- LMIC: Low- and middle-income country
- RE-AIM: Reach, Effectiveness, Adoption, Implementation, Maintenance
- JBI: Joanna Briggs Institute
- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews
- MeSH: Medical Subject Headings
- HIV: Human immunodeficiency virus
- HCD: Human-centered design
- TDABC: Time-driven activity-based costing

References

Kernick DP. Introduction to health economics for the medical practitioner. Postgrad Med J. 2003;79:147–50.

Ramsey SD, Willke RJ, Glick H, Reed SD, Augustovski F, Jonsson B, et al. Cost-effectiveness analysis alongside clinical trials II - An ISPOR good research practices task force report. Value Health. 2015;18(2):161–72.

Sanders GD, Neumann PJ, Basu A, Brock DW, Feeny D, Krahn M, et al. Recommendations for Conduct, Methodological Practices, and Reporting of Cost-effectiveness Analyses. JAMA. 2016;316(10):1093–103.

Sax PE, Islam R, Walensky RP, Losina E, Weinstein MC, Goldie SJ, et al. Should resistance testing be performed for treatment-naive HIV-infected patients? A cost-effectiveness analysis. Clin Infect Dis. 2005;41(9):1316–23.

Walensky RP, Freedberg KA, Weinstein MC, Paltiel AD. Cost-effectiveness of HIV testing and treatment in the United States. Clin Infect Dis. 2007;45(Supplement_4):S248–54.

Cohen DA, Wu SY, Farley TA. comparing the cost-effectiveness of HIV prevention interventions. J Acquir Immune Defic Syndr. 2004;37(3):1404–14.

Bauer MS, Kirchner JA. Implementation science: what is it and why should I care? Psychiatry Res. 2020;283:112376.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38(2):65–76.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Saldana L. The stages of implementation completion for evidence-based practice: Protocol for a mixed methods study. Implement Sci. 2014;9(1):43.

JBI Manual for Evidence Synthesis. https://jbi-global-wiki.refined.site/space/MANUAL. Accessed 24 March 2022.

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

Saldana L, Chamberlain P, Bradford WD, Campbell M, Landsverk J. The cost of implementing new strategies (COINS): a method for mapping implementation resources using the stages of implementation completion. Child Youth Serv Rev. 2014;39:177–82.

Vassall A, Sweeney S, Kahn JG, Gomez G, Bollinger L, Marseille E, et al. Reference case for estimating the costs of global health services and interventions. Global Health Costing Consortium [Internet]. 2017; Available from: https://ghcosting.org/pages/standards/reference_case. Accessed 28 March 2022.

Grey Matters: a practical tool for searching health-related grey literature. https://www.cadth.ca/resources/finding-evidence/grey-matters. Accessed 25 March 2022.

da Silva Etges APB, Cruz LN, Notti RK, Neyeloff JL, Schlatter RP, Astigarraga CC, et al. An 8-step framework for implementing time-driven activity-based costing in healthcare studies. Eur J Health Econ. 2019;20(8):1133–45.

Nichols BE, Offorjebe OA, Cele R, Shaba F, Balakasi K, Chivwara M, et al. Economic evaluation of facility-based HIV self-testing among adult outpatients in Malawi. J Int AIDS Soc. 2020;23(9):e25612.

Duan KI, Helfrich CD, Rao SV, Neely EL, Sulc CA, Naranjo D, et al. Cost analysis of a coaching intervention to increase use of transradial percutaneous coronary intervention. Implement Sci Commun. 2021;2(1):123.

Hoeft TJ, Wilcox H, Hinton L, Unützer J. Costs of implementing and sustaining enhanced collaborative care programs involving community partners. Implement Sci. 2019;14(1):37.

Hoomans T, Ament AJHA, Evers SMAA, Severens JL. Implementing guidelines into clinical practice: What is the value? J Eval Clin Pract. 2011;17(4):606–14.

Shumway M, Fisher L, Hessler D, Bowyer V, Polonsky WH, Masharani U. Economic costs of implementing group interventions to reduce diabetes distress in adults with type 1 diabetes mellitus in the T1-REDEEM trial. J Diabetes Complicat. 2019;33(11):107416.

Oxlade O, Benedetti A, Adjobimey M, Alsdurf H, Anagonou S, Cook VJ, et al. Effectiveness and cost-effectiveness of a health systems intervention for latent tuberculosis infection management (ACT4): a cluster-randomised trial. Lancet Public Health. 2021;6(5):e272–82.

Cidav Z, Mandell D, Pyne J, Beidas R, Curran G, Marcus S. A pragmatic method for costing implementation strategies using time-driven activity-based costing. Implement Sci. 2020;15(1):28.

Sohn H, Tucker A, Ferguson O, Gomes I, Dowdy D. Costing the implementation of public health interventions in resource-limited settings: a conceptual framework. Implement Sci. 2020;15(1):86.

Krebs E, Nosyk B. Cost-effectiveness analysis in implementation science: a research agenda and call for wider application. Curr HIV/AIDS Rep. 2021;18(3):176–85.

Salomon JA. Integrating economic evaluation and implementation science to advance the global HIV response. J Acquir Immune Defic Syndr. 2019;82(Suppl 3):S314–21.

Bozzani FM. Incorporating feasibility in priority setting: a case study of tuberculosis control in South Africa. London School of Hygiene & Tropical Medicine; 2021. https://researchonline.lshtm.ac.uk/id/eprint/4662736/.

Eisman AB, Kilbourne AM, Dopp AR, Saldana L, Eisenberg D. Economic evaluation in implementation science: making the business case for implementation strategies. Psychiatry Res. 2020;283:112433.

Wagner TH, Yoon J, Jacobs JC, So A, Kilbourne AM, Yu W, et al. Estimating costs of an implementation intervention. Med Decis Mak. 2020;40(8):959–67.

Dopp AR, Kerns SEU, Panattoni L, Ringel JS, Eisenberg D, Powell BJ, et al. Translating economic evaluations into financing strategies for implementing evidence-based practices. Implement Sci. 2021;16(1):66.

Allen P, Pilar M, Walsh-Bailey C, Hooley C, Mazzucca S, Lewis CC, et al. Quantitative measures of health policy implementation determinants and outcomes: a systematic review. Implement Sci. 2020;15(1):47.

Eisman AB, Quanbeck A, Bounthavong M, Panattoni L, Glasgow RE. Implementation science issues in understanding, collecting, and using cost estimates: a multi-stakeholder perspective. Implement Sci. 2021;16(1):75.

Merlo G, Page K, Zardo P, Graves N. Applying an implementation framework to the use of evidence from economic evaluations in making healthcare decisions. Appl Health Econ Health Policy. 2019;17(4):533–43.

Reeves P, Edmunds K, Searles A, Wiggers J. Economic evaluations of public health implementation-interventions: a systematic review and guideline for practice. Public Health. 2019;169:101–13.

Roberts SLE, Healey A, Sevdalis N. Use of health economic evaluation in the implementation and improvement science fields: a systematic literature review. Implement Sci. 2019;14(1):72.

Dopp AR, Mundey P, Beasley LO, Silovsky JF, Eisenberg D. Mixed-method approaches to strengthen economic evaluations in implementation research. Implement Sci. 2019;14(1):2.

Hoomans T, Severens JL. Economic evaluation of implementation strategies in health care. Implement Sci. 2014;9:168.

Bullock HL, Lavis JN, Wilson MG, Mulvale G, Miatello A. Understanding the implementation of evidence-informed policies and practices from a policy perspective: a critical interpretive synthesis. Implement Sci. 2021;16(1):18.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

Sivalogan K, Semrau KEA, Ashigbie PG, Mwangi S, Herlihy JM, Yeboah-Antwi K, et al. Influence of newborn health messages on care-seeking practices and community health behaviors among participants in the Zambia Chlorhexidine Application Trial. PLoS One. 2018;13(6):e0198176.

Semrau KEA, Herlihy J, Grogan C, Musokotwane K, Yeboah-Antwi K, Mbewe R, et al. Effectiveness of 4% chlorhexidine umbilical cord care on neonatal mortality in Southern Province, Zambia (ZamCAT): a cluster-randomised controlled trial. Lancet Glob Health. 2016;4(11):e827–36.

Liu C, Lee JH, Gupta AJ, Tucker A, Larkin C, Turimumahoro P, et al. Cost-effectiveness analysis of human-centred design for global health interventions: a quantitative framework. BMJ Glob Health. 2022;7(3):e007912.

McDonald KM, Schultz EM, Chang C. Evaluating the state of quality-improvement science through evidence synthesis: insights from the Closing the Quality Gap series. Perm J. 2013;17(4):52–61.

Hannan M, Russell JL, Takahashi S, Park S. Using improvement science to better support beginning teachers: the case of the Building a Teaching Effectiveness Network. J Teach Educ. 2015;66(5):494–508.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Pham MT, Rajić A, Greig JD, Sargeant JM, Papadopoulos A, McEwen SA. A scoping review of scoping reviews: advancing the approach and enhancing the consistency. Res Synth Methods. 2014;5(4):371–85.

Acknowledgements

The authors would like to thank the Heart, Lung, and Blood Co-morbiditieS Implementation Models in People Living with HIV (HLB-SIMPLe) Research Coordinating Center (RCC) Consortium for helping coordinate and support this review.

Funding

Implementation Research Strategies for Heart, Lung, and Blood Co-morbidities in People Living with HIV, Research Coordinating Center, 1U24HL154426-01.

Integrating Hypertension and Cardiovascular Disease Care into Existing HIV Service Package in Botswana (InterCARE), 1UG3HL154499-01.

Scaling Out and Scaling Up the Systems Analysis and Improvement Approach to Optimize the Hypertension Diagnosis and Care Cascade for HIV-infected Individuals (SCALE SAIA HTN), 1UG3HL156390-01.

Integrating HIV and hEART health in South Africa (iHeart-SA), 1UG3HL156388-01.

PULESA-UGANDA: Strengthening the Blood Pressure Care and Treatment cascade for Ugandans living with HIV, ImpLEmentation Strategies to SAve lives, 1UG3HL154501-01.

Application of Implementation Science approaches to assess the effectiveness of Task-shifted WHO-PEN to address cardio metabolic complications in people living with HIV in Zambia, 1UG3HL156389-01.

The funders had no role in the design of the study, the collection, analysis, and interpretation of data, or in the writing of the manuscript.

Author information

Contributions

HS and DWD conceived the idea for the manuscript. All authors (AM, RRT, FK, FM, PM, MM, JP, JS, AB, DBC, VGD, WE, BH, ML, BN, DW, HS, DWD) assisted with the development of the protocol. AM and RRT conducted the literature search. AM, RRT, and DWD conducted the study selection. AM and RRT performed the data extraction and conducted the data analysis and synthesis. All authors provided feedback on the major concepts, themes, and results to highlight and discuss. AM, RRT, HS, and DWD drafted the manuscript. All authors were involved in revising the manuscript, and all authors read and approved the final manuscript. The findings and conclusions in this paper are those of the authors and do not necessarily represent the views of the National Institutes of Health.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Protocol for scoping review.

Additional file 2.

Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) Checklist.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Malhotra, A., Thompson, R.R., Kagoya, F. et al. Economic evaluation of implementation science outcomes in low- and middle-income countries: a scoping review. Implementation Sci 17, 76 (2022). https://doi.org/10.1186/s13012-022-01248-x

DOI: https://doi.org/10.1186/s13012-022-01248-x