Abstract

Background

Computerized clinical decision support systems (CDSSs) are a promising knowledge translation tool, but often fail to meaningfully influence the outcomes they target. Low CDSS provider uptake is a potential contributor to this problem but has not been systematically studied. The objective of this systematic review and meta-regression was to determine reported CDSS uptake and identify which CDSS features may influence uptake.

Methods

Medline, Embase, CINAHL, and the Cochrane Database of Controlled Trials were searched from January 2000 to August 2020. Randomized, non-randomized, and quasi-experimental trials reporting CDSS uptake in any patient population or setting were included. The main outcome extracted was CDSS uptake, reported as a raw proportion representing the number of times the CDSS was used or accessed over the total number of times it could have been interacted with. For each CDSS, we also extracted context, content, system, and implementation features that might influence uptake. Overall weighted uptake was calculated using random-effects meta-analysis, and determinants of uptake were investigated using multivariable meta-regression.

Results

Among 7995 citations screened, 55 studies involving 373,608 patients and 3607 providers met full inclusion criteria. Meta-analysis revealed that overall CDSS uptake was 34.2% (95% CI 23.2 to 47.1%). Uptake was only reported in 12.4% of studies that otherwise met inclusion criteria. Multivariable meta-regression revealed the following factors significantly associated with uptake: (1) formally evaluating the availability and quality of the patient data needed to inform CDSS advice; and (2) identifying and addressing other barriers to the behaviour change targeted by the CDSS.

Conclusions and relevance

System uptake was seldom reported in CDSS trials. When reported, uptake was low. This represents a major and potentially modifiable barrier to overall CDSS effectiveness. We found that features relating to CDSS context and implementation strategy best predicted uptake. Future studies should measure the impact of addressing these features as part of the CDSS implementation strategy. Uptake reporting must also become standard in future studies reporting CDSS intervention effects.

Registration

Pre-registered on PROSPERO, CRD42018092337

Background

Effectively translating evidence into clinical practice remains a fundamental challenge in medicine. Computerized clinical decision support systems (CDSSs) represent one approach to this problem that has been studied extensively over the last 20 years. CDSSs are information technology systems that deliver patient-specific recommendations to clinicians to promote improved care [1]. These systems may be integrated into provider electronic health records, accessed through the Internet, or delivered through mobile devices, and have a range of functions including providing point-of-care clinical prediction rules, highlighting guideline-supported management, optimizing drug ordering and documentation, and discouraging potentially harmful practices [2].

While CDSSs are widely considered to be an indispensable knowledge translation tool across care settings [3, 4], existing systematic reviews have demonstrated only small to moderate improvements in the care processes that they target, and even less promising effects on clinical outcomes [2, 5]. This has led to further research seeking to identify and establish specific features or “active ingredients” of CDSSs that might predict intervention success [6,7,8]. Prior such studies have defined CDSS “success” as a function of their impact on care process and/or clinical or economic outcomes, but impact will always be contingent on the actual uptake (i.e. use) of CDSSs, and suboptimal CDSS uptake has often been cited as an important barrier to success [1, 9]. Various general explanations for poor CDSS uptake have been proposed in previous studies, including time and financial constraints, lack of knowledge or confidence in CDSS technology, workflow disruption, perceptions of loss of autonomy, and low usability [10, 11]. However, no reviews to date have systematically investigated the precise effects of these and other potential determinants on CDSS uptake. This represents a significant knowledge gap, and an opportunity to identify barriers which could be specifically targeted in future CDSS design and/or implementation in order to realize the meaningful improvements in downstream clinical outcomes that have mostly eluded CDSSs to date.

The aim of this systematic review and meta-regression was to address this gap by identifying studies that specifically report uptake of CDSSs and isolating CDSS features that are associated with uptake.

Methods

We report our findings using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, and our methodology followed the guidance provided in the Cochrane Handbook for Systematic Reviews [12, 13].

Searches

We searched Ovid MEDLINE, EMBASE, CINAHL, and the Cochrane Database of Controlled Trials, from January 2000 to August 2020 (Additional file 1, henceforth referred to as “Appendix”, page 2-3). We included only studies published since the year 2000, as we were primarily interested in computerized CDSS that leveraged modern information technology, and across a period of advancement for both CDSS technology and implementation science. We manually searched reference lists of included studies. The search strategy was developed by a library scientist and refined after peer review by an external library scientist, using PRESS guidelines [14].

Study inclusion and exclusion criteria

We included studies evaluating the implementation of CDSS in any health condition, across all care settings and in any patient population. Study designs included randomized controlled trials, non-randomized controlled trials, and controlled quasi-experimental designs. Conference abstracts were included. Use of the CDSS had to be voluntary (i.e. clinicians could choose whether or not to activate the CDSS or were given an option to ignore it if it was automatically activated), and quantitative information about user uptake had to be available in the manuscript or its appendices.

We defined CDSS as a computerized system to support clinical decision-making using patient-specific data. The CDSS could be accessible through providers’ electronic health record system or the Internet or delivered using mobile devices. CDSSs could be directed at physicians, nurses, or other allied health professionals. Eligible CDSSs had to be directly related to patient care, whereby simulation studies or studies focusing only on educating clinicians or trainees were excluded.

Our primary outcome of interest was provider uptake of CDSS. Uptake had to be reported as a raw proportion, representing the number of times the CDSS was used or accessed over the total number of eligible times it could have been interacted with. We focused specifically on the uptake of the CDSS and not on downstream process or clinical outcomes. CDSS uptake could be reported at the following levels: the event level (e.g. the proportion of alerts responded to); the patient level (e.g. proportion of patient visits or unique patients that the system was used for); and/or the clinician level (e.g. proportion of eligible providers who interacted with the system at least once).
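
As a minimal illustration of this definition, the sketch below computes uptake as a raw proportion at each of the three reporting levels. The function name and all counts are hypothetical and are not drawn from any included study.

```python
def uptake_proportion(times_used: int, times_eligible: int) -> float:
    """Raw uptake: times the CDSS was used or accessed over eligible opportunities."""
    if times_eligible <= 0:
        raise ValueError("times_eligible must be positive")
    if not 0 <= times_used <= times_eligible:
        raise ValueError("times_used must lie between 0 and times_eligible")
    return times_used / times_eligible


# Hypothetical counts for a single CDSS, one pair per reporting level.
example_counts = {
    "event": (120, 400),     # alerts responded to / alerts fired
    "patient": (90, 300),    # visits where the CDSS was used / eligible visits
    "clinician": (14, 20),   # providers who used it at least once / eligible providers
}

uptake_by_level = {
    level: uptake_proportion(used, eligible)
    for level, (used, eligible) in example_counts.items()
}
```

Because the clinician-level numerator counts anyone who used the system at least once, it will usually be higher than event- or patient-level uptake for the same CDSS.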

Data extraction and quality assessment

A team of 6 reviewers screened abstracts and titles, and then AK and JY independently screened full-text versions of all studies not excluded in the previous step in duplicate to determine final inclusion. Next, AK, JY, and JLSC extracted data from the included articles and all final data were then independently reviewed by AK, with any remaining disagreements resolved through discussion with co-authors (further details in Additional file 1: Appendix, page 4).

We extracted the following data from included studies: study methodology, clinical setting, participant characteristics, CDSS characteristics, primary outcome reported, study duration, and CDSS uptake (if not directly reported, uptake was calculated based on data presented in the study or its appendices). Studies with more than one CDSS arm were extracted as separate trials. We documented the features of each of these CDSSs that might influence uptake. We built an initial feature list based on the GUIDES checklist. The GUIDES checklist is a tool that guides the design and implementation of CDSSs through a set of recommended criteria derived through systematic literature review, feedback from international CDSS experts, consultation with patients and healthcare stakeholders, and pilot testing [15]. In consultation with the creators of the GUIDES checklist, we created practical definitions for each of the checklist elements to facilitate data extraction into “Yes/No” CDSS feature descriptors. We then supplemented these with any additional features that were found in a previously reported modified Delphi process for identifying CDSS features subjectively found to be important by information technology experts [7]. This resulted in a final list of 52 CDSS features potentially associated with uptake, categorized into CDSS context, content, system, and implementation features, in line with the GUIDES checklist (Table 1). Each feature was graded as Yes/No/Unclear/Not Applicable by reviewers, based on information available within the manuscript itself and/or in studies referenced in the main manuscript which provided further CDSS details. Features that contained more than one element (e.g. “was there a study done and was it positive?”) had to meet both elements to be graded as Yes.

Given that some features were not routinely reported, we also sought to contact study authors to assist in feature classification. We emailed our completed CDSS feature extraction tables to the corresponding authors of each included study, requesting verification. We sent a reminder email to authors two weeks after the initial email. We adjusted our data extraction table to reflect changes suggested and justified by responding study authors. For studies for which we did not receive an author response, features designated “Unclear” were assumed to be “No” for the purposes of data analysis.

Finally, we extracted details required for risk of bias classification for each study, according to criteria discussed in the Cochrane Handbook for Systematic Reviews of Interventions, modified in line with previous systematic reviews of CDSS [6, 16]. Further details are provided in Additional file 1.

Data synthesis and analysis

We used a random-effects meta-analysis model to calculate the average uptake proportion across the included studies, weighted by the inverse of sampling variance [17]. A log-odds (logit) transformation was used to better approximate a normal distribution and facilitate meta-analysis of proportions, as is common in the literature [18,19,20]. Next, we used a mixed-effects meta-analysis model to perform subgroup analysis, calculating the weighted average uptake proportion for each uptake level (event, patient, and clinician) [21, 22]. We expected substantial heterogeneity given the diversity of interventions, context, and diseases in the included studies.
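
The pooling step described above can be sketched as follows. This is an illustrative Python sketch, not the R code used in the study: it assumes a DerSimonian-Laird estimate of the between-study variance (the estimator actually used is not stated here), and the function name and example counts are hypothetical.

```python
import math


def pool_logit_proportions(events, totals):
    """Random-effects pooled proportion on the logit scale (DerSimonian-Laird).

    Assumes 0 < events[i] < totals[i] for every study, so each logit is finite.
    """
    # Logit-transformed proportions and their approximate sampling variances.
    y = [math.log(e / (n - e)) for e, n in zip(events, totals)]
    v = [1 / e + 1 / (n - e) for e, n in zip(events, totals)]

    # Inverse-variance (fixed-effect) weights, needed for Cochran's Q.
    w = [1 / vi for vi in v]
    sum_w = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum_w
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1

    # Between-study variance (tau^2) and I2, both truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each sampling variance.
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    def inv_logit(x):
        return 1 / (1 + math.exp(-x))

    return {
        "pooled": inv_logit(mu),
        "ci_low": inv_logit(mu - 1.96 * se),
        "ci_high": inv_logit(mu + 1.96 * se),
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical uptake counts from three study arms (used, eligible).
result = pool_logit_proportions([30, 120, 45], [100, 200, 300])
```

Back-transforming the pooled logit and its confidence limits yields an interval that is asymmetric on the proportion scale, which is the pattern seen in intervals reported for pooled proportions.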

We then performed univariate meta-regression analyses to explore how CDSS features influenced CDSS uptake across the included studies and may be able to account for the anticipated heterogeneity in CDSS uptake [23]. Using only the covariates from univariable analysis with p < 0.25 (see Additional file 1: Appendix page 5-7, Table A1), we fitted a multivariable meta-regression model, and then simplified the resulting model using multimodel inference. Multimodel inference fits and compares all possible model permutations using corrected Akaike information criterion (AIC), and demonstrates the relative importance of each included covariate across all possible models [24, 25]. We sought to determine if the simplified multivariable meta-regression model could reduce the anticipated heterogeneity in uptake proportion, and to identify any CDSS features significantly associated with increased uptake of CDSSs across all possible models.
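
To make the multimodel inference step more concrete, the sketch below shows how corrected AIC values, Akaike weights, and per-predictor importance can be computed once every candidate model has been fitted. It is a simplified Python illustration rather than the dmetar implementation; the feature names, log-likelihoods, number of study arms, and the parameter count (intercept plus between-study variance plus one coefficient per predictor) are all hypothetical assumptions.

```python
import math


def aicc(log_likelihood, k, n):
    """Corrected AIC for a model with k estimated parameters and n observations."""
    if n - k - 1 <= 0:
        raise ValueError("AICc requires n > k + 1")
    aic = 2 * k - 2 * log_likelihood
    return aic + (2 * k * (k + 1)) / (n - k - 1)


def akaike_weights(aicc_values):
    """Relative support for each model, normalized to sum to 1."""
    best = min(aicc_values)
    relative = [math.exp(-0.5 * (a - best)) for a in aicc_values]
    total = sum(relative)
    return [r / total for r in relative]


def predictor_importance(models, weights):
    """Sum the Akaike weights of every model that contains each predictor."""
    importance = {}
    for predictors, weight in zip(models, weights):
        for name in predictors:
            importance[name] = importance.get(name, 0.0) + weight
    return importance


# Hypothetical candidate models: (predictors included, maximized log-likelihood).
n_arms = 40  # hypothetical number of study arms
candidates = [
    ((), -60.0),
    (("feature_a",), -56.0),
    (("feature_b",), -58.5),
    (("feature_a", "feature_b"), -55.5),
]

# Assumed parameter count: intercept + between-study variance + one per predictor.
scores = [aicc(ll, len(preds) + 2, n_arms) for preds, ll in candidates]
weights = akaike_weights(scores)
importance = predictor_importance([preds for preds, _ in candidates], weights)
```

A predictor whose importance is close to 1 appears in nearly all well-supported models, which is the sense in which a feature can be said to matter "across all possible models".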

Finally, given the low level of uptake reporting that we found across the CDSS literature, we performed a post-hoc analysis comparing uptake reporting in screened CDSS studies published before versus after the 2011 publication of the CONSORT-EHEALTH extension [26].

The I2 statistic was used to report heterogeneity across all models. R software, version 3.6.2 (R Foundation for Statistical Computing, Vienna), was used to perform all statistical analyses. The rma function from the metafor package and the metaprop function from the meta package were used to fit models. The dmetar package was used for multimodel inference analysis.

Results

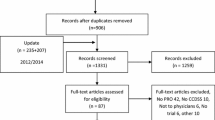

Our search identified 7841 unique citations. We identified an additional 154 citations through reference lists of included studies. We excluded 7189 citations at the initial stage of screening, leaving 806 citations (11.2%) for full-text review. Of these, 55 studies (6.8%) met inclusion criteria (see List of Included Trials – Additional file 2). Five included studies had 2 different CDSS arms [27,28,29,30,31], resulting in a total of 60 CDSS study arms (Figure 1). Among 443 studies that met other inclusion criteria, 388 were excluded due to failure to report CDSS uptake, whereby only 55/443 (12.4%) eligible studies reported uptake. Of the 201 eligible studies published in 2011 or earlier (the year of the CONSORT-EHEALTH extension publication), uptake was reported in 24 (11.9%), compared with 32/242 (13.2%) studies published after 2011 (p = 0.77).

Of the 55 included studies, 48 (87.3%) used a randomized design, with cluster randomization being the most common. Most (41/55, 74.6%) were in outpatient settings, were conducted in the USA (36/55, 65.5%), and involved adults (46/55, 83.6%). Uptake was reported at the patient level in 32 (58.2%) studies, at the event level in 16 (29.1%) studies, and at the clinician level in 11 (20.0%) studies (4 studies reported uptake at more than one level) (Table 2). Overall risk of bias was judged to be high in most randomized and non-randomized controlled studies (28/52, 53.9%), unclear in 14/52 studies (26.9%), and low in 10/52 studies (19.2%). Overall risk of bias was low in the 3 interrupted time series studies (Additional file 1: Appendix page 8-11, Fig A1 and Tables A2-A3).

The 55 included studies covered a broad range of clinical conditions. Most CDSSs were integrated into existing software systems (47/55, 85.5%), were based on disease-specific guidelines (49/55, 89.1%), provided advice at the moment and point of clinical need (52/55, 94.6%), and targeted only physicians (31/55, 56.4%). CDSS developers were the study authors in 48/55 studies (87.3%) (see Full Study Characteristics – Additional file 2).

Authors from 30/55 (54.6%) publications verified our extraction of CDSS features. On average we adjusted answers based on author comments for 8.7 features out of 52, indicating that our baseline assignment of features to “Yes” or “No” was correct 83.3% of the time. Among the 25 studies we did not receive author responses for, we only assigned 1.6/52 (3.1%) features on average as “Unclear”.

The 60 CDSS study arms involved a total of 373,608 patients and 3607 providers. Random effects meta-analysis demonstrated an overall weighted CDSS uptake of 34.2% (95% CI 23.2 to 47.1%). As anticipated, the meta-analysis of uptake proportion across all study arms demonstrated considerable heterogeneity (I2 = 99.9%) (Additional file 1: Appendix page 12, Fig A2). Among the different reported uptake types, CDSS uptake at the event level was 26.1% (95% CI 8.2 to 58.3%), at the patient level was 32.9% (95% CI 22.7 to 45.1%), and at the clinician level was 65.6% (95% CI 43.6 to 82.4%). Effect sizes across the different subgroups were significantly different (Q(df = 2) = 7.39, p = 0.025), but meta-analysis by uptake type did not significantly change the level of heterogeneity (Additional file 1: Appendix page 13, Fig A3). At the request of reviewers, further post-hoc subgroup analyses were conducted to assess uptake based on setting (emergency department, inpatient, or outpatient), provider type (physicians vs. other healthcare providers), disease type (cardiac disease, respiratory disease, infectious disease, or other), and adult versus paediatric patient populations. None of these subgroup analyses resulted in statistically significant differences in effect sizes. A further subgroup meta-analysis was conducted based on risk of bias assessment (high, unclear, low), and this also did not result in statistically significant differences in effect sizes between subgroups (see Additional file 1: Appendix, Page 14 for full results).
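
The p-value reported for the test of subgroup differences can be checked by hand. The sketch below assumes the Q statistic is referred to a chi-square distribution with 2 degrees of freedom, for which the upper-tail probability has the closed form exp(-Q/2); the function name is hypothetical.

```python
import math


def chi2_upper_tail_df2(q):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    if q < 0:
        raise ValueError("q must be non-negative")
    return math.exp(-q / 2)


# Q statistic for the comparison of event-, patient-, and clinician-level uptake.
p_subgroup = chi2_upper_tail_df2(7.39)
print(round(p_subgroup, 3))  # prints 0.025
```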

We performed outlier and influence analysis according to methods detailed by Viechtbauer and Cheung [32], which demonstrated that one study contributing two CDSS study arms, Rosenbloom et al., was significantly distorting the pooled uptake estimate. We removed this extreme outlier from our meta-regression analysis, as it would likely have biased results (further details in Additional file 1: Appendix, page 15, text and Fig A4).

Our multivariable meta-regression model controlled for uptake type and other important CDSS features, used only those predictors from univariate screening with p < 0.25, and was simplified using multimodel inference. It identified two features that were significantly associated with increased CDSS uptake: formally evaluating the availability and quality of the patient data needed to inform CDSS advice (feature 4, p = 0.02), and identifying and addressing other barriers to the behaviour change targeted by the CDSS (feature 35, p = 0.02) (Table 3). Heterogeneity remained high with the multivariable meta-regression model (I2 = 99.5%), but the included covariates accounted for 38.6% of the heterogeneity present in overall uptake proportion (R2 = 38.6%) (see Additional file 1: Appendix for full univariate analysis results – page 5-7 Table A1, further details regarding model simplification – page 16 text and Fig A5, and uptake feature assignment used in statistical analysis – page 17 Fig A6-A7).
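
One way to read the R2 value is as the proportional reduction in between-study variance once covariates are added to the model. The sketch below assumes that definition, which is the one commonly used for mixed-effects meta-regression; the function name and the variance values are hypothetical and do not come from the study.

```python
def heterogeneity_explained(tau2_without_covariates, tau2_with_covariates):
    """Percentage of between-study variance accounted for by the covariates."""
    if tau2_without_covariates <= 0:
        return 0.0
    reduction = tau2_without_covariates - tau2_with_covariates
    # Truncate at zero: covariates cannot explain a negative share of variance.
    return max(0.0, reduction / tau2_without_covariates) * 100


# Hypothetical between-study variances on the logit scale.
r2_percent = heterogeneity_explained(2.0, 1.5)
print(r2_percent)  # prints 25.0
```

Under this reading, a high residual I2 and a moderate R2 are compatible: I2 compares the remaining between-study variance with sampling variance, which is very small when studies are large, whereas R2 compares between-study variance before and after adding covariates.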

Discussion

We performed a systematic review and meta-regression to measure the uptake of CDSSs in published studies, and to identify CDSS features associated with uptake.

The first and most important finding was that CDSS uptake is seldom reported in published studies. Complex interventions such as CDSSs may fail at one of many different levels, and should ideally be accompanied by concurrent process evaluations [33], with the most basic process metric being system uptake [15]. Although uptake is the first and necessary step for any behavioural or patient-level outcome changes, only 12.4% of otherwise eligible manuscripts reported uptake. This may reflect a lack of consensus regarding optimal CDSS evaluation and reporting processes. The CONSORT-EHEALTH extension was published in 2011 [26] in an effort to address the unique and complex nature of electronic health (eHealth) interventions such as CDSSs, and does specify a requirement to report uptake of the eHealth intervention being studied. However, uptake reporting was no different in studies published before availability of the CONSORT-EHEALTH extension compared to those published after, and date of publication was not a significant predictor of increased uptake in univariate analysis (Additional file 1: Appendix Table A1). Accordingly, given the importance of system uptake in evaluating reasons for success (and especially failure) of CDSS interventions, our findings suggest an urgent need for broader awareness of CDSS-specific reporting guidelines such as the CONSORT-EHEALTH or newer tools such as the SAFER reporting framework [34], and more strict journal criteria for their use.

Next, our meta-analysis of 60 CDSS study arms involving 373,608 patients and 3607 providers revealed that overall uptake of CDSS was 34.2%. Considering the significant resources and expenditure involved in implementing a CDSS, the finding that it was used in less than half of potential opportunities is concerning. An expected reporting bias towards more favourable uptake might suggest that true uptake is even lower. Uptake was lowest when reported at the event level (26.1%) and similar at the patient level (32.9%), but higher at the clinician level (65.6%) (p = 0.025). This is likely due to the nature of uptake measurement at the clinician level, which represented whether or not clinicians had ever used the CDSS during the study period, rather than the repeated use captured in event- and patient-level measurements. Still, it is notable that only about two-thirds of clinicians even attempted to use an available CDSS, and among those who did try it, frequency of use clearly remained low. Overall, our findings provide an attractive explanation for the widely reported low effect sizes of CDSSs on process and clinical outcomes [2, 5]. Previous reviews have posited that disappointing CDSS effectiveness might be attributable to the lack of theory-based research, inadequate study duration, or suboptimal intervention-context matching [2, 9, 35]. Ours is the first to systematically assess CDSS uptake rather than more downstream process and clinical outcomes. In doing so, we have identified a broadly important determinant of CDSS effectiveness that suggests a need for greater focus on CDSS implementation design (for initial uptake), in addition to system design (for effective behavioural influence upon sustained usage).

Finally, our multivariable meta-regression identified two CDSS features that significantly predict uptake. The first was a contextual feature: whether a formal evaluation of the availability and quality of patient data needed to inform the CDSS had been performed. The availability and veracity of patient data that are mined from within EHR systems to inform CDSSs could influence uptake both because these data are required for personalized and accurate recommendations to be generated, and because effective access and processing of these data can reduce provider interaction time (by averting the need for provider data entry), which is a recognized barrier to uptake [36]. This finding is particularly relevant as CDSSs are increasingly available as pre-developed “off-the-shelf” interventions, and prospective users will need to carefully examine whether these types of CDSSs adequately align with their documentation practices and their patient and data workflows prior to implementation [2]. The second factor, related to CDSS implementation, was the presence of other barriers to the target clinical behaviour and concurrent strategies to address them. This reflects the complexity of real-world clinical behaviours such as test or medication prescribing [37], which are often limited by barriers at system or patient levels, beyond the provider-level memory, time and knowledge barriers typically addressed by CDSSs. It is not surprising that a CDSS would not be used when its target is a behaviour that is prevented by additional barriers that it cannot address. The types of barriers that healthcare providers or organizations should consider when implementing a CDSS include user (both provider and patient) beliefs, attitudes, and skills, professional interactions, clinical capacity and resources, and organizational support [15].

The features identified in our analysis predicting uptake are different from those found to be associated with downstream CDSS effectiveness in previous systematic reviews [6, 7, 38]. This likely reflects the fact that behavioural determinants of choosing to follow the recommendations from a CDSS are fundamentally different than those that underpin a clinician’s choice to access and use such a system at all. What is most notable about the different features we identified as potential determinants of CDSS uptake is that none were related to the CDSS content or system. These findings are in line with previous qualitative CDSS research that has emphasized the importance of implementation context and attaining good “clinician-patient-system integration” when developing CDSSs [39], and highlight the importance of further integrating implementation science principles into CDSS intervention design [40].

Risk of bias

Risk of bias in our analysis was concentrated in the minority of trials that were not randomized, and in the risk of contamination category among randomized trials with non-clustered designs (Additional file 1: Appendix, Figure A6 and Table A3). As this type of bias would likely shift the result in favour of increased CDSS uptake, we did not feel that including all trials in our analysis would have changed our overall finding of low CDSS uptake.

Limitations

The principal limitation of our analysis is the heterogeneity among included trials [2]. Heterogeneity in reported CDSS uptake was considerable, even when analysing the data using uptake subtypes. This was likely a consequence of the diverse nature of CDSS interventions, settings, diseases, and study designs included. We believe that as the first review focused on CDSS uptake, it was important to include a wide breadth of CDSS studies. Although meta-analysis is generally discouraged in this context, we reported the results to provide a weighted summary measure of uptake across the available literature, and to highlight the overall low level of CDSS uptake among studies that report it. We also acknowledge that uptake is but one feature of engagement, which is a multidimensional concept [40]. Furthermore, it is conceivable that tools with relatively low uptake have a behavioural impact through a halo reminder and/or education effect on the provider. Second, although we developed a broad feature list based on published literature and had a high rate of response from study authors validating our feature assignments, these features accounted for only 38.6% of the variability in CDSS uptake, suggesting that further study is needed to identify other important elements that drive uptake, which could include the influence of individual conditions or types of outcomes targeted, or more granular details about the system itself, and/or the populations and/or contexts being studied. Also, we acknowledge that like any model not based on a priori hypotheses, our use of multimodel inference to select important CDSS features associated with uptake should be viewed as exploratory and hypothesis generating [25]. We do note that this approach avoids some of the statistical limitations of step-wise variable selection methods [41]. Third, we did not investigate the potential effect of publication bias. However, the large majority of published trials did not report uptake, and unpublished negative studies would be expected to further bias the results towards our finding of low uptake. Finally, our models were limited by the fact that only 12% of studies that otherwise met eligibility criteria reported uptake, and it is possible that other important predictors of CDSS uptake will emerge if and when uptake reporting improves over time. Also, though we strongly believe that efforts to improve uptake of CDSS are clearly required, we acknowledge that future work will be needed to quantitatively establish the anticipated correlation between improved uptake and downstream improvements in process and clinical outcomes, and other study methods such as qualitative inquiry will be necessary to achieve a deeper understanding of how context influences CDSS uptake and implementation.

Conclusions

Given the often disappointing effects of CDSSs reported across systematic reviews [2, 5], many have called for a re-examination of the role of CDSSs in clinical care [4, 42]. It is possible that future CDSS effectiveness may be improved through technological advancements such as electronic health record interoperability and innovative information technology approaches such as machine learning [4]. However, to realize the benefits of such system-level advancements, existing poor system uptake will first need to be addressed. This will require a dedicated effort to address the determinants of CDSS uptake. Our review is a first step in this process, and identified that contextual and implementation-related factors were most strongly associated with uptake, rather than technological, system, or content features. These findings reinforce the importance of including theory-based implementation science approaches early in CDSS intervention design, in order to realize the promise of ever evolving CDSS technologies. It is also clear that monitoring success in this area will require more consistent reporting of uptake in published CDSS trials.

Availability of data and materials

All data generated or analysed during the study are included in Additional file 1, and any further relevant data are available from the corresponding author on reasonable request.

References

Heselmans A, Delvaux N, Laenen A, Van de Velde S, Ramaekers D, Kunnamo I, et al. Computerized clinical decision support system for diabetes in primary care does not improve quality of care: a cluster-randomized controlled trial. Implement Sci. 2020;15(1):5.

Kwan JL, Lo L, Ferguson J, Goldberg H, Diaz-Martinez JP, Tomlinson G, et al. Computerised clinical decision support systems and absolute improvements in care: meta-analysis of controlled clinical trials. BMJ. 2020;370:m3216. Available from: http://www.bmj.com/content/370/bmj.m3216 [cited 2020 Nov 18].

Delvaux N, Vaes B, Aertgeerts B, de Velde SV, Stichele RV, Nyberg P, et al. Coding systems for clinical decision support: theoretical and real-world comparative analysis. JMIR Form Res. 2020;4(10) Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC7641774/ [cited 2021 Apr 27].

Sarkar U, Samal L. How effective are clinical decision support systems? BMJ. 2020;370:m3499. Available from: http://www.bmj.com/content/370/bmj.m3499 [cited 2020 Nov 18].

Shojania KG, Jennings A, Mayhew A, Ramsay C, Eccles M, Grimshaw J. Effect of point-of-care computer reminders on physician behaviour: a systematic review. CMAJ Can Med Assoc J. 2010;182(5):E216–25.

Van de Velde S, Heselmans A, Delvaux N, Brandt L, Marco-Ruiz L, Spitaels D, et al. A systematic review of trials evaluating success factors of interventions with computerised clinical decision support. Implement Sci IS. 2018;13:114. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6102833/ [cited 2019 Nov 13].

Roshanov PS, Fernandes N, Wilczynski JM, Hemens BJ, You JJ, Handler SM, et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657.

Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765.

Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29.

Liberati EG, Ruggiero F, Galuppo L, Gorli M, González-Lorenzo M, Maraldi M, et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implement Sci. 2017;12(1):113.

Devaraj S, Sharma S, Fausto D, Viernes S, Kharrazi H. Barriers and Facilitators to Clinical Decision Support Systems Adoption: A Systematic Review. J Bus Adm Res. 2014;3(2):36-53.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535.

Higgins J, Thomas J. Cochrane handbook for systematic reviews of interventions, Version 6.2. 2021. Available from: https://training.cochrane.org/handbook/current [cited 2021 Apr 28].

McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS Peer Review of Electronic Search Strategies: 2015 Guideline Statement. J Clin Epidemiol. 2016;75:40–6.

Van de Velde S, Kunnamo I, Roshanov P, Kortteisto T, Aertgeerts B, Vandvik PO, et al. The GUIDES checklist: development of a tool to improve the successful use of guideline-based computerised clinical decision support. Implement Sci. 2018;13(1):1-2. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6019508/ [cited 2021 Apr 28].

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Nyaga VN, Arbyn M, Aerts M. Metaprop: a Stata command to perform meta-analysis of binomial data. Arch Public Health. 2014;72(1):39.

Lin L, Xu C. Arcsine-based transformations for meta-analysis of proportions: pros, cons, and alternatives. Health Sci Rep. 2020;3(3):e178.

Schwarzer G, Chemaitelly H, Abu‐Raddad LJ, Rücker G. Seriously misleading results using inverse of Freeman‐Tukey double arcsine transformation in meta‐analysis of single proportions. Res Synth Methods. 2019;10(3):476–83.

Barendregt JJ, Doi SA, Lee YY, Norman RE, Vos T. Meta-analysis of prevalence. J Epidemiol Community Health. 2013;67(11):974.

Higgins J, Thompson S, Deeks J, Altman D. Statistical heterogeneity in systematic reviews of clinical trials: a critical appraisal of guidelines and practice. J Health Serv Res Policy. 2002;7(1):51–61.

Cuijpers P, Cristea IA, Karyotaki E, Reijnders M, Huibers MJH. How effective are cognitive behavior therapies for major depression and anxiety disorders? A meta-analytic update of the evidence. World Psychiatry. 2016;15(3):245–58.

Thompson SG, Higgins JPT. How should meta-regression analyses be undertaken and interpreted? Stat Med. 2002;21(11):1559–73.

Harrer M, Cuijpers P, Furukawa T, Ebert D. Doing meta-analysis in R: a hands-on guide. 2019. Available from: https://bookdown.org/MathiasHarrer/Doing_Meta_Analysis_in_R/ [cited 2021 Apr 28].

Burnham KP, Anderson DR. Model selection and multimodel inference: a practical information-theoretic approach. 2nd ed. New York: Springer-Verlag; 2002. Available from: http://www.springer.com/gp/book/9780387953649 [cited 2021 May 3].

Eysenbach G, CONSORT-EHEALTH Group. CONSORT-EHEALTH: improving and standardizing evaluation reports of web-based and mobile health interventions. J Med Internet Res. 2011;13(4):e126.

Ballard DW, Vemula R, Chettipally UK, Kene MV, Mark DG, Elms AK, et al. Optimizing clinical decision support in the electronic health record. Clinical characteristics associated with the use of a decision tool for disposition of ED patients with pulmonary embolism. Appl Clin Inform. 2016;7(3):883–98.

Blecker S, Austrian JS, Horwitz LI, Kuperman G, Shelley D, Ferrauiola M, et al. Interrupting providers with clinical decision support to improve care for heart failure. Int J Med Inform. 2019;131:103956.

Hendrix KS, Downs SM, Carroll AE. Pediatricians’ responses to printed clinical reminders: does highlighting prompts improve responsiveness? Acad Pediatr. 2015;15(2):158–64.

Reynolds EL, Burke JF, Banerjee M, Callaghan BC. Randomized controlled trial of a clinical decision support system for painful polyneuropathy. Muscle Nerve. 2020;61(5):640.

Rosenbloom ST, Geissbuhler AJ, Dupont WD, Giuse DA, Talbert DA, Tierney WM, et al. Effect of CPOE user interface design on user-initiated access to educational and patient information during clinical care. J Am Med Inform Assoc. 2005;12(4):458–73.

Viechtbauer W, Cheung MW-L. Outlier and influence diagnostics for meta-analysis. Res Synth Methods. 2010;1(2):112.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Singh H, Sittig DF. A sociotechnical framework for safety-related electronic health record research reporting: the SAFER reporting framework. Ann Intern Med. 2020;172(11 Suppl):S92–100.

Moja L, Kwag KH, Lytras T, Bertizzolo L, Brandt L, Pecoraro V, et al. Effectiveness of computerized decision support systems linked to electronic health records: a systematic review and meta-analysis. Am J Public Health. 2014;104(12):e12–22.

Lam Shin Cheung J, Paolucci N, Price C, Sykes J, Gupta S. A system uptake analysis and GUIDES checklist evaluation of the Electronic Asthma Management System: a point-of-care computerized clinical decision support system. J Am Med Inform Assoc. 2020;27(5):726–37.

Patey AM, Grimshaw JM, Francis JJ. Changing behaviour, ‘more or less’: do implementation and de-implementation interventions include different behaviour change techniques? Implement Sci. 2021;16:20.

Garg AX, Adhikari NKJ, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223.

Miller A, Moon B, Anders S, Walden R, Brown S, Montella D. Integrating computerized clinical decision support systems into clinical work: a meta-synthesis of qualitative research. Int J Med Inform. 2015;84(12):1009–18.

Michie S, Yardley L, West R, Patrick K, Greaves F. Developing and evaluating digital interventions to promote behavior change in health and health care: recommendations resulting from an international workshop. J Med Internet Res. 2017;19(6):e7126.

Whittingham MJ, Stephens PA, Bradbury RB, Freckleton RP. Why do we still use stepwise modelling in ecology and behaviour? J Anim Ecol. 2006;75(5):1182–9.

Kwan J. What I have learned about clinical decision support systems over the past decade. BMJ. 2020. Available from: http://blogs.bmj.com/bmj/2020/09/18/janice-kwan-what-i-have-learned-about-clinical-decision-support-systems-over-the-past-decade/ [cited 2021 May 4].

Acknowledgements

We thank Stephanie Segovia, Rosalind Tang, Aliki Karanikas, and Janannii Selvanathan for their support with research administration and title and abstract screening.

Funding

AK is supported by a Health Research Training Fellowship from the Canadian Institutes of Health Research (CIHR). The funders had no role in the study design; in the collection, analysis, or interpretation of data; in the writing of the report; or in the decision to submit the article for publication.

Author information

Contributions

AK and SG led the study design. AK, SVdV, and SG created CDSS uptake features. AK, JY, JLSC, and SG extracted the data, and AK performed data analysis. AK drafted the manuscript, and all authors provided feedback and revisions and approved the final submitted version. AK is the guarantor. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Supplementary material referenced throughout manuscript.

Additional file 2.

Full study details. List of included trials and full study characteristics extracted.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Kouri, A., Yamada, J., Lam Shin Cheung, J. et al. Do providers use computerized clinical decision support systems? A systematic review and meta-regression of clinical decision support uptake. Implementation Sci 17, 21 (2022). https://doi.org/10.1186/s13012-022-01199-3
