Abstract

Background

Normalisation Process Theory (NPT) is frequently used to inform qualitative research that aims to explain and evaluate processes that shape late-stage translation of innovations in the organisation and delivery of healthcare. A coding manual for qualitative researchers using NPT will facilitate transparent data analysis processes and will also reduce the cognitive and practical burden on researchers.

Objectives

(a) To simplify the theory for the user. (b) To describe the purposes, methods of development, and potential application of a coding manual that translates normalisation process theory (NPT) into an easily usable framework for qualitative analysis. (c) To present an NPT coding manual that is ready for use.

Method

Qualitative content analysis of papers and chapters that developed normalisation process theory, selection and structuring of theory constructs, and testing constructs against interview data and published empirical studies using NPT.

Results

A coding manual for NPT was developed. It consists of 12 primary NPT constructs and conforms to the Context-Mechanism-Outcome configuration of realist evaluation studies. Contexts are defined as settings in which implementation work is done, in which strategic intentions, adaptive execution, negotiating capability, and reframing organisational logics are enacted. Mechanisms are defined as the work that people do when they participate in implementation processes and include coherence-building, cognitive participation, collective action, and reflexive monitoring. Outcomes are defined as effects that make visible how things change as implementation processes proceed and include intervention mobilisation, normative restructuring, relational restructuring, and sustainment.

Conclusion

The coding manual is ready to use and performs three important tasks. It consolidates several iterations of theory development, makes the application of NPT simpler for the user, and links NPT constructs to realist evaluation methods. The coding manual forms the core of a translational framework for implementation research and evaluation.

Similar content being viewed by others

Background

Any researcher who wishes to become proficient at doing qualitative analysis must learn to code well and easily. The excellence of the research rests in large part on the excellence of the coding.

Anselm Strauss [1]

The analysis and interpretation of qualitative data can make an important contribution to research on implementation processes and their outcomes when such data are interpreted through the lens of implementation theory. These data may be found in documents, interview transcripts, or observational fieldnotes. In broad terms, there are two approaches to integrating qualitative methods and implementation theory. First, by explaining phenomena of interest through procedures that identify and characterise empirical regularities or deviant cases in natural language data through processes of induction [1]. Second, by deriving explanations of relevant phenomena through using structured methods of data analysis that directly engage with existing conceptual frameworks, models, and theories [2,3,4,5]. These are not mutually exclusive ways of working, and they are often combined. In this paper, we focus on developing tools for the second approach, in which a more structured approach to qualitative data analysis [6] was formed into a coding manual that supports researchers using Normalisation Process Theory (NPT) [7,8,9,10,11] in studies of implementation processes.

NPT provides a set of conceptual tools that support understanding and evaluation of the adoption, implementation, and sustainment of socio-technical and organisational innovations. NPT takes as its starting point that implementation processes are formed when actors seek to translate their strategic intentions into ensembles of beliefs, behaviours, artefacts, and practices that create change in the everyday practices of others [8, 11]. The central questions that follow from the application of NPT are always, what is the work that actors do to create change? How does this work get done? And, what are its effects? Because NPT has its origins in research on the implementation of complex healthcare interventions, it does not see the intervention as a thing-in-itself, but rather as an assemblage or ensemble of beliefs, behaviours, artefacts, and practices that may play out differently over time and between settings [8]. It is supported by empirical studies using both qualitative and quantitative methods and by systematic reviews that have explored its value in different research domains [12,13,14].

Development of the coding manual was informed by the application of methods of qualitative content analysis described by Schreier [2]. This approach can be defined as ‘a research method for the subjective interpretation of the content of text-data through the systematic classification process of coding and identifying themes or patterns’ [3] and as ‘any qualitative data reduction and sense-making effort that takes a volume of qualitative material and attempts to identify core consistencies and meanings.’ [4]. As qualitative content analysis has become more widely used, so too have coding frameworks and manuals that define the ways that data are identified, categorised, and characterised within a study. In qualitative content analysis, researchers are encouraged to develop manuals that describe and explicate definition of the ‘rules’ for coding and categorising data [5]. The process of categorisation that follows from using a coding manual is useful because it enables researchers to manage the cognitive burden of searching for and handling multiple constructs and thus enables them to manage a greater cognitive burden of interpretation. Within research teams, coding manuals support the quality and rigour of coding by providing ‘rules’ that are employed by each team member and, in this way, can ensure the consistency of coding. Parsimony can be important too: more is not necessarily better in qualitative investigation and analysis. Reducing the number of codes to those that represent core constructs can be understood as what Adams et al. [6], in a different context, have called ‘subtractive transformation’.

A generalizable NPT coding manual is of value to researchers from a range of disciplines interested in the ways that implementation processes play out. It provides a consistent set of definitions of the core constructs of the theory, shows how they relate to each other, and enables researchers doing qualitative content analysis together to work within a common frame of analysis (for example, in qualitative evidence syntheses, or in team-based qualitative analysis of interview or observational data). In the future, as software for computational hermeneutics [15] becomes more widely available and practically workable, a coding manual could also be integrated into the development of topic modelling instruments and algorithms.

Despite their value to researchers, the process of creating rigorous and robust coding manuals for individual studies is rarely described, and generalizable coding manuals are rare. In this paper, we start to fill this gap. We describe the purposes, methods of development, and application of a generalizable coding manual that translates NPT into a more easily usable framework for qualitative analysis.

Methods

NPT has developed over time through contact with empirical studies and evidence syntheses, and this has led to different iterations of the theory. These have been formed through publications that have served three purposes. First, there is a set of papers aimed explicitly at theory-building in which core constructs of NPT have been developed and their implications explored [7,8,9, 16, 17]. Second, there is a set of papers aimed explicitly at theory-translation in which those core constructs have been clarified and refined through methodological research leading to the development of toolkits [18, 19] and survey instruments [20,21,22]. Finally, there is a set of papers that contribute to theory-elaboration through the development of new constructs during empirical studies and systematic reviews. These explain additional aspects of implementation processes [23,24,25].

Translating a set of theoretical constructs into a theory-informed coding manual for qualitative data analysis involves a series of tasks that are, in themselves, a form of qualitative analysis. Qualitative research focuses on the identification, characterisation, and interpretation of empirical regularities or deviant cases in natural language data. The process described here developed organically and opportunistically through these different tasks, as they were conducted, and through discussion amongst authors of this paper. The work of defining key constructs of the theory, assembling these into a framework, and then transforming them into a workable coding manual, was informed by the qualitative content analysis procedures described by Schreier [2].

-

1.

Concept identification. The result of the iterative development of NPT is a body of constructs representing the mechanisms that motivate and shape implementation processes, the outcomes of these processes, and the contexts in which their users make them workable and integrate them into practice. These core constructs of NPT were distributed over papers that developed the theory [7,8,9,10,11, 16, 17, 23,24,25] and in others that developed the means and methods of its application [18,19,20,21,22]. In June 2020, CRM assembled these constructs in a taxonomy of statements (n=149). They identified, characterised, and explained observable features of the collective action and collaborative work of implementation (the taxonomy of NPT statements is presented in the online supplementary material),

-

2.

De-duplication and disambiguation. The taxonomy of 149 statements assembled in selection and structuring work included multiple duplicates, along with ambiguous and overlapping descriptions of constructs. CRM identified duplicate, ambiguous, and overlapping constructs. These were then either disambiguated or eliminated. After this work was competed, 38 discrete constructs were retained to make up a ‘first pass’ coding manual (the ‘first pass’ coding manual is presented in the online supplementary material).

-

3.

Piloting. The ‘first pass’ manual was piloted. CRM used it to code two papers selected from an earlier NPT systematic review. These were comprehensively coded and checked by all the authors of this paper, who critically commented on codes and coding decisions. The same coding manual was then applied to two sets of interview data collected in other studies that were informed by NPT. First, AG coded transcripts of interviews (n=55 with managers, practitioners, and patients) conducted for an evaluation of the accelerated implementation of remote clinician-patient interaction in a tertiary orthopaedic centre during the COVID-19 pandemic. Second, KG coded transcripts of interviews (n=22, with community mental health professionals) conducted for the process evaluation of the EYE-2 Trial (an engagement intervention for first episodes of psychosis employed in early intervention in the community) [26].

-

4.

Further disambiguation. Pilot work demonstrated that the main elements of the ‘first pass’ coding manual were workable in practice. The piloting exercise revealed that the first pass coding manual was hard to use because it was over-complex and because it micro-managed the process of interpretation. This defeated attempts at nuanced interpretation. Additional work to disambiguate constructs and eliminate overlapping or redundant ones was therefore undertaken as we worked through steps 5–8, below.

-

5.

Identification of context-related constructs. Within the coding manual the contexts in which implementation work takes place remained invisible, although taking context into account had been an important element of theory development and elaboration over time [10, 11]. The contexts of implementation can be understood as both structures and processes [27]. To remedy the absence of constructs representing context, CRM returned to the taxonomy of 149 NPT constructs and the first pass coding manual and searched them for salient descriptors of context. Four of these were identified and were added to the manual.

-

6.

Further piloting. The four constructs relating to implementation contexts were piloted ‘in use’ by CRM on a set of interview transcripts (n=36) collected in a study of professionals’ participation in the implementation of treatment escalation plans to manage care at the end of life in British hospitals [28]. It was found that these constructs characterised process contexts effectively.

-

7.

Presentation. The structure of the coding manual was then presented and discussed in a series of international webinars in February–April 2021. Discussion with participants in those webinars assisted in clarifying the ways that NPT constructs fitted together and characterised actual processes and outcomes.

-

8.

Agreement. Once the final structure of the coding manual was laid out, all authors then read and commented on it. This led to further ruthless editing and simplification of the coding manual.

-

9.

Post-submission. Journal peer review is intended to improve papers for publication. In this case, it led to a clearer and more coherent presentation of the methods leading to the development of the coding manual and of the coding manual itself. An important outcome of this process was further simplification of the construct descriptors in Tables 1 and 2. These were also linked to their primary sources in the NPT literature.

-

10.

‘Living peer review’. Between the initial submission of a manuscript to Implementation Science (2 September 2021) and the finalisation of the manuscript (December 30, 2021), the first draft of the coding manual was viewed or downloaded more than 1600 times from the preprint servers ResearchSquare.com and ResearchGate.net. This led to useful feedback from researchers who began to use the coding manual to do ‘real-world’ data analysis as soon as it became available but who did not have specific NPT expertise. As a result of this ‘living peer review’, further simplification of the descriptions of NPT constructs was undertaken by CRM.

Results: a coding manual for normalisation process theory

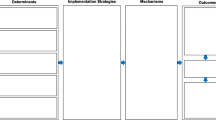

Working through the procedures described above led to part A of the coding manual for NPT. This is presented in Table 1 and consists of 12 primary NPT constructs. Although it was not originally intended to do so, we found that the final structure of the coding manual sits easily alongside the Context-Mechanism-Outcome configuration of realist evaluation studies [54]. We describe this in Fig. 1. The array of primary NPT constructs took the following form.

-

1.

Contexts are events in systems unfolding over time within and between settings in which implementation work is done (primary NPT constructs: strategic intentions, adaptive execution, negotiating capability, reframing organisational logic).

-

2.

Mechanisms motivate and shape the work that people do when they participate in implementation processes (primary NPT constructs: coherence-building, cognitive participation, collective action, reflexive monitoring).

-

3.

Outcomes are the effects of implementation work in context—that make visible how things change as implementation processes proceed (primary NPT constructs: intervention performance, normative restructuring, relational restructuring, sustainment).

These 12 constructs form a general set of codes that can be applied to almost any textual data whether these are fieldnotes, interview transcripts, or published texts. They are all grounded in empirical research. Part A (Table 1) of the coding manual thus provides a general set of codes or categories that guide analytic work. Each construct is named and briefly described. Additionally, each descriptor is accompanied by an example of the empirical application of the construct in already published work. The aim of a coding manual is to provide guidance about how to interpret data that is often highly nuanced and represents complex and sometimes very dynamic processes at work. However, no theory, framework, or model can generate a set of codes that will infallibly cover all possible features of data. In this case, guidance about interpretation, rather than scriptural authority, is the primary intention of our coding manual. Detailed guidance on the process of coding can be found in work by Strauss [1] and Schreier [2].

More granular possibilities are presented in part B (Table 2) of the coding manual. Here, the four NPT primary constructs related to mechanisms of purposive social action (coherence-building, cognitive participation, collective action, reflexive monitoring), each possess four associated secondary constructs. These secondary constructs provide further and equally empirically grounded codes where the available qualitative data support interpretation at that level of detail. Once again, each construct is named and briefly described, and each descriptor is accompanied by an example of the empirical application of the construct in already published work. However, the use of these 16 secondary constructs in coding is not mandatory, and many papers included in systematic reviews [12,13,14] of NPT studies seem either to have treated them as discretionary or not referred to them at all. They are however valuable and important, and thus have explanatory value, because the mechanisms that motivate and shape implementation processes are often those that are mobilised to overcome perceived problems of context. In NPT, analysis always focuses on purposive social action—the work that people do to enact evidence or innovation in practice—and for this reason, focusing attention on the constructs that characterise action is central to the interpretive task.

Discussion

The purpose of developing this coding manual was to clarify and simplify NPT for the user and to make it more easily integrated and workable in research on the adoption, implementation, and use of sociotechnical and organisational innovations. In qualitative content analysis—as in other forms of qualitative analysis—proliferating constructs can easily make the business of coding ever more microscopic and can mean that it becomes less analytically rewarding. Indeed, the more parsimonious a prescheduled theoretical structure is, the more space it provides for nuanced interpretation and the development of novel categories of data and the analytic constructs that can be derived from them.

In the development of the NPT coding manual described here, we sought to eliminate ambiguity and add workability from the outset. The process of selection and structuring we describe yielded a set of 12 primary NPT constructs (Table 1: coding manual part A) and 16 sub-constructs (Table 2: coding manual part B). As Fig. 1 shows, these identify, characterise, and explain the course of implementation processes through which strategic intentions are translated into practices and enable understanding of how enacting those practices can lead to different outcomes, and to varying degrees of sustainment.

Coding is a centrally important procedure in qualitative analysis [1], but it must be emphasised that it is only one part of a whole bundle of cognitive processes through which researchers make and organise meanings in the data. Here, a coding manual cannot cover all analytic possibilities presented by a qualitative data set. Reflexive procedures for identifying phenomena outside the scope of a theory, developing new codes, and linking them to other explanatory models are always important in theory-informed qualitative work. The act of coding involves descriptive work that is a foundation for the interpretation of data, but it is not a proxy for it nor is the purpose of a coding manual to verify the underpinning theory. The whole purpose of coding, and of linking coding to theory, is to build and inform interpretation and understanding. This is not a discrete stage in data analysis but is continuous throughout [1].

Linking NPT to the CMO model of realist evaluation did not happen by accident. NPT was developed through a series of iterations that were already heading in this direction. This began with empirical studies that led to rigorous analysis of the mechanisms that motivate and shape implementation processes [7, 8]. As the theory was developed and applied, further consideration was given to the problem of contexts [9, 13, 16] and to the question of how mechanisms interact with contexts to produce specific outcomes [11, 24, 25, 55]. At the same time, systematic reviews [12,13,14] revealed that the use of NPT was impeded because researchers without a strong theoretical background in the social sciences needed both clearer definitions of constructs and a conceptual toolkit that linked these together in a way that enabled them to see how implementation mechanisms and contexts interact with each other to shape different kinds of outcomes. Drawing these together in a single-coding manual would assist in solving these problems.

Strengths and limitations

We describe a set of methods likely to be useful be useful to qualitative researchers in other areas of research who wish to consider developing such manuals for other theories (for example, relational inequalities theory [56] or event system theory [57]). A strength of the work was that developing the coding manual was undertaken by an international multidisciplinary team working with personal experience of developing and working with NPT and with other implementation frameworks, models, and theories. This ensured that from the outset the development of the coding manual was closely linked to knowledge about the ways that NPT can be used. An unanticipated consequence of the coding manual being published on preprint servers (ResearchSquare.Com and ResearchGate.Com) was that other researchers started to use it almost immediately and quickly fed back criticism or encouragement. This added value to both the development process and the final product.

This work was undertaken opportunistically and grew organically. The manual thus developed cumulatively and in an ad hoc way. Working from a structured protocol would have added greater methodological transparency and perhaps also potential for replication of the development process. Finally, researchers working from different perspectives, with different experiences of NPT, using primary empirical studies rather than theory papers, or working from a prescheduled protocol, might have produced a different coding manual.

Conclusion

This paper describes the procedures by which the NPT coding manual for qualitative research was produced. It also presents the manual ready for use. But more than this, the process of producing the coding manual has also led to the simplification and consolidation of the theory by bringing together empirically grounded constructs derived from multiple iterations of theoretical development over two decades.

Coding manuals are useful tools to support analysis in qualitative research. They reduce cognitive load and at the same time render the assumptions underpinning qualitative analysis transparent and easily shared amongst teams of researchers. The coding manual makes the application of NPT simpler for the user. This adds value to qualitative research on the adoption, implementation, and sustainment of innovations by providing a stable, workable, set of constructs that sit comfortably alongside the well-established model of realist evaluation [54]. It also forms a translational framework for researching and evaluating implementation processes and thus complements other resources for NPT researchers such as the NPT Toolkit and the NOMAD survey instrument [17, 19, 21, 22].

Availability of data and materials

All materials used in this research are included in this paper as tables or are appended as online supplementary materials.

Abbreviations

- AKI:

-

Acute kidney injury

- CDSS:

-

Computer decision support system

- CM:

-

Care manager

- CST:

-

Cognitive stimulation therapy

- ERAS:

-

Enhanced recovery after surgery

- FV:

-

Family violence

- GP:

-

General practice

- IPC:

-

Infection prevention and control

- LGBT:

-

Lesbian, gay, bisexual and transsexual

- MCH:

-

Mother and child health nurse

- MOVE:

-

Improving Maternal and Child Health Care for Vulnerable Mothers (Trial Acronym)

- NPT:

-

Normalisation process theory

- OA:

-

Osteo-arthritis

- PO:

-

Physician organisations

- SDM:

-

Shared decision-making

References

Strauss A. Qualitative analysis for social scientists. Cambridge: Cambridge University Press; 1987.

Schreier M. Qualitative content analysis in practice. London: SAGE; 2012.

Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88.

Patton MQ. Two decades of developments in qualitative inquiry: a personal, experiential perspective. Qual Soc Work. 2002;1(3):261–83.

McLellan-Lemal K, MacQueen E. Team-based codebook development: structure, process, and agreement. In: Guest G, editor. Handbook for team-based qualitative research. Altamira: Lanham MD; 2008. p. 119–36.

Adams GS, Converse BA, Hales AH, Klotz LE. People systematically overlook subtractive changes. Nature. 2021;592(7853):258–61.

May C. A rational model for assessing and evaluating complex interventions in health care. BMC Health Serv Res. 2006;6(86):1–11.

May C, Finch T. Implementing, embedding, and integrating practices: an outline of normalization process theory. Sociology. 2009;43(3):535–54.

May C. Towards a general theory of implementation. Implement Sci. 2013;8(1):18.

May C, Johnson M, Finch T. Implementation, context and complexity. Implement Sci. 2016;11(1):141.

May C, Rapley T, Finch T. Normalization Process Theory. In: Nilsen P, Birken S, editors. International Handbook of Implementation Science. London: Edward Elgar; 2020. p. 144–67.

McEvoy R, Ballini L, Maltoni S, O’Donnell CA, Mair FS, MacFarlane A. A qualitative systematic review of studies using the normalization process theory to research implementation processes. Implement Sci. 2014;9:2.

May C, Cummings A, Girling M, Bracher M, Mair FS, et al. Using normalization process theory in feasibility studies and process evaluations of complex healthcare interventions: a systematic review. Implement Sci. 2018;13(1):80.

Huddlestone L, Turner J, Eborall H, Hudson N, Davies M, Martin G. Application of normalisation process theory in understanding implementation processes in primary care settings in the UK: a systematic review. BMC Fam Pract. 2020;21(1):1–16.

Mohr JW, Wagner-Pacifici R, Breiger RL. Towards a computational hermeneutics. Big Data Soc. 2015;2(2):2053951715613809.

May C, Finch T, Mair F, Ballini L, Dowrick C. Understanding the implementation of complex interventions in health care: the normalization process model. BMC Health Serv Res. 2007;7:148.

May C, Mair FS, Finch T, MacFarlane A, Dowrick C, et al. Development of a theory of implementation and integration: normalization process Theory. Implement Sci. 2009;4:29.

Murray E, May C, Mair F. Development and formative evaluation of the e-Health Implementation Toolkit (e-HIT). BMC Med Inform Decis. 2010;10(1):61.

May C, Finch T, Ballini L, MacFarlane A, Mair F, Murray E, et al. Evaluating complex interventions and health technologies using normalization process theory: development of a simplified approach and web-enabled toolkit. BMC Health Serv Res. 2011;11(1):245.

Finch TL, Rapley T, Girling M, Mair FS, Murray E, Treweek S, et al. Improving the normalization of complex interventions: measure development based on normalization process theory (NoMAD): study protocol. Implement Sci. 2013;8:43.

Rapley T, Girling M, Mair FS, Murray E, Treweek S, McColl E, et al. Improving the normalization of complex interventions: part 1 - development of the NoMAD instrument for assessing implementation work based on normalization process theory (NPT). BMC Med Res Methodol. 2018;18(1):133.

Finch TL, Girling M, May C, Mair FS, Murray E, Treweek S, et al. Improving the normalization of complex interventions: part 2 - validation of the NoMAD instrument for assessing implementation work based on normalization process theory (NPT). BMC Med Res Methodol. 2018;18(1):135.

Mair FS, May C, O'Donnell C, Finch T, Sullivan F, Murray E. Factors that promote or inhibit the implementation of e-health systems: an explanatory systematic review. Bull World Health Organ. 2012;90(5):357–64.

May C, Sibley A, Hunt K. The nursing work of hospital-based clinical practice guideline implementation: an explanatory systematic review using normalisation process theory. Int J Nurs Stud. 2014;51(2):289–99.

Johnson MJ, May CR. Promoting professional behaviour change in healthcare: what interventions work, and why? A theory-led overview of systematic reviews. BMJ Open. 2015;5(9):e008592.

Greenwood K, Webb R, Gu J, Fowler D, de Visser R, Bremner S, et al. The Early Youth Engagement in first episode psychosis (EYE-2) study: pragmatic cluster randomised controlled trial of implementation, effectiveness and cost-effectiveness of a team-based motivational engagement intervention to improve engagement. Trials. 2021;22(1):272.

Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. Am J Community Psychol. 2009;43(3-4):267–76.

May C, Myall M, Lund S, Campling N, Bogle S, Dace S, et al. Managing patient preferences and clinical responses in acute pathophysiological deterioration: what do clinicians think treatment escalation plans do? Soc Sci Med. 2020;258:113143.

Ong BN, Morden A, Brooks L, Porcheret M, Edwards JJ, Sanders T, et al. Changing policy and practice: making sense of national guidelines for osteoarthritis. Soc Sci Med. 2014;106:101–9.

Pope C, Halford S, Turnbull J, Prichard J, Calestani M, May C. Using computer decision support systems in NHS emergency and urgent care: ethnographic study using normalisation process theory. BMC Health Serv Res. 2013;13(1):111.

Agreli H, Barry F, Burton A, Creedon S, Drennan J, et al. Ethnographic study using normalization process theory to understand the implementation process of infection prevention and control guidelines in Ireland. BMJ Open. 2019;9(8):e029514.

Sutton E, Herbert G, Burden S, Lewis S, Thomas S, Ness A, et al. Using the normalization process theory to qualitatively explore sense-making in implementation of the enhanced recovery after surgery programme: “it’s not rocket science”. PLoS One. 2018;13(4):e0195890.

Dickinson C, Gibson G, Gotts Z, Stobbart L, Robinson L. Cognitive stimulation therapy in dementia care: exploring the views and experiences of service providers on the barriers and facilitators to implementation in practice using normalization process theory. Int Psychogeriatr. 2017;29(11):1869–78.

Jones CH, Glogowska M, Locock L, Lasserson DS. Embedding new technologies in practice–a normalization process theory study of point of care testing. BMC Health Serv Res. 2016;16(1):591.

Trietsch J, van Steenkiste B, Hobma S, Frericks A, Grol R, Metsemakers J, et al. The challenge of transferring an implementation strategy from academia to the field: a process evaluation of local quality improvement collaboratives in Dutch primary care using the normalization process theory. J Eval Clin Pract. 2014;20(6):1162–71.

Hall A, Wilson CB, Stanmore E, Todd C. Implementing monitoring technologies in care homes for people with dementia: a qualitative exploration using normalization process theory. Int J Nurs Stud. 2017;72:60–70.

Overbeck G, Davidsen AS, Kousgaard MB. Enablers and barriers to implementing collaborative care for anxiety and depression: a systematic qualitative review. Implement Sci. 2016;11(1):165.

Foster M, Burridge L, Donald M, Zhang J, Jackson C. The work of local healthcare innovation: a qualitative study of GP-led integrated diabetes care in primary health care. Organization, structure and delivery of healthcare. BMC Health Serv Res. 2016;16(1):11.

Røsstad T, Garåsen H, Steinsbekk A, Håland E, Kristoffersen L, Grimsmo A. Implementing a care pathway for elderly patients, a comparative qualitative process evaluation in primary care. BMC Health Serv Res. 2015;15(1):86.

May C, Rapley T, Mair FS, Treweek S, Murray E et al: Normalization Process Theory on-line user’s manual, toolkit, and NoMAD instrument. Available from http://www.Normalizationprocess.org. Accessed 27 Oct 2021.

Keenan J, Poland F, Manthorpe J, Hart C, Moniz-Cook E. Implementing e-learning and e-tools for care home staff supporting residents with dementia and challenging behaviour: a process evaluation of the ResCare study using normalisation process theory. Dementia. 2020;19(5):1604–20.

Alharbi TS, Carlström E, Ekman I, Olsson L-E. Implementation of person-centred care: management perspective. J Hosp Admin. 2014;3(3):p107.

Morden A, Brooks L, Jinks C, Porcheret M, Ong BN, Dziedzic K. Research “push”, long term-change, and general practice. J Health Organ Manag. 2015;29(7):798–821.

Lloyd A, Joseph-Williams N, Edwards A, Rix A, Elwyn G. Patchy 'coherence': using normalization process theory to evaluate a multi-faceted shared decision-making implementation program (MAGIC). Implement Sci. 2013;8:102.

Valaitis R, Cleghorn L, Dolovich L, Agarwal G, Gaber J, Mangin D, et al. Examining interprofessional team structures and processes in the implementation of a primary care intervention (Health TAPESTRY) for older adults using normalization process theory. BMC Fam Pract. 2020;21(1):63.

Burau V, Carstensen K, Fredens M, Kousgaard MB. Exploring drivers and challenges in implementation of health promotion in community mental health services: a qualitative multi-site case study using normalization process theory. BMC Health Serv Res. 2018;18(1):36.

Asiedu GB, Fang JL, Harris AM, Colby CE, Carroll K. Health Care Professionals’ perspectives on teleneonatology through the lens of normalization process theory. Health Sci Rep. 2019;2(2):e111.

Shulver W, Killington M, Crotty M. ‘Massive potential’ or ‘safety risk’? Health worker views on telehealth in the care of older people and implications for successful normalization. BMC Med Inform Dec Mak. 2016;16(1):131.

Hooker L, Small R, Humphreys C, Hegarty K, Taft A. Applying normalization process theory to understand implementation of a family violence screening and care model in maternal and child health nursing practice: a mixed method process evaluation of a randomised controlled trial. Implement Sci. 2015;10(1):39.

Ziegler E, Valaitis R, Yost J, Carter N, Risdon C. “Primary care is primary care”: use of normalization process theory to explore the implementation of primary care services for transgender individuals in Ontario. PLoS One. 2019;14(4):e0215873.

Holtrop JS, Potworowski G, Fitzpatrick L, Kowalk A, Green LA. Effect of care management program structure on implementation: a normalization process theory analysis. BMC Health Serv Res. 2016;16(1):386.

Scott J, Finch T, Bevan M, Maniatopoulos G, Gibbins C, Yates B. Acute kidney injury electronic alerts: mixed methods normalisation process theory evaluation of their implementation into secondary care in England. BMJ Open. 2019;9(12):e032925.

Bamford C, Poole M, Brittain K, Chew-Graham C, Fox C, Iliffe S, et al. team C: Understanding the challenges to implementing case management for people with dementia in primary care in England: a qualitative study using normalization process theory. BMC Health Serv Res. 2014;14(1):1–2.

Pawson R, Tilley N. Realistic evaluation. London: Sage Publications; 1997.

May C. Agency and implementation: understanding the embedding of healthcare innovations in practice. Soc Sci Med. 2013;78(0):26–33.

Avent-Holt D, Tomaskovic-Devey D. Organizations as the building blocks of social inequalities. Sociol Compass. 2019;13(2):e12655.

Morgeson FP, Mitchell TR, Liu D. Event system theory: an event-oriented approach to the organizational sciences. Acad Manag Rev. 2015;40(4):515–37.

Funding

Contributions of CRM and EM were supported by independent research funded by NIHR through support of the North Thames Applied Research Collaborative. Contributions of TLF, TR, and SP were similarly supported by the NIHR North East and North Cumbria Applied Research Collaborative. The views expressed are those of the authors and not necessarily those of the NIHR or the Department of Health and Social Care.

Author information

Authors and Affiliations

Contributions

Conception: CRM and TR. Design: CRM, BA, MB, TR, EM. Interpretation of data CRM, BA, MB, TLF, AG, MG, KG, AMacF, FSM, CMM, EM, SP, TR. Substantial revision: CRM, BA, MB, TLF, AG, MG, KG, AMacF, FSM, CMM, EM, SP, TR. The authors read and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

No human subjects, animals, or tissue were used in this research, and no Institutional Review Board/Research Ethics Committee approval was necessary to undertake it.

Consent for publication

Not applicable.

Competing interests

All authors have been involved in the development or use of normalisation process theory.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

May, C.R., Albers, B., Bracher, M. et al. Translational framework for implementation evaluation and research: a normalisation process theory coding manual for qualitative research and instrument development. Implementation Sci 17, 19 (2022). https://doi.org/10.1186/s13012-022-01191-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13012-022-01191-x