Abstract

Background

The World Health Organisation’s global strategy for digital health emphasises the importance of patient involvement. Understanding the usability and acceptability of wearable devices is a core component of this. However, usability assessments to date have focused predominantly on healthy adults. There is a need to understand the patient perspective of wearable devices in participants with chronic health conditions.

Methods

A systematic review was conducted to identify any study design that included a usability assessment of wearable devices to measure mobility, through gait and physical activity, within five cohorts with chronic conditions (Parkinson’s disease [PD], multiple sclerosis [MS], congestive heart failure [CHF], chronic obstructive pulmonary disease [COPD], and proximal femoral fracture [PFF]).

Results

Thirty-seven studies were identified. Substantial heterogeneity in the quality of reporting, the methods used to assess usability, the devices used, and the aims of the studies precluded any meaningful comparisons. Questionnaires were used in the majority of studies (70.3%; n = 26), with a reliance on intervention-specific measures (n = 16; 61.5%). Among the studies that used interviews (n = 17; 45.9%), no topic guides were provided, while methods of analysis were not reported in over a third (n = 6; 35.3%).

Conclusion

Usability of wearable devices is a poorly measured and reported variable in chronic health conditions. Although the heterogeneity in how these devices are implemented implies acceptance, the patient voice should not be assumed. In the absence of being able to make specific usability conclusions, this review instead recommends that future research needs to: (1) Conduct usability assessments as standard, irrespective of the cohort under investigation or the type of study undertaken. (2) Adhere to basic reporting standards (e.g. COREQ) including the basic details of the study. Full copies of any questionnaires and interview guides should be supplied through supplemental files. (3) Utilise mixed methods research to gather a more comprehensive understanding of usability than either qualitative or quantitative research alone will provide. (4) Use previously validated questionnaires alongside any intervention specific measures.

Background

Healthcare research is in the midst of a paradigm shift with a move towards more long-term behavioural monitoring through the use of wearable devices. This shift has been recognised by the World Health Organisation (WHO) through its recent publication of a digital health strategy [1]. While wearable devices offer researchers access to previously unattainable information regarding how people behave, additional factors need to be considered when designing and implementing these devices, including patient safety, privacy and cost-effectiveness. Of critical importance is that this digital shift should be patient-centred, evidence based, inclusive and contextualised [1].

Whether a device is considered usable by the person who will be wearing it has been identified as “among the most important considerations with patient-orientated digital-based solutions” [2]. The International Organization for Standardization defines usability as “the effectiveness, efficiency, and satisfaction with which specified users achieve specified goals in particular environments” [3]. In this manner, usability is a broad concept that can also include the acceptability of, or satisfaction with, a device, while the WHO lists the evaluation of the usability and feasibility of a device as the first steps that should be undertaken when assessing any new digital health intervention [1, 4]. It has been suggested that for wearable devices to be accepted, they must be easy to wear, easy to use, affordable, contain relevant functionality and be aesthetically pleasing [5,6,7,8]. Usability, by its nature, is context specific, and understanding how context may influence adoption has been highlighted as a research need in this area [9]. The acceptability of these features will depend on the length of time the device needs to be worn and the characteristics of those using it, including their health conditions. The concept of usability is therefore almost never ending, as researchers and digital health developers need to ensure that their selected device is fit for purpose within all aspects of their study design. Failure to assess usability may result in researchers implementing devices that are not worn, or that are worn or used incorrectly, which may negatively impact data collection and quality and limit the impact of any intervention [1, 10, 11].

Usability is likely to be especially important in contexts where wearable devices are designed to be implemented during real-world tasks or activities. Walking (including gait and physical activity), in particular, is a functional task that is part of most activities of daily living and has been identified as so critical to health that it has been labelled a ‘vital sign’ [12, 13]. Many consumer wearables measure activity as standard, but in-depth gait analysis, and the production of digital biomarkers linked to gait, is becoming an important feature of current and future research, as it is recognised that understanding how people move can inform researchers and clinicians alike of patient progress, behaviour change and intervention effectiveness [14,15,16,17]. Thus, if walking is a key activity being measured by wearables, it is important to understand how usable these devices are in this context. To date, most usability studies have evaluated devices in healthy adults, or have focused primarily on consumer and/or watch based devices [5, 18,19,20,21,22,23,24,25]. However, the needs of healthy adults are likely to be very different from those of people with chronic health conditions, whom many of these devices are deployed to support. For example, issues with fine motor control, skin sensitivity, or balance deficits may be present in clinical cohorts and may be aggravated by the use of certain devices depending on their size, materials and interactivity. A 2015 study suggested that wearable devices are generally accepted by people with chronic conditions; however, this research failed to report what type of wearables were assessed, or what chronic conditions were included in the analysis [20]. It has been suggested that health conditions, and the specific measurement needs of those conditions, impact participant adherence [21].
Specifically in relation to walking, many chronic conditions are associated with symptoms that may impact how well an individual can walk, but the pathophysiologies and the impact on mobility may be very different [15], for example in cardiorespiratory versus neurological conditions. Wearable devices need to be usable across a comprehensive trajectory of mobility problems; thus it is worth exploring users’ perceptions across multiple cohorts so as to broadly determine their usability in people with chronic conditions. Therefore, this review focused on five clinical cohorts, specifically respiratory problems (chronic obstructive pulmonary disease; COPD), neurodegenerative conditions (Parkinson’s disease; PD), neuroinflammatory problems (multiple sclerosis; MS), osteoporosis and sarcopenia (hip fracture recovery/proximal femoral fracture; PFF), and cardiac pathology (congestive heart failure; CHF). Combined, these cohorts are highly prevalent conditions with significant associated disability. Specifically, COPD is the most prevalent chronic respiratory illness globally [26], the prevalence and burden of MS and PD are growing and for PD have doubled [27, 28], PFF is the fracture with the greatest direct cost to the community [29], and CHF accounts for up to 2% of healthcare expenditure, while all conditions are associated with a greater risk of falls, which are a significant cause of death and disability globally [30]. Collectively these conditions represent a broad array of mobility problems with different trajectories of disability, thus allowing for a comprehensive evaluation of mobility. Although it is likely that some differences in usability may be noted between cohorts, it is nonetheless worth comparing across common conditions to determine where differences and similarities in usability exist.

To the authors’ knowledge, no systematic review investigating the usability of wearable devices specifically for mobility exists. Given the dearth of literature examining the usability of wearable devices for the assessment of walking in cohorts with chronic health conditions, further evaluations of this are required to support the use and development of these devices in the future. Therefore, this study aimed to conduct a systematic review of the literature to explore the usability of wearable devices to monitor gait and activity in five common patient cohorts where digital mobility assessment may be clinically useful to monitor their symptoms and progress.

Methods

Protocol

This study was pre-registered on PROSPERO (ID: CRD42020165301) and was performed in accordance with the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [31].

Search strategy and eligibility criteria

In January 2020 a search strategy was implemented within PubMed, EMBASE, Medline and CINAHL Plus. The available literature was systematically searched for studies of any research design that assessed the usability of wearable devices to measure gait or physical activity in any of the clinical cohorts in Mobilise-D. No restrictions (including time and language) were applied in any of the databases, while publication dates were open ended up to the search date of January 31st 2020. The strategy was adapted for each database (Table 1).

Two authors (AK and AA) reviewed all titles and abstracts and obtained the full texts of potentially eligible studies. Following this, full texts were independently assessed for eligibility. In instances of disagreement, a third author (WJ) was included for consensus. Studies were included if they fulfilled the criteria outlined within Table 2.

Data extraction

Two authors (AK and RA) independently extracted information regarding the assessment of usability, using a piloted data extraction form. Specifically, the method of assessment (i.e. whether it was qualitative or quantitative) was noted, alongside the factors that were evaluated as part of the usability assessment, the method of analysis used, the name of the questionnaire used (if applicable), and the main findings in relation to the usability assessment. In addition, the following data were also extracted: study design and aim, number of participants, participant characteristics, number of devices used, the anatomical location they were worn, how they were attached to the participant, the duration of their use, whether participant engagement with the device was required, whether the wearable was linked to an additional device, and the context in which the device was deployed (i.e. remote or in a laboratory/clinic environment).

The quality of the included texts was evaluated by two authors (AK and AA). To determine the quality of reporting in studies involving qualitative research, the COREQ checklist was used [32]. COREQ is a 32-item reporting guideline checklist for interviews and focus groups. Although not an appraisal tool, it may act as a method to judge reporting quality. The authors (AK and RA) determined whether each of the 32 items had been reported or not. The AXIS tool is a critical appraisal tool of quality for cross-sectional studies [33], which contains 20 questions to assess quality. The authors (AK and AA) responded to each question using yes (+), no (−) or don’t know (?) to judge overall quality with the AXIS tool. Quality of reporting is judged subjectively, with no clear criteria as to what constitutes high or low quality.
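As a rough illustration of the tallying described above, the following Python sketch counts reported COREQ items and summarises AXIS responses for a single study. The function names and the example ratings are hypothetical and are not taken from the review's extraction data; the sketch only mirrors the item counts (32 and 20) and the response codes stated in the text.

```python
from collections import Counter

COREQ_ITEMS = 32  # number of items on the COREQ checklist
AXIS_ITEMS = 20   # number of questions in the AXIS tool
AXIS_CODES = {"+": "yes", "-": "no", "?": "unclear"}


def coreq_reported(flags):
    """Count how many of the 32 COREQ items a study reported."""
    if len(flags) != COREQ_ITEMS:
        raise ValueError("expected one True/False flag per COREQ item")
    return sum(bool(flag) for flag in flags)


def axis_summary(responses):
    """Tally AXIS responses coded as '+', '-' or '?'."""
    if len(responses) != AXIS_ITEMS:
        raise ValueError("expected one response per AXIS question")
    if set(responses) - set(AXIS_CODES):
        raise ValueError("responses must be '+', '-' or '?'")
    counts = Counter(responses)
    return {label: counts[code] for code, label in AXIS_CODES.items()}


# Hypothetical ratings for one study (illustrative only).
example_coreq = [True] * 7 + [False] * 25
example_axis = ["+"] * 9 + ["-"] * 6 + ["?"] * 5

print(coreq_reported(example_coreq))
print(axis_summary(example_axis))
```

Because neither tool defines a cut-off for high or low quality, a tally of this kind only describes what was reported and does not assign a quality grade.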

Analysis

A narrative synthesis of the data was completed by reporting the findings related to the study characteristics, wearable devices and systems, usability assessment (quantitative methods), usability assessment (qualitative methods) and study quality. Due to the heterogeneity of the data extracted from the included studies, no formal statistical analysis or meta-analysis was possible. Therefore, the results of this review are listed descriptively.

Results

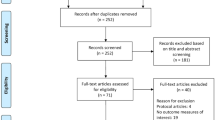

A total of 2054 articles were identified, of which 834 were duplicates. Following exclusion (reasons listed in Fig. 1) a total of 86 studies were selected for full-text screening, of which 37 were included for analysis [34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70].
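The screening counts above can be checked with simple arithmetic. The sketch below is a minimal illustration that assumes a linear flow (every non-duplicate record was screened on title and abstract, and no records were removed for other reasons); the variable names are hypothetical, and Fig. 1 remains the authoritative source for the exclusion counts.

```python
# Counts stated in the text.
identified = 2054         # records identified across databases
duplicates = 834          # duplicate records
full_text_screened = 86   # records taken to full-text screening
included = 37             # studies included in the analysis

# Derived counts, assuming a simple linear screening flow.
screened = identified - duplicates
excluded_at_title_abstract = screened - full_text_screened
excluded_at_full_text = full_text_screened - included

print(f"Screened after de-duplication: {screened}")
print(f"Excluded at title/abstract: {excluded_at_title_abstract}")
print(f"Excluded at full text: {excluded_at_full_text}")
```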

Study characteristics

The characteristics of the studies are displayed in Table 3. Year of publication ranged from 2008 to 2019. The majority of studies were cross-sectional (83.8%; n = 31). Studies reported a variety of aims, which were categorised as: (i) assessing the usability of a wearable device/system (24.3%, n = 9), (ii) presenting or describing the development of a wearable device/system (8.1%, n = 3), (iii) assessing the feasibility and acceptability of a wearable device/system (59.5%, n = 22), and (iv) interventions to alter participants’ behaviour (8.1%, n = 3).

Almost half of the studies were completed in participants with PD (n = 18; 48.6%). COPD accounted for 24.3% of studies (n = 9), CHF was studied in 16.2% (n = 6) while MS was assessed in 10.8% of studies (n = 4). No study assessed PFF. On average, 24 participants (2.8) wore the wearable devices within the included articles (Table 3).
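The cohort percentages reported above all use the 37 included studies as the denominator. A minimal Python check, with hypothetical variable and function names, reproduces them from the stated counts:

```python
TOTAL_STUDIES = 37

# Number of studies per cohort, as stated in the text.
cohort_counts = {"PD": 18, "COPD": 9, "CHF": 6, "MS": 4, "PFF": 0}


def pct(n, total=TOTAL_STUDIES):
    """Percentage of the included studies, rounded to one decimal place."""
    return round(100 * n / total, 1)


# The cohort counts should account for every included study.
assert sum(cohort_counts.values()) == TOTAL_STUDIES

for cohort, n in cohort_counts.items():
    print(f"{cohort}: n = {n} ({pct(n)}%)")
```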

Wearable devices and systems

The majority of studies implemented a single device to measure gait or physical activity (n = 19; 51.4%). Across the 37 studies, 32 different wearable devices or systems were deployed, thus limiting the comparisons between them.

Devices were attached to 11 different anatomical sites on the body (Table 4), of which the wrist was the most common site (43.2%; n = 16) [37,38,39, 41, 43, 49,50,51, 54, 56,57,58, 61, 69,70,71], followed by the waist or lower back (27.0%; n = 10) [36, 38,39,40, 46, 55, 56, 58, 61, 67]. With regards to the method of attachment, eight different methods were used (Table 4), of which straps (45.9%; n = 17) [35,36,37,38,39,40,41, 43, 46, 47, 49, 54, 57,58,59,60,61, 70] and clips (16.2%; n = 6) [45, 52, 56, 62, 64, 65] were the most commonly used. Nine studies failed to report how the wearable was attached to the body (24.3%) [42, 45, 48, 50, 51, 55, 63, 68, 69], while four failed to report where they were attached (10.8%) [42, 63, 65, 68].

The majority of participants were asked to wear their devices remotely (n = 29 studies; 78.4%) [34, 37, 38, 41,42,43,44,45,46,47,48,49,50,51, 53,54,55,56,57, 59,60,61,62,63,64,65,66,67,68,69,70]. On average, participants were asked to use devices for 203.5 days (228.4). Reported durations ranged from 48 h to 12 months (Table 4); however, the majority of studies asked participants to utilise devices for 7 days or longer (n = 27; 73.0%) [37, 41,42,43,44,45,46,47,48,49,50, 53,54,55,56,57, 59, 60, 62,63,64,65,66,67,68,69,70].

Most wearables were used as part of a monitoring system (n = 32; 86.5%) [36,37,38, 40,41,42,43,44,45,46,47,48,49,50, 52, 54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70], often with a requirement to link devices to a smartphone or tablet with an app (n = 23; 62.2%) [38, 42,43,44,45,46, 48,49,50,51, 54, 56, 57, 59,60,61,62,63,64,65,66,67,68,69,70]. Required engagement from participants was poorly reported and was unclear or not reported in 45.9% of studies (n = 17) [34, 35, 37, 38, 40, 47, 49, 51,52,53, 56,57,58, 60, 61, 66, 67]. However, when it was explicitly reported, 52.4% (n = 11) required participants to engage with an exercise or behavioural programme as part of their use of the wearable device [38, 42,43,44, 46, 48, 54, 55, 57, 63, 68].

Usability assessment: quantitative methods

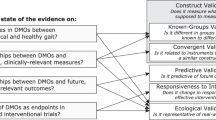

The largest share of studies used only quantitative methods to assess usability (Fig. 2), specifically questionnaires (45.9%; n = 17) [34,35,36,37, 40, 43,44,45, 48, 49, 51,52,53,54, 57, 66, 69]. Eight studies used only qualitative methods such as interviews or focus groups (21.6%) [38, 41, 47, 55, 56, 65, 68, 70], a further nine implemented mixed methods (24.3%) [39, 42, 46, 50, 60, 62, 63, 65, 67], two studies failed to report what methods they used, yet reported usability findings in their results or conclusions (5.4%) [59, 61], while a single study used researcher observations and field notes to document participants’ words and perceived usability (2.7%) [58].

Of the 26 studies that implemented questionnaires (either individually or within mixed methods), 61.5% (n = 16) used an intervention specific questionnaire (Fig. 3) [35, 36, 39, 42,43,44,45, 48,49,50,51,52,53,54, 66, 69]. When reporting the content of their questionnaires (both previously validated and intervention specific), studies often listed the factors that were under consideration within their questionnaire; however, just 10 studies (38.5%) provided the questions that they asked [34, 36, 37, 43, 48, 51, 53, 54, 66, 69]. In total, 28 factors were listed as being examined as part of the questionnaires across all 26 studies, including comfort, learnability, helpfulness and satisfaction (Additional file 1: Table S1).

Thirteen studies (50.0%) failed to list an overall result or score for their questionnaires [34, 40, 42, 43, 49,50,51,52,53, 60, 63, 65, 69]. For those 13 that did report a score, an overall average was assigned to 38.5% of studies (n = 5) [35, 39, 44, 45, 54], while 15.4% listed their results as a percentage score (n = 2) [37, 57]. An average result per question was listed for 23.1% of studies (n = 3) [46, 48, 66], while a further 23.1% of studies listed an average per questionnaire subsection (n = 3) [35, 62, 67]. One study listed the raw data of responses (7.7%) [36].
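When reading the breakdown above, it helps to note that the score-format categories are not mutually exclusive. The Python sketch below uses only the reference numbers cited in the paragraph (the dictionary layout and variable names are hypothetical) to show that the categories hold 14 entries across 13 distinct studies, because reference 35 appears under two formats. This is why the listed percentages, each calculated out of 13, sum to more than 100%.

```python
from collections import Counter

# Reference numbers cited for each score format in the paragraph above.
score_formats = {
    "overall average": [35, 39, 44, 45, 54],
    "percentage score": [37, 57],
    "average per question": [46, 48, 66],
    "average per subsection": [35, 62, 67],
    "raw responses": [36],
}

# Total category entries versus distinct studies.
entries = sum(len(refs) for refs in score_formats.values())
distinct = set().union(*score_formats.values())

# Studies cited under more than one score format.
occurrences = Counter(ref for refs in score_formats.values() for ref in refs)
repeated = sorted(ref for ref, count in occurrences.items() if count > 1)

print(f"Category entries: {entries}")
print(f"Distinct studies: {len(distinct)}")
print(f"Cited under more than one format: {repeated}")
```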

Usability assessment: qualitative method

Of the 17 studies that listed qualitative methods of assessment, six failed to report any method of analysis (35.3%) [38, 39, 58, 60, 63, 67]. A further five appeared to code generally rather than using any recognised formal analysis method (29.4%) [42, 55, 56, 65, 70]. The remaining studies used either a thematic (17.6%; n = 3) [41, 47, 68] or content (17.6%; n = 3) [50, 62, 65] analysis (Fig. 4).

Limited information was provided regarding the qualitative methods. Of the potential 32 items listed on the COREQ checklist, the included studies in this review recorded an average of just 6.7 (5.5) of these items. Interview guides were not provided for studies, however much like the quantitative components, a number of factors were listed across all studies. Specifically, 16 different factors were examined within the qualitative components, including overall experience, difficulties faced, acceptance and comfort (Additional file 1: Table S1).

As a result of the heterogeneity of presented data and the poor methods of reporting, it was not possible to compare usability results either between or within quantitative and qualitative methods, or across cohorts. In response to this, the results as reported within each study were used to determine whether positive or negative statements were provided. A list of the reported results is provided in Table 5.

Usability findings

It was not possible to conduct an assessment of the usability findings.

Study quality

The COREQ quality results have been documented in the “Usability assessment: qualitative method” section. Table 6 outlines the results from the AXIS quality tool. Six studies did not report or had unclear reporting of more than half of the 20 items on the list [38, 39, 59,60,61, 63]. The items that were most poorly reported across studies were: whether the sample size was justified; whether the selection process was likely to select participants that were representative of the target population; whether measures were taken to address and categorise non-responders; and whether the response rate raised concerns about non-response bias.

Discussion

This systematic review aimed to explore the usability of wearable devices to measure gait and physical activity in a range of cohorts with chronic health conditions. However, due to the heterogeneity in how usability is measured, combined with consistently poor reporting in the included studies, this aim could not be achieved. Ultimately this is the result of a poor quality body of literature. Researchers who include usability assessments within their study designs are either not completing these assessments to an acceptable standard, or are failing to adequately report them. Although this points to a wider issue with how usability is defined, either way, at a time when research transparency is critical, and when supplemental files are commonly used, poor basic reporting cannot be considered acceptable. Significant improvements in how usability research is defined and conducted are required to truly understand this concept, and as a result of this review, some basic recommendations for the same can be made.

The use of wearables within research is still relatively new, as evidenced by the years of publication both within this study and as reported elsewhere [72]. It is therefore understandable that just 24% of the studies within this review focused specifically on usability, as much research to date has focused on the technical side of these devices. Indeed, similar research has also noted a lack of usability assessments for wearable devices, suggesting that this is a general issue amongst clinical conditions [73]. For wearables to realise their full potential, however, researchers must now begin to focus more attention on the human factors [74,75,76]. Admittedly, the wide focus of study aims included in this review opened the potential for studies for which usability wasn’t the priority of the assessment. However, it nonetheless demonstrated that researchers are attempting to assess usability, but that the quality of these evaluations is, for the most part, not fit for purpose [74, 76], and in some cases amounts to little more than a ‘tick the box’ exercise. Poorly communicated, incomparable results have been previously highlighted as a key issue in this domain [4]. Indeed, despite the relative modernity of this concept, usability is consistently highlighted as a necessary step in technological developments, and is included in both WHO and ISO guidance [1, 3]. However, it has not been impactful, with some suggesting that as a construct, usability is vague, not fit for purpose and is at a dead-end in terms of its potential for impact [77]. It is argued that the lack of theory underpinning usability has created an umbrella term in which pragmatism takes preference, with a focus on immediate, study specific concerns that will change depending on the study or product in question [77]. For instance, the usability needs of an app-based intervention that incorporates a wearable device for long-term use may be very different from the clinical needs of a device intended for 24 h monitoring.

One of the criticisms of usability is that, as an umbrella term existing in a multi-disciplinary space, it spans too many factors and means different things for different people [4, 77]. Usability has been shown to span a range of concepts including comfort, safety, durability, reliability, aesthetics and engagement [76], all of which were covered in the questionnaires and interviews in this review. Although these aspects are relevant to whether a device is acceptable and will be used, they were rarely defined. Rather than attempt to group factors together, a decision was taken to report these factors in the same manner as the studies themselves did so as to highlight the heterogeneity in terminology. This lack of consistency may be partially explained by the absence of frameworks or theories to design usability assessments [4, 72]. Without clearly defined components or theoretical rationale, reasons for evaluating factors may be unclear, thus making comparisons and conclusions difficult. Usability research should learn from behaviour change research which has designed taxonomies of intervention content in order to improve reporting, transparency and understanding [78]. Indeed a call to arms has been made for a flexible, adaptive framework that bridges the communication gap between multiple professions and domains, and serves as a reporting tool for anyone looking to conduct usability research [4]. In the absence of such a tool however, researchers conducting usability assessments need to ensure that, at the very least, they describe their own rationale and content in full so as to begin to improve communication standards themselves.

The lack of consistency in usability terminology was not the only issue encountered within this review. Many basic aspects of study methodology were often not reported, including where the device was worn, how it was attached and how long it was worn for. Linked to this was a concerning lack of both questionnaires and interview guides that were used in usability assessments. It is simply not possible to conclude whether the results of a study are internally consistent unless the measurement tools used to derive conclusions are also supplied, and a failure to provide this information should not be considered acceptable [79, 80]. The suggested taxonomy or meta-framework of usability will not work as a reporting guideline, if existing guidelines are not even enforced by publishers. Future research, and publishing bodies, should ensure that full protocols and methods of assessment are included as part of the basic reporting requirements for publication, through use of supplemental files if required.

User-centred design processes may appear onerous to researchers who are not familiar with them, or who feel that usability is not the primary focus of their research (e.g. within pilot or feasibility trials). However, in line with the highlighted need for a better understanding of usability as a concept, future usability research needs to also become patient-centred rather than study-centred to fully understand how devices are accepted and used across a variety of contexts and cohorts and to iterate devices in response to this [74]. Various methods to conduct this exist, including think aloud processes, questionnaires, and interviews. Specifically though, mixed methods should be considered gold standard, as the sum of the qualitative and quantitative components provides more insight than either method can alone [81]. Mixed methods were used in less than a third of papers in this review, with a focus instead on quantitative methods alone, a finding that was also reported elsewhere [72]. Linked to this is a reliance on intervention specific questionnaires. Various validated measures of usability for wearable technology exist [82,83,84], yet these were infrequently used. Although there is a place for intervention specific measures for understanding specific study details [85, 86], when used alone they limit the ability to compare findings both across and within patient cohorts. Thus, future usability research should not only implement mixed methods as standard practice, but also combine intervention specific measures with previously validated questionnaires to allow for comparison and to derive both specific and generalisable insights.

This review was limited by its inability to make definitive conclusions as a result of the heterogeneity found in almost every variable considered. Furthermore, the focus on five specific disease cohorts limits the generalisability of results, although given the context specific nature of usability, generalisations would not be recommended regardless of what findings were derived from the review. However, the selected cohorts nonetheless provide examples of a variety of conditions which is a progression beyond the typical focus of healthy adults. Furthermore, this review focused only on wearables that measure gait and physical activity and therefore inferences on other more general devices (e.g. blood pressure, heart rate etc.) cannot be made. Finally, it was sometimes difficult to separate whether the usability findings related specifically to the wearable or to the overall system that was being evaluated. However, given that 83.8% of the wearables in this review were paired with another device, this is perhaps not concerning as participants themselves will experience the wearable as part of this system and so their feedback will always intrinsically link the two together.

Conclusion

Technology is at an unprecedented point in its development as it has the potential to drastically alter both how we evaluate and treat various healthcare conditions. However, this can only be realised if it is widely adopted by all stakeholders, including patients and participants. It is possible to infer that wearable devices for gait and physical activity are both acceptable and usable, given the wide variety of devices, placements, durations of use etc., that were reported in this review. However, usability, and patient-centred design, is a critical component of any intervention. It is therefore not enough to simply infer usability. While a call to arms has been made for reporting guidelines and hierarchical frameworks of usability, until they are developed researchers need to be aware of the current pitfalls of the term, and work where possible to avoid them. As such, in the absence of being able to make specific usability conclusions, this review instead recommends that future research needs to:

(1) Conduct usability assessments as standard, irrespective of the cohort under investigation or the type of study undertaken.

(2) Adhere to basic reporting standards (e.g. COREQ) including the basic details of the study. Full copies of any questionnaires and interview guides should be supplied through supplemental files.

(3) Utilise mixed methods research to gather a more comprehensive understanding of usability than either qualitative or quantitative research alone will provide.

(4) Use previously validated questionnaires alongside any intervention specific measures.

(5) Consider the learnings and insights that can be gained from usability research from multiple domains.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CHF: Congestive heart failure
- COPD: Chronic obstructive pulmonary disease
- MS: Multiple sclerosis
- PD: Parkinson’s disease
- PFF: Proximal femoral fracture

Acknowledgements

Not applicable.

Funding

This project received funding from the Innovative Medicines Initiative 2 Joint Undertaking under grant agreement No 820820. This Joint Undertaking receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA. This presentation reflects the author's view and neither IMI nor the European Union, EFPIA, or any Associated Partners are responsible for any use that may be made of the information contained herein.

Author information

Contributions

AK and AA screened all articles for inclusion; AK and RA completed the data extraction; WJ helped with the creation of the search strategy and analysis; and BC and AK devised the research question. All authors contributed to, read, and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Supplementary Information

Additional file 1: Table S1. List of factors assessed in both the quantitative and qualitative methods of usability assessments.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Keogh, A., Argent, R., Anderson, A. et al. Assessing the usability of wearable devices to measure gait and physical activity in chronic conditions: a systematic review. J NeuroEngineering Rehabil 18, 138 (2021). https://doi.org/10.1186/s12984-021-00931-2
