Abstract

Background

Routine health facility data are an important source of health information in resource-limited settings. Regular quality assessments are necessary to improve the reliability of routine data for different purposes, including estimating facility-based maternal mortality. This study aimed to assess the quality of routine data on deliveries, livebirths and maternal deaths in Kampala City, Uganda.

Methods

We reviewed routine health facility data from the district health information system (DHIS2) for 2016 to 2021. This time period included an upgrade of DHIS2, resulting in two datasets (2016–2019 and 2020–2021) that were managed separately. We analysed data for all facilities that reported at least one delivery in any of the six years, and for a subset of facilities designated to provide emergency obstetric care (EmOC). We adapted the World Health Organization data quality review framework to assess completeness and internal consistency of the three data elements, using 2019 and 2021 as reference years. Primary data were collected to verify reporting accuracy in four purposively selected EmOC facilities. Data were disaggregated by facility level and ownership.

Results

We included 255 facilities from 2016 to 2019 and 247 from 2020 to 2021, of which 30% were EmOC facilities. The overall completeness of data for deliveries and livebirths ranged between 53% and 55%, while it was < 2% for maternal deaths (98% of monthly values were zero). Among EmOC facilities, completeness was higher for deliveries and livebirths, at 80%, and was < 6% for maternal deaths. For the whole sample, the prevalence of outliers for all three data elements was < 2%. Inconsistencies over time were mostly observed for maternal deaths, with the highest difference of 96% occurring in 2021.

Conclusions

Routine data from childbirth facilities in Kampala were generally suboptimal, but the quality was better in EmOC facilities. Given likely underreporting of maternal deaths, further efforts to verify and count all facility-related maternal deaths are essential to accurately estimate facility-based maternal mortality. Data reliability could be enhanced by improving reporting practices in EmOC facilities and streamlining reporting processes in private-for-profit facilities. Further qualitative studies should identify critical points where data are compromised, and data quality assessments should consider service delivery standards.

Background

Reliable health information and data are essential for monitoring health status and health system performance, which is crucial for achieving the Sustainable Development Goals [1]. Routine health facility data are an important source of this information in low- and middle-income countries (LMICs) [2, 3]. The data are collected and reported by different health facilities through a standardized health management information system (HMIS), using paper-based forms or reports and the web-based District Health Information System (DHIS2) [2, 4]. Despite the importance of routine data, concerns about their quality undermine their usefulness in monitoring progress on public health issues, such as maternal mortality reduction. Regular assessments are therefore needed to verify data quality, identify gaps and enhance the reliability of facility-based data [5,6,7].

Maternal health is a critical area that greatly benefits from good quality routine facility data [8]. An important indicator in this context is the institutional maternal mortality ratio (IMMR). The IMMR is defined as the number of maternal deaths per 100,000 deliveries within health facilities [9, 10]. However, IMMR has also been reported as the number of maternal deaths per 100,000 livebirths in health facilities [11, 12]. The IMMR serves as an indicator of healthcare quality and can be a proxy measure of health system performance [2, 13]. In the context of increasing facility deliveries and most maternal deaths occurring around the time of childbirth, reliable estimates to monitor progress can be generated with good quality facility data [3].

Several factors affect routine data quality including a lack of staff training in HMIS coupled with inadequate supportive supervision, lack of necessary tools and other resources, high workload and facility type [14,15,16,17]. In urban areas, routine data quality may be affected by the vast presence of private providers [18], who face challenges of poor regulation, untrained staff and limited resources, leading to inconsistent reporting [19, 20]. Also, the fragmented nature of the health system and lack of collective action make it difficult to implement strategies reported to improve data quality, such as support supervision [17, 18, 21]. Previous studies have shown variability in the completeness and consistency of maternal health indicators [7, 22,23,24], with some showing differences in data quality by facility and indicator type [22, 24]. However, few studies have explored how expected service delivery by level of care influences these metrics. This is particularly important in urban settings, where the predominant private facilities may not be the major providers of certain services such as childbirth. Understanding these dynamics can provide valuable insights for more accurate and contextualised data quality evaluation, and inform necessary adjustments for better health estimates from routine data.

This study adds to the body of knowledge informing use of routine data for monitoring maternal health outcomes and improving performance. In Uganda, challenges with data consistency and low reporting rates in the private sector affect data quality [20, 25]. We assessed the quality of routine data reported by health facilities in Kampala, Uganda’s capital, focusing on three data elements: deliveries, livebirths and maternal deaths. The findings provide insights for improving data completeness and consistency in a context of diverse health service providers and suggest potential adaptations for contextualising existing data quality metrics.

Methods

Study design

We conducted a retrospective review of routine monthly and annual aggregated health facility data over a six-year period (2016–2021). Additionally, we collected primary data from four health facilities for verification of facility reporting.

Study setting

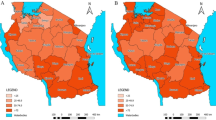

We assessed data from health facilities in Kampala City, located in central Uganda. Kampala has an estimated resident population of 1.7 million [26], with a daytime transient population of up to 4.5 million [27]. Kampala city is divided into five urban divisions (Kawempe, Rubaga, Nakawa, Makindye and Central; Fig. 1), comprising 96 parishes and 1285 villages. Healthcare is provided by a mix of government-owned and private health facilities. A 2016 survey reported about 1,448 health facilities in Kampala, of which 94% were private-for-profit (PFP), 2% were government and 4% were private-not-for-profit (PNFP) [28]. Generally, health services are delivered through a seven-tier system consisting of (from highest to lowest): national referral and specialist hospitals, regional referral hospitals, general hospitals, health centre (HC) IVs, HC IIIs, HC IIs, and the community/village health team levels. While uncomplicated deliveries can be conducted at HC IIs, more maternity services, including emergency obstetric care (EmOC), are provided at HC IIIs and higher levels. HC IIIs provide basic EmOC interventions such as administration of parenteral uterotonics and parenteral anticonvulsants, while HC IVs and hospitals provide comprehensive EmOC, including blood transfusion and caesarean delivery [29].

Study inclusion criteria

We included health facilities that provided childbirth services between 2016 and 2021, defining this as reporting at least one delivery in DHIS2 in the review period. We first assessed general maternal healthcare provision using antenatal care (ANC), deliveries, and maternal admissions data. We excluded duplicates and facilities that did not report any event for the three elements, assuming that they did not provide maternal healthcare and were therefore not relevant to the study. We then excluded facilities that only reported ANC services or maternity admissions as our focus was on childbirth services. To verify accuracy of facility reporting, we collected primary data from four EmOC facilities, purposively selected to ensure variability in delivery volume and sector. These included one referral and two general hospitals, and one HC IV, with two being public, one PNFP and one PFP. The facilities provided childbirth services across the six-year review period, with average annual deliveries ranging from 1,383 to 13,701. As of 2021, the four facilities accounted for about 40% of all deliveries reported in Kampala city.

Data sources

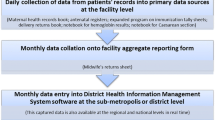

Health management information system data (DHIS2) - health unit outpatient monthly report

In Uganda, routine health data, including maternal health information, are captured through the HMIS. Reporting frequencies vary by data element from weekly, monthly and quarterly to annually. Health facilities are expected to submit their monthly reports by the 7th day of the next month. Data are first captured using paper-based HMIS forms in health facilities and then aggregated numbers are entered into DHIS2. While hospitals and HC IVs upload their data directly into DHIS2, HC IIIs and lower-level facilities submit paper reports to the district for entry into DHIS2. For this study, we assessed data reported through the Health Unit Outpatient Monthly Report (form HMIS 105), which includes maternal and child health (MCH) among other services and conditions. The review period (2016–2021) included an upgrade of DHIS2 in 2020, accompanied by revised HMIS forms and registers, with additional data elements such as number of (maternal) deaths at emergency unit and six-hour postnatal check for mothers under MCH. This resulted in two separate databases for our study (January 2016 to December 2019 and January 2020 to December 2021), as facilities had not been linked across the two DHIS2 versions. We analysed the two datasets separately, except for the analysis of consistency over time in 2021, where facilities were manually matched across the two datasets based on name and location. The annual and monthly aggregated data on deliveries, livebirths, and maternal deaths were extracted from DHIS2 between January and September 2022.

Facility-based data registers and monthly report (primary data collection in four facilities)

To assess facility reporting accuracy, we reviewed the integrated maternity register, maternal death notification and review forms, and outpatient monthly reports at the four selected EmOC facilities. The maternity register captures different information on all admissions to the maternity unit including the woman’s diagnosis on admission, mode of delivery, details on the baby, and the condition of the mother and baby on discharge.

Data analysis

Data quality metrics and data elements

We adapted the World Health Organization (WHO) guidelines for routine data quality review [30]. We assessed each data element across two dimensions: completeness and timeliness, and internal consistency of reported data, with five metrics (Table 1). Completeness of facility reporting is the percentage of expected monthly reports that were submitted, while timeliness indicates the percentage of expected reports submitted by the reporting deadline. Completeness of data referred to the percentage of monthly values that were non-zero. Internal consistency, reflecting the credibility of reported events, involved examining outliers, consistency over time, and reporting accuracy [30]. Outliers were identified as monthly values with an absolute modified z-score above 3.5 for a particular year. Consistency over time was assessed by comparing the number of events reported in a reference year to the average of the three preceding years. Reporting accuracy was assessed in four facilities by reviewing source documents and comparing the counted values with the reported ones.
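As an illustration of the outlier metric described above, the following is a minimal Python sketch of a modified z-score check on one facility's 12 monthly values. It assumes the commonly used form of the modified z-score (0.6745 times the deviation from the median, divided by the median absolute deviation); the function names and the handling of a zero median absolute deviation are assumptions made for this sketch and are not taken from the study's analysis code.

```python
from statistics import median


def modified_z_scores(values):
    """Return the modified z-score of each monthly value in a series."""
    med = median(values)
    # Median absolute deviation (MAD) of the values around their median.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # Assumption for this sketch: with no spread around the median,
        # scores are undefined, so no value is flagged.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def outlier_months(values, threshold=3.5):
    """Indices of monthly values whose absolute modified z-score exceeds the threshold."""
    scores = modified_z_scores(values)
    return [i for i, z in enumerate(scores) if abs(z) > threshold]


# Hypothetical monthly deliveries for one facility; the last month is implausibly high.
monthly = [100, 104, 98, 101, 99, 103, 102, 100, 97, 105, 101, 300]
flagged = outlier_months(monthly)
```

Series dominated by zeros, as is typical for maternal deaths, often have a median absolute deviation of zero, so the fallback chosen for that case can materially affect how many outliers are flagged.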

Three data elements used to measure IMMR were assessed: number of facility deliveries, livebirths, and maternal deaths. Facility deliveries refer to the number of women giving birth in a health facility, regardless of the number of babies born. Livebirths include all live-born babies in the facility; for multiple pregnancies, each live born baby is counted separately. Maternal death is defined as death of a woman while pregnant or within 42 days of terminating the pregnancy, from any cause related to or aggravated by the pregnancy or its management; and not incidental or accidental causes; irrespective of the duration and site of the pregnancy [31].
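To show how the three data elements combine, here is a minimal Python sketch of the IMMR calculation. The function name and the input numbers are hypothetical; the denominator can be either deliveries or livebirths, reflecting the two definitions reported in the literature.

```python
def immr(maternal_deaths, denominator, per=100_000):
    """Institutional maternal mortality ratio per `per` deliveries (or livebirths)."""
    if denominator <= 0:
        raise ValueError("denominator must be a positive count of deliveries or livebirths")
    return maternal_deaths * per / denominator


# Hypothetical facility totals for one year (not study data).
immr_by_deliveries = immr(maternal_deaths=30, denominator=60_000)
immr_by_livebirths = immr(maternal_deaths=30, denominator=59_000)
```

Because deliveries and livebirths differ only slightly in most facilities, the two versions usually give similar values, but errors in any of the three elements propagate directly into the ratio.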

Data management

We generated overall performance for each data quality metric and categorized facility performance based on WHO thresholds [30]. For most metrics, data from two reference years (2019 and 2021) were analysed, while completeness and timeliness of facility reporting were assessed across all six years. Data were analysed using STATA 14 (StataCorp, College Station, Texas, US) and Microsoft Excel (Microsoft, Redmond, US). Descriptive statistics, including frequencies and percentages, were used to summarize the quality dimensions for each data element. We presented results using text, tables, and graphs, with disaggregation by facility level and ownership where possible. We categorized private facilities according to the 2018 national health facility master list [32].

In analysing data completeness, we further compared it with facility reporting completeness to identify potential true zero values and missing data for deliveries. We categorised facilities into four groups based on WHO limits, and explain them in light of Uganda’s health service delivery standards [33].

Categories 1 and 2

Category 1 (< 75% reporting and < 90% non-zero values) and Category 2 (< 75% reporting and > 90% non-zero values): These facilities submitted fewer than nine of the 12 expected monthly reports, with more than one monthly value as zero (Category 1), or only one or no zero values (Category 2).

Category 3

(≥ 75% reporting and < 90% non-zero values): These facilities submitted at least nine of the 12 expected monthly reports, but with one or more zero monthly values. Zero values at HC II/clinic level could be true or missing data, while at HC III and higher levels, they are likely missing data.

Category 4

(≥75% reporting and > 90% non-zero values): These facilities submitted nine or more reports, with 11 or 12 non-zero values, indicating generally good reporting.
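The four categories above can be expressed as a simple classification rule. The Python sketch below assumes 12 expected monthly reports and 12 monthly values per facility per year; the function name is hypothetical. With 12 monthly values, a non-zero share of exactly 90% cannot occur, so treating 90% as the cut-off is equivalent to requiring 11 or 12 non-zero values.

```python
def classify_facility(reports_submitted, nonzero_values, expected=12):
    """Return the category (1 to 4) for one facility-year."""
    reporting_rate = reports_submitted / expected
    nonzero_rate = nonzero_values / expected
    high_reporting = reporting_rate >= 0.75  # at least nine of 12 reports
    high_nonzero = nonzero_rate >= 0.90      # 11 or 12 non-zero monthly values
    if not high_reporting:
        return 2 if high_nonzero else 1
    return 4 if high_nonzero else 3


# Hypothetical facility: 10 reports submitted, 7 non-zero monthly values.
category = classify_facility(reports_submitted=10, nonzero_values=7)
```

Interpreting a Category 3 result still requires the facility level: zeros may be true at HC II/clinic level but are more likely missing data at HC III and above.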

Results

Description of the study sample

Between 2016 and 2019, 1487 health facilities reported data into DHIS2, rising to 1760 facilities between 2020 and 2021. Of the facilities reporting in 2016–2019, 255 (17%) reported at least one delivery, while in 2020–2021, 247 (14%) reported one or more deliveries (Fig. 2).

Table 2 shows the basic characteristics of facilities included in the analysis. In both datasets, over 80% of the facilities were private-for-profit, with the majority classified as HC IIs or clinics.

Data Quality

A summary of the quality of data assessed is provided in Additional File 1.

Completeness of facility reporting

Overall, completeness of facility reporting was 56.1% in 2016, and generally increased to over 80% from 2019 to 2021 (Fig. 3). The average reporting rate across the six years was 74%. There was a general increase in timeliness of reporting between 2016 (41.6%) and 2019 (72.3%), followed by a substantial drop to 28.2% in 2020, with a slight recovery in 2021, which did not reach the level in 2019 (Fig. 3). A general increase in facility reporting was noted across facilities by ownership (Fig. 3a and b). Reporting was highest in PNFP facilities, but over time, the most improvement in reporting completeness occurred among Government-owned facilities and in hospitals.

The percentage of facilities with reporting completeness below 75% was 45.1% (115/255) in 2016, declining to 12.9% (33/255) in 2019, and then slightly increasing to 13.8% (34/247) in 2020 before decreasing to 12.2% (30/247) in 2021 (Fig. 4). Within this category, there was a steady decline in the percentage of facilities with a zero reporting rate over the six years, from 33.7% in 2016 to 7.7% in 2021. The majority of the facilities with < 75% reporting completeness, including zero reporting, were at HC II/clinic level and were private-for-profit facilities. In 2020–2021, no government or PNFP facilities, and no hospitals or HC IVs, had zero reporting. The percentage of facilities with 100% reporting increased between 2016 and 2019, but decreased in 2020–2021.

Completeness of data elements

Overall data completeness (non-zero values) for deliveries was 55.1% in 2019 and 54.1% in 2021, and for livebirths it was 54.7% in 2019 and 53.6% in 2021. This means that about five of the 12 monthly values reported for deliveries and livebirths were zeros. For maternal deaths, completeness in both 2019 and 2021 was less than 2% (Table 3), implying that over 98% of monthly values were zeros. No missing data were found for the three data elements.
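As a worked check of this arithmetic for deliveries, and assuming each facility contributes 12 monthly values per year, the expected number of zero values per facility follows directly from the completeness percentages:

$$
12 \times (1 - 0.551) \approx 5.4 \ \text{(2019)}, \qquad 12 \times (1 - 0.541) \approx 5.5 \ \text{(2021)}
$$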

By sector, Table 3 shows lower prevalence of zero values for deliveries and livebirths in government and PNFP facilities in 2021 compared to 2019. However, completeness of maternal deaths data in government facilities was more than double in 2021 (17.2%) compared to 2019 (7.8%); while completeness was lower in 2021 (2.5%) compared to 2019 (5.7%) in PNFP facilities. Within PFP facilities, compared to 2019, data completeness in 2021 was higher for maternal deaths (0.3% vs. 0.1%), and slightly lower for deliveries (48.8% vs. 51.9%) and livebirths (48.5% vs. 51.5%).

In 2019 and 2021, 63.9–67.6% of facilities had fewer than 90% of monthly values for deliveries and livebirths as non-zero (Fig. 5). This shows that about two-thirds of the facilities had more than 10% zero values in their monthly reports, with only about 20% of all facilities having non-zero values in all 12 monthly reports. On average, facilities reported about five zero values out of 12 in both 2019 (5.4) and 2021 (5.5). For maternal deaths, with the exception of one facility in 2021, less than 95% of monthly values were non-zero in all facilities. This means that in nearly all facilities, over 95% of reported monthly values for maternal deaths were zeros.

Among HC IIIs and higher-level facilities, overall completeness of data for deliveries and livebirths was about 80% in both 2019 and 2021, while for maternal deaths, completeness was less than 6%. In each reference year, 21 to 26 (≈ 30%) facilities had < 90% completeness of data for deliveries and livebirths.

In 2019, facilities with < 90% completeness rate for deliveries included: 10 (6.1%) government, 7 (4.3%) PNFP and 146 (89.6%) PFP by ownership; as well as 9 (5.5%) hospitals, 4 (2.5%) HC IVs, 6 (3.7%) HC IIIs and 141 (86.5%) HC IIs by facility level. In 2021, these facilities included: 4 (2.4%) government, 6 (3.7%) PNFP and 154 (93.9%) PFP by ownership, and 7 (4.3%) hospitals, 6 (3.7%) HC IVs, 13 (7.9%) HC IIIs and 138 (84.1%) HC IIs by facility level.

Exploring zero values vs. missingness for deliveries – comparing facility reporting and data completeness

Overall, about half of facilities (131/255 (51%) in 2019 and 136/247 (55%) in 2021) submitted at least nine of the 12 expected monthly reports (≥ 75% reporting), with fewer than 11 non-zero values (< 90% completeness) (Table 4). Among HC IIs and clinics, 60% of facilities were in category 3 with likely true zero values and missing data (orange cells**), and just over 20% had good reporting (dark green cellsɣ). While most HC III and higher-level facilities had generally good reporting (dark green cellsɣ), 20–30% had likely missing data in their monthly reports (red cells¥).

The red cells marked (*) contain inconsistencies such as, facilities having < 100% reporting with 100% data completeness, and facilities having at least one non-zero value despite zero reporting. Facilities in the light green cells (†) reported likely true zero values.

Internal consistency of reported data

Outliers (accuracy of event reporting)

The overall percentage of monthly values which were outliers (modified z-score > 3.5) was 1.0% for deliveries, 1.2% for livebirths and 0.2% for maternal deaths in 2019. In 2021, the percentage was 1.8% for deliveries and livebirths, and 0.1% for maternal deaths. For deliveries and livebirths, the percentage of facilities with at least one outlier in the reported monthly values respectively was higher in 2021 (13.0% and 12.6%) compared to 2019 (8.6% and 11.0%) (Table 5). For maternal deaths, the number and percentage of facilities in which at least one monthly value was an outlier decreased in 2021 compared to 2019. Among HC IIIs and higher-level facilities, overall prevalence of outliers ranged from 0.3 to 3.6%. The percentage was 1.3% for deliveries, 2.0% for livebirths and 0.8% for maternal deaths in 2019, and it was 3.6% for deliveries, 2.9% for livebirths and 0.3% for maternal deaths in 2021.

For all three data elements, only one monthly value was an outlier in most affected facilities. The percentage of facilities with two or more outliers in both reference years was less than 5%, but this was higher for deliveries and livebirths in 2021 (2.4% for deliveries, 3.5% for livebirths in 2019; and 4.1% for deliveries, 4.5% for livebirths in 2021). Outliers in data for deliveries and livebirths were mostly observed in HC IIs/Clinics and private-for-profit facilities. In 2019, eight (12.9%) facilities had an outlier in the monthly values for deliveries, 11 (17.7%) for livebirths and four (6.5%) for maternal deaths. In 2021, 15 (22.4%) facilities had an outlier in values for deliveries and livebirths each, and only one (1.5%) facility for maternal deaths. The public sector had the lowest number of facilities with outliers for deliveries and livebirths in both 2019 and 2021. For maternal deaths, all facilities that had an outlier in the reported monthly values were hospitals in both 2019 (all private sector) and 2021 (public sector).

Consistency over time

The number of deliveries reported in 2019 by all facilities in the sample was about the same as the average number reported in 2016, 2017 and 2018 (ratio = 1). However, reported livebirths increased by 2% (Table 6). Additionally, the number of maternal deaths reported in 2019 was 41% lower than the average of the three prior years. At least 40% of facilities had a ratio for deliveries and livebirths that differed by 33% or more from the overall ratio for the sample; these facilities reported notably more or fewer deliveries or livebirths in 2019 than in the preceding three years.

Among facilities that provided childbirth services across both datasets (i.e., total number of deliveries in 2016–2019 and in 2020–2021 was not zero), Table 6 shows an increase in the number of deliveries (8%), livebirths (7%) and maternal deaths (96%) reported in 2021 compared to the average number for each indicator for the years 2018, 2019 and 2020. Nearly 50% of facilities reported more or fewer deliveries and livebirths in 2021 compared to the average of the three previous years.

Among facilities at HC III and higher levels, Table 6 shows relative consistency between the number of deliveries and livebirths reported in 2019 and the average number of the three preceding years, while there was a 10% and 8% increase in deliveries and livebirths, respectively, reported in 2021 when compared to the prior three years. Among these facilities, those whose ratio differed by 33% or more from that of the overall sample were most often hospitals (between 4 and 12 across the three data elements assessed), followed by HC IIIs (between 6 and 7).
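The consistency-over-time comparisons in this subsection can be sketched in Python as follows. The function names and the example counts are hypothetical, and expressing the 33% limit as a relative difference between a facility's ratio and the overall ratio is an assumption about how the threshold is applied.

```python
def consistency_ratio(reference_year_total, preceding_totals):
    """Ratio of the reference-year count to the mean of the preceding years' counts."""
    mean_prior = sum(preceding_totals) / len(preceding_totals)
    if mean_prior == 0:
        return None  # ratio is undefined when nothing was reported in the prior years
    return reference_year_total / mean_prior


def diverges_from_overall(facility_ratio, overall_ratio, limit=0.33):
    """True if the facility ratio differs from the overall ratio by at least `limit`."""
    return abs(facility_ratio - overall_ratio) / overall_ratio >= limit


# Hypothetical counts: 1,020 livebirths in the reference year versus three prior years.
ratio = consistency_ratio(1020, [1000, 990, 1010])
flag = diverges_from_overall(facility_ratio=1.5, overall_ratio=ratio)
```

For rare events such as maternal deaths, small absolute changes produce large ratios, so the same threshold flags facilities far more readily than it does for deliveries or livebirths.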

Comparing number of deliveries and livebirths reported in 2019 and 2021

The number of deliveries in a facility is not always equal to the number of livebirths. In some cases, a delivery may result in two or more livebirths while in others, it might result in a stillbirth. Still, some inconsistencies between the two may be out of range. In this study, the ratio of the total number of deliveries to livebirths for all facilities was 1.01 in 2019 and 1.03 in 2021, suggesting that overall, the total numbers for the two elements were nearly the same. At facility level, in both reference years, facilities with a ratio of 0.8 or below [n = 4 (2019); n = 5 (2021)] were all at the HC II/clinic level. These reported more livebirths than deliveries. On the other hand, the nine facilities in 2019 with a ratio of 1.2 and above were at HC II level, and in 2021 (n = 8) these facilities included one hospital, and all were PFP facilities. These facilities reported more deliveries than livebirths.
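The facility-level comparison above amounts to a simple ratio check, sketched below in Python. The function name and return labels are hypothetical; the cut-offs of 0.8 and 1.2 are those used in the text.

```python
def delivery_livebirth_flag(deliveries, livebirths, low=0.8, high=1.2):
    """Classify a facility by its ratio of deliveries to livebirths."""
    if livebirths == 0:
        return "undefined"  # no livebirths reported, so the ratio cannot be computed
    ratio = deliveries / livebirths
    if ratio <= low:
        return "more livebirths than deliveries"
    if ratio >= high:
        return "more deliveries than livebirths"
    return "within range"


# Hypothetical annual totals for one facility.
status = delivery_livebirth_flag(deliveries=130, livebirths=100)
```

A flag from this check does not identify which of the two elements is wrong; it only indicates that the pair should be verified against source registers.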

Accuracy of facility reporting (2021)

The four facilities where reporting was verified included two public facilities (fac02 and fac029) and two private facilities (fac07 and fac026; one private-not-for-profit and the other private-for-profit). With the exception of fac029 (an HC IV), all were hospitals. The data show relative consistency in reporting within the two public facilities, with a verification factor of about 1 (Table 7). On the other hand, inconsistencies in facility reporting were noted in the two private facilities. The verification factor of 10% for deliveries and livebirths and over 10% for maternal deaths in one of the private facilities points to possible underreporting of events.
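For readers unfamiliar with the verification factor, the Python sketch below assumes the usual definition in data quality reviews: the count obtained from source documents divided by the count reported in the information system. Under that assumption, a value above 1 suggests underreporting and a value below 1 suggests overreporting. The function names and the example counts are hypothetical and are not the values in Table 7.

```python
def verification_factor(recounted, reported):
    """Ratio of events recounted from source documents to events reported."""
    if reported == 0:
        return None  # undefined when nothing was reported
    return recounted / reported


def percent_deviation(recounted, reported):
    """Deviation of the recounted value from the reported value, in percent."""
    vf = verification_factor(recounted, reported)
    return None if vf is None else (vf - 1) * 100


# Hypothetical facility: 1,100 deliveries in the register, 1,000 in the monthly report.
vf = verification_factor(recounted=1100, reported=1000)
deviation = percent_deviation(recounted=1100, reported=1000)
```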

Discussion

There is still a need for evidence on routine health facility data quality from low- and middle-income countries. This study assessed data from an African city, with a focus on IMMR data elements. The findings showed that the data were relatively consistent but with sub-optimal completeness despite improvements in facility reporting over time. Additionally, the results showed that data reported by HC III and higher levels were of better quality compared to the entire sample, which predominantly comprised HC II/clinics. We acknowledge the fundamental difference in the nature of the data elements assessed: deliveries and livebirths are relatively routine, while maternal deaths are rare events. We therefore discuss the findings on their data quality separately.

The completeness of data on deliveries and livebirths was below 90%, ranging between 53% and 55%. This means that about 45% of reported monthly values in a year were zeros, possibly representing either true zero values or missing data. The low completeness may be a result of facilities failing to submit all their monthly reports, as found in this study with only 20% of facilities submitting all 12 reports. Additionally, this could be influenced by health service delivery standards. In Uganda, for example, HC IIs/Clinics, which constituted over 70% of our analysis sample, are not expected to routinely conduct deliveries [33], and may report true zeros. This factor may also explain the lower data completeness in private facilities, largely composed of these HC IIs/clinics. However, other factors such as lack of support, tools and motivation for reporting could also contribute to low reporting rates in private facilities.

Our findings showed that data for deliveries and livebirths were generally consistent across the years, with some plausible deviations. Notably, the higher numbers reported in 2021 could be attributed to increased service utilization, a trend shown in Uganda for facility deliveries [10]. These changes may explain outliers, especially in higher-level facilities. However, outliers could also result from reporting and data entry errors that should be verified when reported data fall outside expected ranges. For example, though not directly related, a comparison between the number of deliveries and livebirths in our study suggested possible errors, as livebirths were sometimes 20% higher or lower than deliveries. Compared to previous studies in Uganda [7, 34] and Ghana [35], data completeness in our study was lower, though comparable to findings from other African studies [22, 36]. The observed data quality problems could be attributed to factors such as high workload, lack of reporting tools, and insufficient training and supervision [14, 15, 17].

Regarding maternal deaths, overall completeness was < 2%, indicating that about 98% of reported monthly values were zeros, especially in PFP facilities. This may reflect the rarity of maternal deaths, particularly in lower-level facilities that constituted most PFP facilities in our sample, which are expected to refer women with complications to higher-level facilities. However, reporting of zero values in PFP facilities might also indicate underreporting, warranting further verification. The inconsistent reporting of maternal deaths, particularly in 2021, could be linked to improved reporting following efforts like maternal and perinatal death surveillance and response (MPDSR) strengthening in Kampala and other regions in Uganda [37]. While MPDSR has been shown to improve maternal deaths reporting [38], the increase could also indicate more women are dying in health facilities, as shown in surveillance reports [37].

However, it remains unclear how well the measured completeness (non-zero values) reflects accurate reporting and classification of all facility-related maternal deaths. Previous research has pointed to underreporting in facility documentation of maternal deaths [39, 40]. These findings suggest the need for additional verification measures to ensure maternal deaths from all causes, including abortion-related complications, are accurately reported through routine information systems. Other maternal death surveillance systems such as weekly surveillance and vital statistics registration should be leveraged. The apparent concentration of maternal deaths in fewer facilities could help target initiatives to improve data completeness.

Lastly, timeliness of facility reporting dropped markedly in 2020, possibly due to coronavirus disease (COVID-19)-related movement restrictions, which limited health worker availability in facilities amidst fears of acquiring the infection. Staffing challenges have been reported to negatively affect data quality [16]. Another possible explanation for the drop is the DHIS2 system upgrade in 2020, which health facilities may have been inadequately prepared to implement. This finding implies that, at expected reporting times, available data may be inadequate to support decision making or generation of health statistics. Innovative measures are needed to increase timely submission of reports while addressing known barriers like workload and staffing.

Implications of the study findings

Our results showed that data completeness for deliveries and livebirths was generally low due to a high proportion of facilities not routinely providing childbirth services. This suggests that routine facility data quality may vary based on service delivery standards for a given indicator. Thus, data quality evaluations should consider these dynamics to better understand quality variations and make appropriate adjustments when computing estimates. Strengthening reporting and data processes in EmOC facilities could enhance reliability of routine data for estimating maternal health indicators like IMMR. Additional quality enhancement may be gained from streamlining reporting by PFP facilities, particularly at lower levels. To achieve this, collaboration between private and public governing bodies, such as professional councils and local government, will be essential, along with digital initiatives such as electronic HMIS forms to overcome challenges like HMIS tools shortages.

Furthermore, the distinction between true zero values and missing data was unclear, which has implications for data completeness and related estimates. Future DHIS2 revisions should include system checks to clearly differentiate between the two, with clear instructions for data entry. Our study suggests validating zero values against the completeness of facility reporting, the completeness of indicator data, and service levels. The assumption is that zero values in facilities that routinely provide a given service likely indicate missing data, while they could be true in facilities that do not regularly provide that service. Specific focus is needed for facilities with ≥ 75% facility reporting but < 90% data completeness. Moreover, DHIS2 should be improved to better link reporting facilities during system upgrades to minimise inconsistencies, and to enhance real-time feedback on incomplete or inconsistent data to the facilities generating the data.
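The validation logic described above can be sketched as follows. This is a minimal illustration in Python, not the procedure used in the study: the `FacilityYear` structure, the function names and the category labels are hypothetical, and `routinely_provides_service` stands in for whatever service-level information is available (for example, EmOC designation). The 75% and 90% thresholds are those mentioned in the text, and data completeness is taken as the share of expected monthly values that are non-zero.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class FacilityYear:
    """One facility, one data element, one reporting year (hypothetical structure)."""
    name: str
    reports_received: int                    # monthly reports submitted in the year
    reports_expected: int                    # usually 12; assumed to be > 0
    monthly_values: Sequence[Optional[int]]  # None = blank field, 0 = zero in the system
    routinely_provides_service: bool         # assumption: e.g. a designated EmOC facility


def facility_reporting(f: FacilityYear) -> float:
    """Share of expected monthly reports that were submitted."""
    return f.reports_received / f.reports_expected


def data_completeness(f: FacilityYear) -> float:
    """Share of expected monthly values that are non-zero (blanks count as incomplete)."""
    nonzero = sum(1 for v in f.monthly_values if v)
    return nonzero / f.reports_expected


def classify(f: FacilityYear,
             reporting_min: float = 0.75,
             completeness_min: float = 0.90) -> str:
    """Label a facility-year according to how its zero values should be read."""
    if data_completeness(f) >= completeness_min:
        return "complete"
    if not f.routinely_provides_service:
        # Zeros may be true where the service is not regularly provided.
        return "zeros_plausibly_true"
    if facility_reporting(f) >= reporting_min:
        # Reports arrive, yet many values are zero: likely missing data.
        return "review_likely_missing"
    return "low_facility_reporting"


# Illustrative (made-up) monthly delivery counts.
hospital = FacilityYear("Hospital A", 12, 12,
                        [30, 28, 0, 0, 31, 29, 0, 33, 30, 0, 27, 32], True)
clinic = FacilityYear("Clinic B", 12, 12,
                      [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0], False)

print(classify(hospital))  # review_likely_missing
print(classify(clinic))    # zeros_plausibly_true
```

In this sketch, a facility that submits its reports and routinely provides the service, yet has many zero months, is flagged for follow-up, whereas the same pattern in a facility that rarely provides the service is left as plausibly true. In practice the thresholds and the service-level flag would need to be set per data element.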

We also recommend additional qualitative research to better understand data flow processes and dynamics across different contexts and service delivery levels. This would help identify critical points where data quality losses occur, and guide targeted improvements. Furthermore, our verification of reporting accuracy, limited to a few facilities, pointed to more inconsistencies in private facilities. This warrants further investigation with a larger and representative sample to determine the level, distribution, and key determinants of reporting accuracy. Evidence suggests that maintaining routine data quality in lower-level facilities is challenging [17, 22, 25]; however, few studies have assessed routine data quality in the private sector. In addition to considering expected service delivery when adjusting for reporting completeness in the generation of estimates, our findings highlight the need to correct for likely missing data, which affected about 30% of EmOC facilities in our study.

Strengths and limitations

Using systematic approaches, this study adds to the evidence on routine data quality in low-resource settings. Specifically, the study provides insights from an African city using a sample drawn from a large dataset comprising both public and private facilities. Nonetheless, the study has the following limitations. First, our analysis sample was drawn only from health facilities that reported into DHIS2, which does not include all health facilities in Kampala city. It is likely that omitted facilities are lower-level and/or private-for-profit, which generally provide fewer childbirth services in Kampala [41]. Second, the classification of private facilities was based on the 2018 national master list, and some facilities may since have upgraded to a higher level than the one used in the present study. This may influence estimates by facility level, but we do not expect this to have happened in many facilities. Third, we did not assess external consistency of the data, which compares routine data with population-based surveys, because we lacked access to data from more recent facility or population surveys in Kampala. Nonetheless, comparing coverage estimates based on routine facility data with survey data, and examining related denominators, has been explored [7, 42], highlighting the need for approaches to improve accuracy of estimates from routine data.

Conclusions

Our study provides relevant information on the quality of routine health facility data related to maternal mortality. Routine data reported by facilities providing childbirth services in Kampala on deliveries, livebirths and maternal deaths were generally sub-optimal, but of better quality in EmOC facilities. Given the likely underreporting of maternal deaths, further efforts to verify and count all facility-related maternal deaths are essential to accurately estimate facility-based maternal mortality, alongside sustainable MPDSR systems. Data reliability could be enhanced by improving reporting practices in EmOC facilities and streamlining reporting processes in PFP facilities. Further qualitative studies should identify critical points where data are compromised, and data quality assessments should consider service delivery standards. Additional validation checks are needed in DHIS2 to distinguish true zero values from missing data, with real-time feedback mechanisms for reporting facilities.

Data availability

The dataset supporting the conclusions of this article can be accessed with permission from the Kampala Capital City Authority, Directorate of Public Health.

Abbreviations

- HMIS: Health management information system
- DHIS2: District health information system 2
- PNFP: Private-not-for-profit
- PFP: Private-for-profit
- HC: Health centre
- EmOC: Emergency obstetric care
- MCH: Maternal child health
- ANC: Antenatal care
- PNC: Postnatal care
- MMR: Maternal mortality ratio
- WHO: World Health Organization

References

World Health Organization. Monitoring the building blocks of health systems: a handbook of indicators and their measurement strategies. Geneva; 2010.

Mallick L, Gheda T, Sorrel N, Trinadh D, Wenjuan W. Using Health Management Information Systems Data to Contextualise Survey-Based Estimates of Fertility, Mortality, and Wasting. DHS Occasional Paper No. 12 [Internet]. Rockville, Maryland, USA; 2020 [cited 2023 Mar 7]. https://dhsprogram.com/pubs/pdf/OP12/OP12.pdf

Mgawadere F, Kana T, Van Den Broek N. Measuring maternal mortality: a systematic review of methods used to obtain estimates of the maternal mortality ratio (MMR) in low- and middle-income countries. British Medical Bulletin. Volume 121. Oxford University Press; 2017. pp. 121–34.

Kiberu VM, Matovu JKB, Makumbi F, Kyozira C, Mukooyo E, Wanyenze RK. Strengthening district-based health reporting through the district health management information software system: the Ugandan experience. BMC Med Inform Decis Mak [Internet]. 2014;14(1):40. https://doi.org/10.1186/1472-6947-14-40

Gimbel S, Mwanza M, Nisingizwe MP, Michel C, Hirschhorn L, Hingora A et al. Improving data quality across 3 sub-saharan African countries using the Consolidated Framework for Implementation Research (CFIR): results from the African Health Initiative. BMC Health Serv Res. 2017;17.

Nshimyiryo A, Kirk CM, Sauer SM, Ntawuyirusha E, Muhire A, Sayinzoga F et al. Health management information system (HMIS) data verification: a case study in four districts in Rwanda. PLoS ONE. 2020;15(7).

Agiraembabazi G, Ogwal J, Tashobya C, Kananura RM, Boerma T, Waiswa P. Can routine health facility data be used to monitor subnational coverage of maternal, newborn and child health services in Uganda? BMC Health Serv Res. 2021;21.

Every Woman Every Child. Indicator and monitoring framework for the global strategy for women’s, children’s and adolescents’ health (2016–2030) [Internet]. 2016 [cited 2024 Aug 9]. https://cdn.who.int/media/docs/default-source/mca-documents/global-strategy/gs-indicator-and-monitoring-framework.pdf?sfvrsn=939e8b94_6&Status=Master

World Health Organization. Global reference list of 100 core health indicators (plus health-related SDGs) [Internet]. Geneva; 2018 [cited 2024 Aug 9]. https://iris.who.int/bitstream/handle/10665/259951/WHO-HIS-IER-GPM-2018.1-eng.pdf?sequence=1

Ministry of Health Uganda. National annual maternal and perinatal death surveillance and response (MPDSR) report FY 2022/23. Sep: Kampala; 2013.

Ministry of Health Uganda. Indicators for monitoring health indices and the health sector strategic and investment plan (HSSIP) in Uganda. The user’s indicator manual. Kampala; 2011.

Akaba GO, Nnodu OE, Ryan N, Peprah E, Agida TE, Anumba DOC et al. Applying the WHO ICD-MM classification system to maternal deaths in a tertiary hospital in Nigeria: a retrospective analysis from 2014–2018. PLoS ONE. 2021;16(1).

World Health Organization. Global Reference List of 100 core health indicators. Geneva; 2015.

Lee J, Lynch CA, Hashiguchi LO, Snow RW, Herz ND, Webster J, et al. Interventions to improve district-level routine health data in low-income and middle-income countries: a systematic review. BMJ Global Health. Volume 6. BMJ Publishing Group; 2021.

Nguefack-Tsague G, Tamfon BB, Ngnie-Teta I, Ngoufack MN, Keugoung B, Bataliack SM et al. Factors associated with the performance of routine health information system in Yaoundé-Cameroon: a cross-sectional survey. BMC Med Inform Decis Mak. 2020;20(1).

Tilahun B, Derseh L, Atinafu A, Mamuye A, Mariam TH, Mohammed M et al. Level and contributing factors of health data quality and information use in two districts in Northwest Ethiopia: social-ecological perspective. BMC Med Inform Decis Mak. 2021;21(1).

Shama AT, Roba HS, Abaerei AA, Gebremeskel TG, Baraki N. Assessment of quality of routine health information system data and associated factors among departments in public health facilities of Harari region, Ethiopia. BMC Med Inform Decis Mak. 2021;21(1).

Elsey H, Agyepong I, Huque R, Quayyem Z, Baral S, Ebenso B et al. Rethinking health systems in the context of urbanisation: challenges from four rapidly urbanising low-income and middle-income countries. BMJ Glob Health. 2019;4(3).

McNab S, Freedman L. Maternal newborn health and the urban poor: a global scoping. New York; 2016.

Ministry of Health Uganda. Ministry of Health Strategic Plan 2020/21-2024/25. Jan: Kampala; 2020.

Vearey J, Luginaah I, Magitta NF, Shilla DJ, Oni T. Urban health in Africa: a critical global public health priority. BMC Public Health. 2019;19(1).

Bhattacharya AA, Umar N, Audu A, Allen E, Schellenberg JRM, Marchant T. Quality of routine facility data for monitoring priority maternal and newborn indicators in DHIS2: a case study from Gombe State, Nigeria. PLoS ONE. 2019;14(1).

Endriyas M, Alano A, Mekonnen E, Ayele S, Kelaye T, Shiferaw M et al. Understanding performance data: health management information system data accuracy in Southern Nations Nationalities and People’s Region, Ethiopia. BMC Health Serv Res [Internet]. 2019;19(1):175. https://doi.org/10.1186/s12913-019-3991-7

Ouedraogo M, Kurji J, Abebe L, Labonté R, Morankar S, Bedru KH et al. A quality assessment of Health Management Information System (HMIS) data for maternal and child health in Jimma Zone, Ethiopia. PLoS One [Internet]. 2019;14(3):e0213600. https://doi.org/10.1371/journal.pone.0213600

Ministry of Health Uganda. Service Availability and Readiness Assessment & Data Quality Review (SARA & DQR 2018) For Uganda. Kampala; 2022 May.

Uganda Bureau of Statistics (UBOS). The National Population and Housing Census 2014 – National Analytical Report. Kampala; 2017.

Kampala Capital City Authority (KCCA). Statistical abstract for Kampala City. Kampala; 2019.

Cardno Emerging Markets USA Ltd. Health Facility Census in the Kampala Capital City Authority (KCCA). Divisions. Census Report [Internet]. 2017 [cited 2024 Aug 10]. https://pdf.usaid.gov/pdf_docs/PA00THFS.pdf

World Health Organization. Monitoring emergency obstetric care: a handbook. Geneva; 2009.

World Health Organization. Data quality review: a toolkit for facility data quality assessment. Module 2. Desk review of data quality. Geneva; 2017.

World Health Organization. The WHO Application of ICD-10 to deaths during pregnancy, childbirth and the puerperium. ICD-MM. Geneva; 2012.

Ministry of Health Uganda. National health facility master list 2018. A complete list of all health facilities in Uganda [Internet]. 2018 [cited 2023 Mar 6]. https://health.go.ug/sites/default/files/Signed%20n%20final%20mfl.pdf

Ministry of Health Uganda. Comprehensive health service standards manual. Jul: Kampala; 2021.

Maïga A, Jiwani SS, Mutua MK, Porth TA, Taylor CM, Asiki G, et al. Generating statistics from health facility data: the state of routine health information systems in Eastern and Southern Africa. BMJ Global Health. Volume 4. BMJ Publishing Group; 2019.

Lasim OU, Ansah EW, Apaak D. Maternal and child health data quality in health care facilities at the Cape Coast Metropolis, Ghana. BMC Health Serv Res [Internet]. 2022;22(1):1102. https://doi.org/10.1186/s12913-022-08449-6

Adane A, Adege TM, Ahmed MM, Anteneh HA, Ayalew ES, Berhanu D, et al. Exploring data quality and use of the routine health information system in Ethiopia: a mixed-methods study. BMJ Open. 2021;11(12):e050356.

Ministry of Health Uganda. The national FY 2021/2022 annual maternal and perinatal death surveillance and response (MPDSR) report. Sep: Kampala; 2022.

Kinney MV, Walugembe DR, Wanduru P, Waiswa P, George A. Maternal and perinatal death surveillance and response in low- and middle-income countries: a scoping review of implementation factors. Health Policy and Planning. Volume 36. Oxford University Press; 2021. pp. 955–73.

Maruf F, Tappis H, Stekelenburg J, van den Akker T. Quality of maternal death documentation in Afghanistan: a Retrospective Health Facility Record Review. Front Glob Womens Health. 2021;2.

Abouchadi S, Zhang WH, De Brouwere V. Underreporting of deaths in the maternal deaths surveillance system in one region of Morocco. PLoS ONE. 2018;13(1).

Kampala Capital City Authority (KCCA). Directorate of Public Health and Environment: Annual Report, Financial Year 2021/22. Kampala; 2022 Jul.

Simmons EM, Singh K, Mpiima J, Kumar M, Weiss W. Assessing coverage of essential maternal and child health interventions using health-facility data in Uganda. Popul Health Metr. 2020;18(1).

Acknowledgements

We thank Dr Peter Macharia for helping to produce the map in Fig. 1.

Funding

CB was funded by the Belgian Directorate for Development Co-operation (DGD) PhD sandwich programme. LB was funded in part by the Research Foundation – Flanders (Fonds Wetenschappelijk Onderzoek) as part of her Senior Postdoctoral Fellowship (award number 1234820N).

Author information

Contributions

CB conceptualised the study, analysed the data and produced initial drafts of the paper. ABT, JVO, LB and PW reviewed the design of the study. LB and AS participated in the analysis and interpretation of results. EAS extracted the data from DHIS2. RMK contributed to the interpretation of results. All authors reviewed and edited the initial drafts, and also approved the final version submitted.

Ethics declarations

Ethics approval and consent to participate

Ethical approval for this study was obtained from the ethics committees of Makerere University School of Public Health (SPH-2021-169), the Uganda National Council for Science and Technology (HS1952ES), the Institute of Tropical Medicine Antwerp (1529/21) and University of Antwerp Hospital (2021-1743). A waiver of informed consent to use secondary data was also obtained from the Makerere University School of Public Health (SPH-2021-169). Consent to participate in this study was not applicable. All methods were carried out in accordance with relevant guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Birabwa, C., Banke-Thomas, A., Semaan, A. et al. The quality of routine data for measuring facility-based maternal mortality in public and private health facilities in Kampala City, Uganda. Popul Health Metrics 22, 22 (2024). https://doi.org/10.1186/s12963-024-00343-z

DOI: https://doi.org/10.1186/s12963-024-00343-z