Abstract

Background

Translating findings from systematic reviews assessing associations between environmental exposures and reproductive and children’s health into policy recommendations requires valid and transparent evidence grading.

Methods

We aimed to evaluate systems for grading bodies of evidence used in systematic reviews of environmental exposures and reproductive/ children’s health outcomes, by conducting a methodological survey of air pollution research, comprising a comprehensive search for and assessment of all relevant systematic reviews. To evaluate the frameworks used for rating the internal validity of primary studies and for grading bodies of evidence (multiple studies), we considered whether and how specific criteria or domains were operationalized to address reproductive/children’s environmental health, e.g., whether the timing of exposure assessment was evaluated with regard to vulnerable developmental stages.

Results

Eighteen out of 177 (9.8%) systematic reviews used formal systems for rating the body of evidence; 15 distinct internal validity assessment tools for primary studies, and nine different grading systems for bodies of evidence were used, with multiple modifications applied to the cited approaches. The Newcastle Ottawa Scale (NOS) and the Grading of Recommendations, Assessment, Development, and Evaluations (GRADE) framework, neither developed specifically for this field, were the most commonly used approaches for rating individual studies and bodies of evidence, respectively. Overall, the identified approaches were highly heterogeneous in both their comprehensiveness and their applicability to reproductive/children’s environmental health research.

Conclusion

Establishing the wider use of more appropriate evidence grading methods is instrumental both for strengthening systematic review methodologies, and for the effective development and implementation of environmental public health policies, particularly for protecting pregnant persons and children.

Introduction

A range of detrimental impacts of air pollution exposure on reproductive and children’s health have been established [1,2,3,4,5]. However, air quality regulatory efforts, and especially those accounting for the specific vulnerabilities inherent to reproductive and children’s health, have yet to be effectively implemented on a larger scale [6,7,8]. Formally assessing the quality of the body of evidence, meaning the collection of available individual studies, has been identified as central to translating research into policy [9]. In fact, grading the quality of the body of evidence has become an integral part of the systematic review process [10], reflected in recent additions to the revised Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) 2020 guidelines, recommending authors explicitly report their approach to the process of rating the body of evidence [11]. Evidence grading approaches were developed predominantly for clinical questions, including well-established guidelines such as the Grading of Recommendations, Assessment, Development, and Evaluations (GRADE) criteria [10, 12].

However, the field of reproductive and children’s environmental health, including research on air pollutant exposure, is affected by characteristics that may complicate the critical evaluation of primary studies and bodies of evidence:

i) The predominantly observational nature of available studies means that, due to inherent differences in study design compared to experimental studies, a different approach is required for identifying and addressing potential confounding and other biases [13,14,15]. Specific aspects of epidemiologic studies of air pollutant exposure and reproductive/children’s health outcomes that may result in confounding (e.g., frequent use of spatial rather than temporal comparators, lack of covariate information from birth records or other sources) have been described [16, 17]. However, both the default ranking of experimental studies above observational studies and the practice of rating primary studies based on how well they emulate a “hypothetical target RCT” have been criticized [18,19,20,21,22,23].

ii) Highly heterogeneous and dynamic population characteristics that define the field of reproductive and children’s health (e.g., vulnerabilities related to developmental stages, rapid changes in health-related behaviors) require a lifestage-specific approach. Profound physiological and developmental differences between children and adults impact the toxicity and adverse biological implications of chemical exposures, based on variations in metabolic rates, (de-)toxification processes, and vulnerability during specific developmental windows [17, 24,25,26].

iii) Further aspects specific to reproductive and children’s health, including generally longer expected lifespans and long latency periods, life course perspectives (e.g., developmental origins of disease), trans-generational effects, among others, necessitate a tailored approach [24].

iv) Exposure assessment is generally more challenging in observational than in experimental studies, because exposures are not controlled by investigators, and particularly so in environmental health studies [14, 27]. Exposure assessments regarding air pollution are characterized by specific challenges (e.g., differences in the availability of air monitoring data, seasonal variations in exposure patterns) [17, 27], potentially increasing misclassification, also with regard to relevant developmental periods, such as gestational trimesters. There are additional considerations with regard to reproductive and children’s health: due to differences in body size and behaviors, among others, exposure patterns are different for developing fetuses, children, and pregnant persons vs. non-pregnant adults (e.g., relative exposure doses, exposure routes and settings, timing and duration of exposure in relation to windows of susceptibility) [24, 27,28,29]. For example, children have different breathing zones (due to shorter stature) and oxygen consumption patterns, affecting their individual exposure to air pollution [25].

v) The co-exposure to mixes of pollutants reflects the real-world risks faced by the global population, which may include additive/synergistic effects between chemicals. While modeling impacts of multiple pollutants jointly could provide more valid results, there are challenges such as collinearity and high dimensionality, among others [17, 27, 30,31,32].

vi) Further, the context of decision-making in environmental health research differs: unlike in the clinical setting, environmental exposures are often assessed for risks only after exposure (often widespread and long-term) has already occurred in the population [28]. Also, environmental health studies are focused on protecting, rather than improving, health [28]. Therefore, while clinical research is primarily concerned with demonstrating a desired treatment effect, reproductive/children’s environmental health should, arguably, be concerned with demonstrating the absence of adverse effects: for the former, the burden of proof lies in demonstrating an association or effect, while for the latter, it would lie in demonstrating no association or effect, in essence, safety [33,34,35,36]. Statistical methods for testing for the absence of effects (e.g., equivalence tests) are available and, in addition to providing evidence regarding the equivalence of different exposure scenarios, may also help to reduce publication bias [37,38,39].
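
To illustrate the equivalence tests mentioned in item vi), the following is a minimal sketch of a two one-sided tests (TOST) procedure using a normal approximation. It is not taken from the cited references: the function name `tost_z`, the equivalence margins, and the example estimates are hypothetical, and in practice the margins would need to be justified on substantive grounds.

```python
from statistics import NormalDist


def tost_z(estimate, std_error, lower_margin, upper_margin, alpha=0.05):
    """Two one-sided z-tests (TOST) for equivalence.

    H0: true effect <= lower_margin OR true effect >= upper_margin
    H1: lower_margin < true effect < upper_margin (practical equivalence)

    Returns the TOST p-value and whether equivalence is declared at `alpha`.
    All inputs are on the same scale (e.g., a log risk ratio; assumed here).
    """
    if std_error <= 0:
        raise ValueError("std_error must be positive")
    if lower_margin >= upper_margin:
        raise ValueError("lower_margin must be below upper_margin")

    norm = NormalDist()  # standard normal distribution

    # One-sided test 1: is the effect larger than the lower margin?
    z_lower = (estimate - lower_margin) / std_error
    p_lower = 1.0 - norm.cdf(z_lower)

    # One-sided test 2: is the effect smaller than the upper margin?
    z_upper = (estimate - upper_margin) / std_error
    p_upper = norm.cdf(z_upper)

    # Equivalence requires rejecting both one-sided null hypotheses,
    # so the overall p-value is the larger of the two.
    p_value = max(p_lower, p_upper)
    return p_value, p_value < alpha


# Hypothetical example: a precise estimate close to zero, margins of +/-0.10
p_value, equivalent = tost_z(0.01, 0.02, -0.10, 0.10)
print(f"p = {p_value:.2g}, equivalence declared: {equivalent}")
```

In this sketch, a conventional non-significant result is not treated as evidence of safety; equivalence is declared only when the estimate is precise enough to exclude effects outside the pre-specified margins.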

Methodological weaknesses specific to assessing evidence related to environmental exposures [40, 41], specifically ambient air pollution [42], and pregnancy outcomes [43, 44], were previously identified among systematic reviews, particularly related to assessing internal validity and a lack of transparent evidence grading methodologies. Because systematic review methodologies were primarily developed for clinical trials, their suitability for evaluating evidence from observational/environmental health research, and how these methods can best be adapted, have been debated [14]. Further, certain aspects of existing approaches, including the aforementioned default ranking of evidence from randomized controlled trials (RCT) above that from observational studies, have previously been criticized in the context of environmental health [18, 22, 23].

In this methodological survey we aimed to evaluate frameworks for critically assessing bodies of evidence, applied in systematic reviews of epidemiological studies of environmental exposures and adverse reproductive/ child health outcomes, using research on air pollution exposure as a case-study. Air pollutant exposure was chosen based on the comparability of approaches within this research area, and the large body of available systematic reviews [45]. Based on this, we exemplify and discuss challenges and recommendations for evidence grading in the context of reproductive/ children’s environmental health.

Methods

As the unit of analysis of this work was systematic reviews, we adhered to the Preferred Reporting Items for Overviews of Reviews (PRIOR) guidelines (Supplemental Material S1, PRIOR checklist) [46], and further relevant guidance [47,48,49,50,51]. Two reviewers (SM and AA) independently completed all steps of the systematic process, including screening for eligible references, extracting data, and assessing risk of bias. Discrepancies were resolved through discussion or by consulting a third reviewer (OVE).

Eligibility criteria and review selection

The inclusion criteria are presented and explained in Table 1.

As highlighted in Table 1, we identified systematic reviews explicitly employing published criteria or guidelines for assessing or rating the quality of the body of evidence, among the collection of systematic reviews of studies of air pollutant exposure and adverse reproductive and child health outcomes.

Titles, abstracts, and full-texts of the identified publications were consecutively screened, and included in the subsequent screening step, unless there was explicit indication that the publication did not meet our inclusion criteria.

Data sources and search strategy

PubMed and Epistemonikos have been identified as the database combination with the highest inclusion rate for systematic reviews [54]; we additionally searched Embase. Rather than relying on built-in filters alone, we developed a hedge combining searches of text words, filters, and publication types, based on current recommendations for achieving maximum sensitivity [54,55,56,57].

Controlled vocabulary terms and keywords were employed to combine the concepts “air pollution”, “childhood”, and “systematic review” (Supplemental Material S2: Full electronic search strategies). We used the PubMed Reminer tool [58], and the SearchRefiner tool from the Systematic Review Accelerator website [59], to develop and assess the sensitivity and specificity of our search strategy.

On December 9, 2020, we conducted the initial systematic search of the electronic databases, without language or publication status restrictions. All searches of electronic databases were performed by SM and updated until April 07, 2023.

In addition, supplementary searches were performed using the search engines Google and Google Scholar. Search engines were used only as a supplement, because they offer limited insight into how search results are produced [60]. Further, we manually performed backward and forward citation searching.

Data extraction

Data on systematic review characteristics were extracted using a standardized data extraction form. For extracting information pertaining to the evidence grading systems, descriptions reported in the original articles, as well as cited guidance documents and further related references (i.e., organization websites, etc.) were consulted. Also, we considered the versions of the approaches used within the identified systematic reviews, although in some cases, newer versions exist. If necessary, we attempted to contact systematic review authors to identify or clarify missing or unclear information.

Risk of bias assessment (ROBIS)

Risk of bias in systematic reviews was evaluated using the ROBIS tool, based on 1) the appropriateness of study eligibility criteria, 2) methods for identifying and selecting studies, 3) data extraction and quality appraisal methods, 4) appropriateness of data synthesis, and 5) overall risk of bias [61].

Qualitative analysis/synthesis

We calculated the proportion of systematic reviews explicitly employing formal evidence grading frameworks. The main characteristics of these reviews, including both the main objectives and findings, as well as the systematic review methods, were synthesized in descriptive and tabular format. Methodological characteristics, specifically the guidelines and approaches used for grading bodies of evidence were reviewed. Notably, because approaches used for assessing a body of evidence are partially based on preceding assessments of the quality or risk of bias among primary/ individual studies, both of these types of assessments in the systematic review processes were distinctly considered herein.

With regard to individual studies, quality and risk of bias (or internal validity) are related but distinct concepts, both concerned with the critical assessment of individual studies. Risk of bias refers to aspects of study design, conduct, or analysis that could give rise to systematic error in study results, and can be used synonymously with internal validity, which is the extent to which bias has been prevented through methodological aspects [62]. Study quality, on the other hand, may refer to (a) reporting quality; (b) internal validity or risk of bias; and (c) external validity or directness and applicability, among others [15]. However, while risk of bias and study quality are distinct concepts, they are often interchanged or merged in research practice [63]. For this reason, in this methodological survey, these approaches were considered jointly.

The quality of/ certainty in the body of evidence, on the other hand, is assessed based on strengths and limitations of a collection of individual studies, and incorporates results from preceding risk of bias assessments, as well as aspects of directness/ applicability of the identified primary studies with regard to the review question, heterogeneity/ inconsistency across studies, the magnitude and precision of effect estimates, potential publication biases, and further criteria [12, 15]. Sometimes this step is followed by subsequent ratings regarding the strength or levels of evidence, or hazard identification, across study types, outcomes, or species [15, 64].

Certain criteria are applied differently when assessing the internal validity of individual studies versus the body of evidence. For example, while an identified risk of confounding will result in a lower internal validity score for an individual study, a body of evidence may receive a higher quality rating, if all plausible confounding “would reduce a demonstrated effect, or suggest a spurious effect when results show no effect”, as noted by multiple guidelines [64,65,66].

We considered characteristics of frameworks for rating risk of bias in individual studies, and for grading the body of evidence, specifically as they relate to reproductive/children’s environmental health, as discussed earlier (see Table 2).

Results

Review selection process

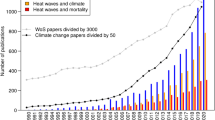

The selection process of systematic reviews is shown in Fig. 1. After screening 10,241 titles, 1,030 abstracts and 423 full texts, 177 systematic reviews were found to assess the association between exposure to air pollution and adverse reproductive/ children’s health outcomes. The most common reasons for exclusions of full texts were that the reviews considered adult or general populations (n = 62), and that reviews were non-systematic (n = 61). Out of the 177 eligible systematic reviews, 18 articles (9.8%) explicitly reported using evidence grading systems [5, 68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84]. The proportion of systematic reviews using evidence grading systems appeared to increase over time (see Fig. 2).

Systematic review characteristics

General characteristics of the 18 systematic reviews that used formal evidence grading systems are summarized in Table 3. These reviews were published between 2015 and 2023. The outcomes assessed were: spontaneous abortion [80], gestational diabetes mellitus [83], fetal growth [72], preterm birth [73, 79], birth weight [76], term birth weight [77], congenital anomalies [74], upper respiratory tract infections [81], bronchiolitis in infants [71], sleep-disordered breathing [70], blood pressure in children and adolescents [84], neuropsychological development [68, 78], autism spectrum disorder [5, 69], academic performance [75], or all child health outcomes [82].

None of the included reviews specified inclusion criteria related to the method of exposure assessment (e.g., modeling vs. monitoring approaches) (see Table 3). Two reviews considered both intervention and observational studies [70, 81], while the others included only observational studies. Between 7 and 84 studies were included by the individual reviews (Table 3) [79, 80]. One review included only studies using air monitoring stations’ data [74], while others reported a variety of exposure assessment methods and data sources. Individual-level measures of exposure (e.g., adducts in cord blood, backpack for individual monitoring) were reported for few studies included in the systematic reviews [68, 72, 76, 77].

The majority of systematic reviews included fixed or random effects meta-analyses, while five refrained from statistical pooling and synthesized their findings in narrative form [68,69,70,71, 75]. All meta-analyses included adjusted effect estimates; several reported only considering single-pollutant models.

ROBIS assessment results

Four of the included systematic reviews were rated at a low risk of bias [5, 71, 75, 76], four at a high risk of bias [68,69,70, 74], and the remaining ten at an unclear risk of bias. The most critical concerns related to methods used to search for primary studies, synthesis approaches, and insufficient reporting (Fig. 3). Between one and eight databases were searched by the various review teams [69, 75]. Six groups made no additional efforts to identify published or unpublished literature [68, 69, 71, 79, 81, 82], while eight additionally screened the reference lists of included studies and/or those of relevant reviews [70, 72, 74,75,76, 80, 83, 84]. Some reviews additionally searched relevant reports [73] or used web search engines [78], and one further searched grey literature databases and relevant websites, performed forward citation searches, and contacted experts in the field [5] (Supplemental Materials S3 and S4: Details of ROBIS assessment). Methods used for primary study appraisal, synthesis, and evidence grading are described further below.

Summary of risk of bias assessment. Designed using the robvis tool [85]

Methodological characteristics: Methods for assessing risk of bias/quality in primary studies

The 18 included systematic reviews used 15 distinct approaches for assessing risk of bias/quality/internal validity among primary studies (Tables 3 and 4). The Newcastle–Ottawa Scale (NOS) was the most commonly cited tool (n = 9 reviews) [86], with an additional four reviews using modified NOS versions, followed by the Office of Health Assessment and Translation (OHAT) approach (n = 4 reviews) [66]. However, several reviews reported using more than one tool, in order to assess quality and risk of bias separately, as well as to address various study designs (e.g., cohort vs. cross-sectional studies) included within reviews [74, 78, 79, 81, 84]. Further, six reviews modified/tailored the selected tools themselves [69, 71,72,73, 75, 81], while four reviews used tools as modified by preceding systematic reviews [74, 79, 81, 84].

The tools originated from a wide range of research fields (see Table 4), and only the Navigation Guide and OHAT approaches, used by six reviews, were developed specifically for environmental health research [64, 66]. The Risk of Bias in Non-randomized Studies of Exposure (ROBINS-E) tool was developed for non-randomized studies of exposures and was used in one review in its preliminary version [94]. Two reviews newly developed their own criteria for assessing primary study quality/risk of bias [5, 68]. Notably, Lam et al. further developed the Navigation Guide risk of bias tool with expert input, as part of their application of the Navigation Guide methodology. This included developing an approach for rating exposure assessment methods for different air pollutants/chemical classes [5]. This approach was subsequently adopted by other identified reviews [76, 81].

Exposure assessment methods in general were evaluated in all but two out of fifteen approaches [92, 93], although we considered only four tools applicable to environmental/ air pollution exposures in this regard [64, 66, 87, 94]. Co-exposures were explicitly considered by five tools [15, 62, 87, 91, 94], while all but one tool assessed confounder control [93]. However, review authors modified existing tools in some cases, for example adding considerations of sample size, selection bias, exposure assessment method, and confounder adjustment [69, 71]. Another review group used subgroup analyses to explore the effect of different exposure assessment methods [77].

Methodological characteristics: Evidence assessment methods

As stated above, 18 out of 177 systematic reviews used formal systems for assessing the quality/certainty of the body of evidence, and nine different approaches were used by these 18 reviews (see Table 5), including published modifications of existing tools. The GRADE system was used most often (n = 8) [12], followed by modified versions of GRADE, namely the Navigation Guide (n = 4) [64], an approach developed by the World Health Organization (WHO) for air pollution research (n = 1) [98], and a modified version for environmental health research (n = 1) [99]. Other approaches were adopted from OHAT (n = 3) [15], and the International Agency for Research on Cancer’s (IARC) preamble for monographs (2006) [100], among others (see Table 5) [95,96,97, 101]. Modifications to and deviations from the frameworks were noted [68, 69, 71, 81].

The identified approaches for evidence grading were originally developed either for clinical practice [95,96,97, 101], or for research on environmental exposures [15, 64, 66, 98, 99, 104], including air pollution [98], and were characterized by highly heterogeneous methodologies. The original GRADE system assigns an initial rating based on study type, where RCTs begin at a “high” quality rating, while observational studies begin as “low”, before considering various criteria (e.g., consistency between studies), to reach a final rating of the body of evidence [106, 107]. The GRADE system as modified by the WHO (for air pollution studies) and the Navigation Guide (developed for environmental health studies, partly based on the U.S. Environmental Protection Agency’s (EPA) criteria for reproductive and developmental toxicity [28]) differ from the original version in that observational studies are initially rated as “moderate” quality, rather than “low”, among other distinguishing features (e.g., additionally calculating 80% prediction intervals to assess heterogeneity) [64, 98, 108].
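
As a rough illustration of how the starting point affects the final rating, the sketch below encodes only the initial ratings described above and then applies a net number of downgrades and upgrades. The framework keys, the function name, and the uniform four-level scale are simplifying assumptions made for this example; the actual frameworks define their own rating scales and domain-specific criteria for moving up or down.

```python
# Ordered rating scale, lowest to highest (four GRADE-style levels,
# assumed here to apply to all frameworks for simplicity).
LEVELS = ["very low", "low", "moderate", "high"]

# Initial ratings by study type, as described in the text.
# Keys are illustrative labels, not official identifiers.
INITIAL_RATING = {
    "grade": {"rct": "high", "observational": "low"},
    "navigation_guide": {"observational": "moderate"},
    "who_air_pollution": {"observational": "moderate"},
}


def rate_body_of_evidence(framework, study_type, downgrades=0, upgrades=0):
    """Return a final rating from the initial rating plus net adjustments.

    `downgrades` and `upgrades` are the number of levels moved down or up
    after judging criteria such as consistency between studies; how those
    judgments are reached is outside the scope of this sketch.
    """
    if downgrades < 0 or upgrades < 0:
        raise ValueError("downgrades and upgrades must be non-negative")
    start = INITIAL_RATING[framework][study_type]
    index = LEVELS.index(start) - downgrades + upgrades
    # Keep the result within the bounds of the scale.
    index = max(0, min(index, len(LEVELS) - 1))
    return LEVELS[index]


# The same observational evidence with one downgrade ends at different levels.
print(rate_body_of_evidence("grade", "observational", downgrades=1))
print(rate_body_of_evidence("navigation_guide", "observational", downgrades=1))
```

With identical judgments on the individual criteria, the same observational body of evidence ends one level lower under the original GRADE starting point than under the modified versions.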

While the OHAT approach is based on the GRADE system, the initial rating is based on the number of present study-design features, rather than the study type. These include: controlled exposure, exposure prior to outcome, individual outcome data, and comparison group used. Therefore, evidence from observational studies, due to a lack of controlled exposure, will never start higher than “moderate”. Unlike the GRADE system, upgrades may additionally be given for consistency across different study designs, species, or dissimilar populations, and for “other” reasons [15]. Guidance for subsequently considering quality of evidence across multiple exposures encourages considerations across the entire body of evidence.
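
The feature-count logic can be sketched as follows. The four features are those listed above; the mapping from feature counts to ratings (four features to “high”, three to “moderate”, two to “low”, one or none to “very low”) is an assumption made for this example and should be checked against the OHAT guidance. The function and feature names are hypothetical.

```python
# The four study-design features named in the text (labels are illustrative).
OHAT_FEATURES = (
    "controlled_exposure",
    "exposure_prior_to_outcome",
    "individual_outcome_data",
    "comparison_group_used",
)


def ohat_initial_rating(features_present):
    """Map the number of study-design features present to an initial rating.

    ASSUMPTION: 4 features -> high, 3 -> moderate, 2 -> low, 0-1 -> very low.
    """
    features = set(features_present)
    unknown = features - set(OHAT_FEATURES)
    if unknown:
        raise ValueError(f"unknown features: {sorted(unknown)}")
    count = len(features)
    if count == 4:
        return "high"
    if count == 3:
        return "moderate"
    if count == 2:
        return "low"
    return "very low"


# A prospective cohort study has no controlled exposure, so at most three
# features can be present and the initial rating cannot exceed "moderate".
cohort = ["exposure_prior_to_outcome", "individual_outcome_data",
          "comparison_group_used"]
print(ohat_initial_rating(cohort))
```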

In the IARC approach, no initial rating is assigned based on study type, although the appropriateness of different study designs in relation to the research question is considered [100]. Further criteria include study quantity and quality, statistical power, and consistency of findings. This is preceded by considerations including exposure assessment methods, temporality, use of biomarkers, and Hill’s criteria for causality [105]. In the most recent version, this is replaced by “considerations for assessing the body of epidemiological evidence” [13, 21, 105].

The Centre for Evidence-Based Medicine (CEBM) and Scottish Intercollegiate Guidelines Network (SIGN) systems again use previously assigned ratings of each included primary study, based on study type and quality, in addition to a subset of the same criteria as GRADE, but with markedly less specific guidance and explanation than the aforementioned systems. The updated version of the SIGN handbook from 2019 now recommends using the GRADE system for grading evidence. The Best Evidence Synthesis (BES) system, developed for research on lower back problems, does not explicitly rate the study type as a criterion, instead presenting a highly abbreviated approach of considering merely the number, relevance, and quality of available studies [101].

In terms of considering aspects of reproductive/ children’s environmental health research in the “indirectness”, “heterogeneity”, or “confounding/ bias” domains, the Navigation Guide, GRADE approach, and OHAT framework all provide brief commentary, in the form of examples or general guidance, while the other tools make no specific reference to reproductive/ children’s health (see Table 5). Besides the SIGN and the BES systems, all tools consider the timing of exposure and/ or outcome assessment, although only the Navigation Guide and OHAT approach explicitly address this aspect with regard to reproductive/ children’s health research (e.g., developmental stages). Finally, only the Navigation Guide, OHAT approach, and IARC framework provide guidance on assessing “evidence for no effect”. Notably, systematic review authors addressed some of these aspects outside of their application of the evidence grading frameworks, in their methods (e.g., by applying relevant inclusion criteria, or by conducting subgroup analyses of different pregnancy trimesters or age groups [73, 76, 78, 83, 84]), or in their discussions.

Discussion

This is, to our knowledge, the first methodological survey to systematically identify and describe evidence grading systems used in the area of air pollution exposure and adverse reproductive/child outcomes. Of note, this is not an overview of recommended, but of practiced methods in the field. Only 18 out of 177 systematic reviews (9.8%) were found to explicitly utilize formal rating systems for bodies of evidence. Such a small proportion suggests that this process is still not common in the field, although an increase was observed after 2015 (see Fig. 2), which is in line with previous findings on evidence grading approaches used in systematic reviews of air pollution exposure [42]. The inconsistency in the approaches used (15 different risk of bias assessment tools and 9 different evidence grading tools across 18 reviews), together with the numerous modifications applied, reflects a lack of consensus. The NOS and GRADE system were the most commonly used tools for assessing internal validity and for grading evidence, respectively, as discussed further below. It is noteworthy that multiple reviews “borrowed” tools originating from rather unrelated fields (e.g., clinical research on lower back problems), and there was marked heterogeneity in the comprehensiveness and relevance of the employed tools.

Further, numerous systematic reviews cited preceding reviews using the same approach, in reference to their own approach [5, 74, 76,77,78,79,80,81, 83]. This suggests a “propagated” methods adoption, where systematic review authors use preceding reviews for guidance, possibly leading to the uptake of inappropriate methods [109]. This implies that the publication of worked examples, such as those provided by the Navigation Guide group [110], is essential for further improving the methodological quality of systematic reviews.

Risk of bias assessment

Our findings indicate that systematic review authors use a wide range of approaches for assessing risk of bias/quality among individual studies, in many cases originating from clinical or other less related fields. Thirteen reviews were found to use the original or a modified NOS version. The widespread use of the contested NOS may be one of the most “spectacular” examples of the risks of quotation errors and citation copying [109, 111]. Vandenberg et al. recently outlined how flawed exposure assessment methods put public health at risk [27], and this extends to a lack of appropriate and comprehensive evaluations of exposure assessment methods. The NOS includes only a cursory evaluation of exposure assessment methods that is arguably not applicable to environmental exposures. In general, risk of bias/quality assessment tools have been criticized for focusing on mechanically determining the potential presence of biases, often based on how closely studies emulate a hypothetical “target” RCT, rather than their likely direction, magnitude, and relative importance [18, 112]. Rather than assigning ratings based on study design, assessments should identify the most probable and important biases in relation to the particular population, exposure, and outcome under investigation and rate each study on how effectively it addresses each potential bias; differences in results across studies should then be considered in relation to susceptibility to each bias [14, 112,113,114].

The iterative development of the ROBINS-E tool [94, 115], which in its preliminary version was criticized for being based on comparisons to the “ideal” RCT, among other limitations [116], but in its final version addressed many of these concerns, including a more nuanced approach to causal inference [117], demonstrates that continuous collaboration between experts and critical appraisal of developing tools is effective and desirable. Also, the WHO has introduced a risk of bias assessment tool for air pollution exposure studies in systematic reviews [118]. In addition, informative evaluations of additional risk of bias tools available for environmental health studies have been presented [119]. Useful interactive data visualization tools exist to facilitate comparison and selection of risk of bias/ methodological quality tools for observational studies of exposures [120], collated on the basis of a preceding systematic review [63].

Evidence grading approaches

In this methodological survey, 16 out of 18 reviews used evidence grading systems that provided higher scores to experimental (vs. non-experimental) studies or related study features. The practice of ranking evidence based on a crude hierarchy of study designs has been criticized [18,19,20,21, 23]. For one, experimental studies may be no better at reducing “intractable” confounding, and other approaches (e.g., difference-in-differences) may be much more effective in addressing particular confounding scenarios [23]. Pluralistic approaches to causal inference that extend beyond counterfactual and interventionist approaches have been proposed [21, 22].

Six reviews were found to use the original GRADE system for rating bodies of evidence, for which we noted a lack of consideration with regard to heterogeneities across different developmental stages, a paucity of attention paid to the timing of exposure to environmental risks, and a lack of discussion of evidence for no association or effect, in addition to the default ranking of experimental studies above observational ones. The applicability of the GRADE approach to observational studies has previously been discussed [121, 122], and challenges with rating the body of evidence from observational studies have been reported [123,124,125,126], including rating evidence from non-randomized studies as “low” by default, difficulties in assessing complex bodies of evidence consisting of different study designs, and limited applicability regarding research on etiology, among others [124, 127].

The GRADE working group has proposed the possibility of initially rating evidence from non-randomized studies as “high”, when used in conjunction with risk of bias assessment tools like ROBINS-I [94, 115, 128, 129]. The reasoning is that the lack of randomization will usually lead to rating down by at least two levels to “low”, so ultimately, evidence from observational studies will be rated as “low” with either method [115, 129]. Hence, this approach is again based on the principle that non-randomized studies are inherently inferior. Other suggestions have been made to start observational studies at “moderate”, as done in the Navigation Guide’s and WHO’s modified versions [64, 98], and to expand the criteria for upgrading [124]. In prognosis research, the GRADE system has been adapted to start observational studies at “high” [130]. Further developments of the GRADE system for environmental health research, including a recent exploration of how considerations of biological plausibility can be integrated into evidence grading [131], are in progress [99, 132].
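The arithmetic behind this initial-rating argument can be made concrete with a minimal sketch. It assumes GRADE's four certainty levels and treats rating down or up as moving whole steps along that scale. The function name `rate_certainty` and its parameters are hypothetical and are not part of any GRADE software or guidance; actual assessments rest on domain-by-domain judgment rather than a formula.

```python
# Illustrative only: GRADE's four certainty levels, ordered lowest to highest.
LEVELS = ["very low", "low", "moderate", "high"]


def rate_certainty(start, levels_down=0, levels_up=0):
    """Move a starting rating down/up by whole levels, clamped to the scale.

    Hypothetical helper for illustration; not an official GRADE procedure.
    """
    index = LEVELS.index(start) - levels_down + levels_up
    index = max(0, min(index, len(LEVELS) - 1))
    return LEVELS[index]


# Start at "high", then rate down two levels for lack of randomization.
start_high = rate_certainty("high", levels_down=2)
# Conventional default: observational evidence starts at "low".
start_low = rate_certainty("low")
# Modified approaches that start observational evidence at "moderate".
start_moderate = rate_certainty("moderate")

print(start_high, start_low, start_moderate)
```

Under these assumptions, the first two starting points end at the same rating, which is the equivalence described above, whereas starting at “moderate” preserves a one-level difference before any further rating up or down.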

Reproductive and children’s environmental health: specific guidance needed

While some of the identified frameworks were found to address selected aspects, concerns persist regarding reproductive/ children's environmental health research: Firstly, the risk of bias assessment and evidence grading frameworks frequently used by existing systematic reviews often do not explicitly or comprehensively address important aspects, such as vulnerabilities related to developmental stages, considerations of exposure timing and relative dose, etc. [24, 25]. Also, only three evidence grading systems provide any guidance on assessing evidence for the absence of effects. Addressing these points would require considerations of how domains of current evidence grading frameworks are operationalized, including indirectness domains (e.g., timing of exposures, “worst-case” exposure scenarios [27], etc.), heterogeneity (disparities related to social determinants, diverse etiological mechanisms, etc.), and biases specific to research on pregnancy and childhood (e.g., live-birth bias). Some of the identified methodologies offer some insights into how existing frameworks may be adapted [66, 98, 104]: For example, considering null findings, in addition to positive ones, is advised by the Navigation Guide with regard to publication bias, meaning that an excess of null findings, especially from small or industry-sponsored studies, is also of concern [104]. With regard to subsequent assignments of levels of evidence, the OHAT approach notes that, due to the intrinsic challenges of proving a negative, concluding “evidence for no effect” requires high levels of confidence in the evidence. Low/ moderate confidence should be considered “inadequate evidence” for absence of effects [15].
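The OHAT rule for conclusions about the absence of effects, as summarized above, amounts to a simple lookup. The sketch below is a hypothetical encoding of that summary only. The function name and the decision to reject ratings not mentioned in the text are assumptions, and the code does not reproduce the OHAT handbook.

```python
def no_effect_conclusion(confidence):
    """Map confidence in a body of evidence showing no effect to a conclusion.

    Encodes only the rule as summarized in the text: high confidence is
    required for "evidence for no effect"; low or moderate confidence is
    treated as "inadequate evidence". Other ratings are not covered.
    """
    level = confidence.strip().lower()
    if level == "high":
        return "evidence for no effect"
    if level in ("low", "moderate"):
        return "inadequate evidence"
    raise ValueError(f"rating not covered by the rule as summarized: {confidence!r}")


for rating in ("high", "moderate", "low"):
    print(rating, "->", no_effect_conclusion(rating))
```

The asymmetry is deliberate in this reading: a null result backed by less than high confidence is not taken as support for safety.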

Failing to explicitly address the defining features and major characteristics of reproductive and children’s environmental health as described above renders nonspecific tools such as the NOS and GRADE inadequate for comprehensively evaluating the unique risks posed by environmental exposures during vulnerable developmental stages and across the lifespan. Failing to account for these complexities within evidence grading frameworks may result in an incomplete understanding of the risks posed by environmental exposures during crucial developmental stages. This lack of specification may give rise to invalid assessment results both at the level of primary studies and at the level of bodies of evidence, and thereby lead to erroneous conclusions about the certainty of the assessed evidence. This in turn may undermine the formulation of effective policies for protecting reproductive and children’s health. Therefore, emphasizing the need for using more specialized frameworks (e.g., ROBINS-E, Navigation Guide, OHAT) for assessing studies on reproductive and children’s environmental health is paramount for ensuring accurate findings and interpretations and, ultimately, safeguarding the health of future generations. Altogether, while the addition of new tools or domains may not be needed, further consensus and published direction on how exactly these can be operationalized in the context of reproductive/ children’s environmental health may be useful. Providing explicit guidance and clear definitions, promoting the use of more applicable frameworks, and continuing to refine and tailor existing frameworks towards reproductive/ children’s environmental health research are critical for improving current methodologies [133].

Further evidence grading systems and systematic review frameworks not utilized in the identified reviews

Additional evidence grading systems and systematic frameworks for environmental health research exist, but were not utilized by the identified reviews. In 2006, the EPA published a “Framework for Assessing Health Risks of Environmental Exposures to Children” [134], which uses a “lifestage” perspective. Developing specific assessment criteria during problem formulation is recommended. A weight-of-evidence approach is used, which places emphasis on higher quality studies for evidence grading [134]. Further systematic review frameworks developed for observational studies of etiology or environmental health and toxicology research include the COSMOS-E, COSTER, and SYRINA frameworks, among others. Notably, while the guidance provided on evidence grading generally reflects principles of the GRADE system, specific recommendations as to what tool or approach to use [113, 135], or whether to assign an initial rating based on study type [136], are avoided.

The existence of the approaches described above, as well as those with clear relevance to reproductive/ children’s environmental health presented earlier (e.g., ROBINS-E, Navigation Guide, OHAT), together with the limited uptake we identified, suggests that the problem lies less in an absence of appropriate methods than in their accessibility or implementation. Promoting simple, but not oversimplified, practicable, and specific guidance should be prioritized [109].

Also, calls for child-relevant extensions to the PRISMA checklist (“PRISMA-C”) have been made [26, 137, 138], and such extensions are currently under development [139]. Specific recommendations regarding risk of bias and evidence assessments could be integrated into them.

Beyond evidence grading: linking evidence and triangulation

Different types of evidence (i.e., human and non-human studies) may be combined into integrated networks of evidence within systematic reviews of environmental health risks [18, 113]. In fact, the Navigation Guide, OHAT, and IARC methodologies provide guidance on integrating evidence from human, animal, and mechanistic studies [15, 64, 66, 100, 104].

Further, triangulation (i.e., leveraging differences in evidence from diverse methodological approaches with different biases to strengthen causal inference) has been encouraged for environmental health research [22, 140]. However, guidelines are needed to help researchers integrate triangulation processes into systematic reviews effectively [140].

Implications for policy

Systematic review methods for environmental health research continue to evolve, including at the U.S. federal level, which may have a direct impact on policies to protect reproductive and children’s health: Within the EPA, revisions are being made to current systematic review methodologies [141, 142], while proposed changes to the existing “weight-of-evidence” approach, which considers a plethora of different types of evidence, in favor of a “manipulative causation” framework, are being heavily contested [18, 143, 144], and probabilistic risk-specific dose distribution analyses are being piloted, to expand beyond previous threshold-based approaches [145]. This highlights that considerations of evidence assessment methodologies span scientific, political, and legal realms, and carry massive public health implications.

We hope this work can provide a comprehensive overview of the current state of practice in the field, and serve as a starting point for those working on the further refinement or promotion of evidence grading systems for reproductive/ children’s environmental health research.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- BES: Best Evidence Synthesis
- CEBM: The Centre for Evidence-Based Medicine
- EPA: Environmental Protection Agency
- GRADE: Grading of Recommendations, Assessment, Development, and Evaluations
- IARC: International Agency for Research on Cancer
- NOS: Newcastle Ottawa Scale
- OHAT: Office of Health Assessment and Translation
- PRIOR: Preferred Reporting Items for Overviews of Reviews
- PRISMA: Preferred Reporting Items for Systematic reviews and Meta-Analyses
- SIGN: Scottish Intercollegiate Guidelines Network
- RCT: Randomized controlled trial
- ROBIS: Risk of bias in systematic reviews
- WHO: World Health Organization

References

Nyadanu SD, Dunne J, Tessema GA, Mullins B, Kumi-Boateng B, Lee Bell M, et al. Prenatal exposure to ambient air pollution and adverse birth outcomes: an umbrella review of 36 systematic reviews and meta-analyses. Environ Pollut. 2022;306:119465.

Sun X, Luo X, Zhao C, Chung Ng RW, Lim CE, Zhang B, et al. The association between fine particulate matter exposure during pregnancy and preterm birth: a meta-analysis. BMC Pregnancy Childbirth. 2015;15:300.

Xing Z, Zhang S, Jiang YT, Wang XX, Cui H. Association between prenatal air pollution exposure and risk of hypospadias in offspring: a systematic review and meta-analysis of observational studies. Aging (Albany NY). 2021;13(6):8865–79.

Lin HC, Guo JM, Ge P, Ou P. Association between prenatal exposure to ambient particulate matter and risk of hypospadias in offspring: a systematic review and meta-analysis. Environ Res. 2021;192:110190.

Lam J, Sutton P, Kalkbrenner A, Windham G, Halladay A, Koustas E, et al. A systematic review and meta-analysis of multiple airborne pollutants and autism spectrum disorder. PLoS One. 2016;11(9):e0161851.

Yamineva Y, Romppanen S. Is law failing to address air pollution? Reflections on international and EU developments. Rev Eur Comp Int Environ Law. 2017;26(3):189–200.

United Nations Environment Programme. Regulating air quality: the first global assessment of air pollution legislation. 2021.

Neira M, Fletcher E, Brune-Drisse MN, Pfeiffer M, Adair-Rohani H, Dora C. Environmental health policies for women’s, children’s and adolescents’ health. Bull World Health Organ. 2017;95(8):604–6.

Malekinejad M, Horvath H, Snyder H, Brindis CD. The discordance between evidence and health policy in the United States: the science of translational research and the critical role of diverse stakeholders. Health Res Policy Syst. 2018;16(1):81.

Movsisyan A, Dennis J, Rehfuess E, Grant S, Montgomery P. Rating the quality of a body of evidence on the effectiveness of health and social interventions: a systematic review and mapping of evidence domains. Res Synth Methods. 2018;9(2):224–42.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924–6.

Rothman KJ, Greenland S, Lash TL. Modern epidemiology. Vol. 3. Philadelphia: Wolters Kluwer Health/Lippincott Williams & Wilkins; 2008.

Arroyave WD, Mehta SS, Guha N, Schwingl P, Taylor KW, Glenn B, et al. Challenges and recommendations on the conduct of systematic reviews of observational epidemiologic studies in environmental and occupational health. J Expo Sci Environ Epidemiol. 2021;31(1):21–30.

Rooney AA, Boyles AL, Wolfe MS, Bucher JR, Thayer KA. Systematic review and evidence integration for literature-based environmental health science assessments. Environ Health Perspect. 2014;122(7):711–8.

Strickland MJ, Klein M, Darrow LA, Flanders WD, Correa A, Marcus M, et al. The issue of confounding in epidemiological studies of ambient air pollution and pregnancy outcomes. J Epidemiol Community Health. 2009;63(6):500–4.

Woodruff TJ, Parker JD, Darrow LA, Slama R, Bell ML, Choi H, et al. Methodological issues in studies of air pollution and reproductive health. Environ Res. 2009;109(3):311–20.

Steenland K, Schubauer-Berigan MK, Vermeulen R, Lunn RM, Straif K, Zahm S, et al. Risk of bias assessments and evidence syntheses for observational epidemiologic studies of environmental and occupational exposures: strengths and limitations. Environ Health Perspect. 2020;128(9):095002.

Rothman KJ. Six persistent research misconceptions. J Gen Intern Med. 2014;29(7):1060–4.

Wyer PC. From MARS to MAGIC: the remarkable journey through time and space of the grading of recommendations assessment, development and evaluation initiative. J Eval Clin Pract. 2018;24(5):1191–202.

Vandenbroucke JP, Broadbent A, Pearce N. Causality and causal inference in epidemiology: the need for a pluralistic approach. Int J Epidemiol. 2016;45(6):1776–86.

Pearce N, Vandenbroucke JP, Lawlor DA. Causal inference in environmental epidemiology: old and new approaches. Epidemiology. 2019;30(3):311–6.

Pearce N, Vandenbroucke JP. Are target trial emulations the gold standard for observational studies? Epidemiology. 2023;34(5):614–8.

National Research Council (US) Committee on Pesticides in the Diets of Infants and Children. Pesticides in the diets of infants and children. Washington (DC): National Academies Press (US); 1993.

Bearer CF. Environmental health hazards: how children are different from adults. Future Child. 1995;5(2):11–26.

Farid-Kapadia M, Askie L, Hartling L, Contopoulos-Ioannidis D, Bhutta ZA, Soll R, et al. Do systematic reviews on pediatric topics need special methodological considerations? BMC Pediatr. 2017;17(1):57.

Vandenberg LN, Rayasam SDG, Axelrad DA, Bennett DH, Brown P, Carignan CC, et al. Addressing systemic problems with exposure assessments to protect the public’s health. Environ Health. 2023;21(1):121.

Woodruff TJ, Sutton P. An evidence-based medicine methodology to bridge the gap between clinical and environmental health sciences. Health Aff (Millwood). 2011;30(5):931–7.

United States Environmental Protection Agency. Guidance on Selecting Age Groups for Monitoring and Assessing Childhood Exposures to Environmental Contaminants. Washington, DC; 2005.

Pohl HR, Abadin HG. Chemical mixtures: evaluation of risk for child-specific exposures in a multi-stressor environment. Toxicol Appl Pharmacol. 2008;233(1):116–25.

Hamra GB, Buckley JP. Environmental exposure mixtures: questions and methods to address them. Curr Epidemiol Rep. 2018;5(2):160–5.

Goldberg MS, Baumgartner J, Chevrier J. Statistical adjustments of environmental pollutants arising from multiple sources in epidemiologic studies: the role of markers of complex mixtures. Atmos Environ. 2022;270:118788.

Aronson JK. When I use a word … The precautionary principle: a definition. BMJ. 2021;375:n3111.

Kriebel D, Tickner J, Epstein P, Lemons J, Levins R, Loechler EL, et al. The precautionary principle in environmental science. Environ Health Perspect. 2001;109(9):871–6.

Altman DG, Bland JM. Statistics notes: absence of evidence is not evidence of absence. BMJ. 1995;311(7003):485.

Smith PRM, Ware L, Adams C, Chalmers I. Claims of ‘no difference’ or ‘no effect’ in Cochrane and other systematic reviews. BMJ Evid Based Med. 2021;26(3):118–20.

Quertemont E. How to statistically show the absence of an effect. Psychol Belg. 2011;51(2):109–27.

Lakens D. Equivalence tests: a practical primer for t tests, correlations, and meta-analyses. Soc Psychol Personal Sci. 2017;8(4):355–62.

Campbell H, Gustafson P. Conditional equivalence testing: an alternative remedy for publication bias. PLoS One. 2018;13(4):e0195145.

Sheehan MC, Lam J. Use of systematic review and meta-analysis in environmental health epidemiology: a systematic review and comparison with guidelines. Curr Environ Health Rep. 2015;2(3):272–83.

Sutton P, Chartres N, Rayasam SDG, Daniels N, Lam J, Maghrbi E, et al. Reviews in environmental health: how systematic are they? Environ Int. 2021;152:106473.

Sheehan MC, Lam J, Navas-Acien A, Chang HH. Ambient air pollution epidemiology systematic review and meta-analysis: a review of reporting and methods practice. Environ Int. 2016;92–93:647–56.

Nieuwenhuijsen MJ, Dadvand P, Grellier J, Martinez D, Vrijheid M. Environmental risk factors of pregnancy outcomes: a summary of recent meta-analyses of epidemiological studies. Environ Health. 2013;12(1):6.

Michel S, Atmakuri A, von Ehrenstein OS. Prenatal exposure to ambient air pollutants and congenital heart defects: an umbrella review. Environ Int. 2023;178:108076.

Berrang-Ford L, Sietsma AJ, Callaghan M, Minx JC, Scheelbeek PFD, Haddaway NR, et al. Systematic mapping of global research on climate and health: a machine learning review. Lancet Planet Health. 2021;5(8):e514–25.

Gates M, Gates A, Pieper D, Fernandes RM, Tricco AC, Moher D, et al. Reporting guideline for overviews of reviews of healthcare interventions: development of the PRIOR statement. BMJ. 2022;378:e070849.

Pollock M, Fernandes RM, Becker LA, Featherstone R, Hartling L. What guidance is available for researchers conducting overviews of reviews of healthcare interventions? A scoping review and qualitative metasummary. Syst Rev. 2016;5(1):190.

Pollock M, Fernandes R, Becker L, Pieper D, Hartling L. Chapter V: Overviews of Reviews. In: Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page M, et al., editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.1. Cochrane; 2020. Updated September 2020.

Aromataris E, Fernandez R, Godfrey CM, Holly C, Khalil H, Tungpunkom P. Summarizing systematic reviews: methodological development, conduct and reporting of an umbrella review approach. Int J Evid Based Healthc. 2015;13(3):132–40.

Hunt H, Pollock A, Campbell P, Estcourt L, Brunton G. An introduction to overviews of reviews: planning a relevant research question and objective for an overview. Syst Rev. 2018;7(1):39.

Lunny C, Brennan SE, McDonald S, McKenzie JE. Toward a comprehensive evidence map of overview of systematic review methods: paper 1—purpose, eligibility, search and data extraction. Syst Rev. 2017;6(1):231.

Krnic Martinic M, Pieper D, Glatt A, Puljak L. Definition of a systematic review used in overviews of systematic reviews, meta-epidemiological studies and textbooks. BMC Med Res Methodol. 2019;19(1):203.

Guyatt GH, Sackett DL, Sinclair JC, Hayward R, Cook DJ, Cook RJ. Users’ guides to the medical literature. IX. A method for grading health care recommendations. Evidence-Based Medicine Working Group. JAMA. 1995;274(22):1800–4.

Goossen K, Hess S, Lunny C, Pieper D. Database combinations to retrieve systematic reviews in overviews of reviews: a methodological study. BMC Med Res Methodol. 2020;20(1):138.

Salvador-Olivan JA, Marco-Cuenca G, Arquero-Aviles R. Development of an efficient search filter to retrieve systematic reviews from PubMed. J Med Libr Assoc. 2021;109(4):561–74.

Navarro-Ruan T, Haynes RB. Preliminary comparison of the performance of the National Library of Medicine’s systematic review publication type and the sensitive clinical queries filter for systematic reviews in PubMed. J Med Libr Assoc. 2022;110(1):43–6.

Wright J, Walwyn R. Literature search methods for an overview of reviews (‘umbrella’ reviews or ‘review of reviews’). Leeds Institute of Health Sciences: University of Leeds; 2016.

Koster J. PubMed PubReMiner. Academic Medical Center, University of Amsterdam; 2014. Available from: https://hgserver2.amc.nl/cgi-bin/miner/miner2.cgi.

Scells H, Zuccon G. searchrefiner: a query visualisation and understanding tool for systematic reviews. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management. Torino: Association for Computing Machinery; 2018. p. 1939–42.

McGill Library. Grey literature and other supplementary search methods; 2022. Available from: https://libraryguides.mcgill.ca/knowledge-syntheses.

Whiting P, Savović J, Higgins JPT, Caldwell DM, Reeves BC, Shea B, et al. ROBIS: a new tool to assess risk of bias in systematic reviews was developed. J Clin Epidemiol. 2016;69:225–34.

Viswanathan M, Ansari MT, Berkman ND, Chang S, Hartling L, McPheeters M, et al. AHRQ Methods for Effective Health Care Assessing the Risk of Bias of Individual Studies in Systematic Reviews of Health Care Interventions. Methods Guide for Effectiveness and Comparative Effectiveness Reviews. Rockville (MD): Agency for Healthcare Research and Quality (US); 2008.

Wang Z, Taylor K, Allman-Farinelli M, Armstrong B, Askie L, Ghersi D, et al. A systematic review: tools for assessing methodological quality of human observational studies. 2019.

Woodruff TJ, Sutton P. The navigation guide systematic review methodology: a rigorous and transparent method for translating environmental health science into better health outcomes. Environ Health Perspect. 2014;122(10):1007–14.

Guyatt GH, Oxman AD, Sultan S, Glasziou P, Akl EA, Alonso-Coello P, et al. GRADE guidelines: 9. Rating up the quality of evidence. J Clin Epidemiol. 2011;64(12):1311–6.

Office of Health Assessment and Translation (OHAT). Handbook for Conducting a Literature-Based Health Assessment Using OHAT Approach for Systematic Review and Evidence Integration. Division of the National Toxicology Program, National Institute of Environmental Health Sciences; 2019.

Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, et al. GRADE guidelines: 8. Rating the quality of evidence-indirectness. J Clin Epidemiol. 2011;64(12):1303–10.

Suades-González E, Gascon M, Guxens M, Sunyer J. Air pollution and neuropsychological development: a review of the latest evidence. Endocrinology. 2015;156(10):3473–82.

Morales-Suárez-Varela M, Peraita-Costa I, Llopis-González A. Systematic review of the association between particulate matter exposure and autism spectrum disorders. Environ Res. 2017;153:150–60.

Tenero L, Piacentini G, Nosetti L, Gasperi E, Piazza M, Zaffanello M. Systematic review indoor/outdoor not-voluptuary-habit pollution and sleep-disordered breathing in children: a systematic review. Transl Pediatr. 2017;6(2):104–10.

King C, Kirkham J, Hawcutt D, Sinha I. The effect of outdoor air pollution on the risk of hospitalisation for bronchiolitis in infants: a systematic review. PeerJ. 2018;6:e5352.

Fu L, Chen Y, Yang X, Yang Z, Liu S, Pei L, et al. The associations of air pollution exposure during pregnancy with fetal growth and anthropometric measurements at birth: a systematic review and meta-analysis. Environ Sci Pollut Res Int. 2019;26(20):20137–47.

Rappazzo KM, Nichols JL, Rice RB, Luben TJ. Ozone exposure during early pregnancy and preterm birth: a systematic review and meta-analysis. Environ Res. 2021;198:111317.

Ravindra K, Chanana N, Mor S. Exposure to air pollutants and risk of congenital anomalies: a systematic review and metaanalysis. Sci Total Environ. 2021;765:142772.

Stenson C, Wheeler AJ, Carver A, Donaire-Gonzalez D, Alvarado-Molina M, Nieuwenhuijsen M, et al. The impact of traffic-related air pollution on child and adolescent academic performance: a systematic review. Environ Int. 2021;155:106696.

Uwak I, Olson N, Fuentes A, Moriarty M, Pulczinski J, Lam J, et al. Application of the navigation guide systematic review methodology to evaluate prenatal exposure to particulate matter air pollution and infant birth weight. Environ Int. 2021;148:106378.

Gong C, Wang J, Bai Z, Rich DQ, Zhang Y. Maternal exposure to ambient PM(2.5) and term birth weight: a systematic review and meta-analysis of effect estimates. Sci Total Environ. 2022;807(Pt 1):150744.

Lin LZ, Zhan XL, Jin CY, Liang JH, Jing J, Dong GH. The epidemiological evidence linking exposure to ambient particulate matter with neurodevelopmental disorders: a systematic review and meta-analysis. Environ Res. 2022;209:112876.

Yu Z, Zhang X, Zhang J, Feng Y, Zhang H, Wan Z, et al. Gestational exposure to ambient particulate matter and preterm birth: an updated systematic review and meta-analysis. Environ Res. 2022;212(Pt C):113381.

Zhu W, Zheng H, Liu J, Cai J, Wang G, Li Y, et al. The correlation between chronic exposure to particulate matter and spontaneous abortion: a meta-analysis. Chemosphere. 2022;286(Pt 2):131802.

Ziou M, Tham R, Wheeler AJ, Zosky GR, Stephens N, Johnston FH. Outdoor particulate matter exposure and upper respiratory tract infections in children and adolescents: a systematic review and meta-analysis. Environ Res. 2022;210:112969.

Blanc N, Liao J, Gilliland F, Zhang JJ, Berhane K, Huang G, et al. A systematic review of evidence for maternal preconception exposure to outdoor air pollution on children’s health. Environ Pollut. 2023;318:120850.

Liang W, Zhu H, Xu J, Zhao Z, Zhou L, Zhu Q, et al. Ambient air pollution and gestational diabetes mellitus: an updated systematic review and meta-analysis. Ecotoxicol Environ Saf. 2023;255:114802.

Tandon S, Grande AJ, Karamanos A, Cruickshank JK, Roever L, Mudway IS, et al. Association of ambient air pollution with blood pressure in adolescence: a systematic-review and meta-analysis. Curr Probl Cardiol. 2023;48(2):101460.

McGuinness LA, Higgins JPT. Risk-of-bias VISualization (robvis): An R package and Shiny web app for visualizing risk-of-bias assessments. Res Synth Methods. 2021;12(1):55–61.

Wells G, Shea B, O’Connell D, Peterson J, Welch V, Losos M, et al. Newcastle-Ottawa quality assessment scales. Available from: https://www.ohri.ca/programs/clinical_epidemiology/oxford.asp.

Mustafić H, Jabre P, Caussin C, Murad MH, Escolano S, Tafflet M, et al. Main air pollutants and myocardial infarction: a systematic review and meta-analysis. JAMA. 2012;307(7):713–21.

Herzog R, Álvarez-Pasquin MJ, Díaz C, Del Barrio JL, Estrada JM, Gil Á. Are healthcare workers’ intentions to vaccinate related to their knowledge, beliefs and attitudes? a systematic review. BMC Public Health. 2013;13(1):154.

Modesti PA, Reboldi G, Cappuccio FP, Agyemang C, Remuzzi G, Rapi S, et al. Panethnic differences in blood pressure in Europe: a systematic review and meta-analysis. PLoS One. 2016;11(1):e0147601.

National Institute for Environmental Health Sciences. OHAT risk of bias rating tool for human and animal studies. 2015.

Sterne J, Higgins J, Reeves B, on behalf of the development group for ACROBAT-NRSI. A Cochrane Risk Of Bias Assessment Tool: for NonRandomized Studies of Interventions (ACROBAT-NRSI); 2014.

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Hoy D, Brooks P, Woolf A, Blyth F, March L, Bain C, et al. Assessing risk of bias in prevalence studies: modification of an existing tool and evidence of interrater agreement. J Clin Epidemiol. 2012;65(9):934–9.

Morgan RL, Thayer KA, Santesso N, Holloway AC, Blain R, Eftim SE, et al. Evaluation of the risk of bias in non-randomized studies of interventions (ROBINS-I) and the ‘target experiment’ concept in studies of exposures: rationale and preliminary instrument development. Environ Int. 2018;120:382–7.

Oxford Centre for Evidence-Based Medicine. Levels of Evidence 2009. Available from: https://www.cebm.ox.ac.uk/resources/levels-of-evidence/oxford-centre-for-evidence-based-medicine-levels-of-evidence-march-2009.

OCEBM Levels of Evidence Working Group. The Oxford 2011 Levels of Evidence. Oxford Centre for Evidence-Based Medicine; 2011.

Scottish Intercollegiate Guidelines Network. SIGN 50. In: Healthcare improvement Scotland, editor. Edinburgh: Elliott House; 2011.

WHO Global Air Quality Guidelines Working Group on Certainty of Evidence Assessment. Approach to assessing the certainty of evidence from systematic reviews informing WHO global air quality guidelines. 2020. Available from: https://ars.els-cdn.com/content/image/1-s2.0-S0160412020318316-mmc4.pdf.

Morgan RL, Thayer KA, Bero L, Bruce N, Falck-Ytter Y, Ghersi D, et al. GRADE: assessing the quality of evidence in environmental and occupational health. Environ Int. 2016;92–93:611–6.

World Health Organization International Agency for Research on Cancer. IARC Monographs on the Evaluation of Carcinogenic Risks to Humans: Preamble. Lyon, France; 2006.

Bigos SJ, Richard Bowyer RO, Richard Braen G. Acute low back problems in adults, AHCPR guideline no. 14. J Manual Manipulative Ther. 1996;4(3):99–111.

Dijkers M. Introducing GRADE: a systematic approach to rating evidence in systematic reviews and to guideline development. KT Update. 2013;1(5):1–9.

GRADE Working Group. Handbook for grading the quality of evidence and the strength of recommendations using the GRADE approach. Schünemann H, Brożek J, Guyatt G, Oxman A, editors. 2013.

University of California San Francisco Program on Reproductive Health and the Environment. Navigation Guide Protocol for Rating the Quality and Strength of Human and Non‐Human Evidence 2012. Available from: https://prhe.ucsf.edu/sites/g/files/tkssra341/f/Instructions%20to%20Authors%20for%20GRADING%20QUALITY%20OF%20EVIDENCE.pdf.

Hill AB. The environment and disease: association or causation? Proc R Soc Med. 1965;58(5):295–300.

Balshem H, Helfand M, Schünemann HJ, Oxman AD, Kunz R, Brozek J, et al. GRADE guidelines: 3. Rating the quality of evidence. J Clin Epidemiol. 2011;64(4):401–6.

The GRADE Working Group. GRADE Handbook. Schünemann H, Brożek J, Guyatt G, Oxman A, editors. 2013.

Pérez Velasco R, Jarosińska D. Update of the WHO global air quality guidelines: systematic reviews - An introduction. Environ Int. 2022;170:107556.

Ioannidis JPA. Massive citations to misleading methods and research tools: Matthew effect, quotation error and citation copying. Eur J Epidemiol. 2018;33(11):1021–3.

Johnson PI, Sutton P, Atchley DS, Koustas E, Lam J, Sen S, et al. The Navigation Guide—Evidence-based medicine meets environmental health: systematic review of human evidence for PFOA effects on fetal growth. Environ Health Perspect. 2014;122(10):1028–39.

Stang A, Jonas S, Poole C. Case study in major quotation errors: a critical commentary on the Newcastle-Ottawa scale. Eur J Epidemiol. 2018;33(11):1025–31.

Savitz DA, Wellenius GA, Trikalinos TA. The problem with mechanistic risk of bias assessments in evidence synthesis of observational studies and a practical alternative: assessing the impact of specific sources of potential bias. Am J Epidemiol. 2019;188(9):1581–5.

Dekkers OM, Vandenbroucke JP, Cevallos M, Renehan AG, Altman DG, Egger M. COSMOS-E: guidance on conducting systematic reviews and meta-analyses of observational studies of etiology. PLoS Med. 2019;16(2):e1002742.

Schubauer-Berigan MK, Richardson DB, Fox MP, Fritschi L, Canu IG, Pearce N, et al. IARC-NCI workshop on an epidemiological toolkit to assess biases in human cancer studies for hazard identification: beyond the algorithm. Occup Environ Med. 2023;80(3):119–20.

Morgan RL, Thayer KA, Santesso N, Holloway AC, Blain R, Eftim SE, et al. A risk of bias instrument for non-randomized studies of exposures: A users’ guide to its application in the context of GRADE. Environ Int. 2019;122:168–84.

Bero L, Chartres N, Diong J, Fabbri A, Ghersi D, Lam J, et al. The risk of bias in observational studies of exposures (ROBINS-E) tool: concerns arising from application to observational studies of exposures. Syst Rev. 2018;7(1):242.

ROBINS-E Development Group. Risk Of Bias In Non-randomized Studies - of Exposure (ROBINS-E). Launch version, 20 June 2023. Available from: https://www.riskofbias.info/welcome/robins-e-tool.

WHO Global Air Quality Guidelines Working Group on Risk of Bias Assessment. Risk of bias assessment instrument for systematic reviews informing WHO global air quality guidelines 2020. Available from: https://apps.who.int/iris/handle/10665/341717.

Rooney AA, Cooper GS, Jahnke GD, Lam J, Morgan RL, Boyles AL, et al. How credible are the study results? Evaluating and applying internal validity tools to literature-based assessments of environmental health hazards. Environ Int. 2016;92–93:617–29.

Taylor KW, Wang Z, Walker VR, Rooney AA, Bero LA. Using interactive data visualization to facilitate user selection and comparison of risk of bias tools for observational studies of exposures. Environ Int. 2020;142:105806.

Schünemann H, Hill S, Guyatt G, Akl EA, Ahmed F. The GRADE approach and Bradford Hill’s criteria for causation. J Epidemiol Community Health. 2011;65(5):392–5.

Durrheim DN, Reingold A. Modifying the GRADE framework could benefit public health. J Epidemiol Community Health. 2010;64(5):387.

Montgomery P, Movsisyan A, Grant SP, Macdonald G, Rehfuess EA. Considerations of complexity in rating certainty of evidence in systematic reviews: a primer on using the GRADE approach in global health. BMJ Glob Health. 2019;4(Suppl 1):e000848.

Rehfuess EA, Akl EA. Current experience with applying the GRADE approach to public health interventions: an empirical study. BMC Public Health. 2013;13(1):9.

Hilton Boon M, Thomson H, Shaw B, Akl EA, Lhachimi SK, López-Alcalde J, et al. Challenges in applying the GRADE approach in public health guidelines and systematic reviews: a concept article from the GRADE Public Health Group. J Clin Epidemiol. 2021;135:42–53.

Zähringer J, Schwingshackl L, Movsisyan A, Stratil JM, Capacci S, Steinacker JM, et al. Use of the GRADE approach in health policymaking and evaluation: a scoping review of nutrition and physical activity policies. Implement Sci. 2020;15:1–18.

Norris SL, Bero L. GRADE methods for guideline development: time to evolve? Ann Intern Med. 2016;165(11):810–1.

Sterne JA, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919.

Schünemann HJ, Cuello C, Akl EA, Mustafa RA, Meerpohl JJ, Thayer K, et al. GRADE guidelines: 18. How ROBINS-I and other tools to assess risk of bias in nonrandomized studies should be used to rate the certainty of a body of evidence. J Clin Epidemiol. 2019;111:105–14.

Iorio A, Spencer FA, Falavigna M, Alba C, Lang E, Burnand B, et al. Use of GRADE for assessment of evidence about prognosis: rating confidence in estimates of event rates in broad categories of patients. BMJ. 2015;350:h870.

Whaley P, Piggott T, Morgan RL, Hoffmann S, Tsaioun K, Schwingshackl L, et al. Biological plausibility in environmental health systematic reviews: a GRADE concept paper. Environ Int. 2022;162:107109.

Morgan RL, Beverly B, Ghersi D, Schünemann HJ, Rooney AA, Whaley P, et al. GRADE guidelines for environmental and occupational health: a new series of articles in Environment International. Environ Int. 2019;128:11–2.

Moher D, Stewart L, Shekelle P. Establishing a new journal for systematic review products. Syst Rev. 2012;1(1):1.

U.S. Environmental Protection Agency (EPA). A framework for assessing health risks of environmental exposures to children. Washington, DC: National Center for Environmental Assessment; 2006.

Whaley P, Aiassa E, Beausoleil C, Beronius A, Bilotta G, Boobis A, et al. Recommendations for the conduct of systematic reviews in toxicology and environmental health research (COSTER). Environ Int. 2020;143:105926.

Vandenberg LN, Ågerstrand M, Beronius A, Beausoleil C, Bergman Å, Bero LA, et al. A proposed framework for the systematic review and integrated assessment (SYRINA) of endocrine disrupting chemicals. Environ Health. 2016;15(1):74.

Farid-Kapadia M, Joachim KC, Balasingham C, Clyburne-Sherin A, Offringa M. Are child-centric aspects in newborn and child health systematic review and meta-analysis protocols and reports adequately reported? Two systematic reviews. Syst Rev. 2017;6(1):31.

Cramer K, Wiebe N, Moyer V, Hartling L, Williams K, Swingler G, et al. Children in reviews: methodological issues in child-relevant evidence syntheses. BMC Pediatr. 2005;5:38.

Kapadia MZ, Askie L, Hartling L, Contopoulos-Ioannidis D, Bhutta ZA, Soll R, et al. PRISMA-Children (C) and PRISMA-Protocol for Children (P-C) Extensions: a study protocol for the development of guidelines for the conduct and reporting of systematic reviews and meta-analyses of newborn and child health research. BMJ Open. 2016;6(4):e010270.

Lawlor DA, Tilling K, Davey Smith G. Triangulation in aetiological epidemiology. Int J Epidemiol. 2017;45(6):1866–86.

National Academies of Sciences, Engineering, and Medicine. The Use of Systematic Review in EPA’s Toxic Substances Control Act Risk Evaluations. Washington, DC: The National Academies Press; 2021.

United States Environmental Protection Agency. Draft Protocol for Systematic Review in TSCA Risk Evaluations 2022. Available from: https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/draft-protocol-systematic-review-tsca-risk-evaluations.

Goldman GT, Dominici F. Don’t abandon evidence and process on air pollution policy. Science. 2019;363(6434):1398–400.

Richmond-Bryant J. In defense of the weight-of-evidence approach to literature review in the integrated science assessment. Epidemiology. 2020;31(6):755–7.

McPartland J. Finally—EPA takes steps to unify its approach to the evaluation of chemicals for cancer and non-cancer endpoints. Environ Defense Fund. 2021. https://blogs.edf.org/health/2021/07/13/finally-epa-takes-steps-to-unify-its-approach-to-the-evaluation-of-chemicals-for-cancer-and-non-cancer-endpoints/.

Permission to reproduce materials from other sources

Not applicable.

Registration and protocol

A registration or protocol for this methodological survey was not prepared.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

SM and OVE: Conceptualization; SM, AA, and OVE: Data curation and Formal analysis; SM and OVE: Investigation and Methodology; SM: Project administration; SM: Resources and Software; OVE: Supervision; SM, AA, and OVE: Validation; SM: Visualization; SM: Writing – original draft; SM, AA, and OVE: Writing – review & editing. All authors approved the final version of the article. SM is the guarantor of this work. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Material S1. Preferred Reporting Items for Overviews of Reviews (PRIOR) checklist. Material S2. Full electronic search strategies. Material S3. Details of ROBIS assessment: Risk of bias assessment results for each systematic review. Material S4. Details of ROBIS assessment: Responses to each question of the ROBIS tool.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Michel, S.K.F., Atmakuri, A. & von Ehrenstein, O.S. Systems for rating bodies of evidence used in systematic reviews of air pollution exposure and reproductive and children’s health: a methodological survey. Environ Health 23, 32 (2024). https://doi.org/10.1186/s12940-024-01069-z

DOI: https://doi.org/10.1186/s12940-024-01069-z