Abstract

Background

While spontaneous robotic arm control using motor imagery has been reported, most previous successful cases have used invasive approaches with advantages in spatial resolution. However, still many researchers continue to investigate methods for robotic arm control with noninvasive neural signal. Most of noninvasive control of robotic arm utilizes P300, steady state visually evoked potential, N2pc, and mental tasks differentiation. Even though these approaches demonstrated successful accuracy, they are limited in time efficiency and user intuition, and mostly require visual stimulation. Ultimately, velocity vector construction using electroencephalography activated by motion-related motor imagery can be considered as a substitution. In this study, a vision-aided brain–machine interface training system for robotic arm control is proposed and developed.

Methods

The proposed system uses a Microsoft Kinect to detect and estimates the 3D positions of the possible target objects. The predicted velocity vector for robot arm input is compensated using the artificial potential to follow an intended one among the possible targets. Two participants with cervical spinal cord injury trained with the system to explore its possible effects.

Results

In a situation with four possible targets, the proposed system significantly improved the distance error to the intended target compared to the unintended ones (p < 0.0001). Functional magnetic resonance imaging after five sessions of observation-based training with the developed system showed brain activation patterns with tendency of focusing to ipsilateral primary motor and sensory cortex, posterior parietal cortex, and contralateral cerebellum. However, shared control with blending parameter α less than 1 was not successful and success rate for touching an instructed target was less than the chance level (= 50%).

Conclusions

The pilot clinical study utilizing the training system suggested potential beneficial effects in characterizing the brain activation patterns.

Similar content being viewed by others

Background

People can face losing all or part of their motor functions because of various diseases or physical accidents such as spinal cord injury, stroke, and amyotrophic lateral sclerosis. The damage to motor functions frequently makes it difficult to perform activities of daily living [1, 2]. Among various assistive technologies, brain–machine interfaces (BMIs), which depends on features from user’s neural signals, enables control of external devices. BMIs allow a person to bypass conventional neuromuscular pathways to interact with the environment.

Since the concept of BMIs was first proposed in the 1970s at the University of California Los Angeles [3, 4], scientists and engineers have improved upon technology for developing a human-controlled external robotic arm that does not require physical movement [5,6,7,8]. Monkeys have fed themselves by controlling a robotic arm [9], and humans have utilized invasive neural signals to control a seven degree-of-freedom (DOF) robotic arm as if it were their own [10, 11]. The previous notable studies [10, 11] showed that the success rate of reaching and grasping can reach approximately 70–90% in allocated time. These studies used intracortical microelectrode array (MEA), which are highly invasive. Features from spikes measured from MEA provide high spatial resolution, which is advantageous in accurate prediction of imagery hand velocity.

Even though approaches based on MEA are highly successful, many researchers still attempt to control a robotic arm with noninvasive neural signals, such as those derived from electroencephalography (EEG), which does not require surgically implanting an electrode array. Previous EEG studies for robotic arm control uses features, such as P300 [12,13,14,15], N2pc [13, 15], steady-state visual evoked potential [16,17,18,19], and mental task differentiation [13, 20,21,22,23,24]. These approaches have demonstrated high performance in terms of accuracy, but relatively unintuitive, inefficient in terms of time, and mostly requires additional interfaces for visual stimulation. Thus, hand velocity predicted from EEG activated by motion-related motor imagery can be considered as an ultimate substitution for conventional EEG based control. Even though some research using mental task differentiation [13, 20,21,22,23,24] exhibited robotic arm control based on motor imagery, they utilized classification approach rather than velocity vector prediction. Velocity control of robotic arm base on EEG activated by motor imagery is a highly challenging purpose and has not yet exhibited satisfactory performance because of its limited spatial resolution and low signal-to-noise ratio [25].

Thus, we propose and develop a novel training system, which can improve motor imagery ability to extract features for velocity vector prediction. The training system utilizes shared control which uses auxiliary camera and makes the control easier. Shared control approaches that blend sensor information and neural signals for a more intelligent system have been applied to BMI field for improvement of task performance or fine control of terminal device [26,27,28,29]. In the previous studies, the shared control approaches have been applied to determination of the parameters of decoder and user adaptation (co-adaptive process) [11, 30]. The studies were applied to train primates [30] and humans [11], and the training process can proceed in two phases. In the first phase, observation-based training is provided (Fig. 1a). During the phase, the robotic arm moves automatically driven by preprogrammed algorithm to reach targets and an initial decoder is generated. In the second phase of calibration, the user controls the robotic arm by using the initial decoder while the shared control strategy assists the reaching motion (Fig. 1b). An improved decoder can be built from the second phase. These studies utilized invasive MEA and the training process has not yet been applied to noninvasive neural signals to the best of our knowledge.

In this study, a novel vision-aided BMI training system is developed and applied to co-adaptive process of user and decoder. Whereas previous invasive studies used preferred direction based decoder, this study used regression between neural signal and hand velocity. The developed system is applied to two clinical cases of potential users (patients with cervical spinal cord injury) with functional magnetic resonance image (fMRI) studies to assess feasibility and possible effects of the training. As a neural signal, EEG is used because of its noninvasiveness.

Methods

Training system overview

The newly proposed training system automatically detects the target object on the basis of the image and uses the image information as well as the brain signal information to more easily control the robotic arm approaching the target object. During the phase of training with the system, shared control strategy can motivate user by maintaining the success rate to certain level. Kinect is used for image acquisition, and separation of pixel colour and hierarchical clustering is applied for multiple target object detection. For shared control, conventional artificial potential approach is modified to apply to BMI system for robotic arm control. As the dependency of image information is higher than that of brain signal information, the control difficulty of robotic arm is lowered. When the information from brain signal is completely suppressed, the robotic arm motion doesn’t reflect the user intention, and the process becomes an observation-based training. Further specific details are described in sections below.

Detecting target objects using the Kinect

The position of target objects must be accurately defined to compensate for the predicted hand trajectory from neural signals. Before their position can be estimated, the targets must be detected. Although various target detection algorithms have been reported, elaborate algorithms are not required for the BMI training system because it is operated in a relatively well-arranged space with clean background. Green balls (diameter: 7 cm) serve as targets in this study, so binary images (green: 1; else: 0) were acquired using RGB images obtained by the Kinect (Kinect for XBOX 360, Microsoft, Redmond, WA, US). Using RGB values from the image, green pixels were separated, as shown in Eq. (1).

The images were filtered to remove noise via the process described in Eqs. (2) and (3).

Noise-filtered images can contain more than one target object, so pixels designated as “1” should be clustered to their corresponding target object. Hierarchical clustering distinguishes multiple target objects simultaneously. Conventional clustering algorithms require the number of clusters to be predetermined for centroid generation. However, a BMI system is highly limited if the number of target object is predetermined; thus, a divisive hierarchical clustering approach was chosen instead. This approach initially assumes that there is one target object is. When the x- or y-axis standard deviation of pixels are equal or larger than 20, the cluster is reclustered with two centroids. This procedure is repeated until all clusters have a pixel distribution whose standard deviation is less than 20 in both the x- and y-axes. The target detection procedure is summarized in Fig. 2.

To validate the algorithm, an environment containing several green target objects was prepared and photographed with the Kinect. The number of target objects ranged from two to four and 20 images were acquired for each number of target objects (for a total of 60 images). The distance between target objects was controlled within the range from 15 to 35 cm. For each number of target objects, the accuracy of detecting all existing targets was measured.

Estimation of 3D position (camera calibration)

The 3D position of detected target object should be estimated using information acquired from images. The Kinect provides two types of images: RGB and depth images. RGB images provide three channels of data with 480 × 640 resolution. Depth images provide one channel of 480 × 640 resolution; each pixel represents a depth index related to the distance to objects in the image. Using three types of camera calibration, the 3D position of each target object can be estimated.

The first calibration consists of distortion compensation between the RGB and depth images. The same object is reflected in different pixels in the RGB and depth images, so calibration to match the two images is necessary for position estimation (Fig. 3a). Linear regression was applied to obtain the transformation matrix for the mapping. Images with several balls were photographed with the RGB and depth camera, respectively (Fig. 3b), and the pixel coordinates of the balls were measured. This process was repeated to obtain a larger dataset. The coordinates of the balls from the RGB images were stored to matrix A with a size of 165 × 2, which contains pixel information from 165 balls. Matrix D, for the depth image, was obtained similarly. Then, matrix B, which maps pixels from the RGB to the depth image, can be obtained with the linear regression shown below.

Thus, B was obtained as shown below. The R2 values for the x- and y-axes were nearly 1.0 (> 0.999) and their root mean square errors (RMSEs) were 2.97 and 2.40 pixels, respectively. This result indicates that less than 3 pixels of error are occurred with the proposed linear regression. When total size of the images (480 × 640 pixels) is considered, this error seems to be trivial.

The second calibration is between the depth index and real distance. We utilized a linear curve fitting provided by the Matlab Curve Fitting Toolbox (Matlab R2016b, Mathworks Inc., Natick, MA, US), the result of which is shown in Eq. (7).

Finally, 2D position was estimated from the x and y pixels of the RGB image. The above approach was used and the results are shown in Eqs. (8) and (9).

To validate the implemented algorithm, we compared it with an optic tracker (PST Base, ps-tech, Amsterdam, Netherlands) that estimates the 3D position of pre-attached stickers with high accuracy (positon < 0.5 mm RMSE, orientation < 1° RMSE2). Two target objects with pre-attached stickers were prepared and placed randomly on the prepared experimental setup, as depicted in Fig. 4. The distance between the two target objects was measured using the optic tracker and Kinect. This procedure was repeated 10 times and the difference between the two approaches was analyzed.

Vision-aided robotic system hardware

The vision-aided robotic system consists of three components: a 6-DOF anthropomorphic robot arm (JACO, Kinova, Boisbriand, QC, Canada), Kinect, and targets. The dimensions of the aluminum profiles for fixing three components were designed by considering the workspace of the robotic arm, as shown in Fig. 5a. The arm was fixed on one side of the aluminum profiles and the Kinect on the other. The green target objects were fixed between them using flexible supports. Kinect detects target objects and the estimated positions are delivered to the robot arm for its waypoint generation. The coordinates of the Kinect and robot arm are different; thus, the homogeneous transformation matrix (Eqs. (10) and (11)) should be multiplied before the target position information is used for waypoint generation.

Vectors \( \vec{A} \) and \( \vec{B} \) are the position of target object measured from the Kinect and robot gripper, respectively. The origin of the robotic arm was defined as a point with an offset of 0.2 m in the x- and z-axes from the origin of frame 0 (the center of joint1, see Fig. 5b).

Shared control using artificial potential

To generate waypoints of robot operation, both neural signals and the target object location should be considered. Previous studies reported shared control blending a velocity vector predicted from neural signals and the position of a target object; however, none considers the highly practical situation in which more than one target object exists in the workspace to the best of our knowledge. Thus, it is necessary to propose a novel shared-control strategy for BMI system that is practical for use with multiple targets. To solve this issue, our research utilized artificial potential, which is a conventional motion planning approach for robots to avoid obstacles and reach a destination. In this study, we modified the conventional approach to a proper form to apply to the BMI training system. It attracts robots to the most probable target object that allows the robot end-effector to approach the user-intended target. In this study, the joints of the robotic arm are compensated by blending the predicted hand velocity and the ideal vector to the intended target.

The detailed algorithm for shared control is described below. First, artificial potential was formed by considering the intended target object using Eqs. (12)–(14).

\( q \) is the configuration of the joints and \( q_{g, i} \) is the goal configuration for the \( i{\text{th}} \) target object. \( U_{a, i} \) is the artificial potential provided by the \( i{\text{th}} \) target object. The stiffness \( k_{a, i} \) is programmed to be 1 when the user of the BMI system most intends to reach the \( i{\text{th}} \) target object. The most intended target object is determined by the currently predicted velocity from neural signals. The angle between the predicted velocity vector and the vector from the current position to each target position is calculated and the target with the smallest angle is determined to be the intended target object. For the unselected target objects, \( k_{a, i} \) is set to 0. So, \( f_{t} \) is the attractive force acted by the intended target. The intended target object is updated for each stage of waypoint generation. Thus, the BMI user can change the preferred target object while controlling the robotic arm. Then, the ideal vector to the intended target object is generated based on the current waypoint of end-effector and attractive force \( f_{t} \) as shown in Eq. (15).

\( x_{e} \left( {q_{k} } \right) \) is the \( k{\text {th}} \) waypoint for robot arm end-effector and the vector \( \Delta x_{i, k} \) points the ideal direction to approach the intended target. When the predicted hand velocity vector moves away from the origin and the angle to the most intended target object is less than 90°, the next waypoint is generated via Eqs. (16) and (17).

\( \Delta x_{k} \) is the compensation vector for the \( k{\text{th}} \) waypoint and \( \Delta x_{n, k} \) is the hand velocity vector predicted from neural signals. The vector \( \Delta x_{i, k} \), which points in the ideal direction to the intended target, is scaled to the size of vector \( \Delta x_{n, k} \), and the two are blended with proportion of \( \alpha \) and \( 1 - \alpha \). The larger \( \alpha \) increases the compensation from the Kinect and decreases the strength of the originally predicted hand velocity from neural signals. Additionally, inertia is considered via parameter \( \beta \) to suppress unintended sudden movement of the robotic arm. Similar BMI studies of shared control also considered the issue of reducing acceleration, applying a smoothing approach to motion planning [31]. At Eq. (17), the compensation for the \( k + 1{\text{th}} \) waypoint is provided by blending the \( k{\text{th}} \) compensation vector with the proportion of \( 1 - \beta \). Blending parameters \( \alpha \) and \( \beta \) both range from 0 to 1.

To calculate equations for artificial potential, forward and inverse kinematics of the robotic arm are required; conventional kinematics of a 3-DOF anthropomorphic arm [32] were utilized in this study. The homogeneous transformation matrix for forward kinematics is suggested in Eq. (18). The Denavit–Hartenberg parameters for deriving the matrix are listed in Table 1.

Relevant nomenclature is suggested in Fig. 4b. \( c_{1} \), \( c_{2} \), and \( c_{3} \) indicate \( { \cos }\theta_{1} \), \( { \cos }\theta_{2} \), and \( { \cos }\theta_{3} \). \( s_{1} \), \( s_{2} \), and \( s_{3} \) indicate \( { \sin }\theta_{1} \), \( { \cos }\theta_{2} \), and \( { \sin }\theta_{3} \). Furthermore, \( c_{23} \) and \( s_{23} \) indicate \( { \cos }\left( {\theta_{2} + \theta_{3} } \right) \) and \( { \sin }\left( {\theta_{2} + \theta_{3} } \right) \), respectively. Additional information for inverse kinematics is suggested in Eqs. (19)–(21).

\( pW_{x} \), \( pW_{y} \), and \( pW_{z} \) indicate the x-, y-, and z-coordinates of the end-effector position measured in frame 0.

The proposed algorithm was validated by applying it to hand trajectories predicted from noninvasive EEG signals. Predicted hand trajectories obtained in previous research [25] were used. The details of decoding process were introduced in the previous paper. The dataset contains 120 hand trajectories predicted from a healthy participant consisting of 4 directional reaching movements (30 trials per direction). Blending parameters \( \alpha \) and \( \beta \) affect the performance of the algorithm and were modulated from 0.05 to 1.00 in intervals of 0.05 for optimization.

Clinical application in participants with upper limb impairment

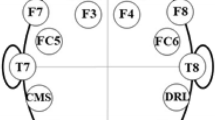

We established a clinical BMI training system consisting of an EEG acquisition system (Synamps 2, Compumedics Neuroscan, Texas, USA) and the vision-aided robotic system. The two systems are connected to a personal computer for a cooperated system. EEG is processed by Matlab, and the robotic arm is controlled by C# (Microsoft, Redmond, WA, USA) based SDK. Two volunteers with severe upper limb impairment due to cervical spinal cord injury participated in this study. The inclusion criteria for recruiting participants were: (1) severely disabled patient with unilateral or bilateral upper limb paralysis, (2) no significant cognitive impairment (MMSE > 26), and (3) able to fully understand the study and provide informed consent. Participant #1 is a 31-year-old male with American Spinal Injury Association Impairment Scale (AIS) C at level C4 (motor C7/C4 and sensory C5/C5), and participant #2 is a 47-year-old male with AIS B at level C4 (motor C4/C4 and sensory C5/C5). These patients are completely unable to control their own arms. The participants were given a total of 10 sessions for BMI training. The first 5 sessions were designed to help the participants get used to motor imagery using targets and virtual reality video files (Fig. 6a). In the next 5 sessions, developed BMI training system was utilized (Fig. 6b). In each session, the users were instructed to imagine robotic arm control to reach an instructed target out of two. Two types of training were applied to each session. As the first type, observation-based training was performed, and the parameters of the decoder were determined using multiple linear regressions between robotic arm motion and EEG signal. Since the decoder was not yet verified by patients with upper limb paralysis, blending parameters were set to 1, and the instructed targets were preprogrammed in the controller. After observation-based training of each session, shared control based robotic arm control was attempted with blending parameters α = β = 0.6. For each trial of shared control session, users were instructed to choose one target out of two, and success rate was measured for about 40 trials. fMRI while performing motor imagery tasks was taken before the 6th training session and after the 10th training session. Overall plan of clinical application is represented in Fig. 7. This clinical study was approved by the Institutional Review Board of Seoul National University Hospital (IRB No. 1605-136-765).

fMRI evaluation

Functional imaging consisted of motor imagery tasks in 3 directions: upper, lower-left, and lower-right. Block design was used in all tasks; during each task, participants were instructed to imagine reaching and grasping movements repeatedly in selected directions. For each task, 8 active blocks and 7 rest blocks (each 20 s) were interleaved. The fMRI images were acquired with a Siemens MAGNETOM Trio, A Tim Syngo scanner using echo planar imaging (EPI, TE = 30 ms, TR = 3000 ms), angulated in parallel to the anterior and posterior commissure line. A T1-weighted image was also obtained for anatomical reference. The fMRI data were preprocessed using Statistical Parametric Mapping 12 (SPM12, Wellcome Trust Centre for Neuroimaging, London, UK; http://www.fil.ion.ucl.ac.uk/spm/) executed in MATLAB 2015b (Mathworks Inc., Natick, MA).

Results

Accuracy of target object detection and position estimation

No failure in accurate target detection occurred. In all images, the appropriate number of targets were detected at each location. The same conditions with two target objects were photographed and the distance between the two targets was measured using both the self-calibrated Kinect and an optic tracker. The optic tracker was used as a gold standard because it had been validated as a highly accurate system. In the second approach, as represented in Table 2, the Kinect-based system exhibited a distance error of 0.0250 ± 0.0160 m compared with the optic tracker. This distance error is 4.620 ± 3.490% of the total distance between the two target objects, indicating that there is less than a 2-cm error when the robot conducts approximately 40 cm of reaching and grasping movements. When the size of the robot gripper and the diameter of the target are considered, deviation of 1–2 cm is tolerable.

Accuracy improvement of BMI system using artificial potential

This study proposes a shared control algorithm using artificial potential and validates it using hand trajectories predicted from noninvasive EEG neural signals. The algorithm was applied to 120 reaching trajectories in 4 directions. The target object positions were calculated using real hand trajectories, which were simultaneously measured with an accelerometer placed on the index finger. Applying this algorithm improved the predicted hand trajectories, and the shortest distance to the intended target object decreased, as shown in Fig. 8a. Additionally, we can confirm that the three joint angles of the robotic arm continuously reached to the ideally required joint angle to reach the target (Fig. 8b, c). Figure 9a shows the average improvement of the shortest distance to the target object as blending parameters differ. As blending parameters α and β increased, the degree of improvement increased the shortest distance to the target improved 57.37% when both blending parameters were 1.00. The patterns of improvement were similar for four each direction (Fig. 9b). Additionally, it was also confirm that the algorithm with low blending parameter α worsen the performance of the BMI system. The decreased error lower than 0 in Fig. 9 indicates the shortest distance to intended target object became farther rather than the improvement.

a Compensated trajectory (red) reaches more closely to the target object than the raw hand trajectory predicted from EEG. Blue line indicates trajectory with no compensation. b Definition of the joint angles of the robotic arm. c Green line indicates the ideal joint angle to reach the target object. Joint angles are closer to the ideal joint angles with compensation (red) than without (blue)

Whereas the implemented algorithm using artificial potential enabled the robot arm to more closely reach the intended target object, the shortest distance to nonintended target objects was not substantially affected by the algorithm. According to Fig. 10a, the average shortest distance to the nonintended targets decreased 4.07% when α = β = 1.00. The degree of improvement was affected by α rather than β. The condition α = β = 0.60 led to 5.84% improvement (Fig. 10b). In individual cases of each of the four directions, less than 15% improvement was observed. The decrease in the shortest distance to the nonintended target was significantly less than that of the shortest distance to the intended target. Thus, we confirmed that the algorithm enables the robot end-effector to selectively reach an intended target. The decrease in the shortest distances to the nonintended and intended targets were compared, as shown in Table 3. The p-value, calculated using a one-tailed two-sample t-test, implies that the distance error to the intended targets was significant less than to nonintended targets. Figure 11a, b show all the reaching trials before and after compensation, respectively (α = β = 0.60; 1 of the 120 total trials is not represented, as it is a serious outlier).

Effect of vision-aided BMI training in two clinical cases

Before the BMI training, both participants showed brain activation in multiple areas in both hemispheres in fMRI findings. Participant #1 demonstrated significant brain activation in the precentral gyrus (primary motor cortex), postcentral gyrus (primary sensory cortex), posterior parietal cortex (PPC), and lateral portion of the middle frontal gyrus and inferior frontal gyrus (prefrontal cortex) in the right hemisphere, which correspond to the left hand the participant was trying to move (Fig. 12a). The contralateral cerebellum was also significantly activated (Fig. 12a). After the training, brain activation was focused to the right precentral and postcentral gyri, PPC, and contralateral cerebellum (Fig. 12b). Participant #2 also demonstrated scattered brain activation across both hemispheres—especially in the occipital lobe—before real-time training; however, after training, the activated areas tended to focusing to the left precentral and postcentral gyri, PPC, and contralateral cerebellum, corresponding to the right hand the participant intended to move. However, in both cases, activation patterns did not differ between the three imagined reaching directions.

a fMRI image during reaching task (left arm) in participant #1 shows brain activation in various area including right primary motor and sensory cortex, posterior parietal cortex, prefrontal cortex, and left cerebellum (p < 0.001). b Brain activation is relatively focused to the right primary motor and sensory cortex, posterior parietal cortex, and left cerebellum after 5 sessions of BMI training with real-time visual feedback (p < 0.001)

Discussion

Vision-aided BMI training system

The vision-aided system consists of an anthropomorphic robotic arm, Kinect, and aluminum profiles for fixing the other components. The Kinect enables target object detection and position estimation. In 60 validation trials (20 each for 2, 3, and 4 target objects), there were no failures in target detection. The implemented target detection algorithm can automatically detect multiple targets; however, it is still limited in that target objects must be green and the performance can deteriorate for increasing numbers of target objects. Improved image processing approaches, such as a convolutional neural network [33, 34], or a histogram of oriented gradients [35, 36], could potentially be applied as appropriate alternatives that overcome this issue.

The position estimation error rate was about 4.62%, and occurred for two main reasons. First, the calibration approach is linear. For camera calibration and position estimation, we applied linear regression using the least-squares method. Even though this approach performed satisfactorily, it cannot fully explain the complicated relations between variables. Second, the implemented algorithm estimated the center of two target objects, whereas the optic tracker measured the distance between stickers, causing differences between the two results. Furthermore, small errors caused by the internal Kinect software cannot be excluded.

BMI system using artificial potential

Artificial potential, one of the main focuses of this study, was used to compensate for the joint movement of the robot arm and reach closer to the intended target. We found that the motion planning approach improved the shortest distance to the intended target up to 57.37%, whereas that to the nonintended targets showed almost no improvement. A t-test supported this observation statistically (p < 0.0001).

This result is meaningful because none of the information for determining the target object was preprogrammed. Although shared control based on high-level commands (a goal-oriented approach) frees the system from the burden of low-level and demanding high-speed interaction, it limits the robot arm to preprogrammed commands [37]. The suggested process-oriented approach provides low-level intervention in the robot arm movement and does not highly limit the paths by which the robot moves. The compensating information for following intended target was obtained from only raw hand velocities predicted from EEG and the positions of possible targets. Thus, the suggested algorithm maintains the volition of the user and improves robustness and accuracy.

Optimal blending parameters

When α = β = 0.60, the degree of improvement is sufficiently saturated, such that the shortest distance to the intended target decreased by 51.85%, which is approximately 90% of 57.37%. According to Table 4, the degree of improvement sufficiently saturated at the condition α = β = 0.60. In the case of Direction 4, the condition in which α = β = 0.60 (60.49%) exhibited greater improvement than the condition in which α = β = 1.00 (55.28%); therefore, we infer that blending parameters exceeding 0.60 are not highly effective.

Less blending parameter α provides less compensation and higher decoding power of user and decoder should be guaranteed. The blending parameter α can be modulated to sustain the success rate of instructed tasks and motivate the users [11]. Low blending parameter β implies that the robotic arm reflects the previous velocity vector value as high weight, and sudden unpredictable motions are sufficiently suppressed. The parameter β can be determined by considering the user safety and signal characteristic. When it is considered that user prefers BMI system with high level of user volition and safety, α = β = 0.60 can be provided as the optimal condition if initial decoder.

Advantages of novel BMI training system

The suggested training system preserves the high level of user volition for the robotic arm control. It gives the training system the following advantages. First, it is easy to set the appropriate chance level for active shared control. Since active shared control can reach a target even with random walk signal through an auxiliary control command, reaching the target point is not a measure of success. Rather, it is a more objective indicator of success to reach the intended target among various candidate objects. In this case, the chance level is calculated as in Eq. (22).

Second, it is easy to perform robust parameter training because the targets can be moved by using flexible cable as used in the current study. Trained model parameters for targets at various locations can avoid the issue of the decoding model being over-fitted to objects at specific locations. In addition, the reaching success rate of the target object at any arbitrary position can give a higher sense of trust to the reader of a paper or the viewer of a video.

Effect of vision-aided BMI training in clinical application

In the first phase of training (first 5 training sessions), the participants were given a VR video of robotic motion for motor imagery of reaching motion, as the purpose of this phase was to accustom them to the imagery task. Users were instructed to assume that the robotic arm was their arm, as the robotic arm was positioned nearest to their paralyzed arm. However, even after 5 sessions of imagery training, fMRI revealed that brain activation areas were scattered across motor planning and execution areas in both hemispheres. This result suggested a less characterized pattern of brain activity, although the most significantly active areas were consistent with previous studies reporting areas activated during motor imagery and BMI learning: the ipsilateral premotor cortex, supplementary motor area, primary motor cortex, PPC, insula, and contralateral cerebellum [38, 39].

After 5 additional training sessions with the observation-based training provided, brain activation patterns tended to focused to the primary motor and sensory areas, PPC, and contralateral cerebellum. Wander et al. [40] also demonstrated that cortical adaptation occurs during BMI learning, during which activation in the prefrontal cortex, premotor cortex, and PPC tend to decrease as users switch from cognitive to automatic phases. However, in our study, whereas prefrontal and premotor cortex activity decreased significantly, PPC activity was relatively sustained. This difference may arise from the fact that the participants were still in the training phase of external robot control, which may require PPC and cerebellar activity for planning movements and BMI learning [41], even if imagining the reaching movement itself may have entered the autonomic phase, requiring less prefrontal and premotor activity. This result suggests that reorganization and plasticity of the brain play an essential role in BMI training, in addition to algorithm-based training of the system, such as machine learning methods. Cortical reorganization during BMI training for reaching and grasping or neurofeedback in rehabilitation has also been shown in other studies [42, 43]. Because the gross activation pattern significantly changes with repeated training, machine learning parameters should be updated with each training session.

Limitations

Using the proposed shared control strategy for the robot end-effector to reach the intended target, the success rate of reaching the instructed target did not exceed the chance level significantly. It is possible that, as we could not confirm the difference in brain activation according to the direction of motion imagery in fMRI, it may be necessary to observe the activation with electrodes of a higher spatial resolution in the area where activation is focused. At least, it is almost certain that EEG cannot distinguish the pattern inside the active area of the fMRI image in Fig. 12b. So, as the future study, more invasive electrodes with finer spatial resolution can be considered. Electrodes for electrocorticography, which is less invasive (intermediate type of invasive and non-invasive electrodes) is expected to provide improved results.

Conclusions

This study presents a BMI training system that automatically detects and estimates the position of possible target objects. Further, we applied an artificial-potential-based algorithm to predicted hand velocity, and it enabled a robot arm to selectively reach for an intended target. The developed training system was applied to two potential users with cervical spinal cord injury, and provided observation-based training. The pilot clinical study utilizing the training system suggested potential beneficial effects in characterizing the brain activation patterns. The suggested training method may help adaptation and optimization of the brain activity, to eventually utilize the BMI practically as an assistive technology. However, the shared control was not successful because of low decoding power. When the size of focused activation area is considered, finer electrode with higher spatial resolution is required. The suggested training method may help adaptation and optimization of the brain activity.

Abbreviations

- BMI:

-

brain–machine interface

- DOF:

-

degree of freedom

- MEA:

-

microelectrode array

- EEG:

-

electroencephalography

- fMRI:

-

functional magnetic resonance image

- AIS:

-

American Spinal Injury Association Impairment Scale

- PPC:

-

posterior parietal cortex

- ADL:

-

activities of daily living

References

Koo B, Leonessa A. An adaptive block backstepping control design for functional electrical stimulation of agonist-antagonist muscles. In: ASME 2011 dynamic systems and control conference and bath/ASME Symposium on fluid power and motion control. 2011. p. 479–86.

Pang M, Guo S, Huang Q, Ishihara H, Hirata H. Electromyography-based quantitative representation method for upper-limb elbow joint angle in sagittal plane. J Med Biol Eng. 2015;35:165–77.

Vidal JJ. Toward direct brain–computer communication. Ann Rev Biophys Bioeng. 1973;2:157–80.

Vidal JJ. Real-time detection of brain events in EEG. Proc IEEE. 1977;65:633–41.

Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain–computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–91.

Donoghue JP. Connecting cortex to machines: recent advances in brain interfaces. Nat Neurosci. 2002;5:1085–8.

Schwartz AB. Cortical neural prosthetics. Annu Rev Neurosci. 2004;27:487–507.

Lebedev MA, Nicolelis MA. Brain–machine interfaces: past, present and future. Trends Neurosci. 2006;29:536–46.

Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–101.

Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–5.

Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJ, Velliste M, Boninger ML, Schwartz AB. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–64.

Pathirage I, Khokar K, Klay E, Alqasemi R, Dubey R. A vision-based P300 brain computer interface for grasping using a wheelchair-mounted robotic arm. In: Proceedings of the 2013 IEEE/ASME international conference on advanced intelligent mechatronics. 2013. p. 188–93.

Mohammed S, Moreno JC, Kong K, Amirat Y. Intelligent assistive robots. In: Brain–machine interfaces for assistive robotics. Berlin: Springer; 2015.

Palankar M, Laurentis KJD, Alqasemi R, Veras E, Dybey R. Control of a 9-DOF wheelchair-mounted robotic arm system using a P300 brain computer interface: initial experiments. In: Proceedings of the 2008 IEEE international conference on robotics and biomimetics. 2009. p. 348–53.

Blasco JLS, Ianez E, Ubeda A, Azorin JM. Visual evoked potential-based brain–machine interface applications to assist disabled people. Expert Syst Appl. 2012;39:7908–18.

Valbuena D, Cyriacks M, Friman O, Volosyak I, Graser A. Brain–computer interface for high-level control of rehabilitation robotic systems. In: Proceedings of the 2007 IEEE 10th international conference on rehabilitation robotics. 2007. p. 619–25.

Bakardjian H, Tanaka T, Cichocki A. Brain control of robotic arm using affective steady-state visual evoked potentials. In: Proceedings of the 5th IASTED international conference on human–computer interaction. 2010. p. 264–70.

Ortner R, Allison BZ, Korisek G, Gaggl H, Pfurtscheller G. An SSVEP BCI to control a hand orthosis for persons with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011;19:1–5.

Sakurada T, Kawase T, Takano K, Komatsu T, Kansaku K. A BMI-based occupational therapy assist suit: asynchronous control by SSVEP. Front Neurosci. 2013;7:1–10.

Hortal E, Planelles D, Costa A, Ianez E, Ubeda A, Azorin JM, Fernandez E. SVM-based brain–machine interface for controlling a robot arm through four mental tasks. Neurocomputing. 2015;151:116–21.

Ubeda A, Ianez E, Badesa J, Morales R, Azorin JM, Garcia N. Control strategies of an assistive robot using a brain–machine interface. In: 2012 IEEE/RSJ international conference on intelligent robotics and systems. 2012. p. 3553–8.

Meng J, Zhang S, Bekyo A, Olsoe J, Baxter B, He B. Noninvasive electroencephalogram based control of a robotic arm for reach and grasp task. Sci Rep. 2016;6:1–15.

Onose G, Grozea C, Anghelescu A, Daia C, Sinescu CJ, Ciurea AV, Spircu T, Mirea A, Andone I, Spanu A, Popescu C, Mihaescu A-S, Fazli S, Danoczy M, Popescu F. On the feasibility of using motor imagery EEG-based brain–computer interface in chronic tetraplegics for assistive robotic arm control: a clinical test and long-term post-trial follow up. Spinal Cord. 2012;50:599–608.

Bhattacharyya S, Konar A, Tibarewala DN. Motor imagery, P300 and error-related EEG-based robot arm movement control for rehabilitation purpose. Med Biol Eng Comput. 2014;52:1007–17.

Kim YJ, Park SW, Yeom HG, Bang MS, Kim JS, Chung CK, Kim S. A study on a robot arm driven by three-dimensional trajectories predicted from non-invasive neural signals. Biomed Eng Online. 2015;14:1.

Kim HK, Biggs J, Schloerb W, Carmena M, Lebedev MA, Nicolelis MA, Srinivasan MA. Continuous shared control for stabilizing reaching and grasping with brain–machine interfaces. IEEE Trans Biomed Eng. 2006;53:1164–73.

Downey JE, Weiss JM, Muelling K, Venkatraman A, Valois J-S, Hebert M, Bagnell JA, Schwartz AB, Collinger JL. Blending of brain–machine interface and vision-guided autonomous robotics improves neuroprosthetic arm performance during grasping. J Neuroeng Rehabil. 2016;13:1.

McMullen DP, Hotson G, Katyal KD, Wester BA, Fifer MS, McGee TG, Harris A, Johannes MS, Vogelstein RJ, Ravitz AD. Demonstration of a semi-autonomous hybrid brain–machine interface using human intracranial EEG, eye tracking, and computer vision to control a robotic upper limb prosthetic. IEEE Trans Neural Syst Rehabil Eng. 2014;22:784–96.

Katyal KD, Johannes MS, Kellis S, Aflalo T, Klaes C, McGee TG, Para MP, Shi Y, Lee B, Pejsa K. A collaborative BCI approach to autonomous control of a prosthetic limb system. In: Proceedings of the 2014 IEEE international conference on systems, man, and cybernetics. 2014. p. 1479–82.

Clanton ST. Brain–computer interface control of an anthropomorphic robotic arm. PhD Thesis, Robotics Institute, Carnegie Mellon University, 2011.

Lopes A, Rodrigues J, Perdigao J, Pires G, Nunes U. A new hybrid motion planner: applied in a brain-actuated robotic wheelchair. IEEE Robot Autom Mag. 2016;23:82–93.

Siciliano B, Sciavicco L, Villani L, Oriolo G. Robotics: modelling, planning and control. Berlin: Springer Science & Business Media; 2010.

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–44.

Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural network. In: Advances in neural information processing systems. 2012. p. 1097–1105.

Dalal N, Triggs B. Histograms of oriented gradients for human detection. In: Proceedings of the 2005 IEEE computer society conference on computer vision and pattern recognition (CVPR’05). 2005. p. 886–93.

Zhu Q, Yeh MC, Cheng KT, Avidan S. Fast human detection using a cascade of histograms of oriented gradients. In: Proceedings of the 2006 IEEE computer society conference on computer vision and pattern recognition. 2006. p. 1491–8.

Allison BZ, Leeb R, Brunner C, Muller-Putz GR, Bauernfeind G, Kelly JW, Neuper C. Toward smarter BCIs: extending BCIs through hybridization and intelligent control. J Neural Eng. 2012;9:1–7.

Marchesotti S, Martuzzi R, Schurger A, Blefari ML, Del Millán JR, Bleuler H, Blanke O. Cortical and subcortical mechanisms of brain–machine interfaces. In: Human brain mapping. https://doi.org/10.1002/hbm.23566. (In Press).

Hiremath SV, Chen W, Wang W, Foldes S, Yang Y, Tyler-Kabara EC, Collinger JL, Boninger ML. Brain computer interface learning for systems based on electrocorticography and intracortical microelectrode arrays. Front Integr Neurosci. 2015;9:40. https://doi.org/10.3389/fnint.2015.00040.

Wander JD, Blakely T, Miller KJ, Weaver KE, Johnson LA, Olson JD, Fetz EE, Rao RP, Ojemann JG. Distributed cortical adaptation during learning of a brain–computer interface task. Proc Natl Acad Sci USA. 2013;110(26):10818–23.

Baldauf D, Cui H, Andersen RA. The posterior parietal cortex encodes in parallel both goals for double-reach sequences. J Neurosci. 2008;28(40):10081–9.

Carmena JM, Lebedev MA, Crist RE, O’Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS, Nicolelis MA. Learning to control a brain–machine interface for reaching and grasping by primates. PLoS Biol. 2003;1(2):e42.

Ushiba J, Soekadar SR. Brain–machine interfaces for rehabilitation of poststroke hemiplegia. Prog Brain Res. 2016;228:163–83.

Authors’ contributions

YJK implemented the training system, including the robotic arm and Kinect. HSN (co-first author) participated in all experiments as a clinician, including recruiting potential users and analyzing fMRI data. WHL, HGS, JHL, and BMO participated in the experiments partially and advised on methods for satisfying the requirements of potential users. MSB and SK (co-corresponding authors) suggested ideas and motivations for this study and supervised all details of the research. All authors read and approved the final manuscript.

Acknowledgements

The authors would like to thank the participants for participating in these experiments.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

Data will not be shared at this stage, publicly. Further studies on these data are ongoing. The datasets analyzed during the current study are only available from the corresponding author on reasonable request.

Consent for publication

All participants signed an informed consent to publish their data and images.

Ethics approval and consent to participate

All participants provided informed consent and were reimbursed for their participation on completion of the experiment. This study was reviewed and approved by the Institutional Review Board (IRB) of Seoul National University Hospital (IRB No. 1605-136-765).

Funding

This study was supported by the grant (NRCTR-EX-16008) from the Translational Research Center for Rehabilitation Robots, Korea National Rehabilitation Center, Ministry of Health & Welfare, Korea, by the Brain Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (2016M3C7A1904984), and by the NRF of Korea grant funded by the Korea government (MSIP) (Grant 2017R1A2B2006163).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Kim, Y.J., Nam, H.S., Lee, W.H. et al. Vision-aided brain–machine interface training system for robotic arm control and clinical application on two patients with cervical spinal cord injury. BioMed Eng OnLine 18, 14 (2019). https://doi.org/10.1186/s12938-019-0633-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12938-019-0633-6