Abstract

Background

The increase in the number of predatory journals puts scholarly communication at risk. In order to guard against publication in predatory journals, authors may use checklists to help detect predatory journals. We believe there are a large number of such checklists yet it is uncertain whether these checklists contain similar content. We conducted a systematic review to identify checklists that help to detect potential predatory journals and examined and compared their content and measurement properties.

Methods

We searched MEDLINE, Embase, PsycINFO, ERIC, Web of Science, and Library, Information Science & Technology Abstracts (January 2012 to November 2018); university library websites (January 2019); and YouTube (January 2019). We identified sources with original checklists used to detect potential predatory journals published in English, French or Portuguese. Checklists were defined as having instructions in point form, bullet form, tabular format or listed items. We excluded checklists or guidance on recognizing “legitimate” or “trustworthy” journals. To assess risk of bias, we adapted five questions from A Checklist for Checklists tool a priori as no formal assessment tool exists for the type of review conducted.

Results

Of 1528 records screened, 93 met our inclusion criteria. The majority of included checklists to identify predatory journals were in English (n = 90, 97%), could be completed in fewer than five minutes (n = 68, 73%), included a mean of 11 items (range = 3 to 64) which were not weighted (n = 91, 98%), did not include qualitative guidance (n = 78, 84%), or quantitative guidance (n = 91, 98%), were not evidence-based (n = 90, 97%) and covered a mean of four of six thematic categories. Only three met our criteria for being evidence-based, i.e. scored three or more “yes” answers (low risk of bias) on the risk of bias tool.

Conclusion

There is a plethora of published checklists that may overwhelm authors looking to efficiently guard against publishing in predatory journals. The continued development of such checklists may be confusing and of limited benefit. The similarity in checklists could lead to the creation of one evidence-based tool serving authors from all disciplines.

Background

The influx of predatory publishing along with the substantial increase in the number of predatory journals poses a risk to scholarly communication [1, 2]. Predatory journals often lack an appropriate peer-review process and frequently are not indexed [3], yet authors are required to pay an article processing charge. The lack of quality control, the inability to effectively disseminate research and the lack of transparency compromise the trustworthiness of articles published in these journals. Until recently, no agreed-upon definition of predatory journals existed. However, through a consensus process [4], an international group of researchers, journal editors, funders, policy makers, representatives of academic institutions, and patient partners developed a definition of predatory journals and publishers. The group recognized that identifying predatory journals and publishers was nuanced; not all predatory journals meet all ‘predatory criteria’ nor do they meet each criterion at the same level. Thus, in defining predatory journals and publishers, the group identified four main characteristics that could characterize journals or publishers as predatory: “Predatory journals and publishers are entities that prioritize self-interest at the expense of scholarship and are characterized by false or misleading information, deviation from best editorial/publication practices, lack of transparency, and/or use of aggressive and indiscriminate solicitation practices.” [4]. Lists of suspected predatory journals and publishers are also available, although different criteria for inclusion are used [5].

Various groups have developed checklists to help prospective authors and/or editors identify potential predatory journals; these are different from efforts, such as “Think. Check. Submit.”, to identify legitimate journals. Anecdotally, we have recently noticed a steep rise in the number of checklists developed specifically to identify predatory journals, although to our knowledge this has not been quantified previously. Further, we are unaware of any research looking at the uptake of these checklists. On the one hand, the development of these checklists, which are practical tools to help detect potential predatory journals, may lead to a substantial decrease in submissions to these journals. On the other hand, large numbers of checklists with varying content may confuse authors, and possibly make it more difficult for them to choose any one checklist, if any at all, as suggested by the choice overload hypothesis [6]. That is, the abundance of conflicting information could result in users not consulting any checklists. Additionally, the discrepancies between checklists could impact the credibility of each one. Thus, these efforts to reduce the number of submissions to predatory journals could be lost. Therefore, we performed a systematic review of peer-reviewed and gray literature that includes checklists to help detect potential predatory journals in order to identify the number of published checklists and to examine and compare their content and measurement properties.

Methods

We followed standard procedures for systematic reviews and reported results according to Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines [7]. The project protocol was publicly posted prior to data extraction on the Open Science Framework (http://osf.io/g57tf).

Data sources and searches

An experienced medical information specialist (BS) developed and tested the search strategy using an iterative process in consultation with the review team. The strategy was peer reviewed by another senior information specialist prior to execution using the Peer Review of Electronic Search Strategies (PRESS) Checklist [8] (see Additional file 1).

We searched multiple databases with no language restrictions. Using the OVID platform, we searched Ovid MEDLINE® ALL (including in-process and epub-ahead-of-print records), Embase Classic + Embase, PsycINFO and ERIC. We also searched Web of Science and the Library, Information Science and Technology Abstracts (LISTA) database (Ebsco platform). The LISTA search was performed on November 16, 2018 and the Ovid and Web of Science searches were performed on November 19, 2018. Retrieval was limited to the publication dates 2012 to the present. We used 2012 as a cut-off since data about predatory journals were first collected in 2010, [9] and became part of public discourse in 2012 [10]. The search strategy for the Ovid databases is included in Additional file 2.

In order to be extensive in our search for checklists that identify potential predatory journals, we identified and then searched two relevant sources of gray literature, based on our shared experiences in this field of research: university library websites and YouTube. Neither search was restricted by language. We used the Shanghai Academic Rankings of World Universities (http://www.shanghairanking.com/ARWU-Statistics-2018.html) to identify university library websites of the top 10 universities in each of the four world regions (Americas, Europe, Asia / Oceania, Africa). We chose this website because it easily split the world into four regions and we saw this as an equitable way to identify institutions and their libraries. As our author group is based in Canada, we wanted to highlight the universities in our region and therefore identified the library websites of Canada’s most research-intensive universities (U15) (search date January 18, 2019) and searched their library websites. We also searched YouTube for videos that contained checklists (search date January 6, 2019). We limited our YouTube search to the top 50 results filtered by “relevance” and used a private browser window. Detailed methods of these searches are available on the Open Science Framework (http://osf.io/g57tf).

Eligibility criteria

Inclusion criteria

Our search for studies was not restricted by language; however, for reasons of feasibility, we included only studies and/or original checklists developed or published in English, French or Portuguese (languages spoken by our research team). We defined a checklist as a tool whose purpose is to detect a potential predatory journal and whose instructions are in point form, bullet form, tabular format or listed items. To qualify as an original checklist, the items had to have been identified and/or developed by the study authors or include a novel combination of items from multiple sources, or an adaptation of another checklist plus items added by the study authors. We included studies that discussed the development of an original checklist. When a study referenced a checklist, but did not describe the development of the checklist, we searched for the paper that discussed the development of the original checklist and included that paper.

Exclusion criteria

Checklists were not considered original if items were hand-picked from one other source; for example, if authors identified the five most salient points from an already existing checklist.

We did not include lists or guidance on recognizing a “legitimate” or “trustworthy” journal. We stipulated this exclusion criterion since our focus was on tools that specifically identify predatory journals, not tools that help to recognize legitimate journals.

Study selection

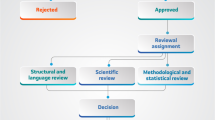

Following de-duplication of the identified titles, we screened records using the online systematic review software program Distiller Systematic Review (DSR) (Evidence Partners Inc., Ottawa, Canada). For each stage of screening, data extraction and risk of bias assessment, we pilot tested a 10% sample of records among five to six reviewers. Screening was performed in two stages: Stage 1: title and abstract; Stage 2: full-text screening (see Fig. 1). Both stages were completed by two reviewers independently and in duplicate. At both stages, discrepancies were resolved either through consensus or third party adjudication.

Data extraction and risk of bias assessment

For each eligible study, two reviewers independently extracted relevant data into DSR and a third reviewer resolved any conflicts. The extracted data items were as follows: 1- checklist name, 2- number of items in the checklist, 3- whether the items were weighted, 4- the number of thematic categories covered by the checklist (six-item list developed by Cobey et al. [3]), 5- publication details (name of publication, author and date of publication), 6- approximate time to complete checklist (reviewers used a timer to emulate the process that a user would go through to use the checklist and recorded the time as 0–5 min, 6–10 min, or more than 10 min), 7- language of the checklist, 8- whether the checklist was translated and into what language(s), 9- methods used to develop the checklist (details on data collection, if any), 10- whether there was qualitative guidance (instructions on how to use the checklist and what to do with the results) and/or 11- quantitative guidance (instructions on summing the results or quantitatively assessing the results to inform a decision). The list of extracted data items can be found on the Open Science Framework (https://osf.io/na756/).

In assessing checklists identified via YouTube, we extracted only data items that were presented visually. Any item or explanation that was delivered by audio only was not included in our assessment. We used the visual presentation of the item to be a sign that the item was formally included in the checklist. For example, if presenters only talked about a checklist item but did not have it on a slide in the video or in a format that could be seen by those watching the video, we did not extract this data.

To assess risk of bias, we developed an a priori list of five questions for the purpose of this review, adapted from A Checklist for Checklists tool [11], and principles of internal and external validity [12]. The creation of a novel tool to assess risk of bias was necessary since there is no appropriate formal assessment tool that exists for the type of review we conducted. Our author group looked over the three areas identified in the Checklist for Checklists tool (Development, Drafting and Validation). Based on extensive experience working with reporting guidelines (DM), which are checklists, we chose a feasible number of items from each of the three categories to be used in our novel tool. We pilot tested the items among our author group to ensure that all categories were captured adequately, and that the tool could be used feasibly.

We used the results of this assessment to determine whether the checklist was evidence-based. We assigned each of the five criteria (listed below) a judgment of “yes” (i.e. low risk of bias), “no” (i.e. high risk of bias) or “cannot tell” (i.e. unclear risk of bias) (see coding manual with instructions for assessment to determine risk of bias ratings: https://osf.io/sp4vx/). If the checklist scored three or more “yes” answers on the questions below, assigning the checklist an overall low risk of bias, we considered it evidence-based. We made this determination based on the notion that a low risk of bias indicates that there is a low risk of systematic error across results. Two reviewers independently assessed data quality in DSR and discrepancies were resolved through discussion. A third reviewer was called to resolve any remaining conflicts.

The five criteria, adapted from the Checklist for Checklists tool [11], used to assess risk of bias in this review were as follows:

1. Did the developers of the checklist represent more than one stakeholder group (e.g. researchers, academic librarians, publishers)?

2. Did the developers report gathering any data for the creation of the checklist (i.e. conduct a study on potential predatory journals, carry out a systematic review, collect anecdotal data)?

3. Does the checklist meet at least one of the following criteria: 1- Has title that reflects its objectives; 2- Fits on one page; 3- Each item on the checklist is one sentence?

4. Was the checklist pilot-tested or trialed with front-line users (e.g. researchers, students, academic librarians)?

5. Did the authors report how many criteria in the checklist a journal must meet in order to be considered predatory?
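The decision rule described above (three or more “yes” answers out of the five criteria) can be sketched in a few lines of Python. This is only an illustration of the rule as stated in the text, not the software used in the review; the function name `is_evidence_based` and the example judgments are hypothetical.

```python
from collections import Counter

# Judgments allowed by the review's coding scheme.
VALID_JUDGMENTS = {"yes", "no", "cannot tell"}
# Threshold stated in the text: three or more "yes" answers.
MIN_YES_FOR_EVIDENCE_BASED = 3
NUMBER_OF_CRITERIA = 5


def is_evidence_based(judgments):
    """Return True if a checklist has at least three 'yes' judgments.

    `judgments` is a list of five strings, one per criterion
    (hypothetical input format, chosen for illustration).
    """
    if len(judgments) != NUMBER_OF_CRITERIA:
        raise ValueError("expected one judgment per criterion (five in total)")
    unknown = set(judgments) - VALID_JUDGMENTS
    if unknown:
        raise ValueError(f"unexpected judgment(s): {sorted(unknown)}")
    return Counter(judgments)["yes"] >= MIN_YES_FOR_EVIDENCE_BASED


# Made-up example: three "yes" answers meet the threshold.
example = ["cannot tell", "yes", "yes", "no", "yes"]
print(is_evidence_based(example))
```

Note that under this rule “cannot tell” and “no” are treated alike: neither counts toward the threshold.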

In assessing websites, we used a “two-click rule” to locate information. Once on the checklist website, if we did not find the information within two mouse clicks, we concluded no information was available.

Data synthesis and analysis

We examined the checklists qualitatively and conducted qualitative comparisons of the items. We compared the items in the included checklists to gauge their agreement on content by item and overall. We summarized the checklists in table format to facilitate inspection and discussion of findings. Frequencies and percentages were used to present characteristics of the checklists. We used the list developed by Shamseer et al. [13] as the reference checklist and compared our results to this list. We chose this as the reference list because of the rigorous empirical data generated by authors to ascertain characteristics of potential predatory journals.

Results

Deviations from our protocol

We refined our definition of an original checklist to exclude checklists that were composed of items taken solely from another checklist. Checklists made up of items taken from more than one source were considered original even when the developers did not create the checklist items themselves. For reasons of feasibility, we did not search the reference lists in these checklists to identify further potentially relevant studies.

To screen the titles and abstracts, we had anticipated using the liberal accelerated method where only one reviewer is required to include citations for further assessment at full-text screening and two reviewers are needed to exclude a citation [14]. Instead, we used the traditional screening approach: we had two reviewers screen records independently and in duplicate. We changed our screening methods because it became feasible to use the traditional screening approach, which also reduced the required number of full-text articles to be ordered.
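The difference between the two screening approaches can be made concrete with a small Python sketch. It encodes only the decision rules as described in the paragraph above; the function names and the outcome labels are hypothetical, and the sketch ignores workflow details such as the order in which reviewers see records.

```python
def liberal_accelerated_decision(reviewer_a_includes, reviewer_b_includes):
    """One include vote is enough to advance; exclusion needs both reviewers."""
    if reviewer_a_includes or reviewer_b_includes:
        return "advance to full text"
    return "exclude"


def traditional_decision(reviewer_a_includes, reviewer_b_includes):
    """Both reviewers screen independently; disagreements go to resolution."""
    if reviewer_a_includes and reviewer_b_includes:
        return "advance to full text"
    if not reviewer_a_includes and not reviewer_b_includes:
        return "exclude"
    return "resolve by consensus or third party"


# A record on which the two reviewers disagree is handled differently.
print(liberal_accelerated_decision(True, False))
print(traditional_decision(True, False))
```

Under the liberal accelerated rule every disputed record advances to full-text screening, whereas the traditional approach lets some disputed records be excluded after resolution, which is consistent with the reduction in full-text articles noted above.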

After completing data collection, we recognized that checklists were being published in discipline-specific journals within biomedicine. We wanted to determine what disciplines were represented and in what proportion. We conducted a scan of the journals and used an evolving list of disciplines to assign to the list of journals, i.e. we added disciplines to the evolving list as we came across them.

Study selection

Following the screening of 1529 records, we identified 93 original checklists to be included in our study (see full details in Fig. 1).

Checklist characteristics

We identified 53 checklists through our search of electronic databases. The number of checklists identified increased over time: one each in 2012 [10] and 2013 [15], rising to 16 in 2017 [13, 16,17,18,19,20,21,22,23,24,25,26,27,28,29,30] and 12 in 2018 [31,32,33,34,35,36,37,38,39,40,41,42]. We identified 30 original checklists [1, 43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71] from university library websites. More checklists were published in more recent years (2017 = 4 [45,46,47,48]; 2018 = 7 [49,50,51,52,53,54,55]; 2019 = 11 [56,57,58,59,60,61,62,63,64,65,66]; five checklists listed no publication date). We identified 10 more checklists from YouTube [72,73,74,75,76,77,78,79,80,81] that included one uploaded in 2015 [72], six in 2017 [73,74,75,76,77,78] and three in 2018 [79,80,81]. See Table 1 for full checklist characteristics.

Language and translation

Almost all checklists were published in English (n = 90, 97%), and the remaining checklists in French (n = 3, 3%) [49, 52, 61]. Two additional English checklists identified through university library websites were translated into French [55, 67] and one was translated into Hebrew [69].

Approximate time for user to complete checklist, number of items per checklist and weighting

Most checklists could be completed within five minutes (n = 68, 73%); 17 checklists (18%) could be completed in six to 10 min [16, 17, 19, 21, 32, 34, 39,40,41,42, 53,54,55, 62, 72, 82, 103] and eight checklists (9%) took more than 10 min to complete [10, 20, 46, 49, 66, 76, 85, 96]. Checklists contained a mean of 11 items each, and a range of between three and 64 items. Items were weighted in two checklists [55, 96].
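As a consistency check on the figures above, the following Python sketch bins a timed completion into the three categories used during data extraction and recomputes the reported percentages from the counts. The counts are taken from the text; the function names are hypothetical, and the treatment of fractional minutes between five and six is an assumption, since the text specifies only whole-minute ranges.

```python
def time_category(minutes):
    """Map a timed completion (in minutes) to one of the three recorded bins.

    Assumption: values above 5 and up to 10 minutes fall in the middle bin.
    """
    if minutes < 0:
        raise ValueError("time cannot be negative")
    if minutes <= 5:
        return "0-5 min"
    if minutes <= 10:
        return "6-10 min"
    return "more than 10 min"


def percent(count, total):
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / total)


# Counts reported in the text.
reported_counts = {"0-5 min": 68, "6-10 min": 17, "more than 10 min": 8}
total = sum(reported_counts.values())

summary = {
    category: (count, percent(count, total))
    for category, count in reported_counts.items()
}
for category, (count, pct) in summary.items():
    print(f"{category}: n = {count} ({pct}%)")
```

The three counts sum to 93, and the recomputed percentages (73%, 18% and 9%) agree with those reported.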

Qualitative and quantitative guidance

Qualitative guidance on how to use the results of checklists was provided on 15 checklists (16%) [21, 31, 38, 47, 50, 51, 55, 59, 65, 67, 68, 82, 96, 100, 101], and quantitative guidance was provided on two checklists [55, 96], i.e. prescribing a set number of criteria that would identify the journal or publisher as predatory.

Methods used to develop checklists

In order to develop the checklists, authors noted using analysis by specialists [46], information from already existing checklists [85, 91, 93], using existing literature on predatory journals to pick the most salient features to create a new checklist [31, 42, 100], developing checklists after empirical study [13, 27, 39, 96] or after personal experiences [15].

Risk of bias assessment

Among all 93 checklists, there were three (3%) assessed as evidence-based [27, 96, 100] (see Table 2 for detailed risk of bias assessment results including whether a checklist was determined to be evidence-based, i.e. rated as low risk of bias for at least three of the criteria).

Results for risk of bias criteria

Criterion #1: representation of more than one stakeholder group in checklist development

For the majority of checklists (n = 88, 94%), it was unclear whether there was representation of more than one stakeholder group in the checklist development process (unclear risk of bias). The remaining five checklists reported the inclusion of more than one stakeholder group (low risk of bias) [22, 46, 55, 59, 100].

Criterion #2: authors reported gathering data to inform checklist development

In most studies (n = 55, 59%) there was no mention of data gathering for checklist development (unclear risk of bias); in 26 cases (28%), one or two citations were noted next to checklist items, with no other explanation of item development or relevance (high risk of bias) [18, 19, 22,23,24, 30, 32, 33, 35, 36, 40,41,42, 45, 53, 83,84,85, 87, 88, 90, 92, 94, 97, 99, 104]. Twelve records (13%) included a description of authors gathering data to develop a checklist for this criterion (low risk of bias) [13, 15, 26, 31, 37,38,39, 43, 50, 95, 96, 100].

Criterion #3: at least one of the following: title that reflected checklist objective; checklist fits on one page; items were one sentence long

Most checklists were assessed as low risk of bias on this criterion, with 81 of the checklists (87%) meeting at least one of the noted criteria (relevant title, fits on one page, items one sentence long).

Criterion #4: authors reported pilot testing the checklist

In the majority of studies (n = 91, 98%), authors did not report pilot testing during the checklist development stages (unclear risk of bias).

Criterion #5: checklist instructions included a threshold number of criteria to be met in order to be considered predatory

The majority of studies (n = 90, 97%) did not include a threshold number of criteria to be met in order for the journal or publisher to be considered predatory (high risk of bias).

Assessment of the thematic content of the included checklists

We found checklists covered the six thematic categories, as identified by Cobey et al., [3] as follows (see Table 3 for thematic categories and descriptions of categories):

Journal operations: 85 checklists (91%) assessed information on the journal’s operations.

Assessment of previously published articles: 40 checklists (43%) included questions on the quality of articles published in the journal in question.

Editorial and peer review process: 77 checklists (83%) included questions on the editorial and peer review process.

Communication: 71 checklists (76%) included an assessment of the manners in which communication is set up between the journal / publisher and the author.

Article processing charges: 61 checklists (66%) included an assessment of information on article processing charges.

Dissemination, indexing and archiving: 62 checklists (67%) included suggested ways in which submitting authors should check for information on dissemination, indexing and archiving procedures of the journal and publisher.

Across all 93 checklists, a mean of four out of the six thematic categories was covered, demonstrating similar themes covered by all checklists. Twenty percent of checklists (n = 19), including the reference checklist, covered all six categories [10, 13, 16, 19, 20, 26, 32, 34, 40, 42, 46, 53, 55, 62, 66, 67, 76, 85, 103]. Assessment of previously published articles was the category least often included in a checklist (n = 40, 43%), and a mention of the journal operations was the category most often included in a checklist (n = 85, 91%).
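The mean number of categories covered can be cross-checked from the per-category counts listed above, because the total number of (checklist, category) pairs divided by the number of checklists equals the mean number of categories per checklist. The Python sketch below performs that arithmetic; it is a reader-side check using the counts from the text, not the authors' analysis code, and the variable names are illustrative.

```python
TOTAL_CHECKLISTS = 93

# Number of checklists covering each thematic category, as reported above.
category_counts = {
    "Journal operations": 85,
    "Assessment of previously published articles": 40,
    "Editorial and peer review process": 77,
    "Communication": 71,
    "Article processing charges": 61,
    "Dissemination, indexing and archiving": 62,
}

# Percentage of checklists covering each category, rounded to whole numbers.
percentages = {
    name: round(100 * count / TOTAL_CHECKLISTS)
    for name, count in category_counts.items()
}

# Sum of per-category counts = total (checklist, category) pairs.
mean_categories = sum(category_counts.values()) / TOTAL_CHECKLISTS

most_covered = max(category_counts, key=category_counts.get)
least_covered = min(category_counts, key=category_counts.get)

print(percentages)
print(f"mean categories per checklist: {mean_categories:.2f}")
print(f"most covered: {most_covered}; least covered: {least_covered}")
```

The counts sum to 396, giving 396 / 93 ≈ 4.26 categories per checklist, in line with the reported mean of four out of six.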

Discipline-specific journals

Of the checklists published in academic journals, 10 (22%) were published in nursing journals [25, 31, 35, 38, 41, 87, 89, 91,92,93], eight (18%) were published in journals related to general medicine [13, 16, 20, 22, 23, 34, 94, 95], four (9%) in emergency medicine journals [29, 36, 90, 100], four (9%) in information science journals [19, 30, 40, 82], four (9%) in psychiatry and behavioral science journals [18, 24, 83, 86]. The remaining checklists were published in a variety of other discipline-specific journals, within the field of biomedicine, with three or fewer checklists per discipline (e.g. specialty medicine, pediatric medicine, general medicine and surgery, medical education, and dentistry).

Discussion

Many authors have developed checklists specifically designed to identify predatory journals; the number of checklists developed has increased since 2012, with the majority of checklists published since 2015 (n = 81, 87%). Comparing the 93 identified checklists to the reference checklist, we observed that on average, the content of the checklist items was similar, including the categories or domains covered by the checklist; all checklists were also similar on the following: time to complete the checklist, number of items in the checklist (this number does vary considerably; however, the average number of items is more consistent with the reference list), and lack of qualitative and quantitative guidance on completing the checklists. Furthermore, only 3% of checklists (n = 3) were deemed evidence-based, few checklists weighted any items (n = 2, 2%) and few checklists were developed through empirical study (n = 4, 4%). Of note, one of the checklists [33] was in a paper in a journal that is potentially predatory.

Summary of evidence

In total, we identified 93 checklists to help identify predatory journals and/or publishers. A search of electronic databases resulted in 53 original checklists, a search of library websites of top universities resulted in 30 original checklists and a filtered and limited search of YouTube returned 10 original checklists. Overall, checklists could be completed quickly, covered similar categories of topics and were lacking in guidance that would help a user determine if the journal or publisher was indeed predatory.

Strengths and limitations

We used a rigorous systematic review process to conduct the study. We also searched multiple data sources including published literature, university library websites, globally, and YouTube. We were limited by the languages of checklists we could assess (English, French and Portuguese). However, the majority of academic literature is published in English [105]. Thus, we are confident that we captured the majority of checklists or at least a representative sample. For reasons of feasibility, we were not able to capture all checklists available.

Our reference checklist did not qualify as evidence-based when using our predetermined criteria to assess risk of bias, which could be because the list of characteristics in the reference list was not initially intended as a checklist per se. However, the purpose of the reference checklist was to serve as a reference point for readers, regardless of its qualification as evidence-based or not. Creating a useable checklist tool requires attention not only to the development of the content of items but also to other details, such as pilot testing and making the items succinct, as identified in our risk of bias criteria. This perhaps was not attended to by Shamseer et al. because of the difference in the intended purpose of their list.

Our risk of bias tool was created based on other existing tools and developed through expertise of the authors. Although useful for the purpose of this exercise, the tool remains based on our expert judgment, even though it does include elements of scientific principles.

We noted that the “Think. Check. Submit.” checklist [106] was referenced in many publications and we believe it is used often as guidance for authors to identify presumed legitimate journals. However, we did not include this checklist in our study because we excluded checklists that help to identify presumed legitimate publications. Instead, our specific focus was on checklists that help to detect potential predatory journals.

Conclusion

In our search for checklists to help authors identify potential predatory journals, we found great similarity across checklist media and across journal disciplines in which the checklists were published.

Although many of the checklists were published in field-specific journals and/or addressed a specific audience, the content of the lists did not differ much. This could reflect the idea that checklist developers are all looking to identify the same items. Only a small proportion of the records included the empirical methods used to develop the checklists, and only a few checklists were deemed evidence-based according to our criteria. We noted that checklists with more items did not necessarily take longer to complete; this speaks to the level of complexity of some checklists versus others. Importantly, very few authors offered concrete guidance on using the checklists or offered any threshold that would guide authors to identify definitively if the journal was predatory. The lack of checklists providing threshold values could be due to the fact that a definition of predatory journals did not exist until this year [3, 4]. The provision of detailed requirements that would qualify a journal as predatory therefore would have been a challenge. We identify a threshold value as important for the checklist’s usability. Without a recommended or suggested threshold value, checklist users may not feel confident to make a decision on submitting or not submitting to a journal. We are recommending a threshold value as a way for users to actively engage with the checklist and make it a practical tool.

With this large number of checklists in circulation, and the lack of explicit and exacting guidelines to identify predatory publications, are authors at continued risk of publishing in journals that do not follow best publication practices? We see some value in discipline-specific lists for the purpose of more effective dissemination. However, this needs to be balanced against the risk of confusing researchers and overloading them with choice [6]. If most of the domains in the identified checklists are similar across disciplines, would a single list, relevant in all disciplines, result in less confusion and maximize dissemination and enhance implementation?

In our study, we found no checklist to be optimal. Currently, we would caution against any further development of checklists and instead provide the following as guidance to authors:

Look for a checklist that:

1. Provides a threshold value for criteria to assess potential predatory journals, e.g. if the journal contains these three checklist items then we recommend avoiding submission;

2. Has been developed using rigorous evidence, i.e. empirical evidence that is described or referenced in the publication.

We note that only one checklist [96] out of the 93 we assessed fulfills the above criteria. There may be other factors (length of time to complete, number of categories covered by the checklist, ease of access, ease of use or other) that may influence usability of the checklist.

Using an evidence-based tool with a clear threshold for identifying potential predatory journals may help reduce the burden of research waste occurring as a result of the proliferation of predatory publications.

Availability of data and materials

All data are available upon request. Supplementary material is available on the Open Science Framework (http://osf.io/g57tf).

Abbreviations

- DSR: Distiller Systematic Review

- LISTA: Library, Information Science and Technology Abstracts

- PRESS: Peer Review of Electronic Search Strategies

- PRISMA: Preferred Reporting Items for Systematic reviews and Meta-Analyses

References

1. Clark J, Smith R. Firm action needed on predatory journals. BMJ. 2015;350:h210.

2. Benjamin HH, Weinstein DF. Predatory publishing: an emerging threat to the medical literature. Acad Med. 2017;92(2):150.

3. Cobey KD, Lalu MM, Skidmore B, Ahmadzai N, Grudniewicz A, Moher D. What is a predatory journal? A scoping review [version 2; peer review: 3 approved]. F1000Res. 2018;7:1001.

4. Grudniewicz A, Moher D, Cobey KD, Bryson GL, Cukier S, Allen K, et al. Predatory journals: no definition, no defence. Nature. 2019;576(7786):210–2.

5. Strinzel M, Severin A, Milzow K, Egger M. Blacklists and whitelists to tackle predatory publishing: a cross-sectional comparison and thematic analysis. MBio. 2019;10(3):e00411–9.

6. Iyengar SS, Lepper MR. When choice is demotivating: can one desire too much of a good thing? J Pers Soc Psychol. 2000;79(6):995–1006.

7. Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

8. McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS peer review of electronic search strategies: 2015 guideline statement. J Clin Epidemiol. 2016;75:40–6.

9. Shen C, Björk B-C. ‘Predatory’ open access: a longitudinal study of article volumes and market characteristics. BMC Med. 2015;13(1):230.

10. Beall J. Predatory publishers are corrupting open access. Nature News. 2012;489(7415):179.

11. Gawande A. The checklist manifesto: how to get things right. New York: Picador; 2010.

12. Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin; 2002.

13. Shamseer L, Moher D, Maduekwe O, Turner L, Barbour V, Burch R, et al. Potential predatory and legitimate biomedical journals: can you tell the difference? A cross-sectional comparison. BMC Med. 2017;15(1):28.

14. Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D. Evidence summaries: the evolution of a rapid review approach. Syst Rev. 2012;1:10.

15. Beall J. Medical publishing triage - chronicling predatory open access publishers. Ann Med Surg. 2013;2(2):47–9.

16. Abadi ATB. Roadmap to stop the predatory journals: author’s perspective. RMM. 2017;5(1):1–5.

17. Berger M. Everything you ever wanted to know about predatory publishing but were afraid to ask. 2017. http://www.ala.org/acrl/sites/ala.org.acrl/files/content/conferences/confsandpreconfs/2017/EverythingYouEverWantedtoKnowAboutPredatoryPublishing.pdf. Accessed 11 June 2019.

Das S, Chatterjee SS. Say no to evil: predatory journals, what we should know. Asian J Psychiatr. 2017;28:161–2.

Erfanmanesh M, Pourhossein R. Publishing in predatory open access journals: a case of Iran. Publ Res Q. 2017;33(4):433–44.

Eriksson S, Helgesson G. The false academy: predatory publishing in science and bioethics. Med Health Care Philos. 2017;20(2):163–70.

Janodia MD. Identifying predatory journals - a few simple steps. Curr Sci. 2017;112(12):2361–2.

Khan F, Moher D. Predatory journals: do not enter. UOJM Epub. 2017. https://uottawa.scholarsportal.info/ottawa/index.php/uojm-jmuo/article/view/1755. Accessed 11 June 2019.

Klyce W, Feller E. Junk science for sale: sham journals proliferating online. R I Med J. 2017;100(7):27–9.

Manca A, Martinez G, Cugusi L, Dragone D, Dvir Z, Deriu F. The surge of predatory open-access in neurosciences and neurology. Neuroscience. 2017;353:166–73.

Miller E, DeBerg J. The perils of predatory publishing: views and advice from an editor and a health sciences librarian. Pain Manag Nurs. 2017;18(6):351–2.

Misra DP, Ravindran V, Wakhlu A, Sharma A, Agarwal V, Negi VS. Publishing in black and white: the relevance of listing of scientific journals. Rheumatol Int. 2017;37(11):1773–8.

Mouton J, Valentine A. The extent of South African authored articles in predatory journals. S Afr J Sci. 2017;113:7–8.

Oren GA. Predatory publishing: top 10 things you need to know. 2017. https://wkauthorservices.editage.com/resources/author-resource-review/2017/dec-2017.html. Accessed 11 June 2019.

Stratton SJ. Another ‘dear esteemed colleague’ journal email invitation? Prehosp Disaster Med. 2017;32(1):1–2.

Balehegn M. Increased publication in predatory journals by developing countries’ institutions: what it entails? and what can be done? Int Inf Libr Rev. 2017;49(2):97–100.

McCann TV, Polacsek M. False gold: safely navigating open access publishing to avoid predatory publishers and journals. J Adv Nurs. 2018;74(4):809–17.

O’Donnell M. Library guides: understanding predatory publishers: identifying a predator. 2018. https://instr.iastate.libguides.com/predatory/id. Accessed 11 June 2019.

Ajuwon GA, Ajuwon AJ. Predatory publishing and the dilemma of the Nigerian academic. Afr J Biomed Res. 2018;21(1):1–5.

Bowman MA, Saultz JW, Phillips WR. Beware of predatory journals: a caution from editors of three family medicine journals. J Am Board Fam Med. 2018;31(5):671–6.

Gerberi DJ. Predatory journals: alerting nurses to potentially unreliable content. Am J Nurs. 2018;118(1):62–5.

Pamukcu Gunaydin G, Dogan NO. How can emergency physicians protect their work in the era of pseudo publishing? Turk J Emerg Med. 2018;18(1):11–4.

Kokol P, Zavrsnik J, Zlahtic B, Blazun VH. Bibliometric characteristics of predatory journals in pediatrics. Pediatr Res. 2018;83(6):1093–4.

Lewinski AA, Oermann MH. Characteristics of e-mail solicitations from predatory nursing journals and publishers. J Contin Educ Nurs. 2018;49(4):171–7.

Memon AR. Predatory journals spamming for publications: what should researchers do? Sci Eng Ethics. 2018;24(5):1617–39.

Nnaji JC. Illegitimate academic publishing: a need for sustainable global action. Publ Res Q. 2018;34(4):515–28.

Power H. Predatory publishing: how to safely navigate the waters of open access. Can J Nurs Res. 2018;50(1):3–8.

Richtig G, Berger M, Lange-Asschenfeldt B, Aberer W, Richtig E. Problems and challenges of predatory journals. J Eur Acad Dermatol Venereol. 2018;32(9):1441–9.

Carlson E. The top ten ways to tell that a journal is fake. 2014. https://blogs.plos.org/yoursay/2017/03/22/the-top-ten-ways-to-tell-that-a-journal-is-fake/. Accessed 11 June 2019.

University of Edinburgh. Some warning signs to look out for. 2015. https://www.ed.ac.uk/information-services/research-support/publish-research/scholarly-communications/predatory-or-bogus-journals. Accessed 16 Jan 2019.

Africa Check. Guide: how to spot predatory journals in the wild. 2017. https://africacheck.org/factsheets/guide-how-to-spot-predatory-academic-journals-in-the-wild/. Accessed 16 Jan 2019.

Cabells. Cabells blacklist violations. 2017. https://www2.cabells.com/blacklist-criteria. Accessed 16 Jan 2019.

Duke University Medical Centre. Be iNFORMEd checklist. 2017. https://guides.mclibrary.duke.edu/beinformed. Accessed 16 Jan 2019.

University of Calgary. Tools for evaluating the reputability of publishers. 2017. https://library.ucalgary.ca/guides/scholarlycommunication/predatory. Accessed 16 Jan 2019.

Coopérer en information scientifique et technique. Eviter les éditeurs prédateurs (predatory publishers). 2018. https://coop-ist.cirad.fr/aide-a-la-publication/publier-et-diffuser/eviter-les-editeurs-predateurs/3-indices-de-revues-et-d-editeurs-douteux. Accessed 16 Jan 2019.

Eaton SE (University of Calgary). Avoiding predatory journals and questionable conferences: a resource guide. 2018. https://prism.ucalgary.ca/bitstream/handle/1880/106227/Eaton%20-%20Avoiding%20Predatory%20Journals%20and%20Questionable%20Conferences%20-%20A%20Resource%20Guide.pdf?sequence=1. Accessed 16 Jan 2019.

Lapinski S (Harvard University). How can I tell whether or not a certain journal might be a predatory scam operation? 2018. https://asklib.hms.harvard.edu/faq/222404. Accessed 16 Jan 2019.

Sorbonne Université. Comment repérer un éditeur prédateur. 2018. https://paris-sorbonne.libguides.com/bibliodoctorat/home. Accessed 16 Jan 2019.

University of British Columbia. Publishing a journal article. 2018. http://guides.library.ubc.ca/publishjournalarticle/predatory#s-lg-box-9343242. Accessed 16 Jan 2019.

University of Alberta. Identifying appropriate journals for publication. 2018. https://guides.library.ualberta.ca/c.php?g=565326&p=3896130. Accessed 16 Jan 2019.

University of Toronto Libraries. Identifying deceptive publishers: a checklist. 2018. https://onesearch.library.utoronto.ca/deceptivepublishing. Accessed 16 Jan 2019.

Dalhousie University. How to recognize predatory journals. 2019. http://dal.ca.libguides.com/c.php?g=257122&p=2830098. Accessed 16 Jan 2019.

McGill University. Avoiding illegitimate OA journals. 2019. https://www.mcgill.ca/library/services/open-access/illegitimate-journals. Accessed 16 Jan 2019.

McMaster University. Graduate guide to research. 2019. https://libguides.mcmaster.ca/gradresearchguide/open-access. Accessed 16 Jan 2019.

Prater C. 8 ways to identify a questionable open access journal. Am J Experts. 2019. https://www.aje.com/en/arc/8-ways-identify-questionable-open-access-journal/. Accessed 16 Jan 2019.

Ryerson University Libraries. Evaluating journals. 2019. http://learn.library.ryerson.ca/scholcomm/journaleval. Accessed 16 Jan 2019.

Université Laval. Editeurs prédateurs. 2019. https://www.bda.ulaval.ca/conservation-des-droits/editeurs-predateurs/. Accessed 16 Jan 2019.

University of Cambridge. Predatory publishers. 2019. https://osc.cam.ac.uk/about-scholarly-communication/author-tools/considerations-when-choosing-journal/predatory-publishers. Accessed 16 Jan 2019.

University of Pretoria. Predatory publications: predatory journals home. 2018. http://up-za.libguides.com/c.php?g=834649. Accessed 16 Jan 2019.

University of Queensland Library. Identifying reputable open access journals. 2019. https://guides.library.uq.edu.au/ld.php?content_id=46950215. Accessed 16 Jan 2019.

University of Queensland Library. Red flags for open access journals. 2019. https://guides.library.uq.edu.au/for-researchers/get-published/unethical-publishing. Accessed 16 Jan 2019.

University of Witwatersrand. Predatory publisher checklist. 2019. https://libguides.wits.ac.za/openaccess_a2k_scholarly_communication/Predatory_Publishers. Accessed 16 Jan 2019.

Canadian Association of Research Libraries. How to assess a journal. (Date unknown). http://www.carl-abrc.ca/how-to-assess-a-journal/. Accessed 16 Jan 2019.

Columbia University Libraries. Evaluating publishers. (Date unknown). https://scholcomm.columbia.edu/publishing.html. Accessed 16 Jan 2019.

Technion Library. Author guide on how to publish an open access article. (Date unknown). https://library.technion.ac.il/en/research/open-access. Accessed 16 Jan 2019.

University of California, Berkeley. Evaluating publishers. (Date unknown). http://www.lib.berkeley.edu/scholarly-communication/publishing/lifecycle/evaluating-publishers. Accessed 16 Jan 2019.

University of Ottawa Scholarly Communication. Predatory publishers. (Date unknown). https://scholarlycommunication.uottawa.ca/publishing/predatory-publishers. Accessed 16 Jan 2019.

Robbins S (Western Sydney University). Red flags. 2015. https://www.youtube.com/watch?v=7WSDJKaw6rk. Accessed 6 Mar 2020.

Attia S. Spot predatory journals. 2017. https://www.youtube.com/watch?v=vU2M3U7knrk. Accessed 6 Mar 2020.

Kysh L, Johnson RE (Keck School of Medicine of the University of Southern California). Characteristics of predatory journals. 2017. https://www.youtube.com/watch?v=rpVzJWxvClg. Accessed 6 Mar 2020.

McKenna S (Rhodes University). Predatory publications: shark spotting. 2017. https://www.youtube.com/watch?v=LfOKgS4wBvE. Accessed 6 Mar 2020.

Nicholson DR (University of Witwatersrand). Cautionary checklist. 2017. http://webinarliasa.org.za/playback/presentation/0.9.0/playback.html?meetingId=a967fc2ec0fdbb5a6d4bfaad2a02dc51a48febab-1508483812099&t=0s. Accessed 6 Mar 2020.

Raszewski R. What to watch out for. 2017. https://www.youtube.com/watch?v=wF1EEq6qsZQ. Accessed 6 Mar 2020.

Seal-Roberts J (Healthcare Springer). So how do we recognize a predatory journal? 2017. https://www.youtube.com/watch?v=g_l4CXnnDHY. Accessed 6 Mar 2020.

Menon V, Berryman K. Characteristics of predatory journals. 2018. https://www.youtube.com/watch?v=aPc_C6ynF8Q. Accessed 6 Mar 2020.

Office of Scholarly Communication Cambridge University. Predatory publishers in 3 minutes. 2018. https://www.youtube.com/watch?v=UwUB3oquuRQ. Accessed 6 Mar 2020.

Weigand S (UNC Libraries). Prepare to publish part II: journal selection & predatory journals. 2018. https://www.youtube.com/watch?v=KPyDQcC_drg. Accessed 6 Mar 2020.

Crawford W. Journals, “journals” and wannabes: investigating the list. Cites Insights. 2014;14(7):24.

Knoll JL. Open access journals and forensic publishing. J Am Acad Psychiatry Law. 2014;42(3):315–21.

Lukic T, Blesic I, Basarin B, Ivanovic B, Milosevic D, Sakulski D. Predatory and fake scientific journals/publishers: a global outbreak with rising trend: a review. Geogr Pannonica. 2014;18(3):69–81.

Beall J. Criteria for determining predatory open-access publishers. 3rd ed; 2015. https://beallslist.weebly.com/uploads/3/0/9/5/30958339/criteria-2015.pdf. Accessed 9 June 2019.

Bhad R, Hazari N. Predatory journals in psychiatry: a note of caution. Asian J Psychiatr. 2015;16:67–8.

Bradley-Springer L. Predatory publishing and you. J Assoc Nurses AIDS Care. 2015;26(3):219–21.

Hemmat Esfe M, Wongwises S, Asadi A, Akbari M. Fake journals: their features and some viable ways to distinguishing them. Sci Eng Ethics. 2015;21(4):821–4.

Inane Predatory Publishing Practices Collaborative. Predatory publishing: what editors need to know. J Cannt. 2015;25(1):8–10.

Pamukcu Gunaydin GP, Dogan NO. A growing threat for academicians: fake and predatory journals. S Afr J Sci. 2015;14(2):94–6.

Proehl JA, Hoyt KS. Editors. Predatory publishing: what editors need to know. Adv Emerg Nurs J. 2015;37(1):1–4.

Stone TE, Rossiter RC. Predatory publishing: take care that you are not caught in the open access net. Nurs Health Sci. 2015;17(3):277–9.

Yucha C. Predatory publishing: what authors, reviewers, and editors need to know. Biol Res Nurs. 2015;17(1):5–7.

Cariappa MP, Dalal SS, Chatterjee K. To publish and perish: a Faustian bargain or a Hobson’s choice. Med J Armed Forces India. 2016;72(2):168–71.

Carroll CW. Spotting the wolf in sheep’s clothing: predatory open access publications. J Grad Med Educ. 2016;8(5):662–4.

Dadkhah M, Bianciardi G. Ranking predatory journals: solve the problem instead of removing it! Adv Pharm Bull. 2016;6(1):1–4.

Fraser D. Predatory journals and questionable conferences. Neonatal Netw. 2016;35(6):349–50.

Glick M. Publish and perish (clues suggesting a ‘predatory’ journal). J Am Dent Assoc. 2016;147(6):385–7.

Glick M. Publish and perish (what you can expect from a predatory publisher). J Am Dent Assoc. 2016;147(6):385–7.

Hansoti B, Langdorf MI, Murphy LS. Discriminating between legitimate and predatory open access journals: report from the International Federation for Emergency Medicine Research Committee. West J Emerg Med. 2016;17(5):497–507.

Morley C. “Dear esteemed author:” spotting a predatory publisher in 10 easy steps. STFM Blog. 2016. https://blog.stfm.org/2016/05/23/predatory-publisher/. Accessed 11 June 2019.

Nolan T. Don’t fall prey to ‘predatory’ journals. Int Sci Edit. 2016. https://www.internationalscienceediting.com/avoid-predatory-journals/. Accessed 11 June 2019.

Ward SM. The rise of predatory publishing: how to avoid being scammed. Weed Sci. 2016;64(4):772–8.

Predatory publishing. In: Wikipedia. 2019. https://en.wikipedia.org/w/index.php?title=Predatory_publishing&oldid=900101199. Accessed 11 June 2019.

Amano T, González-Varo JP, Sutherland WJ. Languages are still a major barrier to global science. PLoS Biol. 2016;14(12):e2000933.

Think, Check, Submit. 2019. https://thinkchecksubmit.org/. Accessed 13 July 2019.

Acknowledgements

The authors would like to thank Raymond Daniel, Library Technician at the Ottawa Hospital Research Institute, for document retrieval, information support and setup in Distiller.

Funding

This project received no specific funding. David Moher is supported by a University Research Chair (University of Ottawa). Danielle Rice is supported by a Canadian Institutes of Health Research Health Systems Impact Fellowship; Lucas Helal is supported by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001, PDSE - 88881.189100/2018–01. Manoj Lalu is supported by The Ottawa Hospital Anesthesia Alternate Funds Association. The funders had no involvement in the study design, in the collection, analysis or interpretation of the data, in the writing of the report, or in the decision to submit the paper for publication. All authors confirm their work is independent from the funders. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis.

Author information

Contributions

(CRediT: https://www.casrai.org/credit.html): Conceptualization: SC and DM. Methodology: BS. Project administration: SC. Investigation (data collection): SC, LH, DBR, JP, NA, MW. Writing – Original Draft: SC. Writing – Review & Editing: All authors read and approved the final manuscript. Supervision: DM, ML.

Ethics declarations

Ethics approval and consent to participate

Ethical approval was not required as this study did not involve human participants.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1. Peer Review of Electronic Search Strategies (PRESS) Checklist.

Additional file 2. Search strategy for the Ovid database.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Cukier, S., Helal, L., Rice, D.B. et al. Checklists to detect potential predatory biomedical journals: a systematic review. BMC Med 18, 104 (2020). https://doi.org/10.1186/s12916-020-01566-1

DOI: https://doi.org/10.1186/s12916-020-01566-1