Abstract

Background

mHealth technologies are now widely utilised to support the delivery of secondary prevention programs in heart disease. Interventions with mHealth included have shown a similar efficacy and safety to conventional programs with improvements in access and adherence. However, questions remain regarding the successful wider implementation of digital-supported programs. By applying the Reach-Effectiveness-Adoption-Implementation-Maintenance (RE-AIM) framework to a systematic review and meta-analysis, this review aims to evaluate the extent to which these programs report on RE-AIM dimensions and associated indicators.

Methods

This review extends our previous systematic review and meta-analysis that investigated the effectiveness of digital-supported programs for patients with coronary artery disease. Citation searches were performed on the 27 studies of the systematic review to identify linked publications that reported data for RE-AIM dimensions. All included studies and, where relevant, any additional publications, were coded using an adapted RE-AIM extraction tool. Discrepant codes were discussed amongst reviewers to gain consensus. Data were analysed to assess reporting on indicators related to each of the RE-AIM dimensions, and average overall reporting rates for each dimension were calculated.

Results

Searches found an additional nine publications. Across 36 publications that were linked to the 27 studies, 24 (89%) of the studies were interventions solely delivered at home. The average reporting rates for RE-AIM dimensions were highest for effectiveness (75%) and reach (67%), followed by adoption (54%), implementation (36%) and maintenance (11%). Eleven (46%) studies did not describe relevant characteristics of their participants or of staff involved in the intervention; most studies did not describe unanticipated consequences of the intervention; the ongoing cost of intervention implementation and maintenance; information on intervention fidelity; long-term follow-up outcomes, or program adaptation in other settings.

Conclusions

Through the application of the RE-AIM framework to a systematic review we found most studies failed to report on key indicators. Failing to report these indicators inhibits the ability to address the enablers and barriers required to achieve optimal intervention implementation in wider settings and populations. Future studies should consider alternative hybrid trial designs to enable reporting of implementation indicators to improve the translation of research evidence into routine practice, with special consideration given to the long-term sustainability of program effects as well as corresponding ongoing costs.

Registration

PROSPERO—CRD42022343030.

Background

Cardiac rehabilitation (CR) is a multi-component program that is designed to optimise cardiovascular risk reduction, foster compliance to healthy behaviours, and promote an active lifestyle for people with cardiovascular disease [1, 2]. Introduced in the late 1960s, there is a substantive evidence base supporting CR as a clinically effective and cost-effective intervention for the secondary prevention of heart disease [3, 4] and it is now routinely recommended across a wide range of cardiac diagnoses. While centre-based CR programs have been shown to be effective in reducing hospital admissions and improving health-related quality of life [3, 5], reported referral, access, and participation rates have been sub-optimal [6, 7].

The use of mobile health (mHealth) technologies in support of CR and secondary prevention programs has increased in recent years, a trend accelerated by the onset of the COVID-19 pandemic, when access to centre-based programs was limited. Integrating mHealth, which includes mobile and wireless technologies, wearables, mobile apps [8], and more recently, the use of sensors and AI [9], into home-based and hybrid models of secondary prevention has shown similar safety and efficacy to centre-based programs [10]. Such digital-supported secondary prevention programs have shown increases in adherence [11] and positive effects on behavioural and psychosocial outcomes [11, 12]. However, unifying evidence for the overall impact of these programs on health outcomes is lacking, especially in programs that incorporate novel technologies [11].

A recent systematic review and meta-analysis examined the impact of digital-supported secondary prevention programs, assessing if programs using mHealth reduced readmissions and mortality in patients with coronary artery disease [13]. The review found that programs using mHealth may be effective in lowering hospital visits and readmissions, but there was no evidence for reduced mortality outcomes. The authors reflected that assessment of longer-term effectiveness and program scalability may be hindered by non-generalisable study populations and short follow-up periods. Thus, while digital-supported secondary prevention programs have become more common and have demonstrated efficacy, their translatability beyond the research setting is unclear.

The Reach-Effectiveness-Adoption-Implementation-Maintenance (RE-AIM) model has proven to be a useful planning and evaluation framework for assessing the degree to which interventions report on internal (i.e., accuracy of research methods and findings) as well as external validity (i.e., generalisability and translatability) factors [14]. RE-AIM has been successfully applied to systematic and scoping reviews that have evaluated threats to the transferability of a multitude of digital-supported health interventions to practice, including those for mental health [15, 16], diabetes self-management [17], chronic disease [18], physical activity [19], and vaccination promotion [20]. To our knowledge, there has been no review reporting on the implementation of digital-supported interventions to improve health outcomes and the secondary prevention of heart disease.

This study aims to evaluate the extent to which RE-AIM dimensions and associated internal and external validity indicators are reported in the included studies of the systematic review and meta-analysis by Braver et al. [13], as such knowledge would offer guidance to improve the wider implementation and optimisation of digital-enabled secondary prevention programs.

Methods

Study design

We undertook a systematic search for any additional publications to those already included in the systematic review and meta-analysis by Braver et al. [13]. Whereas Braver et al. included studies that compared mHealth-supported secondary prevention programs against standard delivered programs, this study searched for and included any additional publications that reported on the characteristics, delivery, and implementation of those studies.

This study is registered with PROSPERO (CRD42022343030) and conforms to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [21].

Search strategy and selection

To identify additional publications to those already included in the systematic review and meta-analysis by Braver et al. [13], we conducted reference list and citation searches using the MEDLINE, Embase, and Scopus databases. We also searched trial registries using identified clinical trial registration numbers. All search results were imported into Endnote 20 software and duplicate records were removed. Remaining records were exported into Covidence, a cloud-based systematic review program [22].

Titles and abstracts were examined for eligibility independently by two reviewers (AI and CL), with a third reviewer (CM) adjudicating any discrepancy. Non-English language publications and publications reporting on work other than the original studies included by Braver et al. were excluded. Conference abstracts, reviews, and book chapters were also excluded. The full text of the remaining publications was obtained and screened. Publications were included if they described any data additional to that reported in the studies included by Braver et al., such as secondary analyses, long-term follow-up results, or cost-effectiveness analyses. The inclusion criteria for eligible studies are presented in Table 1.

Braver et al. initially identified 27 unique studies for inclusion in their systematic review but later excluded nine because the control group did not receive a usual care disease management plan. We included all 27 unique studies in our review, since the control group program was not relevant to our study aim. The full search strategy of Braver et al. is described elsewhere [13].

Data extraction tool

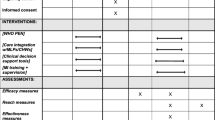

The RE-AIM data extraction tool was adapted from previous extraction tools [17, 19] to suit the characteristics of digital-supported interventions and to align with the recommendations made by Holtrop et al. [23]. Minor modifications were made to previously used RE-AIM item definitions to ensure that the descriptions of the indicators for each of the five RE-AIM dimensions were relevant and unambiguous [16, 19]. The resulting 25-item coding sheet, consisting of the five RE-AIM dimensions and corresponding indicators, is presented in Table 2.

For reach, the coding sheet included the target population, study inclusion and exclusion criteria, participation rate, and the representativeness of the participants. One indicator was added to assess reasons for not participating, as this information might provide insight into any unanticipated negative consequences of the intervention. Effectiveness was evaluated with eight indicators, including the impact of the intervention (primary and secondary outcomes, as well as measures of quality of life); the reporting of results for at least one follow-up; intent to treat assessment; intervention satisfaction; any unanticipated consequences; and participant attrition. Adoption consisted of three indicators. Two related to the intervention location (home, centre-based, hybrid, or other) and the staff delivering the intervention. One item was added to collect information about the individuals delivering the intervention, such as their characteristics, qualifications, level of expertise and uptake. The indicators used to assess implementation included duration and frequency of intervention, the ongoing cost of intervention delivery, and the extent to which the intervention was delivered as intended. We expanded this final item by asking for details of any adaptations made, to get insight into how the intervention worked in specific contexts [24]. In addition, we included an item to document whether theory-based approaches were used, as it is recognised that when a study is informed by theory, the probability of successful implementation and sustainability of an intervention increases [23, 25]. Finally, to document maintenance, four indicators were used, which included description and reasons for program (dis)continuation after completion, and measures of cost of maintenance. One item was added to indicate if the study reported on the maintenance of any behaviour change post-intervention [23]. Another item assessed if the intervention was adopted in a different setting [26].

Data extraction

All included publications were coded independently in Covidence by two reviewers (AI and CM). Any discrepancy in the coding was resolved through discussion between the two reviewers and arbitration by a third reviewer (CL) until consensus was reached. We coded data related to general study and intervention characteristics and whether a study, including its associated papers, reported on each of the 25 RE-AIM indicators (i.e., yes or no). For each included study and its associated papers, we calculated an overall indicator reporting rate based on the total number of reported indicators (maximum score = 25). In addition, we calculated the indicator reporting rate for each RE-AIM dimension by dividing the total of “yes” scores for that dimension by the total number of indicators in the dimension. The reporting comprehensiveness of the studies was assessed using tertile cut-off points, as done in previous reviews using RE-AIM [27]. Studies that scored 18–25 indicators (72–100%) were considered to have high reporting quality, studies that scored 9–17 indicators (36–68%) were of moderate quality, and those that scored 0–8 indicators (0–32%) reflected low quality. Finally, we calculated the average reporting rates of the indicators for each dimension across studies.
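The scoring described above can be illustrated with a minimal Python sketch. It is not the authors' analysis code. The counts of eight Effectiveness, three Adoption and four Maintenance indicators follow the text; the counts assumed for Reach (6) and Implementation (4) are chosen only so that the total is 25, and the example study is hypothetical. The function names are likewise illustrative.

```python
from statistics import mean

# Indicators per dimension. Effectiveness (8), Adoption (3) and Maintenance (4)
# follow the text; Reach (6) and Implementation (4) are ASSUMED so the total is 25.
INDICATORS_PER_DIMENSION = {
    "reach": 6,
    "effectiveness": 8,
    "adoption": 3,
    "implementation": 4,
    "maintenance": 4,
}
TOTAL_INDICATORS = sum(INDICATORS_PER_DIMENSION.values())  # 25


def dimension_rates(yes_counts):
    """Percentage of indicators coded "yes" within each dimension."""
    return {
        dim: 100 * yes_counts[dim] / n
        for dim, n in INDICATORS_PER_DIMENSION.items()
    }


def overall_rate(yes_counts):
    """Percentage of all 25 indicators reported by one study."""
    return 100 * sum(yes_counts.values()) / TOTAL_INDICATORS


def quality_band(yes_counts):
    """Tertile band based on the total number of reported indicators."""
    total = sum(yes_counts.values())
    if total >= 18:
        return "high"
    if total >= 9:
        return "moderate"
    return "low"


def average_dimension_rates(studies):
    """Mean reporting rate per dimension across a list of studies."""
    return {
        dim: mean(dimension_rates(s)[dim] for s in studies)
        for dim in INDICATORS_PER_DIMENSION
    }


# HYPOTHETICAL study (number of "yes" codes per dimension), not from the review.
example = {"reach": 4, "effectiveness": 6, "adoption": 2,
           "implementation": 1, "maintenance": 0}
print(overall_rate(example))   # 13 of 25 indicators reported
print(quality_band(example))
```

Keeping the number of indicators per dimension in a single mapping means the per-dimension rates, the overall rate and the quality band are all derived from the same "yes" counts.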

Results

Searches for additional publications to those already included in the systematic review and meta-analysis by Braver et al. [13] found 665 possible additional publications. Of these, nine were included in our study, resulting in a total of 36 publications linked directly to the 27 studies included in Braver et al. The nine additional publications provided data on one or more dimensions of the RE-AIM framework. An overview of the selection process is illustrated in Fig. 1.

Study characteristics

Most of the 36 publications linked to the 27 studies (n = 30; 83%) were published between 2015 and 2022, and the earliest publication [28] was dated 2008. The characteristics and RE-AIM reporting rates extracted from the 36 publications of the 27 studies are presented in detail in an additional file (see Supplementary Table).

Of the 27 studies, most were conducted in Canada [28,29,30,31,32] and the USA [33,34,35,36,37] (n = 10; 41%) or in Europe (n = 7; 26%), and all except one [34] (96%) were RCTs. Most study interventions (n = 24; 89%) were solely delivered in the home setting. Three studies used a hybrid delivery method, i.e., they were either delivered at a centre and included a home-based digital health component [37], or the intervention began at the centre and continued at home [38, 39]. In 11 (46%) of the 24 interventions delivered solely at home [31,32,33,34,35, 40,41,42,43,44,45], no staff were directly involved in the delivery of care or they were only available at the participant’s request. Seven studies [33, 35, 45,46,47,48,49] (26%) only used a mobile application to deliver the intervention, while three studies [37, 44, 50] (11%) used a mobile application and a web-based platform. Only four studies [28,29,30, 34] (15%) did not require the use of a smartphone during the intervention. In 10 studies (37%), the intervention included additional technology, including wearable devices [29, 33, 34, 38, 39, 43, 47, 50, 51] and electronically monitored pill bottles [36].

RE-AIM reporting

The average reporting rates (% of RE-AIM dimension indicators) for the 27 studies were highest for Effectiveness (75%) and Reach (67%), followed by Adoption (54%), Implementation (36%) and Maintenance (11%) (see Table 3 and Additional file 1). In only one study [51], the comprehensiveness of reporting was considered high quality (72–100%). All other studies were rated as moderate quality (36–68%), although one study [34] was borderline moderate with a quality rating of 36%. The following sections summarise the reporting of the separate studies on each of the RE-AIM dimension indicators.

Reach

Most studies (n = 24; 89%) indicated that they identified and recruited their participants during hospitalisation, shortly after discharge or in a rehabilitation centre. Lear et al. [29] did not provide details apart from evaluating participants from regional/rural settings, Volpp et al. [36] recruited participants via insurance companies’ data, and Wolf et al. [44] recruited from a previous study. No studies reported characteristics of target populations, as all potential participants were identified via disease characteristics. In some cases, further characteristics were provided in the inclusion and exclusion criteria, including age, language, and severe physical and/or mental impairments. All studies reported on both inclusion and exclusion criteria; however, only 11 studies (41%) provided information relevant to the use of mHealth, such as the requirement to own a (smart) phone [33, 40, 41, 45, 46, 52, 53], have a landline telephone or cellular service [31, 32], or have access to the Internet [30, 37]. One study [54] excluded participants (without a support person) that were physically or mentally unable to use the technical equipment needed for telemonitoring. Participation rate could not be calculated for three studies [28, 35, 55] as the authors did not include data on sample size or eligible participants exposed to the intervention. The median participation rate among the 24 (89%) studies that reported participation rates was 41% (range, 6%–100%). None of the 27 studies comprehensively described all relevant characteristics of their participants, making it challenging to draw conclusions about the representativeness of the sample or whether the low participation rates indicated that the intervention was not suitable for the target population.

Effectiveness

All except one study [44] reported on both primary and secondary outcome measures. Fourteen (52%) studies reported on study variable(s) for more than one follow-up time point [28,29,30, 35, 37,38,39, 43, 46,47,48, 50, 51, 55]. A little over half of the studies (n = 15; 56%) included quality of life as an outcome measure [28, 30, 37, 39, 41, 42, 45,46,47,48, 50, 51, 54, 55] and 17 (63%) studies reported a measure of participant satisfaction or monitored participant feedback [28, 29, 31, 32, 34, 37, 39,40,41,42,43, 48, 49, 51, 53,54,55]. Maddison et al. [50] was the only study to report outcomes that might have been unanticipated consequences of the intervention, including soft tissue injuries and a broken ankle. Twenty-two studies (81%) reported whether they used intention-to-treat analysis [28,29,30,31,32, 35, 36, 38,39,40,41,42,43, 45, 47,48,49,50,51, 53,54,55] or only included participants that were present at follow-up. Attrition was provided or could be calculated for all studies except one [34]. The median attrition rate (measured directly after the intervention) for the intervention group (range, 0%–33%) and the control group (range, 0%–45%) was 11%. Only two studies (7%) did not provide reasons for why participants dropped out of the intervention [31, 44].

Adoption

All studies described the intervention location. For three studies, the intervention either started with centre-based training sessions [33, 55] or information sessions [46]. Three studies were delivered in a hybrid format, i.e., starting on-site and continuing at home [37,38,39]. Other than interaction with on-site staff during the centre-based component or introduction of the study and when outcomes were assessed, almost half (n = 11; 46%) of the home-based interventions were fully delivered via online apps and did not require the involvement of health professionals unless specifically requested by a participant [31,32,33,34, 40,41,42,43,44,45]. In the remaining 13 (54%) studies, researchers and health professionals, including nurses, physicians, physiotherapists, dieticians, and exercise specialists, were involved to review data, give feedback and provide motivational reinforcement. With the exception of one study [47], no further characteristics of the staff delivering the intervention were reported.

Implementation

Implementation was, together with maintenance, the least addressed dimension. While almost all studies reported on intervention duration and intervention frequency, none of the studies mentioned the ongoing cost of intervention implementation. Information on intervention fidelity was also scarce and reported in only three publications: Widmer et al. [37] reported that no changes were made after the initiation of the study; Pakrad et al. [46] mentioned some changes were made after protocol registration, without reporting detail; and Pfaeffli-Dale et al. [43] stated that the study protocol was amended to include an additional end point. Nine studies (33%) reported on the application of theories, models, or frameworks to guide the implementation and delivery of the intervention. Four (15%) [33, 42, 43, 46] used the social cognitive theory, a common behaviour-change theory applied in managing chronic health conditions. Seven studies (26%) [33, 37, 41, 46, 50, 51, 55] used behaviour change techniques or models to guide the delivery of the behaviour change interventions.

Maintenance

Only 10 (37%) studies measured primary or secondary outcomes post intervention. In four (15%) studies [37, 44, 50, 51], follow-up assessment(s) took place three to six months after the intervention ended. A further four (15%) studies measured outcomes one year [28,29,30] or two years [39] after intervention completion. One study [55] and its additional publication [56] reported on outcome measures up to four years after the start of the intervention. Two (7%) studies reported on program maintenance. This included the sustainability of adaptations made following intervention completion [51] and continued use and monitoring of the e-health tool after six months [44]. None of the studies reported program maintenance costs or whether the program was adopted in another setting.

Discussion

The aim of this review was to evaluate the implementation of digital-supported interventions for the secondary prevention of heart disease using the RE-AIM framework. We found that there were significant gaps in the reporting of internal and external validity factors at both the individual and organisational level. It may be that data related to specific RE-AIM dimensions were not reported because authors did not find these data relevant to the aim of the publication or there was an intent to report this in subsequent publications. Since the included studies were all clinical trials, data contributing to reach, effectiveness, and adoption should be reported, with implementation and maintenance more likely to be underreported.

RE-AIM dimensions

Characteristics required to describe the Reach dimension, which is an individual level measure of participation, are not well reported. None of the 27 studies explicitly described the target population, a finding echoed in similar reviews [16, 17, 19, 57]. The use of convenience sampling methods in trials often makes it impossible to draw conclusions on the representativeness of the sample and, consequently, limits the generalisability of digital-supported interventions to different socio-demographic groups. The exclusion criteria of the studies, as defined elsewhere [13], placed several restrictions that reduced access for vulnerable populations, including the elderly, the culturally and linguistically diverse, and those with no access to a smart device or no connectivity to the Internet. Whitelaw et al. [58] also reported some common participant-level barriers in the uptake of digital health technologies in cardiovascular care, including difficult-to-use technology, poor Internet connection, and fear of using technology. The relatively low median participation rate (41%) among the studies implies the existence of participant-level access barriers. This rate is lower than the median or average rates provided by other systematic reviews reporting on the RE-AIM dimensions of digital health interventions, which range from 51% to 70% [16, 17, 19]. Interestingly, the observed low participation rate is similar to that of traditional, face-to-face programs (REF). Digital-supported programs aim to increase access to and uptake of secondary prevention care. This finding suggests that these novel programs may not be achieving their desired goals and that more research is needed to understand the factors impacting participation. Further, attrition rates were low compared to traditional programs, suggesting that digital-supported programs may enhance adherence once patients participate. This may be an important factor explaining the benefits of mHealth-supported secondary prevention programs and further supports the findings from our previous review [13].

All the studies evaluated were digital-supported interventions for secondary prevention of heart disease and consequently the primary and secondary outcome measures of the interventions were predominantly clinical and behavioural in nature. Reporting of broader outcomes, such as quality of life and participant satisfaction, was rare, even though these outcomes can provide insight into improving the quality of the delivered care as well as intervention compliance [59]. Critical evaluation of the impact of intervention delivery was less clear. In most of the studies, attrition was low; however, a few studies reported much higher attrition. Common reasons provided for attrition related to either clinical deterioration (being medically unwell or death) or participant withdrawal. Yet, it was not always apparent if a withdrawal was related to the use of the technology in the intervention or to other circumstances.

Improving the functional capacity, wellbeing, and health-related quality of life of people diagnosed with heart disease requires long-term lifestyle modifications, including adherence to prescribed medical treatments. However, any long-lasting effect on outcomes could not be assessed for most studies, as the long-term maintenance of individuals’ behaviour and health states was only reported in a minority of the studies. The ability of digital technology to improve care and increase uptake and equitable access to secondary prevention requires more evidence about long-term maintenance of effects.

Adoption assesses the setting and staff delivering the program. Reporting mainly focused on the intervention location, and few details were provided about the characteristics of staff delivering the intervention, their willingness and motivation to be involved, and whether any training was provided. Digital-supported interventions for secondary prevention often include some clinical oversight, so a failure to report details of delivery agents leaves unclear what resources may be needed to deliver future interventions. This makes translation to other settings difficult and, as such, decreases the probability that the intended behaviour change will be adopted and maintained [20]. Typically, studies that examine clinical or behavioural outcomes fail to report the organisational level dimensions of the RE-AIM framework (adoption, implementation, and maintenance) [60]. To ensure that healthcare organisations enable their workforces to use digital methods in service delivery, design and implementation must attempt to close the gap between an organisation’s adoption of digital methods and its digital ability [61].

Factors related to the implementation dimension provide insight into the feasibility and replicability of the intervention [60]. In our review, we found no available information on adaptations made prior to, during, and after program implementation, even though Stirman et al. [24] highlighted that this information is required to understand how to adapt interventions to different contexts while retaining critical components. In addition, almost no use was made of frameworks to inform the delivery of the interventions, while their use has been recognised as being vital to the implementation and sustainability of novel eHealth solutions, in which people, technology, and context are intertwined [62]. Similar to other reviews [17, 19, 20], none of the studies reported the costs of intervention implementation. This lack of reporting eliminates a monetary reference point for future researchers when considering designing similar strategies.

Overall, Maintenance was the most underreported dimension of the framework. Few studies reported maintenance of effect at the individual level. No study reported organisational level maintenance, which is the extent to which the intervention has become a part of routine health care practices [63]. Also, none of the included studies reported ongoing costs of program delivery. Teams designing interventions find planning for and assessing maintenance difficult [14]; however, for an intervention to become institutionalised or part of routine organisational practices, a greater emphasis on recognising external validity factors is needed. There may also be shortfalls in funding that cut interventions short and inhibit the evaluation of maintenance. The general lack of long-running large digital health projects hinders insight into the durability of the digital resources used [64] and, therefore, into the sustainability and generalisability of interventions.

The lack of information on external validity factors in most trials inhibits the evaluation of factors that influence program access and uptake, the sustained implementation of interventions, and their wider replication in different settings or with more diverse populations. Failing to report these factors inhibits the opportunity to improve program uptake and access for disadvantaged populations. Additionally, none of the studies reported the costs involved with intervention implementation or the ongoing costs of program delivery, which removes the monetary information necessary for decision making. We recommend the use of hybrid trial designs, which combine clinical effectiveness trials with implementation and have the potential to rapidly translate evidence into real-world settings [65]. To improve long-term sustainability and determine ongoing costs, intervention studies should report across all RE-AIM indicators.

Improvements to the RE-AIM framework

Currently, there is a lack of consistency and accuracy in the reporting of many RE-AIM dimension indicators, hindering intervention replication and translation [23, 66]. In conducting our review, some modifications, including the addition of indicators, were made to the RE-AIM framework (described in the “Methods” section). The nature of digital-supported interventions necessitated these changes so that the framework could be applied to the specific characteristics, delivery, and implementation of such interventions. For example, additional information was collected about individuals delivering the intervention, such as the staff acceptability of the intervention. This addition was needed because the rapid development of digital health technologies is profoundly changing the way healthcare is delivered. Positive consumer acceptance and adoption (from both patients and clinicians) is impacting staff approaches to health occupations, tasks, and functions.

Strengths and limitations

This review is the first that we are aware of to evaluate the process and implementation of digital-supported interventions in the secondary prevention of heart disease. By using a framework adapted to fit the specific characteristics of digital-supported interventions, we were able to systematically assess the extent to which each of the included studies reported on internal and external validity criteria. This process provided a comprehensive insight into the generalisability, translatability and scale-up of the interventions to wider populations and settings.

The changes made to the RE-AIM framework allow its application to the reviewed interventions; however, those changes have yet to be tested for reliability and validity.

While we attempted to systematically identify any publications that would provide additional RE-AIM data, it is possible that some were missed. Furthermore, we based our conclusions solely on the extent to which the RE-AIM indicators were reported in the publications and we did not contact authors for missing information. Finally, as we only included English peer-reviewed publications, we might have missed studies published in other languages.

Conclusions

The lack of reporting of external validity factors in mHealth-supported interventions inhibits the evaluation of factors that influence program access and uptake and sustained implementation of interventions. As such, it cannot be assumed that reported outcomes are generalisable. A failure to report inhibits the opportunity to improve program uptake and access for disadvantaged populations and the wider replication in different settings or with more diverse populations. Additionally, none of the studies reported the costs involved with intervention implementation or the ongoing costs of program delivery, negating the use of financial information in decision making. We recommend that future approaches to digital supported secondary prevention of heart disease should adopt hybrid trial designs to report relevant RE-AIM dimensions and associated indicators to improve the translatability of empirical evidence to real-world settings.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CR: Cardiac Rehabilitation
- RE-AIM: Reach, Effectiveness, Adoption, Implementation, Maintenance

Acknowledgements

The Academic and Research Collaborative in Health (B Oldenburg PhD & C Lynch), La Trobe University.

Melinda Carrington receives an endowed fellowship in the Cardiology Centre of Excellence from Filippo and Maria Casella.

La Trobe University Large Collaboration Scheme Group; James Boyd, Damminda Alahakoon, Timothy Skinner, Meg Morris, George Moschonis, Dana Wong, Adam Semciw, Rebecca Jessup, Tom Marwick, Quan Huynh, Anthony Gust.

Funding

This research received no external funding.

Author information

Contributions

Conception and design: BO, CL, AI, CdeM-M. Collection and assembly of data: CdeM-M, CL, AI. Data analysis and interpretation: CdeM-M, CL, JB. Manuscript writing: CdeM-M, CL, GZ, MC, BO. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplementary Table 1.

Characteristics and RE-AIM reporting rates of reviewed studies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

de Moel-Mandel, C., Lynch, C., Issaka, A. et al. Optimising the implementation of digital-supported interventions for the secondary prevention of heart disease: a systematic review using the RE-AIM planning and evaluation framework. BMC Health Serv Res 23, 1347 (2023). https://doi.org/10.1186/s12913-023-10361-6
