Abstract

Background

While interprofessional collaboration (IPC) is widely considered a key element of comprehensive patient treatment, evidence focusing on its impact on patient-reported outcomes (PROs) is inconclusive. The aim of this study was to investigate the association between employee-rated IPC and PROs in a clinical inpatient setting.

Methods

We conducted a secondary data analysis of all patient- and employee-reported data collected by the Picker Institute Germany in cross-sectional surveys between 2003 and 2016. Individual patient data from departments within hospitals were matched at the department level with employee survey data collected within 2 years of treatment. Items assessing employee-rated IPC (independent variables) were included in a Principal Component Analysis (PCA). All questions assessing PROs (overall satisfaction, less discomforts, complications, treatment success, willingness to recommend) served as main dependent variables in ordered logistic regression analyses. Results were adjusted for multiple hypothesis testing as well as for patients’ and employees’ gender, age, and education.

Results

The final data set comprised 6154 patients from 19 hospitals and 103 unique departments. The PCA revealed three principal components (department-specific IPC, interprofessional organization, and overall IPC), explaining 67% of the total variance. The KMO measure of sampling adequacy was .830 and Bartlett’s test of sphericity was highly significant (p < 0.001). An increase of 1 SD in department-specific IPC was associated with statistically significantly higher odds of a higher (i.e., better) PRO rating about complications after discharge (OR 1.07, 95% CI 1.00–1.13, p = 0.029). However, no further associations were found. Exploratory analyses revealed positive coefficients of department-specific IPC on all PROs for patients who were treated in surgical or internal medicine departments, whereas results were ambiguous for pediatric patients.

Conclusions

The association between department-level IPC and patient-level PROs remains unclear, as documented in previous literature, and effect sizes are marginal. Future studies should take into account the different types of IPC, their specific characteristics, and possible effect mechanisms.

Trial registration

Study registration: Open Science Framework (DOI https://doi.org/10.17605/OSF.IO/2NYAX); Date of registration: 09 November 2021.

Background

Health care practitioners are nowadays confronted with a steadily aging patient population and, therefore, face interprofessional needs in the treatment of chronic and/or complex diseases. Interprofessional collaboration (IPC) is defined by the World Health Organization as patient treatment in which “multiple health workers from different professional backgrounds provide comprehensive services by working with patients, their families, carers and communities to deliver the highest quality of care across settings” [1]. However, IPC “is increasingly used but diversely implemented” [2], making the assessment of health care quality difficult. Moreover, a holistic picture of care can only be drawn if, in addition to clinical quality indicators, patient-specific outcomes are included in health system performance assessments.

The overall evidence of the association of IPC with patients’ outcomes remains uncertain. Whereas positive effects have been found with regard to clinical endpoints [3], patient-reported outcomes (PROs) are underrepresented in the literature and the little data available do not show a clear direction of relationships [2,3,4,5,6,7].

In this study, we aimed to evaluate the association between employee-rated IPC and PROs in inpatient settings. Secondly, we wanted to provide an exploratory analysis of department-specific differences that were encountered.

Methods

This study was guided by the recommendations of the Good Practice of Secondary Data Analysis (GPS, third revision 2012/2014) [8]. The pre-analysis plan for this secondary data analysis has been preregistered in the Open Science Framework Registry (DOI https://doi.org/10.17605/OSF.IO/2NYAX).

There are two modifications in comparison with our pre-analysis plan:

- The preplanned investigation of covariates using analyses such as lasso, elastic net, or ridge regression was rejected due to the ordinal scaling of item responses.

- As the addition of demographic (control) variables to the ordered logistic regression model revealed a high number of missing values, we decided to forgo imputation and to analyze only those patients for whom demographic (control) details were available.

Picker survey data

The dataset comprised all patient- and employee-reported data that the Picker Institute Germany collected in 342 cross-sectional surveys between 2003 and 2016, which was the entire period in which the Picker Institute conducted surveys in Germany. The surveys were conducted as part of quality improvement and monitoring processes in hospitals (i.e., the timing of the surveys was based on single occasions and the dataset does not represent systematic repeated cross-sections or panel data). For this purpose, validated employee (“Picker Employee Questionnaires”) as well as patient questionnaires (“Picker Inpatient Questionnaires”) were applied in different departments within hospitals. Good psychometric properties have been shown in several investigations [9,10,11,12,13]. The paper-pencil questionnaires were distributed to employees and patients along with up to two reminders according to the Total Design Method by Dillman [14]. Initial information on the voluntary nature of participation and on pseudonymized analysis was given (see declarations for further information on ethics approval and consent to participate). Only those patients who did not respond within 2 weeks (1st reminder) or 4 weeks (2nd reminder) received an additional reminder with a copy of the questionnaire and a return envelope. As employee surveys generally result in a lower response rate, particular importance was attached to ensuring a high level of commitment and willingness to participate in these surveys. For this reason, employee surveys were conducted anonymously, which meant that all those initially contacted also received all additional reminder letters. Employees who had already answered were advised to dispose of the questionnaire enclosed with the reminder letter.

Measured variables

General information on the composition of Picker questionnaires can be found in Additional file 1. For our analyses, we used all questions assessing PROs as main dependent variables. Items assessing employee-rated IPC were included in a Principal Component Analysis (PCA, see statistical analysis). The resulting principal components served as independent variables in further analyses. Demographic variables (gender, age, and education) were used as control variables for employees as well as patients. Items come with different response formats, ranging from binary items to items with up to seven response options on a Likert scale (higher scores represent better IPC or PRO ratings). The specific items can be found in Additional file 2.

Data analysis

All calculations were performed using Stata MP 17.0. The level of significance was set at 0.05. As some hospitals decided to survey their patients and employees in two consecutive years for organizational reasons (instead of conducting the two types of surveys simultaneously), data from hospitals that conducted both an employee and a patient survey within a two-year period were analysed. In addition, the departments surveyed had to be identical. We created unique hospital-department codes, each consisting of a unique hospital code plus a unique department code, irrespective of questionnaire type (patient or employee questionnaire) or survey year. These codes allowed us to match the respective department-level IPC score to the individual-level patient data.
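The matching step can be illustrated with a minimal Python sketch. It is not the authors' Stata code: the record layout, the field names (`hospital`, `department`, `year`, `ipc_score`), and the example values are hypothetical, and the allowed gap of one survey year in either direction is an assumption taken from the unit-of-analysis description in this section.

```python
from collections import defaultdict

# Hypothetical records; field names and values are illustrative assumptions only.
employee_surveys = [
    {"hospital": "H01", "department": "SURG", "year": 2010, "ipc_score": 0.42},
    {"hospital": "H01", "department": "INT", "year": 2012, "ipc_score": -0.10},
]
patients = [
    {"id": 1, "hospital": "H01", "department": "SURG", "year": 2011},
    {"id": 2, "hospital": "H01", "department": "SURG", "year": 2014},
    {"id": 3, "hospital": "H01", "department": "INT", "year": 2012},
]


def department_code(record):
    """Combine the hospital code and the department code into one unique key."""
    return f'{record["hospital"]}-{record["department"]}'


def match_patients(patients, employee_surveys, max_gap_years=1):
    """Attach the closest-in-time department IPC score to each patient."""
    by_department = defaultdict(list)
    for survey in employee_surveys:
        by_department[department_code(survey)].append(survey)

    matched = []
    for patient in patients:
        candidates = [
            s for s in by_department[department_code(patient)]
            if abs(s["year"] - patient["year"]) <= max_gap_years
        ]
        if not candidates:
            continue  # no employee survey close enough in time: patient is dropped
        nearest = min(candidates, key=lambda s: abs(s["year"] - patient["year"]))
        matched.append({**patient, "ipc_score": nearest["ipc_score"]})
    return matched


matched = match_patients(patients, employee_surveys)
```

In this toy example, patient 2 is dropped because the only employee survey of that department lies four years before the patient survey.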

A PCA on items assessing IPC from the employees’ point of view was used to derive the main components underlying these scales. An overview of all items included in the PCA can be found, along with the prespecified PCA model, in Additional file 3. Items were identified as suitable for deriving main components if the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was > 0.50 and Bartlett’s test of sphericity was significant (i.e., p < 0.05). Only components with eigenvalues ≥1 were considered (Guttman-Kaiser criterion), and results were rotated using both promax and varimax rotation [15,16,17] in order to check the sensitivity of results to these alternative interpretations. Reassuringly, the results mirrored the unrotated principal solution. Thus, we relied on the unrotated solution to predict standardized component scores for the employees and subsequently aggregated these at the department-year level (i.e., the department-year IPC score for each component was the mean of the employee component scores for that component).
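As an illustration of the Guttman-Kaiser criterion, the following Python sketch selects components from a list of eigenvalues and computes the share of variance they explain. The eigenvalues are hypothetical and are not taken from the study; the sketch only assumes that the PCA is run on a correlation matrix, so that the eigenvalues sum to the number of items.

```python
# Hypothetical eigenvalues of a 5-item correlation matrix (they sum to 5).
eigenvalues = [2.5, 1.2, 0.6, 0.4, 0.3]


def kaiser_retained(eigenvalues, threshold=1.0):
    """Return the eigenvalues of the components kept under the Guttman-Kaiser criterion."""
    return [value for value in eigenvalues if value >= threshold]


def explained_variance_share(retained, eigenvalues):
    """Share of total variance explained by the retained components."""
    return sum(retained) / sum(eigenvalues)


retained = kaiser_retained(eigenvalues)
share = explained_variance_share(retained, eigenvalues)
```

With these illustrative values, two components are retained and together explain about 74% of the total variance.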

These mean component scores were z-standardized to allow the statement that an increase of 1 SD in the IPC score at the department-year level was associated with a change of β1 (OR) in PRO outcomes at the individual-patient level. The unit of analysis was individual patient data from departments within hospitals that surveyed employees from the same departments in the same year or in the year before or after.
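The aggregation and standardization described above can be sketched in Python as follows. The department-year keys and scores are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which variant was used.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical employee-level component scores: (department-year key, score).
employee_scores = [
    ("H01-SURG-2010", 0.5), ("H01-SURG-2010", 1.5),
    ("H01-INT-2012", -1.0), ("H01-INT-2012", 0.0),
    ("H02-PED-2011", 2.0), ("H02-PED-2011", 3.0),
]


def department_means(scores):
    """Average the employee component scores within each department-year."""
    grouped = defaultdict(list)
    for key, score in scores:
        grouped[key].append(score)
    return {key: mean(values) for key, values in grouped.items()}


def z_standardize(values_by_key):
    """Rescale the department-year means to mean 0 and SD 1 (sample SD assumed)."""
    values = list(values_by_key.values())
    mu = mean(values)
    sigma = stdev(values)
    return {key: (value - mu) / sigma for key, value in values_by_key.items()}


means = department_means(employee_scores)
z_scores = z_standardize(means)
```

After this step, a regression coefficient on the standardized score refers to a difference of one standard deviation between department-years.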

Due to the ordinal scaling of the dependent variables (i.e., PROs), the regression model was implemented as an ordered logistic regression model, such that estimated coefficients (log odds) are subsequently transformed and interpreted as odds ratios (OR). The formal model takes the general form:

$$y_{icj} = \beta_1 \mathrm{IPC}_c + \beta_2 X_{kij} + \beta_3 X_{gcj} + \theta_h + \varepsilon_{icj}$$

where $y_{icj}$ denotes PRO $j$ of patient $i$ treated in department $c$. As the outcome variable $y_j$ consists of ordered outcome categories, the score was estimated as a linear function of the independent variables (department-level IPC and department- and individual-level covariates) and cutpoints defined by the number of categories of the dependent variable. The probability of observing outcome $l$ was then defined as the probability of the function and error term being within the range of the estimated cutpoints. $\beta_1$ was the coefficient of interest, denoting the change in the log odds of a higher outcome category in response to a one-unit increase in the department-year-level IPC score ($\mathrm{IPC}_c$). $X_{kij}$ and $X_{gcj}$ denote vectors of individual and cluster-level control variables, with coefficient vectors $\beta_2$ and $\beta_3$, respectively. $\theta_h$ denotes hospital fixed effects and $\varepsilon_{icj}$ denotes the random error term. Standard errors are cluster-robust at the department-year level. Additionally, we adjusted the reported p-values for the false discovery rate (FDR) using Anderson’s q-values for multiple hypothesis testing, and examined the association between patient- and employee-rated IPC to assure the validity of the IPC measure.
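To make the role of the cutpoints and of the odds ratio concrete, the following Python sketch computes the category probabilities of an ordered logistic model for a given linear predictor. The coefficient and cutpoints are hypothetical values chosen for illustration, not estimates from this study, and the function names are our own.

```python
import math


def logistic_cdf(x):
    """Cumulative distribution function of the standard logistic distribution."""
    return 1.0 / (1.0 + math.exp(-x))


def category_probabilities(linear_predictor, cutpoints):
    """P(y = l) for each ordered category, given cutpoints in ascending order."""
    # Cumulative probabilities P(y <= l); the highest category closes at 1.
    cumulative = [logistic_cdf(c - linear_predictor) for c in cutpoints] + [1.0]
    probabilities = []
    previous = 0.0
    for value in cumulative:
        probabilities.append(value - previous)
        previous = value
    return probabilities


# Hypothetical values, not estimates from the study.
beta_ipc = math.log(1.07)     # a coefficient whose odds ratio is 1.07
cutpoints = [-1.0, 0.5, 2.0]  # three cutpoints define four ordered categories

baseline = category_probabilities(0.0, cutpoints)
plus_one_sd = category_probabilities(beta_ipc * 1.0, cutpoints)
odds_ratio = math.exp(beta_ipc)
```

Under the proportional odds assumption, raising the standardized IPC score by one unit multiplies the odds of being above any given cutpoint by the same factor, `exp(beta_ipc)`, which is why a single OR per predictor can be reported.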

In order to answer the secondary research questions, exploratory subgroup analyses were undertaken afterwards. Results were adjusted for multiple hypothesis testing based on the false discovery rate [18].

Results

Population characteristics

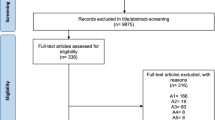

In total, 43 hospitals, comprising 519 departments and 88,243 individual-level observations (25,470 employees and 62,773 patients), surveyed both employees and patients within 2 years in the period from 2003 to 2016 (n = 132 surveys). After PCA, matching of employee-based department-level IPC scores to patient-level PRO data, and restriction to those patients for whom demographic (control) variables were available (see below), 6154 patient observations (unit of analysis) from 19 hospitals, 103 departments, and 49 surveys were included in the analyses. Table 1 presents employees’ and patients’ descriptive statistics. Survey characteristics, including information regarding the specific survey years, department types, and number of surveyed patients and employees from each included hospital, can be found in Additional file 4.

PCA

The PCA revealed three principal components, explaining 67% of the total variance:

- Department-specific IPC (component 1) contains four items which focus on trust, support, professional relationship, and problem solving with colleagues working within the same department,

- Interprofessional organization (component 2) includes three questions on clearly defined tasks and structuredness of team meetings or handover talks,

- Overall IPC (component 3) was comprised of two items addressing the professional relationship with colleagues from other departments.

Both promax and varimax rotation revealed the same composition of components. Thus, we relied on the original (unrotated) principal solution to predict the component scores. The KMO measure of sampling adequacy was .830 and Bartlett’s test of sphericity was significant (p < 0.001). The final set of IPC items can be found in Additional file 2.

PRO items

All PRO items that were included in the final data set were used for regression analyses (see Additional file 2 for main dependent variables).

Association between employee-rated IPC and PRO

When controlling for neither employees’ nor patients’ demographic characteristics (gender, age, education), a statistically significant association was found between the PRO complications and department-specific IPC, meaning that an increase of 1 SD in the first IPC component was associated with statistically significantly higher odds of a higher (i.e., better) PRO rating regarding the question “Did complications occur after your/ your child’s discharge from hospital?” (OR 1.06, 95% CI 1.00–1.11, p = 0.046). This result was consistent after adding control variables to the ordered logistic regression model (OR 1.07, 95% CI 1.00–1.13, p = 0.029) (see Table 2).

In the remaining analyses, no statistically significant associations between IPC components and PROs emerged, either with or without control variables (see Table 2).

To account for the problem of multiple inference (i.e., 15 tests due to five outcomes and three IPC variables), we also report sharpened q-values for the adjusted regression estimates based on the method developed by Benjamini et al. [19], which controls the false discovery rate, i.e., the expected proportion of rejections of the null hypothesis that are false [18]. This approach allows a straightforward comparison with the original p-values and has more power than Bonferroni correction: with a large number of tests, Bonferroni correction (which controls the familywise error rate, i.e., the probability of any false rejection of the null hypothesis) may be considered too conservative. In our case, both sharpened q-values and Bonferroni-corrected p-values (i.e., p-values multiplied by the number of tests) are severely inflated, indicating no significant relationship between IPC and PROs in any of our analyses when accounting for multiple inference.
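The effect of such corrections on a single small p-value among 15 tests can be sketched in Python. Note the assumptions: the sketch implements Bonferroni correction and the plain Benjamini-Hochberg step-up adjustment, which is a simpler relative of the sharpened two-stage q-values used in the study, and all p-values except 0.029 are hypothetical placeholders.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def benjamini_hochberg(p_values):
    """Step-up FDR-adjusted p-values (plain BH, not the sharpened two-stage variant)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity of the adjustment.
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, p_values[index] * m / rank)
        adjusted[index] = running_min
    return adjusted


# Hypothetical p-values for 15 tests; only 0.029 echoes a value reported in the text.
p_values = [0.029, 0.12, 0.25, 0.31, 0.38, 0.44, 0.47, 0.52,
            0.58, 0.63, 0.70, 0.76, 0.81, 0.88, 0.95]

bonferroni_adjusted = bonferroni(p_values)
bh_adjusted = benjamini_hochberg(p_values)
```

In this illustration the smallest p-value of 0.029 becomes 0.435 under both adjustments, which shows how an isolated nominally significant result among 15 tests can lose significance once multiple inference is accounted for.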

Robustness

Focusing on the association between patient- and employee-rated IPC, we estimated the OR (using ordered logistic regression) regarding the question “How well does the communication and coordination between doctors and nurses work?”, which was asked of patients as well as employees. Here, a statistically significant result (OR 1.10, 95% CI 1.02–1.18, p = 0.011) was found (n = 5345 patients), meaning that patients and employees in this sample gave comparable ratings of IPC.

Exploratory analyses

Regarding exploratory analyses, we decided to focus on heterogeneous associations between IPC and PRO by department type. Given that 13 department types remained in the final data set, we decided to analyze the three department types with the largest sample sizes. As a result, we analyzed “Internal medicine” (n = 2100), “Surgery” (n = 1698), and “Pediatrics” (n = 442). As stated in the pre-analysis plan (see above), results generated in these exploratory analyses are intended to be viewed as hypothesis generating rather than hypothesis testing. Nevertheless, we examined Anderson’s q-values to account for the multitude of analyses.

Results are presented in Additional file 5. Significant Anderson’s q-values confirm the significance of the p-values that emerged in these analyses. Statistically significant results for “Surgery” and “Internal medicine” were found for only one IPC component (department-specific IPC). In accordance with the results described above, an increase of 1 SD in department-specific IPC was associated with higher odds of a better PRO rating among patients who were treated within these departments, not only in relation to the PRO complications, but also to the other investigated PROs.

In contrast, results for pediatric patients are more ambiguous. Whereas the odds of a better PRO rating regarding complications were higher with an increase in department-specific IPC (OR 1.15, 95% CI 1.07–1.23, p < 0.001), they were lower with an increase in interprofessional organization (second IPC component) (OR 0.59, 95% CI 0.44–0.79, p < 0.001). The opposite picture arises when looking at the PROs overall satisfaction, less discomforts, and treatment success. Regarding the third IPC component (overall IPC), an increase of 1 SD was associated with a worse PRO rating in all investigated outcomes.

Discussion

The aim of this observational study was to investigate the association between employee-rated IPC and PROs. To answer this research question, we analyzed cross-sectional survey data from employee surveys and matched patient surveys conducted by the Picker Institute Germany between 2003 and 2016. After PCA, IPC was subdivided into three different components: department-specific IPC, interprofessional organization, and overall IPC. Five PRO items, focusing on overall satisfaction, the improvement of discomforts, complications, subjective overall treatment success, and willingness to recommend, were available as outcomes for analyses. After data cleaning, the final dataset contained 6154 patients from 19 hospitals and 103 departments for whom employee-rated department-level IPC scores could be matched. The robustness analysis revealed comparable ratings of IPC by patients and employees, supporting the validity of linking employee-rated IPC with patient-rated PROs to answer our research question. In summary, all ORs found in the analyses are close to 1, suggesting that increasing department-specific IPC, interprofessional organization, or overall IPC was associated with neither clearly higher nor clearly lower odds of a better PRO rating (see Table 2).

As previous PRO studies often lack large-scale survey designs [2, 3, 20], the generalizability of these previous results can be questioned in many cases. In contrast, the strength of this secondary data analysis lies in a dataset containing not only a relatively large sample of employee and patient reports but also a multitude of hospitals and departments. Therefore, we are confident in assuming a high external validity for Germany. Moreover, our study was innovative as the dataset enabled us to match data from both employee and patient surveys regarding a PRO-specific research question with a well-validated PRO measure. The Picker Questionnaire was rated as “the most likely to cover important areas regarding hospital stay and […] the least difficult to understand as well as […] the best designed questionnaire” [21] in a randomized trial of four different patient satisfaction questionnaires [22].

Overall, results show marginally positive or negative ORs and effect sizes, meaning there was evidence of neither a negative nor a positive association between IPC and PRO. The patient-reported outcome “complications” was the only one that was significantly associated with IPC: a positive association emerged between “department-specific IPC” and “complications”. However, Anderson’s q-values were non-significant in each case, suggesting that the significant results are likely due to multiple hypothesis testing.

In the exploratory analyses, some positive associations emerged for the surgery and internal medicine departments, with significant Anderson’s q-values as well. Yet, contradictory results emerged for pediatrics, where IPC components showed negative associations with PRO ratings in some cases.

Other secondary data analyses of Picker survey data are not available. Nevertheless, our results are comparable to those found in experimental studies using the Picker Questionnaire for assessing PROs after specific interprofessional interventions. Luthy et al. [21] used the Picker Questionnaire to assess the effect of interprofessional bedside-rounds versus daily rounds outside the patients’ room in a quasi-experimental controlled study. Although the intervention was found to have a positive effect on the patient-healthcare professionals’ satisfaction in terms of interprofessional collaboration, the willingness to recommend was, in fact, negatively associated with this. O’Leary et al. [5] studied a similar research question in a cluster-randomized controlled trial and used the Picker Questionnaire for the assessment of patients’ satisfaction after discharge. Here, the patients’ overall satisfaction did not differ between intervention and control group. In studies using other kinds of PRO measures, the association between IPC and PROs in terms of quality of life, symptoms, anxiety, and depression remained unclear as well [3, 4, 6]. With regard to the different effects of the above-named IPC components in our analysis, Carron et al. [2] also concluded that the effect of IPC was likely related to the specific IPC type.

According to Reeves et al. [23], IPC interventions can be divided into three sections: interprofessional education, interprofessional practice, and interprofessional organization. Our items (and components) do not directly map into this definition of IPC but include several items related to components also discussed in Reeves et al.: Whereas the components one (“department-specific IPC”) and three (“overall IPC”) both cover items addressing interprofessional practice, component two (“interprofessional organization”) includes items focusing on areas also covered in Reeves et al. under the same term. Unfortunately, items addressing interprofessional education are not included in any of the three components. However, in a Cochrane Review by Reeves et al. [24] positive effects of interprofessional education interventions for healthcare professionals on patient outcomes, adherence rates, patient satisfaction, and clinical process outcomes were found in several studies.

Even if the effect of IPC on specific PROs remains unclear, the effectiveness of IPC should not be defined solely on the basis of PROs but also in the light of different kinds of clinical outcomes. For example, Pascucci et al. [3] systematically reviewed the literature and found positive effects of interprofessional interventions on clinical outcomes, such as systolic blood pressure, diastolic blood pressure, blood levels of glycated hemoglobin (HbA1c), cholesterol level, smoking reduction, and duration of hospitalization.

This study has several limitations. First, the items used for the analyses were not validated individually but only as part of the validation of the whole questionnaire. As the surveys were part of quality improvement processes within hospitals and therefore did not focus on IPC in detail, survey participants were neither given a definition of IPC nor was information on the nature of IPC within departments collected. However, as Picker Questionnaires were validated using focus groups as well as pilot studies to ensure content and face validity [9,10,11,12,13], a common understanding of IPC can be assumed. Second, our sample consists of hospitals that self-selected into being surveyed, and the data represent a voluntary sample of employees and patients within a given time frame. Details about these self-selection mechanisms are not available.

Initiatives such as PaRIS (Patient-Reported Indicator Surveys), launched by the OECD in 2017, show the future direction of PROs in the context of health system assessments [25]. In PaRIS, the aim is to develop and standardize patient-reported indicators as well as to systematically survey PROs and patient-reported experiences of people with chronic diseases in order to assess how health systems address patients’ needs [25]. Future studies will need to examine to what extent results obtained from initiatives like this allow further exploration of the effect of IPC. Moreover, future studies should discuss the conceptualization of IPC and study the effect mechanisms of the different IPC types. As our study focuses on inpatients, a complementary investigation of the outpatient clinical setting is desirable.

Conclusion

Our findings suggest that, overall, the association between department-level IPC and patient-level PROs remains unclear and effect sizes are marginal. However, PROs represent only one piece of the puzzle in the examination of IPC, and promising results were found in previous studies examining the effect of IPC on clinical outcomes. As the three types of IPC may have different effect mechanisms, investigations of the effectiveness of IPC should take the different aspects of these types into account.

Availability of data and materials

The analysis relies on proprietary data collected by the Picker Institute Germany up until March 2016. As such, the data are not publicly available. As the Picker Institute Germany was incorporated into the BQS Institute for Quality and Patient Safety in April 2016, the BQS is the legal entity owning the data. Thus, any requests may be directed to the BQS Institute. However, the code to generate the results (Stata do-file, Stata MP 17.0) is available on request.

Abbreviations

- FDR: False discovery rate

- GPS: Good Practice of Secondary Data Analysis

- IPC: Interprofessional collaboration

- KMO: Kaiser-Meyer-Olkin measure of sampling adequacy coefficient

- OR: Odds ratio

- PCA: Principal Component Analysis

- PRO: Patient-reported outcomes

References

World Health Organization (WHO). Framework for action on Interprofessional Education & Collaborative Practice. 2010.

Carron T, Rawlinson C, Arditi C, Cohidon C, Hong QN, Pluye P, et al. An overview of reviews on Interprofessional collaboration in primary care: effectiveness. Int J Integr Care. 2021;21:31.

Pascucci D, Sassano M, Nurchis MC, Cicconi M, Acampora A, Park D, et al. Impact of interprofessional collaboration on chronic disease management: findings from a systematic review of clinical trial and meta-analysis. Health Policy. 2021;125:191–202.

Shaw C, Couzos S. Integration of non-dispensing pharmacists into primary healthcare services: an umbrella review and narrative synthesis of the effect on patient outcomes. Aust J Gen Pract. 2021;50:403–8.

O’Leary KJ, Killarney A, Hansen LO, Jones S, Malladi M, Marks K, et al. Effect of patient-centred bedside rounds on hospitalised patients’ decision control, activation and satisfaction with care. BMJ Qual Saf. 2016;25:921–8.

Krug K, Bossert J, Deis N, Krisam J, Villalobos M, Siegle A, et al. Effects of an Interprofessional communication approach on support needs, quality of life, and mood of patients with advanced lung Cancer: a randomized trial. Oncologist. 2021;26:e1445–59.

Zwarenstein M, Goldman J, Reeves S. Interprofessional collaboration: effects of practice-based interventions on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2009;Issue 3. Art No.: CD000072. https://doi.org/10.1002/14651858.CD000072.pub2.

Swart E, Gothe H, Geyer S, Jaunzeme J, Maier B, Grobe TG, et al. Good practice of secondary data analysis (GPS): guidelines and recommendations. Gesundheitswesen. 2015;77:120–6.

Riechmann M, Stahl K. Employee satisfaction in hospitals - validation of the Picker employee questionnaire: the German version of the “survey of employee perceptions of health care delivery” (Picker Institute Boston). Gesundheitswesen. 2013;75:e34–48.

Stahl K, Lietz D, Riechmann M, Günther W. Patientenerfahrungen in der Krankenhausversorgung: Revalidierung eines Erhebungsinstruments. Z Med Psychol. 2012;21:11–20.

Stahl K, Schirmer C, Kaiser L. Adaption and validation of the picker employee questionnaire with hospital midwives. J Obstet Gynecol Neonatal Nurs. 2017;46:e105–17.

Stahl K, Steinkamp G, Ullrich G, Schulz W, van Koningsbruggen-Rietschel S, Heuer H-E, et al. Patient experience in cystic fibrosis care: development of a disease-specific questionnaire. Chronic Illn. 2015;11:108–25.

Steinkamp G, Stahl K, Ellemunter H, Heuer E, van Koningsbruggen-Rietschel S, Busche M, et al. Cystic fibrosis (CF) care through the patients’ eyes – a nationwide survey on experience and satisfaction with services using a disease-specific questionnaire. Respir Med. 2015;109:79–87.

Dillman DA. The design and Administration of Mail Surveys. Annu Rev Sociol. 1991;17:225–49.

Bühner M. Einführung in die Test- und Fragebogenkonstruktion. 3., aktualisierte und erw. Aufl. München: Pearson Studium; 2011.

Kaiser HF. An index of factorial simplicity. Psychometrika. 1974;39:31–6.

Yeomans KA, Golder PA. The Guttman-Kaiser criterion as a predictor of the number of common factors. J Royal Stat Soc Series D (The Statistician). 1982;31:221–9.

Anderson ML. Multiple inference and gender differences in the effects of early intervention: a reevaluation of the abecedarian, Perry preschool, and early training projects. J Am Stat Assoc. 2008;103:1481–95.

Benjamini Y, Krieger AM, Yekutieli D. Adaptive linear step-up procedures that control the false discovery rate. Biometrika. 2006;93:491–507.

Kaiser L, Conrad S, Neugebauer EAM, et al. Interprofessional collaboration and patient-reported outcomes in inpatient care: a systematic review. Syst Rev. 2022;11:169. https://doi.org/10.1186/s13643-022-02027-x.

Luthy C, Francis Gerstel P, Pugliesi A, Piguet V, Allaz AF, Cedraschi C. Bedside or not bedside: Evaluation of patient satisfaction in intensive medical rehabilitation wards. PLoS One. 2017;12(2):e0170474. https://doi.org/10.1371/journal.pone.0170474.

Perneger TV, Kossovsky MP, Cathieni F, di Florio V, Burnand B. A randomized trial of four patient satisfaction questionnaires. Med Care. 2003;41:1343–52.

Reeves S, Clark E, Lawton S, Ream M, Ross F. Examining the nature of interprofessional interventions designed to promote patient safety: a narrative review. Int J Qual Health Care. 2017;29(2):144–50. https://doi.org/10.1093/intqhc/mzx008.

Reeves S, Perrier L, Goldman J, Freeth D, Zwarenstein M. Interprofessional education: effects on professional practice and healthcare outcomes (update). Cochrane Database Syst Rev. 2013;2013:CD002213.

OECD. Putting people at the centre of health care. PaRIS survey of Patients with Chronic Conditions. 2019.

Acknowledgements

The authors would like to thank the Picker Institute Germany for permission to use the pseudonymized survey data in this study.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Author information

Contributions

LK and DP conceptualized the study. LK analyzed the data and wrote the draft manuscript of the observational study. EAN, and DP critically reviewed the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

As our study represents a secondary data analysis, data collection was already completed and prior ethics approval was not applicable. Nevertheless, we reached out to the ethics committee for an approval of the data analysis and received a positive response (ethics committee of the Witten/Herdecke University, 29 June 2017, Request 96/2017). All methods were performed in accordance with relevant guidelines. At the time of the survey, Picker Germany informed all employees as well as patients that participation was voluntary, that non-participation would not lead to any consequences, and that data would be analyzed in pseudonymized form. Patients as well as employees were informed that survey participation is equivalent to informed consent.

Consent for publication

Patients as well as employees were advised that participation is voluntary, and that data will be used in pseudonymized form in statistical analyses and reports. The Picker Institute Germany allowed LK to publish the results of this study.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Questionnaires.

Additional file 2.

List of variables.

Additional file 3.

Prespecified Model for PCA.

Additional file 4.

Survey characteristics.

Additional file 5.

Exploratory Analyses.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Kaiser, L., Neugebauer, E.A.M. & Pieper, D. Interprofessional collaboration and patient-reported outcomes: a secondary data analysis based on large scale survey data. BMC Health Serv Res 23, 5 (2023). https://doi.org/10.1186/s12913-022-08973-5

DOI: https://doi.org/10.1186/s12913-022-08973-5