Abstract

Background

Following the Affordable Care Act (ACA), millions of people gained Medicaid insurance. Most electronic health record (EHR) tools to date provide clinical-decision support and tracking of clinical biomarkers; we developed an EHR tool to support community health center (CHC) staff in assisting patients with health insurance enrollment documents and tracking insurance application steps. The objective of this study was to test the effectiveness of the health insurance support tool in (1) assisting uninsured patients in gaining insurance coverage; (2) ensuring insurance continuity for patients with Medicaid insurance (preventing coverage gaps between visits); and (3) improving receipt of cancer preventive care.

Methods

In this quasi-experimental study, 23 clinics received the intervention (EHR-based insurance support tool) and were matched to 23 comparison clinics. CHCs were recruited from the OCHIN network. EHR data were linked to Medicaid enrollment data. The primary outcomes were rates of uninsured and Medicaid visits. The secondary outcomes were receipt of recommended breast, cervical, and colorectal cancer screenings. A comparative interrupted time-series analysis using Poisson generalized estimating equation (GEE) modeling was performed to evaluate the effectiveness of the EHR-based tool on the primary and secondary outcomes.

Results

Immediately following implementation of the enrollment tool, the uninsured visit rate decreased by 21.0% (Adjusted Rate Ratio [ARR] = 0.790, 95% CI = 0.621–1.005, p = .055) while Medicaid-insured visits increased by 4.5% (ARR = 1.045, 95% CI = 1.013–1.079) in the intervention group relative to the comparison group. The cervical cancer preventive ratio increased by 5.0% (ARR = 1.050, 95% CI = 1.009–1.093) immediately following implementation of the enrollment tool in the intervention group relative to the comparison group. Among patients with tool use, 81% were enrolled in Medicaid 12 months after tool use. Of the 19% who were never enrolled in Medicaid following tool use, the largest share (44%) were uninsured at the time of tool use.

Conclusions

A health insurance support tool embedded within the EHR can effectively support clinic staff in assisting patients in maintaining their Medicaid coverage. Such tools may also have an indirect impact on evidence-based practice interventions, such as cancer screening.

Trial registration

This study was retrospectively registered on February 4th, 2015 with Clinicaltrials.gov (#NCT02355262). The registry record can be found at https://www.clinicaltrials.gov/ct2/show/NCT02355262.

Background

Compared with having insurance coverage, lack of health insurance in the United States is linked to unmet healthcare needs [1], intermittent access to necessary treatment and services (e.g., medication) [2,3,4,5,6,7], and lower likelihood of receipt of evidence-based services as recommended [8,9,10,11,12,13,14,15]. These delays can lead to higher rates of disease incidence and mortality, and increased healthcare costs [11, 16,17,18,19,20,21,22,23,24]. In contrast, greater access to health insurance is associated with lower mortality rates [25], lower out-of-pocket expenditures and medical debts (especially with public insurance), and greater self-reported mental and physical health [26]. The positive impact of health insurance on life expectancy is due, in part, to health insurance facilitating receipt of recommended care, especially preventive care services essential for early detection and management of cancer and other chronic diseases. For instance, timely receipt of cancer screening and prevention has contributed to the increase in cancer survival rates [27].

Based on evidence linking health insurance to better health outcomes, the Patient Protection and Affordable Care Act (ACA) was passed in 2010 to expand access to health insurance coverage in the United States (US) [28,29,30], and between 2013 and 2018 the number of uninsured people in the US dropped from 44.8 million to 27.9 million [1]. As predicted, the ACA expansions in access to health insurance were associated with higher rates of receipt of recommended care, including provision of preventive services [31].

Successfully enrolling in an insurance program and staying enrolled, however, can be difficult and complex, especially for patients eligible for Medicaid insurance. There are numerous barriers [32,33,34] to both initial enrollment and re-enrollment in Medicaid, including patient stigma, lack of knowledge on how to apply, a complex application process, and misunderstandings about whether an individual meets all of the eligibility criteria. The ACA led to improvement in re-enrollment, with states using available data on the beneficiary to determine ongoing eligibility [35]. That said, if data are incomplete, the beneficiary must fill out the missing information within 30 days of the termination period, which can be challenging, and if enrollment is not renewed within 90 days of the termination date, the beneficiary must complete a new application [35]. To help individuals overcome these challenges, the Navigator program was implemented as part of the ACA legislation to provide outreach, education, and enrollment assistance to adults seeking or needing health insurance [36]. The Centers for Medicare and Medicaid Services (CMS) provided funds for the navigators, who mainly assisted adults with obtaining coverage through the health insurance marketplace for the first few years after the ACA. Since then, funds for this navigator program have been reduced substantially [37]. In addition to initial investments in the Navigator program, the Health Resources and Services Administration (HRSA) provided outreach and enrollment assistance grants to federally qualified health centers to assist their patients with obtaining insurance [37]. Over 1000 community health centers (CHCs) have been awarded these HRSA grants [38].

CHCs provide services to nearly 28 million patients every year. The patient population of CHCs is predominantly low-income (91% in or near poverty), racial and ethnic minorities (36% Hispanic/Latino; 22% Black), and Medicaid beneficiaries (48%) or uninsured patients (23%). CHCs reduce cost barriers (through sliding scale fee structures), accept patients without insurance, and tailor services to specific populations (e.g., homeless, non-English speakers) [36].

Unlike the CMS navigator program which is on the decline, the HRSA-funded outreach and enrollment (O&E) efforts supported ongoing work done by eligibility specialists who are located within or directly linked to the CHCs. These O&E specialists assist patients with enrolling and re-enrolling in health insurance, especially with Medicaid. Patients needing assistance may self-identify or be referred at the time of an appointment, or identified for outreach prior to an appointment so that necessary insurance is in place by the time of their appointment. The HRSA O&E awards required CHC grant recipients to track the number of times they assisted individuals with health insurance. Initially, these requirements were complex and included keeping track of the count of persons assisted, applications submitted, and estimation of the number of individuals enrolled.

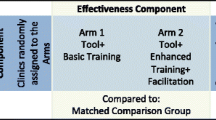

Most O&E specialists did not have tools embedded within the EHR or other clinical practice management systems to facilitate their O&E work. Yet, recent advances in health information technology (HIT) capabilities could provide opportunities for developing HIT tools to facilitate more timely and effective support for health insurance enrollment and maintenance. Within a hybrid effectiveness-implementation design [39], we designed and implemented a health insurance support tool integrated within the electronic health record (EHR) to facilitate the essential services O&E navigators provide. By facilitating these services, more patients could be assisted, gain insurance, or avoid insurance gaps, improving their access to care. In a two-arm cluster-randomized trial (described elsewhere) [40], we recruited a total of 23 clinics in seven health center systems, randomly assigned to one of two intervention arms. Both arms received the intervention (insurance support tool); the only difference was the degree of implementation support, which lasted 18 months. Qualitative data collection, including ethnographic observation and semi-structured interviews with key stakeholders at CHCs, focused on barriers and facilitators to adoption of the enrollment tool. Details about the implementation of the tool and differences in tool adoption between the two intervention arms appear elsewhere [40,41,42]. To summarize the findings of the implementation trial: both arms used the tool, though adoption was low (16%), and uptake was higher in Arm 2 (which received practice coaching). Qualitative interviews identified the perceived relative advantage of the tool, implementation climate, and leadership engagement as facilitators of adoption [41].

The aim of this quasi-experimental study was to determine whether use of the EHR insurance support tool helped patients apply for insurance and avoid insurance gaps, and whether it was associated with improvement in cancer preventive care delivery. Here, we assess whether the tool was effective in improving outcomes, given that some, albeit not all, CHCs adopted the tool.

Methods

Setting

We recruited CHCs from the OCHIN practice-based research network (PBRN) [43]. OCHIN is a large network of CHCs using a single instance of the Epic EHR. Its centrally hosted Epic EHR is deployed in nearly 100 organizations caring for nearly 2,000,000 patients across 18 states. Participating CHCs were recruited from a subset of OCHIN PBRN members meeting the following criteria: location in a state that expanded Medicaid in 2014, implementation of the OCHIN EHR prior to 2013, and > 1000 adult patients with ≥1 visit in the year prior to the study.

Intervention: insurance support tool

The insurance support tool (referred to as the “enrollment tool”), described elsewhere [40, 41], consisted of an electronic ‘form’ which appeared alongside typical patient registration processes within the EHR. O&E specialists or other staff who assist patients with registration and insurance enrollment enter the data in the form and use it to track enrollment. The enrollment tool includes the following functionalities:

Tracking and documenting

Fillable fields to collect and document insurance enrollment information such as status of insurance application, insurance ID, effective date, eligibility status, number and type of assists provided, total number of individuals assisted, notes, etc.

Panel management function

Allows users to (1) run a report of patients with upcoming appointments within 30 days and identify those without health insurance; and (2) run a daily report of health insurance application assistance in progress.

Retrospective data report

Reports the number of total individuals assisted with each opened form to generate HRSA quarterly reporting of outreach and enrollment assistance provided.
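The first panel management report described above can be illustrated with a minimal sketch. The `Appointment` record, its field names, and the use of `None` to mean "no insurance on file" are hypothetical choices made for this example; they are not the tool's actual data model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Appointment:
    """Hypothetical appointment record used only for this illustration."""
    patient_id: str
    appointment_date: date
    insurance: Optional[str]  # None means no coverage on file (assumed encoding)


def upcoming_uninsured(appointments: List[Appointment],
                       today: date,
                       window_days: int = 30) -> List[str]:
    """Return sorted IDs of patients with an appointment in the next
    `window_days` days and no insurance on file."""
    cutoff = today + timedelta(days=window_days)
    flagged = {
        a.patient_id
        for a in appointments
        if today <= a.appointment_date <= cutoff and a.insurance is None
    }
    return sorted(flagged)
```

A patient with several upcoming appointments appears once in the result, which matches the idea of a worklist of people to contact rather than a list of visits.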

Tool adoption was measured as the first instance the enrollment form was touched for a specific patient. Though the form could be updated, to assess effectiveness, we utilized the first instance of tool use.

Comparison group

We used propensity score matching [44] to identify the comparison group of 23 CHCs that most closely resembled the intervention group on clinic and patient characteristics that have the potential to confound the enrollment tool’s effect on health insurance coverage and cancer screening rates. Eligible clinics for the study’s comparison group were matched based on the state of the organization (i.e., comparison clinics had to be in the same states as those in intervention clinics), the total number of patients in 2014 (i.e., prior to intervention initiation), and the percentage of uninsured visits, female gender, and Medicaid beneficiaries. The 23 comparison clinics were selected on a 1:1 ratio based on the nearest available match from the intervention clinics. The intent was to achieve the optimal overall balance in the matching characteristics between the intervention and comparison groups. Balance diagnostics were performed to assess whether the propensity score model had been properly specified [45]. All intervention and matched comparison clinics received HRSA grant funding for outreach and enrollment and are located in Oregon, California, and Ohio.
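As an illustration of 1:1 nearest-neighbor selection within the same state, the sketch below matches each intervention clinic to the closest available comparison clinic on an already-estimated propensity score. The greedy order, matching without replacement, and the clinic identifiers are assumptions made for the example; the source does not specify these details, and estimation of the score itself is not shown.

```python
from typing import Dict, List, Tuple

# Each clinic maps to (state, propensity score); scores are assumed to be
# estimated beforehand from clinic and patient characteristics.
Clinic = Tuple[str, float]


def match_nearest(treated: Dict[str, Clinic],
                  pool: Dict[str, Clinic]) -> List[Tuple[str, str]]:
    """Greedy 1:1 nearest-neighbor matching on the propensity score,
    restricted to the same state, without replacement."""
    available = dict(pool)
    pairs: List[Tuple[str, str]] = []
    for t_id in sorted(treated):
        t_state, t_score = treated[t_id]
        candidates = [c for c, (state, _) in available.items() if state == t_state]
        if not candidates:
            continue  # no same-state comparison clinic left for this clinic
        # Closest score wins; ties are broken by clinic ID for reproducibility.
        best = min(candidates, key=lambda c: (abs(available[c][1] - t_score), c))
        pairs.append((t_id, best))
        del available[best]
    return pairs
```

Greedy matching depends on processing order, so balance diagnostics on the matched groups remain necessary regardless of how pairs are chosen.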

Study period

The enrollment tool was implemented in mid-September 2016. There are overlapping yet separate study periods for (1) the intent-to-treat analysis assessing tool effectiveness on the primary outcomes of insurance status and cancer screenings at the clinic level and (2) the effect of the treatment on the treated, which evaluates, at the patient level, the impact among those for whom the tool was used. For the intent-to-treat analysis, we collected data on tool adoption for 18 months before tool implementation and 18 months after tool implementation. For the analysis estimating the effect of treatment on the treated, the study period started 6 months prior to tool implementation, in order to establish baseline insurance, followed by 18 months of tool implementation, plus an additional 6 months, to allow post-tool follow-up visits and Medicaid application processing time, for a total of 24 months of evaluation.

Patient inclusion

Patients aged 19–64 with ≥1 ambulatory visit prior to tool use (assessment of insurance status/cancer screenings) were included. EHR data from patients in Oregon CHCs were linked to Medicaid enrollment data from the Oregon Health Authority to determine enrollment status.

Measures

Insurance status

EHR data contain information on payor types as well as billable codes for services performed at each ambulatory care visit; as these data are used for billing purposes, they represent reliable information on insurance status and services received at each visit [46]. For the primary outcome of insurance status, we considered separate monthly insurance rates (including Medicaid, private, other public, and uninsured). Each monthly insurance rate was estimated as the number of monthly encounters paid by a specific insurance type (or self-pay) divided by total visits for patients aged 19–64 years old. For the effect of the treatment on the treated analyses, we used the visit date nearest to the tool use to determine baseline insurance status. Patients could be uninsured or insured by Medicaid, or classified as “other” insurance, which consisted mainly of private insurance and also Medicare and public programs (e.g., grants).
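The monthly insurance rate defined above (encounters paid by a given payor type divided by total visits) can be sketched as follows. The payor labels and the flat list of visits for one clinic-month are assumptions made for the example.

```python
from collections import Counter
from typing import Dict, Iterable


def monthly_insurance_rates(payors: Iterable[str]) -> Dict[str, float]:
    """Share of one clinic-month's visits paid by each payor type.

    `payors` holds one label per visit for patients aged 19-64
    (e.g. "medicaid", "uninsured", "private"); labels are illustrative.
    """
    counts = Counter(payors)
    total = sum(counts.values())
    if total == 0:
        return {}  # no eligible visits that month
    return {payor: n / total for payor, n in counts.items()}
```

For a month with six Medicaid visits, three uninsured visits, and one private visit, the function returns shares of 0.6, 0.3, and 0.1 respectively.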

Cancer screenings

EHR data were used to assess whether patients were up-to-date with cervical, colorectal, and breast cancer screenings. Services due and services received were identified through procedure codes, diagnosis codes, lab/imaging/scanned results, active problem lists, and longitudinal “health maintenance” records. Services that were ordered but not verified as received (via a direct result or health maintenance result) were not counted. For each measure, individuals were identified as “due” for screening based on sex and age eligibility. For individual measures, patients for whom the screening was not indicated based on special circumstances were excluded (e.g., women with a history of total hysterectomy were excluded from cervical cancer screening). A preventive ratio was calculated for each cancer screening [47, 48]. A preventive ratio is the total person-time covered (after delivery of a particular preventive service) divided by the total person-time eligible for a particular service. This calculation results in a percentage of time “covered” by a preventive service (e.g., a mammogram “covers” an individual for breast cancer screening for 2 years). The percentage ranges from 0 to 100%, where 100% represents complete coverage (i.e., services received without delay). Patient-level preventive ratios were averaged across patients within clinics to produce a clinic-level measure of performance. We use preventive ratios over conventional metrics (i.e., binary receipt of a service over a certain period) because they provide an estimate of the average time covered (or delayed) by the preventive service as a percentage rather than a dichotomous value. Using a clinic-level preventive ratio for each cancer screening, we are able to assess whether intervention clinics had fewer patients overdue for screenings than comparison clinics.
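To make the preventive ratio concrete, the sketch below computes it for one patient as covered days divided by eligible days, then averages patient-level ratios to a clinic-level value. Counting time in days, the function names, and the treatment of overlapping coverage periods are assumptions made for the example rather than the exact procedure in [47, 48].

```python
from datetime import date, timedelta
from typing import List


def preventive_ratio(eligible_start: date,
                     eligible_end: date,
                     service_dates: List[date],
                     coverage_days: int) -> float:
    """Fraction of eligible days covered by a preventive service.

    Each service covers `coverage_days` days from its date; overlapping
    coverage is counted once and clipped to the eligibility window.
    """
    total_days = (eligible_end - eligible_start).days
    if total_days <= 0:
        raise ValueError("eligibility window must have positive length")
    covered = 0
    covered_until = eligible_start  # end of coverage already counted
    for d in sorted(service_dates):
        start = max(d, covered_until)
        end = min(d + timedelta(days=coverage_days), eligible_end)
        if end > start:
            covered += (end - start).days
            covered_until = end
    return covered / total_days


def clinic_preventive_ratio(patient_ratios: List[float]) -> float:
    """Clinic-level measure: mean of patient-level preventive ratios."""
    if not patient_ratios:
        raise ValueError("no eligible patients")
    return sum(patient_ratios) / len(patient_ratios)
```

For example, a patient eligible from 2015-01-01 to 2017-01-01 with one mammogram on 2016-01-01 and a 2-year coverage period is covered for 366 of 731 eligible days, a ratio of about 50%, whereas a binary "screened in the period" metric would count the same patient as fully up to date.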

Statistical analyses

Unadjusted descriptive statistics of patient characteristics and insurance status were reported at the clinic-level and stratified by intervention group. Characteristics included the average percent of female patients over the entire study period, percent of patients at < 138% of the federal poverty line (FPL), percent speaking English or Spanish, and percent classified as Non-Hispanic White or non-Hispanic minorities (all other races)/Hispanic, and state in which the clinics were located. Chi-square tests and t-tests assessed the differences in characteristics between comparison and intervention groups.

To assess the effectiveness of the enrollment tool on insurance status, we first compared changes in clinic-level rates of insurance pre- versus post-tool implementation in intervention relative to comparison clinics using an intent-to-treat approach. We utilized a comparative interrupted time-series framework to estimate the monthly rate of three types of insurance status (uninsured, Medicaid, and private/other insurance) in 23 intervention clinics and 23 comparison clinics across the 36-month study period. The “interruption”, or the implementation of the enrollment tool, occurred in the middle of the study, where we modeled the possibility of a level change in the monthly rate (i.e., an immediate intervention effect following implementation) and a slope change in the monthly rate (i.e., post-intervention change in the rate over time). Although several modeling options are possible, including a difference-in-differences approach, we utilized a comparative interrupted time-series approach as it provides a more flexible inferential framework than difference-in-differences designs given our monthly data collection and study design. Additionally, comparative interrupted time-series has often been noted as a more powerful approach than difference-in-differences [49]. To model the clinic-level monthly insurance rate, we fit a Poisson generalized estimating equation (GEE) model, assuming an autoregressive covariance matrix of degree 1 (AR1) to account for the autocorrelation of monthly observations within clinics. We used the same modeling approach to assess the effectiveness of the enrollment tool on three different cancer screenings: breast cancer, cervical cancer, and colorectal cancer. Overall, our regression models took the following form:
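A form consistent with the term definitions that follow can be written as below. This is a reconstruction from those definitions rather than a verbatim copy of the model, so the exact parameterization is an assumption: the intercept B_0, the variable name time_t, the covariate notation X_{k,jt}, and pairing B_3 with group_j are not stated explicitly in the text, and any additional post-period slope terms the authors may have included are not shown.

```latex
\log\!\big(E[Y_{jt}]\big) =
    B_0
  + B_1\,\mathrm{time}_t
  + B_2\,\mathrm{post}_t
  + B_3\,\mathrm{group}_j
  + B_4\,(\mathrm{group}_j \times \mathrm{time}_t)
  + B_5\,(\mathrm{group}_j \times \mathrm{post}_t)
  + \sum_{k=6}^{10} B_k\,X_{k,jt}
  + \log(\mu_{jt})
```

Because the model is on the log scale with offset \log(\mu_{jt}), exponentiated coefficients are read as rate ratios.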

where Y_jt is the number of patients in practice j who had an insurance visit or cancer screening during time t. The trend of the control group is denoted by B1. The intervention period is denoted by B2, with the indicator post_t equal to 1 in the last half of the study (18 months) and 0 otherwise. Intercept differences for clinics in the intervention group are represented by group_j. The difference in the linear trend of the intervention group relative to the comparison group in the pre-period is B4. B5 represents the level change in slope of the treatment on the intervention group in the post-period. B6 to B10 represent clinic- and state-level characteristics. Lastly, μ_jt is the number of patients in clinic j at time t who were eligible (i.e., the denominator) for an insurance visit or cancer screening, and the logarithm of this term is the offset of the Poisson regression model. To assess the validity of a comparative interrupted time-series approach, we evaluated pre-period outcome trends between the intervention and comparison groups using visual inspection, and the approach was deemed appropriate [50]. For added flexibility, where pre-period outcome trends were not parallel or fluctuated, additional model terms for time and its interaction with the treatment indicator were included to account for these differences. Lastly, post-estimation of these models produced estimated monthly insurance and screening rates over the 36-month study period, with the addition of a post-period counterfactual trend for the intervention group for ease of interpretation. Statistical significance was set at p < 0.05. Statistical software R/RStudio version 4.0.5 was used, including the packages geepack and emmeans for statistical modelling and post-estimation procedures.
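The coding of the time, period, and group terms described above can be sketched for a single clinic-month. The 1-to-36 month index, the dictionary keys, and the function name are hypothetical; the analysis itself was run in R with geepack, and this Python fragment only illustrates how the indicator and interaction terms take their values.

```python
from typing import Dict


def cits_terms(month: int, in_intervention: bool, n_pre: int = 18) -> Dict[str, int]:
    """Design terms for one clinic-month in a comparative interrupted
    time-series with `n_pre` pre-implementation months.

    `month` is assumed to run from 1 to 36; months after `n_pre` are
    post-implementation.
    """
    post = 1 if month > n_pre else 0
    group = 1 if in_intervention else 0
    return {
        "time": month,                  # underlying monthly trend
        "post": post,                   # 1 in the last 18 months, else 0
        "group": group,                 # 1 for intervention clinics
        "group_x_time": group * month,  # pre-period trend difference
        "group_x_post": group * post,   # post-period change specific to intervention
    }
```

Only intervention clinics in post-implementation months have a nonzero `group_x_post` term, which is why its coefficient isolates the change attributable to the tool relative to the comparison group.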

We estimated the effect of the treatment on the treated to determine whether tool use was associated with gaining and maintaining Medicaid coverage. We summarized the number of patients with a tool instance in Oregon (97% of tool instances were in Oregon) and assessed their baseline and follow-up insurance status.

The Institutional Review Board of the Oregon Health & Science University has reviewed and approved this study.

Results

Table 1 displays the unadjusted characteristics of patients in the intervention and comparison groups. Patients in the intervention group were less likely to be below 138% of the FPL and less likely to be English speaking than those in the comparison group. The distribution of insurance visits was similar in both intervention and comparison groups, composed primarily of Medicaid-insured visits over the 36 months. Intervention clinics had higher preventive ratios for breast and cervical cancer screenings and lower ratios for colorectal cancer screenings than the comparison group.

Tool effectiveness on insurance coverage – intent-to-treat

Figure 1 displays the estimated changes in clinic-level rates of uninsured, Medicaid, and other insured visits before and after the tool implementation among the intervention and comparison groups. The intervention group prior to tool implementation had lower uninsured visit rates and Medicaid visit rates than the comparison group.

Figure 1. Effectiveness of the enrollment tool on insurance visit rates, 18 months pre- and post-implementation. Note: Other insurance includes mainly private insurance, and also other public programs. The dotted black vertical line denotes the implementation of the insurance tool. Clinic-level insurance visit rates were estimated from a Poisson GEE model, adjusted for percent female, < 138% FPL, percent English, percent non-Hispanic White, and state, and utilized an auto-regressive correlation matrix with degree 1. The dotted blue line represents the predicted trend if the enrollment tool had never been implemented.

Immediately following implementation of the enrollment tool, the uninsured visit rate decreased by 21.0% (Adjusted Rate Ratio [ARR] = 0.790, 95% CI = 0.621, 1.005, p = 0.055) in the intervention group relative to the comparison group (Table 2). Though there was an immediate impact on the uninsurance rate, the trends in uninsured visit rates (i.e., slope) were similar between intervention and comparison groups (ARR = 1.005; 95% CI = 0.990, 1.021). Similarly, Medicaid-insured visits increased by 4.5% (ARR = 1.045; 95% CI = 1.013, 1.079) immediately after tool implementation in the intervention group compared to the comparison group; the trends in Medicaid visit rates in the intervention and comparison groups were similar (ARR = 1.001; 95% CI = 0.998, 1.004). We observed no immediate impact of the intervention on other insurance types (ARR = 0.999; 95% CI = 0.904, 1.104).
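The percentages reported above follow directly from the rate ratios: a rate ratio r corresponds to a relative change of (r − 1) × 100%. The short sketch below shows that arithmetic, together with a check of whether a 95% confidence interval excludes 1; the function names are chosen for this example only.

```python
def percent_change(rate_ratio: float) -> float:
    """Relative change implied by a rate ratio, in percent (one decimal)."""
    return round((rate_ratio - 1.0) * 100.0, 1)


def ci_excludes_one(lower: float, upper: float) -> bool:
    """True when a rate-ratio confidence interval does not contain 1."""
    return lower > 1.0 or upper < 1.0


# Values taken from the results reported in the text.
uninsured_change = percent_change(0.790)  # a 21.0% decrease
medicaid_change = percent_change(1.045)   # a 4.5% increase
```

The uninsured interval (0.621, 1.005) narrowly includes 1, consistent with the reported p = 0.055, while the Medicaid interval (1.013, 1.079) excludes 1.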

Tool effectiveness on insurance coverage – effect of the treatment on the treated

Table 3 shows the effect of the treatment on the treated. First, 97% of tool use was observed in Oregon clinics. At the time of tool use, 75% of patients had a Medicaid visit, 17% had an uninsured visit, and 6% had a privately insured visit. Among those with tool use, 81% were enrolled in Medicaid 12 months after tool use. Of those, 82% had a Medicaid visit at the time of tool use while 10% had an uninsured visit. Of the 19% who were never enrolled in Medicaid following tool use, the largest share (44%) were uninsured at the time of tool use. Overall, patients who were uninsured were the least likely to be enrolled in Medicaid within 12 months of tool use (50%) relative to patients with other insurance types (Medicaid = 93%, Private = 78%).

Tool effectiveness on cancer screenings – intent-to-treat

Figure 2 displays the estimated changes in clinic-level screening rates of breast cancer, cervical cancer, and colorectal cancer before and after tool implementation among the intervention and comparison groups. The intervention group, prior to tool implementation, had higher breast cancer and cervical cancer screening preventive ratios and lower colorectal cancer screening preventive ratios than the comparison group. Immediately following implementation of the enrollment tool, cervical screening preventive ratio increased 5.0% (ARR = 1.050, 95% CI = 1.009, 1.093) in the intervention group relative to comparison group (Table 4). Though there was an immediate increase, the trends in cervical cancer preventive ratio were similar between intervention and comparison groups (ARR = 0.999; 95% CI = 0.996, 1.002). For breast and colorectal cancer preventive ratios, the immediate impact and the trends over time were not different between the intervention and comparison groups.

Figure 2. Effectiveness of the enrollment tool on cancer screening preventive ratios, 18 months pre- and post-implementation. Note: Preventive ratios represent the percentage of patient-time covered by needed cancer screening. The dotted black vertical line denotes the implementation of the insurance tool. Preventive ratios were estimated from a Poisson GEE model, adjusted for percent female, < 138% FPL, percent English, percent non-Hispanic White, and state, and utilized an auto-regressive correlation matrix with degree 1. The dotted blue line represents the predicted trend if the enrollment tool had never been implemented.

Discussion

The health insurance support tool was associated with an initial change in uninsured and Medicaid visits as well as in receipt of cancer screenings at the clinic level. Following this initial positive change, the intervention clinics followed similar trends in outcomes relative to the comparison clinics. The tool, however, was only used for a small fraction of patients (16%, see published results on implementation outcomes [41]), which likely explains the small clinic-level changes. We believe the low tool adoption rates were due, in part, to the low intensity of the implementation support, varied uptake of the tool, and contextual factors (e.g., change in leadership) [41]. First, we did not provide rigid guidance on best practices for implementing this tool but instead let the O&E specialists decide how to use the enrollment tool based on their unique patient populations and needs. As such, the tool was used differently than expected. For instance, O&E specialists in one health system used the tool mainly for outreach, assisting community members who never became established patients at the CHC. Clinics also had varied perspectives on which individuals needed tool use (i.e., who is at risk for uninsurance) and who required support based on resources available through their existing systems of O&E specialists. While it was important for this tool to be adaptable to existing clinic support systems, the absence of these definitions made evaluation of tool impact more challenging. This study shows that an EHR-based insurance tool can be useful in clinical practice and may have some associated positive impacts on preventive care, though these were small with this flexible implementation strategy.

Despite some implementation challenges, O&E specialists did use the tool often for Medicaid re-enrollment, which is essential in avoiding coverage and care gaps. As highlighted by the effect of treatment on the treated analysis, most patients with tool use were enrolled and continued to be enrolled in Medicaid. This result is supported by qualitative interviews with O&E specialists, who noted that the enrollment tool was most useful for renewing insurance of Medicaid patients who could be reached through the regional Medicaid program eligibility lists and other forms of in-reach. This finding underscores that EHR-based tools can be developed to assist both clinical and non-clinical personnel in their work. Additionally, it suggests the need for electronic linkage of Medicaid enrollment dates to facilitate clinic efforts to reach out to patients and help them avoid coverage gaps.

We expected that the tool would be most useful for patients without health insurance. However, only a small fraction of tool use instances involved an uninsured patient (17%) relative to Medicaid patients (74%). Additionally, most uninsured patients assisted did not gain insurance. This result suggests continued barriers for patients to gain insurance. Previous studies have shown persistent barriers to gaining insurance among various racial and ethnic groups [51, 52]. Continued effort to provide insurance to all is warranted.

Limitations

This study has some limitations. First, intervention clinics volunteered to be part of the study and are not representative of other CHCs. Second, uninsured patients are less likely to seek care than insured patients and may not be accurately represented in our study. Third, intervention clinics decided on how to use the tool and for whom, rendering effectiveness analysis difficult.

Conclusion

Insurance support tools integrated within an EHR can be used to streamline health insurance outreach, tracking, and reporting, especially if linked to external sources such as Medicaid enrollment dates for in-reach purposes. Such tools may also have an indirect impact on evidence-based practice interventions, such as cancer screening.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Change history

02 May 2022

A Correction to this paper has been published: https://doi.org/10.1186/s12913-022-07974-8

Abbreviations

- ACA: Affordable Care Act
- EHR: Electronic Health Record
- CHC: Community Health Center
- GEE: Generalized Estimating Equation
- O&E: Outreach and Enrollment
- CMS: Centers for Medicare and Medicaid Services
- HRSA: Health Resources and Services Administration
- HIT: Health Information Technology
- PBRN: Practice-Based Research Network
- FPL: Federal Poverty Level

Acknowledgements

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors also acknowledge the participation of our partnering health systems.

Funding

This work was supported by the National Cancer Institute (NCI) grant number R01CA181452. This work was also supported by NCI Award Number P50CA244289. This program was launched by NCI as part of the Cancer Moonshot℠. The funder had no direct role in study design, data collection, analysis, or interpretation, or writing the manuscript.

Author information

Contributions

NH provided conceptualization, supervision, and writing - original draft. SV provided data curation, formal analysis, and writing - review and editing. MM provided conceptualization, supervision, methodology, visualization, and writing - review and editing. LM provided writing - review and editing. BH provided conceptualization, data curation, and writing - review and editing. AB provided data curation, formal analysis, and writing - review and editing. DJC provided conceptualization, supervision, formal analysis, and writing - review and editing. JED provided funding acquisition, conceptualization, supervision, and writing - review and editing. All authors contributed to and approved the final manuscript.

Ethics declarations

Ethics approval

This study has been reviewed and approved by the Oregon Health & Science University Institutional Review Board (eIRB # 00009862). Participating health center management provided written consent for their health center to participate in the project. Individual staff who were interviewed provided verbal consent to participate. Verbal consent was deemed sufficient because of the neutral content being discussed, the fact that all data were deidentified, and because of the need to efficiently and informally discuss workflow with many staff.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: the given names and family names of all authors were erroneously transposed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Huguet, N., Valenzuela, S., Marino, M. et al. Effectiveness of an insurance enrollment support tool on insurance rates and cancer prevention in community health centers: a quasi-experimental study. BMC Health Serv Res 21, 1186 (2021). https://doi.org/10.1186/s12913-021-07195-5

DOI: https://doi.org/10.1186/s12913-021-07195-5