Abstract

Background

Children with health insurance have increased access to healthcare and receive higher quality care. However, despite recent initiatives expanding children’s coverage, many remain uninsured. New technologies present opportunities for helping clinics provide enrollment support for patients. We developed and tested electronic health record (EHR)-based tools to help clinics provide children’s insurance assistance.

Methods

We used mixed methods to understand tool adoption, and to assess impact of tool use on insurance coverage, healthcare utilization, and receipt of recommended care. We conducted intent-to-treat (ITT) analyses comparing pediatric patients in 4 intervention clinics (n = 15,024) to those at 4 matched control clinics (n = 12,227). We conducted effect-of-treatment-on-the-treated (ETOT) analyses comparing intervention clinic patients with tool use (n = 2240) to intervention clinic patients without tool use (n = 12,784).

Results

Tools were used for only 15% of eligible patients. Qualitative data indicated that tool adoption was limited by: (1) concurrent initiatives that duplicated the work associated with the tools, and (2) inability to obtain accurate insurance coverage data and end dates. The ITT analyses showed that intervention clinic patients had higher odds of gaining insurance coverage (adjusted odds ratio [aOR] = 1.32, 95% confidence interval [95%CI] 1.14–1.51) and lower odds of losing coverage (aOR = 0.77, 95%CI 0.68–0.88), compared to control clinic patients. Similarly, ETOT findings showed that intervention clinic patients with tool use had higher odds of gaining insurance (aOR = 1.83, 95%CI 1.64–2.04) and lower odds of losing coverage (aOR = 0.70, 95%CI 0.53–0.91), compared to patients without tool use. The ETOT analyses also showed higher rates of receipt of return visits, well-child visits, and several immunizations among patients for whom the tools were used.

Conclusions

This pragmatic trial, the first to evaluate EHR-based insurance assistance tools, suggests that it is feasible to create and implement tools that help clinics provide insurance enrollment support to pediatric patients. While ITT findings were limited by low rates of tool use, ITT and ETOT findings suggest tool use was associated with better odds of gaining and keeping coverage. Further, ETOT findings suggest that use of such tools may positively impact healthcare utilization and quality of pediatric care.

Trial registration

ClinicalTrials.gov, NCT02298361; retrospectively registered on November 5, 2014.


Background

Health insurance coverage is associated with increased access to healthcare and reduced unmet needs [1,2,3,4]. Insured children are more likely to receive recommended preventive care and less likely to experience preventable hospitalizations compared to uninsured children [5,6,7,8,9,10]. In the United States (US), although children’s coverage steadily increased following Children’s Health Insurance Program (CHIP) expansions, [11] important gaps remain [12,13,14,15]. For example, 28% of children insured by Medicaid or CHIP lost coverage within 12 months, [14] and two years after passage of the CHIP Reauthorization Act, 21% of pediatric patients seen in a community health center (CHC) network were uninsured [16].

Concurrent with CHIP expansions, technological developments have yielded new opportunities to enhance how healthcare teams support patients with gaining insurance coverage [17,18,19,20]. To act on these developments, we conducted the Innovative Methods for Parents And Clinics to Create Tools for Kids’ Care (IMPACCT Kids’ Care) Study, [21] in which we built electronic health record (EHR) tools to help primary care CHCs provide health insurance enrollment assistance. We chose this setting because many low-income children eligible for Medicaid or CHIP receive care at CHCs [22]. The tools were designed to help CHC staff monitor patients’ coverage eligibility and track provided insurance support. To our knowledge, no previous efforts have sought to develop, implement, and study such tools in CHCs or any other settings. Thus, this research, while exploratory, is highly novel.

Methods

Overview

We implemented the EHR-based tools in four intervention CHCs and selected four matched control CHCs for comparison. We used a retrospective cohort design to identify our pediatric study population (aged 0–19 at time of visit). We measured tool use; assessed facilitators and barriers to tool use; and assessed tool impact on health insurance coverage, CHC utilization, and receipt of recommended care. We used intent-to-treat (ITT) analyses to measure tool impact at the population level. We used effect-of-treatment-on-the-treated (ETOT) analyses to measure what happened when the tools were used, which allowed us to distinguish the tools’ effect on individuals from their effect on populations. Details of the study design and tool development processes were previously described [21, 23, 24].

Study period

The tools were implemented on 6/1/2014; we collected and analyzed data from 6 months pre-implementation through 18 months post-implementation.
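The assessment window described above can be worked out with simple date arithmetic. The sketch below is illustrative only: it assumes the implementation date 6/1/2014 is in US month/day/year order (June 1, 2014) and that the window is counted in whole calendar months, neither of which the text states explicitly. The helper `add_months` is a hypothetical name introduced here.

```python
from datetime import date


def add_months(d, months):
    """Shift a first-of-month date by a whole number of calendar months."""
    if d.day != 1:
        # Keeping to day 1 avoids invalid dates such as February 30.
        raise ValueError("this sketch only handles first-of-month dates")
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, 1)


# Assumption: 6/1/2014 is read as June 1, 2014.
implementation = date(2014, 6, 1)
study_start = add_months(implementation, -6)   # 6 months pre-implementation
study_end = add_months(implementation, 18)     # 18 months post-implementation

print(study_start.isoformat(), study_end.isoformat())
```

Under these assumptions the window runs from December 2013 to December 2015.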

Setting

Participating CHCs were members of OCHIN, Inc., a 501(c)(3) organization that provides health information technology to CHCs [25, 26]. The majority of these CHCs’ pediatric patients met income eligibility for Medicaid or CHIP, yet many were uninsured at the time of a visit, and thus could benefit from the tools. OCHIN members share an Epic© EHR, making it possible to build and implement the study tools on a single EHR platform, and centrally obtain EHR data.

Sample

Eight CHCs participated in this study: four Oregon CHCs volunteered to be ‘intervention’ sites, and four ‘control’ sites were selected from a pool of 38 non-intervention CHCs in Oregon using a propensity score technique [27] to match sites by patient population demographics (ratio of children to adults and percent Hispanic ethnicity) and date of EHR implementation (number of months of clinic EHR experience). The clinic with the closest propensity score was selected as the matched control for each intervention clinic. In addition to matching, we controlled for differences between intervention and control sites (i.e., residual confounding) through statistical regression adjustment.
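As a rough illustration of the matching step, the sketch below pairs each intervention clinic with the pool clinic whose propensity score is closest. It assumes greedy 1:1 matching without replacement, which the text does not specify. The clinic labels and scores are made up, and the propensity model itself is not shown.

```python
def match_controls(intervention_scores, pool_scores):
    """Greedy 1:1 nearest-neighbor matching on propensity score.

    Both arguments map a clinic label to its propensity score.
    Returns a dict of intervention clinic -> matched control clinic.
    """
    available = dict(pool_scores)
    matches = {}
    for clinic, score in intervention_scores.items():
        # Choose the remaining pool clinic with the closest score.
        best = min(available, key=lambda c: abs(available[c] - score))
        matches[clinic] = best
        del available[best]  # assumption: each control is matched only once
    return matches


# Hypothetical scores for illustration only; not taken from the study.
intervention = {"I1": 0.62, "I2": 0.35, "I3": 0.80, "I4": 0.51}
pool = {"P1": 0.60, "P2": 0.33, "P3": 0.90, "P4": 0.50, "P5": 0.78, "P6": 0.10}

matched = match_controls(intervention, pool)
print(matched)
```

Greedy matching depends on the order in which intervention clinics are processed. With only four intervention sites, an exhaustive search over all assignments would also be feasible.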

Intervention

We developed the EHR tools in OCHIN Epic© via a user-centered design process, described elsewhere [23, 24]. Detailed tool descriptions are provided in Additional file 1. The central tool was a health insurance assistance tracking form in a patient’s medical record; we used data from this tool to determine that patients had been exposed to the intervention. In addition to the tracking form, pop-up alerts were built into the EHR to notify staff when a patient’s insurance was soon to expire or when a patient appeared eligible but was not enrolled in public insurance. These were visible in the EHR at all relevant patient visits. A data roster tool was also available for CHC staff to create lists of patients for whom insurance assistance had been initiated and required follow-up, or who had an upcoming appointment and might need enrollment support. The tools were designed with low-income pediatric patients in mind but were available for use on any patients at the intervention clinics.

EHR and Medicaid datasets

We used patient-level EHR data to assess whether or not the health insurance assistance tracking form was used, and to obtain demographic and visit data. We also used EHR data to assess coverage status at visits. To quantify longitudinal insurance coverage periods (coverage start and end dates), we created individual patient linkages between EHR data and Medicaid/CHIP enrollment data from the state of Oregon, per established methods using Medicaid/CHIP identification numbers [28,29,30]. We used household case numbers in the Medicaid/CHIP enrollment data to measure how long each household was established at a clinic.
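The linkage idea can be sketched as a join on a shared identifier, followed by a check of whether each visit date falls inside an enrollment span. This is a minimal illustration, not the established linkage method cited above. The record layout, the IDs, and the dates are hypothetical.

```python
from datetime import date


def coverage_status(visits, enrollment):
    """Label each EHR visit by linking on a shared Medicaid/CHIP ID.

    visits: list of (medicaid_id, visit_date) tuples from the EHR.
    enrollment: dict mapping medicaid_id -> list of (start, end) date spans.
    Returns a list of (medicaid_id, visit_date, status) tuples.
    """
    results = []
    for medicaid_id, visit_date in visits:
        spans = enrollment.get(medicaid_id)
        if spans is None:
            status = "unlinked"  # no matching ID in the enrollment data
        elif any(start <= visit_date <= end for start, end in spans):
            status = "covered"
        else:
            status = "not covered"
        results.append((medicaid_id, visit_date, status))
    return results


# Made-up records for illustration only.
enrollment = {"A100": [(date(2014, 1, 1), date(2014, 6, 30)),
                       (date(2014, 10, 1), date(2015, 9, 30))]}
visits = [("A100", date(2014, 3, 15)),   # inside the first span
          ("A100", date(2014, 8, 1)),    # in the gap between spans
          ("B200", date(2014, 8, 1))]    # ID absent from enrollment data

for row in coverage_status(visits, enrollment):
    print(row)
```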

Variables

The primary predictor variable for ITT analyses was whether a pediatric patient received care at one of the four intervention clinics versus one of the four control clinics. The primary predictor variable for ETOT analyses was whether or not the tracking tool was used to assist a pediatric patient in the intervention clinics. Other covariates are shown in the tables. Outcome variables were: health insurance coverage status, coverage gain, coverage loss, utilization of care, and receipt of recommended care (assessed using healthcare quality measures from the Children’s Health Insurance Program Reauthorization Act (CHIPRA)) [31].

Analyses

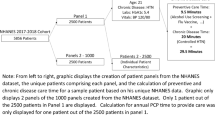

We used mixed methods to measure rates of tool use and to understand facilitators and barriers to tool use [32]. We used ITT and ETOT analyses to assess the impact of the tools on health insurance coverage, CHC utilization, and receipt of recommended healthcare services. In ITT analyses, we compared pediatric patients with ≥1 clinical visit at an intervention clinic (n = 15,024) to those with ≥1 clinical visit at a matched control CHC (n = 12,227); see Fig. 1. In ETOT analyses, among all pediatric patients with ≥1 clinical visit at an intervention clinic, we compared those patients on whom the tracking tool was used (n = 2240) with those on whom the tool was not used (ETOT comparisons, n = 12,784); Fig. 1. To assess outcomes related to coverage and utilization, we conducted several of the analyses in subgroups of patients for whom we had Medicaid data (described below).

Mixed methods to assess tool use

Quantitative

We calculated rate of tool use as the number of pediatric patients with ≥1 clinical visit at an intervention CHC for whom the tracking tool was used (numerator = 2240) over the total number of intervention clinic pediatric patients with ≥1 clinical visit at an intervention CHC (denominator = 15,024). Using chi-square tests, we compared demographic, care utilization, and coverage characteristics of (1) intervention clinic patients versus control clinic patients (ITT groups), and (2) patients at the intervention clinics with tool use versus patients at the intervention clinics without tool use (ETOT groups). We adjusted for statistically significant differences between groups using multivariable analyses (described below).
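The rate defined above is a simple proportion. The short sketch below reproduces it from the reported numerator and denominator and checks that the two ETOT group sizes add up to the intervention clinic total. The function name is introduced here for illustration.

```python
def tool_use_rate(n_tool_use, n_eligible):
    """Proportion of eligible patients for whom the tracking tool was used."""
    if n_eligible <= 0:
        raise ValueError("denominator must be positive")
    return n_tool_use / n_eligible


# Counts reported in the text.
n_with_tool = 2240
n_without_tool = 12784
n_intervention = 15024

# The two ETOT groups partition the intervention clinic population.
assert n_with_tool + n_without_tool == n_intervention

rate = tool_use_rate(n_with_tool, n_intervention)
print(f"{rate:.1%}")  # 14.9%
```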

Qualitative

Prior to tool implementation, we conducted observations and interviews at two intervention and two comparison CHCs, purposively selected to assess baseline insurance assistance processes. Semi-structured interviews were conducted with clinic leaders, clinicians, and staff (N = 26, 5–7 individuals per site) to understand the clinics’ insurance assistance processes and inform tool development [23]. One year after tool implementation, we conducted observations and interviews at the intervention sites to observe insurance assistance processes and tool use. We spent 2–3 days at each clinic, observing processes and creating detailed fieldnotes. Field researchers also conducted semi-structured interviews with intended tool users (e.g., front desk staff, schedulers, eligibility specialists / insurance assistants, clinic administrators; N = 12) to assess perceptions of the tools. These interviewees were purposively selected among staff observed to have tasks most relevant to assisting patients with health insurance, regardless of whether these staff members were using the tools or not using them.

Recorded interviews were professionally transcribed. Transcripts and fieldnotes were de-identified and entered into Atlas.ti (Version 7.0, Atlas.ti Scientific Software Development GmbH, Berlin, Germany) for analysis. We used a grounded theory approach to analyze the data [33]. First, our multi-disciplinary research team met regularly to analyze all data for one clinic, informing how we named and tagged the text, and how we defined emerging themes to create a code book. We repeated this process for each clinic, meeting to analyze and make sense of the data. Then, we compared emerging themes across the clinics, and identified factors that influenced clinic tool use.

ITT and ETOT analyses: Tool impact on coverage, utilization, receipt of recommended care

For both ITT and ETOT analyses, our assessment period included the 6 months prior to tool implementation, which allowed us to incorporate patients who would have been identified as uninsured or about to lose coverage, had the tool been used proactively. To examine changes in coverage status and clinic utilization, we calculated odds ratios comparing ITT and ETOT groups using adjusted logistic generalized estimating equation (GEE) models to account for clustering of patients within clinics, and adjusted for covariates (see footnotes, Tables 2 and 3). Where there were statistically significant demographic and utilization differences between patients in comparison groups (Table 1), we adjusted for these factors in the analyses. Coverage and utilization outcomes were assessed in relevant subsets of patients as follows:

(1) Among patients who could be linked to Medicaid/CHIP data (> 90% of patients), we assessed (a) the odds of gaining coverage after a period of uninsurance, and (b) the odds of losing coverage after having Medicaid/CHIP coverage at any time during the study period. Medicaid/CHIP enrollment data needed to evaluate this outcome were available through 9/30/2015, so these analyses ended at that date (Table 2).

(2) Among patients with an uninsured visit within 6 months of the tool’s implementation, we calculated the odds of returning for a second visit. This analysis utilized visit coverage information from the EHR and was not limited to patients who could be linked to Medicaid/CHIP (Table 3).

(3) Among patients in (2) who did return for a visit, we calculated the (a) odds of being uninsured and (b) the odds of being insured by Medicaid/CHIP at return visit(s) (Table 3).
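The subset definitions in (2) and (3) can be sketched as a per-patient classification over EHR visit records. This is an illustrative sketch only. The coverage labels, the record layout, and the exact window boundaries are assumptions, since the text does not say whether "within 6 months" counts before, after, or around the implementation date. The window is therefore passed in explicitly.

```python
from datetime import date


def classify_patient(visits, window_start, window_end):
    """Classify one patient's return-visit outcomes.

    visits: list of (visit_date, coverage) tuples, where coverage is one of
    "uninsured", "medicaid_chip", or "other" (hypothetical labels).
    Returns None if the patient has no uninsured visit in the window.
    """
    ordered = sorted(visits)
    # Index of the first uninsured visit that falls inside the window.
    index = next(
        (i for i, (d, cov) in enumerate(ordered)
         if cov == "uninsured" and window_start <= d <= window_end),
        None,
    )
    if index is None:
        return None
    returns = ordered[index + 1:]  # all later visits count as return visits
    return {
        "returned": bool(returns),
        "uninsured_at_all_returns": bool(returns)
        and all(cov == "uninsured" for _, cov in returns),
        "medicaid_chip_at_any_return": any(
            cov == "medicaid_chip" for _, cov in returns
        ),
    }


# Assumed window for illustration: the 6 months after June 1, 2014.
start, end = date(2014, 6, 1), date(2014, 11, 30)

# Made-up visit history for one patient.
history = [(date(2014, 7, 10), "uninsured"),
           (date(2014, 9, 2), "uninsured"),
           (date(2015, 1, 20), "medicaid_chip")]
print(classify_patient(history, start, end))
```

Counting these flags within each comparison group would give the raw proportions that the adjusted models then compare.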

We assessed receipt of recommended healthcare services among the subgroup of children recommended to receive each of the services based on age and sex (see Table 4 footnotes).

We used chi-square statistics to test between-group differences in the percentage of eligible children meeting each CHIPRA measure. We then estimated adjusted odds ratios for meeting each measure, comparing intervention versus control clinic patients (ITT) and patients in the intervention clinics with tool use versus those without tool use (ETOT). As before, odds ratios were estimated using adjusted logistic GEE models accounting for clinic clustering and adjusted for covariates (see footnotes, Table 4).
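For readers less familiar with odds ratios, the sketch below computes an unadjusted odds ratio and a Wald 95% confidence interval from a 2×2 table. It is deliberately simpler than the adjusted logistic GEE models used in this study: it ignores clinic clustering and covariates. The counts are hypothetical.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio with a Wald confidence interval.

    a: exposed with outcome      b: exposed without outcome
    c: unexposed with outcome    d: unexposed without outcome
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for this formula")
    odds_ratio = (a * d) / (b * c)
    # Standard error of the log odds ratio (Woolf's method).
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    low = math.exp(math.log(odds_ratio) - z * se_log)
    high = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, low, high


# Hypothetical counts for illustration only; not taken from the study.
or_value, ci_low, ci_high = odds_ratio_ci(a=60, b=40, c=45, d=55)
print(f"OR = {or_value:.2f}, 95% CI {ci_low:.2f} to {ci_high:.2f}")
```

An adjusted odds ratio from a GEE model is interpreted the same way, but its estimate and interval also reflect the covariates and the within-clinic correlation.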

All quantitative analyses were performed in SAS software, v.9.4 (SAS Institute Inc., Cary, NC). Statistical significance was set at α = 0.05. The study was approved by the Oregon Health & Science University Institutional Review Board.

Results

Tool use

The tracking tool was used for approximately 15% of pediatric patients with ≥1 clinical visit at an intervention site (Fig. 1 and Table 1). Tool use changed over time: an initial spike in use was followed by decreasing use over several months. After additional site visits and trainings, tracking tool use increased gradually but steadily throughout follow-up. By the end of follow-up, the tracking tool was being used on about 17% of pediatric patients per month (Fig. 2).

The characteristics of children at intervention clinics differed from those seen in matched control clinics. Intervention clinic patients were older, more commonly Hispanic and Spanish-speaking, and had lower household incomes (p < .001 for all). Within the intervention clinics, patients with tool use differed from those for whom the tool was not used, notably by ethnicity: 95% of patients with tool use were Hispanic, compared to 65% of within-clinic comparison patients; and 88% used Spanish as their primary language, compared to 51% (p < .001 for both, Table 1). Qualitative findings concurred that patients’ ethnicity and language drove tool use in the intervention clinics. Children for whom the tool was used were also older, had lower household incomes, were more likely to be established clinic patients, and had more clinical encounters during the study period compared to within-clinic comparison patients (p < .001).

Although designed for use with established pediatric patients, we observed tool use in two additional groups: (1) children with no clinical visits in the assessment period (n = 969), and (2) adult patients (n = 3207); see Additional file 2. We also qualitatively observed eligibility specialists using the tracking tool in unanticipated ways. For example, family members often came in together for insurance assistance, and eligibility specialists initiated entries into the tracking tool for everyone in the family. Additionally, almost one-third of adults with a Medicaid ID for whom the tracking tool was used did not share a case number with other household members, which indicated that the tracking tool was used for ‘single’ adults, not just family members of children being assisted.

Barriers to tool use

Qualitative data suggest that tool use was affected by two factors. First, several initiatives occurred concurrently with the study period, including Oregon’s 2014 Medicaid expansions; US Health Resources and Services Administration (HRSA) funding for CHCs to extend insurance assistance to all community members; [34] and an alternative payment program requiring staff to document every type of patient interaction, including insurance assistance, using specified reporting methods.

These concurrent initiatives created a frenzy of insurance-oriented change, increased eligibility specialists’ workloads, and distracted staff from using the tracking tool consistently. In addition, our tools could not be easily adapted to the new workflow and reporting requirements involved in the HRSA and alternative payment program initiatives. Consequently, to avoid duplicate data entry, CHC staff often tracked insurance-related interactions in spreadsheets, rather than in the tracking and roster tools. Eligibility specialists’ increased workloads also limited the time they had to use the roster tool to identify patients whose insurance was nearing expiration:

It’s been really hard for me to get into that list. I know I should be working on it … even though I do the application online, it’s fairly time consuming ... So unless we block the schedule … there is not enough time to do the follow-up list. [Clinic 4, Eligibility Specialist]

Second, as the state was flooded with expanded Medicaid insurance applications, some enrollees had their insurance ‘end dates’ electronically extended, so the end dates shown in our tools did not always coincide with the end dates an enrollee received from the state. This discrepancy confused eligibility specialists about when they should help patients reapply:

We think that the insurance is supposed to be expired by January. Then we realize that it’s been extended three more months. When we check [in] three more months, the next day there’s another three more months. So my staff are like, “How can I do any follow up?” […] There is not a real and exactly accurate redetermination date for us to support our patients. [Clinic 4, Eligibility Specialist Supervisor]

We were excited because [the tools were] going to tell us when it’s time to renew. We’re going to be able to research all these patients and call them ... But then it didn’t really work. And then the front would schedule appointments because [the tool] would say it’s time to renew. But then when they would come [in] I would call and [the state] would say, no, it’s not time. So that was kind of a bummer. We thought it would work, and ... help not only the patient but, you know, us too. [Clinic 5, Eligibility Specialist]

As a result, staff who were initially excited about using the tools could not trust the pop-up alerts to accurately notify them when patients needed to re-enroll, so they ignored the pop-ups and did not initiate tracking tool entries for additional patients.

Tool impact on coverage and care utilization

In ITT analyses, intervention clinic patients had higher odds of gaining insurance coverage (adjusted odds ratio [aOR] = 1.32, 95% confidence interval [95%CI] 1.14–1.51) and lower odds of losing coverage (aOR = 0.77, 95%CI 0.68–0.88), compared to control clinic patients (Table 2). Similarly, in ETOT analyses, patients with tool use had higher odds of gaining insurance (aOR = 1.83, 95%CI 1.64–2.04), and lower odds of losing it (aOR = 0.70, 95%CI 0.53–0.91), versus patients in the clinic without tool use; Table 2.

In ITT analyses, uninsured intervention clinic patients had higher odds of being uninsured at all return visits (aOR = 2.00, 95%CI 1.37–2.94) and lower odds of being insured by Medicaid at any return visit (aOR = 0.42, 95% CI 0.29–0.61), compared to control clinic patients (Table 3). These relationships were reversed in ETOT analyses: uninsured patients with tool use had lower odds of being uninsured at all return visits (aOR = 0.49, 95%CI 0.27–0.90), and a trend toward higher odds of being insured by Medicaid (aOR = 2.00, 95% CI 0.93–4.31, ns), compared to patients without tool use (Table 3). The ETOT analysis also found higher odds of a return visit after an uninsured visit among treated patients (aOR = 1.78, 95%CI 1.42–2.23), Table 3.
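One way to read the intervals reported above: an odds ratio is statistically significant at the 5% level when its 95% confidence interval excludes 1. The sketch below applies that rule to the Table 3 estimates quoted in this paragraph. The dictionary labels are shorthand introduced here.

```python
def excludes_null(ci_low, ci_high, null_value=1.0):
    """True when the confidence interval lies entirely on one side of the null."""
    return ci_low > null_value or ci_high < null_value


# (aOR, lower bound, upper bound) as reported in the text.
reported = {
    "ITT: uninsured at all return visits": (2.00, 1.37, 2.94),
    "ITT: Medicaid at any return visit": (0.42, 0.29, 0.61),
    "ETOT: uninsured at all return visits": (0.49, 0.27, 0.90),
    "ETOT: Medicaid at return visit": (2.00, 0.93, 4.31),
    "ETOT: any return visit": (1.78, 1.42, 2.23),
}

for label, (aor, low, high) in reported.items():
    verdict = "significant" if excludes_null(low, high) else "not significant"
    print(f"{label}: aOR = {aor:.2f} ({low:.2f}, {high:.2f}) -> {verdict}")
```

Only the ETOT estimate for Medicaid coverage at return visits has an interval that spans 1, which matches the "ns" label in the text.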

Tool impact on receipt of recommended care

In ITT analyses, intervention clinic patients had lower odds of receipt of several services versus control clinic patients (well-child visits in first 15 months, chlamydia screening, and MMR), but higher rates and odds of immunizations for adolescents (Table 4). In ETOT comparisons, tool use was associated with higher rates of receipt of most assessed services (Table 4). For example: 63% of eligible patients with tool use received recommended well-child visits by 15 months of age, versus 39% of within-clinic comparison patients (p < .001; aOR = 1.54, 95% CI 1.06–2.25); females for whom the tool was used were more likely to complete human papillomavirus (HPV) vaccination by age 13 (54%), versus 36% of within-clinic comparisons (p < .001; aOR = 1.44, 95%CI 1.12–1.84).

Discussion

In this novel study, we partnered with stakeholders in a user-centered design process to build EHR-based insurance assistance tools for CHCs [21, 23, 24]. To our knowledge, this is the first study to build and test EHR-based tools designed to support primary care clinics as they assist patients with insurance enrollment.

In the 18 months post-implementation of our EHR-based tools, tool use rates were low. Our qualitative results suggest two important (and likely connected) factors that explain this outcome. Both were a result of this study occurring during historic healthcare reforms. First, federal initiatives incentivized the CHCs to use an insurance documentation system that was different from our tools, [34] making adoption of our tools less appealing. Second, swamped by the 44% Medicaid enrollment increase that occurred between July 2013 and March 2014, [35] Oregon electronically extended coverage expiration dates for many people already enrolled in public insurance without requiring that re-application paperwork be processed. This automatic extension of coverage compromised our ability to obtain accurate insurance coverage end dates for our tools and led to confusion and mistrust of the tools we developed. Such real-world factors are inherent to pragmatic trials and an important lesson in this nascent endeavor.

The most notable impact of the tools was in improving the odds that a child with a Medicaid ID either gained back coverage or did not lose coverage, in both ITT and ETOT analyses (Table 2). Among the uninsured children in this subsample, having a previous Medicaid ID indicated that a given child was likely eligible to regain coverage and may have just needed assistance from clinic staff using the tool. Among all uninsured children (those with or without prior Medicaid), it is likely that some children did not qualify for Medicaid for various reasons (e.g., citizenship requirement). More children in the intervention group clinics may not have qualified, especially given the higher percentage of Hispanic patients and the lower percentage of children with Medicaid coverage in the intervention clinic group. This may explain why the ITT and ETOT analyses did not concur regarding return uninsured visits among uninsured children (ITT analyses showed higher odds of uninsured return visits; ETOT analyses showed lower odds of uninsured return visits). It is also important to note that the ETOT analyses were performed only using the intervention clinics. Restricting the sample to only intervention clinics allows us to identify what impact the tool use had among those with access to those tools. Our ability to see a consistent population-level impact on receipt of recommended care in ITT analyses may have been hampered by low tool uptake [36]. However, it was promising to observe the consistency in the ETOT analyses with higher rates of every recommended healthcare service among patients with tool use versus those without tool use. This suggests that efforts to increase use of the tools could positively impact children’s receipt of recommended healthcare services in the future.

Limitations

To accommodate stakeholder requests, our intervention was not randomly allocated to the four CHCs we initially recruited – all four received the intervention. Instead, we used propensity score techniques to identify four comparable matched CHCs. Although this approach is not as closely controlled as a more traditional randomized trial, it was chosen to suit this pragmatic, real-world evaluation. This approach was also more ethically appropriate for research in this setting because it enabled us to avoid recruiting CHCs that were very reluctant to be randomized into a control arm. Even using these matching methods, there were still significant differences between intervention and control sites, due to the small number of non-intervention CHCs available for matching. We attempted to address this imbalance through additional statistical regression adjustment. As with any observational study, there may have been unobserved differences between study groups, which in turn may have affected the study results. Because EHRs are not structurally designed to link records for families, we could not assess whether tool use was impacted by the number of individuals assisted in a given family. The length of our follow-up period was 18 months, which may not have been sufficiently long to assess tool adoption and impact, including changes to CHC utilization and recommended care receipt. In addition, it was only technically feasible to quantify use of the tracking tool at the individual level, so we selected use of this tool to indicate that individual patients had been exposed to the intervention. While we evaluated use of the pop-up alert and roster tools in qualitative data collection, we were not able to assess whether use of these other features impacted the outcomes of interest. It is possible that non-tool use patients (our ETOT comparison group) had some action taken from the pop-up or roster tools that was not quantitatively tracked, which would cause our measures to underestimate the tools’ effect.

While many of the ETOT results are promising, we caution against drawing long-term conclusions based on these results in light of the more equivocal ITT findings. For EHR tools such as these to show convincing population-level impacts, they must be broadly adopted. Nonetheless, we did observe significant benefit among patients for whom clinic staff thought the tools would be useful.

Next steps

Despite barriers to tool adoption, our results suggest several future potential benefits of EHR-based insurance assistance tools. CHC staff saw benefit in using the tools, especially for Hispanic, Spanish-speaking patients, and for some individuals beyond the study population (e.g., adults). It was encouraging to observe that the tools were used to assist many adults (n = 3207) as well as children (n = 2240) in the intervention clinics. Many of these adults had a child who was also assisted, and qualitative data concurred that eligibility specialists often aided entire families. This finding led our team to obtain funding to develop and test similar tools for adults [37]. We also learned that designing and implementing EHR-based insurance assistance tools requires close collaboration with payers (e.g., Medicaid/CHIP), an infrastructure that can generate accurate data on insurance end dates, [38] and one that can be easily adapted to a changing insurance landscape.

Conclusions

This study was the first to evaluate the feasibility and impact of developing EHR-based tools to help primary care clinics provide health insurance enrollment support for patients. Our results suggest that EHR-based tools have the potential to increase insurance enrollment, prevent coverage loss, and improve the receipt of recommended care. However, these results are only achieved when the tools are used; tool adoption overall was not high enough to fully assess population-level impacts. Nevertheless, encouraging findings from this novel study provide important lessons to improve such tools and increase their future adoption.

Abbreviations

- aOR: adjusted odds ratio
- CHC: community health center
- CHIP: Children’s Health Insurance Program
- CHIPRA: Children’s Health Insurance Program Reauthorization Act
- CI: confidence interval
- EHR: electronic health record
- GEE: generalized estimating equation
- HPV: human papillomavirus
- HRSA: Health Resources and Services Administration
- ID: identification number
- ITT: intent-to-treat
- US: United States

References

Hoffman C, Paradise J. Health insurance and access to health care in the United States. Ann N Y Acad Sci. 2008;1136:149–60.

Courtemanche CJ, Zapata D. Does universal coverage improve health? The Massachusetts experience. J Policy Anal Manag. 2014;33:36–69.

Newacheck PW, Stoddard JJ, Hughes DC, Pearl M. Health insurance and access to primary care for children. N Engl J Med. 1998;338:513–9.

Schoen C, DesRoches C. Uninsured and unstably insured: the importance of continuous insurance coverage. Health Serv Res. 2000;35:187–206.

Olson LM, Tang SF, Newacheck PW. Children in the United States with discontinuous health insurance coverage. N Engl J Med. 2005;353:382–91.

Cassedy A, Fairbrother G, Newacheck PW. The impact of insurance instability on children's access, utilization, and satisfaction with health care. Ambul Pediatr. 2008;8:321–8.

Cummings JR, Lavarreda SA, Rice T, Brown ER. The effects of varying periods of uninsurance on children's access to health care. Pediatrics. 2009;123:e411–8.

DeVoe JE, Ray M, Krois L, Carlson MJ. Uncertain health insurance coverage and unmet children's health care needs. Fam Med. 2010;42:121–32.

Federico SG, Steiner JF, Beaty B, Crane L, Kempe A. Disruptions in insurance coverage: patterns and relationship to health care access, unmet need, and utilization before enrollment in the state Children's health insurance program. Pediatrics. 2007;120:e1009–16.

Sudano JJ, Baker DW. Intermittent lack of health insurance coverage and use of preventive services. Am J Public Health. 2003;93:130–7.

The Henry J. Kaiser Family Foundation: Children’s Health Coverage: Medicaid, CHIP and the ACA. http://kff.org/health-reform/issue-brief/childrens-health-coverage-medicaid-chip-and-the-aca/ (2014). Accessed May 4, 2016.

Aiken KD, Freed G, Davis M. When insurance status is not static: insurance transitions of low-income children and implications for health and health care. Acad Pediatr. 2004;4:237–43.

Fairbrother GL, Emerson HP, Partridge L. How stable is medicaid coverage for children? Health Aff. 2007;26:520–8.

Sommers BD. Why millions of children eligible for Medicaid and SCHIP are uninsured: poor retention versus poor take-up. Health Aff. 2007;26:w560–7.

Short PF, Graefe DR, Swartz K, Uberoi N. New estimates of gaps and transitions in health insurance. Med Care Res Rev. 2012;69:721–36.

Hatch B, Angier H, Marino M, Heintzman J, Nelson C, Gold R, Vakarcs T, DeVoe J. Using electronic health records to conduct children's health insurance surveillance. Pediatrics. 2013;132:e1584–91.

Blumenthal D. Performance improvement in health care--seizing the moment. N Engl J Med. 2012;366:1953–5.

Bates DW, Bitton A. The future of health information technology in the patient-centered medical home. Health Aff (Millwood). 2010;29:614–21.

Blumenthal D. Implementation of the federal health information technology initiative. N Engl J Med. 2011;365:2426–31.

Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30:464–71.

Angier H, Marino M, Sumic A, O'Malley J, Likumahuwa-Ackman S, Hoopes M, Nelson C, Gold R, Cohen D, Dickerson K, et al. Innovative methods for parents and clinics to create tools for kids' care (IMPACCT Kids' care) study protocol. Contemp Clin Trials. 2015;4:159–63.

The Henry J. Kaiser Family Foundation: A Profile of Community Health Center Patients: Implications for Policy. http://kff.org/medicaid/issue-brief/a-profile-of-community-health-center-patients-implications-for-policy/ (2013). Accessed March 30, 2016.

DeVoe J, Angier H, Likumahuwa S, Hall J, Nelson C, Dickerson K, Keller S, Burdick T, Cohen D. Use of qualitative methods and user-centered design to develop customized health information technology tools within federally qualified health centers to keep children insured. J Ambul Care Manag. 2014;37:148–54.

Likumahuwa-Ackman S, Angier H, Sumic A, et al. IMPACCT kids’ care: a real-world example of stakeholder involvement in comparative effectiveness research. J Comp Eff Res. 2015;4:351–7.

DeVoe JE, Gold R, Spofford M, Chauvie S, Muench J, Turner A, Likumahuwa S, Nelson C. Developing a network of community health centers with a common electronic health record: description of the safety net west practice-based research network (SNW-PBRN). J Am Board Fam Med. 2011;24:597–604.

DeVoe JE, Sears A. The OCHIN community information network: bringing together community health centers, information technology, and data to support a patient-centered medical village. J Am Board Fam Med. 2013;26:271–8.

Rosenbaum PR, Rubin DB. Constructing a control group using multivariate matched sampling methods that incorporate the propensity score. Am Stat. 1985;39:33–8.

Angier H, Gold R, Gallia C, Casciato A, Tillotson CJ, Marino M, Mangione-Smith R, DeVoe JE. Variation in outcomes of quality measurement by data source. Pediatrics. 2014;133:e1676–82.

Gold R, Angier H, Mangione-Smith R, Gallia C, McIntire PJ, Cowburn S, Tillotson C, DeVoe JE. Feasibility of evaluating the CHIPRA care quality measures in electronic health record data. Pediatrics. 2012;130:139–49.

Angier H, Gold R, Crawford C, O'Malley JP, Tillotson CJ, Marino M, DeVoe JE. Linkage methods for connecting children with parents in electronic health record and state public health insurance data. Matern Child Health J. 2014;18:2025–33.

Centers for Medicare & Medicaid Services. Core Set of Children’s Health Care Quality Measures for Medicaid and CHIP (Child Core Set): Technical Specifications and Resource Manual for Federal Fiscal Year 2015 Reporting.

Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs-principles and practices. Health Serv Res. 2013;48:2134–56.

Glaser BG. Conceptualization: on theory and theorizing using grounded theory. Int J Qual Methods. 2002;1:23–38.

HRSA Health Center Program. Health Center Outreach and Enrollment Technical Assistance.

The Henry J. Kaiser Family Foundation: How is the ACA Impacting Medicaid Enrollment? http://kff.org/medicaid/issue-brief/how-is-the-aca-impacting-medicaid-enrollment/ (2014). Accessed May 4, 2016.

Gupta SK. Intention-to-treat concept: a review. Perspect Clin Res. 2011;2:109–12.

DeVoe JE, Huguet N, Likumahuwa-Ackman S, Angier H, Nelson C, Marino M, Cohen D, Sumic A, Hoopes M, Harding RL, et al. Testing health information technology tools to facilitate health insurance support: a protocol for an effectiveness-implementation hybrid randomized trial. Implement Sci. 2015;10:123.

DeVoe JE, Tillotson CJ, Lesko SE, Wallace LS, Angier H. The case for synergy between a usual source of care and health insurance coverage. J Gen Intern Med. 2011;26:1059–66.

Acknowledgements

The authors gratefully acknowledge the contributions from the participating CHCs. In addition, we thank our patient investigator, Kay Dickerson, BA, and clinical investigators, Lynn Baker, BSEE, and Andrew Suchocki, MD, MPH, as well as our tool development team, Duane Ellington and Marla Dearing.

Funding

All phases of this study were supported by the Patient-Centered Outcomes Research Institute (PCORI) Award (#308; Jennifer DeVoe, PI). All statements in this report, including its findings and conclusions, are solely those of the authors and do not necessarily represent the views of PCORI, its Board of Governors, or Methodology Committee.

Availability of data and materials

The datasets generated and analyzed during the current study are not publicly available because they contain information that could compromise research participant confidentiality and privacy, but are available from the corresponding author on reasonable request.

Author information

Contributions

JD conceptualized and designed the study and participated in development of the insurance support tools and interpretation of data. MH conducted all analyses, participated in interpretation of data, and assisted with drafting the initial manuscript. CN participated in conceptualization of the study, design of the insurance support tools and interpretation of data, and supervised implementation and conduct of the study. DC participated in conceptualization and design of the study, development of the insurance support tools, and interpretation of data. AS coordinated implementation of study tools and conducted data collection activities. JH led qualitative data collection activities, conducted qualitative data analysis, and participated in the interpretation of the data. HA assisted with the conception of this trial, contributed to initial preparation of the manuscript, and assisted with interpretation of data. MM oversaw statistical analyses, provided substantive results interpretation, and contributed to initial preparation of the manuscript. JO assisted with analysis planning and contributed substantive results interpretation. RG participated in conceptualization and design of the study, design and development of the insurance support tools, and interpretation of data; she also drafted the initial manuscript. All authors critically reviewed and revised the manuscript and approved the final manuscript as submitted.

Ethics declarations

Ethics approval and consent to participate

The study was reviewed and approved by the Oregon Health & Science University Institutional Review Board (IRB00000471). The IRB approved a waiver of documentation of consent for study interviews with clinic leaders, clinicians, and staff, as the study was minimal risk and the research involved no procedures for which written consent is normally required outside the research context. An informed consent discussion was completed and verbal consent was recorded. The IRB granted a waiver of consent for the EHR and Medicaid data used in the quantitative analyses, as the study was minimal risk, waiving consent did not adversely affect the rights and welfare of the subjects, and the research could not be practicably carried out without the waiver.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

IMPACCT electronic health record (EHR) tool descriptions. Illustrations of EHR study tools designed for use by community health center staff assisting patients with insurance enrollment. Data from fictitious patients were used when creating the illustrations. (DOCX 10958 kb)

Additional file 2:

Demographic and encounter characteristics of individuals with tracking tool use outside of the study population. Table presenting sex, age, race-ethnicity, primary language, and percent of FPL for two groups outside the study population for whom the Insurance Tracking Form was used (children with no clinical visits in the assessment period, n = 969; and adult patients, n = 3207). (DOCX 16 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

DeVoe, J.E., Hoopes, M., Nelson, C.A. et al. Electronic health record tools to assist with children’s insurance coverage: a mixed methods study. BMC Health Serv Res 18, 354 (2018). https://doi.org/10.1186/s12913-018-3159-x
