Abstract

Background

Biomedical Relation Extraction (RE) is essential for uncovering complex relationships between biomedical entities within text. However, training RE classifiers is challenging in low-resource biomedical applications with few labeled examples.

Methods

We explore the potential of Shortest Dependency Paths (SDPs) to aid biomedical RE, especially in situations with limited labeled examples. In this study, we suggest various approaches to employ SDPs when creating word and sentence representations under supervised, semi-supervised, and in-context-learning settings.

Results

Through experiments on three benchmark biomedical text datasets, we find that incorporating SDP-based representations enhances the performance of RE classifiers. The improvement is especially notable when working with small amounts of labeled data.

Conclusion

SDPs offer valuable insights into the complex sentence structure found in many biomedical text passages. Our study introduces several straightforward techniques that, as demonstrated experimentally, effectively enhance the accuracy of RE classifiers.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Introduction

Biomedical Relation Extraction (RE) plays a pivotal role in structuring unstructured medical texts, enabling the construction of knowledge graphs [1, 2] and the extraction of complex relationships between biomedical entities such as drugs, proteins, and genes [3,4,5]. Effective RE aids in the discovery of new drug interactions and biological pathways, critical for advancing medical research and clinical decision-making.

Despite advancements through supervised RE methods [6,7,8], their efficacy is often limited by the scarcity of labeled biomedical data. Several approaches, such as weak supervision [9] and semi-supervised learning (SSL) [10], have been developed to address the challenges of limited training data through leveraging unlabeled data. More recently, in-context learning techniques using Large Language Models (LLMs) have emerged, requiring significantly less labeled data [11, 12].

Weak supervision, for instance, utilizes heuristics, rules, or distant supervision to generate noisy labels for unlabeled data, a technique pioneered by [9]. Although this method enhances the volume of trainable data, it also introduces label noise. Other strategies, such as those developed by [13, 14], employ linguistic patterns like SDP (Shortest Dependency Path) tokens or frequent phrases to formulate labeling rules, reducing the need for manual annotation yet increasing the hidden costs of rule derivation.

SSL utilizes both a limited pool of labeled data and a larger volume of unlabeled data to enhance learning models [10]. Common SSL strategies such as self-training rely heavily on predictors that impute labels on unlabeled examples, which are then used to retrain the models [15]. Variants of self-training include dual training, where a secondary predictor retrieves relevant unlabeled instances [16], and Gradient Imitation Reinforcement Learning (GIRL), which optimizes the correlation between gradients of labeled and unlabeled data to enhance performance [17].

The challenge in self-training is limited labeled data leads to low-quality label imputations, as the reliance on the predictor impedes learning a powerful model. Likewise, graph-based SSL methods, such as label propagation, utilize lexical and syntactic features to propagate labels among closely situated data points [18, 19]. However, determining the correct distance metric and neighborhood thresholds necessary for identifying relevant relational patterns within data remains an open research question. This issue also affects in-context learning, where selecting relevant examples from a constrained dataset for task demonstrations also requires precise distance metrics.

This study aims to address two pivotal questions: What is an effective representation for defining a suitable distance metric in low-resource settings, and how can we reduce the model’s dependence on imputed labels to boost RE accuracy in SSL scenario? To tackle the first question, we propose employing the SDP between entities to process the encoder output and compute the SDP representation for RE. SDP, derived from dependency parse trees, identifies the minimal syntactic dependencies essential for connecting entities, providing valuable hints about their relationships [20,21,22] (see Fig. 1).

For the second question, we advocate using a nearest neighbor approach instead of relying solely on model-based imputations. This method carefully propagates labels to nearby data points based on a specifically defined SDP-based distance metric, thereby allowing for the integration of soft labeling techniques that account for the uncertainty and noise in label imputation.

By addressing these research questions and conducting extensive experiments across three biomedical RE benchmarks, we aim to develop a versatile strategy compatible with any standard RE architecture and SSL algorithm. This approach is designed to deliver accurate results in various low-resource environments, encompassing supervised, SSL, and in-context learning scenarios. In summary, the contributions of this paper are:

-

We propose utilizing SDP to calculate SDP representation of entity pairs for RE, improving accuracy in various low-resource settings such as supervised learning and in-context learning.

-

We use SDP representation to calculate SDP-based distance metric between RE examples. We use this distance metric to support two types of SSL algorithms. We experimentally evaluate on biomedical text the usefulness on the distance metric.

-

Our extensive experiments on three key biomedical RE benchmarks confirm the efficiency of our proposed method in low-resource settings.

Background and task formulation

In this section, we introduce the relevant concepts and formally define the RE task.

Shortest dependency path (SDP). Let \(\mathcal {T}\) be a dependency parse tree corresponding to sequence s representing the syntactical relationship between words in a sentence. In a dependency parse tree, words are represented as nodes, and the relationships between words are represented as directed edges. Given a pair of entities \((e_s, e_o)\), SDP is defined as the minimum set of tokens that can be reached from \(e_s\) to \(e_o\) through the dependency tree \(\mathcal {T}\). Figure 1 shows an example of a parse tree, where the extracted SDP tokens between a pair of entities (Chemical and Gene) are highlighted in red. We need to highlight that we don’t consider the relation dependency between words in this study.

Relation extraction (RE). Given sentence \(s=(w_1,w_2,...,w_n)\), a subject entity \(e_{s}\), and an object entity \(e_{o}\), the RE task is to predict the relation label \(r \in \mathcal {R}\) of triple \(x=(s,e_s,e_o)\), where \(\mathcal {R}\) is a union of a predefined set of relation types and None, referring to no relation or other type of relation.

Semi-supervised RE. This approach utilizes a small set of labeled examples \(\mathcal {D}_L=\{(x_i,r_i)\}_{i=1}^{N_l}\) and a larger set of unlabeled examples \(\mathcal {D}_U=\{(x_j)\}_{j=1}^{N_u}\) to train a classifier model \(f_{\theta }\). The model aims to fit the labeled data while also leveraging the unlabeled data to improve overall accuracy.

In the following subsections, we refer to \(x=(s,e_s,e_o)\) as an RE example and r as the RE label. In addition, we assume the entity mentions can be identified using external tools and the set of relation types \(\mathcal {R}\) is defined.

Methodology

SDP representation

In this section, we propose the SDP representation for RE. RE is defined as a text classification problem. As an encoder, we utilize the BERT neural network architecture [23]. BERT takes a sentence of tokens as input and produces embedding vectors for the entire sentence and each individual token, denoted as \(H:[h_{cls},h_1,...,h_n] = \text {BERT}(w_1,...,w_n)\), where \(w_i\) represents the i-th token in the sentence, \(h_i\) is its embedding, and \(h_{cls}\) is the sentence embedding.

This sequence of vector embeddings can be used in multiple ways as an input to the classification head. As illustrated in Fig. 2, we first present several baseline approaches for using a sequence of embedding vectors. Then, we propose how to calculate the SDP embedding.

CLS: BERT CLS embedding is used as an input to the RE decoder. This representation has been commonly used for many downstream text classification tasks, including RE [6, 7]. We denote the dimension of this token as L, which corresponds to the length of \(h_{cls}\).

ENT: Followed by the best setup in [24], we concatenate embeddings of the two entity tokens. If an entity consists of multiple tokens we only consider the first token.

where \(\oplus\) denotes the concatenation. The dimension of this representation as 2L which corresponds to \(h_{s}\) and \(h_{o}\).

ATLOP: Based on [8], we combine the entity embeddings using a vector called the local context. This vector contains pertinent information related to both entities. Let’s consider \(A_s\) and \(A_o\) as the self-attention matrices for entities \(e_s\) and \(e_o\) from the last layer in BERT, where \(A \in R^{H\times l \times l}\). \(A_{ijk}\) represents attention from token j to token k in the \(i_{th}\) attention head, while \(A_{i}^E \in R^{H \times l}\) denotes attention from the \(i_{th}\) entity to all tokens. We locate the local context that is important to both \(e_s\) and \(e_o\) by multiplying their entity-level attentions, and obtain the localized context embedding c(s, o) by:

Where H is the contextual embedding derived from BERT. To construct the final embedding for an instance, we concatenate the local context embedding with the embeddings of the entity pair:

The resulting representation has length \(3 \times L\).

SDPrep: Our main contribution is the use of SDP to enrich the embeddings by focusing on syntactic paths that are most indicative of relations. Biomedical sentences use complex terminology can be quite long, but they follow a relatively structured grammar. We hypothesize that the SDP between pairs of entities in biomedical documents is very likey to contain key information in revealing the relation type. To this end, we empploy SDP to direct our attention mechanism within BERT encoder. By averaging the embeddings of the tokens that constitute the SDP and concatenating these with the embeddings of the starting and ending tokens of the entities involved, we form a meaningful representation which can be formulated as follow:

where |SDP| denotes the number of SDP tokens. The dimension of SDP representation is \(3 \times L\), which blends syntactic precision with semantic richness.

In the RE model, the decoder is a single classification layer \(W \in R^{K \times L^*}\) where \(L^*\) is the dimension of the input representation (\(L^*=3L\) for SDP representation) and K is the number of relation types. Importantly, this decoder can be replaced with any off-the-shelf RE architecture and is placed on top of the processed encoder representation. In addition, the SDP representation can be integrated separately to define a distance metric such as in in-context learning or retrieval scenarios, enhancing the model’s applicability to a broader range of tasks that require a nuanced understanding of entity relationships. The classification of the RE task given a representation is performed by:

where \(V_x\) is the output vector from the last layer of the encoder corresponding to the SDP-enhanced representation for input x, and W represents the weight matrix of the classification layer. The loss for training is computed as the cross-entropy between p(r|x) and the true relation labels.

SDP in semi-supervised RE

This section explores the integration of the Shortest Dependency Path (SDP) with nearest neighbor-based label propagation in an extreme low-resource SSL setting. Our approach is designed to effectively utilize a minimal set of labeled data points, thereby eliminating the need for a large labeled for training and validation set.

Our primary goal is to leverage the SDP representation in conjunction with nearest neighbor techniques to compute distances between RE examples. This enables precise label propagation and reduces reliance on predictors for generating pseudo-labeled data.

We developed an SSL approach that combines graph-based SSL and self-training. Similar to graph-based SSL, our algorithm propagates labels to closely neighboring unlabeled data Unlike traditional graph-based methods, it meticulously restricts label propagation to only the nearest neighbors rather all than unlabeled data [25]. This constraint is essential in low-resource settings, as it minimizes the noise introduced by including broader sets of unlabeled data and preserves the influence of the scarce labeled data available in the training process. From the self-training perspective, while our approach utilizes the predictor to extract representations, it does not rely on it to generate pseudo-labels. This is because it is challenging to develope a reliable and unbiased predictor with limited labeled data. Instead, we utilize these representations to compute soft labels, which are determined based on the proximity of unlabeled RE examples to their labeled counterparts. This technique indirectly employs the predictor, enhancing the label quality without the direct use of potentially biased pseudo-labels. Prior research shows that soft labels result in a more robust classifier [26] by informing the training process about the quality of imputed labels. To compute soft labels for unlabeled examples, we follow the steps below:

-

Find the nearest neighbor of the unlabeled example among the labeled examples.

-

Calculate the cosine similarity(d) between the unlabeled example and its top-k nearest neighbors (labeled data).

-

Aggregate the cosine similarities for each class type

-

Compute the soft labels for each class type by normalizing the aggregated similarities using softmax.

To optimize the RE model on soft labels, we use Noise Aware Cross Entropy loss (Eq. 7), which is computed for each class separately, and then the losses are summed together,

where N is the total number of examples, and \(y_{i,c}\) and \(\hat{y}_{i,c}\) represent the ground truth and predicted soft label for example i and class c. The algorithm is executed iteratively, with each cycle incorporating a limited number of additional soft labels. We specify a fractional amount of unlabeled data as validation set and monitor the predictor’s fluctuations to determine convergence. Convergence is achieved when the prediction variation on a validation set is less than 5% between iterations, or when the maximum number of iterations is reached. It is important to note that our algorithm does not rely on ground truth validation data, using instead the stability of predictions as a stopping criterion.

This semi-supervised approach, centered on the strategic use of SDP and soft labeling along with nearest neighbor-based propagation, is designed to enhance the efficiency of RE models in SSL settings constrained by extreme limited labeled data.

Experiments

Dataset

We evaluate the effectiveness of our method on three public biomedical relation extraction datasets retrieved from PubMed database. The statistics of these datasets are shown in Table 1. 1) ChemProt [27] consists of 1,820 PubMed abstracts with chemical-protein interactions annotated by domain experts and was used in the BioCreative VI text mining chemical-protein interactions shared task. 2) DDI [28] contains MedLine abstracts on drug-drug interactions as well as documents describing drug-drug interactions from the DrugBank database. 3) PPI [29] utilizes AIMed corpus to automatically extract interaction relations of protein-protein pairs affected by genetic mutations.

Compared methods

To perform experiments, we first compared our SDP-based finetuning strategy with several supervised RE baselines. Then, we adopted the best-performing baseline as the RE classifier model to explore the impact of SDP in SSL baselines and in-context-learning. In all the experiments, we applied SciBERT [30] as the encoder. We performed all the experiments under a very limited budget for labeled data and abundant unlabeled data. We denote \(\text {SUP-RE}_{sdp}\) and \(\text {SSL-RE}_{sdp}\) as supervised and semi-supervised variants of SDP in the remaining subsections.

Supervised baseline methods: The goal is to compare the performance of different RE architectures (as discussed in “SDP representation” section) in a supervised setting and show that fine-tuning using \(\text {SUP-RE}_{sdp}\) achieves a better performance compared to the existing approaches. These approaches are CLS, ENT, ATLOP. This experiment utilizes limited labeled data to explore the best-performing RE model (in our case is 500).

Semi-supervised baseline methods: The goal of this experiment is to compare the superior performance of \(\text {SSL-RE}_{sdp}\) with predictor-based SSL. To ensure fair comparison with \(\text {SSL-RE}_{sdp}\), we used the best-supervised baseline from Table 2, which was \(\text {SUP-RE}_{sdp}\), as RE model. We applied the following SSL methods on \(\mathcal {D}_{L} \cup \mathcal {D}_{U}\):

(1) Label Propagation [25], which is a graph-based algorithm that iteratively updates the label probability in \(\mathcal {D}_{U}\) by matrix multiplication (TR, where T is a \(n \times n\) weighted adjacency matrix (pairwise relations between labeled and unlabeled data) and R is \(n \times C\) class probability matrix). (2) Self-Training [15], which iteratively expands \(\mathcal {D}_{L}\) by using the most confident (above \(\tau\)) predictor’s prediction among \(\mathcal {D}_{U}\). (3) DualRE [16], which is a dual training algorithm that utilizes a learning-to-rank model as a dual module to retrieve the relevant instances from \(\mathcal {D}_{U}\) for a given relation. (4) RE-Ensemble, which replaces the dual module in DualRE [16] with the same predictor in the primal module, with a different random initialization. RE-Ensemble imputes the labels based on the agreement of the two modules.

We also provide SUP-REsdp as a supervised baseline, which can also serve as a few-shot baseline since it is only trained on limited labeled data without access to unlabeled data. In addition, we report SSL-REsdp-1-Iter, which is the same as \(\text {SSL-RE}_{sdp}\), but only uses one iteration to perform imputation.

Experimental setting

Implementation: We implemented all the baselines using Pytorch. For DualRE and RE-Ensemble [16], we replaced the Position-aware Recurrent Neural Network that was used originally in [31] with \(\text {SUP-RE}_{sdp}\). The source code for these baselines can be found hereFootnote 1. In addition, we used the code provided by [32] to apply label propagation algorithm.

Training details: We adopted SciBERT as the encoder for all the experiments and update all the parameters. For supervised finetuning, we add one linear layer followed by softmax to perform classification. We use the following set of hyper-parameters as suggested in [23]:

-

Transformer Architecture: 12 Layers, 768 hidden dimension, 6 heads

-

Learning rate: 3e-5

-

Weight initialization: SciBERT-base

-

Batch size: 32

-

Optimizer: adam

-

Training epochs: 5

-

Maximum sequence length: 200

We used 1 GPU, Tesla V100-SXM2, for training. We applied SciSpacy [33] dependency parser to our corpus to retrieve the SDP tokens for an entity pair.

For SSL experiments, we kept the same hyper-parameters. We impute labels to the top-5 unlabeled data. A similar strategy is applied to retrieve labeled examples for each unlabeled data to compute soft-labels.

In Self-Training, since we use the RE model to provide predictions on unlabeled data, we set the threshold for the most likely class to be above 0.90. However, since the majority of the predictions were overconfident based on the validation results, resulting in imputing noisy labels, we only select the top 100 in the augmented set. In Label Propagation implementation based on [32], we chose KNN as kernel function, and set the K to 5, which specifies the number of closest labeled instances to include in the label propagation process for each unlabeled instance. For DualRE and RE-Ensemble, we followed the default hyperparameters mentioned in [16]. We only leveraged 50% of \(\mathcal {D}_{U}\), and used the default confidence thresholds \(\alpha =0.5\) and \(\beta =2\) predictor and retrieval modules, respectively. We applied the same convergence criteria as in \(\text {SSL-RE}_{\text {sdp}}\) for self-training and dual training.

Evaluation metric: Following the previous work in RE [14, 16], we report micro-F1 as the most important evaluation metric. It provides an evaluation of the model’s ability to simultaneously capture precision and recall across all classes. We ignored correct predictions of None in micro score calculation.

Results

We conducted each experiment over three different independent sets of labeled data and reported the mean performance.

Comparison with supervised baselines

Table 2 demonstrates the performance of different supervised RE architectures under 500 training budget. \(\text {SUP-RE}_{sdp}\) approach achieves higher accuracy compared to the CLS, ENT, and ATLOP architectures. This can be attributed to the explicit guidance provided by SDP, which directs the predictor to focus on tokens relevant to the target label in biomedical settings where limited labeled data exists.

Among the approaches considered, the CLS representation exhibits the lowest performance. This could be due to the fact that it is sentence-level representation, having less relevant information for entities.

When compared to ATLOP, \(\text {SUP-RE}_{sdp}\) appears to have slightly better F1 score across all datasets. This indicates that the local context pooling mechanism in ATLOP does not capture dependencies as accurately as \(\text {SUP-RE}_{sdp}\). Furthermore, \(\text {SUP-RE}_{sdp}\) slightly outperforms ENT-based fine-tuning on the DDI and ChemProt datasets, while delivering comparable performance on PPI.

To statistically validate the performance differences observed, a Repeated Measures ANOVA was conducted for each dataset. This analysis confirmed the significance of the observed variations in performance, with the p-value for DDI at \(p=0.0030\), for ChemProt at \(p=0.0028\), and for PPI at \(p=0.0025\). The consistency of these statistically significant results supports the superior efficacy of the \(\text {SUP-RE}_{sdp}\) approach across all examined datasets, reaffirming its selection for further analysis.

Considering the slightly better performance of \(\text {SUP-RE}_{sdp}\), as shown in Table 2, and the statistical confirmation of its superiority through ANOVA testing, we have selected it as the RE model for the subsequent subsections. These findings emphasize the importance of methodological selection and highlight the benefit of leveraging SDP-guided approaches in low-resource settings for RE tasks.

Comparison with non-encoder baselines

This experiment evaluates our supervised relation extraction (RE) method, which integrates Shortest Dependency Paths (SDP) and BERT-based representations, against traditional non-encoder baselines utilizing SDP or dependency trees as graph kernels for relation extraction. The fundamental principle of these kernel methods is to assess the similarity between two sentences by examining how closely their structural patterns align. These kernels operate in conjunction with Support Vector Machines (SVM) to classify sentences. Our analysis focuses on the Protein-Protein Interaction (PPI) dataset due to the availability of extensive kernel method benchmarks. We adopted the experimental setup from [34] to ensure a consistent comparison with the kernel methods listed in Table 2 of their study. All experiments were conducted using 10-fold cross-validation on the full PPI dataset, corresponding to the AIMed results in Table 2 of [34]. Following their recommendations, we implemented entity blinding to prevent the influence of named entity recognition problems and to highlight entity locations to the classifier. Our results are compared with a range of kernel methods as detailed in Table 3:

Edit distance kernel (edit) [39]: This kernel calculates the similarity by measuring the edit distance between the shortest paths connecting protein names within a dependency tree. The similarity is determined by the minimum number of edit operations−deletions, insertions, or substitutions required to make one path identical to the other, normalized by the length of the longer path.

Cosine similarity kernel (cosine) [39]: This method computes the cosine similarity between vectors representing the shortest paths in a dependency parse tree between pairs of entities. It quantifies the number of common terms along these paths, adjusted for path length.

All-paths graph kernel (APG) [40]: APG considers all possible path lengths within the dependency parse and surface word sequence, assigning greater weight to paths closer to the shortest path between entities, thereby reflecting dependency proximity.

k-band shortest path spectrum kernel (kBSPS) [41]: This kernel extends the analysis beyond the shortest dependency path to include nodes within a specified k-band distance, enriching the contextual data for relationship extraction.

Other kernels: We further compare our method against kernels that utilize syntax tree representations of sentences, such as the Subtree kernel (ST) [35], Subset tree kernel (SST) [36], Partial tree kernel (PT) [37], and Spectrum tree kernel (SpT) [38].

Table 3 showcases a comparative analysis between various non-encoder-based kernel methods and our SDP-based approach for relation extraction. Notably, our method, \(\text {SUP-RE}_{sdp}\), significantly outperforms the other models in precision (P), recall (R), and F1 score, achieving 81.21% precision, 78.0% recall, and an F1 score of 79.4%. This demonstrates a marked improvement over traditional non-encoder methods like the APG kernel, which has the next highest F1 score of 54.7% but with a substantially lower recall. The kBSPS, while competitive to APG, still trails our method with an F1 score of 44.6%. The substantial lead in performance metrics highlights the effectiveness of integrating SDPs with BERT-based representations, providing evidence that our LLM-based representation using SPD captures complex semantic relationships more effectively than conventional kernel methods.

Comparison with semi-supervised baselines

Table 4 shows the result of our approach compared to SSL baselines and few-shot supervised baseline (\(\text {SUP-RE}_{sdp}\)). According to the results, one can observe that \(\text {SSL-RE}_{sdp}\) outperforms all of the baselines across all datasets, which demonstrates the effectiveness of our framework versus SSL baselines.

\(\text {SSL-RE}_{sdp}\) achieved consistent gain over Label Propagation, Self-Training, DualRE, RE Ensemble on all datasets and with different labeling budgets, except in PPI dataset trained on 500 budget where DualRE performed the best.

One can observe Self-Training and DualRE do not have stable performance due to reliance on the predictor to provide weak labels. For example, Self-Training outperforms DualRE in PPI dataset on [50, 100, 200] budgets, while underperforming DualRE on DDI and ChemProt occasionally. This provides evidence that predictor-based SSL models are sensitive to the performance of the RE model.

In addition, Label Propagation performed weaker than baselines which shows that its low quality of imputation damages the model’s performance.

It could be concluded that \(\text {SSL-RE}_{sdp}\) benefits from iterative augmentation, after comparing to SSL-REsdp(1-ter), which only uses one pass of label imputation. In addition, it improves the performance over the supervised baseline by a significant margin in all the experiments.

Performance on different datasets

The marginal gain of \(\text {SSL-RE}_{sdp}\) on PPI is smaller than on ChemProt and DDI in Table 4. This is because the size of PPI is \(4.2 \times\) smaller than DDI and ChemProt. Therefore, the amount of unlabeled data may not be sufficient to identify the most similar neighbors. This can be observed on other baselines since they underperformed the supervised baseline on this dataset, except for DualRE trained on 500 budget.

Performance as a fraction of labeled data size

Based on the results in Table 4, \(\text {SSL-RE}_{sdp}\) is the most advantageous when the labeled dataset is extremely small (around 100 - 500), which is common in Biomedical domain. In DDI, \(\text {SSL-RE}_{sdp}\) can reduce the need for labeled data by up to \(~5\times\), SUP-REsdp achieves 0.46 F1 when trained on \(\mathcal {D}_L = 500\), while SSL-REsdp[100] boosts SUP-REsdp[500] performance by \(4\%\) when using only \(\mathcal {D}_L = 100\).

Similar outcome can be observed in ChemProt dataset. SSL-REsdp[100] is \(~2\times\) more accurate than SUP-REsdp[200], while using \(2\times\) less labeled data. SSL-REsdp is also more accurate than SUP-REsdp on PPI on 50, 100, and 200 budgets, reducing the labeling need by \(4\times\), achieving 0.56 with SUP-REsdp[200] and 0.52 with SSL-REsdp[50].

Overall, one can observe that \(\text {SSL-RE}_{sdp}\) is significantly beneficial when the cost of collecting labeled data is very high.

Statistical significance test

The t-test testFootnote 2 for statistical significance has been used to find whether the difference between \(\text {SSL-RE}_{sdp}\) and other SSL baselines are due random chance. Therefore, we define the null hypothesis as there is not a significant difference in the performance of \(\text {SSL-RE}_{sdp}\) and other baselines. To this end, we use the final F1 scores from 3 independent runs across 4 labeling budgets to calculate p-value and t-statistics. We report the pvalue of our method compared to label propagation, self-training, RE-Ensemble, and dualRE in Table 5. The reported results reject the null hypothesis for all the baselines as they are all less than the significance level of 0.05, meaning our results are significantly better than baselines. This can be confirmed through t-statistic’s magnitude, since it is positive which indicates a higher difference between the average performance of \(\text {SSL-RE}_{sdp}\) versus baselines and suggests stronger evidence against the null hypothesis.

Imputation bias analysis

To validate \(\text {SSL-RE}_{sdp}\) could prevent any imputation bias (e.g. a certain RE type is overpredicted) due to label noise and enables quality weak labels, in Fig. 3, we represent gold label distribution with blue and weak label distribution with green. Technically, we have the ground truth labels available for all imputed weak labels. From Fig. 3, we observe that weak label distribution is close to the gold label distribution with less drift.

Qualitative analysis of \(\text {SSL-RE}_{sdp}\) versus baselines

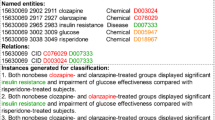

Figure 4 demonstrates few examples of the actual prediction of baseline models vs \(\text {SSL-RE}_{sdp}\). All models are trained using SDP finetuning. SDP tokens used in finetuning are highlighted as green. In the first four examples, \(\text {SSL-RE}_{sdp}\) can accurately captures the gold relations between entities, while in the last example self-training and RE-Ensemble performed better.

In-context learning

The aim of this experiment is to assess the effect of utilizing Shortest Dependency Path (SDP) representation to boost the accuracy of Relation Extraction (RE) within the in-context-learning framework. To this end, we furnish the GPT-3 model with task-specific instructions and a few examples that illustrate the task at hand.

Recent research [11, 12] indicates that dynamically selecting in-context examples for each test instance, rather than employing a fixed set of in-context examples, results in notable improvements in GPT-3’s in-context learning. Taking inspiration from the approach outlined in [11], we implement a k-nearest neighbor (kNN) retrieval module to identify the most closely related examples from our constrained training dataset to serve as the in-context prompts for each test instance. During this process, we use the SDP representation as the basis for calculating the distance metric, which in turn determines the similarity between the test and training instances.

In our experiments, we allocate a training budget of 50 and, in each test, we contrast the efficacy of SDP-based nearest neighbor retrieval with random and fixed prompting. In the random prompting scenario, we arbitrarily select in-context examples from the training dataset for every test instance, while in the fixed prompt setting, we maintain a consistent set of examples across all test sets. We employ stratified sampling to ensure each relation type is represented in the prompt along with the task instruction. To carry out this task, we leverage the highly potent GPT-3 DaVinci engine. However, due to cost considerations associated with using GPT-3, we restrict our test set to a subsample of 200 examples for each experiment. For both fixed and random prompts, we repeat the experiments three times (keeping the test set constant but varying the in-context examples) to establish the reliability of our results.

As depicted in Table 6, the inclusion of SDP-based nearest neighbor retrieval in Drug-Drug Interaction (DDI) led to a considerable improvement in performance for both fixed and random prompts. A modest positive effect was observed for ChemProt, with an approximate increase of 1.9% in performance. However, no discernible improvement was recorded for Protein-Protein Interaction (PPI).

Ablation study

Choice of representation on augmentation module

We investigate the impact of SDP on the label imputation. To this end, we performed experiments on different sequence representations to compute distance metric, and thereafter used the imputed labels to train the RE model. Note that we keep \(\text {SUP-RE}_{sdp}\) architecture for the RE model training, and only changed the representation in the imputation. We use the following representations to extract from the last hidden state of the encoder \(\mathcal {Q}\).

-

CLS (L): is an aggregate representation of all the tokens in a sentence

-

ent-avg (L): is the average embedding of the entities in a sentence

-

ent-sdp-avg (L): is the average embedding of the entities and SDP tokens

-

ENT (2L): is concatenation of the embeddings of two entities in a sentence

-

ent-words-between (3L): is concatenation of the embeddings of the two entities along with the average representation of all the words between two entities

-

\(\text {SDP}_{\text {rep}}\) (3L): is our proposed representation in Eq. 5

As shown in Table 7, \(\text {SDP}_{\text {rep}}\) representation results in overall better F1 score compared to other representations. By comparing the average performance of all representations across all datasets, we observed that \(\text {SDP}_{\text {rep}}\) ranked highest achieving 0.39 average F1 score, ent-sdp-avg ranked second with 0.38 average F1, and CLS ranked lowest with 0.35 average F1.

The representation ent-words-between achieved second to the last (0.37 average F1), meaning adding unnecessary context does not help to find high-quality neighbor search.

Effectiveness of soft labels

To better understand the impact of soft label assignment in weak label imputation, Table 8 reports the performance against hard label assignment, where we only take the label with highest probability during training. We could see that soft labels improve the performance on DDI and ChemProt datasets by 13% and 8% in F1. There is no improvement over PPI dataset.

Discussion and limitation

Our study demonstrates the SDP’s linear scalability which is a critical factor for practical large-scale applications. In practical testing, SDP generation took only 8.86 seconds for 500 samples, while scaling to larger datasets, such as 2000 samples, necessitated a proportional increase in computation time to 34.05 seconds. This efficient preprocessing enables the model’s use in extensive literary corpora, such as PubMed abstracts, without imposing significant computational delays.

Our findings suggest that the integration of SDP with nearest neighbor enriches the model with nuanced syntactic and semantic context while carefully imputing pseudo labels. However, as dataset sizes grow, the method’s relative benefit may diminish due to stronger inherent patterns within the data. Nevertheless our approach offers a pragmatic and feasible solution for initial analyses, beneficial for users needing immediate insights without the complexity of larger models.

Moreover, the SDP representation can seamlessly augment the capabilities of existing off-the-shelf RE models, thereby enhancing their accuracy and reliability for comprehensive analysis.

We acknowledge certain limitations in our methodology. One limitation of our work is that we assume that unlabeled and labeled data are sampled from the same distribution. If the sampling of labeled data is biased, our label imputation approach may not work that well.

Second, our approach depends on the availability of a good dependency parser. This is a limitation if the proposed approach is used on rare languages or in very specialized domains. Third, all three of our datasets had a relatively small number of clearly delineated relation types. It would be important for future work to exploit the effectiveness of the proposed approach on data with a much larger number of relation types.

Fourth, our experiments were performed using the BERT encoder. While it is one of the first strong LLM models, we have recently witnessed the emergence of much stronger models such as GPT-4. It remains an open question if SDP representation could be helpful to those newer LLMs. There are two reasons we did not use GPT-4 or comparable models. First, most of those models are proprietary and inaccessible to researchers. The open-sourced versions are typically much weaker for multiple reasons. In addition, the state-of-the-art LLMs are also extremely large, and our lab did not have sufficient computational resources to support experimenting with those models.

Conclusion

This study demonstrates the utility of Shortest Dependency Path (SDP) representations in supervised, semi-supervised, and in-context learning for low-resource biomedical relation extraction (RE). We introduced an innovative SDP-based representation, which we employed to compute the distance metric between RE instances. In addition, we proposed a new semi-supervised learning (SSL) algorithm tailored for biomedical RE. Comprehensive experimental assessments on three biomedical text datasets substantiate the effectiveness of SDP representation. Importantly, our proposed approaches are not tied to a specific neural network architecture and can be seamlessly integrated as a wrapper around existing and future RE models.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

DualRE. https://github.com/INK-USC/DualRE. Accessed 11 July 2023.

Student’s t-test. https://en.wikipedia.org/wiki/Student%27s_t-test. Accessed 11 July 2023

Abbreviations

- BERT:

-

Bidirectional encoder representations from transformers

- GPT-3:

-

Generative Pre-trained Transformer 3

- RE:

-

Relation Extraction

- SciBERT:

-

Science BERT

- SDP:

-

Shortest Dependency Path

- SSL:

-

Semi Supervised Learning

References

Bairoch A, Apweiler R. The SWISS-PROT protein sequence data bank and its supplement TrEMBL. Nucleic Acids Res. 1997;25(1):31–6.

Wishart DS, Knox C, Guo AC, Shrivastava S, Hassanali M, Stothard P, et al. DrugBank: a comprehensive resource for in silico drug discovery and exploration. Nucleic Acids Res. 2006;34(suppl_1):D668–72.

Köhler G, Bode-Böger S, Busse R, Hoopmann M, Welte T, Böger R. Drug-drug interactions in medical patients: effects of in-hospital treatment and relation to multiple drug use. Int J Clin Pharmacol Ther. 2000;38(11):504–13.

Von Mering C, Krause R, Snel B, Cornell M, Oliver SG, Fields S, et al. Comparative assessment of large-scale data sets of protein-protein interactions. Nature. 2002;417(6887):399–403.

Wilkinson GR. Drug metabolism and variability among patients in drug response. N Engl J Med. 2005;352(21):2211–21.

Wei Q, Ji Z, Si Y, Du J, Wang J, Tiryaki F, et al. Relation extraction from clinical narratives using pre-trained language models. In: AMIA annual symposium proceedings. vol. 2019. American Medical Informatics Association; 2019. p. 1236.

Lee J, Yoon W, Kim S, Kim D, Kim S, So CH, et al. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics. 2020;36(4):1234–40.

Zhou W, Huang K, Ma T, Huang J. Document-level relation extraction with adaptive thresholding and localized context pooling. In: Proceedings of the AAAI Conference on Artificial Intelligence. Washington, DC: Association for the Advancement of Artificial Intelligence (AAAI); 2021. pp. 14612–14620. vol. 35.

Mintz M, Bills S, Snow R, Jurafsky D. Distant supervision for relation extraction without labeled data. In: Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP. Association for Computational Linguistics (ACL); 2009. pp. 1003–1011.

Ouali Y, Hudelot C, Tami M. An overview of deep semi-supervised learning. 2020. arXiv preprint arXiv:2006.05278 .

Liu J, Shen D, Zhang Y, Dolan B, Carin L, Chen W. What Makes Good In-Context Examples for GPT-\(3\)? 2021. arXiv preprint arXiv:2101.06804 .

Rubin O, Herzig J, Berant J. Learning to retrieve prompts for in-context learning. 2021. arXiv preprint arXiv:2112.08633 .

Qu M, Ren X, Zhang Y, Han J. Weakly-supervised relation extraction by pattern-enhanced embedding learning. In: Proceedings of the 2018 World Wide Web Conference. Republic and Canton of Geneva Switzerland: International World Wide Web Conferences Steering Committee; 2018. pp. 1257–1266.

Zhou W, Lin H, Lin BY, Wang Z, Du J, Neves L, et al. Nero: a neural rule grounding framework for label-efficient relation extraction. In: Proceedings of The Web Conference 2020. New York, NY: Association for Computing Machinery; 2020. pp. 2166–2176.

Rosenberg C, Hebert M, Schneiderman H. Semi-Supervised Self-Training of Object Detection Models. In: 2005 Seventh IEEE Workshops on Applications of Computer Vision (WACV/MOTION’05) - Volume 1, vol. 1. 2005. pp. 29–36. https://doi.org/10.1109/ACVMOT.2005.107.

Lin H, Yan J, Qu M, Ren X. Learning dual retrieval module for semi-supervised relation extraction. In: The World Wide Web Conference. New York, NY: Association for Computing Machinery; 2019. pp. 1073–1083.

Hu X, Zhang C, Yang Y, Li X, Lin L, Wen L, et al. Gradient Imitation Reinforcement Learning for Low Resource Relation Extraction. In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. Punta Cana, Dominican Republic: Association for Computational Linguistics; 2021.

Zhou D, Bousquet O, Lal T, Weston J, Schölkopf B. Learning with local and global consistency. Adv Neural Inf Process Syst. 2003;16:321–8. https://doi.org/10.5555/2981345.2981386.

Chen J, Ji D, Tan CL, Niu ZY. Relation extraction using label propagation based semi-supervised learning. In: Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics. Sydney, Australia: Association for Computational Linguistics; 2006. pp. 129–136.

Li Z, Yang Z, Shen C, Xu J, Zhang Y, Xu H. Integrating shortest dependency path and sentence sequence into a deep learning framework for relation extraction in clinical text. BMC Med Inform Decis Mak. 2019;19(1):1–8.

Zhang Y, Zheng W, Lin H, Wang J, Yang Z, Dumontier M. Drug-drug interaction extraction via hierarchical RNNs on sequence and shortest dependency paths. Bioinformatics. 2018;34(5):828–35.

Liu H, Hunter L, Kešelj V, Verspoor K. Approximate subgraph matching-based literature mining for biomedical events and relations. PLoS ONE. 2013;8(4):e60954.

Devlin J, Chang MW, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). Minneapolis: Association for Computational Linguistics; 2019. pp. 4171–4186. https://aclanthology.org/N19-1423.

Baldini Soares L, FitzGerald N, Ling J, Kwiatkowski T. Matching the Blanks: Distributional Similarity for Relation Learning. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics; 2019. pp. 2895–2905. https://aclanthology.org/P19-1279.

Zhu X, Ghahramani Z. Learning from labeled and unlabeled data with label propagation. 2002. https://www.bibsonomy.org/bibtex/25ddd451b8facd00d9493358a3db9b733/ldietz.

Sajjadi M, Javanmardi M, Tasdizen T. Regularization with stochastic transformations and perturbations for deep semi-supervised learning. Adv Neural Inf Process Syst. 2016;29:1171–9. https://doi.org/10.5555/3157096.3157227.

Krallinger M, Rabal O, Akhondi SA, Pérez MP, Santamaría J, Rodríguez GP, et al. Overview of the BioCreative VI chemical-protein interaction Track. In: Proceedings of the sixth BioCreative challenge evaluation workshop, vol. 1. 2017. pp. 141–146. https://biocreative.bioinformatics.udel.edu/resources/publications/bcvi-proceedings/.

Segura-Bedmar I, Martínez Fernández P, Herrero Zazo M. Semeval-2013 task 9: Extraction of drug-drug interactions from biomedical texts (ddiextraction 2013). Association for Computational Linguistics; 2013.

Bunescu R, Ge R, Kate RJ, Marcotte EM, Mooney RJ, Ramani AK, et al. Comparative experiments on learning information extractors for proteins and their interactions. Artif Intell Med. 2005;33(2):139–55.

Beltagy I, Lo K, Cohan A. SciBERT: a pretrained language model for scientific text. 2019. arXiv preprint arXiv:1903.10676 .

Zhang Y, Zhong V, Chen D, Angeli G, Manning CD. Position-aware attention and supervised data improve slot filling. In: Conference on Empirical Methods in Natural Language Processing. Copenhagen, Denmark: Association for Computational Linguistics; 2017.

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: Machine Learning in Python. J Mach Learn Res. 2011;12:2825–30.

Neumann M, King D, Beltagy I, Ammar W. ScispaCy: fast and robust models for biomedical natural language processing. In: Proceedings of the 18th BioNLP Workshop and Shared Task. Florence: Association for Computational Linguistics; 2019. pp. 319–327. https://aclanthology.org/W19-5034.

Tikk D, Thomas P, Palaga P, Hakenberg J, Leser U. A comprehensive benchmark of kernel methods to extract protein-protein interactions from literature. PLoS Comput Biol. 2010;6(7):e1000837.

Smola A, Vishwanathan S. Fast kernels for string and tree matching. Adv Neural Inf Process Syst. 2002;15:585–92. https://doi.org/10.5555/2968618.2968691.

Collins M, Duffy N. Convolution kernels for natural language. Adv Neural Inf Process Syst. 2001;14:625–32. https://doi.org/10.5555/2980539.2980621.

Moschitti A. Efficient convolution kernels for dependency and constituent syntactic trees. In: European Conference on Machine Learning. Springer; 2006. pp. 318–329.

Kuboyama T, Hirata K, Kashima H, Aoki-Kinoshita KF, Yasuda H. A spectrum tree kernel. Inf Media Technol. 2007;2(1):292–9.

Erkan G, Ozgur A, Radev D. Semi-supervised classification for extracting protein interaction sentences using dependency parsing. In: Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL). Prague, Czech Republic: Association for Computational Linguistics; 2007. pp. 228–237.

Airola A, Pyysalo S, Björne J, Pahikkala T, Ginter F, Salakoski T. All-paths graph kernel for protein-protein interaction extraction with evaluation of cross-corpus learning. BMC Bioinformatics. 2008;9:1–12.

Palaga P. Extracting relations from biomedical texts using syntactic information. Mémoire de DEA, Technische Universität Berlin; 2009. p. 138.

Funding

This project was funded by the Department of Computer and Information Sciences at Temple University. The funding bodies played no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Author information

Authors and Affiliations

Contributions

S.E. and S.V. designed the study, S.E. implemented and experimentally evaluated the framework, S.E. and S.V. wrote and revised the paper.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Enayati, S., Vucetic, S. Leveraging shortest dependency paths in low-resource biomedical relation extraction. BMC Med Inform Decis Mak 24, 205 (2024). https://doi.org/10.1186/s12911-024-02592-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12911-024-02592-2