Abstract

Introduction

Open globe injuries (OGI) represent a main preventable reason for blindness and visual impairment, particularly in developing countries. The goal of this study is evaluating key variables affecting the prognosis of open globe injuries and validating internally and comparing different machine learning models to estimate final visual acuity.

Materials and methods

We reviewed three hundred patients with open globe injuries receiving treatment at Khatam-Al-Anbia Hospital in Iran from 2020 to 2022. Age, sex, type of trauma, initial VA grade, relative afferent pupillary defect (RAPD), zone of trauma, traumatic cataract, traumatic optic neuropathy (TON), intraocular foreign body (IOFB), retinal detachment (RD), endophthalmitis, and ocular trauma score (OTS) grade were the input features. We calculated univariate and multivariate regression models to assess the association of different features with visual acuity (VA) outcomes. We predicted visual acuity using ten supervised machine learning algorithms including multinomial logistic regression (MLR), support vector machines (SVM), K-nearest neighbors (KNN), naïve bayes (NB), decision tree (DT), random forest (RF), bagging (BG), adaptive boosting (ADA), artificial neural networks (ANN), and extreme gradient boosting (XGB). Accuracy, positive predictive value (PPV), recall, F-score, brier score (BS), Matthew correlation coefficient (MCC), receiver operating characteristic (AUC-ROC), and calibration plot were used to assess how well machine learning algorithms performed in predicting the final VA.

Results

The artificial neural network (ANN) model had the best accuracy to predict the final VA. The sensitivity, F1 score, PPV, accuracy, and MCC of the ANN model were 0.81, 0.85, 0.89, 0.93, and 0.81, respectively. In addition, the estimated AUC-ROC and AUR-PRC of the ANN model for OGI patients were 0.96 and 0.91, respectively. The brier score and calibration log-loss for the ANN model was 0.201 and 0.232, respectively.

Conclusion

As classic and ensemble ML models were compared, results shows that the ANN model was the best. As a result, the framework that has been presented may be regarded as a good substitute for predicting the final VA in OGI patients. Excellent predictive accuracy was shown by the open globe injury model developed in this study, which should be helpful to provide clinical advice to patients and making clinical decisions concerning the management of open globe injuries.

Similar content being viewed by others

Introduction

Open globe injury (OGI) is a potentially blinding ocular injury that is a full-thickness wound of the eye wall [1]. Globally, OGI exhibits an alarming annual incidence of nearly 203,000 cases [2], contributing substantially to permanent visual impairment and blindness [3]. In comparison to closed-globe injuries, OGI has a more visual damage, usually requires surgical repair, and increases the financial cost to society, the healthcare system, and patients. Numerous factors influence the final visual acuity (VA) in ocular trauma patients. Key determinants are age, trauma mechanism, whether relative afferent pupillary defects (RAPDs) are present or not, initial VA, hyphema, the wound’s size and location, intraocular foreign body (IOFB), vitreous hemorrhage, detachment of retinal, damage to the lens, and the Ocular Trauma Score (OTS) value, as highlighted by previous studies [4, 5].

From a clinical standpoint, accurately estimating a patient’s final visual outcome when an open globe injury occurs poses a significant challenge. To address this, establishing precise predictive models becomes imperative. The Ocular Trauma Score (OTS) is the most popular approach [6], which considers six factors, including the initial VA, endophthalmitis, retinal detachment, globe rupture, perforating injury, and relative afferent pupillary defect (RAPD) to provide prognostic assessments. However, OTS has been developed nearly 20 years ago. The improvements of surgical methods and equipment, especially the advancement of vitreoretinal surgeries during this period, probably has impacted the validity the OTS as a prognosis estimation system. Notably, OTS lacks consideration for certain complications of ocular trauma, such as traumatic cataract, which is a treatable condition [7]. In response to these limitations, alternative prognostic models have been proposed.

The classification and regression tree (CART), introduced in 2008, offers another approach for predicting visual outcomes in OGI patients [8]. Islam et al. and Toit et al. validated the OTS score to predict final VA and announced it as a valuable tool [9, 10]. By Compare both OTS and CART by Lee et al. and Man et al., Lee recommend that using both OTS and CART together in eye trauma assessments leads to more accurate predictions of vision outcomes while Man found OTS has higher accuracy [11, 12]. Choi et al. (2021) established a predictive tool for OGI utilizing machine learning algorithms on 171 patients, demonstrating the evolving landscape of prognostic methodologies. Boosted decision tree had the best result in predicting final VA [13,14,15]. Aoun et al. (2023) investigated the application of Support Vector Machines (SVM) and Neural Networks for predicting final visual acuity (VA) in 87 patients with ocular trauma. Their findings demonstrated superior accuracy with SVM compared to neural networks [16].

Algorithms leveraging machine learning exhibit robust capabilities in processing medical decision-making data, particularly in the realm of clinical predictions [17, 18]. developing a model able to forecast outcomes based on known outcomes and new input values, the supervised learning algorithm is utilized [19]. . This approach is particularly well-suited for handling extensive and intricate medical datasets [20].

In the context of ocular trauma, specifically Open Globe Injury (OGI), our study focused on harness the power of supervised machine learning approaches. Through the application of these methodologies, we endeavored forecasting the final Visual Acuity (VA) in patients affected by OGI.

Materials and methods

Study design and ethics

A comprehensive retrospective review was undertaken, involving 301 treated Open Globe Injuries (OGI) patients at Khatam-al-Anbia Eye Hospital, Mashhad, Iran during the period spanning 2020 to 2022. The Institutional Review Board of Mashhad University of Medical Sciences approved this study (IRB code: IR.MUMS.MEDICAL. REC.1399.060.). The study’s retrospective design ensured the meticulous examination of past cases while safeguarding the confidentiality and anonymity of the patients’ data. We declare that all techniques were developed in accordance with the regulations and rules.

-

i.

Inclusion and Exclusion Criteria In this investigation, the study cohort comprised all admitted patients diagnosed with Open Globe Injuries (OGI), with no limitations based on age or gender. According to the implementation protocol in our center, OGI patients undergo primary repair surgery within 24 h of referral. Considering that the delay in restoration may affect the final vision prognosis if the surgery is delayed for more than 48 h, this case was not included in the study. Strict data cleaning techniques were used to guarantee the dataset’s dependability and integrity. A meticulous process was undertaken to eliminate noise, irrelevant information, and inconsistencies within the collected data. This involved the removal of null values, ensuring that missing or incomplete data did not compromise the quality of the analysis. Additionally, unrealistic values were identified and systematically excluded, further enhancing the accuracy and validity of the study findings.

-

ii.

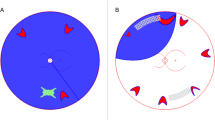

Input Data The data was collected from OGI dataset was included 301 patients with 12 features as prognostic factors including: Age, Sex, Type, Grade VA (Initial VA grade), Relative Afferent Pupillary Defect (RAPD), Zone, Traumatic Cataract, Traumatic optic Neuropathy (TON), Intraocular Foreign Body (IOFB), Retinal Detachment (RD), Endophthalmitis, and OTS grade. More descriptions and related images of dataset features are provided in Table 1; Fig. 1.

Within this dataset, a dual-feature categorization was implemented, classifying information into demographic and clinical parameters for each OGI patient. These categories were pivotal as input data for the comprehensive study.

Three different types of features for preprocessing and developing prognosis models were considered: (1) categorical features, (2) continuous features, and (3) binary variables (Table 2). Table 3 displays the features distribution.

-

iii.

Outcome The final visual acuity (VA) is a continuous number between 0 and 1. The mean ± standard deviation of the duration of the follow-up was 364.5 ± 53.2 days with the range of 275–402 days. The final VA was defined as the patient’s best corrected distance VA at the last follow-up examination after performing rescue surgeries such as pars plana vitrectomy, cataract surgery etc. to manage early and late complications. In the pursuit of enhanced precision through machine learning methodologies, a pragmatic approach involves the categorization of this continuum into three distinct classes. Table 4 provides a visual representation of the stratification of final visual acuity within these classes. Obviously, binary outcome has more accuracy than multiclass outcome. This classification approach discerns patients into three categories based on their final visual acuity outcomes: those with poor VA (83 individuals, accounting for 27.5% of the cohort), moderate VA (84 individuals, constituting 27.9%), and good VA (134 individuals, representing 44.5% of the studied population).

Methodology

This study presents a visual acuity prediction model. First of all, preprocessing and feature engineering on dataset’s features were done. After that, ten different ML models were developed. Finally, all developed models were assessed determining the optimal model based on outcome. Figure 2 illustrates the Three-Phases methods. The steps as follows.

Step one: data preprocessing and feature engineering

There were four phases to preprocess data. After handling missing data and applying include/exclude criteria, these stages were followed: (1) Statistical analysis of input and output characteristics was done; (2) Data scaling, normalization techniques, and feature selection (the effect of features on predictions) were employed to handle different input data binary, categorical, and continuous); (3) To reduce the impact of unbalanced datasets, data sampling techniques were applied. Detailed sections as follows.

-

i.

Statistical Analyses The features and outcomes were subjected to a descriptive analysis. The statistically significant differences between the values of categorical and continuous features in three classes (poor VA, moderate VA, and good VA) were compared using the Chi-square test and the t-test. Also, to remove any potential duplication, features and outcome correlations were calculated.

-

ii.

Data Normalization and Scaling In order to reduce the effects of differing continuous and categorical feature value ranges on the ML models performance, data scaling methods were proposed. All categorical features including age and gender were normalized by one-hot encoding to zero and one. Also, numerical features were normalized by Z-score technique to bring them to a common scale.

-

iii.

Feature Importance For feature selection, we employed mutual information and random forest techniques, which provide each feature a score, then arrange the features in order of that score. It is important to identify irrelevant attributes from our model. The most significant input variables for the final VA were chosen and imported to model. Results shows the accuracy with all features versus only important features has no significant difference, therefore, all features entered to model. Figure 3 shows feature importance.

-

iv.

Resampling Imbalanced Data The imbalanced distribution of groups leads to overfit and poor performance of ML models [22]. This was one the most challenging problem in our dataset. Over-sampling and under-sampling sampling techniques were offered to deal with this problem [23]. For increasing sample counts in groups with low cases, Random Over-Sampling (ROS), Synthetic Minority Oversampling Technique (SMOTE), and borderline SMOTE are used, while for decreasing sample counts in groups with high cases, under-sampling algorithms, such as Random Under-Sampling (RUS) and Tomek links, were applied [24,25,26,27]. On the other hand, the SMOTE Tomek algorithm is a combination of oversampling and undersampling techniques [23]. . Moreover, the technique makes use of SMOTE for data enhancement on the minority class and Tomek Links to remove some samples from the majority class. This technique is better at improving the performance of machine learning models and eliminating noise or ambiguities in decision making. The current study assesses sampling strategies in the following categories: ROS, SMOTE, RUS, Tomek, and SMOTE Tomek using a basic LR model. In comparison with other methods, SMOTE Tomek has shown the best results.

Step two: development of ML models

We introduce our final strategy for OGI CDSS using cross-validation to determine the best model parameters and configurations in this section.

We were considered as the most indicative machine learning models with the best performance in prior studies as selected models to predict final VA in the OGI patients. K-fold cross-validation to train models and avoid the problem of overfitting was used in this study. We used cross-validation within the training set (80% of the data) to assess model performance and select optimal hyperparameters. By dividing the training data into folds, we could train models on one fold and evaluate them on the others, simulating unseen data [28]. . For ANN, the model employs a sequential architecture consisting of a single hidden layer with 16 neurons and ReLU activation, followed by an output layer with 3 neurons and softmax activation for multi-class classification. Hyperparameters were selected through a grid search methodology to optimize performance based on validation accuracy. The final choices include the RMSprop optimizer, a batch size of 10, and a maximum of 8,000,000 epochs. Early stopping was implemented to prevent overfitting, halting training if validation loss didn’t improve for 10 consecutive epochs. A K-Nearest Neighbors classifier with 5 neighbors was trained and evaluated. The number of neighbors acquired using K-folds to obtain a more robust estimate of optimal value. The Random Forest Classifier was tuned with grid search, exploring different numbers of trees (2-100), maximum tree depths [5,6,7,8,9,10,11,12,13,14,15], minimum samples for splitting (2-100), and minimum samples per leaf [1,2,3,4,5]. The Support Vector Machine used a linear kernel with a regularization parameter optimized through grid search. The Decision Tree Classifier had its maximum depth and minimum split/leaf samples similarly tuned. Random state of 42 used for reproducibility in ML models.

Performance metrics were used to assess K-fold cross-validation on the training dataset. The models with the greatest performance are the ultimate predictors.

Step three: evaluation of the models’ performance

The state-of-the-art references for multiclass classifiers and regressors were used as the basis for determining the evaluation metrics. The total number of patients that final VA was correctly anticipated is represented by a true positive (TP) and the total number of patients with wrong predicted final VA is indicated by a false negative (FN). Also, the total number of patients whose blindness was accurately predicted is shown by a true negative (TN) and the total number of patients misdiagnosed as having good VA is represented by a false positive (FP).

Recall, F1 score, Mathews Correlation Coefficient (MCC), Area Under Curve of Receiver Operator Characteristic (AUC-ROC), the Area Under the Curve of Precision-Recall (AUC-PRC), Accuracy, the Calibration Plot, and the Brier Score was computed [29,30,31,32].

Results

In this study, the Python 3.8 (Anaconda/Jupyter) platform, along with the Pandas, Scikit-learn, and NumPy frameworks, were utilized in the development, evaluation, and visualization of all models. The machine, which was running Microsoft Windows 10 Enterprise, had a 2.5 GHz Intel Core i5 × 64 processor and 4 GB of RAM. The next subsection will provide the findings of the statistical examination of the input features and the significance of features in predictive models. Furthermore, the performance of models to predict final VA is evaluated.

Results of the statistical analysis

The findings of a descriptive statistical analysis of continuous and categorical features are displayed in Table 5. Of 301 OGI patients were admitted in the Ophthalmology Department at the Khatam-al-Anbia hospital, 12 features were selected. For continuous features, we computed the mean and standard deviation (SD), while for categorical features as well as binary features, the number and percentage were calculated. Moreover, the independent samples t-test was used for continuous features and the Chi-square test was used for categorical features to determine whether there was a statistically significant difference between the three study classes (Poor VA, Moderate VA, and Good VA). As a result, between most features of the three classes there were statistically significant differences (95% confidence interval, P-value less than 0.05). Although, in the features including age, intraocular foreign body (IOFB), and endophthalmitis were not significantly different between the three classes.

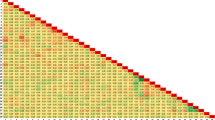

Moreover, we calculated correlation between the factors. The relationship between these features is depicted in the correlation matrix. This correlation was better illustrated by the heat map. Evaluating the potential correlations between the features, the Pearson function with threshold 0.85 was used. The Pearson correlation coefficient can also be used to assess whether two variables have a significant relationship or not [33]. The corresponding cell is red if there is a strong correlation (greater than a threshold) between two features. The outcomes demonstrated that there is little correlation between the predictive features. The correlation of two features is indicated by the value inside each cell (refer to Fig. 4). According to the Fig. 4 characteristics such as age, OTS, Grade VA, and type are some of the essential features in OGI patients. To determine the clinical factors’ significance for the research, a random forest ranking is employed (Fig. 3). The most illuminating set of features can be chosen by plotting the random forest’s interpretation of feature importance. It selects many possible combinations of variables to find the best features [34]. Python 3.8 was used to draw all the figures.

Evaluation of quality

Using a training dataset consisting of 80% of the primary records, we developed predictive ML models of OGI patients. Also, 80 and 20% of the dataset were randomly selected for training and testing. Moreover, the model parameters were tuned and determined using GridsearchCV, a technique for obtaining the best parameter values based on the grid’s specified set of parameters. The SMOTE Tomek method was employed to create a balanced dataset. Of the total, the group with good acuity made up 45% (n = 134), followed by the blind group (27%, n = 83) and the mild acuity group (28%, n = 84). The subsequent subsections assess the models’ predictive accuracy for the result.

Evaluation of classifiers quality to predict final VA for OGI patients

In this research the final VA in OGI patients predicted using ten models. A combination of classic and ensemble models, which consisted of Multinomial LR, KNN, SVM, ANN, Decision Tree, Random Forest, Naïve Bayas, XGB, Bagging, and ADA were used. The last four methods (Random Forest, XGB, Bagging, and ADA) are ensemble models. Then in the analysis phase, to compare the developed models three perspectives were applied: [1] predictive performance of models, [2] models’ ability assessment for discrimination employing the area under the curves (AUCs), and [3] a calibration plot to evaluate goodness-of-fit in models.

Predictive performance of models. Applying the measurements outlined in 2.3.3, the models’ performance was evaluated for predicting final VA of OGI patients. Table 6 displayed the suggested ML models’ predictive performance. Based on results, through all metrics, the ANN technique generated better results than any other model. It showed the highest values of AUC-ROC (0.96), AUC-PRC (0.91), precision (0.89), sensitivity (0.81), accuracy (0.93), F-measure (0.81), and MCC (0.75).

Assessment of models’ discriminating abilities. The receiver operating characteristic curves (AUCROC) and precision-recall curves (AUC-PRC) were applied evaluating the power of models to discriminate. While AUC-PRC displays precision values for recall (sensitivity) values, AUC-ROC illustrates the trade-off between specificity and sensitivity. Notably, for imbalanced datasets, metrics like AUC-PRC and AUC-ROC are usually considered to be the most informative [35]. Figure 5 illustrated AUC plots for ten models and for each class separately. AUC-ROC and AUC-PRC for all models in one shot illustrated in Fig. 6.1 and 6.2. AUC-ROC = 0.96 and AUC-PRC = 0.91, the highest overall value, were obtained by ANN, while other models showed respectable performance to forecast the final VA of OGI patients in both plots. Moreover, according to the accuracy of the methods, Repeated tests revealed that the models with the highest average accuracy were the ANN (Acc = 0.93) and the RF, LR, and XGB (Acc = 0.90).

Goodness-of-fit assessment in models. A visual tool for evaluating the degree of agreement between observations and predictions in the predicted values is the calibration plot. The Log loss, another metric for evaluating the quality of classification models, were calculated as calibrated log loss and uncalibrated log loss to compare, showed ANN (Calibrated-log-loss = 0.232) and RF (Calibrated-log-loss = 0.246) had smallest value in all models (Table 7). The calibration plots illustrated in Fig. 7. Also, the models with the best brier score loss values (BS, a metric composed of terms for refinement and calibration) among all of them were ANN (BS = 0.201) and RF (BS = 0.311) (Table 6).

Discussion

This study aimed to evaluate and compare the efficacy of different ML algorithms regarding the prediction of the final VA in patients with OGI. We used 12 features in 301 patients to train and evaluate ML models. Gender, type of trauma, zone of involvement, RAPD, presence of traumatic cataract, endophthalmitis, RD, traumatic optic neuropathy, IOFB, age, OTS score, and initial VA were the selected features for prognostication. To address the imbalanced distribution of classes (three classes here) which leads to overfitting and low performance of ML classes, SMOTE technique was used.

Since some variables were categorical and some were continuous, chi-square test and t-test were used to determine if there was a statistically significant difference between the three study groups (Poor VA, Moderate VA, and Good VA). In the features including age, intraocular foreign body (IOFB), and endophthalmitis were not significantly different between the three classes. The results of most of the previous studies indicate that the presence of IOFB or endophthalmitis is a significant factor in worsening the prognosis. Also, IOFB and endophthalmitis are indications for early vitrectomy (at the earliest possible time) in OGI patients [36]. This inconsistency could be due to early vitrectomy in these patients or the small sample size in each subgroup in this study. Moreover, we calculated correlation between the factors. The correlation matrix illustrates that the predictive features are not highly correlated. A random forest ranking is used to detect the importance of the clinical factors which showed that age, OTS, Grade VA, and type of trauma are some of the essential features in OGI patients. Choi et al. were applied some feature selection metrics [13] while others just used classical statistical methods. Factors associated with final VA which reported in previous studies includes age, initial VA, mechanism of injury, location and size of the wound, RAPDs, adnexal trauma, vitreous prolapse, and ocular tissue damage [6,7,8, 37,38,39,40]. Previous research on risk factors associated with prognosis in individuals with OGI has mostly used small sample sizes. Furthermore, the number of features was limited. Considering the complexities of treatment and the wide range of complications that may occur after OGI, the use of machine learning algorithms and various features can help in increasing the accuracy of predicting the vision prognosis of patients.

Employing a combination of classic and ensemble models, which consisted of Multinomial LR, KNN, SVM, ANN, Decision Tree, Random Forest, Naïve Bayas, XGB, Bagging, and ADA demonstrated ANN technique has superior results among all the models in all metrics. It shows the highest values of AUC-ROC (0.96), AUC-PRC (0.91), precision (0.89), sensitivity (0.81), accuracy (0.93), F-measure (0.81), and MCC (0.75). GridsearchCV as a technique for finding the optimal parameter values from a given set of parameters in a grid, was employed to tune and determine the model parameters during the 10-fold-cross-validation.

Successful initial repair and subsequent visual rehabilitation is a challenge for ophthalmic trauma surgeons. Besides, counseling of the trauma victim and his family is one of the crucial steps in the patient’s management. Despite the fact that the care of OGI has altered due to the development of new modalities and enhanced surgical techniques, we still need to counsel and prognosticate any patient with OGI before and after the primary repair surgery. Numerous studies have been evaluated the significant factors affecting final visual outcome in OGI previously [13, 41, 42]. In this study, a random forest ranking is used to detect the importance of the clinical factors. Age, OTS score, initial VA grade, and the type of trauma were the most important features. However, the reason for the less importance of features like IOFB and endophthalmitis can be the small number of patients with these conditions. Evaluation of the effect of age on the vision prognosis of OGI patients has had inconsistent results in previous studies [36, 43]. Inter-population variations in culture, lifestyle, mean lifespan, employment, and socioeconomic level might be the cause of this diversity. Regarding the type of injury, our results were compatible with those of previous studies. Globe rupture had the worst visual prognosis. Globe rupture is typically more closely linked to retinal detachment, optic nerve injury, and retinal damage. Furthermore, there are further challenges with primary repair surgery and rescue vitrectomy in this trauma mechanism. We showed that a poor initial visual acuity was an essential prognostic factor. According to this finding, lesser ocular tissue damage is reflected in a superior initial VA, which guarantees a better visual prognosis.

The study has some limitations and precautions. The data were collected from only one eye hospital from one city. Therefore, it is suggested that data be collected from several centers in different geographical locations, and external verification will have better performance and reliability. Moreover, the size of the dataset used was not significant, which is considered a precaution in this study. Besides, the occurrence of phthisis bulbi had not been investigated in this study. Future research topics could include evaluating the factors associated with the incidence of phthisis bulbi or sympathetic ophthalmia, selecting the weight corresponding to each feature and determining model parameters using meta-heuristic algorithms and fuzzy theory for ranking.

Conclusion

As classic and ensemble ML models were compared, results shows that the ANN model was the best. As a result, the framework that has been presented may be regarded as a good substitute for predicting the final VA in OGI patients. Excellent predictive accuracy was shown by the open globe injury (OGI) predictive model developed in this research, which should be helpful to provide clinical advice to patients and to make clinical decisions concerning the management of open globe injuries.

Data availability

No datasets were generated or analysed during the current study.

References

Kuhn F, Morris R, Witherspoon CD. Birmingham Eye Trauma Terminology (BETT): terminology and classification of mechanical eye injuries. Ophthalmol Clin North Am. 2002;15(2):139–43.

Négrel A-D, Thylefors B. The global impact of eye injuries. Ophthalmic Epidemiol. 1998;5(3):143–69.

Shin YI, Lee YH, Kim KN, Lee SB. The availability of modified ocular trauma score in Korean patients with open globe injury. J Korean Ophthalmological Soc. 2013;54(12):1902–6.

Anguita R, Moya R, Saez V, Bhardwaj G, Salinas A, Kobus R, et al. Clinical presentations and surgical outcomes of intraocular foreign body presenting to an ocular trauma unit. Graefe’s Archive Clin Experimental Ophthalmol. 2021;259:263–8.

Guven S, Durukan AH, Erdurman C, Kucukevcilioglu M. Prognostic factors for open-globe injuries: variables for poor visual outcome. Eye. 2019;33(3):392–7.

Kuhn F, Maisiak R, Mann L, Mester V, Morris R, Witherspoon CD. The ocular trauma score (OTS). Ophthalmology Clinics of North America. 2002;15(2):163-5, vi.

Unver YB, Kapran Z, Acar N, Altan T. Ocular trauma score in open-globe injuries. J Trauma Acute Care Surg. 2009;66(4):1030–2.

Schmidt G, Broman A, Hindman HB, Grant MP. Vision survival after open globe injury predicted by classification and regression tree analysis. Ophthalmology. 2008;115(1):202–9.

du Toit N, Mustak H, Cook C. Visual outcomes in patients with open globe injuries compared to predicted outcomes using the ocular trauma scoring system. Int J Ophthalmol. 2015;8(6):1229–33.

ul Islam Q, Ishaq M, Yaqub MA, Mehboob MA. Predictive value of ocular trauma score in open globe combat eye injuries. J Ayub Med Coll Abbottabad. 2016;28(3):484–8.

Lee BW, Hunter D, Robaei DS, Samarawickrama C. Open globe injuries: epidemiology, visual and surgical predictive variables, prognostic models, and economic cost analysis. Clin Exp Ophthalmol. 2021;49(4):336–46.

Man C, Steel D. Visual outcome after open globe injury: a comparison of two prognostic models—the ocular trauma score and the classification and regression tree. Eye. 2010;24(1):84–9.

Choi S, Park J, Park S, Byon I, Choi H-Y. Establishment of a prediction tool for ocular trauma patients with machine learning algorithm. Int J Ophthalmol. 2021;14(12):1941.

Parver LM, Dannenberg AL, Blacklow B, Fowler CJ, Brechner RJ, Tielsch JM. Characteristics and causes of penetrating eye injuries reported to the National Eye Trauma System Registry, 1985-91. Public Health Rep. 1993;108(5):625.

DE BUSTROS S, MICHELS RG, GLASER BM. Evolving concepts in the management of posterior segment penetrating ocular injuries. Retina. 1990;10:S72–5.

Aoun SB, Sammouda T, Walha W, Sahli M, Trigui A. Role of Artificial Intelligence in Predicting Visual Prognosis after Open Globe Trauma in Children. 2023.

Handelman G, Kok H, Chandra R, Razavi A, Lee M, Asadi H. eD Octor: machine learning and the future of medicine. J Intern Med. 2018;284(6):603–19.

Rahimian F, Salimi-Khorshidi G, Payberah AH, Tran J, Ayala Solares R, Raimondi F, et al. Predicting the risk of emergency admission with machine learning: development and validation using linked electronic health records. PLoS Med. 2018;15(11):e1002695.

Panch T, Szolovits P, Atun R. Artificial intelligence, machine learning and health systems. J Global Health. 2018;8(2).

Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S et al. Artificial intelligence in healthcare: past, present and future. Stroke Vascular Neurol. 2017;2(4).

Siderov J, Tiu AL. Variability of measurements of visual acuity in a large eye clinic. Acta Ophthalmol Scand. 1999;77(6):673–6.

Tarik A, Aissa H, Yousef F. Artificial intelligence and machine learning to predict student performance during the COVID-19. Procedia Comput Sci. 2021;184:835–40.

Wongvorachan T, He S, Bulut O. A comparison of undersampling, oversampling, and SMOTE methods for dealing with imbalanced classification in educational data mining. Information. 2023;14(1):54.

He H, Ma Y. Imbalanced learning: foundations, algorithms, and applications. 2013.

Kaur P, Gosain A, editors. Comparing the behavior of oversampling and undersampling approach of class imbalance learning by combining class imbalance problem with noise. ICT Based Innovations: Proceedings of CSI 2015; 2018: Springer.

Tomek I. Two modifications of CNN. 1976.

Van Hulse J, Khoshgoftaar TM, Napolitano A, editors. An empirical comparison of repetitive undersampling techniques. 2009 IEEE international conference on information reuse & integration; 2009: IEEE.

Fushiki T. Estimation of prediction error by using K-fold cross-validation. Stat Comput. 2011;21:137–46.

Goonewardana H. Evaluating Multi-Class Classifiers Jan 3, 2019 [ https://medium.com/apprentice-journal/evaluating-multi-class-classifiers-12b2946e755b.

Jiao Y, Du P. Performance measures in evaluating machine learning based bioinformatics predictors for classifications. Quant Biology. 2016;4:320–30.

Vujović Ž. Classification model evaluation metrics. Int J Adv Comput Sci Appl. 2021;12(6):599–606.

Zhu Q. On the performance of Matthews correlation coefficient (MCC) for imbalanced dataset. Pattern Recognit Lett. 2020;136:71–80.

Cohen I, Huang Y, Chen J, Benesty J, Benesty J, Chen J et al. Pearson correlation coefficient. Noise Reduct Speech Process. 2009:1–4.

Rigatti SJ. Random forest. J Insur Med. 2017;47(1):31–9.

Saito T, Rehmsmeier M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE. 2015;10(3):e0118432.

Liu Y, Wang S, Li Y, Gong Q, Su G, Zhao J. Intraocular foreign bodies: clinical characteristics and prognostic factors influencing visual outcome and globe survival in 373 eyes. Journal of Ophthalmology. 2019;2019.

Kuhn F, Morris R, Witherspoon C, Mester V. The Birmingham eye trauma terminology system (BETT). J Fr Ophtalmol. 2004;27(2):206–10.

Matthews GP, Das A, Brown S. Visual outcome and ocular survival in patients with retinal detachments secondary to openor closed-globe injuries. SLACK Incorporated Thorofare, NJ; 1998. pp. 48–54.

Rahman I, Maino A, Devadason D, Leatherbarrow B. Open globe injuries: factors predictive of poor outcome. Eye. 2006;20(12):1336–41.

Weng Y, Ma J, Zhang L, Jin H-Y, Fang X-Y. A presumed iridocyclitis developed to panophthalmitis caused by a non-metallic intraocular foreign body. Int J Ophthalmol. 2019;12(5):870.

Cruvinel Isaac DL, Ghanem VC, Nascimento MA, Torigoe M, Kara-José N. Prognostic factors in open globe injuries. Ophthalmologica. 2003;217(6):431–5.

Esmaeli B, Elner SG, Schork MA, Elner VM. Visual outcome and ocular survival after penetrating trauma: a clinicopathologic study. Ophthalmology. 1995;102(3):393–400.

Evelyn-Tai LM, Zamri N, Adil H, Wan-Hazabbah WH. Open globe injury in Hospital Universiti Sains Malaysia-A 10-year review. Int J Ophthalmol. 2014;7(3):486.

Acknowledgements

This study is the result of a research project approved by Mashhad University of Medical Sciences. The authors would like to appreciate the cooperation of all the patients in conducting this study.

Funding

The present study has no funding.

Author information

Authors and Affiliations

Contributions

R.M and M.M prepared the manuscript. R.M developed and analyzed all prediction models with python (All code resource is freely available at https://github.com/rahi60/OGIpatientFinalVA.git )S.E. and N.SH. assisted in designing the study and helped in the idea of the study. A. S, M. S, H.GH, S. Z-GH, A. K, M. A, M. A, S.MH, S.SR, and M. AA gathered the dataset. All authors have read and approved the content of the manuscript.

Corresponding author

Ethics declarations

Ethical approval and consent to participate

The Institutional Review Board of Mashhad University of Medical Sciences approved this study (IRB code: IR.MUMS.MEDICAL. REC.1399.060.). The study’s retrospective design ensured the meticulous examination of past cases while safeguarding the confidentiality and anonymity of the patients’ data. We declare that all techniques were developed in accordance with the regulations and rules. Written informed consent was obtained from all participants.

Consent for publication

This paper didn’t include any individual person’s data. Rights and permissions Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

All code resource is freely available at https://github.com/rahi60/OGIpatientFinalVA.git.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Shariati, M.M., Eslami, S., Shoeibi, N. et al. Development, comparison, and internal validation of prediction models to determine the visual prognosis of patients with open globe injuries using machine learning approaches. BMC Med Inform Decis Mak 24, 131 (2024). https://doi.org/10.1186/s12911-024-02520-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12911-024-02520-4