Abstract

Background

Usability is a key factor affecting the acceptance of mobile health applications (mHealth apps) for elderly individuals, but traditional usability evaluation methods may not be suitable for use in this population because of aging barriers. The objectives of this study were to identify, explore, and summarize the current state of the literature on the usability evaluation of mHealth apps for older adults and to incorporate these methods into the appropriate evaluation stage.

Methods

Electronic searches were conducted in 10 databases. Inclusion criteria were articles focused on the usability evaluation of mHealth apps designed for older adults. The included studies were classified according to the mHealth app usability evaluation framework, and the suitability of evaluation methods for use among the elderly was analyzed.

Results

Ninety-six articles met the inclusion criteria. Research activity increased steeply after 2013 (n = 92). Satisfaction (n = 74) and learnability (n = 60) were the most frequently evaluated critical measures, while memorability (n = 13) was the least evaluated. The ratios of satisfaction, learnability, operability, and understandability measures were significantly related to the different stages of evaluation (P < 0.05). The methods used for usability evaluation were questionnaire (n = 68), interview (n = 36), concurrent thinking aloud (n = 25), performance metrics (n = 25), behavioral observation log (n = 14), screen recording (n = 3), eye tracking (n = 1), retrospective thinking aloud (n = 1), and feedback log (n = 1). Thirty-two studies developed their own evaluation tool to assess unique design features for elderly individuals.

Conclusion

In the past five years, the number of studies in the field of usability evaluation of mHealth apps for the elderly has increased rapidly. The mHealth apps are often used as an auxiliary means of self-management to help the elderly manage their wellness and disease. According to the three stages of the mHealth app usability evaluation framework, the critical measures and evaluation methods are inconsistent. Future research should focus on selecting specific critical measures relevant to aging characteristics and adapting usability evaluation methods to elderly individuals by improving traditional tools, introducing automated evaluation tools and optimizing evaluation processes.

Background

Socioeconomic development in most regions worldwide has been accompanied by large reductions in fertility and equally substantial increases in life expectancy, which have led to an increase in both the number and the proportion of older people [1]. The number of adults aged 65 or older worldwide is projected to grow rapidly, rising from 727 million in 2020 to 1.5 billion in 2050 [2]. As individuals age, their intrinsic capacities decline, and the risk of multimorbidity increases, resulting in the need for ongoing monitoring or treatment [3]. However, there is a disconnect between health-care needs and health-care utilization in older people that is caused by high medical costs, the shortage of medical personnel, and the lack of access to health services due to functional constraints [4]. To break down these barriers, internet-based mobile health services have emerged. Mobile health (mHealth) refers to medical and public health services supported by mobile devices, and a software platform on such devices is called a mHealth app; an estimated 325,000 such apps were available in 2017 [5, 6].

In 2019, the adoption rate of smartphones by older adults aged 55–91 years was 40–68% [7]. In this context, mHealth is a promising tool for promoting healthy aging through evidence-based self-management interventions that help older adults maintain functional ability and independence [8]. The effectiveness of mHealth in promoting healthy behavior and managing chronic diseases has been proven [9]. Nevertheless, the acceptance of mHealth tools by the elderly has been limited [10], with 43% of seniors over 70 quitting within the first 14 days [11]. Usability, defined as “the extent to which a system can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” [14, 15], is considered a vital factor influencing the adoption of mHealth by the elderly [12, 13]. Effectiveness, efficiency and satisfaction are the critical measures of usability and thus the key points of evaluation [16]. A usable mHealth app with an age-friendly interface has many benefits for elderly individuals, including enhancing their well-being, increasing accessibility and reducing the risk of harm [17,18,19]. At present, a number of published standards point out that usability evaluation is an indispensable step in the development of mHealth apps and call for combing through the usability evaluation methods used in empirical research [20,21,22].

Several reviews have been conducted to identify usability methods for mHealth apps. Zapata et al. reviewed empirical usability methods for mHealth apps by analyzing 22 studies [23]. Four evaluation methods were identified: questionnaires, interviews, logs and thinking aloud. After four years, the review was updated to include 133 articles [24], suggesting that further research should explore which methods are best suited for the target users according to their physiology and health conditions [24]. Considering the particularities of the disease, Davis et al. provided a review of usability testing of mHealth interventions for HIV [25]. In summary, previous reviews have three limitations. First, usability methods suitable for older adults have not received attention. As the elderly generally face physical, cognitive, and perceptual barriers and have lower overall familiarity with technology [26], the evaluation methods they use may be different from those of other age groups. Inappropriate methods may increase the cognitive load of elderly individuals, leading to inaccurate assessment results. Second, the global mHealth app market size was valued at USD 40.05 billion in 2020, significantly higher than in 2015 [27]. It is very likely that the types of usability evaluation methods employed have been optimized or broadened. Thus, it is necessary to reinvestigate the methods currently being used. Third, user-centered design is a powerful framework for creating easy-to-use and satisfying mHealth apps, which can be divided into three phases: requirements assessment, development, and post release [28, 29]. Choosing the appropriate usability methods at different phases can improve the cost-effectiveness of development. However, clear guidance for method selection has not been provided in the existing reviews. 
Based on previous literature [30,31,32,33], the mHealth app usability evaluation framework (Table 1) was proposed to identify the evaluation timeline and focus of usability, including three stages.

Based on the above analysis, there is a need to focus on the usability evaluation process of mHealth apps for the elderly and to classify the evaluation approaches according to the mHealth app usability evaluation framework. The aims of this study were to (1) identify, explore, and summarize the current state of the literature on the usability evaluation of mHealth apps for older adults and (2) incorporate evaluation methods into the appropriate stages. We performed a scoping review because our aim was to map the literature on usability testing rather than to answer a specific question by looking only for the best available information.

Methods

To complete this scoping review, the framework developed by Arksey and O’Malley was followed [34]. The reporting of this study followed the instructions suggested by the PRISMA extension for scoping reviews (Additional file 1: Multimedia Appendix S1).

Identifying the research question

The following research questions were established to guide this review: (1) What is the current state of the literature that addresses usability evaluation for developing mHealth apps relevant to older adults? (2) What health conditions/diseases are being addressed by the apps that employ usability evaluation? (3) What critical measures of usability are addressed in these studies? (4) What empirical methods and techniques are used to evaluate usability?

Searching for relevant studies

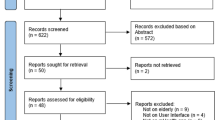

Ten databases (shown in Fig. 1) covering different disciplines, such as medicine, nursing, allied health, and computer and engineering sciences, were searched. The following keyword groups were identified and combined to address the research questions: (1) mobile devices, (2) the software used in the devices, (3) improving health as the main purpose, (4) mobile health, (5) usability as the research topic, and (6) the elderly as the target population. Chinese synonyms were used to maximize inclusion. Keywords and related subject headings were searched using Boolean operators. The search string is shown in Table 2. Finally, the reference lists of the included studies were reviewed to identify additional studies.

Selecting relevant studies to include

The inclusion criteria were smart device-based mHealth studies that (1) focused on mHealth apps, (2) conducted usability evaluations, (3) set the target users of the apps as elderly individuals, and (4) were published from January 2000 until December 2020. Only articles published in 2000 or after were selected to accommodate the release of the first touchscreen phone marketed as a smartphone [23]. The exclusion criteria were as follows: (1) non-English and non-Chinese-language publications, (2) did not specifically describe the process of usability evaluation, (3) unable to obtain full-text versions, and (4) conference abstracts. Two authors (QW and JL) independently screened the titles and abstracts first, followed by a full-text review, and conflicts were resolved through the judgment of a third author (JT) and team discussion.

Charting data from the selected literature

The descriptive analytical method was used in this stage [35]. A data charting form was developed to guide the data extraction. The variables entered included standard bibliographical information (i.e., authors, year of publication, source of publication, country of origin), health condition/disease addressed by the app, critical measures of usability, the process of usability evaluation (methods, environment, duration, number of participants), and reflections on the evaluation methods (researchers’ discussion on evaluation methods). Full articles were imported as pdf files into NVivo software to extract, organize and search related data. Two authors (QW and JL) extracted the data independently, and the discrepancies were resolved by team consultation.

Collating, summarizing and reporting the findings

This stage consisted of three substages: analyzing the data, reporting the results, and applying meaning to the results. For the first substage, a descriptive numerical summary was conducted to depict the characteristics and distribution of the included studies. Abductive approaches to qualitative content analysis, which combine the deductive and inductive phases, were used to analyze the data [36]. In the deductive phase, considering that the purpose of our research was to classify usability evaluation methods based on the development stages of mHealth apps and to recommend adopting a theoretical framework to systematically collate and summarize the extracted data [34], the three stages of the mHealth app usability evaluation framework were used as the theoretical categories (Table 1). The critical measures and evaluation methods of usability were classified into the appropriate theoretical categories, and the frequency of each variable was counted. In the inductive phase, the data extracted from articles and included in the variable “reflections on the evaluation methods” were read several times to summarize the statement of the advantages and disadvantages of the usability evaluation methods. Then, these statements were condensed and abstracted to interpret whether these methods were appropriate for use among elderly individuals. Finally, we identified possible gaps in the current studies and suggested evaluation methods that are suitable for elderly individuals.

Results

Search and screening results

The initial search obtained 1386 articles. After removing duplicates and reviewing the title, abstract and full text, 87 articles were selected. Nine more articles found through the reference list reviews were accepted. Finally, a total of 96 articles were included in this review. The flow diagram of the search procedure is presented in Fig. 1.

Characteristics of source documents

Figure 2 shows the number of articles published per year and the types of journals. The articles were published between 2010 and 2020, with only 4 articles published before 2014 [37,38,39,40], after which the number of publications grew and peaked at 27 articles in 2020. Health informatics journals were the main publication channel, accounting for 42% (n = 40) of the selected articles. Of the 96 studies included (Fig. 3), 41 were from Europe, 30 from America, 21 from Asia, and 4 from Australia. The distribution of articles across the three stages of the mHealth app usability evaluation framework is presented in Fig. 4. It is worth noting that the assessment process of 12 studies involved two stages, and one study investigated user satisfaction in different countries after diagnosing and fixing usability problems in the laboratory and real setting [2]. Slightly less than one-third (n = 29, 30.2%) of the studies reported the iterative design-evaluation process of mHealth applications by involving end users and stakeholders. Additional file 2: Multimedia Appendix S2 provides an overview of the articles included in the scoping review.

Functions of the mHealth application

As shown in Fig. 5, the function of mHealth apps in the selected studies can be divided into four categories: wellness management (n = 39), disease management (n = 36), health-care services (n = 17), and social contact (n = 4). In the wellness management category, mHealth apps were used to improve the general health of older adults rather than focusing on specific diseases, which contained a variety of solutions, including fall prevention [41, 42], fitness [43, 44], lifestyle modification [45, 46], medication adherence [47, 48], health monitoring [40, 49], nutrition [50, 51], and cognitive stimulation [52, 53]. In the category of disease management, mHealth apps played a role in different stages of disease development, such as disease screening during diagnosis [54], decision support during treatment [33], and self-management during rehabilitation [55, 56]. In the health-care services category, mHealth apps have been a useful tool for helping health care providers optimize medical services and empowering users to access their health data during care transitions [38, 57, 58]. The last category of mHealth consisted of those providing social contact. These apps aimed to reduce social isolation and loneliness in older adults by encouraging social participation and strengthening ties with family members [59,60,61,62]. In addition, the target users of the mHealth apps in 78 (81.4%) articles were elderly individuals (aged 50/55/60/65 years or older), while others were aimed mostly at people with chronic diseases and were tested to see whether these apps were suitable for use by older people. The complete range of functions, health conditions and target users can be found in Additional file 2: Multimedia Appendix S2.

Critical measures of usability evaluation for mHealth applications

Following the usability definitions of ISO 9241-11, ISO 25010, and Nielsen, nine critical measures of usability evaluation were extracted from the selected articles: effectiveness, efficiency, satisfaction, learnability, memorability, errors, attractiveness, operability, and understandability [14, 63, 64]. It is worth noting that effectiveness, efficiency, and satisfaction focus on the impact on users when they interact with the system, while the others concern the characteristics of the system and whether they can compensate for the decline of intrinsic capacity in elderly individuals. As shown in Table 3, the two most frequently evaluated measures were satisfaction and learnability, consistent with the dimensions of the System Usability Scale (SUS) [65], which was applied in 40 papers. The aspects of usability considered least often in the reviewed articles were errors and memorability. The assessment ratios of some critical measures were significantly related to the stage of evaluation, indicating that the focus of the evaluation may differ at each stage. The proportions of satisfaction and learnability in stage three were significantly higher than those in the first and second stages (P = 0.018 and P = 0.04, respectively). In contrast, the proportions of operability and understandability in stages one and two were significantly higher than those in stage three (P = 0.02 and P = 0.01, respectively).

Empirical methods of usability evaluation for mHealth applications

Usability evaluation approaches can be classified into two categories: usability inspection and usability testing. Usability inspection is a general name for a set of methods that are all based on having experienced practitioners inspect the system using the predetermined principles with the aim of identifying usability problems [66]. In contrast, usability testing involves observing and recording the objective performance and subjective opinions of the target users when interacting with the product in order to diagnose usability issues or establish benchmarks [67].

Usability inspection methods

Fifteen articles used usability inspection methods to assess mHealth applications, which included two approaches: heuristic evaluation (n = 14) and cognitive walkthrough (n = 2), and one of the articles used both approaches [68].

The heuristic evaluation method requires one or more reviewers to compare the app to a list of principles that must be taken into account in design and to identify where the app does not follow those principles [69]. In the 14 heuristic evaluation articles, the evaluators usually had different research backgrounds, such as human–computer interaction, gerontology, and specific disease areas, so that a multidisciplinary perspective could be obtained [55, 59]. The number of evaluators ranged from 2 to 8, generally following the suggestion by Nielsen that ‘three to five evaluators can identify 85% of the usability problems’ [63]. The heuristics can be divided into two types: generic and specific. Six studies used Nielsen’s ten principles, which are the most utilized generic heuristics [33, 40, 63]. However, traditional generic heuristics were not created for small touchscreen devices, which were the main type of app carrier, and did not consider design features that were appropriate for older adults to address their age-related functional decline in terms of perception, cognition, and movement [69]. To ensure that usability issues in these specific domains were not overlooked, the remaining eight studies extended the generic heuristics by adding usability requirements specific to elderly individuals, such as dexterity, navigation, and visual design, and established new heuristic checklists to evaluate the apps targeting older adults [55, 59]. Nevertheless, these tools lacked reliability analysis and expert validation, except for a checklist developed by Silva [70].
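The 85% figure quoted above is usually traced to the problem-discovery model of Nielsen and Landauer. As a rough sketch, assume that each evaluator independently detects any given problem with the same probability $\lambda$. The value $\lambda \approx 0.31$ used below is the average commonly cited from Nielsen's work and is an assumption here, not a value reported by the reviewed studies. Under that assumption, the expected share of problems found by $n$ evaluators is:

```latex
\[
P(n) = 1 - (1 - \lambda)^{n}, \qquad
P(3) = 1 - 0.69^{3} \approx 0.67, \qquad
P(5) = 1 - 0.69^{5} \approx 0.84 .
\]
```

Under these assumptions, the returns from adding evaluators diminish quickly beyond five. In practice $\lambda$ varies with the app and with evaluator expertise, so the curve is indicative only.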

Cognitive walkthrough involves one or more evaluators working through a series of tasks using the apps and describing their thought process while doing so as if they were first-time users [71]. The focus of this method is on understanding the app’s learnability for new users [31]. The evaluators in these two studies were usability practitioners and health-care professionals [68, 72]. Before the assessment, the researchers prepared the user personas and the task lists [68]. During the walkthrough, the evaluators were encouraged to think aloud, and their performance was recorded by usability metrics, such as task duration and completion rate [72].

Usability testing methods

Almost 93% (89/96) of the studies used usability testing to evaluate mobile applications. Test participants were the target users of the apps, and they were all elderly. Some studies (n = 52) investigated the participants’ experience with mobile devices or their level of eHealth literacy to obtain the testing results for experts, intermediates, and novices [41, 47, 73]. The number of participants varied according to the stage and purpose of the evaluation. The average sample sizes of the first two stages were 22.8 (ranging from 2 to 189) and 15.2 (ranging from 3 to 50), respectively, with the purpose of identifying usability problems in the laboratory or real-life environment. Most of the above studies referred to Nielsen’s recommendations, which can come close to the maximum benefit–cost ratio, that is, testing three to five subjects, modifying the application, and then retesting three to five new subjects iteratively until no new major problems are identified [74]. Some studies determined the sample sizes according to the type of study design, including RCTs and qualitative research [75,76,77]. In stage three, usability testing was usually part of a feasibility or pilot study, and the sample size was therefore based on these design types, with an average of 60.1 (ranging from 8 to 450) [54, 78, 79].

During usability testing, the objective performance and subjective opinions of the participants were collected with the corresponding data collection methods. Thirty-four studies presented objective performance data that came from observations of operational behavior, body movements and facial expressions and could be collected by performance metrics, behavioral observation logs, screen recordings, and eye tracking [47, 72, 80, 81]. Eighty-five studies gathered the subjective opinions of the participants, which involved the users’ experience with the app and their design preferences for each part of the interface and could be investigated by means of concurrent thinking aloud, retrospective thinking aloud, questionnaires, interviews, and feedback logs [37, 41, 52, 73, 82]. The details and descriptive statistics of each data collection method are presented in Table 4.
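To make the objective performance metrics mentioned above more concrete, the following minimal sketch summarizes task completion rate, mean time on completed tasks, and errors per attempt from a list of logged attempts. The `TaskAttempt` record, its field names, and the `summarize_performance` helper are hypothetical illustrations and are not taken from any of the reviewed studies.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass(frozen=True)
class TaskAttempt:
    """One participant's attempt at one task (hypothetical log record)."""
    participant: str
    task: str
    completed: bool
    seconds: float
    errors: int


def summarize_performance(attempts: Sequence[TaskAttempt]) -> dict:
    """Return completion rate, mean time on completed tasks, and errors per attempt."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    completed = [a for a in attempts if a.completed]
    # Mean duration is only meaningful for attempts that were finished.
    mean_seconds: Optional[float] = (
        mean(a.seconds for a in completed) if completed else None
    )
    return {
        "completion_rate": len(completed) / len(attempts),
        "mean_seconds_completed": mean_seconds,
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }
```

Reporting time only for completed attempts is one possible design choice; a study could equally report time for all attempts, provided the choice is stated.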

The most frequently used collection method was questionnaires (n = 68). Of the studies, 51 used well-validated usability questionnaires, which were flexible enough to assess a wide range of technology interfaces. Frequently used usability questionnaires were the SUS (n = 40), the NPS (n = 4) and the NASA-TLX (n = 3). However, considering the lack of specificity of the standardized tool, self-designed questionnaires that lacked a reliable psychometric analysis were used in 24 studies to assess the unique features of the apps, including navigation, interface layout, and font size [45, 75, 83]. A combination of these two types of questionnaires was employed in 8 studies [59, 75, 84].
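Because the SUS was the most widely used questionnaire, a short sketch of its standard scoring rule may be helpful. It assumes ten items answered on a 1 to 5 scale, with odd-numbered items worded positively and even-numbered items worded negatively; the function name `sus_score` is only illustrative.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the 0-100 SUS score from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is response - 1.
            total += response - 1
        else:
            # Even items are negatively worded: contribution is 5 - response.
            total += 5 - response
    # The summed contributions (0-40) are rescaled to 0-100.
    return total * 2.5
```

For example, a participant who answers 3 on every item receives a score of 50. The score is not a percentage, so it should be interpreted against published norms rather than as a share of a maximum.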

The intersection of these methods is presented in Fig. 6. Seven studies conducted both usability inspection and usability testing. Thirty studies analyzed the results of testing based on both objective performance and subjective perceptions. Figure 7 demonstrates the distribution of the three types of evaluation methods in each stage of the mHealth app usability evaluation framework. In the three stages, most of the studies captured the subjective opinions during or after the user testing process, which was most prominent in the “routine use” stage (90.5%). The objective performance of the users was also collected at all stages, which accounted for the highest proportion in the “combining components” stage (29.3%). The usability inspection conducted by the experts was applied only in the first stage (16.3%). Table 5 illustrates the statistical description of each evaluation approach in the three stages.

Discussion

Principal findings

This review identified nine critical measures of usability and 11 unique methods of usability evaluation and analyzed their distribution in the mHealth app usability evaluation framework. The results can assist researchers in the field of mHealth for the elderly in identifying the appropriate critical measures and choosing evaluation methods that are suitable for each usability assessment stage in the development life cycle.

Emerging trends in mHealth apps to support wellness and disease management for the elderly

Overall, usability evaluation research on mHealth for the elderly has been on the rise, with a noticeable increase in 2016, and the number of articles published in 2020 was higher than that between 2010 and 2016. However, the growth rate of usability studies is far lower than the increasing number of mHealth apps. The total global mHealth market is predicted to reach nearly USD 100 billion in 2021, which would be a fivefold increase from approximately 21 billion dollars in 2016. In addition, 68% of healthcare organizations in Europe reported that they were targeting elderly people for telehealth solutions. There may be two reasons for this unequal increase. First, researchers may not realize the importance of improving the usability of mHealth apps to help the elderly overcome the digital divide [26]. Second, commercial companies developing mHealth apps are reluctant to expose usability problems to the public because of the risk of losing competitiveness [96]. In terms of app functions, wellness management and disease management have become the main types, which is consistent with recommendations for healthy aging, suggesting prevention strategies according to dynamic changes in the intrinsic abilities of the elderly [97].

Stage one: combining components

Approximately 64% of the studies evaluated the usability of mHealth apps at stage one, which means that most of the digital health technologies for the elderly were still in development and needed to be optimized iteratively in a controlled environment. The critical measures chosen in this phase tended to evaluate the design attributes of the system, such as understandability, operability, and attractiveness. The reason for this choice may derive from the primary purpose of this stage, which focuses on identifying usability problems rather than collecting users’ perceived ease of use or satisfaction [23, 30]. Additionally, usability inspection methods were used only in this stage. Some researchers pointed out that this type of approach should be used in the early stage of development because it is important not to expose a prototype with potential ergonomic quality control and safety problems to a vulnerable user group, such as older adults, until it has been fully inspected by experts [69, 72, 98].

Stage two: integrating the system into the setting

Even if a mHealth app is usable in a laboratory setting, implementation in a real environment may have different results. Therefore, stage two was carried out in realistic situations to evaluate the usability under the influence of uncontrolled environmental variables. Approximately 30% of the studies involved stage two, and eight were conducted on the basis of the optimized results in stage one. In terms of the critical measures, more research focused on the user’s subjective feelings; for example, 83.3% of studies assessed user satisfaction in stage two, compared with only 70.5% in stage one. The operation of the apps by the elderly was also highlighted in this stage. Age-related cognitive changes, including changes in processing speed, executive function, and visuomotor skills, may negatively influence interactions with apps [99]. Recent design guidelines for mobile phones suggested that improving the operability of the interface, for example through a simple navigation structure, could help minimize users’ cognitive load [100]. In terms of evaluation methods, most studies used questionnaires and/or interviews to collect users’ subjective opinions, and only 20% collected objective performance data. This pattern may stem from the function of mHealth apps, most of which require the elderly to use them for a period of time for self-management. It is unrealistic and inconvenient for researchers to observe usage performance over a long period; thus, collecting perceptions after self-exploration is a viable evaluation method.

Stage three: routine use

After the first two stages, researchers used complete mHealth apps to conduct pilot or feasibility studies among the target population, and the usability evaluation was part of these studies [101]. Perceptions of satisfaction and learnability were most often evaluated, probably because almost 60% of the studies at stage three used the SUS, which covers two dimensions: satisfaction and learnability. None of the 96 articles established a usability benchmark for an app. This may be because this type of study requires a large sample size and is usually conducted by commercial companies through market research [102].

Gaps and potential for future research

The use of multiple usability evaluation methods

Several design guidelines state that a usability evaluation should include both inspection and testing methods and that inspection should be carried out before testing [31, 63, 103]. However, only two studies met these recommendations. There are two reasons for using multiple evaluation methods. First, usability inspection avoids a weakness of usability testing, namely that the participants may not represent the pronounced heterogeneity of the target users [55]. Second, the evaluators in usability inspection are experts, so inspection alone cannot provide the views of the elderly, who are the end users of the app [104].

With regard to usability testing, collecting only the subjective experience of users is inadequate for identifying usability problems accurately and comprehensively [105]. However, among the 89 articles involving usability testing, 37% (n = 33) employed one evaluation method, and questionnaires were chosen in 23 of them. Specific reasons for using multiple methods to collect both subjective and objective data may be as follows. First, varying results may be obtained from different evaluation methods. One study by Richard and colleagues administered two questionnaires (ASQ and NASA-TLX) to elderly users to evaluate a fall detection app [72]. The ASQ scores indicated that the users were satisfied with the product, while the NASA-TLX and objective metrics results suggested that the app created a large mental burden for the users. The possible reason for these conflicting results was that the users judged the app to be easy and satisfactory because they completed the task successfully, without considering the difficulties encountered and the time spent [72]. Second, the advantages and disadvantages of each method can complement each other. Observational performance data capture objective behavioral characteristics of users but cannot explain the internal mechanism of such behavior [31]. This disadvantage can be addressed by analyzing user experience and preferences, which identify the cognitive process during interaction with the app [106]. Additionally, subjective opinion data are self-reported and often affected by acquiescence bias, social desirability bias, and recency bias, which leads to the underestimation of results [107]. If objective evaluation methods are also used in the test, these biases may be balanced [108]. However, using multiple evaluation methods may increase the length of testing, ultimately adding to the test burden of the elderly [33]. Thus, researchers should use the appropriate number of evaluation methods to collect subjective and objective data according to the stage of assessment, testing goals, and workload that the participants can accept.

A number of studies have pointed out that, due to the decline in working memory, elderly people frequently forget the operation steps when using mHealth apps, which is also the main reason why they give up using them [109,110,111]. These results all highlight the importance of improving the memorability of apps for elderly individuals. However, in this review, only 13 studies measured memorability, and in all of them it was evaluated subjectively by experts or users. One way to measure memorability objectively is to invite participants to perform a series of tasks after they have become proficient in using the app and then ask them to perform similar tasks after a period of inactivity. The two sets of results can then be compared to determine how memorable the app was [112]. This method may be used infrequently because it is difficult to recruit participants who are willing to return multiple times to participate in an evaluation. Future research should therefore pay attention to memorability when evaluating mHealth apps for the elderly while optimizing the objective evaluation method for this attribute to increase the recruitment rate of participants.
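The comparison of the two sessions described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `TaskResult` structure, the function names, and the two summary indicators (change in task success rate and ratio of mean completion times) are hypothetical choices introduced here, not a procedure prescribed by [112] or used by any of the included studies.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class TaskResult:
    """Outcome of one task attempt (hypothetical structure for illustration)."""
    completed: bool   # whether the participant finished the task
    seconds: float    # time spent on the task


def summarize(results: list[TaskResult]) -> tuple[float, Optional[float]]:
    """Return (success rate, mean time of completed tasks) for one session."""
    if not results:
        raise ValueError("a session needs at least one task result")
    success_rate = mean(1.0 if r.completed else 0.0 for r in results)
    completed_times = [r.seconds for r in results if r.completed]
    mean_time = mean(completed_times) if completed_times else None
    return success_rate, mean_time


def memorability(baseline: list[TaskResult], followup: list[TaskResult]) -> dict:
    """Compare a proficient baseline session with a session after inactivity.

    success_rate_change < 0 and time_ratio > 1 both suggest that
    participants forgot part of what they had learned.
    """
    b_rate, b_time = summarize(baseline)
    f_rate, f_time = summarize(followup)
    time_ratio = (f_time / b_time) if (b_time and f_time) else None
    return {"success_rate_change": f_rate - b_rate, "time_ratio": time_ratio}


if __name__ == "__main__":
    # Invented example data, not results from any study.
    before = [TaskResult(True, 40.0), TaskResult(True, 60.0)]
    after = [TaskResult(True, 60.0), TaskResult(False, 90.0)]
    print(memorability(before, after))
```

In this invented example, the success rate drops by half and completed tasks take 1.2 times as long after the period of inactivity, which would indicate limited memorability. Which indicators and thresholds are appropriate for elderly users remains an open design choice.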

Adapting usability evaluation methods to the elderly

In the context of mHealth apps for elderly individuals, it is necessary to adjust the standardized usability evaluation methods to accommodate the end users’ abilities. Standardized usability evaluation tools, such as Nielsen’s heuristics and the SUS, usually overlook the specific usability issues that arise from the decline in cognition, perception, and mobility among the elderly [98]. Thirty-two articles in this review developed their own assessment tools, of which 8 were heuristic checklists and 24 were questionnaires. However, these tools still need rigorous psychometric analysis [59].

In usability testing with older adults, researchers should choose the appropriate data collection methods according to the participants’ physiological characteristics [33]. For example, the concurrent think-aloud method demands too much attention from elderly participants with cognitive limitations, resulting in reporter bias and task execution failure [83]; thus, one study used the retrospective think-aloud method to enable the participants to explain their behavior after completing the tasks [82]. Automated usability evaluation (AUE) methods are a promising area of usability research and can improve the accuracy and efficiency of the test; they may be suitable for the elderly because the shorter timeline helps prevent participants from losing focus [113, 114]. In this review, 3 papers employed an automatic capture method (screen recording and eye tracking) [72, 81, 115], and one paper used an automatic analysis method (natural language processing) [116]. In some studies, the language of the original scales was modified to match the comprehension of elderly participants and avoid increasing the response burden, for example, by removing a double negative from an item in the SUS or changing “cumbersome” in the SUS to “awkward” [82, 117].

The aim of researchers, designers and developers of mHealth apps should be to conduct a usability evaluation that accommodates aging barriers and possible multimorbidity issues [118]. Based on this consideration, it is necessary to choose the appropriate methods and adjust the evaluation process based on the physical function and cognitive ability of elderly users. In this review, some studies used mHealth apps to support activities of daily living or disease management for older adults with mental illness (dementia, cognitive impairment, schizophrenia, etc.) [117, 119,120,121]. Due to the limitations of the research conditions, participants in these studies were given only a short time to understand and try the apps before testing. However, such an evaluation process may not guarantee that participants fully comprehended the function of the app, given the impact of mental illness on their understanding and learning ability [122]. Meanwhile, patients with mental illness sometimes cannot express their feelings well [26], so relying only on evaluation methods based on subjective opinion reports may affect the accuracy of the results. In view of these two points, for such elderly patients, researchers should formulate appropriate app teaching programs and add objective evaluation methods to the research design.

Deciding the sample size of usability evaluation

Our review found that in the first two stages of the usability evaluation framework, the articles focused on detecting usability problems, and the sample sizes generally followed the suggestions of Nielsen [63]. However, if the products under investigation have many problems available for discovery whose probabilities of occurrence differ markedly from the 0.31 proposed by Nielsen, then there is no guarantee that observing five participants will lead to the discovery of 85% of the problems [96]. Some researchers have suggested using more complex alternative models instead of the simple binomial model to calculate the sample size [123]. However, the feasibility of such models needs to be verified.
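The dependence of the five-participant rule on the assumed probability of occurrence can be illustrated with the simple binomial model, in which the expected proportion of problems found by n participants is 1 − (1 − p)^n. The following Python sketch is illustrative only; the function names and the alternative values of p are assumptions introduced here and are not taken from the included studies.

```python
import math


def proportion_discovered(p: float, n: int) -> float:
    """Expected share of problems found by n participants (simple binomial model).

    p is the average probability that one participant encounters a given problem.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


def participants_needed(p: float, target: float) -> int:
    """Smallest n whose expected discovery proportion reaches the target."""
    if not 0.0 < p < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p and target must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


if __name__ == "__main__":
    # 0.31 is the value attributed to Nielsen; 0.15 and 0.05 are hypothetical.
    for p in (0.31, 0.15, 0.05):
        share = proportion_discovered(p, 5)
        needed = participants_needed(p, 0.85)
        print(f"p={p:.2f}: 5 users find {share:.0%}; {needed} users needed for 85%")
```

With p = 0.31, five participants are expected to uncover about 84% of the problems, close to the 85% usually quoted. With a hypothetical p = 0.15, the same five participants would be expected to find only about 56%, and roughly twelve would be needed to reach 85% under this model.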

Study limitations

This study may have some threats to its validity. (1) Conclusion validity: Relevant research questions may have been overlooked. Because this review focuses mainly on evaluation methods, the results of the usability assessments were not summarized. In future studies, the severity of the usability problems in each study can be classified and rated through the user action framework (UAF) and Nielsen’s severity rating [124]. (2) Construct validity: Although the PICO criteria were used to guide the search strategies, we did not include gray literature or literature in languages other than Chinese and English.

Given the nature of the scoping review, this study did not synthesize evidence to determine the effectiveness of usability evaluation methods. Instead, it captured the diversity of the available literature with its varied objectives, critical measures, populations, and methods. Consequently, this study was primarily exploratory and suggestive of future research directions.

Conclusions

This scoping review provides a descriptive map of the literature on the methods used for usability evaluation of mHealth apps for elderly individuals. With the widespread popularity of mHealth applications for elderly individuals, the number of articles evaluating the usability of these apps has grown rapidly in the past five years. mHealth apps are often used as an auxiliary means of self-management to help the elderly manage their wellness and disease. Because the purpose of evaluation differs at each stage of the mHealth app usability evaluation framework, the critical measures and evaluation methods used tend to differ across stages. Future research should focus on selecting specific critical measures relevant to aging characteristics and adapting usability evaluation methods to elderly individuals by improving traditional tools, introducing automated evaluation tools and optimizing the evaluation process.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- mHealth: Mobile health
- mHealth apps: Mobile health applications
- SUS: System Usability Scale
- USE: Usefulness Satisfaction and Ease of Use Questionnaire
- UEQ: User Experience Questionnaire
- ASQ: After Scenario Questionnaire
- NASA-TLX: National Aeronautics and Space Administration Task Load Index
- NPS: Net Promoter Score
- Health-ITUES: Health Information Technology Usability Evaluation Scale
- QUIS: Questionnaire for User Interaction Satisfaction
- PSSUQ: Post-Study System Usability Questionnaire
- ICF-US: International Classification of Functioning based Usability Scale
- MARS: Mobile Application Rating Scale
- AUE: Automated usability evaluation
- UAF: User action framework

References

Beard JR, Officer A, de Carvalho IA, Sadana R, Pot AM, Michel J, et al. The World report on ageing and health: a policy framework for healthy ageing. The Lancet. 2016;387(10033):2145–54.

United Nations. World population ageing 2020 highlights: Living arrangements of older persons. 2020. https://www.un.org/development/desa/pd/news/world-population-ageing-2020-highlights. Accessed 21 May 2021.

Chhetri J, Xue Q, Chan P. Intrinsic capacity as a determinant of physical resilience in older adults. J Nutr Health Aging. 2021;25(8):1006–11.

Liao Q, Lau W, Mcghee S, Yap M, Sum R, Liang J, et al. Barriers to preventive care utilization among Hong Kong community-dwelling older people and their views on using financial incentives to improve preventive care utilization. Health Expect. 2021;24(4):1242–53.

World Health Organization. mHealth: new horizons for health through mobile technologies. 2011. https://www.who.int/goe/publications/goe_mhealth_web.pdf. Accessed 27 Oct 2021.

Research2Guidance. mHealth App Economics 2017. https://research2guidance.com/wp-content/uploads/2017/10/1-mHealth-Status-And-Trends-Reports.pdf. Accessed 27 Oct 2021.

Pew Research Center. Millennials stand out for their technology use, but older generations also embrace digital life. 2019 Sep 09. https://www.benton.org/headlines/millennials-stand-out-their-technology-use-older-generations-also-embrace-digital-life-0. Accessed 27 Oct 2021.

Siaw-Teng L, Ansari S, Jonnagaddala J, Narasimhan P, Ashraf MM, Harris-Roxas B, et al. Use of mHealth for promoting healthy ageing and supporting delivery of age-friendly care services: a systematic review. Int J Integr Care. 2019;19(Suppl 1):1–2.

Elavsky S, Knapova L, Klocek A, Smahel D. Mobile health interventions for physical activity, sedentary behavior, and sleep in adults aged 50 years and older: a systematic literature review. J Aging Phys Activ. 2019;27(4):565–93.

Peek STM, Luijkx KG, Vrijhoef HJM, Nieboer ME, Aarts S, van der Voort CS, et al. Understanding changes and stability in the long-term use of technologies by seniors who are aging in place: a dynamical framework. BMC Geriatr. 2019;19(1):e236.

Puri A, Kim B, Nguyen O, Stolee P, Tung J, Lee J. User acceptance of wrist-worn activity trackers among community-dwelling older adults: mixed method study. JMIR mHealth uHealth. 2017;5(11):e173.

Palas JU, Sorwar G, Hoque MR, Sivabalan A. Factors influencing the elderly’s adoption of mHealth: an empirical study using extended UTAUT2 model. BMC Med Inform Decis. 2022;22(1):191.

Hoque R, Sorwar G. Understanding factors influencing the adoption of mHealth by the elderly: an extension of the UTAUT model. Int J Med Inform. 2017;101:75–84.

International Organization for Standardization. Ergonomics of human-system interaction-Part 11: Usability: Definitions and concepts. 2018. https://www.iso.org/standard/63500.html. Accessed 28 Oct 2021.

Kongjit C, Nimmolrat A, Khamaksorn A. Mobile health application for Thai women: investigation and model. BMC Med Inform Decis Mak. 2022;22(1):202.

Bevan N, Carter J, Earthy J, Geis T, Harker S. New ISO standards for usability, usability reports and usability measures. 2016. https://doi.org/10.1007/978-3-319-39510-4_25.

Narasimha S, Madathil KC, Agnisarman S, Rogers H, Welch B, Ashok A, et al. Designing telemedicine systems for geriatric patients: a review of the usability studies. Telemed E-Health. 2017;23(6):459–72.

Harte R, Quinlan LR, Glynn L, Rodríguez-Molinero A, Baker PM, Scharf T, et al. Human-centered design study: enhancing the usability of a mobile phone app in an integrated falls risk detection system for use by older adult users. JMIR mHealth uHealth. 2017;5(5):e71.

Ruzic L, Sanford JA. Universal design mobile interface guidelines (UDMIG) for an aging population. In: Marston H, Freeman S, Musselwhite C, editors. Mobile e-Health, human–computer interaction series. Springer; 2017. p. 17–37.

Sadegh SS, Khakshour Saadat P, Sepehri MM, Assadi V. A framework for m-health service development and success evaluation. Int J Med Inform. 2018;112:123–30.

Harte R, Glynn L, Rodríguez-Molinero A, Baker PM, Scharf T, Quinlan LR, et al. A human-centered design methodology to enhance the usability, human factors, and user experience of connected health systems: a three-phase methodology. JMIR Hum Factors. 2017;4(1):e8.

National Institute of Standards and Technology. NIST guide to the processes approach for improving the usability of electronic health records. 2010. https://www.nist.gov/publications/nistir-7741-nist-guide-processes-approach-improving-usability-electronic-health-records. Accessed 28 Oct 2021.

Zapata BC, Fernández-Alemán JL, Idri A, Toval A. Empirical studies on usability of mHealth apps: a systematic literature review. J Med Syst. 2015;39(2):1–19.

Maramba I, Chatterjee A, Newman C. Methods of usability testing in the development of eHealth applications: a scoping review. Int J Med Inform. 2019;126:95–104.

Davis R, Gardner J, Schnall R. A review of usability evaluation methods and their use for testing eHealth HIV interventions. Curr HIV Aids Rep. 2020;17(3):203–18.

Wildenbos GA, Peute L, Jaspers M. Aging barriers influencing mobile health usability for older adults: a literature based framework (MOLD-US). Int J Med Inform. 2018;114:66–75.

Grand View Research. mHealth Apps market size 2021–2028. 2021 Feb. https://www.marketresearch.com/Grand-View-Research-v4060/mHealth-Apps-Size-Share-Trends-14163352/. Accessed 28 Oct 2021.

International Organization for Standardization. Ergonomics of human-system interaction-Part 210: Human-Centred design for interactive systems. 2019. https://www.iso.org/standard/77520.html. Accessed 28 Oct 2021.

Nimmolrat A, Khuwuthyakorn P, Wientong P, Thinnukool O. Pharmaceutical mobile application for visually-impaired people in Thailand: development and implementation. BMC Med Inform Decis. 2021;21(1):217.

Yen P, Bakken S. Review of health information technology usability study methodologies. J Am Med Inform Assn. 2011;19(3):413–22.

International Organization for Standardization. Ergonomics of human-system interaction-usability methods supporting human-centred design. 2002. https://www.iso.org/standard/31176.html. Accessed 28 Oct 2021.

Molina-Recio G, Molina-Luque R, Jiménez-García AM, Ventura-Puertos PE, Hernández-Reyes A, Romero-Saldaña M. Proposal for the user-centered design approach for health apps based on successful experiences: integrative review. JMIR mHealth uHealth. 2020;8(4):e14376.

Cornet VP, Toscos T, Bolchini D, Rohani GR, Ahmed R, Daley C, et al. Untold stories in user-centered design of mobile health: practical challenges and strategies learned from the design and evaluation of an app for older adults with heart failure. JMIR mHealth uHealth. 2020;8(7):e17703.

Levac D, Colquhoun H, O’Brien KK. Scoping studies: advancing the methodology. Implement Sci. 2010;5:69–78.

Anderson JK, Howarth E, Vainre M, Humphrey A, Jones PB, Ford TJ. Advancing methodology for scoping reviews: recommendations arising from a scoping literature review (SLR) to inform transformation of children and adolescent mental health services. BMC Med Res Methodol. 2020;20(1):242.

Graneheim UH, Lindgren B, Lundman B. Methodological challenges in qualitative content analysis: a discussion paper. Nurs Educ Today. 2017;56:29–34.

Siek KA, Ross SE, Khan DU, Haverhals LM, Cali SR, Meyers J. Colorado care tablet: the design of an interoperable personal health application to help older adults with multimorbidity manage their medications. J Biomed Inform. 2010;431(5):S22–6.

Fromme EK, Kenworthy-Heinige T, Hribar M. Developing an easy-to-use tablet computer application for assessing patient-reported outcomes in patients with cancer. Support Care Cancer. 2011;19(6):815–22.

Hamid A, Sym FP. Designing for patient-centred factors in medical adherence technology. In: Proceedings of the 6th international conference on rehabilitation engineering and assistive technology; 2012. Tampines, Singapore.

Vermeulen J, Neyens JC, Spreeuwenberg MD, van Rossum E, Sipers W, Habets H, et al. User-centered development and testing of a monitoring system that provides feedback regarding physical functioning to elderly people. Patient Prefer Adherence. 2013;7:843–54.

Hamm J, Money AG, Atwal A. Enabling older adults to carry out paperless falls-risk self-assessments using guidetomeasure-3D: a mixed methods study. J Biomed Inform. 2019;92:103–35.

Hawley-Hague H, Tacconi C, Mellone S, Martinez E, Ford C, Chiari L, et al. Smartphone apps to support falls rehabilitation exercise: app development and usability and acceptability study. JMIR mHealth uHealth. 2020;8(9):e15460.

Nikitina S, Didino D, Baez M, Casati F. Feasibility of virtual tablet-based group exercise among older adults in Siberia: findings from two pilot trials. JMIR mHealth uHealth. 2018;6(2):e40.

Ehn M, Eriksson LC, Akerberg N, Johansson A. Activity monitors as support for older persons’ physical activity in daily life: qualitative study of the users’ experiences. JMIR mHealth uHealth. 2018;6(2):e34.

Compernolle S, Cardon G, van der Ploeg HP, Van Nassau F, De Bourdeaudhuij I, Jelsma JJ, et al. Engagement, acceptability, usability, and preliminary efficacy of a self-monitoring mobile health intervention to reduce sedentary behavior in Belgian older adults: mixed methods study. JMIR mHealth uHealth. 2020;8(10):e18653.

Quinn CC, Staub S, Barr E, Gruber-Baldini A. Mobile support for older adults and their caregivers: dyad usability study. JMIR Aging. 2019;2(1):e12276.

Guay M, Latulippe K, Auger C, Giroux D, Seguin-Tremblay N, Gauthier J, et al. Self-selection of bathroom-assistive technology: development of an electronic decision support system (Hygiene 2.0). J Med Internet Res. 2020;22(8):e161758.

Chung K, Kim S, Lee E, Park JY. Mobile app use for insomnia self-management in urban community-dwelling older Korean adults: retrospective intervention study. JMIR mHealth uHealth. 2020;8(8):e17755.

Panagopoulos C, Kalatha E, Tsanakas P, Maglogiannis I. Evaluation of a mobile home care platform lessons learned and practical guidelines. In: DeRuyter B, Kameas A, Chatzimisios P, Mavrommati I, editors. Lecture notes in computer science. Springer; 2015. p. 328–43.

Liu YC, Chen CH, Lin YS, Chen HY, Irianti D, Jen TN, et al. Design and usability evaluation of mobile voice-added food reporting for elderly people: randomized controlled trial. JMIR mHealth uHealth. 2020;8(9):e20317.

Ribeiro D, Machado J, Ribeiro J, Vasconcelos M, Vieira EF, de Banos AC. SousChef: mobile meal recommender system for older adults. In: C Rocker, J ODonoghue, M Ziefle, L Maciaszek, W Molloy, editors. Communications in computer and information science. Springer International Publishing; 2017. p. 36–45.

Hill NL, Mogle J, Wion R, Kitt-Lewis E, Hannan J, Dick R, et al. App-based attention training: incorporating older adults’ feedback to facilitate home-based use. Int J Older People Nurs. 2018;13(1):1–10.

Boyd A, Synnott J, Nugent C, Elliott D, Kelly J. Community-based trials of mobile solutions for the detection and management of cognitive decline. Healthc Technol Lett. 2017;4(3):93–6.

Miller DP, Weaver KE, Case LD, Babcock D, Lawler D, Denizard-Thompson N, et al. Usability of a novel mobile health iPad App by vulnerable populations. JMIR mHealth uHealth. 2017;5(4):e434.

Arnhold M, Quade M, Kirch W. Mobile applications for diabetics: a systematic review and expert-based usability evaluation considering the special requirements of diabetes patients age 50 years or older. J Med Internet Res. 2014;16(4):e104.

Bhattacharjya S, Stafford MC, Cavuoto LA, Yang Z, Song C, Subryan H, et al. Harnessing smartphone technology and three dimensional printing to create a mobile rehabilitation system, mRehab: assessment of usability and consistency in measurement. J Neuroeng Rehabil. 2019;16(1):127.

Liu H, Li Y, Zhu L. Optimal design of app user experience for the elderly based on conjoint analysis. Packaging Eng. 2018;39(24):264–70.

Jing C. Design for elderly mobile medical App based on user experience. (Master Thesis) North China University of Technology, Beijing, China, 2018.

Petrovcic A, Rogelj A, Dolnicar V. Smart but not adapted enough: heuristic evaluation of smartphone launchers with an adapted interface and assistive technologies for older adults. Comput Hum Behav. 2018;79:123–36.

Ray P, Li J, Ariani A, Kapadia V. Tablet-based well-being check for the elderly: development and evaluation of usability and acceptability. JMIR Hum Factors. 2017;4(2):e12.

Jansen-Kosterink S, Varenbrink P, Naafs A. The GezelschApp: a dutch mobile application to reduce social isolation and loneliness. In: The 4th international conference on information and communication technologies for ageing well and e-Health; 2018; Funchal, Madeira.

Jansen-Kosterink SM, Bergsma J, Francissen A, Naafs A. The first evaluation of a mobile application to encourage social participation for community-dwelling older adults. Heal Technol. 2020;10(5):1107–13.

Nielsen J. Usability engineering. Cambridge: AP Professional; 1994.

International Organization for Standardization. Systems and software engineering-Systems and software Quality Requirements and Evaluation (SQuaRE)-System and software quality models. 2011. https://www.iso.org/standard/35733.html. Accessed 28 Oct 2021.

Bangor A, Kortum PT, Miller JT. An empirical evaluation of the system usability scale. Int J Hum Comput Int. 2008;24(6):574–94.

Nielsen J. Usability inspection methods. Conference companion on human factors in computing systems. Massachusetts: Association for Computing Machinery; 1994.

Barnum C. Usability testing essentials. Morgan Kaufmann; 2018.

Morey SA, Stuck RE, Chong AW, Barg-Walkow LH, Mitzner TL, Rogers WA. Mobile health apps: improving usability for older adult users. Ergon Des. 2019;27(4):4–13.

Salman HM, Ahmad WFW, Sulaiman S. Usability evaluation of the smartphone user interface in supporting elderly users from experts’ perspective. IEEE Access. 2018;6:22578–91.

Silva PA, Holden K, Nii A. Smartphones, smart seniors, but not-so-smart apps: a heuristic evaluation of fitness apps. In: Foundations of augmented cognition. Springer; 2014. p. 347–358.

Beauchemin M, Gradilla M, Baik D, Cho H, Schnall R. A multi-step usability evaluation of a self-management app to support medication adherence in persons living with HIV. Int J Med Inform. 2019;122:37–44.

Harte R, Quinlan LR, Glynn L, Rodriguez-Molinero A, Baker PM, Scharf T, et al. Human-centered design study: enhancing the usability of a mobile phone app in an integrated falls risk detection system for use by older adult users. JMIR mHealth uHealth. 2017;5(5):e71.

Reading TM, Grossman LV, Baik D, Lee CS, Maurer MS, Goyal P, et al. Older adults can successfully monitor symptoms using an inclusively designed mobile application. J Am Geriatr Soc. 2020;68(6):1313–8.

Nielsen J. Why you only need to test with 5 users. 18 Mar 2000. https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/. Accessed 28 Oct 2021.

Lunardini F, Luperto M, Romeo M, Basilico N, Daniele K, Azzolino D, et al. Supervised digital neuropsychological tests for cognitive decline in older adults: usability and clinical validity study. JMIR mHealth uHealth. 2020;8(9):e17963.

Liu YC, Chen CH, Tsou YC, Lin YS, Chen HY, Yeh JY, et al. Evaluating mobile health apps for customized dietary recording for young adults and seniors: randomized controlled trial. JMIR mHealth uHealth. 2019;7(2):e10931.

Grindrod KA, Li M, Gates A. Evaluating user perceptions of mobile medication management applications with older adults: a usability study. JMIR mHealth uHealth. 2014;2(1):e11.

de Batlle J, Massip M, Vargiu E, Nadal N, Fuentes A, Ortega BM, et al. Implementing mobile health-enabled integrated care for complex chronic patients: patients and professionals’ acceptability study. JMIR mHealth uHealth. 2020;8(11):e22136.

Guo Y, Chen Y, Lane DA, Liu L, Wang Y, Lip G. Mobile health technology for atrial fibrillation management integrating decision support, education, and patient involvement: mAF app trial. Am J Med. 2017;130(12):1388–96.

Alvarez EA, Garrido M, Ponce DP, Pizarro G, Cordova AA, Vera F, et al. A software to prevent delirium in hospitalised older adults: development and feasibility assessment. Age Ageing. 2020;49(2):239–45.

Jankowski J, Saganowski S, Brodka P. Evaluation of TRANSFoRm mobile eHealth solution for remote patient monitoring during clinical trials. Mobile Information Systems. 2016;22(7):e18598.

Hamm J, Money A, Atwal A. Fall prevention self-assessments via mobile 3D visualization technologies: community dwelling older adults’ perceptions of opportunities and challenges. JMIR Hum Factors. 2017;4(2):e15.

Wildenbos GA, Jaspers M, Schijven MP, Dusseljee-Peute LW. Mobile health for older adult patients: using an aging barriers framework to classify usability problems. Int J Med Inform. 2019;124:68–77.

Zmily A, Mowafi Y, Mashal E. Study of the usability of spaced retrieval exercise using mobile devices for Alzheimer’s disease rehabilitation. JMIR mHealth uHealth. 2014;2(3):e31.

Isakovic M, Sedlar U, Volk M, Bester J. Usability pitfalls of diabetes mHealth apps for the elderly. J Diabetes Res. 2016;2016:1–9.

Brooke J. SUS-A quick and dirty usability scale. 1996. https://digital.ahrq.gov/sites/default/files/docs/survey/systemusabilityscale%2528sus%2529_comp%255B1%255D.pdf. Accessed 28 Oct 2021.

Gao M, Kortum P, Oswald F. Psychometric evaluation of the USE (usefulness, satisfaction, and ease of use) questionnaire for reliability and validity. Proc Hum Factors Ergon Soc Annu Meet. 2018;62(1):1414–8.

Schrepp M. User Experience Questionnaire Handbook. 2019. https://www.ueq-online.org/Material/Handbook.pdf. Accessed 28 Oct 2021.

Lewis JR. Psychometric evaluation of an after-scenario questionnaire for computer usability studies: the ASQ. ACM SIGCHI Bull. 1991;23(1):78–81.

Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv Psychol. 1988;52:139–83.

Schnall R, Cho H, Liu J. Health information technology usability evaluation scale (Health-ITUES) for usability assessment of mobile health technology: validation study. JMIR mHealth uHealth. 2018;6(1):e4.

The University of Maryland. QUIS: The questionnaire for user interaction satisfaction. 9 Mar 1999. https://www.umventures.org/technologies/quis%E2%84%A2-questionnaire-user-interaction-satisfaction-0. Accessed 7 Jan 2022.

Lewis JR. Psychometric evaluation of the post-study system usability questionnaire: the PSSUQ. Proc Hum Factors Soc Ann Meet. 1992;36(16):1259–60.

Teixeira A, Ferreira F, Almeida N, Silva S, Rosa AF, Pereira JC, et al. Design and development of Medication Assistant: older adults centered design to go beyond simple medication reminders. Univ Access Inf. 2017;16(3):545–60.

Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, Mani M. Mobile app rating scale: a new tool for assessing the quality of health mobile apps. JMIR mHealth uHealth. 2015;3(1):e27.

Lewis JR. Usability: lessons learned and yet to be learned. Int J Hum Comput Int. 2014;30(9):663–84.

World Health Organization. World report on ageing and health. 2015. https://www.who.int/publications/i/item/9789241565042.

Hermawati S, Lawson G. Establishing usability heuristics for heuristics evaluation in a specific domain: is there a consensus? Appl Ergon. 2016;56:34–51.

Li C, Neugroschl J, Zhu CW, Aloysi A, Schimming CA, Cai D, et al. Design considerations for mobile health applications targeting older adults. J Alzheimers Dis. 2020;79(1):1–8.

Ruzic L, Harrington C, Sanford J. Universal design mobile interface guidelines for mobile health and wellness apps for an aging population including people aging with disabilities. Int J Adv Softw. 2017;10(3):372–84.

Wilson K, Bell C, Wilson L, Witteman H. Agile research to complement agile development: a proposal for an mHealth research lifecycle. NPJ Digit Med. 2018;1:46–52.

User Experience Professionals' Association. Usability Benchmark. Dec 2012. http://usabilitybok.org/usability-benchmark. Accessed 28 Oct 2021.

Barnum C. Big U and little u usability. Usability testing essentials. Morgan Kaufmann; 2020. p. 53–81.

Bhattarai P, Newton-John TRO, Phillips JL. Quality and usability of arthritic pain self-management apps for older adults: a systematic review. Pain Med. 2018;19(3):471–84.

Broekhuis M, van Velsen L, Hermens H. Assessing usability of eHealth technology: a comparison of usability benchmarking instruments. Int J Med Inform. 2019;128:24–31.

Alwashmi MF, Hawboldt J, Davis E, Fetters MD. The iterative convergent design for mobile health usability testing: mixed methods approach. JMIR mHealth uHealth. 2019;7(4):e11656.

Cho H, Powell D, Pichon A, Kuhns LM, Garofalo R, Schnall R. Eye-tracking retrospective think-aloud as a novel approach for a usability evaluation. Int J Med Inform. 2019;129:366–73.

Wang J, Antonenko P, Celepkolu M, Jimenez Y, Fieldman E, Fieldman A. Exploring relationships between eye tracking and traditional usability testing data. Int J Hum-Comput Int. 2019;35(6):483–94.

Fox G, Connolly R. Mobile health technology adoption across generations: narrowing the digital divide. Inform Syst J. 2018;28(6):995–1019.

Ahmad NA, Mat Ludin AF, Shahar S, Mohd Noah SA, Mohd TN. Willingness, perceived barriers and motivators in adopting mobile applications for health-related interventions among older adults: a scoping review. BMJ Open. 2022;12(3):e054561.

Pang NQ, Lau J, Fong SY, Wong CY, Tan KK. Telemedicine acceptance among older adult patients with cancer: scoping review. J Med Internet Res. 2022;24(3):e28724.

Harrison R, Flood D, Duce D. Usability of mobile applications: literature review and rationale for a new usability model. J Interact Sci. 2013;1(1):1–16.

Fabo P, Durikovic R. Automated usability measurement of arbitrary desktop application with eye tracking. In: 16th International conference on information visualisation; 2012; Montpellier, France.

Harms P. Automated usability evaluation of virtual reality applications. ACM Trans Comput Hum Int. 2019;26(3):1–36.

Quintana Y, Fahy D, Abdelfattah AM, Henao J, Safran C. The design and methodology of a usability protocol for the management of medications by families for aging older adults. BMC Med Inform Decis Mak. 2019;19(1):181.

Petersen CL, Halter R, Kotz D, Loeb L, Cook S, Pidgeon D, et al. Using natural language processing and sentiment analysis to augment traditional user-centered design: development and usability study. JMIR mHealth uHealth. 2020;8(8):e16862.

Quintana M, Anderberg P, Sanmartin Berglund J, Frogren J, Cano N, Cellek S, et al. Feasibility-usability study of a tablet app adapted specifically for persons with cognitive impairment-SMART4MD (support monitoring and reminder technology for mild dementia). Int J Env Res Pub Health. 2020;17(18):e6816.

Nimmanterdwong Z, Boonviriya S, Tangkijvanich P. Human-centered design of mobile health Apps for older adults: systematic review and narrative synthesis. JMIR mHealth uHealth. 2022;10(1):e29512.

Lai R, Tensil M, Kurz A, Lautenschlager NT, Diehl-Schmid J. Perceived need and acceptability of an app to support activities of daily living in people with cognitive impairment and their carers: pilot survey study. JMIR mHealth uHealth. 2020;8(7):e16928.

Fortuna KL, Lohman MC, Gill LE, Bruce ML, Bartels SJ. Adapting a psychosocial intervention for smartphone delivery to middle-aged and older adults with serious mental illness. Am J Geriatr Psychiatry. 2017;25(8):819–28.

Hackett K, Lehman S, Divers R, Ambrogi M, Gomes L, Tan CC, et al. Remind Me To Remember: a pilot study of a novel smartphone reminder application for older adults with dementia and mild cognitive impairment. Neuropsychol Rehabil. 2020;32(1):22–50.

Jones M, Deruyter F, Morris J. The digital health revolution and people with disabilities: perspective from the United States. Int J Environ Res Public Health. 2020;17(2):381.

Schmettow M. Sample size in usability studies. Commun ACM. 2012;55(4):64–70.

Khajouei R, Peute LWP, Hasman A, Jaspers MWM. Classification and prioritization of usability problems using an augmented classification scheme. J Biomed Inform. 2011;44(6):948–57.

Acknowledgements

We would like to thank Ms. Xuemei Li, Naval Medical University, who assisted with developing the search strategy.

Funding

This work was funded by the Shanghai Science and Technology Committee under Grant [16XD1403200].

Author information

Contributions

QW and LZ formulated and designed the study topic. QW participated in designing, coordinating, and conducting the study and drafting the manuscript. JL participated in the definition of the review plan, monitored all phases of the review, participated in the decision making, and reviewed the manuscript. TJ, XC, and Wei Z contributed to the data analysis and the discussion of the results. HW, Wan Z, and YG contributed to manuscript development and refinement. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. PRISMA-ScR fillable checklist.

Additional file 2. The 96 included articles.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wang, Q., Liu, J., Zhou, L. et al. Usability evaluation of mHealth apps for elderly individuals: a scoping review. BMC Med Inform Decis Mak 22, 317 (2022). https://doi.org/10.1186/s12911-022-02064-5

DOI: https://doi.org/10.1186/s12911-022-02064-5