Abstract

Background

For valid informed consent, it is crucial that patients or research participants fully understand all that their consent entails. Testing and revising informed consent documents with the assistance of their addressees can improve their understandability. In this study we aimed at further developing a method for testing and improving informed consent documents with regard to readability and test-readers’ understanding and reactions.

Methods

We tested, revised, and retested template informed consent documents for biobank research by means of 11 focus group interviews with members from the documents’ target population. For the analysis of focus group excerpts we used qualitative content analysis. Revisions were made based on focus group feedback in an iterative process.

Results

Focus group participants gave substantial feedback on the original and on the revised version of the tested documents. Revisions included adding and clarifying explanations, including an info-box summarizing the main points of the text and an illustrative graphic.

Conclusion

Our results indicate positive effects on the tested and revised informed consent documents in regard to general readability and test-readers’ understanding and reactions. Participatory methods for improving informed consent should be more often applied and further evaluated for both, medical interventions and clinical research. Particular conceptual and methodological challenges need to be addressed in the future.

Similar content being viewed by others

Background

In human subject research as well as in clinical care, informed consent (IC) is considered an ethical and legal requirement, supporting the protection of participants’ and patients’ rights and maintaining public trust [1,2,3]. Although there are several well-known limits to informed consent [4, 5], its general importance remains mainly uncontested. For valid informed consent, it is crucial that prospective research participants understand as well as possible all that their consent entails [6]. However, clearly explaining all relevant information for IC has proven to be a major challenge [7]. Challenges include the complexity and amount of information [8] and the readability and understandability of IC documents [7, 9, 10].

Usually, prospective research participants or patients are presented with written information about the planned medical intervention or the research project – mostly accompanied by verbal information by the responsible health-care professional or researcher. Although the importance of written information for valid IC seems to be uncontested, it has been found weak in truly informing prospective research participants [7, 11]. The weaknesses of written information may be one reason that some authors focus on other additional or even alternative ways to inform study participants [12, 13]. However, in a systematic review of interventions aiming to increase understanding in IC, Nishimura et al. show that enhanced consent forms were amongst the most effective [14]. This indicates that, irrespective of other improvements to the consent process, it is worth the effort to carefully test and improve IC documents according to test-readers’ feedback.

As several literature reviews show, a growing number of mainly quantitative studies, including randomized controlled trials, have been conducted specifically to assess understanding in IC in clinical research-settings [7, 14,15,16,17]. These studies measure, for example, whether participants understand the purpose and the risks of the research explained in the IC documents, whether they actually grasp the meaning of randomisation, or whether they understand that their participation is voluntary and that they have the right to withdraw. These quantitative approaches are particularly suitable for the systematic assessment of research participants’ actual understanding and memory of certain pieces of information. However, they teach us little about how to solve understanding problems in a way that addresses the requirements of the IC documents’ target population.

In the field of health information, decision aids, and patient information leaflets complementary approaches have been developed to both assess and improve understanding of written information: “User testings” have been applied to written information about various topics [18,19,20,21], including patient information documents for clinical trials [22,23,24]. User testings employ semi-structured individual interviews to not only assess test-readers’ understanding, but also to analyse the findability of different pieces of information, reasons for understanding problems, and test-readers’ general feedback on the informational documents. These findings are used to revise and retest the documents in an iterative process until understanding of all main aspects of the tested documents is achieved.

Although most user testings conduct individual interviews, in the evaluation of written health information and decision aids, focus group interviews have also been used [25, 26]. Focus groups enable participants to comment on each other’s statements, to clear up misunderstandings amongst themselves, and to discuss complex and divisive issues. This allows the assessment of the relative relevance of different feedback – e.g. when participants put their own feedback into perspective after comments by other participants – and the identification of contrasting views, as well as the underlying rationales – e.g. when participants discuss particular issues amongst themselves and give reasons for their differing opinions. These insights from focus groups can facilitate understanding the nature of test-readers’ opinions towards the tested documents. Hence, their requirements and special needs can be addressed more effectively in the revision process.

Additionally, focus groups have been argued to be a suitable means for assessing test-readers’ general perceptions of a given document, including emotional responses to certain issues [26]. This information can help to further improve the readability of information documents, e.g. by explaining emotionally-charged pieces of information and by avoiding ambiguous formulations.

To our knowledge, there has been no attempt to test and improve documents used in the informed consent process using focus groups. Furthermore, prior studies using focus groups did not aim to assess systematically the opportunities and challenges faced by focus group–informed revision of IC documents. In addition, prior focus group studies did not report how the tested documents were revised or whether they were re-evaluated after revision. In this study we aim at further developing the existing methods for participatory improvement of IC documents with regard to (i) assessing their readability, (ii) evaluating readers’ understanding and reactions, (iii) using test results to revise the IC document, and (iv) re-evaluating the revised IC document. For this purpose, we will first describe in detail the methods we used for testing, revising and retesting exemplary IC documents for biobank research; secondly, we will present data and results from this focus group-study; and thirdly critically reflect our methods and results.

Methods

Tested informed consent documents

In this study we worked with template IC documents addressing broad consent in biobank research. The template was published in 2013 by a working group of the umbrella organisation for German research ethics committees (AKMEK, Arbeitskreis Medizinischer Ethikkommissionen) [27]. Several expert groups (biobank chairs, members of Research Ethics Committees, experts in data protection, bioethics, and law, and industry representatives) were involved in the development of these IC documents, but no patient or public representatives. The template was particularly suitable for our study, as broad consent for biobank research concerns both patients and healthy people. Research biobanks aim to collect and store human biological samples and related data for an indefinite period of time, ultimately to use samples and data for a broad set of research questions. The tested IC documents include written information for prospective biobank donors as well as the actual consent form.

Methods for testing IC documents and assessing test-readers’ reactions

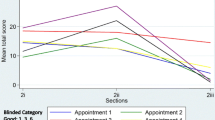

To assess test-readers’ perception of the provided IC documents, we conducted a series of 11 focus groups containing an average of 5–6 residents from an urban region in Germany (Hannover). The first seven focus groups discussed the original IC documents. After analysing audiotapes of these groups and revising the IC documents according to the results, we conducted a second set of four focus groups discussing the revised version of IC documents. Two groups in the second set were conducted with new and two with repeat participants (for an explanation of focus group composition, see below). After analysing audiotapes of this second set of focus groups the documents were again revised accordingly. The course of the project is depicted in Fig. 1. The project was approved by Hannover Medical School’s local research ethics committee (No. 6689–2014). Written informed consent was obtained from all focus group participants.

Recruitment and selection of focus group participants

Focus group participants were recruited through a postal survey of a random sample of 1050 Hannover residents. The tested IC documents addressed the general public instead of a particular group of patients. Hence, a sample of the general public best reflects the documents’ target population. All addressees were invited to volunteer for focus group participation. In addition to the focus group invitation, we dispatched a short questionnaire to survey respondents’ knowledge of and attitudes towards biobanks. Respondents’ sociodemographic data (age, gender and school education) was used to enable diverse composition of focus groups. Survey results on knowledge of and attitudes towards biobanks will be published elsewhere. To gather information for the design of future similar projects, we tested three different incentives for focus group recruitment, each offered to 350 addressees: €30, €60 or a taxi voucher to travel to and from the focus group. Response rates for the three recruitment groups were compared. However, in the end, all actual focus group participants were given the highest compensation, €60.

From the pool of volunteers, the actual focus group participants were selected by stratified random sample. We used sex (male/female), age (lower/higher than median) and school education (elementary/middle/higher education) as stratification variables, to ensure each group had a diverse composition. For just one group, we decided to include only elementary-educated participants, to give this sub-group the opportunity to freely express their criticisms of the tested IC documents.

Conduct and analysis of focus groups

All 11 focus groups (7 discussing the original and 4 discussing the revised version of IC documents) were semi-structured by an interview guide. In the first four groups, participants were given time to read the IC documents just before the focus group started. All other participants received the documents about a week before the focus group, to read at their leisure. The interview guide was pre-tested in the first focus group in June 2015 and slightly revised afterwards. Because there were only minor adjustments to the guide, and to avoid losing the group’s insightful feedback, this group was included in the analysis. The revised interview guide covered seven topics, which are listed in Table 1. In June/July 2015 six more focus groups were conducted with this final version of the interview guide. After this first set of seven focus groups, the IC documents were revised according to test-readers’ feedback for the first time.

In February/March 2016, after the first revision, participants from the first set of seven focus groups were invited to participate in two additional focus groups (of eight and six participants respectively). They were asked to comment on the quality of the revised documents compared to the original versions. In addition, another two focus groups (four participants each) were conducted with new participants who had not seen the original version of IC documents. For this second set of four focus groups (groups 8 to 11) an interview guide (Table 1) with one additional section was used: assessment of certain changes to the original documents. Additionally, the wording of the introduction and of some questions was adjusted to account for the different setting of the second set of focus groups.

All focus groups were facilitated by one of the authors (SB or HK), assisted by a second person (UH, DS or JP), and audio-recorded for analysis. Detailed excerpts were made from the tapes by carefully listening to the whole tapes and paraphrasing all relevant content. Especially relevant statements were transcribed verbatim. For an in-depth analysis of a sub-sample, four tapes (focus groups 1, 2, 5 and 6 from the first set of seven focus groups, each between 00:57 and 1:08 h) were fully transcribed. In addition, participants’ and facilitators’ notes taken during focus groups were analysed. The analysis of excerpts, transcripts, and notes focused on synthesizing participants’ criticisms and suggestions for improving the IC documents’ quality, as well as the systematic identification of understanding difficulties and emotional responses regarding certain topics.

After the first set of seven focus groups, a category scheme was developed by means of qualitative content analysis: we grouped test-readers’ statements into categories according to the subject they dealt with. Further, we combined similar or contrary comments on the same issues into first- and second-order sub-categories. The same category scheme was used to analyse the last four focus groups. Only one additional main category was added, to capture participants’ comments on the changes made to the original IC documents. Based on the results of the analysis, the template IC documents were revised after the first set of seven focus groups, and again slightly adapted after the second set of four focus groups.

Revision of IC documents

For the revision of the original IC documents we used the sub-categories identified in the qualitative content analysis. To ensure transparent and systematic revision, we developed a traffic-light system to mark our changes in the original document (see Table 2). Colour marking was performed to help track our changes and to distinguish at a glance the degree to which changes were directly based on test-readers’ feedback. In addition, we assigned a distinct code to every single piece of feedback, and noted for every change we made to the original document to which feedback it related. Also, we documented how we addressed each piece of feedback. Where we did not address a particular suggestion, we gave reasons for our decisions. The revised IC documents were discussed by all authors after each revision.

Results

Response analysis

In total, 66 of 204 survey respondents volunteered for focus group interviews (see Fig. 1). Of these, 47 participated in the two sets of focus groups: 42 were invited and 39 actually participated in the first set; 22 were invited and participated in the second set (14 participants from the first set and eight new participants). Table 3 shows the characteristics of focus group participants relative to survey respondents as a whole. Focus groups were equally attended by male and female participants; all age-groups as well as all levels of school education were represented. However, individuals with higher education were significantly over-represented amongst survey respondents, as well as amongst focus group participants. There was no significant difference in response rates according to recruitment incentive (see Table 4).

Category scheme for focus group-feedback

The category scheme developed after the first set of seven focus groups consists of eight primary categories: 1) Text length, 2) structure, 3) style/language, 4) understandability/clarity, 5) comprehensiveness, 6) trust in the information provided, 7) emotional reactions, 8) others. The same category scheme was used for the analysis of the second set of four focus groups. Only one additional primary category, “assessment of changes to original text”, was added. Additional file 1: Table S1 (online supplement 1) presents the complete category scheme, including first- and second-order sub-categories. In the following we describe core results from the focus group feedback.

Focus group feedback on the original IC documents

Participants’ comments on the tested IC documents were mixed. While overall they regarded the text to be quite understandable and easy to read, they gave detailed feedback on some aspects they thought needed improvement. These included the length of the text, its structure, style and language (citations 1–3 in Table 5). Participants also mentioned certain pieces of information they found obscure, such as “long-term storage” (citation 4) or “pseudonymisation” (citation 5).

While some feedback addressed more general problems for readability and understandability, participants also made concrete suggestions for the revision of the IC documents, such as rephrasing certain paragraphs (cit. 6) or adding figures and illustrations to the text (cit. 7).

Test-readers also gave feedback on how much they trusted the given information and how they felt about the tested documents in general. Most participants said they trusted the information given, and they felt the documents aimed to give objective and well balanced information (cit. 8, 9). However, some participants indicated that certain terms, e.g. “body-materials” (cit. 10) and topics, e.g. feedback on incidental findings (cit. 11) caused discomfort. Individual participants also expressed general distrust and wished for more supervisory bodies (cit. 12), others found phrases in the tested IC documents caused distrust (cit. 13), and hence should be revised.

Some of the emotional responses and distrust expressed could be addressed by rephrasing the relevant paragraphs in the documents. Other negative reactions seemed to be caused by misunderstandings which could be cleared up by better explaining certain topics (cit. 14, 15).

Several test readers also made additional suggestions for the design of the tested document or for the consent process as a whole – e.g. to use multimedia devices or videos to support written information (cit. 16) or to add a short version with the key aspects at the beginning of the IC documents (cit. 17).

In sum, the results of the first set of focus groups indicated that the original IC documents were performing adequately in terms of completeness and balance of the given information, as well as for understandability. However, some topics were difficult to understand, and participants made suggestions to improve readability by changing the structure and style of the text.

Revision of IC documents and feedback on the revised versions

To address the test-readers’ feedback, we revised the original IC documents, using the traffic-light colour system described in Table 2 to track the changes we made. Changes to the text included:

-

Adding a table of contents;

-

Adding a summary of the most important points to the front page, including the general purpose of the IC documents (e.g. recruiting participants for biobank research), the general purpose of biobanks, and participants’ rights (e.g. not to participate, to withdraw at any time, etc.);

-

Adding text boxes which summarised key points of each main section of the text (e.g. character and purposes of the biobank, data collection and data storage, participants’ rights, etc.);

-

Adding a graphic to illustrate the processes of biobank donation and biobank research (see Fig. 2);

-

Making some points more explicit, and explaining certain pieces of information in more detail (e.g. clarifying the concept of “long-term storage”, explaining the concept of “incidental findings relevant to your health” and the possibility of reporting them to participants);

-

Shortening some over-long sentences;

-

Changing or better explaining technical terms (e.g. “pseudonymisation”, “bio-materials”, “Research Ethics Committee” etc.).

After the first revision, the IC documents were tested in four more focus groups. Feedback from both old and new participants was mostly favourable (cit. 17 in Table 5). Some of the new participants, who had not seen the original documents, specifically praised some of the revisions, without knowing they were new, such as the added information-boxes (cit. 18) and illustration (cit. 19). These elements were also positively rated by repeat participants, who had already read the original version. However, participants in all four focus groups disliked some changes to the original version. In particular, some of the added text boxes were perceived to be unnecessary (cit. 20). Also, to clarify certain topics the revised version contained some more-detailed and therefore longer explanations. In a direct comparison of the original (mostly shorter) to the revised (longer) explanations, the feedback was mixed. Although participants acknowledged that the longer version was easier to understand, they thought it included some too-detailed information (cit. 21); the text was already too long to read for most laypeople and therefore they argued to shorten the explanations (cit. 22).

After analysing all focus groups, we again revised the IC documents. The changes we made in the second revision, however, were mainly marginal or partly reversed prior changes to the original document, including:

-

Shortening previously revised explanations;

-

Rephrasing some over-long sentences;

-

Removing unnecessary info boxes (only the box summarising the key points of the whole text was retained).

The final version of the tested IC documents was discussed until all project members agreed that it best reflected test-readers’ feedback.

Methodological findings and limitations

Aside from evaluating and improving the tested IC documents, we also intended to contribute to the further development methods for participatory improvement of IC documents. Some methodological issues are exemplified and discussed in the following paragraphs:

Recruitment and compensation of focus group participants

The IC documents we tested addressed members of the general public, rather than patients living with particular diseases. We therefore recruited focus group participants from a random sample of the general public. For recruitment of focus group participants we used three different amounts of compensation as incentives (€30, €60, free taxi). As there was no significant difference in response rates for the three groups (Table 4), we assume that the amount of compensation promised to addressees did not influence their willingness to participate in the study. It is widely debated whether research participants in qualitative research should be financially compensated [28, 29]. We decided to treat focus group participants as experts, who are usually compensated for each one-time-consultation. Therefore all participants were provided with EUR 60 for each focus group they participated in.

Response rate and composition of focus groups

Only 6.6% of invited persons volunteered for focus group participation. This causes concerns regarding potential biases. However, we did not aim for statistical representation of the target population, but rather aimed to identify the whole spectrum of different comments on the tested IC documents. For this purpose, we applied a stratified sampling strategy to achieve a diverse composition of each focus group. Overall, our sampling aim of recruiting a diverse sample of participants was fulfilled: except for one group for only elementary-educated participants, participants of all focus groups represented different age groups, levels of education and personal backgrounds.

Number of focus groups

In the first set we conducted seven focus groups. However, after the first three or four focus groups, most feedback did not raise new issues, but merely confirmed feedback from prior groups. We therefore assume that in the first set of focus groups a lower number of groups would have been sufficient to obtain test-readers most important criticism, suggestions and emotional responses. In the second set, four groups – two with former and two with new participants – appeared to be adequate to assess the full spectrum of feedback and to confirm several critical aspects in more than one group.

Discussing revised IC documents with new and repeat focus group participants

Many comments in the second set of focus groups were given by both former and new participant groups. However, this was not perceived as redundant. On the contrary, obtaining consistent feedback from both kinds of participants was helpful to confirm some of the changes to the original documents and point out need for additional revisions. While new participants had a fresh perspective and, hence, were able to give information about whether the documents were understandable to potential future biobank participants; repeat participants were asked to validate and approve the changes made according to their feedback in the first set of focus groups. Combining these two pieces of information allowed to better assess the quality of our revisions and of the revised documents as a whole with regard to readability, understandability as well as test-readers’ perception and emotional responses. Hence, we would recommend inviting both, new and repeat participants to discuss the revised version of the tested documents.

Reading tested documents in advance vs. at the beginning of focus group

Providing the tested IC documents directly before the focus groups enabled us to obtain participants’ spontaneous reactions to the text. This was especially valuable for assessing their emotional responses. However, based on our content analysis, all project members agreed in the impression that feedback by participants who read the IC documents in advance was more nuanced and more detailed. These participants also seemed more confident to give definite feedback, because they had been able to consider their opinions of the issues. In addition, some had made detailed written notes in their version of the documents, which were used as a complementary source for revisions. Hence, both variations – reading documents in advance and directly before focus groups – have proven advantageous in a complementary manner for testing and revising IC documents.

Discussion

Discussion of applied methods

In this study we tested, revised and retested IC documents for use in biobank research by means of focus groups. This discourse-oriented method was chosen to obtain test-readers’ detailed and nuanced feedback and suggestions, as well as to identify possible misconceptions, controversial opinions and emotional reactions to certain topics.

Overall, focus group interviews have proven to be a viable means for the participatory improvement of IC documents. Participants gave valuable feedback for revising the original documents. Like in other “user testings” many comments concerned the application of general rules for clear writing, such as using short sentences, or not using technical terms [e.g. 22, 30]. But participants also gave detailed feedback on certain facts they found hard to understand or about additional information they would like to have for their consent decision. In a recent Delphi procedure, Beskow and colleagues identified a set of key points prospective biobank donors must grasp before being able to give valid consent [31]. This includes: purpose of data and specimen collection, necessary procedures, duration of data and specimen storage, risks, confidentiality protections, benefits and costs, voluntariness, discontinuing participation, whom to contact for questions, Commercialisation of stored material, and handling of new findings with relevance to the subjects’ own health. The points in their list correspond well with the topics about which our test-readers gave most feedback. Hence, their comments addressed essential aspects of the IC documents.

In contrast to individual interviews used by most “user testings” [19,20,21,22,23,24, 30], the discursive focus group setting also allowed for controversial discussion amongst the participants, which helped reveal contrasting opinions and misunderstandings, e.g. a discussion in one focus group revealed that some test-readers presumed that all donated specimens were analysed by the biobank and, hence, they believed that participants could hope for diagnostic benefits. This belief made them indignant about the formulation in the IC document that “only incidental findings with direct relevance to your health” would be communicated. After identifying this misunderstanding, we were able to clarify the formulation in the IC documents. All findings from the focus groups were used to revise the original IC documents in two rounds. Comments on the revised IC documents by participants of the second set of focus groups, as well as by biobank experts, were mainly positive. This indicates that the revised IC documents were an improvement. However, we have not yet measured by means of a randomized controlled study whether certain indicators for understanding have been improved, and if so to what degree. Such RCT methodology has already been used to evaluate IC quality [14] and could also be applied in further research on the effects of the participatory improvement of IC documents.

Practical and conceptual challenges

In the course of our study we encountered a set of practical and conceptual challenges which could inform future similar studies, and also indicate the need for further methodological research on the evaluation and improvement of consent documents [32]. Firstly, how can we better distinguish the different dimensions of “understanding” with regard to health care or research-related texts, including IC documents? Research on informed consent in clinical research has attempted to better distinguish between misconceptions, misestimates and optimism [33]. These conceptual dimensions, however, all deal with testing understanding in the above-mentioned paradigm of objective, survey-based IC research. In our study we often dealt with a complementary but more preliminary dimension of understanding, that is, whether a reader has the subjective impression of grasping the text message. Further conceptual and empirical research is needed to assess the theoretical and practical relevance of integrating subjective and objective dimensions of understanding in evaluating and improving health and research-related texts.

Secondly, as mentioned above, the IC documents we tested were designed for informing members of the general public about participating in biobank research. To gain information about this group’s specific needs and potential problems in understanding the IC documents, we invited members of this group to participate in focus groups. However, other IC documents in clinical research or care aim at informing and recruiting other groups – e.g. patients, their relatives or carers. Hence, for effectively testing and improving these IC documents, members of the respective target population should be included. We believe that the results of our study are applicable to other target populations too. Despite several differences between target populations, most of them do not possess medical expert knowledge and can therefore be perceived as lay people. Learning about lay peoples’ perception of the tested IC documents can help improving their quality in the above described manner. However, further empirical research is needed to confirm this perception and to analyse potential differences between patients, research participants, members of the general public and other relevant groups as sources for improving IC documents.

Thirdly, for effectively improving written information according to test-readers’ requirements, revisions need to actually reflect their feedback. However, our test-readers’ opinions did not always indicate obvious changes in the original document. Sometimes their opinions differed or were based on what we interpreted as prevailing misconceptions. Furthermore, some reasonable requirements were hard to meet simultaneously – like the wish for more detailed explanations and the demand for shortening the respective paragraph. Some solutions for this particular Problem – e.g. using videos or electronic IC documents to present information in a dynamic way (cit. 17 and 18 in Table 5) – have already been suggested by our test-readers’ themselves and by the literature on dynamic consent [34]. However, for a transparent revision of tested documents, systematic solutions for how to address ambiguous or contesting kinds of feedback can be addressed in the revision process. Authors of user tests have named three sources for their changes to the original documents: first, feedback from user testing; second, best practice guidelines in information wording and clear writing; and third, authors’ experiences with writing patient information documents [20, 22,23,24]. These are indubitably important sources for revisions. But, to our knowledge, there are no detailed reports how exactly revisions were made based on test-readers’ feedback, and how authors dealt with the above outlined challenges. Of course, revised documents should comply with best practice guidelines; how can those responsible for the participatory improvement of IC documents demonstrate that revisions also meet test-readers’ feedback in a systematic and unbiased way?

Finally, the methodology we applied in our study is rather complex and costly and hence might not be feasible in many contexts. E.g., in some cases there might not be enough potential participants for a high number of focus groups. Also, conducting and analysing focus groups takes a lot of time which might not be available. To comply with limited resources, one could e.g. reduce the number of focus groups or the number of participants in each focus group. Other user tests have used individual interviews to test, revise and retest written information [e.g. 20, 22, 23, 24]. This might also make the process less costly and more feasible. However, additional conceptual and empirical research is needed to systematically compare different methods for testing and revising written information and to analyse the respective advantages and disadvantages of each method.

Conclusion

Although in our study we used IC documents intended to inform prospective biobank donors, we believe our findings are applicable for the participatory improvement of IC documents for other research settings as well as for medical interventions, too. Our results indicate that focus groups are a suitable complement to other methods for participatory text improvement – like the more formal user tests using individual interviews [e.g. 20, 22, 23, 24]. In all settings of participatory text improvement, however, further conceptual and empirical research is needed to identify and address the full set of challenges in assessing and revising texts based on test-readers’ feedback. The clarification of these challenges can contribute to a consolidated conceptual model for the development and improvement of IC documents and other kinds of written information.

Abbreviations

- AKMEK:

-

Organisation for German research ethics committees (“Arbeitskreis Medizinischer Ethikkommissionen”)

- Cit. :

-

Citation from focus groups

- IC:

-

Informed consent

References

Grady C. Enduring and emerging challenges of informed consent. New Engl J Med. 2015;372:855–62.

Capron AM: Legal and regulatory standards of informed consent in research. In: The Oxford Textbook of Clinical Reasearch Ethics. edn. Edited by Emanuel EJ, Grady C, Crouch RA, Lie RK, Miller FG, Wendler D. Oxford: Oxford University Press; 2008: 613–632.

OECD: Guidelines on human biobanks and genetic research database: organisation for economic co-operation and development (OECD); 2009.

O'Neill O. Some limits of informed consent. J Med Ethics. 2003;29(1):4–7.

Corrigan O. Empty ethics: the problem with informed consent. Sociology of Health and Illness. 2003;25(3):768–92.

Sand K, Kaasa S, Loge JH. The understanding of informed consent information—definitions and measurements in empirical studies. AJOB Prim Res. 2010;1(2):4–24.

Flory JH, Wendler D, Emanuel EJ: Empirical issues in informed consent for research. In: The Oxford Textbook of Clinical Reasearch Ethics. edn. Edited by Emanuel EJ, Grady C, Crouch RA, Lie RK, Miller FG, Wendler D. Oxford: Oxford University Press; 2008: 645–660.

Hallinan ZP, Forrest A, Uhlenbrauck G, Young S, Jr MKR. Barriers to change in the informed consent process: a systematic literature review. IRB. 2016;38(3):1–10.

Sand K, Eik-Nes NL, Loge JH. Readability of informed consent documents (1987-2007) for clinical trials: a linguistic analysis. J Empir Res Hum Res Ethics. 2012;7(4):67–78.

Wen G, Liu X, Huang L, Shu J, Xu N, Chen R, Huang Z, Yang G, Wang X, Xiang Y, et al. Readability and content assessment of informed consent forms for phase II-IV clinical trials in China. PLoS One. 2016;11(10):e0164251.

Davis TC, Holcombe RF, Berkel HJ, Sumona P, Divers SG. Informed consent for clinical trials: a comparative study of standard versus simplified forms. J Natl Cancer Inst. 1998;90(9):668–74.

Simon CM, Klein DW, Schartz HA. Interactive multimedia consent for biobanking: a randomized trial. Genet Med. 2016;18(1):57–64.

Henry J, Palmer BW, Palinkas L, Glorioso DK, Caligiuri MP, Jeste DV. Reformed consent: adapting to new media and research participant preferences. IRB. 2009;31(2):1–8.

Nishimura A, Carey J, Erwin PJ, Tilburt JC, Murad MH, McCormick JB. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC Med Ethics. 2013;14(28)

Agre P, Campbell FA, Goldman BD, Boccia ML, Kass N, McCullough LB, Merz JF, Miller SM, Mintz J, Rapkin B et al: Improving informed consent: the medium is not the message IRB 2003, Suppl 25(5):S11-S19.

Flory JH, Emanuel EJ. Interventions to improve research participants' understanding in informed consent for research. JAMA. 2004;292(13):1593–601.

Paris A, Nogueira da Gama Chaves D, Cornu C, Maison P, Salvat-Melis M, Ribuot C, Brandt C, Bosson JL, Hommel M, Cracowski JL. Improvement of the comprehension of written information given to healthy volunteers in biomedical research: a single-blind randomized controlled study. Fundam Clin Pharmacol. 2007;21(2):207–14.

Knapp P, Wanklyn P, Raynor DK, Waxman R. Developing and testing a patient information booklet for thrombolysis used in acute stroke. Int J Pharm Pract. 2010;18(6):362–9.

Brooke RE, Herbert NC, Isherwood S, Knapp P, Raynor DK. Balance appointment information leaflets: employing performance-based user-testing to improve understanding. Int J Audiol. 2013;52(3):162–8.

Raynor DK. User testing in developing patient medication information in Europe. Res Social Adm Pharm. 2013;9(5):640–5.

Fearns N, Graham K, Johnston G, Service D. Improving the user experience of patient versions of clinical guidelines: user testing of a Scottish intercollegiate guideline network (SIGN) patient version. BMC Health Serv Res. 2016;16:37.

Knapp P, Raynor DK, Silcock J, Parkinson B. Performance-based readability testing of participant information for a phase 3 IVF trial. Trials. 2009;10:79.

Knapp P, Raynor DK, Silcock J, Parkinson B. Performance-based readability testing of patient materials for a phase I trial: TNG1412. J Med Ethics. 2009;35(9):573–8.

Knapp P, Raynor DK, Silcock J, Parkinson B. Can user testing of a clinical trial patient information sheet make it fit-for-purpose?--a randomized controlled trial. BMC Med. 2011;9:89.

Zschorlich B, Knelangen M, Bastian H. The development of health information with the involvement of consumers at the German Institute for Quality and Efficiency in health care (IQWiG). Gesundheitswesen. 2011;73(7):423–9.

Hirschberg I, Seidel G, Strech D, Bastian H, Dierks M-L. Evidence-based health information from the users' perspective - a qualitative analysis. BMC Health Serv Res. 2013;13(405)

Strech D, Bein S, Brumhard M, Eisenmenger W, Glinicke C, Herbst T, Jahns R, von Kielmansegg S, Schmidt G, Taupitz J, et al. A template for broad consent in biobank research. Results and explanation of an evidence and consensus-based development process. Eur J Med Genet. 2016;59(6–7):295–309.

Head E. The ethics and implications of paying participants in qualitative research. Int J Soc Res Methodol. 2009;12(4):335–44.

Grady C. Money for research participation: does it jeopardize informed consent? Am J Bioeth. 2001;1(2):40–4.

Raynor DK, Knapp P, Silcock J, Parkinson B, Feeney K. "User-testing" as a method for testing the fitness-for-purpose of written medicine information. Patient Educ Couns. 2011;83(3):404–10.

Beskow LM, Dombeck CB, Thompson CP, Watson-Ormond JK, Weinfurt KP. Informed consent for biobanking: consensus-based guidelines for adequate comprehension. Genet Med. 2015;17(3):226–33.

Bossert S, Strech D. An integrated conceptual framework for evaluating and improving ‘understanding’ in informed consent. Trials. 2017;18(1):482.

Horng S, Grady C. Misunderstanding in clinical research: distinguishing therapeutic misconception, therapeutic misestimation, and therapeutic optimism. IRB. 2003;25(1):11–6.

Budin-Ljøsne I, Teare HJA, Kaye J, Beck S, Bentzen HB, Caenazzo L, Collett C, D’Abramo F, Felzmann H, Finlay T, et al. Dynamic consent: a potential solution to some of the challenges of modern biomedical research. BMC Medical Ethics. 2017;18(1):4.

Acknowledgements

The authors thank Jonas Lander, Carsten Markowsky, and Pia-Sophie Salvers for their help in developing and pre-testing materials for focus group recruitment, and their assistance with data entry and data handling, as well as their assistance with the organisation of focus group interviews. Thanks to Reuben Thomas for proof-reading and language-editing the manuscript.

Funding

This project was partly funded by intramural funds of Hannover Medical School and by the German Research Foundation (DFG) via the grant "Cluster of Excellence 62, REBIRTH – From Regenerative Biology to Reconstructive Therapy" (http://gepris.dfg.de/gepris/projekt/24102914). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Availability of data and materials

Data sharing is not applicable to this article as no (quantitative) datasets were generated or analysed during the current study. The interview-guide and the Coding scheme used for analysis of qualitative data are available as supporting information.

Author information

Authors and Affiliations

Contributions

DS, SB, and HK conceived and designed the project and the used methods. Focus group interviews were conducted mainly by SB, HK, and UH with support from JP and DS. Data extraction and analysis were conducted by SB, UH, HK, and DS. All authors contributed to data interpretation and repeatedly discussed revisions of the tested IC documents. SB drafted the article. DS and HK, substantially contributed to several versions of the manuscript. All authors approved the final version of the article.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The project was approved by Hannover Medical School’s local research ethics committee (No. 6689–2014). Written informed consent was obtained from all study participants.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1: Table S1.

Category scheme for test-readers’ feedback on IC documents. Table S1 contains the detailed category scheme developed for content analysis of focus groups. (DOCX 17 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Bossert, S., Kahrass, H., Heinemeyer, U. et al. Participatory improvement of a template for informed consent documents in biobank research - study results and methodological reflections. BMC Med Ethics 18, 78 (2017). https://doi.org/10.1186/s12910-017-0232-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12910-017-0232-7