Abstract

Background

Mistletoe extracts are used as an adjunct therapy for cancer patients, but there is dissent as to whether this therapy has a positive impact on quality of life (QoL).

Methods

We conducted a systematic review searching in several databases (Medline, Embase, CENTRAL, CINAHL, PsycInfo, Science Citation Index, clinicaltrials.gov, opengrey.org) by combining terms that cover the fields of “neoplasm”, “quality of life” and “mistletoe”. We included prospective controlled trials that compared mistletoe extracts with a control in cancer patients and reported QoL or related dimensions. The quality of the studies was assessed with the Cochrane Risk of Bias tool version 2. We conducted a quantitative meta-analysis.

Results

We included 26 publications with 30 data sets. The studies were heterogeneous. The pooled standardized mean difference (random effects model) for global QoL after treatment with mistletoe extracts vs. control was d = 0.61 (95% CI 0.41–0.81, p < 0.00001). The effect was stronger for younger patients, with longer treatment, in studies with lower risk of bias, and in randomized and blinded studies. Sensitivity analyses support the validity of the finding. 50% of the QoL subdomains (e.g. pain, nausea) show a significant improvement after mistletoe treatment. Most studies have a high risk of bias or at least raise some concern.

Conclusion

Mistletoe extracts produce a significant, medium-sized effect on QoL in cancer. Risk of bias in the analyzed studies is likely due to the specific type of treatment, which is difficult to blind; yet this risk is unlikely to affect the outcome.

PROSPERO registration

CRD42019137704

Background

Cancer is a major public health concern [1], with quality of life (QoL) as a fundamental variable of the patients’ well-being [2, 3]. The European white-berry mistletoe (Viscum album L.), an evergreen plant that grows as a semi-parasite on trees, has a long tradition in the treatment of cancer patients, particularly in continental Europe [4].

Viscum album extract (VAE) is applied subcutaneously, normally two to three times per week, whereas the total treatment duration varies from a few weeks to five years or more. Different products are available, such as ABNOBAViscum, Helixor, Iscador, or Lektinol.

Mistletoe contains biologically active molecules including lectins, flavonoids, viscotoxins, oligo- and polysaccharides, alkaloids, membrane lipids and other substances [5]. Although the exact pharmacological mode of action of mistletoe is not completely elucidated, there is a growing number of biological studies with a clear focus on lectins. Lectins (from the Latin legere, “to select”) are carbohydrate-binding proteins displayed on cell surfaces to convey the interaction of cells with their environment [6]. Lectins mediate many immunological activities. For example, they show an immunomodulatory effect on neutrophils and macrophages by increasing the natural killer cytotoxicity and the number of activated lymphocytes [7,8,9]. They induce apoptosis in human lymphocytes [10] and boost the antioxidant system in mice [11]. In healthy subjects, the subcutaneous application of mistletoe has stimulated the production of granulocyte-macrophage colony-stimulating factor (GM-CSF), Interleukin 5 and Interferon gamma [12], indicating the immunopotentiating properties of mistletoe. The multiple ways in which mistletoe affects the immune system have recently been reviewed elsewhere [13]. Consequently, the immunological pathways of conventional oncological treatments may be influenced by VAE, affecting cancer cells and decreasing adverse effects. This may result in a better quality of life.

A number of reviews have been published over the last two decades that address the effects of VAE on QoL in cancer patients [14,15,16,17,18,19,20]. However, these reviews are out of date, do not make use of all published evidence, and/or do not combine the data quantitatively into a pooled effect size.

The aim of this study is therefore to review and analyze the current evidence regarding QoL of cancer patients who were treated with VAE and to conduct a meta-analysis.

Methods

The study has been reported in accordance with PRISMA. The protocol was submitted to PROSPERO (registration number: CRD42019137704).

Sources of evidence

We searched the databases Medline, Embase, PsycInfo, CENTRAL, CINAHL, Web of Science, and clinicaltrials.gov. In addition, we used Google Scholar, hand-searched the reference lists of reviews and identified studies, and screened for grey literature via Google and opengrey.org. In case of missing data we contacted the authors.

Search strategy

We developed a search strategy by iteratively combining synonyms and/or subterms of “quality of life” (e.g. well-being, QoL), “cancer” (e.g. neoplasm, sarcom, lymphom) and “mistletoe” (e.g. Helixor, Eurixor) to identify an adequate set of terms. We applied the following search strategy for Medline (Pubmed) and adapted it to the other databases accordingly:

1. quality of life OR HRQoL OR HRQL OR QOL OR patient satisfaction OR well-being OR wellbeing
2. mistel OR mistletoe OR Iscador OR Iscar OR Helixor OR Iscucin OR Abnobaviscum OR Eurixor OR Plenosol OR Lektinol OR Vysorel OR Isorel OR Cefalektin OR Viscum
3. Krebs OR cancer OR neoplasm/ OR tumor OR oncolog* OR onkologie OR carcin* OR malignant OR metastasis
4. Humans [MESH]
5. 1 AND 2 AND 3 AND 4

With the exception of #4 the general search fields were applied.
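The way the five steps combine can be illustrated with a small script. This is only a sketch: the term lists are copied from the strategy above, whereas the helper names `or_block` and `build_query` and the use of parentheses around each OR block are assumptions for illustration, not the syntax the authors submitted to any database.

```python
# Term lists copied from steps 1-3 of the search strategy above.
QOL_TERMS = [
    "quality of life", "HRQoL", "HRQL", "QOL",
    "patient satisfaction", "well-being", "wellbeing",
]
MISTLETOE_TERMS = [
    "mistel", "mistletoe", "Iscador", "Iscar", "Helixor", "Iscucin",
    "Abnobaviscum", "Eurixor", "Plenosol", "Lektinol", "Vysorel",
    "Isorel", "Cefalektin", "Viscum",
]
CANCER_TERMS = [
    "Krebs", "cancer", "neoplasm/", "tumor", "oncolog*", "onkologie",
    "carcin*", "malignant", "metastasis",
]
HUMANS_FILTER = "Humans [MESH]"  # step 4, written as in the text


def or_block(terms):
    """Join synonyms with OR and wrap them in parentheses (hypothetical helper)."""
    return "(" + " OR ".join(terms) + ")"


def build_query():
    """Combine the four steps with AND, mirroring step 5 (1 AND 2 AND 3 AND 4)."""
    blocks = [
        or_block(QOL_TERMS),
        or_block(MISTLETOE_TERMS),
        or_block(CANCER_TERMS),
        HUMANS_FILTER,
    ]
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(build_query())
```

Keeping the term lists separate from the combination logic makes it easier to adapt the same strategy to databases with a different field syntax.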

Selection criteria

We included studies that measured QoL or self-regulation of cancer patients treated with mistletoe extracts assessed by performance status scales or patient-reported instruments. Studies were chosen if they were

- prospective controlled studies with
- two or more arms,
- both interventional and non-interventional.

The search was not limited to languages.

Studies were excluded if

- they did not meet the aforementioned inclusion criteria,
- if they tested multi-component complementary medicine interventions,
- if they failed to report sufficient information to be included into the meta-analysis or
- where this information cannot be gleaned from authors or extracted from graphs.

Data management

The data were extracted from each study and entered into a spreadsheet by two authors independently. The two spreadsheets were then compared, and discrepancies were resolved by discussion until consensus was reached. We coded the following characteristics:

- number of participants in each treatment arm
- year when the study was conducted; where this was not given, we assumed a 3-year lag from the publication date for the meta-regression
- duration of study
- country where the study was conducted
- cancer type
- age
- gender of patients
- diagnosis according to ICD-10
- type of study (interventional vs. non-interventional, randomized vs. non-randomized, blinded vs. not blinded, single vs. multi-center)
- additional therapy (e.g. chemotherapy)
- number of drop-outs in each study arm
- active mistletoe extract preparation (e.g. Eurixor, Iscador, etc.)
- control treatment (e.g. placebo)
- effect size of primary outcomes plus standard deviation, or confidence intervals for effect measure provided using the reported global measure of QoL
- instrument used to measure primary outcomes
- statistics according to intent-to-treat analysis (yes/no)
- sponsoring of study (corporate, public, no sponsoring).

If the numerical data provided in a publication were insufficient to calculate effect sizes, we contacted the authors. Where authors provided additional data, these were used instead of the published data. For older studies this was impossible. In those cases we used the given information (for instance means and confidence intervals, or means and p-values, or other statistical information) to generate the necessary data. In some cases we had to use medians as means and recover standard errors of the means from the given confidence intervals, which also required converting the confidence intervals into symmetrical ones. In each case we used the more conservative option, which yielded larger standard errors and hence larger standard deviations. Thus, we generally erred on the conservative side. When no quantitative information was given and only graphs were presented, we printed high-resolution graphs, derived the mean values and standard errors with a ruler, and used the given statistical information to arrive at the necessary quantitative scores. All these procedures were conducted independently and in duplicate [21].
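One way such a recovery can work is sketched here. The sketch assumes a 95% confidence interval based on the normal distribution (a t-based interval would need a different quantile) and treats an asymmetric interval conservatively by taking its wider half. The function names and the example numbers are hypothetical; the text does not specify the exact formulas that were used.

```python
import math

# Normal quantile for a two-sided 95% interval (assumption: normal, not t-based).
Z_95 = 1.959964


def se_from_ci(mean, lower, upper, z=Z_95):
    """Standard error from a confidence interval.

    For an asymmetric interval the wider half-width is used, which gives
    the larger (more conservative) standard error.
    """
    half_width = max(mean - lower, upper - mean)
    return half_width / z


def sd_from_se(se, n):
    """Standard deviation of the observations from the standard error of the mean."""
    return se * math.sqrt(n)


if __name__ == "__main__":
    # Hypothetical study arm: mean 60, 95% CI 54 to 68, 25 patients.
    se = se_from_ci(60.0, 54.0, 68.0)
    print(round(se, 3), round(sd_from_se(se, 25), 3))
```

Choosing the wider half-width inflates the standard deviation, which shrinks the standardized effect and lowers the weight of the study, so any error falls on the conservative side.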

Risk of bias (quality) assessment

The Cochrane Risk of Bias tool 2 (RoB 2) was used to assess the risk of bias in randomized controlled trials [22]. All studies were assigned to the intention-to-treat-effect-analysis. Non-randomized or non-interventional studies were additionally analyzed with the Newcastle-Ottawa Scale [23]. Two reviewers (HW, ML) independently assessed the risk of bias. Discrepancies were resolved by consensus.

Statistical analysis

The data were analyzed using Comprehensive Meta-Analysis V. 2 and RevMan 5.3.5; the summary measure was the standardized mean difference. The meta-analysis was calculated independently by both authors using the two software tools. The results were compared and underlying discrepancies resolved by discussion until both analyses yielded the same numerical results up to the second decimal. We report the overall analysis according to the results yielded by the RevMan analysis and conducted sensitivity analyses with Comprehensive Meta-Analysis.
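For readers unfamiliar with the summary measure, the following sketch computes a standardized mean difference for two independent groups. It uses the textbook formulas for Cohen's d with a pooled standard deviation and the Hedges small-sample correction; whether the two software packages apply exactly these variance formulas is an assumption, and the function name is hypothetical.

```python
import math


def standardized_mean_difference(m1, sd1, n1, m2, sd2, n2):
    """Return Cohen's d, Hedges' g and the variance of g for two independent groups."""
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two participants")
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    if pooled_sd == 0:
        raise ValueError("pooled standard deviation is zero")
    d = (m1 - m2) / pooled_sd
    # Large-sample variance of d.
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    # Small-sample correction factor that turns d into Hedges' g.
    j = 1 - 3 / (4 * df - 1)
    return {"d": d, "g": j * d, "var_g": j ** 2 * var_d}


if __name__ == "__main__":
    # Hypothetical arms: treatment mean 10 (SD 2, n 20), control mean 8 (SD 2, n 20).
    print(standardized_mean_difference(10, 2, 20, 8, 2, 20))
```

Because the difference is divided by the pooled standard deviation, results from different QoL instruments become comparable on a common scale.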

The heterogeneity between studies was assessed by Cochran's Q test and quantified by the index of heterogeneity (I²) [24]. I² values of 25, 50 and 75% indicate low, medium and high heterogeneity, respectively. If heterogeneity was higher than 25%, we applied a random effects model for pooling the data; otherwise a fixed effects model was used. As heterogeneity was high for the overall data set, a random effects model was indicated. Fixed effect models were used only sparingly, in exploratory subgroup or sensitivity analyses when heterogeneity was low.
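The decision rule in this paragraph can be sketched as follows. The sketch assumes inverse-variance weights and the DerSimonian-Laird estimator for the between-study variance, which is one common choice for random effects models; the text does not name the estimator, and the function name and example values are hypothetical.

```python
import math


def pool_effects(effects, variances, i2_threshold=25.0):
    """Inverse-variance pooling with Cochran's Q, I2 and a DerSimonian-Laird tau^2."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # DerSimonian-Laird between-study variance (assumed estimator).
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Rule from the text: random effects if I2 exceeds 25%, else fixed effects.
    model = "random" if i2 > i2_threshold else "fixed"
    if model == "random":
        w = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    se = math.sqrt(1.0 / sum(w))
    return {"model": model, "pooled": pooled, "se": se,
            "q": q, "i2": i2, "tau2": tau2}


if __name__ == "__main__":
    # Two hypothetical studies with clearly different effects.
    print(pool_effects([0.2, 0.8], [0.04, 0.04]))
```

Under the random effects model every study variance is increased by tau², so the weights become more equal and the confidence interval of the pooled estimate widens.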

We conducted subgroup analyses in order to identify possible sources of the heterogeneity. Stratified analyses were performed by: study types (e.g. blinded vs. not blinded, randomized versus non-randomized, types of control), additional treatments, country, risk-of-bias status, type of sponsoring, QoL instruments and related dimensions (in particular self-regulation), and mistletoe compound. Type of cancer was not included, as there were too many different cancer types. We conducted meta-regressions and regressed effect size on each of the three continuous predictors: year of study, age of patients and length of treatment. We checked for publication bias using Egger’s regression intercept method and Duval and Tweedie’s trim and fill method [25].
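A meta-regression with a single continuous predictor amounts to a weighted least squares fit. The sketch below assumes weights of 1 / (within-study variance + tau²) with tau² supplied by the caller, and it is not taken from the software the authors used. The function name and the example data are hypothetical.

```python
import math


def meta_regression(predictor, effects, variances, tau2=0.0):
    """Weighted least squares of effect size on one predictor.

    Weights are 1 / (variance + tau2); with tau2 = 0 this is a fixed
    effect meta-regression. Returns intercept, slope and slope standard error.
    """
    if not (len(predictor) == len(effects) == len(variances)):
        raise ValueError("inputs must have equal length")
    w = [1.0 / (v + tau2) for v in variances]
    sum_w = sum(w)
    mean_x = sum(wi * xi for wi, xi in zip(w, predictor)) / sum_w
    mean_y = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - mean_x) ** 2 for wi, xi in zip(w, predictor))
    if sxx == 0:
        raise ValueError("predictor must vary between studies")
    sxy = sum(wi * (xi - mean_x) * (yi - mean_y)
              for wi, xi, yi in zip(w, predictor, effects))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # With known variances the slope variance is 1 / sum(w * (x - mean_x)^2).
    se_slope = math.sqrt(1.0 / sxx)
    return {"intercept": intercept, "slope": slope, "se_slope": se_slope}


if __name__ == "__main__":
    # Hypothetical data: treatment length in weeks and matching effect sizes.
    weeks = [5, 10, 20, 52]
    d = [0.2 + 0.04 * x for x in weeks]
    print(meta_regression(weeks, d, [0.05] * 4))
```

The slope is read as the change in standardized effect size per unit of the predictor, for example per additional year or per additional week of treatment.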

Results

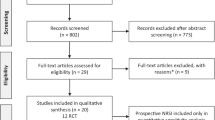

598 studies were identified by electronic and hand searches, after removing duplicates. 67 full texts were retrieved of which 26 publications with 30 separate data sets met the inclusion criteria (see Fig. 1) [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50]. We contacted 14 authors for additional information which was granted by five [26, 30, 40, 49, 51].

90% of the studies were conducted in Europe including Russia, 50% in Germany, and 10% in Asia. Three trials were blinded, four studies or datasets were not randomized. Different mistletoe preparations with varying conventional treatments were compared to conventional treatment (alone in 22 cases or plus an additional comparator in eight cases, respectively) for multiple types and stages of cancer. In nine studies QoL was measured with EORTC-QLQ-C30, six studies assessed self-regulation, and the others used one or multiple other instruments. The study characteristics are displayed in Table 1.

The results of the overall meta-analysis are presented in Fig. 2.

As can be seen, the studies are highly heterogeneous (I² = 84%); hence the random effects model was applied, which estimates the combined standardized mean difference as d = 0.61 (95% CI 0.41–0.81, p < 0.00001, z = 6.05).
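The reported figures can be checked for internal consistency. The sketch below assumes a symmetric 95% interval based on the normal distribution and recomputes the standard error, z value and two-sided p-value from the rounded estimate and interval limits. It yields a z value close to 6, and the small gap to the reported 6.05 is plausibly due to rounding of the published numbers. The function name is hypothetical.

```python
import math

# Normal quantile for a two-sided 95% interval (assumption).
Z_95 = 1.959964


def z_and_p_from_ci(estimate, lower, upper, z_crit=Z_95):
    """Recover standard error, z and two-sided p from an estimate and its CI."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return se, z, p


if __name__ == "__main__":
    se, z, p = z_and_p_from_ci(0.61, 0.41, 0.81)
    print(f"se={se:.3f} z={z:.2f} p={p:.2e}")
```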

The meta-analyses of the sub-dimensions of QoL are shown in Table 2. The SMDs of seven out of 14 QoL dimensions are significant (p ≤ 0.05). The pooled SMDs of role and social functioning are 0.63 (95% CI 0.05–1.22) and 0.62 (95% CI 0.22–1.03), respectively. For pain, the SMD is −0.86 (95% CI −1.54 to −0.18), and for nausea it is −0.55 (95% CI −1 to −0.1).

The risk of bias assessment is displayed in Figs. 3 and 4. Overall, 65% of the studies had a high risk of bias, which for most studies resulted from the measurement of the outcome, a domain rated as high risk in 85% of cases. This can be attributed to the missing blinding process, the QoL assessment as patient-reported outcome, and the uncertain appropriateness of some measurement instruments, which may capture the concept of QoL only incompletely.

The five non-randomized trials [31, 34,35,36, 42] were additionally assessed with the Newcastle-Ottawa Scale. All studies had an overall score of 7 out of a maximum of 9. The sums in the selection, the comparability and the outcome/exposure domains were 3, 2 and 2, respectively, for all studies.

The sensitivity analyses are presented in Table 3.

The sensitivity analyses confirmed the robustness of the results. Neither the methodological nor the other moderator variables showed strong deviations. With the exception of the non-randomized studies (d = 0.38, p = 0.1), the lung cancer studies (d = −0.18, p = 0.15), the studies conducted with Lektinol (d = 0.67, p = 0.1), and the studies using an index measure (e.g. Karnofsky index) as outcome (d = 0.33, p = 0.1), all other moderator analyses showed no appreciable differences between subgroups and yielded highly significant effect sizes. As a tendency, methodologically more rigorous studies yielded effect sizes that were higher than or equal to those of less rigorous ones. Most notably, randomized studies yielded a higher effect size (d = 0.70, p = 0.001) than non-randomized ones (d = 0.38, p = 0.1). Studies using active controls (d = 0.6, p = 0.004) did not differ from studies using other controls (d = 0.65, p < 0.001). Various types of additional treatment did not show differential effect sizes, except individualized best care, which, however, is an estimate based on only one study and hence not reliable. Although the effect sizes of the various products vary, their confidence intervals overlap, which suggests that they are roughly equally effective. There is no significant difference in effect sizes depending on countries, type of sponsoring, or type of measures. Studies that relied on corporate sponsoring and studies using only a single index measure yielded a somewhat smaller effect size, although the confidence intervals overlap and thus signal non-significant differences.

The three meta-regressions are presented in Table 4 and in Figs. 5 and 6.

The study year is positively correlated with effect size. For each year the study was more recent the estimated QoL-effect size is larger by d = 0.03. Although there is a tendency for a larger effect size in younger patients this effect is not significant. The slope of the regression line for the duration of treatment is borderline significant, indicating that longer treatment produces effects that are 0.04 standard deviations larger per additional treatment week. Note though that only treatments between 5 and 52 weeks have entered the analysis and the variance is not large.

Publication bias was estimated using two methods. Egger’s regression intercept model regresses effect size on precision of study with the assumption that smaller studies that are less precise will more often go unpublished. A regression line with a lot of smaller and more imprecise studies missing should thus miss the origin by a large margin. In our analysis the intercept of the regression is 0.82 with a non-significant deviation from the origin (t = 0.65, p-value two tailed = 0.5). Duval and Tweedie’s trim and fill method is an extension of the graphical funnel plot analysis and analyzes how many studies would have to be trimmed to generate a perfectly symmetrical funnel plot. In our analysis this method estimates no studies to be trimmed on the left side, i.e. on the negative or low side of the effect size estimate and an estimate of 7 trimmed studies on the right side, i.e. on the positive side of the effect size funnel with an adjustment that leads to a higher effect size, if the studies are trimmed. These two analyses of publication bias show that publication bias is not a likely explanation of this result and any funnel asymmetries are not due to unpublished studies but due to positive outliers.
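The logic of the regression intercept can be illustrated with a short sketch. It follows the usual formulation of Egger's test, in which the standardized effect (effect divided by its standard error) is regressed on precision (one divided by the standard error) and the intercept is examined. It is not the implementation in Comprehensive Meta-Analysis, and the function name and example data are hypothetical.

```python
import math


def egger_regression(effects, std_errors):
    """Ordinary least squares of standardized effect on precision.

    Returns the intercept, its standard error, the t statistic
    (n - 2 degrees of freedom) and the slope.
    """
    n = len(effects)
    if n != len(std_errors) or n < 3:
        raise ValueError("need at least three studies with matching standard errors")
    x = [1.0 / se for se in std_errors]                    # precision
    y = [e / se for e, se in zip(effects, std_errors)]     # standardized effect
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must differ between studies")
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x
    resid_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = resid_ss / (n - 2)
    se_intercept = math.sqrt(s2 * (1.0 / n + mean_x ** 2 / sxx))
    t = intercept / se_intercept if se_intercept > 0 else float("nan")
    return {"intercept": intercept, "se_intercept": se_intercept,
            "t": t, "slope": slope}


if __name__ == "__main__":
    # Hypothetical pattern: smaller (less precise) studies report larger effects.
    print(egger_regression([0.2, 0.4, 0.8, 1.2], [0.1, 0.2, 0.3, 0.4]))
```

When all studies estimate the same effect regardless of size, the fitted line passes through the origin and the slope equals that common effect; an intercept far from zero signals funnel plot asymmetry.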

Discussion

This meta-analysis shows a significant and robust medium-sized effect of d = 0.61 of Viscum album extract (VAE) treatment on QoL in cancer patients.

The results should be regarded in the light of the following facts:

The included studies vary with regard to the cancer site, the control intervention, the additional oncological treatment, and the VAE. While sensitivity analyses confirmed the robustness and reliability of the findings, they could not account for the heterogeneity of the effect sizes. Neither methodological moderators (blinded vs. unblinded studies, randomized versus non-randomized, studies with high versus low risk of bias, active versus non-active control) nor structural moderators (type of outcome measure, funding, VAE product used, additional treatment) could clarify the heterogeneity. We suspect that this is due to multiple interactions between cancer types and stages, treatments and structural variables that cannot be explored with a limited set of 30 studies. Nevertheless, our sensitivity analyses document the overall robustness of the effect, as none of the levels of moderators exhibits significant deviations from other levels or from the overall effect size. This gives our effect size estimate of d = 0.61 reliability.

Although the overall risk of bias is high in many studies, one should bear in mind two aspects. First, we applied the intention-to-treat algorithm of RoB 2 as the more conservative approach and not the per-protocol evaluation, which might have resulted in a more favorable overall risk-of-bias rating. Second, due to the local skin reaction of VAE application, the blinding of participants and carers is practically impossible and could only have been implemented reliably with an active placebo, which is ethically questionable. In RoB 2 this leads to a high risk of bias in the measurement of the outcome. On the one hand, the lack of blinding might have biased the results, since most QoL measures are self-reported and there may be strong beliefs among users of anthroposophic medicine, which might additionally be fortified by the severity of the disease and the hope that an additional treatment has a positive impact [53]. It was shown that these attitudes are correlated with a better QoL [54]. On the other hand, there is no evidence from the included studies that the attitudes differed between treatment arms, and if patients in the control group searched for and used surrogate medications for the VAE, this bias would favor the comparator. Furthermore, our sensitivity analysis gives no indication that studies with blinding and without blinding estimate different effect sizes, and there is also no difference in effect sizes between studies from Germany, where mistletoe is well known, and other countries where mistletoe is less known and used. Finally, the results of the Newcastle-Ottawa Scale indicate a good methodological quality for the non-randomized trials that were included in the review.

Another limitation is that self-regulation, the Karnofsky performance index, or the ECOG scale cover important aspects of QoL, but are different in content from other measures such as the global QoL of the EORTC-QLQ C30 scale. This source of heterogeneity was also addressed by our sensitivity analyses. This showed that, indeed, as one would expect, single item indices estimate lower effect sizes, although the difference is not significant. In the same vein, the inclusion of non-randomized and non-interventional trials might have biased the results due to their lower internal validity, but their exclusion during sensitivity analyses again did not alter the significance of the pooled outcome. In addition, four of the five non-RCTs had a matched-pair design which increases the comparability between treatment arms compared to other types of group allocation.

The meta-regression shows that more recent studies have higher effect sizes compared to older studies. This is counterintuitive at first sight, as more recent studies are normally implemented with more methodological rigor due to the GCP guidelines and a higher methodological skill of trialists. This, one would think, should, if anything, lead to smaller effect sizes in more recent trials. The fact that this is not the case shows, together with our sensitivity analysis, that methodological bias is an unlikely explanation for the effect size found. However, another point is worth bearing in mind: earlier studies were very often implemented with severely ill patients with tumor status IV or in palliative care. Only in more recent studies was VAE also used as add-on treatment in first-line patients with a relatively good chance of surviving. Thus the higher effect size for more recent studies might also reflect the less severe status of these patients.

Our review has a number of strengths. First, we conducted a comprehensive search for published and grey literature with no time or language limitation to minimize publication bias. Our analysis of publication bias supports the conclusion that the effect size estimate is not due to publication bias. Some authors whom we contacted, however, failed to provide additional information and the respective studies were consequently excluded. Second, we calculated a pooled SMD for a global measure of QoL and for its subdomains such as pain or fatigue. Third, we analyzed the data both with RevMan 5.3 and CMA software which implements the Hunter-Schmidt-corrections for small sample bias. We did both analyses in parallel and independently, thus preventing coding or typing errors from biasing our results.

The weaknesses of this review are obvious. Any meta-analysis can only be as good as the original studies entered. Some of these studies are large and methodologically strong. But some are also badly reported, small and with a mixed patient load. In some cases we had to recalibrate confidence interval estimates, because the data given were not detailed enough. Although it would have been desirable, the variance between cancer types and stages was too large to allow for detailed assessments and separate analyses, which might have reduced the heterogeneity. Although we can testify to the robustness of the overall effect size estimate, we have not succeeded in clarifying the heterogeneity of the studies. This requires multi-center studies in large cohorts of patients with large budgets. Thus, one consequence of this meta-analysis would be to call for more serious efforts from public funders to study the effects of VAEs in large and homogeneous patient cohorts to confirm or disconfirm the results of this analysis.

Clinical relevance

Our results indicate a statistically significant and clinically valuable improvement of the subjective well-being of patients with different types of cancer after treatment with VAE. The analyses for the subdomains revealed a significant pooled SMD for important symptoms and functioning indices, whereas others show a positive, yet not significant effect of VAE compared to control. Whether vital elements of QoL such as emotional functioning or fatigue are influenced remains statistically uncertain. Overall, a robust estimate of an improvement of d = 0.61 in quality of life represents a medium-sized [55] and clinically relevant [56, 57] effect that makes VAE treatment a viable add-on option to any anticancer treatment.

Conclusion

Our analysis provides evidence that global QoL in cancer patients is positively influenced by VAE. Because the risk of bias and the heterogeneity are high, future research needs to better assess the actual impact. Large studies in homogeneous patient populations are required to address these problems.

Availability of data and materials

The database on which this study is based is available on request from the authors.

References

Mattiuzzi C, Lippi G. Current cancer epidemiology. J Epidemiol Global Health. 2019;9(4):217–22.

Goerling U, Stickel A. Quality of life in oncology. Recent Results Cancer Res. 2014;197:137–52.

Jitender S, Mahajan R, Rathore V, Choudhary R. Quality of life of cancer patients. J Exp Ther Oncol. 2018;12(3):217–21.

Bussing A. Mistletoe: the genus Viscum. Amsterdam: Harwood Academic; 2000.

Pfüller U. Chemical Constituents of European Mistletoe (Viscum album L.) Isolation and Characterisation of the Main Relevant Ingredients: Lectins, Viscotoxins, Oligo−/polysaccharides, Flavonoides, Alkaloids. Mistletoe: CRC Press; 2003. p. 117–38.

Berg JM, Tymoczko JL, Stryer L; with Gatto GJ Jr. Biochemistry. New York: WH Freeman; 2012.

Hoessli DC, Ahmad I. Mistletoe lectins: carbohydrate-specific apoptosis inducers and immunomodulators. Curr Org Chem. 2008;12(11):918–25.

Hajto T, Hostanska K, Gabius H-J. Modulatory potency of the β-galactoside-specific lectin from mistletoe extract (Iscador) on the host defense system in vivo in rabbits and patients. Cancer Res. 1989;49(17):4803–8.

Hülsen H, Born U. Einfluss von Mistelpräparaten auf die in-vitro-Aktivität der natürlichen Killerzellen von Krebspatienten (Teil 2). Therapeutikon. 1993;7(10):434–9.

Büssing A, Suzart K, Bergmann J, Pfüller U, Schietzel M, Schweizer K. Induction of apoptosis in human lymphocytes treated with Viscum album L. is mediated by the mistletoe lectins. Cancer Lett. 1996;99(1):59–72.

Greń A, Formicki G, Massanyi P, Szaroma W, Lukáč N, Kapusta E. Use of Iscador, an extract of mistletoe (Viscum album L.) in treatment. J Microbiol Biotechnol Food Sci. 2019;2019:19–20.

Huber R, Rostock M, Goedl R, Ludtke R, Urech K, Buck S, et al. Mistletoe treatment induces GM-CSF-and IL-5 production by PBMC and increases blood granulocyte-and eosinophil counts: a placebo controlled randomized study in healthy subjects. Eur J Med Res. 2005;10(10):411.

Oei SL, Thronicke A, Schad F. Mistletoe and immunomodulation: insights and implications for anticancer therapies. Evid Based Complement Alternat Med. 2019;2019:5893017. https://doi.org/10.1155/2019/5893017.

Büssing A, Raak C, Ostermann T. Quality of life and related dimensions in cancer patients treated with mistletoe extract (iscador): a meta-analysis. Evid Based Complement Alternat Med. 2012;2012.

Ernst E, Schmidt K, Steuer-Vogt MK. Mistletoe for cancer? A systematic review of randomised clinical trials. Int J Cancer. 2003;107(2):262–7.

Freuding M, Keinki C, Kutschan S, Micke O, Buentzel J, Huebner J. Mistletoe in oncological treatment: a systematic review: part 2: quality of life and toxicity of cancer treatment. J Cancer Res Clin Oncol. 2019b.

Freuding M, Keinki C, Micke O, Buentzel J, Huebner J. Mistletoe in oncological treatment: a systematic review. J Cancer Res Clin Oncol. 2019a;145(3):695–707.

Horneber M, Bueschel G, Huber R, Linde K, Rostock M. Mistletoe therapy in oncology. Cochrane Database Syst Rev. 2008;(2).

Kienle GS, Kiene H. Influence of Viscum album L (European mistletoe) extracts on quality of life in cancer patients: a systematic review of controlled clinical studies. Integr Cancer Ther. 2010;9(2):142–57.

Melzer J, Iten F, Hostanska K, Saller R. Efficacy and safety of mistletoe preparations (Viscum album) for patients with cancer diseases. Complement Med Res. 2009;16(4):217–26.

Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 6. The Cochrane Collaboration; 2019.

Cochrane. RoB 2: A revised Cochrane risk-of-bias tool for randomized trials. 2019. Available from: https://methods.cochrane.org/bias/resources/rob-2-revised-cochrane-risk-bias-tool-randomized-trials.

Wells G. The Newcastle-Ottawa Scale (NOS) for assessing the quality of non randomised studies in meta-analyses. 2001. http://www.ohri.ca/programs/clinical_epidemiology/oxfordasp. Accessed 11 Aug 2019.

Higgins JP, Thompson SG. Quantifying heterogeneity in a meta-analysis. Stat Med. 2002;21(11):1539–58.

Sterne JA, Egger M. Regression methods to detect publication and other bias in meta-analysis. Publication bias in meta-analysis: Prevention, assessment and adjustments; 2005. p. 99–110.

Bar-Sela G, Wollner M, Hammer L, Agbarya A, Dudnik E, Haim N. Mistletoe as complementary treatment in patients with advanced non-small-cell lung cancer treated with carboplatin-based combinations: a randomised phase II study. Eur J Cancer. 2013;49(5):1058–64.

Borrelli E. Evaluation of the quality of life in breast cancer patients undergoing lectin standardized mistletoe therapy. Minerva Medica. 2001;92(Suppl 1 Nr 3):105–7.

Dold U, LE, Mäurer HC, Müller-Wening D, Sakellariou B, Trendelenburg F, Wagner G. Krebszusatztherapie beim fortgeschrittenen nicht-kleinzelligen Bronchialkarzinom. Stuttgart-New York: Georg Thieme Verlag; 1991.

Enesel MB, Acalovschi I, Grosu V, Sbarcea A, Rusu C, Dobre A, et al. Perioperative application of the Viscum album extract Isorel in digestive tract cancer patients. Anticancer Res. 2005;25(6C):4583–90.

Grah C. Misteltherapie bei nichtkleinzelligem Bronchialkarzinom. Berlin: Fu-Berlin; 2010.

Grossarth-Maticek R, Ziegler R. Prospective controlled cohort studies on long-term therapy of breast cancer patients with a mistletoe preparation (Iscador®). Complement Med Res. 2006a;13(5):285–92.

Grossarth-Maticek R, Ziegler R. Randomised and non-randomised prospective controlled cohort studies in matched-pair design for the long-term therapy of breast cancer patients with a mistletoe preparation (Iscador): a re-analysis. Eur J Med Res. 2006b;11(11):485.

Grossarth-Maticek R, Ziegler R. Efficacy and safety of the long-term treatment of melanoma with a mistletoe preparation (Iscador). Schweizerische Zeitschrift für Ganzheitsmedizin. 2007c;19(6):325.

Grossarth-Maticek R, Ziegler R. Prospective controlled cohort studies on long-term therapy of ovarian cancer patients with mistletoe (Viscum album L.) extracts Iscador. Arzneimittelforschung. 2007b;57(10):665–78.

Grossarth-Maticek R, Ziegler R. Prospective controlled cohort studies on long-term therapy of cervical cancer patients with a mistletoe preparation (Iscador®). Complement Med Res. 2007a;14(3):140–7.

Grossarth-Maticek R, Ziegler R. Randomized and non-randomized prospective controlled cohort studies in matched pair design for the long-term therapy of corpus uteri cancer patients with a mistletoe preparation (Iscador). Eur J Med Res. 2008;13(3):107.

Heiny B. Additive Therapie mit standardisiertem Mistelextrakt reduziert die Leukopenie und verbessert die Lebensqualität von Patientinnen mit fortgeschrittenem Mammakarzinom unter palliativer Chemotherapie (VEC-schema). Krebsmedizin. 1991;12:1–14.

Heiny BM, Albrecht V, Beuth J. Stabilization of the quality of life by mistletoe lectin-1 standardized mistletoe extract in advanced colorectal carcinoma. Onkologe. 1998;4(SUPPL. 1):S35–S8.

Kaiser G, Büschel M, Horneber M, Smetak M, Birkmann J, Braun W, et al. Studiendesign und erste Ergebnisse einer prospektiven placebokontrollierten, randomisierten Studie mit AbnobaViscum Mali 4. [Prospective, randomised, placebo-controlled, double-blind study with a mistletoe extract: Study design and early results]. In: Scheer R, Bauer R, Becker H, Berg PA, Fintelmann V, editors. Die Mistel in der Tumortherapie: Grundlagenforschung und Klinik [Mistletoe in tumor therapy: Basic research and clinic]. Essen: KVC publisher; 2001. p. 485–505.

Kim KC, Yook JH, Eisenbraun J, Kim BS, Huber R. Quality of life, immunomodulation and safety of adjuvant mistletoe treatment in patients with gastric carcinoma - a randomized, controlled pilot study. BMC Complement Altern Med. 2012;12(172):1472–6882.

Lenartz D, Stoffel B, Menzel J, Beuth J. Immunoprotective activity of the galactoside-specific lectin from mistletoe after tumor destructive therapy in glioma patients. Anticancer Res. 1996;16(6B):3799–802.

Loewe-Mesch A, Kuehn J, Borho K, Abel U, Bauer C, Gerhard I, et al. Adjuvante simultane Mistel-/Chemotherapie bei Mammakarzinom – Einfluss auf Immunparameter, Lebensqualität und Verträglichkeit. Complement Med Res. 2008;15(1):22–30.

Longhi A, Mariani E, Kuehn JJ. A randomized study with adjuvant mistletoe versus oral Etoposide on post relapse disease-free survival in osteosarcoma patients. Eur J Integr Med. 2009;1(1):31–9.

Piao BK, Wang YX, Xie GR, Mansmann U, Matthes H, Beuth J, et al. Impact of complementary mistletoe extract treatment on quality of life in breast, ovarian and non-small cell lung cancer patients. A prospective randomized controlled clinical trial. Anticancer Res. 2004;24(1):303–9.

Semiglasov VF, Stepula VV, Dudov A, Lehmacher W, Mengs U. The standardised mistletoe extract PS76A2 improves QoL in patients with breast cancer receiving adjuvant CMF chemotherapy: a randomised, placebo-controlled, double-blind, multicentre clinical trial. Anticancer Res. 2004;24(2C):1293–302.

Semiglazov VF, Stepula VV, Dudov A, Schnitker J, Mengs U. Quality of life is improved in breast cancer patients by standardised mistletoe extract PS76A2 during chemotherapy and follow-up: a randomised, placebo-controlled, double-blind, multicentre clinical trial. Anticancer Res. 2006;26(2B):1519–29.

Steuer-Vogt M, Bonkowsky V, Scholz M, Fauser C, Licht K, Ambrosch P. Influence of ML-1 standardized mistletoe extract on the quality of life in head and neck cancer patients. HNO. 2006;54(4):277–86.

Tröger W, Jezdic S, Zdrale Z, Tisma N, Hamre HJ, Matijasevic M. Quality of life and neutropenia in patients with early stage breast cancer: a randomized pilot study comparing additional treatment with mistletoe extract to chemotherapy alone. Breast Cancer. 2009;3(1):35–45.

Tröger W, Zdrale Z, Tisma N, Matijasevic M. Additional therapy with a mistletoe product during adjuvant chemotherapy of breast cancer patients improves quality of life: an open randomized clinical pilot trial. Evid Based Complement Alternat Med. 2014a;2014:430518. https://doi.org/10.1155/2014/430518.

Tröger W, Galun D, Reif M, Schumann A, Stanković N, Milićević M. Quality of life of patients with advanced pancreatic cancer during treatment with mistletoe: a randomized controlled trial. Deutsches Ärzteblatt International. 2014b;111(29–30):493–502 33 p following.

Tröger W, Galun D, Reif M, Schumann A, Stankovic N, Milicevic M. Quality of life of patients with advanced pancreatic cancer during treatment with mistletoe: a randomized controlled trial. Dtsch Arztebl Int. 2014;111(29–30):493.

Longhi A, Reif M, Mariani E, Ferrari S. A randomized study on postrelapse disease-free survival with adjuvant mistletoe versus oral etoposide in osteosarcoma patients. Evid Based Complement Alternat Med. 2014;2014:210198. https://doi.org/10.1155/2014/210198.

von Rohr E, Pampallona S, van Wegberg B, Cerny T, Hürny C, Bernhard J, et al. Attitudes and beliefs towards disease and treatment in patients with advanced cancer using anthroposophical medicine. Oncol Res Treat. 2000;23(6):558–63.

Beadle GF, Yates P, Najman JM, Clavarino A, Thomson D, Williams G, et al. Illusions in advanced cancer: the effect of belief systems and attitudes on quality of life. Psycho-Oncol. 2004;13(1):26–36.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates, Publishers; 1988.

Osoba D, Rodrigues G, Myles J, Zee B, Pater J. Interpreting the significance of changes in health-related quality-of-life scores. J Clin Oncol. 1998;16(1):139–44.

Cella D, Eton DT, Fairclough DL, Bonomi P, Heyes AE, Silberman C, et al. What is a clinically meaningful change on the Functional Assessment of Cancer Therapy–Lung (FACT-L) questionnaire?: results from Eastern Cooperative Oncology Group (ECOG) study 5592. J Clin Epidemiol. 2002;55(3):285–95.

Acknowledgments

Not applicable.

Funding

This study was funded by the Förderverein komplementärmedizinische Forschung, Arlesheim, Switzerland. The funding body had no influence on the design of the study; the collection, analysis, and interpretation of data; or the writing of the manuscript.

Author information

Contributions

Both authors contributed equally. HW developed the protocol; ML edited and improved it and submitted it to PROSPERO. ML developed and implemented the search strategy. Both authors extracted the data independently, discussed discrepancies, and ran the analyses independently. ML performed the quantitative analysis reported and HW performed the sensitivity analyses reported. ML wrote the first draft of the paper and HW edited it and contributed to the writing. Both authors analyzed and interpreted the data. Both authors have read and approved the manuscript.

Authors’ information

Harald Walach is a professor with Poznan Medical University, where he teaches mindfulness to international medical students, and a visiting professor with University Witten-Herdecke, where he teaches philosophical foundations of psychology to psychology undergraduates. He is also the founder and director of the Change Health Science Institute (www.chs-institute.org), based in Berlin, Germany. Martin Loef is an independent researcher and Harald Walach’s partner in the CHS Institute, Berlin. He specializes in systematic reviews, meta-analyses, and lifestyle diagnostics.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors have no conflicts of interest to declare.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Loef, M., Walach, H. Quality of life in cancer patients treated with mistletoe: a systematic review and meta-analysis. BMC Complement Med Ther 20, 227 (2020). https://doi.org/10.1186/s12906-020-03013-3

DOI: https://doi.org/10.1186/s12906-020-03013-3