Abstract

Background

There is a growing interest in the inclusion of real-world and observational studies in evidence synthesis such as meta-analysis and network meta-analysis in public health. While this approach offers great epidemiological opportunities, the use of such studies often introduces a significant issue of double-counting of participants and databases in a single analysis. Therefore, this study aims to introduce and illustrate the nuances of double-counting of individuals in evidence synthesis including real-world and observational data with a focus on public health.

Methods

The issues associated with double-counting of individuals in evidence synthesis are highlighted with a number of case studies. Further, double-counting of information in varying scenarios is discussed with potential solutions highlighted.

Results

Use of studies of real-world data and/or established cohort studies, for example studies evaluating the effectiveness of therapies using health record data, often introduces a significant issue of double-counting of individuals and databases. This refers to the inclusion of the same individuals multiple times in a single analysis. Double-counting can occur in a number of ways, such as when multiple studies utilise the same database, when there are overlapping timeframes of analysis, or when there are common treatment arms across studies. Some common practices to address this include synthesis of data only from peer-reviewed studies, utilising the study that provides the greatest information (e.g. largest, newest, most outcomes reported) or analysing outcomes at different time points.

Conclusions

While common practices currently used can mitigate some of the impact of double-counting of participants in evidence synthesis including real-world and observational studies, there is a clear need for methodological and guideline development to address this increasingly significant issue.

Introduction

Both in the evaluation of health technologies and epidemiological research, systematic reviews and meta-analysis are regarded as providing high quality evidence [1, 2]. With the heightening interest in studies reporting the use of real-world data in health research literature, which include observational studies using registry or electronic health record data collected routinely in clinical practice, the incorporation of these studies in evidence synthesis is becoming increasingly common [3,4,5,6]. Utilising data from all available sources, including observational studies, can provide many benefits in epidemiology, such as increased power and more generalizable results. However, this can often introduce a number of analytical problems such as confounding, significant heterogeneity and misclassification bias within the non-randomised evidence.

While methods such as meta-regression have been considered to address these issues [7, 8], a significant problem that has received little attention within public health research is the double-counting, also referred to as sample overlap, of individuals and databases when including such studies in evidence syntheses. With the increased use of cohort and real-world data in evidence synthesis, double-counting has the potential to become a significant issue. Some aspects of double-counting have been discussed by Senn (2009) and Lunny et al. (2021), specifically in the context of whole studies or study arms being included multiple times in a meta-analysis [9, 10]. More attention has been given to this issue in the fields of social science, education, economics and finance, where analytical approaches to dealing with such issues have been suggested [11, 12]. However, there is currently no published guidance on how to address this.

Sample overlap between studies will lead to spuriously high precision in meta-analysis and is also potentially a source of bias. Due to this, many reviewers choose to exclude or adjust for studies where there is an overlap of participants. It may not be obvious if studies contain overlapping patients and so double-counting of individuals in a synthesis may exist without the reviewer’s knowledge. Whilst guidance documents for conducting systematic reviews and meta-analysis of intervention and prevalence/incidence studies exist, none of these consider the effect of the large magnitude of sample overlap expected in whole population studies on meta-analysis results [13, 14]. Therefore, this paper aims to highlight and illustrate some of the specific methodological and practical aspects of double-counting of individuals and datasets that should be considered in evidence syntheses that include real-world and observational data using a number of public health case studies.

Methods

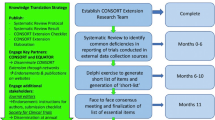

Various aspects of double-counting of populations in observational and real-world evidence studies included in meta-analysis, henceforth referred to as the overlapping populations problem for brevity, will be discussed, with case studies used to highlight particular issues. The case studies used to illustrate the common issues with overlapping populations were identified through the involvement of the authors of this manuscript in multiple applied synthesis projects [15,16,17,18]. Each case study used a different approach to addressing the problem; for each scenario, the method the authors used is discussed, considering the most appropriate approach to answer the research question and maximise the data available. Further aspects of overlapping populations in specialised areas of health research that have the potential to become important issues are then considered. The implications for research will be discussed and potential methods to address these issues reviewed.

Results

Case study 1 – COVID-19 outcomes by ethnicity

This case study considers a systematic review and meta-analysis which aimed to assess the effect of ethnicity on a range of COVID-19 outcomes and faced multiple issues regarding double-counting [18]. Three examples of double-counting of individuals/populations that were highlighted by the authors in this study are summarised below (details obtained from contact with the author group):

(1) Multiple studies using the same research database:

Four studies [19,20,21,22] were identified which had used data from UK Biobank, a consented cohort study, and presented data on ethnicity differences and COVID-19 infectivity. To address the issue of overlapping populations the authors included only one of the four studies, favouring peer reviewed papers over pre-prints and then choosing the largest study:

- 16th March–14th April 2020, 669 COVID-19 positive cases from entire UK Biobank sample (502,536 participants) [Pre-print] [19]
- 16th March–3rd May 2020, 948 COVID-19 positive cases from reduced UK Biobank sample (392,116 participants) [Peer-reviewed] [20]
- 16th March–14th April 2020, 651 COVID-19 positive cases from reduced UK Biobank sample (415,582 participants) [Pre-print] [21]
- 16th March–14th April 2020, 669 COVID-19 positive cases from entire UK Biobank sample (502,536 participants) [Pre-print] [22]

Hence, only Niedzwiedz et al. (2020) [20] was included. Figure 1 reports the adjusted risk ratios before and after excluding overlapping populations. After excluding papers with overlapping populations, the overall relative effect estimates decreased and the heterogeneity estimates increased. For example, in the Asian population, the risk ratio (RR) of infectivity of COVID-19 reduced from 1.63 (1.39, 1.92) to 1.50 (1.24, 1.83), with increased uncertainty.

(2) Multiple studies using the same hospital database:

Five eligible studies [23,24,25,26,27] were identified which had used data from the Mount Sinai Health System, New York. Two of these assessed specific subgroups: (i) patients with HIV [24] and (ii) patients with myeloma [26]. These were excluded as the patient subgroups would be included in the other three papers, which assessed all those with COVID-19 in the database. The remaining three papers each included slightly different subsets of the same hospital cohort.

Fig. 1 Forest plots for meta-analysis of adjusted RR for infectivity of COVID-19 in people with Asian or Black ethnicity versus those of White ethnicity (Blue: including all eligible studies; Red: after removal of studies with overlapping populations) in case study 1. Note: Raisi-Estabragh et al. [22] is not included in the figure as they did not report adjusted RR

As the dates for all three studies overlapped significantly and all included hospital inpatients, the largest study was included [25], which also gave the most complete data.

Figure 2 shows the odds ratios for mortality after contraction of COVID-19, before and after removing overlapping population studies. Removing these studies made little difference to the odds ratios; however, a larger change might be seen in cases where the overlap is greater.

(3) Multiple studies from the same country:

Fig. 2 Forest plots for meta-analysis of odds ratios (OR) for mortality after contraction of COVID-19 of people with Asian, Black, or Hispanic ethnicity versus those of White ethnicity (Blue: including all eligible studies; Red: after removal of studies with overlapping populations) in case study 1. Note: B. Wang et al. [26] is not included in the figure as they did not report the necessary data to calculate the specified unadjusted OR

The majority of papers included in the review analysed data from the USA (84%). Some studies were nationwide; for example, the Centers for Disease Control and Prevention published data on all women of reproductive age across the USA [28]. Other studies were conducted in the same states but often with no specific information on which hospitals were used. Where it could not be established that the population of one study was fully encompassed by another, all studies in question were included. In the UK, two studies included UK-wide data [29, 30]; only Williamson et al. (2020) [30] was included in the review as it had the larger sample size. Examples (1) and (2) as described above may appear to be more conservative (i.e. excluding all but one study) than the approach described in this section. However, this reflects the general rule of thumb applied throughout the meta-analysis: studies were excluded only when the overlap was deemed to be ‘certain’, optimising the data available.
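The selection rule used in example (1), favouring peer-reviewed papers over pre-prints and then taking the largest study, can be sketched as follows. This is an illustrative sketch only: the `Study` record and the `select_study` function are hypothetical names, the figures are those listed in example (1), and using the number of positive cases as the measure of "largest" is an assumption, since the text does not state which size measure was applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    ref: int             # reference number used in this article
    cases: int           # COVID-19 positive cases reported
    participants: int    # size of the UK Biobank sample analysed
    peer_reviewed: bool  # False for pre-prints


# Figures as listed in example (1).
UK_BIOBANK_STUDIES = [
    Study(ref=19, cases=669, participants=502_536, peer_reviewed=False),
    Study(ref=20, cases=948, participants=392_116, peer_reviewed=True),
    Study(ref=21, cases=651, participants=415_582, peer_reviewed=False),
    Study(ref=22, cases=669, participants=502_536, peer_reviewed=False),
]


def select_study(studies):
    """Pick one study from a group known to share the same population.

    Peer-reviewed studies are preferred over pre-prints; ties are broken
    by the number of cases (an assumed measure of "largest").
    """
    return max(studies, key=lambda s: (s.peer_reviewed, s.cases))


selected = select_study(UK_BIOBANK_STUDIES)
print(f"Retained study: reference [{selected.ref}]")
```

With these inputs the peer-review criterion alone decides the outcome, because only one of the four studies is peer reviewed; the size criterion would matter only among studies of equal publication status.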

Case study 2 – incidence of major limb amputation in the UK

When conducting a systematic review on the reasons for variation in reported incidence of major lower limb amputation [16, 17], a major issue was the overlap of study periods. Data syntheses including whole-country population studies may mean combining studies with a major participant overlap. In addition to the problem of patient overlap due to using the same national health record database, suspected patient overlap was also observed in this review between studies using the same or similar time periods. Figure 3 illustrates the overlapping of study populations in the included review articles by plotting the study periods for each article. As nearly all articles overlapped in study period and none provided the information necessary to gauge the magnitude of the participant overlap, articles were not excluded from the review. Given this overlap (and the heterogeneous methods of the included articles), statistically combining data would not have provided a meaningful outcome; only a narrative summary could be provided.
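A check of which articles share calendar time, of the kind that underlies a plot of study periods, might look like the sketch below. The study names and periods are hypothetical and are not those of the articles in the review; `overlap_years` and `overlapping_pairs` are illustrative helper names. Note that shared calendar time only signals possible participant overlap and says nothing about its magnitude.

```python
from itertools import combinations

# Hypothetical study periods (first year, last year), for illustration only;
# these are NOT the periods of the articles included in the review.
STUDY_PERIODS = {
    "Study A": (2003, 2008),
    "Study B": (2006, 2012),
    "Study C": (2013, 2015),
}


def overlap_years(period_a, period_b):
    """Number of calendar years common to two inclusive (start, end) periods."""
    start = max(period_a[0], period_b[0])
    end = min(period_a[1], period_b[1])
    return max(0, end - start + 1)


def overlapping_pairs(periods):
    """Return {(name_a, name_b): shared_years} for every overlapping pair."""
    pairs = {}
    for (name_a, per_a), (name_b, per_b) in combinations(periods.items(), 2):
        shared = overlap_years(per_a, per_b)
        if shared > 0:
            pairs[(name_a, name_b)] = shared
    return pairs


for pair, years in overlapping_pairs(STUDY_PERIODS).items():
    print(pair, "share", years, "calendar year(s)")
```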

Case study 3 – use of SGLT-2is and GLP-1RAs in type 2 diabetes

The previous two case studies have focused solely on synthesis of observational studies. Overlapping of data is also an issue when incorporating real-world evidence into synthesis of Randomised Controlled Trials (RCTs). This case study discusses an extension to include real-world evidence in a previously published systematic review and Network Meta Analysis (NMA) of RCTs of two classes of glucose-lowering medications in type 2 diabetes [15]. While including real-world evidence, a number of issues related to double-counting were highlighted:

(1) Multiple studies sharing common treatment arms:

In this NMA, which assessed the effect of the treatments on the change from baseline in HbA1c (%), a measure of blood-glucose levels, multiple observational studies utilising the same database were identified. Two US studies reporting results after 24 weeks of intervention had used the Quintiles Electronic Medical Database to evaluate treatment effects [31, 32]. Saunders et al. (2016) [31] extracted data to compare participants given liraglutide to those given exenatide once weekly (QW) between 1st Feb 2012 and 31st May 2013. Unni et al. (2017) [32] compared participants prescribed albiglutide, dulaglutide or exenatide QW between 1st Feb 2012 and 31st March 2015. The overlapping treatment arm of exenatide QW over a common timeframe would likely result in some overlapping participants in these two studies. Therefore, a sensitivity analysis was conducted by excluding the exenatide QW arm from the study conducted by Unni et al. (2017) [32] and including all other arms in that study.

(2) Overlapping studies at multiple time points:

In addition to the two studies described above, McAdam-Marx et al. (2016) [33] conducted a study comparing standard care to liraglutide and exenatide QW users using the Quintiles Electronic Medical Database. However, this study analysed the change in HbA1c (%) after 52 weeks of treatment. It is often desirable to synthesise all time points in the same statistical model because of benefits in efficiency and coherence. Data overlap was not an issue in this particular case study, as the time points were analysed separately; had all data been synthesised in a single analysis, the issue of data overlap would have been present. In that case, the study with the longest follow-up would be included in the synthesis.

(3) Overlapping of participants in studies using the same database even when the treatments being investigated are not common:

While there is obvious overlap in participants in studies utilising the same database with a common treatment arm, overlap that is harder to identify may occur if the treatment arms are not common between the studies. In this case study, multiple studies utilised the Optum Research database [34, 35]. One study compared the use of albiglutide to liraglutide between July 2014 and December 2015 [35], while another compared canagliflozin to dapagliflozin users between January 2014 and September 2016 [34]. In both studies, participants could be on background therapies in addition to the study treatment. Therefore, there is a possibility that participants on combination therapy could be included multiple times in the NMA (e.g., participants given liraglutide also given canagliflozin). In this instance, it is difficult to extract the overlapping participants, and therefore all studies were included in the NMA.
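The distinction drawn in case study 3 between studies that share a treatment arm and studies that only share a database can be expressed as a simple screening rule, sketched below. The `ObservationalStudy` record, the `overlap_risk` function and the three category labels are hypothetical; the databases, arms and dates are those described in this case study, with the first or last day of the month assumed where only a month is given. The rule flags potential overlap only and cannot quantify it.

```python
from dataclasses import dataclass
from datetime import date
from itertools import combinations


@dataclass(frozen=True)
class ObservationalStudy:
    ref: int
    database: str
    start: date
    end: date
    arms: frozenset


# Details as described in case study 3. Where the text gives only a month,
# the first/last day of that month is assumed.
STUDIES = [
    ObservationalStudy(31, "Quintiles", date(2012, 2, 1), date(2013, 5, 31),
                       frozenset({"liraglutide", "exenatide QW"})),
    ObservationalStudy(32, "Quintiles", date(2012, 2, 1), date(2015, 3, 31),
                       frozenset({"albiglutide", "dulaglutide", "exenatide QW"})),
    ObservationalStudy(34, "Optum", date(2014, 1, 1), date(2016, 9, 30),
                       frozenset({"canagliflozin", "dapagliflozin"})),
    ObservationalStudy(35, "Optum", date(2014, 7, 1), date(2015, 12, 31),
                       frozenset({"albiglutide", "liraglutide"})),
]


def overlap_risk(a, b):
    """Classify a pair of studies by their potential for shared participants."""
    same_source = a.database == b.database
    same_time = a.start <= b.end and b.start <= a.end
    if not (same_source and same_time):
        return "none identified"
    if a.arms & b.arms:
        return "likely (shared treatment arm)"
    return "possible (same database, different arms)"


for a, b in combinations(STUDIES, 2):
    print(f"[{a.ref}] vs [{b.ref}]: {overlap_risk(a, b)}")
```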

Other potential issues

Overlap of real-world evidence and randomised controlled trial data

There are many methods for participant recruitment into a trial, including referrals from clinicians and/or physicians, electronic health records and registries [36, 37]. For example, in the UK Collaborative Trial of Ovarian Cancer Screening (UKCTOCS), more than 200,000 participants were recruited from 13 UK registries to be randomised to various screening methods [38, 39]. However, recruiting patients from these settings means there is a possibility of duplicated participants between the observational and RCT data in the evidence synthesis. Using the UKCTOCS example, if this trial is included in the evidence synthesis along with an observational study using at least one of the UKCTOCS recruitment registries, there is a high likelihood of participants overlapping between the RCT and the observational study.

This issue of duplicated participants in RCT and observational data is particularly apparent in the Systematic Anti-Cancer Therapy (SACT) database [40]. As SACT includes all patients in the UK given anti-cancer therapy, those in RCTs receiving particular therapies would also be included in the SACT data, resulting in participants being duplicated if combined in a meta-analysis.

Single arm studies and studies with historical controls

There are increasing numbers of single arm trials conducted for regulatory purposes to assess effectiveness of interventions. These are often designed to compare treatment effectiveness in participants before and after interventions [41]. Alternatively, single-arm data are sometimes compared to historical controls (control arm data from a past study) [41]. To get reliable historical control arms, groups are collating control arms from certain past studies to generate databases for specific areas of disease research [42]. Therefore, single-arm studies that access the same control databases may use the same set of participants for recruitment of historical control data. Including multiple single-arm studies with historical controls in an evidence synthesis analysis could result in the duplication of participants in the control arms.

Multicentre studies

Duplicate/multiple publication has been defined as the “publication of a paper that overlaps substantially with one already published”, so-called ‘salami slicing’ [43]. This needs to be taken into account in both RCTs and observational studies. Sometimes individual sites from multicentre RCTs publish results separately without cross-referencing the main analysis [44]. Including all publications in evidence synthesis could result in overlapping participants, with poor reporting leading to confusion on whether participants have been recruited for the larger RCT or a specific centre [44]. Further, multiple publication could also be an issue in observational studies [45]. In England, provided researchers obtain consent and ethical approval, individual hospitals and regions can publish cohort studies. However, data could also be collected for larger health care databases such as NHS Hospital Episode Statistics Admitted Patient Care data, which collects information from 451 NHS trust hospitals in England [46]. When collecting the totality of evidence for a systematic review, if the link between publications is not made clear by the authors of the primary studies, individuals could be duplicated when conducting the evidence synthesis.

Possible solutions

It is important to extract the pertinent information for included studies in order to make an informed decision on which studies/treatment arms to include in the evidence synthesis when including observational studies (Box 1). An essential step would be to determine what information can be clarified by contacting authors of the primary studies, such as the database used or the timeframe of analysis if unclear. Alternatively, anonymised patient IDs and/or Individual Patient Data (IPD) of those analysed could be obtained and used to determine the percentage overlap across studies utilising the same real-world data and to exclude duplicate individuals. However, IPD can often be difficult or expensive to obtain and may not always be feasible in a meta-analysis study.
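Where anonymised patient IDs can be obtained, the percentage overlap and the removal of duplicate individuals could be computed along the lines of the sketch below. It assumes, hypothetically, that both studies use the same pseudonymised identifier for a given individual, which will often not hold in practice; the function names and the example identifiers are illustrative only.

```python
def overlap_summary(ids_a, ids_b):
    """Summarise participant overlap between two studies' ID lists."""
    set_a, set_b = set(ids_a), set(ids_b)
    shared = set_a & set_b
    smaller = min(len(set_a), len(set_b))
    return {
        "shared": len(shared),
        # Share of the smaller study that also appears in the other study.
        "pct_of_smaller": 100.0 * len(shared) / smaller if smaller else 0.0,
        "unique_total": len(set_a | set_b),
    }


def drop_duplicates(ids_keep, ids_other):
    """Return the IDs in `ids_other` that are not already in `ids_keep`."""
    keep = set(ids_keep)
    return [pid for pid in ids_other if pid not in keep]


# Hypothetical anonymised identifiers, for illustration only.
study_a = ["p01", "p02", "p03", "p04"]
study_b = ["p03", "p04", "p05"]

print(overlap_summary(study_a, study_b))
print(drop_duplicates(study_a, study_b))
```

Expressing the overlap relative to the smaller study is one possible convention; other denominators (for example, the union of both studies) would give different percentages for the same data.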

Discussion

The use of real-world data in evidence synthesis within public health is becoming increasingly common, and provides a number of benefits from an epidemiological standpoint, such as increased power and greater generalisability. However, double-counting of individuals across studies is becoming a significant problem, particularly in meta-analyses; currently, there is no guidance to account for this issue in meta-analysis. In this paper, the issue of double-counting of overlapping populations was discussed in reference to a number of case studies. However, it should be noted that while case studies have been used to illustrate the issues with double-counting of individuals/populations, this is not to be overly critical of the work reported in these papers and may not reflect all potential issues.

In the case studies, while double-counting of populations/individuals is considered an important issue, it has not been adjusted for in a meta-analysis in any detailed manner apart from using standard approaches [18]. Inclusion of larger studies or those that provide a greater level of relevant information (e.g. more outcome data or a more complete population) is a possible solution to minimise the impact of overlapping populations. If data are unavailable in the most comprehensive study for an outcome of interest, an alternative study could be utilised [18]. However, excluding studies could also result in non-duplicated participants being excluded, leading to a potential loss of power in the analysis. It may be possible to use IPD to address some of the issues, which may also mitigate the issue of power loss; however, in many cases, for example UK Biobank data and the Clinical Practice Research Datalink (CPRD), data must be destroyed after a certain period of time and cannot be shared. Further, as many databases contain anonymised data, it would be difficult to identify participants duplicated across multiple datasets. Proposed analytical methods for dealing with study overlap [11] were not considered in the case studies; in any case, the extent of the overlap, which these methods require, would have been challenging to estimate. All potential solutions considered have benefits and limitations. For example, given known issues with publication bias, prioritising peer-reviewed articles above pre-prints may not always be sensible. Without further methodological research comparing methods under different scenarios (e.g. size of overlap, number of studies with overlap), it is impossible to give strong recommendations. Therefore, it is vital to carry out sensitivity analyses excluding studies in which duplicate participants may be a concern, to assess whether results are robust. As seen in the case studies, evidence syntheses utilise different approaches to address overlapping populations; therefore, recommendations based on robust evaluations of available methods are needed for a standardised method of addressing this issue.
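A sensitivity analysis of this kind can be sketched as pooling once with all studies and once without those flagged for possible overlap. The example below uses DerSimonian-Laird random-effects pooling, chosen here purely for illustration and not necessarily the model used in the case studies; the input values are hypothetical, as are the function names and the `overlap` flag.

```python
import math


def pool_random_effects(effects, variances):
    """DerSimonian-Laird random-effects pooling of log effect estimates.

    Returns (pooled_estimate, standard_error, tau_squared).
    """
    if not effects:
        raise ValueError("at least one study is required")
    k = len(effects)
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, effects)) / sum_w
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    c = sum_w - sum(w * w for w in weights) / sum_w
    # Between-study variance; set to zero when it cannot be estimated.
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 and c > 0 else 0.0
    re_weights = [1.0 / (v + tau2) for v in variances]
    sum_re = sum(re_weights)
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum_re
    return pooled, math.sqrt(1.0 / sum_re), tau2


def sensitivity_analysis(studies):
    """Pool all studies, then pool again without those flagged for overlap."""
    results = {}
    for label, subset in (
        ("all studies", studies),
        ("overlap excluded", [s for s in studies if not s["overlap"]]),
    ):
        est, se, tau2 = pool_random_effects(
            [s["log_rr"] for s in subset], [s["se"] ** 2 for s in subset]
        )
        results[label] = {
            "rr": math.exp(est),
            "ci": (math.exp(est - 1.96 * se), math.exp(est + 1.96 * se)),
            "tau2": tau2,
        }
    return results


# Hypothetical log risk ratios and standard errors, for illustration only.
STUDIES = [
    {"log_rr": 0.45, "se": 0.10, "overlap": False},
    {"log_rr": 0.50, "se": 0.12, "overlap": True},
    {"log_rr": 0.30, "se": 0.15, "overlap": False},
]

for label, res in sensitivity_analysis(STUDIES).items():
    lo, hi = res["ci"]
    print(f"{label}: RR {res['rr']:.2f} ({lo:.2f}, {hi:.2f}), tau^2 {res['tau2']:.3f}")
```

Comparing the two rows shows whether the pooled estimate and its interval are sensitive to the studies suspected of sharing participants.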

In order to assess the potential for double-counting when performing evidence synthesis, researchers rely on the reporting quality of the original studies. Often it is not possible to determine if overlap is an issue or the extent of it. The REporting of studies Conducted using Observational Routinely-collected Data (RECORD) collaborative have developed reporting guidelines for studies using real-world data [47]. This is an extension to the STrengthening the Reporting of OBservational studies in Epidemiology (STROBE) reporting guidelines which cover all observational studies [48], including additional recommendations specific to the use of routinely collected data. It is hoped that such guidelines will improve the reporting quality of studies using real-world data so that the extent of overlap can be considered. Even with transparent reporting of data provenance, the risk of double-counting can never be completely removed, but it can be minimised.

There are a number of serious consequences when double-counting of information is not taken into account in evidence synthesis. Including overlapping populations can artificially inflate the precision of effect estimates, potentially leading to inappropriate conclusions being drawn from the analysis and consequently inappropriate decisions being made based on such data [11]. The sensitivity analysis conducted in case study 1 showed less certainty around relative effect estimates, and increased heterogeneity, after excluding overlapping population studies. While some changes to effect estimates were minimal, this issue could prove to be much larger in other areas and have an impact on evidence-based decision-making when, for example, such estimates are used as inputs into a cost-effectiveness analysis. Additional issues arising from overlapping populations include duplicate publication bias [14]. This refers to the bias introduced when individual centres from a multicentre study publish results but do not specify that they come from a multicentre study. The same bias can extend to real-world studies where hospital data from a region are utilised but the specific hospital from which the data are derived is not mentioned; this makes it difficult to determine the amount of overlap across the various studies in the evidence synthesis model and so increases the possibility of bias. Further methodological research needs to be undertaken to assess the full impact of this issue, alongside the development of appropriate methodologies to address it.
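The inflation of precision can be seen in a simple worked case, offered here as an illustrative sketch rather than a result taken from the cited literature. Assume two studies estimate the same effect with equal variance $\sigma^2$, that shared participants induce a correlation $\rho$ between the two estimates $\hat{\theta}_1$ and $\hat{\theta}_2$, and that the estimates are pooled with equal weights (all symbols are introduced here for illustration).

```latex
\begin{align*}
\bar{\theta} &= \tfrac{1}{2}\bigl(\hat{\theta}_1 + \hat{\theta}_2\bigr), \\
\operatorname{Var}(\bar{\theta})
  &= \tfrac{1}{4}\bigl(\sigma^2 + \sigma^2 + 2\rho\sigma^2\bigr)
   = \frac{\sigma^2 (1 + \rho)}{2}, \\
\operatorname{Var}_{\text{naive}}(\bar{\theta}) &= \frac{\sigma^2}{2}
  \quad \text{(assuming independence, } \rho = 0\text{)}.
\end{align*}
```

Under these assumptions, the variance reported when independence is wrongly assumed is too small by a factor of $1 + \rho$. In the extreme case where the two studies analyse exactly the same participants ($\rho = 1$), pooling adds no information, yet the naive variance is half the true value.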

Conclusions

This manuscript has highlighted a number of issues and challenges associated with double-counting of individuals and databases when including real-world data in meta-analysis within the field of public health. However, current approaches to address this issue may result in relevant available information not being fully utilised, leading to a potential reduction in analysis power. This manuscript calls attention to the need for clear guidance and methodological development, to ensure appropriate and efficient inclusion of real-world and observational data in evidence synthesis, without increasing bias or spurious precision while making maximal use of the data available.

Availability of data and materials

No new data were generated or analysed in support of this research. The study includes no data from unpublished restricted [non-publicly available] human clinical databases. The data that support the findings of this study are available from the corresponding author upon reasonable request.

Change history

08 December 2022

A Correction to this paper has been published: https://doi.org/10.1186/s12889-022-14741-1

Abbreviations

- RCTs: Randomised Controlled Trials
- NMA: Network Meta Analysis
- QW: Once weekly
- UKCTOCS: UK Collaborative Trial of Ovarian Cancer Screening
- SACT: Systematic Anti-Cancer Therapy
- IPD: Individual Patient Data
- CPRD: Clinical Practice Research Datalink

References

Evans D. Hierarchy of evidence: a framework for ranking evidence evaluating healthcare interventions. J Clin Nurs. 2003;12(1):77–84.

Petrisor B, Bhandari M. The hierarchy of evidence: levels and grades of recommendation. Indian J Orthop. 2007;41(1):11–5.

Hsu J, Santesso N, Mustafa R, Brozek J, Chen YL, Hopkins JP, et al. Antivirals for treatment of influenza: a systematic review and meta-analysis of observational studies. Ann Intern Med. 2012;156(7):512–24.

Hutton B, Joseph L, Fergusson D, Mazer CD, Shapiro S, Tinmouth A. Risks of harms using antifibrinolytics in cardiac surgery: systematic review and network meta-analysis of randomised and observational studies. BMJ. 2012;345:e5798.

Stegeman BH, de Bastos M, Rosendaal FR, van Hylckama VA, Helmerhorst FM, Stijnen T, et al. Different combined oral contraceptives and the risk of venous thrombosis: systematic review and network meta-analysis. BMJ. 2013;347:f5298.

Dias S, Ades AE, Welton NJ, Jansen JP, Sutton AJ. Network meta-analysis for decision-making. Hoboken: Wiley; 2018.

Metelli S, Chaimani A. Challenges in meta-analyses with observational studies. Evid Based Mental Health. 2020;23(2):83–7.

Mueller M, D’Addario M, Egger M, Cevallos M, Dekkers O, Mugglin C, et al. Methods to systematically review and meta-analyse observational studies: a systematic scoping review of recommendations. BMC Med Res Methodol. 2018;18(1):1–18.

Lunny C, Pieper D, Thabet P, Kanji S. Managing overlap of primary studies results across systematic reviews: practical considerations for authors of overviews of reviews. BMC Med Res Methodol. 2021;21(1):140.

Senn SJ. Overstating the evidence–double counting in meta-analysis and related problems. BMC Med Res Methodol. 2009;9(1):10.

Bom PRD, Rachinger H. A generalized-weights solution to sample overlap in meta-analysis. Res Synth Methods. 2020;11(6):812–32.

Hedges LV, Tipton E, Johnson MC. Robust variance estimation in meta-regression with dependent effect size estimates. Res Synth Methods. 2010;1(1):39–65.

Aromataris E, Munn Z. JBI manual for evidence synthesis. Chapter 11: scoping reviews; 2020.

Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page M, et al. Cochrane handbook for systematic reviews of interventions version 6.1 (updated September 2020): The Cochrane Collaboration; 2020. Available from: www.training.cochrane.org/handbook

Hussein H, Zaccardi F, Khunti K, Davies MJ, Patsko E, Dhalwani NN, et al. Efficacy and tolerability of sodium-glucose co-transporter-2 inhibitors and glucagon-like peptide-1 receptor agonists: a systematic review and network meta-analysis. Diabetes Obes Metab. 2020;22(7):1035–46. https://doi.org/10.1111/dom.14008. Epub 2020 Mar 18.

Meffen A, Houghton JSM, Nickinson ATO, Pepper CJ, Sayers RD, Gray LJ. Understanding variations in reported epidemiology of major lower extremity amputation in the UK: a systematic review. BMJ Open. 2021.

Meffen A, Pepper CJ, Sayers RD, Gray LJ. Epidemiology of major lower limb amputation using routinely collected electronic health data in the UK: a systematic review protocol. BMJ Open. 2020;10(6):e037053.

Sze S, Pan D, Nevill CR, Gray LJ, Martin CA, Nazareth J, et al. Ethnicity and clinical outcomes in COVID-19: a systematic review and meta-analysis. EClinicalMedicine. 2020;29-30:100630.

Kolin DA, Kulm S, Elemento O. Clinical and genetic characteristics of Covid-19 patients from UK Biobank. medRxiv [Preprint]. 2020:2020.05.05.20075507. https://doi.org/10.1101/2020.05.05.20075507. Update in: PLoS One. 2020;15(11):e0241264.

Niedzwiedz CL, O’Donnell CA, Jani BD, Demou E, Ho FK, Celis-Morales C, et al. Ethnic and socioeconomic differences in SARS-CoV-2 infection: prospective cohort study using UK biobank. BMC Med. 2020;18(1):160.

Prats-Uribe A, Paredes R, Prieto-Alhambra D. Ethnicity, comorbidity, socioeconomic status, and their associations with COVID-19 infection in England: a cohort analysis of UK Biobank data. medRxiv. 2020:2020.05.06.20092676.

Raisi-Estabragh Z, McCracken C, Ardissino M, Bethell MS, Cooper J, Cooper C, et al. Non-white ethnicity, male sex, and higher body mass index, but not medications acting on the renin-angiotensin system are associated with coronavirus disease 2019 (COVID-19) hospitalisation: review of the first 669 cases from the UK Biobank. medRxiv. 2020:2020.05.10.20096925.

Paranjpe I, Russak AJ, De Freitas JK, Lala A, Miotto R, Vaid A, et al. Clinical Characteristics of Hospitalized Covid-19 Patients in New York City. medRxiv. 2020:2020.04.19.20062117.

Sigel K, Swartz T, Golden E, Paranjpe I, Somani S, Richter F, et al. Coronavirus 2019 and people living with human immunodeficiency virus: outcomes for hospitalized patients in New York City. Clin Infect Dis. 2020;71(11):2933–8.

Wang A-L, Zhong X, Hurd YL. Comorbidity and Sociodemographic determinants in COVID-19 Mortality in an US Urban Healthcare System. medRxiv. 2020:2020.06.11.20128926.

Wang B, Van Oekelen O, Mouhieddine TH, Del Valle DM, Richter J, Cho HJ, et al. A tertiary center experience of multiple myeloma patients with COVID-19: lessons learned and the path forward. J Hematol Oncol. 2020;13(1):94.

Wang Z, Zheutlin AB, Kao Y-H, Ayers KL, Gross SJ, Kovatch P, et al. Analysis of hospitalized COVID-19 patients in the Mount Sinai Health System using electronic medical records (EMR) reveals important prognostic factors for improved clinical outcomes. medRxiv. 2020:2020.04.28.20075788.

Ellington S, Strid P, Tong VT, Woodworth K, Galang RR, Zambrano LD, et al. Characteristics of women of reproductive age with laboratory-confirmed SARS-CoV-2 infection by pregnancy status—United States, January 22–June 7, 2020. Morb Mortal Wkly Rep. 2020;69(25):769.

Aldridge RW, Lewer D, Katikireddi SV, Mathur R, Pathak N, Burns R, et al. Black, Asian and Minority Ethnic groups in England are at increased risk of death from COVID-19: indirect standardisation of NHS mortality data. Wellcome Open Res. 2020;5:88.

Williamson EJ, Walker AJ, Bhaskaran K, Bacon S, Bates C, Morton CE, et al. Factors associated with COVID-19-related death using OpenSAFELY. Nature. 2020;584(7821):430–6.

Saunders WB, Nguyen H, Kalsekar I. Real-world glycemic outcomes in patients with type 2 diabetes initiating exenatide once weekly and liraglutide once daily: a retrospective cohort study. Diabetes Metab Syndr Obes. 2016;9:217–23.

Unni S, Wittbrodt E, Ma J, Schauerhamer M, Hurd J, Ruiz-Negron N, et al. Comparative effectiveness of once-weekly glucagon-like peptide-1 receptor agonists with regard to 6-month glycaemic control and weight outcomes in patients with type 2 diabetes. Diabetes Obes Metab. 2017.

McAdam-Marx C, Nguyen H, Schauerhamer MB, Singhal M, Unni S, Ye X, et al. Glycemic control and weight outcomes for Exenatide once weekly versus Liraglutide in patients with type 2 diabetes: a 1-year retrospective cohort analysis. Clin Ther. 2016;38(12):2642–51.

Blonde L, Patel C, Bookhart B, Pfeifer M, Chen Y-W, Wu B. A real-world analysis of glycemic control among patients with type 2 diabetes treated with canagliflozin versus dapagliflozin. Curr Med Res Opin. 2018:1–21.

Buysman EK, Sikirica MV, Thayer SW, Bogart M, DuCharme MC, Joshi AV. Real-world comparison of treatment patterns and effectiveness of albiglutide and liraglutide. J Comp Eff Res. 2017.

Gliklich RE, Dreyer NA, Leavy MB, editors. Registries for evaluating patient outcomes: a user's guide. 3rd ed. Rockville (MD): Agency for Healthcare Research and Quality (US); 2014. Report No.: 13(14)-EHC111.

Tan MH, Thomas M, MacEachern MP. Using registries to recruit subjects for clinical trials. Contemp Clin Trials. 2015;41:31–8.

Jacobs IJ, Menon U, Ryan A, Gentry-Maharaj A, Burnell M, Kalsi JK, et al. Ovarian cancer screening and mortality in the UK collaborative trial of ovarian cancer screening (UKCTOCS): a randomised controlled trial. Lancet. 2016;387(10022):945–56.

Menon U, Gentry-Maharaj A, Ryan A, Sharma A, Burnell M, Hallett R, et al. Recruitment to multicentre trials—lessons from UKCTOCS: descriptive study. BMJ. 2008;337:a2079.

Bright CJ, Lawton S, Benson S, Bomb M, Dodwell D, Henson KE, et al. Data resource profile: the systemic anti-Cancer therapy (SACT) dataset. Int J Epidemiol. 2020;49(1):15–l.

Paulus JK, Dahabreh IJ, Balk EM, Avendano EE, Lau J, Ip S. Opportunities and challenges in using studies without a control group in comparative effectiveness reviews. Res Synth Methods. 2014;5(2):152–61.

Woolacott N, Corbett M, Jones-Diette J, Hodgson R. Methodological challenges for the evaluation of clinical effectiveness in the context of accelerated regulatory approval: an overview. J Clin Epidemiol. 2017;90:108–18.

International Committee of Medical Journal Editors. Uniform requirements for manuscripts submitted to biomedical journals. Pathology. 1997;29(4):441–7.

Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane. 2022. Available from www.training.cochrane.org/handbook.

Sandy J, Kilpatrick N, Persson M, Bessel A, Waylen A, Ness A, et al. Why are multi-centre clinical observational studies still so difficult to run? Br Dent J. 2011;211(2):59–61.

Herbert A, Wijlaars L, Zylbersztejn A, Cromwell D, Hardelid P. Data resource profile: hospital episode statistics admitted patient care (HES APC). Int J Epidemiol. 2017;46(4):1093–i.

Benchimol EI, Smeeth L, Guttmann A, Harron K, Moher D, Petersen I, et al. The REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) statement. PLoS Med. 2015;12(10):e1001885. https://doi.org/10.1371/journal.pmed.1001885.

Vandenbroucke JP, von Elm E, Altman DG, Gøtzsche PC, Mulrow CD, Pocock SJ, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): explanation and elaboration. PLoS Med. 2007;4(10):e297. https://doi.org/10.1371/journal.pmed.0040297.

Acknowledgements

The authors gratefully acknowledge all authors of the case studies highlighted in this manuscript: Dr. Shirley Sze, Dr. Daniel Pan, Dr. Christopher A. Martin, Dr. Joshua Nazareth, Dr. Jatinder S. Minhas, Pip Divall, Professor Kamlesh Khunti, Dr. Laura Nellums, Dr. Manish Pareek, John S.M. Houghton, Andrew T.O. Nickinson, Coral J. Pepper, Professor Rob D. Sayers, Dr. Francesco Zaccardi, Professor Melanie J. Davies, Emily Patsko, Dr. Nafeesa Dhalwani, David Kloecker and Ekaterini Ioannidou.

Funding

This research was funded by the Medical Research Council [HH, KRA, SB, LJG and AS supported by grant no. MR/R025223/1]. CRN and AS were supported by the Complex Reviews Support Unit, which is funded by the National Institute for Health and Care Research [project number 14/178/29]. CRN is partially funded through an NIHR Pre-Doctoral Research Fellowship. AM is funded through the George Davies Charitable Trust [Registered Charity Number 1024818]. The funders had no role in the design and conduct of the study, the collection, analysis, and interpretation of the data, or the preparation, review, or approval of the manuscript. This project was supported by the National Institute for Health and Care Research (NIHR) Applied Research Collaboration East Midlands (ARC EM). The views expressed are those of the author(s) and not necessarily those of the NIHR or the Department of Health and Social Care.

Author information

Contributions

HH, LJG, KRA, SB and AS conceived the article. HH, LJG, AM and CRN provided information on case studies and contributed to the first draft of the manuscript. All authors contributed to manuscript revisions. HH is the guarantor of the manuscript. The author(s) read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

KRA is a member of the National Institute for Health and Care Excellence (NICE) Diagnostics Advisory Committee, the NICE Decision and Technical Support Units, and is a National Institute for Health Research (NIHR) Senior Investigator Emeritus [NF-SI-0512-10159]. He has served as a paid consultant, providing unrelated methodological and strategic advice, to the pharmaceutical and life sciences industry generally, as well as to DHSC/NICE, and has received unrelated research funding from Association of the British Pharmaceutical Industry (ABPI), European Federation of Pharmaceutical Industries & Associations (EFPIA), Pfizer, Sanofi and Swiss Precision Diagnostics/Clearblue. He has also received course fees from ABPI and is a Partner and Director of Visible Analytics Limited, a health technology assessment consultancy company. All other authors (HH, CRN, AM, SB, AS, LJG) have no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hussein, H., Nevill, C.R., Meffen, A. et al. Double-counting of populations in evidence synthesis in public health: a call for awareness and future methodological development. BMC Public Health 22, 1827 (2022). https://doi.org/10.1186/s12889-022-14213-6
