Abstract

Background

Adolescents are considered at high risk of developing iron deficiency. Studies in children indicate that the prevalence of iron deficiency increases with malaria transmission, suggesting that malaria may seasonally drive iron deficiency. This paper examines monthly seasonal infection patterns of malaria, abnormal vaginal flora, chorioamnionitis, antibiotic and antimalarial prescriptions, in relation to changes in iron biomarkers and nutritional indices in adolescents living in a rural area of Burkina Faso, in order to assess the requirement for seasonal infection control and nutrition interventions.

Methods

Data collected between April 2011 and January 2014 were available for an observational seasonal analysis, comprising scheduled visits for 1949 non-pregnant adolescents (≤19 years), 315 of whom subsequently became pregnant, enrolled in a randomised trial of periconceptional iron supplementation. Data from trial arms were combined. Body Iron Stores (BIS) were calculated using an internal regression for ferritin to allow for inflammation. At recruitment 11% had low BIS (< 0 mg/kg). Continuous outcomes were fitted to a mixed-effects linear model with month, age and pregnancy status as fixed effect covariates and woman as a random effect. Dichotomous infection outcomes were fitted with analogous logistic regression models.

Results

Seasonal variation in malaria parasitaemia prevalence ranged between 18 and 70% in non-pregnant adolescents (P < 0.001), peaking at 81% in those who became pregnant. Seasonal variation occurred in antibiotic prescription rates (0.7–1.8 prescriptions/100 weekly visits, P < 0.001) and chorioamnionitis prevalence (range 15–68%, P = 0.026). Mucosal vaginal lactoferrin concentration was lower at the end of the wet season (range 2–22 μg/ml, P = 0.016), when chorioamnionitis was least frequent. BIS fluctuated by up to 53.2% per year around the mean BIS (5.1 mg/kg, range 4.1–6.8 mg/kg), with a prevalence of low BIS (< 0 mg/kg) of 8.7% in the dry and 9.8% in the wet seasons (P = 0.36). Median serum transferrin receptor increased during the wet season (P < 0.001). Higher hepcidin concentration in the wet season corresponded with rising malaria prevalence and use of prescriptions, but with no change in BIS. Mean Body Mass Index and Mid-Upper-Arm-Circumference values peaked mid-dry season (both P < 0.001).

Conclusions

Our analysis supports preventive treatment of malaria among adolescents 15–19 years to decrease their disease burden, especially asymptomatic malaria. As BIS were adequate in most adolescents despite seasonal malaria, a requirement for programmatic iron supplementation was not substantiated.


Introduction

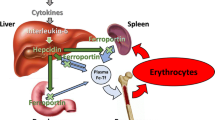

Seasonality defines the epidemiology of malaria and is observed for almost all infectious diseases, denoting cyclical changes in exposure and host immunity [1,2,3]. Studies in children showed that the prevalence of iron deficiency increased over the malaria season [4] but decreased when malaria transmission was interrupted by vector control [5], suggesting that malaria was seasonally driving iron deficiency. The mechanism responsible for this interaction was hepcidin. This hormone, when stimulated by inflammation due to malaria or respiratory infection in young children, restricts enteric iron absorption and, by limiting iron availability, may lead to iron deficiency [6, 7].

Whether malaria drives seasonal iron deficiency in adolescents is an important question, given their continuing exposure to malaria and multiple other infections, alongside increased iron requirements for growth and maturation. In Burkina Faso, higher body iron stores (BIS) in adolescents predicted an increased malaria risk in the following rainy season approximately 5 months later, [8] as well as in early pregnancy in those who went on to conceive [9]. Preterm birth incidence showed a striking seasonal pattern, with a 50% increased effect size in iron supplemented women [10]. An inhibitory effect of iron on nitric oxide, interfering with macrophage-mediated action against Plasmodium [11], as well as the parasite’s own capacity to acquire iron as a means to increase pathogenicity [12], may explain the higher malaria risk with elevated iron levels. Median levels of serum hepcidin were significantly higher in the presence of malaria parasitaemia in non-pregnant and pregnant adolescents, which could reduce iron absorption to reverse some of these effects [13]. Of interest was hepcidin up-regulation with lower genital tract infection. Concentrations of lactoferrin (Lf), which binds free iron in genital mucosa, rose in the presence of lower tract infections capable of causing chorioamnionitis, and positively correlated with serum hepcidin, serum ferritin and BIS [14]. Iron deficient adolescents were more likely to have normal vaginal flora [15]. These observations suggest that infection prevalence at a given time point is confounded by prior iron deficiency or repletion, which itself may be seasonally governed.

Seasonal studies on adolescents are lacking, yet understanding seasonal infection patterns should provide a basis for timely selection of interventions to reduce adolescent morbidity and mortality, especially before a first pregnancy. To date, malaria seasonality has been interpreted mostly in relation to vector parameters and age-specific parasite prevalence [16]. Seasonal malaria-iron studies conducted in young children have relied on observations recorded at the start and end of the wet season, with events not measured during interim periods or between seasons [17]. This paper examines monthly seasonal infection patterns of malaria, abnormal vaginal flora, chorioamnionitis, antibiotic and antimalarial prescriptions, in relation to changes in iron biomarkers and nutritional indices in adolescents living in a rural area of Burkina Faso, in order to assess the requirement for seasonal infection control and nutrition interventions. Data were collected over a 32-month period as part of a community-based randomised controlled trial of weekly iron supplementation in adolescents [13].

Methods

The community-based randomised controlled trial of iron supplements (weekly ferrous gluconate (60 mg) with folic acid (2.8 mg) as intervention or folic acid alone as control) was approved by ethical review boards at collaborating centres and registered with clinicaltrials.gov: NCT01210040 (Additional file 1). Participants were recruited as healthy, nulliparous, non-pregnant women aged 15–24 years from thirty rural villages located within the Health Demographic Surveillance System of the Clinical Research Unit in Nanoro in rural Burkina Faso [13], and were followed weekly. Individual/guardian written consents were obtained from participants at recruitment. HIV prevalence was low (< 2%). Malaria is hyperendemic, with the main rainfall (daily chance of precipitation above 20%) from May to mid-October [18]. Food shortages occur from June to August (lean season), corresponding to the early rainy season, with food abundance post-harvest around November to December [19]. All participants were treated with albendazole and praziquantel at enrolment and provided with an insecticide impregnated bed net.

Between April 2011 and January 2014 data were collected at several study assessment points and at weekly follow-up visits. The present analysis included young menarcheal women at baseline who remained non-pregnant until the end of the trial, as well as data from those who became pregnant during the 18-month supplementation period and had re-consented at entry to the pregnancy cohort (Additional file 1). They were monitored until delivery and their babies followed up to 2 years of age [20]. As there were no significant or substantive differences in iron biomarker profiles between arms at trial end-points, [13] data from both arms were pooled for the present analysis.

Non-pregnant cohort assessments

Baseline: Recruitment extended over 9 months. Demographic data, dietary information and medical histories, including last menstrual period and age at menarche, were collected and a clinical examination performed [13]. Height (nearest mm) and weight (nearest 100 g) for Body Mass Index (BMI) and mid-upper arm circumference (mm: MUAC) were measured in duplicate. A venous blood sample (5 ml) was collected for later iron biomarker assessments [13]. Self-taken vaginal swabs were requested for bacterial vaginosis (BV), gram stain, pH and Lf assays as previously described [14, 15]. Gram stains were scored using Nugent criteria with 7–10 indicating BV, 4–6 intermediate and 0–3 normal flora.
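As an illustration only, the Nugent cut-offs and the BMI calculation described above can be written as two short Python functions. The function names are hypothetical and are not taken from the trial's analysis code; only the score bands (0–3, 4–6, 7–10) come from the text.

```python
def nugent_category(score: int) -> str:
    """Classify a Gram-stain Nugent score using the cut-offs given in the text."""
    if not 0 <= score <= 10:
        raise ValueError("Nugent scores range from 0 to 10")
    if score >= 7:
        return "bacterial vaginosis"
    if score >= 4:
        return "intermediate"
    return "normal"


def bmi(weight_kg: float, height_m: float) -> float:
    """Body Mass Index in kg/m^2 from weight (kg) and height (m)."""
    return weight_kg / height_m ** 2


# Illustrative inputs, not study data.
print(nugent_category(5))          # intermediate
print(round(bmi(52.0, 1.62), 1))   # 19.8
```

Scores of 4 or above ("intermediate" or "bacterial vaginosis") correspond to the abnormal flora category used in the seasonal analysis.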

For trial design reasons, malaria parasitaemia and haemoglobin were not measured at baseline, although women symptomatic for malaria were treated in line with government guidelines (artesunate-amodiaquine or artemether-lumefantrine, or rescue treatment with quinine). Clinical malaria (axillary temperature ≥ 37.5 °C and/or history of fever within last 48 h) was confirmed by rapid diagnostic test (RDT). At the weekly visits which followed recruitment, participants symptomatic with malaria or other infections were referred to local health centres for free treatment and reasons for treatment were recorded. Missed periods were followed up with a urine pregnancy test.

End Assessment (FIN): Final assessments were made after 18 months of supplementation when procedures for nutritional and iron biomarker assessments at enrolment were repeated. Malaria parasitaemia was assessed and women with positive results were treated.

Pregnant cohort assessments

At a first antenatal visit (ANC1) scheduled for 13–16 weeks gestation, in addition to standard antenatal care (including daily iron and folic acid for all participants and administration of intermittent preventive malaria treatment at relevant gestational dates) a blood sample (5 ml) was obtained for iron biomarkers and malaria microscopy. Self-taken vaginal swabs were requested, as for the non-pregnant cohort [15]. BMI and MUAC were measured. The pregnant cohort continued with weekly follow-up and referral for free treatment for symptomatic illness until delivery, when placentae were collected, if available, to determine placental malaria and chorioamnionitis [10].

Laboratory procedures

These have been previously described [13, 14], and a technical resumé is provided in Additional file 2. Blood was assayed for plasma ferritin, serum transferrin receptor (sTfR), hepcidin and C-reactive protein (CRP). Ferritin was corrected for inflammation using the slope of an internal regression of log(ferritin) against log(CRP), estimated in non-pregnant women [21]. Iron stores were calculated using the regression-adjusted ferritin estimate. BIS (mg/kg) were calculated using the equation derived by Cook et al.: body iron (mg/kg) = −[log10(1000 × sTfR/ferritin) − 2.8229]/0.1207 [22]. Low BIS were defined as iron stores < 0 mg/kg. Hepcidin assays were performed at the Department of Laboratory Medicine, Radboud University Nijmegen Medical Center, The Netherlands [23]. Malaria films using whole blood were Giemsa stained and read by two qualified microscopists. For discrepant findings (positive/negative; > two-fold difference for parasite densities ≥400/μl; > log10 if < 400/μl), a third independent reading was made. Duplicate Lf samples were processed and analysed independently. Lf was measured by ELISA and measurements standardised for duplicate samples and sample weight [14]; the geometric mean is presented here. Gram stains were sent for Nugent scoring to the Microbiology Department at Manchester University NHS Foundation Trust, UK. Abnormal flora are defined as Nugent scores 4–10.
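A minimal sketch of the body iron calculation quoted above is given here for orientation. It assumes sTfR is expressed in mg/l and ferritin in μg/l, and that the ferritin value passed in has already been adjusted for inflammation; the function name and the example values are illustrative and are not taken from the trial data.

```python
import math


def body_iron_stores(stfr_mg_per_l: float, ferritin_ug_per_l: float) -> float:
    """Body iron (mg/kg) from the Cook et al. equation quoted in the text.

    Assumed units: sTfR in mg/l, ferritin in ug/l (already corrected
    for inflammation).
    """
    ratio = 1000.0 * stfr_mg_per_l / ferritin_ug_per_l
    return -(math.log10(ratio) - 2.8229) / 0.1207


# Illustrative values only.
replete = body_iron_stores(6.7, 40.0)
depleted = body_iron_stores(6.7, 5.0)
print(round(replete, 1))   # 5.0
print(depleted < 0)        # True: falls below the low-BIS threshold of 0 mg/kg
```

Under these assumptions, body iron is positive when iron is held in store and negative when there is a tissue iron deficit, which is why 0 mg/kg serves as the cut-off for low BIS.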

Statistical analysis

The sample available was determined by the size of the trial, which was sized using formal power calculations for the scheduled malaria endpoint [13], which for pregnant women was at ANC1. Continuous outcomes, based on scheduled visits, were fitted to a mixed-effects linear model with month (1 to 12), age (centred at the median age of 17 years), and pregnancy status as fixed effect covariates and woman as a random effect. This allowed for correlation between repeat measurements in the same women and increased the power of the analysis by exploiting the within-women differences across seasons. Effects were plotted adjusted for age and pregnancy and represent non-pregnant women at age 17 years. 95% confidence intervals (CI) were computed using a profile likelihood method. Amplitude of the seasonal effect was estimated as the difference between the largest and smallest monthly coefficients. The peak and nadir were determined by the months with the largest and smallest monthly coefficients. CI for the amplitude and peak/nadir months were derived using bootstrapping. Where appropriate the outcomes were log-transformed for analysis, and coefficients and amplitudes are presented on this log scale.
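The definitions of seasonal amplitude, peak and nadir given above can be sketched as follows. This is not the authors' code: the helper name is hypothetical, and the twelve coefficients are synthetic values generated from a cosine curve purely to show the calculation. Model fitting and the bootstrap step are not reproduced.

```python
import math


def seasonal_summary(monthly_coef: dict) -> dict:
    """Summarise monthly coefficients (keyed by month number 1-12).

    Peak and nadir are the months with the largest and smallest
    coefficients; amplitude is the difference between those two values.
    """
    peak = max(monthly_coef, key=monthly_coef.get)
    nadir = min(monthly_coef, key=monthly_coef.get)
    return {
        "peak_month": peak,
        "nadir_month": nadir,
        "amplitude": monthly_coef[peak] - monthly_coef[nadir],
    }


# Synthetic coefficients: a cosine with its maximum in month 11 (not study data).
coef = {m: 5.0 + math.cos(2 * math.pi * (m - 11) / 12) for m in range(1, 13)}
summary = seasonal_summary(coef)
print(summary["peak_month"], summary["nadir_month"], round(summary["amplitude"], 2))
# 11 5 2.0
```

A bootstrap CI would repeat this summary on coefficients refitted to resampled data; resampling whole women rather than single visits would be one way to respect the repeated measurements, although the text does not state which scheme was used.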

Dichotomous infection outcomes were fitted with analogous logistic regression models, but as there were insufficient repeat measurements to fit a random woman effect, standard fixed-effect models are presented, with coefficients expressed as odds ratios. Seasonal amplitude is presented as the difference between the event rates in the highest and lowest months, after adjusting for age and pregnancy effects.

Prescription data were aggregated into the number of prescriptions dispensed per month and fitted using a Poisson regression model. Consideration of individual level covariates was precluded as appropriate denominators were not known. The number at risk was estimated by the number of recorded contacts (weekly home plus unscheduled health centre visits) and thus approximate to person-weeks of exposure. CI for seasonal amplitude, peak and nadir months were estimated by simulating monthly totals from a Poisson distribution using the estimated rates and numbers at risk. Seasonal amplitude is presented as the difference between event rates in the highest and lowest months.
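The simulation approach to the amplitude CI can be sketched in a few lines. The monthly counts and contact totals below are invented for illustration, and raw monthly rates stand in for the fitted rates (the two coincide if month is the only term in the Poisson model, which is an assumption here). The function names, the number of simulations and the percentile rule are likewise illustrative choices.

```python
import math
import random


def poisson_draw(lam: float, rng: random.Random) -> int:
    """Draw one Poisson variate (Knuth's method; fine for modest counts)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def amplitude_ci(counts, contacts, n_sim=1000, seed=1):
    """Point estimate and 95% simulation interval for the seasonal amplitude.

    Amplitude is the difference between the highest and lowest monthly
    rates (events per contact).
    """
    rng = random.Random(seed)
    rates = [c / n for c, n in zip(counts, contacts)]
    sims = []
    for _ in range(n_sim):
        sim = [poisson_draw(r * n, rng) / n for r, n in zip(rates, contacts)]
        sims.append(max(sim) - min(sim))
    sims.sort()
    lower = sims[int(0.025 * n_sim)]
    upper = sims[int(0.975 * n_sim) - 1]
    return max(rates) - min(rates), lower, upper


# Invented monthly prescription counts and contacts (not study data).
counts = [60, 62, 65, 70, 80, 100, 130, 150, 140, 110, 80, 65]
contacts = [8500] * 12
point, lower, upper = amplitude_ci(counts, contacts)
print(f"amplitude per 100 visits: {100 * point:.2f} "
      f"({100 * lower:.2f} to {100 * upper:.2f})")
```

The same simulated monthly rates could be used to tabulate how often each month is the peak or the nadir, giving intervals for those months as well.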

For comparison annual means were estimated as simple means (geometric means for log-transformed outcomes) of the 12 monthly coefficients. We also present standard deviations (again log-transformed where appropriate) between observations for the continuous outcomes to provide context for the observed seasonal amplitudes.
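For a log-transformed outcome, the annual mean described above amounts to averaging the twelve monthly coefficients on the log scale and back-transforming. The sketch below assumes natural logarithms, which the text does not specify; the function name and example values are hypothetical.

```python
import math


def annual_mean(monthly_coef, log_scale=False):
    """Simple mean of 12 monthly coefficients.

    With log_scale=True the coefficients are taken to be natural logs and
    the result is back-transformed, giving a geometric mean.
    """
    values = list(monthly_coef)
    if len(values) != 12:
        raise ValueError("expected one coefficient per calendar month")
    mean = sum(values) / 12
    return math.exp(mean) if log_scale else mean


# Illustrative values: six months at 2 units and six at 8 units, on the log scale.
logs = [math.log(2.0)] * 6 + [math.log(8.0)] * 6
print(round(annual_mean(logs, log_scale=True), 2))   # 4.0
```

The geometric mean (4.0) sits below the arithmetic mean of the same values (5.0), so it matters which scale a reported annual mean refers to when comparing it with seasonal amplitudes.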

Results

Table 1 lists data sources and numbers of assessments available at baseline and end assessment for non-pregnant women, at ANC1 for pregnant women and at weekly visits. In total 1949 women contributed some data to the study. Of these 93.3% were adolescent, with only 130 more than 19 years and none less than 15 years old. All were menarcheal and nulliparous at enrolment and 315 women who became pregnant during follow-up were assessed at ANC1. At 102452 weekly follow-up visits of 1897 women, including some unscheduled visits, 1.3% were prescribed free antibiotics for mainly dysenteric symptoms, and 2.1% free antimalarials for clinical malaria. Distribution of attendances is shown in Fig. 1, with months and years of attendance for the assessment points. The total monthly attendances over 12 months were spread across wet and dry seasons. Fewer non-pregnant attendances in September and October were recorded.

Seasonal infection patterns

Combined non-pregnant and pregnant monthly prevalence estimates for malaria parasitaemia and for genital bacterial flora with a Nugent score ≥ 4 are shown in Fig. 2, together with frequency of prescriptions for antibiotics and antimalarials. Malaria parasitaemia prevalence showed highly significant seasonal variation (P < 0.001), with a wet season September peak of 70% in non-pregnant women and a single dry season nadir in May of 18%. The September peak malaria prevalence for pregnant women in early pregnancy at ANC1 was 81%. The odds ratio for increased malaria infection in pregnancy was 1.9 (95%CI 1.3–2.6) (Table 2). Antimalarials for clinical cases were more frequently prescribed in the middle of the wet season with an August peak (P < 0.001), although malaria prevalence remained above 40% for 4 months into the dry season.

Fig. 2 Monthly fitted estimates for infection parameters. Models fitted to all data adjusted for age and pregnancy status. Error bars represent 95% CI. Yellow: dry season months; blue: wet season months. Horizontal line is annual mean. Lines are fitted estimates and represent values for non-pregnant women of median age 17 years.

Prevalence of abnormal vaginal bacterial flora was uniform through the dry season with a mean value of 23%, with higher prevalence of 38% at the end of the wet season (P = 0.28). There were no significant effects of age or pregnancy status on prevalence of abnormal vaginal flora. Prescription of antibiotics almost doubled during the wet season (P < 0.001). Monthly prevalence of chorioamnionitis is shown for 181 women with delivery placental samples. Mean chorioamnionitis prevalence was 43%, with higher values through most of the dry season (peak June) and a nadir at the end of the wet season (October) (P = 0.026). Table 2 summarises significance levels for seasonal variation, along with pregnancy and age effect sizes.

Seasonal iron biomarker patterns

Monthly concentrations of iron biomarkers and CRP are shown in Fig. 3, with a summary of significance levels and timing of seasonal peaks and troughs in Table 2. Median BIS were 5.1 mg/kg and showed significant seasonal variation (range 4.1–6.8 mg/kg), with a single November peak and April nadir (P < 0.001). This represents an average of 53.2% variation around mean BIS during a 12-month period. BIS values were slightly below the annual mean value throughout the wet season but recovered quickly in the dry season and remained slightly above this annual mean. However, percentages with low BIS (< 0 mg/kg) were 9.5% in the dry season and 9.2% in the wet season (P = 0.19), with the corresponding adjusted estimates in non-pregnant women being 8.7% (dry) and 9.8% (wet) (P = 0.36), after fitting a logistic model allowing for age and pregnancy.

Fig. 3 Monthly fitted estimates for iron biomarkers and C-reactive protein. Models fitted to all data adjusted for age and pregnancy status. Error bars represent 95% CI. Yellow: dry season months; blue: wet season months. Horizontal line is annual mean. Lines are fitted estimates and represent values for non-pregnant women of median age 17 years.

Mean sTfR concentration was 6.7 μg/ml, with a single October peak following an increase in concentration in the last 2 months of the wet season (P < 0.001). sTfR concentrations declined in November and December in the early dry season when malaria prevalence was still high. Monthly hepcidin concentrations were higher towards the end of the wet season compared to dry season values (P < 0.001). The mean value was 3.68 nmol/l, with relatively narrow range (2.75–5.21 nmol/l). A single peak in concentration occurred in August, with a single nadir in January in the middle of the dry season.

Monthly vaginal Lf concentration was almost uniform throughout the year, but with a decline in values in the late wet season (seasonal range 2.0–21.5 μg/ml, P = 0.016). Seasonal effects on iron biomarkers were small compared to the variation between individuals, as shown by their standard deviations (Table 2). CRP concentrations were moderately raised and showed smooth seasonal cyclic variation with a range of 0.41–1.28 mg/l, with two nadirs in the dry season and a single peak at the end of the wet season (P < 0.001). CI for all biomarkers were wider for September and October due to the smaller number of assessments available for these months.

Anthropometric and dietary patterns

Monthly variation in BMI and MUAC is shown in Fig. 4, with summary statistics in Table 2. Highly significant seasonal variation was observed for both indices (P < 0.001). Seasonal patterns for the two indicators closely mirrored each other, with the same peak month (March) at the end of the post-harvest period, and nadirs in October for BMI and November for MUAC at the end of the wet season. The ranges of these changes were 19.5–20.4 kg/m2 for BMI and 23.7–24.3 cm for MUAC (both P < 0.001).

Fig. 4 Monthly fitted estimates for BMI and MUAC. Models fitted to all data adjusted for age and pregnancy status. Error bars represent 95% CI. Yellow: dry season months; blue: wet season months. Horizontal line is annual mean. Lines are fitted estimates and represent values for non-pregnant women of median age 17 years.

Discussion

This study assessed seasonal trends in adolescent infections, nutritional status and risk of iron deficiency over a 32-month period. The data were consistent with malaria and other infections driving the hepcidin response to control iron homeostasis. As a result, BIS fluctuated by up to 53% per year around a mean of 5.1 mg/kg. Iron stores were below the mean in the wet season but recovered sharply post-harvest, at the beginning of the dry season when food was more plentiful and workloads decreased. Increased prescription of antibiotics and antimalarials in the wet season reflected higher infection risk, which may partly relate to reduced immunity with poorer diet [24] and to increased malaria exposure.

The finding that ~ 75% of all those followed were parasitaemic at the peak of the wet season is a clear indicator of the need to lower wet season malaria transmission in this older age group. We have previously reported a high risk of adverse birth outcomes in the same adolescents [10] and an increased risk of malaria in their infants [20]. Elevated hepcidin values in the dry season suggest that chronic asymptomatic infections are carried over from the wet season and contribute to these outcomes. In this study, 96% of malaria infections in non-pregnant and pregnant women were asymptomatic [13]. Asymptomatic P. falciparum parasitaemias in young pregnant women are also described in Mali, Ghana and Gambia [25], but pregnant women are easier to reach for treatment than non-pregnant adolescents. School-based intervention programmes for 5- to 15-year-old children [26,27,28] may be feasible, but would not cover the older age group in our study. In Burkina Faso, secondary school enrolment for both sexes in 2019 was just 43% [29]. Making services more accessible to adolescents would be helpful, especially for symptomatic gastrointestinal and respiratory problems, but asymptomatic malaria and genital infections would still be missed.

Seasonal malaria chemoprevention of children less than 6 years is recommended by the World Health Organization and is being widely implemented in community settings in West Africa, including Burkina Faso [30]. It requires monthly administration of a full course of antimalarials during the malaria season and is effective in reducing morbidity and mortality by maintaining therapeutic drug concentrations in the blood [31]. Research is needed to address the feasibility and cost effectiveness of a similar community-based approach for seasonal prevention of malaria in older adolescents, some of whom could be unknowingly pregnant. Clinical trials would be required, keeping in mind that the antimalarial treatment recommended by the World Health Organization for first trimester pregnancy would be difficult to implement in the adolescent population, most of whom would not be pregnant. Current WHO guidelines recommend 7 days of quinine plus clindamycin, twice daily, for uncomplicated P. falciparum malaria during the first trimester [30]. Yet an Evidence Review Group convened by WHO’s Global Malaria Programme concluded that artemisinin combination treatments (ACTs) should be used to treat uncomplicated falciparum malaria in the first trimester [32]. Their use in any trimester in human pregnancy has not been associated with increased risk of miscarriage, stillbirth or congenital abnormality compared to those treated with quinine, or to the background population level of these outcomes [33]. Clinical trials of ACTs in non-pregnant adolescents should screen for pregnancy to indicate the level of acceptability of routine pregnancy testing and to exclude first trimester exposures with a seasonal malaria programme using ACTs.

Hepcidin levels were highest in August, a month before peak malaria prevalence, and did not rise further despite continued malaria transmission in the dry season. Rising CRP concentrations at the start of the wet season, denoting an inflammatory signal, were accompanied by rising hepcidin, and decreasing CRP concentrations in the late wet season by lower hepcidin values. Hepcidin values stabilised around a mean of 3.7 nmol/l (Table 2), considerably higher than the median reference value of 2.6 nmol/l for healthy western women (18–24 years) obtained with the same assay [23]. In young non-pregnant Beninese women with asymptomatic parasitaemias, a ~ 40% reduction in iron absorption occurred at hepcidin levels similar to those reported here [34]; persistently raised hepcidin would therefore be expected to increase the risk of iron deficiency. Yet a decline in BIS did not occur until 4 to 5 months after the hepcidin peak, and BIS were lowest in April, mid-dry season. Mean BIS estimates were comparable to those reported for first trimester American women in the NHANES survey (6.28 mg/kg), and for Vietnamese non-pregnant women (4.7 mg/kg) [9].

The sTfR response was highest at the end of the wet season, a month following the peak values for malaria prevalence, CRP and hepcidin. A rise in sTfR is the initial intracellular response to a reduction in iron supply, indicating a degree of functional iron depletion and an erythropoietic response [35]. These sTfR concentrations declined in the early dry season, when malaria prevalence was still high, suggesting only a short period of malaria-related functional iron depletion, with no obvious need for ongoing iron supplementation. The problem is that iron interventions for adolescents are largely based on anaemia prevalence [36]. In a previous paper we reported an end assessment anaemia prevalence (Hb < 12 g/dl) of 43.4% in the non-pregnant cohort [9]. This is a level at which iron-containing supplementation of adolescents is recommended, including iron fortified food, multiple micronutrients, and lipid-based nutrient supplements [36]. None of these would be recommended in Burkina Faso, given the lack of an effective adolescent malaria control strategy, as well as availability of alternative dietary iron sources. Across this region, sorghum is a major contributor to dietary iron intakes across wet and dry seasons, with post-harvest crops increasing dietary diversity and providing more sources of iron [37, 38]. Traditional sorghum beer, consumed widely in Burkina Faso [39], and other areas with limited resources, also contributes to micronutrient adequacy in the diet [40].

The higher prevalence of abnormal vaginal flora in September and October was not statistically significant, although its occurrence corresponded with a significant peak in vaginal Lf concentration in October. This may indicate a local mucosal immune response to genital infection, [41] but it was not associated with hepcidin upregulation in the same months. Lf concentrations declined over the wet season as did BMI and MUAC. We have previously reported a significant positive association of vaginal Lf with MUAC and BMI in this population [14] and these nutritional indices declined throughout the wet season. Targeted seasonal measures to redress this might be warranted but relevant interventions have not been addressed [42]. General measures to improve community food security, such as reducing post-harvest food storage losses, would benefit adolescents [43]. Malaria chemoprevention should have synergistic effects in decreasing anaemia [26].

It is of interest that peak prevalence of chorioamnionitis occurred approximately 5 months following the higher prevalence of abnormal vaginal flora. Chorioamnionitis risk was highest when women were less likely to receive anti-malarials or antibiotics. Risk of chorioamnionitis may relate to early gestational exposure to abnormal flora and ascending chronic infection established in the wet season.

The main strength of this study was the large sample and weekly follow-up. Within the demographic surveillance area, all health care contacts could be readily monitored although diagnosis of dysentery was based on symptoms and not confirmed by bacteriology. The trial was not designed to assess seasonal effects, but close follow-up of participants allowed collection of infection treatment data, across clearly defined seasons, not solely at study endpoints. Haemoglobin was not measured at baseline when iron biomarkers were assessed, and dietary data were not collected at study endpoints. The rural location represented a high malaria transmission area with well-defined seasonality, common to the sub-Sahelian region. Mid-wet season data came mainly from pregnant women, as non-pregnant adolescents were generally absent from home for planting/harvesting. As a result, separate seasonal effects in pregnant and non-pregnant women could not be ascertained and constant pregnancy effects throughout the year were assumed, with seasonal effects adjusted for age and pregnancy co-variates. Geographical variations in exposures to pathogens or diet may have confounded seasonal effects as trial recruitment was conducted sequentially across villages, but these biases are likely to be negligible.

Conclusions

Seasonal analysis is useful for rationalising delivery of adolescent interventions. Seasonal malaria chemoprevention for this age group should be evaluated in clinical trials to target their high prevalence of asymptomatic and chronic P. falciparum infections. In terms of the need for adolescent iron supplementation, this seasonal analysis showed iron stores were satisfactory at the population level and were largely maintained by host mechanisms, despite seasonal dietary changes. Some individuals would benefit from additional iron for specific clinical reasons, but this would not justify programmatic roll-out of iron supplementation. Whether improved nutrition would increase immunity to lower genital tract infections is an area for future research.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- BIS: Body iron stores
- sTfR: Serum transferrin receptor
- BMI: Body mass index
- MUAC: Mid-upper-arm-circumference
- Lf: Lactoferrin
- HIV: Human immunodeficiency virus
- BV: Bacterial vaginosis
- Px: Prescription of medication
- FIN: End assessment
- ANC1: First antenatal care visit
- CRP: C-reactive protein
- ELISA: Enzyme-linked immunosorbent assay
- CI: Confidence intervals
- SD: Standard deviation
- IQR: Inter-quartile range
- NHANES: The National Health and Nutrition Examination Survey
- PALUFER: Malaria-iron randomised iron supplementation trial

References

Paynter S, Ware RS, Sly PD, Williams G, Weinstein P. Seasonal immune modulation in humans: observed patterns and potential environmental drivers. J Infect. 2015;70:1–10. https://doi.org/10.1016/j.jinf.2014.09.006.

Lal A, Hales S, French N, Baker MG. Seasonality in human zoonotic enteric diseases: a systematic review. PLoS One. 2012;(4):e31883. https://doi.org/10.1371/journal.pone.0031883.

Liu B, Taioli E. Seasonal variations of complete blood count and inflammatory biomarkers in the US population - analysis of NHANES data. PLoS One. 2015;10(11):e0142382. https://doi.org/10.1371/journal.pone.0142382.

Muriuki JM, Atkinson SH. How eliminating malaria may also prevent iron deficiency in African children. Pharmaceuticals. 2018;11(4):96. https://doi.org/10.3390/ph11040096.

Frosch AEP, Ondigo BN, Ayodo GA, Vulule JM, John CJ, Cusick SE. Decline in childhood iron deficiency after interruption of malaria transmission in highland Kenya. Am J Clin Nutr. 2014;100(3):968–73. https://doi.org/10.3945/ajcn.114.087114.

Schmidt PJ. Regulation of iron metabolism by hepcidin under conditions of inflammation. J Biol Chem. 2015;290(31):18975–83. https://doi.org/10.1074/jbc.R115.650150.

Prentice AM, Bah A, Jallow MW, Jallow AT, Sanyang S, Sise EA, et al. Respiratory infections drive hepcidin-mediated blockade of iron absorption leading to iron deficiency anemia in African children. Sci Adv. 2019;5:eaav9020. https://doi.org/10.1126/sciadv.aav9020.

Brabin L, Roberts SA, Tinto H, Gies S, Diallo S, Brabin B. Iron status of Burkinabé adolescent girls predicts malaria risk in the following rainy season. Nutrients. 2020;12(5):1446. https://doi.org/10.3390/nu12051446.

Diallo S, Roberts SA, Gies S, Rouamba T, Swinkels D, Geurts-Moespot AJ, et al. Malaria early in the first pregnancy: potential impact of iron status. Clin Nutr. 2020;39(1):204–14. https://doi.org/10.1016/j.clnu.2019.01.016.

Brabin B, Gies S, Roberts SA, Diallo S, Lompo OM, Kazienga A, et al. Excess risk of preterm birth with periconceptional iron supplementation in a malaria endemic area: analysis of secondary data on birth outcomes in a double blind randomized controlled safety trial in Burkina Faso. Malar J. 2019;18(1):161. https://doi.org/10.1186/s12936-019-2797-8.

Weiss G, Werner-Felmayer G, Werner ER, Grunewald K, Wachter H, Hentze MW. Iron regulates nitric oxide synthase activity by controlling nuclear transcription. J Exp Med. 1994;180(3):969–76. https://doi.org/10.1084/jem.180.3.969.

Moya-Alvarez V, Cottrell G, Ouédraogo S, Accrombessi M, Massougbodji A, Cot M. Does iron increase the risk of malaria in pregnancy? Open Forum Infect Dis. 2015;2(2):ofv038. https://doi.org/10.1093/ofid/ofv038.

Gies S, Diallo S, Roberts SA, Kazienga A, Powney M, Brabin L, et al. Effects of weekly iron and folic acid supplements on malaria risk in nulliparous women in Burkina Faso: a periconceptional double-blind randomized controlled noninferiority trial. J Infect Dis. 2018;218(7):1099–109. https://doi.org/10.1093/infdis/jiy257.

Roberts SA, Brabin L, Diallo S, Gies S, Nelson A, Stewart C, et al. Mucosal lactoferrin response to genital tract infections is associated with iron and nutritional biomarkers in young Burkinabé women. Eur J Clin Nutr. 2019;73(11):1464–72. https://doi.org/10.1038/s41430-019-0444-7.

Brabin L, Roberts SA, Gies S, Diallo S, Stewart CL, Kazienga A, et al. Effects of long-term weekly iron and folic acid supplementation on lower genital tract infection: a double blind, randomized controlled trial in Burkina Faso. BMC Med. 2017;15(1):206. https://doi.org/10.1186/s12916-017-0967-5.

Reiner RC Jr, Geary M, Atkinson PM, Smith DL, Gething PW. Seasonality of Plasmodium falciparum transmission: a systematic review. Malar J. 2015;14:343. https://doi.org/10.1186/s12936-015-0849-2.

Mohandas N, Hillyer CD. The iron fist: malaria and hepcidin. Blood. 2014;123(21):3217–8. https://doi.org/10.1182/blood-2014-04-562223.

https://weatherspark.com/y/40160/Average-Weather-in-Ouagadougou-Burkina-Faso-Year-Round#Sections-Precipitation. Accessed 6 July 2021.

Becquey E, Delpeuch F, Konaté AM, Delsol H, Lange M, Zoungrana M, et al. Seasonality of the dietary dimension of household food security in urban Burkina Faso. Br J Nutr. 2012;107(12):1860–70. https://doi.org/10.1017/S0007114511005071.

Gies S, Roberts SA, Diallo S, Lompo OM, Tinto H, Brabin BJ. Risk of malaria in young children after periconceptional iron supplementation. Mat Child Nutr. 2020;25(2):e13106. https://doi.org/10.1111/mcn.13106.

Namaste SM, Rohner F, Huang JJ, Bhushan NL, Flores-Ayala R, Kupka R, et al. Adjusting ferritin concentrations for inflammation: biomarkers reflecting inflammation and nutritional determinants of anemia (BRINDA) project. Am J Clin Nutr. 2017;106(Suppl 1):359S–71S. https://doi.org/10.3945/ajcn.116.141762.

Cook JD, Flowers CH, Skikne BS. The quantitative assessment of body iron. Blood. 2003;101(9):3359–64. PMID: 12521995. https://doi.org/10.1182/blood-2002-10-3071.

Galesloot TE, Vermeulen SH, Geurts-Moespot AJ, Klaver SM, Kroot JJ, van Tienoven D, et al. Serum hepcidin: reference ranges and biochemical correlates in the general population. Blood. 2011;117(25):e218–25. https://doi.org/10.1182/blood-2011-02-337907.

Nairz M, Weiss G. Iron in infection and immunity. Mol Asp Med. 2020;75:100864. https://doi.org/10.1016/j.mam.2020.100864.

Berry I, Walker P, Tagbor H, Coulibaly SO, Kayentao K, Williams J, et al. Seasonal dynamics of malaria in pregnancy in West Africa: evidence for carriage of infections acquired before pregnancy until first contact with antenatal care. Am J Trop Med Hyg. 2018;98(2):534–42. https://doi.org/10.4269/ajtmh.17-0620.

Cohee LM, Opondo C, Clarke SE, Halliday KE, Cano J, Shipper AG, et al. Preventive malaria treatment among school-aged children in sub-Saharan Africa: a systematic review and meta-analysis. Lancet Glob Health. 2020;8:e1499–511. https://doi.org/10.1016/S2214-109X(20)30325-9.

Clarke SE, Rouhani S, Diarra S, Saye R, Bamadio M, Jones R, et al. Impact of a malaria intervention package in schools on plasmodium infection, anaemia and cognitive function in schoolchildren in Mali: a pragmatic cluster-randomised trial. BMJ Glob Health. 2017;2(2):e000182. https://doi.org/10.1136/bmjgh-2016-000182.

Bundy DAP, de Silva N, Horton S, Patton GC, Schultz L, Jamison DT. Disease control Priorities-3, child and adolescent health and development authors group. Investment in child and adolescent health and development: key messages from disease control priorities, 3rd edition. Lancet. 2018;391(10121):687–99. https://doi.org/10.1016/S0140-6736(17)32417-0.

Burkina Faso: Secondary school enrolment. https://www.theglobaleconomy.com/Burkina-Faso/Secondary_school_enrollment/

World Health Organization. WHO Guidelines for Malaria. Geneva: WHO/UCN/GMP/2021.01; 2021.

Partnership ACCESS-SMC. Effectiveness of seasonal malaria chemoprevention at scale in west and Central Africa: an observational study. Lancet. 2020;396(10265):1829–40. https://doi.org/10.1016/S0140-6736(20)32227-3.

McGready R, Nosten F, Barnes KI, Mokuolu O, White NJ. Why is WHO failing women with falciparum malaria in the first trimester of pregnancy? Lancet. 2020;395(10226):779. https://doi.org/10.1016/S0140-6736(20)30161-6.

Saito M, Gilder ME, McGready R, Nosten F. Antimalarial drugs for treating and preventing malaria in pregnant and lactating women. Expert Opin Drug Saf. 2018;17(11):1129–44. https://doi.org/10.1080/14740338.2018.1535593.

Cercamondi CI, Egli IM, Ahouandjinou E, Dossa R, Zeder C, Salami L, et al. Afebrile plasmodium falciparum parasitemia decreases absorption of fortification iron but does not affect systemic iron utilization: a double stable-isotope study in young Beninese women. Am J Clin Nutr. 2010;92(6):1385–92. https://doi.org/10.3945/ajcn.2010.30051.

Cook JD. Defining optimal body iron. Proc Nutr Soc. 1999;58(2):489–95. https://doi.org/10.1017/S0029665199000634.

World Health Organization. Essential nutrition actions: mainstreaming nutrition through the life course. 2019. ISBN 978–92–4-151585.

Arsenault JE, Nikiema L, Allemand P, Ayassou KA, Lanou H, Moursi M, et al. Seasonal differences in food and nutrient intakes among young children and their mothers in rural Burkina Faso. J Nutr Sci. 2014;3:e55. https://doi.org/10.1017/jns.2014.53.

Martin-Prevel Y, Allemand P, Nikiema L, Ayassou KA, Ouedraogo G, Moursi M, et al. Biological status and dietary intake of iron, zinc and vitamin A among women and preschool children in rural Burkina Faso. PLoS One. 2016;11(3):e0146810. https://doi.org/10.1371/journal.pone.0146810.

Martinez P, Røislien J, Naidoo N, Clausen T. Alcohol abstinence and drinking among African women: data from the World Health Surveys. BMC Public Health. 2011;11:160. https://doi.org/10.1186/1471-2458-11-160.

Lyumugabe F, Gros J, Nzungize J, Bajyana E, Thonart P. Characteristics of African traditional beers brewed with sorghum malt: a review. Biotechnol Agron Soc Environ. 2012;16:509–30.

Rosa L, Cutone A, Lepanto MS, Paesano R, Valenti P. Lactoferrin: a natural glycoprotein involved in iron and inflammatory homeostasis. Int J Mol Sci. 2017;18(9):1985. https://doi.org/10.3390/ijms18091985.

Lassi ZS, Moin A, Das JK, Salam RA, Bhutta ZA. Systematic review on evidence-based adolescent nutrition interventions. Ann N Y Acad Sci. 2017;1393(1):34–50. https://doi.org/10.1111/nyas.13335.

Brander M, Bernauer T, Huss M. Improved on-farm storage reduces seasonal food insecurity of smallholder farmer households – Evidence from a randomized control trial in Tanzania. Food Policy. 2021;98:101891. https://doi.org/10.1016/j.foodpol.2020.101891.

Acknowledgments

The study on which these data are based was a collaborative effort of many individuals involved in field work, data management, laboratory tests, and data monitoring (13). We thank the PALUFER Research Team, the community field workers who worked to ensure good practice and acceptability, the Department of Laboratory Medicine, Radboud University Nijmegen Medical Center, Nijmegen, The Netherlands for hepcidin assays and Manchester University NHS Foundation Trust Microbiology laboratories for slide reading and quality control.

Funding

The original trial was funded by the National Institutes of Health, the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NIH-1U01HD061234-01A1), and the NIH Office of Dietary Supplements (−05S1 and 02S2). The funders played no role in the study design; in the collection, analysis, and interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Author information

Contributions

SR was responsible for statistical analysis and data interpretation. LB wrote the original and subsequent drafts of the paper and provided the focus on adolescent health. BB was the Principal Investigator for the PALUFER trial, conceived and drafted the methodology for this seasonal analysis, and was a major contributor to data interpretation and writing. SG was the clinician responsible for delivery and supervision of the PALUFER trial in Burkina Faso. HT was Director of the Research Unit in Nanoro and provided the required infrastructure for the PALUFER trial. SD carried out the laboratory investigations in Burkina Faso. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The clinical protocol was approved by the Liverpool School of Tropical Medicine, UK, Research Ethics Committee (LSTM/REC); the Institutional Review Board of the Institute of Tropical Medicine, Antwerp, Belgium (IRB/ITM); the Antwerp University Hospital Ethics Committee (EC/UZA); the Institutional Ethics Committee of Centre Muraz (Comité d’Ethique Institutionnel du Centre Muraz, CEI/CM); and the National Ethics Committee (Comité Ethique pour la Recherche en Santé, CERS) in Burkina Faso.

All methods were carried out in accordance with relevant guidelines and regulations. Prior to enrolment, the study team visited each village to inform village elders and senior women about the trial objectives and to seek permission to invite young women to take part. Informed consent with the right to withdraw (signature or thumb print) was granted by each participant, or by her appointed guardian if she was a minor or married at recruitment. The consent procedure was repeated for participants who continued to be followed in the pregnant cohort.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests as defined by BMC, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Background to the PALUFER safety trial of periconceptional iron supplementation.

Additional file 2.

Resumé of laboratory methods and placental histology for chorioamnionitis.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Roberts, S.A., Brabin, L., Tinto, H. et al. Seasonal patterns of malaria, genital infection, nutritional and iron status in non-pregnant and pregnant adolescents in Burkina Faso: a secondary analysis of trial data. BMC Public Health 21, 1764 (2021). https://doi.org/10.1186/s12889-021-11819-0

DOI: https://doi.org/10.1186/s12889-021-11819-0