Abstract

Background

Systematic reviews should inform American Academy of Ophthalmology (AAO) Preferred Practice Pattern® (PPP) guidelines. The quality of systematic reviews related to the forthcoming PPP guideline, Refractive Errors & Refractive Surgery, is unknown. We sought to identify reliable systematic reviews to assist the AAO in updating the Refractive Errors & Refractive Surgery PPP.

Methods

Systematic reviews were eligible if they evaluated the effectiveness or safety of interventions included in the 2012 PPP Refractive Errors & Refractive Surgery. To identify potentially eligible systematic reviews, we searched the Cochrane Eyes and Vision United States Satellite database of systematic reviews. Two authors independently identified eligible reviews and abstracted information about the characteristics and quality of the reviews using the Systematic Review Data Repository. We classified systematic reviews as “reliable” when they (1) defined criteria for the selection of studies, (2) conducted comprehensive literature searches for eligible studies, (3) assessed the methodological quality (risk of bias) of the included studies, (4) used appropriate methods for meta-analyses (which we assessed only when meta-analyses were reported), and (5) presented conclusions that were supported by the evidence provided in the review.

Results

We identified 124 systematic reviews related to refractive error; 39 met our eligibility criteria, of which we classified 11 to be reliable. Systematic reviews classified as unreliable did not define the criteria for selecting studies (5; 13%), did not assess methodological rigor (10; 26%), did not conduct comprehensive searches (17; 44%), or used inappropriate quantitative methods (3; 8%). The 11 reliable reviews were published between 2002 and 2016. They included 0 to 23 studies (median = 9) and analyzed 0 to 4696 participants (median = 666). Seven reliable reviews (64%) assessed surgical interventions.

Conclusions

Most systematic reviews of interventions for refractive error are of low methodological quality. Following widely accepted guidance, such as Cochrane or Institute of Medicine standards for conducting systematic reviews, would contribute to improved patient care and inform future research.

Background

Systematic reviews of interventions are used to inform clinical guidelines [1], to set research priorities [2], and to help patients and clinicians make healthcare decisions. Well-conducted systematic reviews focus on clear research questions and use reproducible methods to identify, select, describe, and synthesize information from relevant studies [3]. Such reviews can reduce uncertainty about the effectiveness of interventions, lead to faster adoption of safe and effective interventions, and identify research needs. On the other hand, poorly-conducted systematic reviews may be harmful if they contribute to suboptimal patient care or promote unnecessary research.

The number of systematic reviews published each year continues to increase throughout medicine, yet the quality of systematic reviews is highly variable [2, 4]. To be considered reliable, systematic reviews must be conducted using methods that minimize bias and error in the review process, and must be reported completely and transparently [3].

Because refractive error is the leading cause of visual impairment globally [5], effective interventions to correct refractive error are important to patients and to optometrists and ophthalmologists. The American Academy of Ophthalmology (AAO) updated their Preferred Practice Pattern (PPP) for Refractive Errors & Refractive Surgery in 2017. To assist the AAO in this task, Cochrane Eyes and Vision @ United States (CEV@US) investigators sought to identify reliable systematic reviews about interventions for refractive error using a database of systematic reviews in eyes and vision [6].

Methods

Systematic reviews were eligible for this project if they evaluated the effectiveness or safety of interventions for refractive error that were included in the AAO’s 2012 PPP Refractive Errors & Refractive Surgery [7]. We excluded systematic reviews related to cataract removal with implantation of an intraocular lens for correcting refractive error because this topic is covered in another PPP. We included all reports that claimed to be systematic reviews. Otherwise, we defined a systematic review as “a scientific investigation that focuses on a specific question and uses explicit, pre-specified scientific methods to identify, select, assess, and summarize similar but separate studies” [8]. Consistent with this definition, eligible reviews were not required to include meta-analyses. Whenever a systematic review had been updated since the initial publication, we reviewed the most recent update.

CEV@US maintains a database of systematic reviews related to vision research and eye care (see Online Additional file 1 for search strategies used to identify reviews). We conducted an initial search of PubMed and Embase in 2007, and we updated the search in 2009, 2012, 2014, and on May 15, 2016 [9]. Two individuals identified systematic reviews related to vision research and eye care. For the 2007, 2009, and 2012 searches, two people identified relevant refractive error systematic reviews independently. For the 2014 and 2016 searches, one person identified potentially eligible refractive error reviews, then a second person verified that the reports were eligible. Differences were resolved through discussion that sometimes included another team member.

We developed a data abstraction form that included components of the Critical Appraisal Skills Programme (CASP) [10], the Assessment of Multiple Systematic Reviews (AMSTAR) [11], and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [12]. We adapted a version of the form used in previous studies [4, 13] (see Online Additional file 2 for a copy of the electronic form). In addition to the results presented in this paper, we recorded other descriptive information about the reviews. We entered data electronically using the Systematic Review Data Repository (SRDR) [14].

Two authors (EMW and SN) abstracted data independently from eligible systematic reviews and resolved discrepancies through discussion. We classified systematic reviews as “reliable” when the systematic reviewers had (1) defined criteria for the selection of studies, (2) conducted comprehensive literature searches for eligible studies, (3) assessed the methodological quality (risk of bias) of the included studies, (4) used appropriate methods for meta-analyses (which we assessed only when meta-analyses were reported), and (5) presented conclusions that were supported by the evidence provided in the review. We considered a systematic review “unreliable” when one or more of these criteria were not met.
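The classification rule described above can be written as a short decision function. The following is a minimal sketch in Python, not the abstraction form the authors used; the class name `ReviewAssessment`, its field names, and the function `classify` are hypothetical labels introduced here for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewAssessment:
    """Hypothetical record of the five reliability criteria for one review."""
    defined_selection_criteria: bool
    comprehensive_search: bool
    assessed_risk_of_bias: bool
    # None means the review reported no meta-analysis, so criterion 4 is not assessed.
    appropriate_meta_analysis: Optional[bool]
    conclusions_supported: bool


def classify(a: ReviewAssessment) -> str:
    """Return "reliable" only when every assessed criterion is met."""
    criteria = [
        a.defined_selection_criteria,
        a.comprehensive_search,
        a.assessed_risk_of_bias,
        a.conclusions_supported,
    ]
    if a.appropriate_meta_analysis is not None:
        criteria.append(a.appropriate_meta_analysis)
    return "reliable" if all(criteria) else "unreliable"
```

Under this sketch, a review without a meta-analysis is judged on the remaining four criteria, and a single unmet criterion is enough to classify a review as unreliable.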

Results

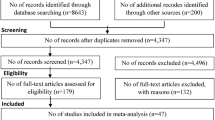

Of 124 systematic reviews in our database that were classified as related to refractive error, 39 met our eligibility criteria (Fig. 1). Systematic reviews that met our inclusion criteria were published between 1996 and 2016 (median = 2011). They included between 0 and 309 studies (median = 9.5). In 13/39 (33%) systematic reviews, we could not ascertain how many participants contributed data; the 26 remaining reviews included data from 0 to 9336 participants.

Of the 39 systematic reviews, 22 (56%) assessed surgical interventions, including reviews of LASEK or LASIK (18; 46%) and intraocular lenses (4; 10%). Other reviews assessed orthokeratology (10; 26%), atropine (2; 5%), monovision (1; 3%), contact lenses (1; 3%), spectacles (1; 3%), and multiple interventions (2; 5%). Participants with myopia were included in 30 (77%) reviews. Reviews also included participants with astigmatism (15; 38%), hyperopia (10; 26%), and presbyopia (3; 8%).

Of the 39 eligible systematic reviews, 11 (28%) were classified as reliable [15,16,17,18,19,20,21,22,23,24,25], and 28 (72%) were classified as unreliable (Fig. 2) [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53]. One of the reliable reviews did not include any studies. All 11 reliable systematic reviews assessed interventions for myopia, either to slow progression of myopia in children (5 reviews), to correct myopia surgically (5 reviews), or to treat choroidal neovascularization secondary to pathologic myopia (1 review). The 11 reliable systematic reviews were published between 2002 and 2016, included data from 0 to 23 studies (median = 9), and analyzed data for 0 to 4696 participants (median = 666). Of the 11 reliable systematic reviews, 7 (64%) assessed surgical interventions, and these reviews included some of the same studies. Different groups of reviewers reached contradictory conclusions about the safety and effectiveness of LASEK compared with PRK (Table 1).

The 28 systematic reviews that we classified as unreliable were published between 1996 and 2016 (see Online Additional file 3 for the data extracted for all included systematic reviews) [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53]. Unreliable systematic reviews did not define the criteria for selecting studies (5; 13%), did not assess methodological quality of the individual studies (10; 26%), did not conduct comprehensive literature searches for eligible studies (17; 44%), or used inappropriate quantitative methods, such as combining randomized and non-randomized studies for meta-analysis (3; 8%). All systematic reviews that presented conclusions not supported by the data were also classified as unreliable for another reason (20; 51%).
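The percentages in this paragraph appear to use all 39 eligible reviews as the denominator, not the 28 unreliable reviews. As a quick check, the sketch below recomputes them in Python from the counts reported in the text; the dictionary keys and the helper `percent` are shorthand introduced here for illustration.

```python
ELIGIBLE_REVIEWS = 39  # denominator assumed for the percentages reported in the text

# Counts as reported in the Results; the key names are shorthand labels only.
unmet_criteria = {
    "no study selection criteria": 5,
    "no quality assessment of included studies": 10,
    "no comprehensive search": 17,
    "inappropriate quantitative methods": 3,
    "conclusions not supported": 20,
}


def percent(count: int, total: int = ELIGIBLE_REVIEWS) -> int:
    """Percentage of eligible reviews, rounded to the nearest whole number."""
    return round(100 * count / total)


percentages = {label: percent(n) for label, n in unmet_criteria.items()}
for label, value in percentages.items():
    print(f"{label}: {unmet_criteria[label]}/{ELIGIBLE_REVIEWS} = {value}%")
```

The first four counts sum to 35, which exceeds the 28 unreliable reviews, so at least some reviews failed more than one criterion.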

Discussion

Our investigation revealed that most systematic reviews about interventions for refractive error published to date have been of low methodological quality; consequently, these reviews may result in inappropriate decisions about clinical care and future research. Some of the shortcomings we identified were related to reporting deficiencies. For example, authors of some reviews did not report criteria for selecting studies. Also, many authors did not report essential characteristics of the included studies, such as sample sizes. Other shortcomings were related to both poor reporting and poor conduct. Our findings are thus consistent with evidence that most systematic reviews published to date have been neither conducted nor reported following best practices [54, 55].

Although visual impairment due to uncorrected refractive error is particularly prevalent in low-income countries [5], most of the systematic reviews included in our study addressed interventions not widely available in those countries. Systematic reviewers may have focused on interventions of interest to decision-makers in high-income countries, and there may be few randomized trials of interventions suitable for use in low-income countries and thus available for inclusion in systematic reviews. Although we classified the systematic review reported by Pearce [29] as unreliable, it is the exception among the 39 systematic reviews for having dealt with an intervention with wide applicability.

A comprehensive and reproducible literature search lays the foundation for a high-quality systematic review. To be transparent and reproducible, systematic reviews should list all information sources searched, the exact search terms used, the operators used to combine terms, and the dates of the searches [56]; however, many systematic reviews of interventions for refractive error did not describe the search methods for identifying eligible studies. Moreover, the number of citations retrieved in many systematic reviews was so small that a knowledgeable reader might suspect the search methods were not sensitive. As a rule of thumb, systematic reviewers can expect to retrieve and review about 200 citations (titles and abstracts) and 5 full-text reports to identify one study eligible for inclusion in a systematic review [57].
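The rule of thumb above is simple arithmetic, and the sketch below applies it in Python. The ratios of 200 citations and 5 full-text reports per included study come from the text [57]; the function name `expected_screening_workload` and the constant names are hypothetical, and the result is a rough heuristic, not a validated threshold.

```python
CITATIONS_PER_INCLUDED_STUDY = 200  # titles and abstracts, per the rule of thumb [57]
FULL_TEXTS_PER_INCLUDED_STUDY = 5   # full-text reports, per the rule of thumb [57]


def expected_screening_workload(included_studies: int) -> dict:
    """Rough number of records a sensitive search would be expected to yield."""
    if included_studies < 0:
        raise ValueError("included_studies must be non-negative")
    return {
        "citations": included_studies * CITATIONS_PER_INCLUDED_STUDY,
        "full_texts": included_studies * FULL_TEXTS_PER_INCLUDED_STUDY,
    }


# A review that includes 9 studies (the median among the reliable reviews here).
workload = expected_screening_workload(9)
print(workload)  # {'citations': 1800, 'full_texts': 45}
```

By this rough measure, a review that includes 9 studies but reports screening only a few hundred citations would fall well short of expectation, which is the kind of signal that might lead a reader to question the sensitivity of the search.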

Reviewers should describe the methodological quality of included studies using a method such as the Cochrane Risk of Bias Tool to identify potential sources of bias that could influence the credibility of results of individual studies and hence systematic reviews and meta-analysis [58, 59]. Many reviews did not mention having assessed the methodological quality of individual studies, and some reviews specified methods that are known to be unreliable (e.g., the Jadad Scale) [60]. Quality scores are problematic because they are inconsistent and lack validity; the same study may be assessed as excellent using one scoring method but poorly using another scoring method [61].

Finally, authors of three systematic reviews used methods for data synthesis that were inappropriate. Of particular concern are reviews in which outcomes from randomized controlled trials were combined with outcomes from observational studies in the same meta-analysis. For a research question on the effectiveness of an intervention, randomized controlled trials, by design, have more protection against bias than observational studies. When both types of studies are available, systematic reviewers should justify the reasons for relying on observational data as a substitute for or a complement to data from randomized trials. It is important to understand and to appraise the strengths and weaknesses of observational data when they are used to assess the effectiveness of interventions. When dissimilar studies are combined in meta-analysis, the resulting estimates of intervention effects might be meaningless.
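One way to avoid mixing designs is to pool estimates separately for randomized and observational studies. The sketch below illustrates this in Python using a generic inverse-variance fixed-effect estimator, a standard textbook method that is not necessarily what any review assessed here used. The `Study` class, the function names, and all numbers are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import sqrt


@dataclass
class Study:
    """Hypothetical study-level result; all values below are invented."""
    name: str
    design: str       # "rct" or "observational"
    effect: float     # e.g., a mean difference
    std_error: float  # standard error of the effect


def pool_fixed_effect(studies):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / s.std_error ** 2 for s in studies]
    total = sum(weights)
    pooled = sum(w * s.effect for w, s in zip(weights, studies)) / total
    return pooled, sqrt(1.0 / total)


def pool_by_design(studies):
    """Pool each study design separately rather than mixing designs."""
    groups = defaultdict(list)
    for s in studies:
        groups[s.design].append(s)
    return {design: pool_fixed_effect(group) for design, group in groups.items()}


studies = [
    Study("Trial A", "rct", 0.10, 0.05),
    Study("Trial B", "rct", 0.20, 0.05),
    Study("Cohort C", "observational", 0.60, 0.10),
]
results = pool_by_design(studies)
for design, (estimate, se) in results.items():
    print(f"{design}: estimate = {estimate:.3f}, SE = {se:.3f}")
```

Keeping the designs in separate groups makes any divergence between randomized and observational estimates visible instead of averaging it away in a single pooled number.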

Systematic reviews about interventions for refractive error assessed only a few distinct interventions, a finding consistent with other evidence that systematic reviews are duplicative throughout medicine [62]. After we completed our review and shared the results with the AAO PPP panel, the panel informed us that one reliable systematic review was not relevant because it examined choroidal neovascularization and laser treatment in high myopia, which is considered a retina topic [24]. Furthermore, some of the reliable reviews included only a few small studies and thus provided inconclusive evidence about the effectiveness and safety of the targeted interventions. Although it is possible for reviews to use pre-specified methods and reach different conclusions (e.g., because of differences in outcomes or inclusion criteria), we find it concerning that some of the reliable reviews reached inconsistent conclusions about the same interventions, even though they included some of the same studies.

We searched for systematic reviews using PubMed and Embase, and we might have missed systematic reviews that are not indexed in these databases. It seems unlikely that systematic reviews in non-indexed journals would be of better quality than the reviews we identified, and their inclusion in our study would have been unlikely to lead us to a different conclusion. More high-quality evidence is needed to inform clinical guidelines and to improve patient care for this common problem.

Journal editors could help improve the quality of systematic reviews by adopting four requirements. First, editors should require that authors of systematic reviews publish or provide protocols for their reviews that adhere to current best practices (e.g., the Preferred Reporting Items for Systematic Review and Meta-analysis Protocols (PRISMA-P) statement [63]). Second, editors should require that all reports of systematic reviews include the information in the PRISMA statement [64], which should be documented in a completed PRISMA checklist that accompanies every systematic review manuscript and is confirmed by a peer reviewer or a member of the editorial team. By considering only those manuscripts that adhere to these requirements, editors could ensure that readers have the information needed to assess the methodological rigor of systematic reviews and to decide whether to apply their findings to practice. Third, to reduce the publication of reviews that are essentially redundant (i.e., reviews that include the same studies), editors should require that authors explain how their systematic reviews differ from other reviews of the same interventions or, if the systematic reviews are not different, editors should require that authors reference existing systematic reviews about the same topic. Finally, manuscripts that report systematic reviews should be reviewed by people knowledgeable about both systematic reviews and the clinical specialties that the reviews concern. Eight ophthalmology and optometry journals now have editors for systematic reviews, which may improve the quality of reviews in those journals (http://eyes.cochrane.org/associate-editors-eyes-and-vision-journals).

Conclusions

In conclusion, systematic reviews about interventions for refractive error could benefit patient care and avoid redundant research if the authors of such reviews followed widely accepted guidance, such as Cochrane or Institute of Medicine standards for conducting systematic reviews [3, 65]. The reliable systematic reviews we identified may be useful in updating the AAO PPP and in future updates of the American Optometric Association’s Evidence-Based Clinical Practice Guidelines.

References

Institute of Medicine. Clinical practice guidelines we can trust. Washington, DC: The National Academies Press; 2011.

Li T, Vedula SS, Scherer R, Dickersin K. What comparative effectiveness research is needed? A framework for using guidelines and systematic reviews to identify evidence gaps and research priorities. Ann Intern Med. 2012;156(5):367–77. https://doi.org/10.7326/0003-4819-156-5-201203060-00009.

Institute of Medicine. Finding what works in health care: standards for systematic reviews. Washington, DC: The National Academies Press; 2011.

Lindsley K, Li T, Ssemanda E, Virgili G, Dickersin K. Interventions for age-related macular degeneration: are practice guidelines based on systematic reviews? Ophthalmology. 2016;123(4):884–97. https://doi.org/10.1016/j.ophtha.2015.12.004.

Naidoo KS, Leasher J, Bourne RR, Flaxman SR, Jonas JB, Keeffe J, et al. Global vision impairment and blindness due to uncorrected refractive error, 1990-2010. Optom Vis Sci. 2016;93(3):227–34. https://doi.org/10.1097/OPX.0000000000000796.

Li T. Register systematic reviews. CMAJ. 2010;182(8):805. https://doi.org/10.1503/cmaj.110-2064.

American Academy of Ophthalmology. Refractive errors and refractive surgery. San Francisco; 2012. https://www.aao.org/preferred-practice-pattern/refractive-errors--surgery-ppp-2013.

Eden J, Levit L, Berg A, Morton S. Finding what works in health care. Washington, D.C.: National Academies Press; 2011.

Li T, Ervin AM, Scherer R, Jampel H, Dickersin K. Setting priorities for comparative effectiveness research: a case study using primary open-angle glaucoma. Ophthalmology. 2010;117(10):1937–45. https://doi.org/10.1016/j.ophtha.2010.07.004.

Critical Appraisal Skills Programme (CASP). Critical appraisal skills programme (CASP) [internet]. 2015 [cited 10 Nov 2015]. Available from: http://www.casp-uk.net/casp-tools-checklists.

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. https://doi.org/10.1186/1471-2288-7-10.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9, W64.

Yu T, Li T, Lee KJ, Friedman DS, Dickersin K, Puhan MA. Setting priorities for comparative effectiveness research on management of primary angle closure: a survey of Asia-Pacific clinicians. J Glaucoma. 2015;24(5):348–55. https://doi.org/10.1097/IJG.0b013e31829e5616.

Li T, Vedula SS, Hadar N, Parkin C, Lau J, Dickersin K. Innovations in data collection, management, and archiving for systematic reviews. Ann Intern Med. 2015;162(4):287–94. https://doi.org/10.7326/M14-1603.

Barsam A, Allan BDS. Excimer laser refractive surgery versus phakic intraocular lenses for the correction of moderate to high myopia. Cochrane Database Syst Rev. 2014; https://doi.org/10.1002/14651858.CD007679.pub4.

Li S-M, Zhan S, Li S-Y, Peng X-X, Hu J, Law HA, et al. Laser-assisted subepithelial keratectomy (LASEK) versus photorefractive keratectomy (PRK) for correction of myopia. Cochrane Database Syst Rev. 2016;2:CD009799. https://doi.org/10.1002/14651858.CD009799.pub2.

Li SM, Wu SS, Kang MT, Liu Y, Jia SM, Li SY, et al. Atropine slows myopia progression more in Asian than white children by meta-analysis. Optom Vis Sci. 2014;91(3):342–50. https://doi.org/10.1097/opx.0000000000000178.

Shortt AJ, Allan BDS, Evans JR. Laser-assisted in-situ keratomileusis (LASIK) versus photorefractive keratectomy (PRK) for myopia. Cochrane Database Syst Rev. 2013;1:CD005135. https://doi.org/10.1002/14651858.CD005135.pub3.

Settas G, Settas C, Minos E, Yeung IYL. Photorefractive keratectomy (PRK) versus laser assisted in situ keratomileusis (LASIK) for hyperopia correction. Cochrane Database Syst Rev. 2012;6:CD007112. https://doi.org/10.1002/14651858.CD007112.pub3.

Walline JJ, Lindsley K, Vedula SS, Cotter SA, Mutti DO, Twelker JD. Interventions to slow progression of myopia in children. Cochrane Database Syst Rev. 2011;12:CD004916. https://doi.org/10.1002/14651858.CD004916.pub3.

Wei ML, Liu JP, Li N, Liu M. Acupuncture for slowing the progression of myopia in children and adolescents. Cochrane Database Syst Rev. 2011; https://doi.org/10.1002/14651858.CD007842.pub2.

Li SM, Ji YZ, Wu SS, Zhan SY, Wang B, Liu LR, et al. Multifocal versus single vision lenses intervention to slow progression of myopia in school-age children: a meta-analysis. Surv Ophthalmol. 2011;56(5):451–60. https://doi.org/10.1016/j.survophthal.2011.06.002.

Shortt AJ, Allan BDS. Photorefractive keratectomy (PRK) versus laser-assisted in-situ keratomileusis (LASIK) for myopia. Cochrane Database Syst Rev. 2006;2:CD005135.

Virgili G, Menchini F. Laser photocoagulation for choroidal neovascularisation in pathologic myopia. Cochrane Database Syst Rev. 2005;4:CD004765.

Saw SM, Shih-Yen EC, Koh A, Tan D. Interventions to retard myopia progression in children: an evidence-based update. Ophthalmology. 2002;109(3):415–21.

Liu YM, Xie P. The safety of orthokeratology - a systematic review. Eye and Contact Lens. 2016;42(1):35–42.

Sun Y, Xu F, Zhang T, Liu M, Wang D, Chen Y, et al. Orthokeratology to control myopia progression: a meta-analysis. PLoS One. 2015;10(4):e0124535.

Si JK, Tang K, Bi HS, Guo DD, Guo JG, Wang XR. Orthokeratology for myopia control: a meta-analysis. Optom Vis Sci. 2015;92(3):252–7. https://doi.org/10.1097/opx.0000000000000505.

Pearce MG. Clinical outcomes following the dispensing of ready-made and recycled spectacles: a systematic literature review. Clin Exp Optom. 2014;97(3):225–33.

Wen D, Huang J, Li X, Savini G, Feng Y, Lin Q, Wang Q. Laser-assisted subepithelial keratectomy versus epipolis laser in situ keratomileusis for myopia: a meta-analysis of clinical outcomes. Clin Exp Ophthalmol. 2014;42(4):323–33.

Chen S, Feng Y, Stojanovic A, Jankov MR 2nd, Wang Q. IntraLase femtosecond laser vs mechanical microkeratomes in LASIK for myopia: a systematic review and meta-analysis. J Refract Surg. 2012;28(1):15–24. https://doi.org/10.3928/1081597x-20111228-02.

Zhang ZH, Jin HY, Suo Y, Patel SV, Montes-Mico R, Manche EE, et al. Femtosecond laser versus mechanical microkeratome laser in situ keratomileusis for myopia: Metaanalysis of randomized controlled trials. J Cataract Refract Surg. 2011;37(12):2151–9. https://doi.org/10.1016/j.jcrs.2011.05.043.

Feng YF, Chen SH, Stojanovic A, Wang QM. Comparison of clinical outcomes between ‘on-flap’ and ‘off-flap’ epi-LASIK for myopia: a meta-analysis. Ophthalmologica. 2012;227(1):45–54. https://doi.org/10.1159/000331280.

Feng Y, Yu J, Wang Q. Meta-analysis of wavefront-guided vs. wavefront-optimized LASIK for myopia. Optom Vis Sci. 2011;88(12):1463–9. https://doi.org/10.1097/OPX.0b013e3182333a50.

Fares U, Suleman H, Al-Aqaba MA, Otri AM, Said DG, Dua HS. Efficacy, predictability, and safety of wavefront-guided refractive laser treatment: metaanalysis. J Cataract Refract Surg. 2011;37(8):1465–75.

Fernandes P, Gonzalez-Meijome JM, Madrid-Costa D, Ferrer-Blasco T, Jorge J, Montes-Mico R. Implantable collamer posterior chamber intraocular lenses: a review of potential complications. J Refract Surg. 2011;27(10):765–76.

Song YY, Wang H, Wang BS, Qi H, Rong ZX, Chen HZ. Atropine in ameliorating the progression of myopia in children with mild to moderate myopia: a meta-analysis of controlled clinical trials. J Ocul Pharmacol Ther. 2011;27(4):361–8.

Chen SH, Feng YF, Stojanovic A, Wang QM. Meta-analysis of clinical outcomes comparing surface ablation for correction of myopia with and without 0.02% Mitomycin C. J Refract Surg. 2011;27(7):530–41. https://doi.org/10.3928/1081597x-20110112-02.

Chen L, Ye T, Yang X. Evaluation of the long-term effects of photorefractive keratectomy correction for myopia in China. Eur J Ophthalmol. 2011;21(4):355–62.

Zhao LQ, Wei RL, Cheng JW, Li Y, Cai JP, Ma XY. Meta-analysis: clinical outcomes of laser-assisted subepithelial keratectomy and photorefractive keratectomy in myopia. Ophthalmology. 2010;117(10):1912–22.

Huang D, Schallhorn SC, Sugar A, Farjo AA, Majmudar PA, Trattler WB, et al. Phakic intraocular lens implantation for the correction of myopia: a report by the American Academy of Ophthalmology. Ophthalmology. 2009;116(11):2244–58. https://doi.org/10.1016/j.ophtha.2009.08.018.

Solomon KD, Fernandez de Castro LE, Sandoval HP, Biber JM, Groat B, Neff KD, et al. LASIK world literature review: quality of life and patient satisfaction. Ophthalmology. 2009;116(4):691–701.

Cui M, Chen XM, Lu P. Comparison of laser epithelial keratomileusis and photorefractive keratectomy for the correction of myopia: a meta-analysis. Chin Med J. 2008;121(22):2331–5.

Szczotka-Flynn L, Diaz M. Risk of corneal inflammatory events with silicone hydrogel and low Dk hydrogel extended contact lens wear: a meta-analysis. Optom Vis Sci. 2007;84(4):247–56.

Varley GA, Huang D, Rapuano CJ, Schallhorn S, Boxer Wachler BS, Sugar A. LASIK for hyperopia, hyperopic astigmatism, and mixed astigmatism: a report by the American Academy of Ophthalmology. Ophthalmology. 2004;111(8):1604–17.

Chang MA, Jain S, Azar DT. Infections following laser in situ keratomileusis: an integration of the published literature. Surv Ophthalmol. 2004;49(3):269–80.

Yang XJ, Yan HT, Nakahori Y. Evaluation of the effectiveness of laser in situ keratomileusis and photorefractive keratectomy for myopia: a meta-analysis. J Med Investig. 2003;50(3–4):180–6.

Sugar A, Rapuano CJ, Culbertson WW, Huang D, Varley GA, Agapitos PJ, et al. Laser in situ keratomileusis for myopia and astigmatism: safety and efficacy: a report by the American Academy of Ophthalmology. Ophthalmology. 2002;109(1):175–87.

Rapuano CJ, Sugar A, Koch DD, Agapitos PJ, Culbertson WW, de Luise VP, et al. Intrastromal corneal ring segments for low myopia: a report by the American Academy of Ophthalmology. Ophthalmology. 2001;108(10):1922–8.

Jain S, Arora I, Azar DT. Success of monovision in presbyopes: review of the literature and potential applications to refractive surgery. Surv Ophthalmol. 1996;40(6):491–9.

Liang GL, Wu J, Shi JT, Liu J, He FY, Xu W. Implantable collamer lens versus iris-fixed phakic intraocular lens implantation to correct myopia: a meta-analysis. PLoS One. 2014;9(8):e104649. https://doi.org/10.1371/journal.pone.0104649.

Kobashi H, Kamiya K, Hoshi K, Igarashi A, Shimizu K. Wavefront-guided versus non-wavefront-guided photorefractive keratectomy for myopia: meta-analysis of randomized controlled trials. PLoS One. 2014;9(7):e103605. https://doi.org/10.1371/journal.pone.0103605.

Zhao LQ, Zhu H, Li LM. Laser-assisted subepithelial keratectomy versus laser in situ keratomileusis in myopia: a systematic review and meta-analysis. ISRN Ophthalmol. 2014;2014:672146. https://doi.org/10.1155/2014/672146.

Page MJ, Shamseer L, Altman DG, Tetzlaff J, Sampson M, Tricco AC, et al. Epidemiology and reporting characteristics of systematic reviews of biomedical research: a cross-sectional study. PLoS Med. 2016;13(5):e1002028. https://doi.org/10.1371/journal.pmed.1002028.

Pussegoda K, Turner L, Garritty C, Mayhew A, Skidmore B, Stevens A, et al. Systematic review adherence to methodological or reporting quality. Syst Rev. 2017;6(1):131. https://doi.org/10.1186/s13643-017-0527-2.

Atkinson KM, Koenka AC, Sanchez CE, Moshontz H, Cooper H. Reporting standards for literature searches and report inclusion criteria: making research syntheses more transparent and easy to replicate. Res Synth Methods. 2015;6(1):87–95. https://doi.org/10.1002/jrsm.1127.

Rosman L, Twose C, Li M, Li T, Saldanha I, Dickersin K. Teaching searching in an intensive systematic review course: “how many citations should I expect to review?” (poster). Quebec City: 21st Cochrane Colloquium; 2013.

Higgins JP, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, et al. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928. https://doi.org/10.1136/bmj.d5928.

Clark HD, Wells GA, Huet C, McAlister FA, Salmi LR, Fergusson D, et al. Assessing the quality of randomized trials: reliability of the Jadad scale. Control Clin Trials. 1999;20(5):448–52.

Juni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282(11):1054–60.

Moher D, Jadad AR, Tugwell P. Assessing the quality of randomized controlled trials. Current issues and future directions. Int J Technol Assess Health Care. 1996;12(2):195–208.

Ioannidis JP. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q. 2016;94(3):485–514. https://doi.org/10.1111/1468-0009.12210.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1. https://doi.org/10.1186/2046-4053-4-1.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097. https://doi.org/10.1371/journal.pmed.1000097.

Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration, 2011. Available from http://handbook.cochrane.org.

Acknowledgements

Kay Dickersin conceived the study with TL. We are grateful to Benjamin Rouse and Barbara Hawkins for identifying systematic reviews related to refractive error. Barbara Hawkins provided comments on the data abstraction form. Kay Dickersin and Barbara Hawkins commented on the manuscript.

Funding

This project was supported by grant 1 U01 EY020522 (PI: Kay Dickersin), National Eye Institute, National Institutes of Health. The sponsor or funding organization had no role in the design or conduct of this research.

Availability of data and materials

Data used for this report are available on the Systematic Review Data Repository (SRDR) and from the authors.

Contributions

TL conceived the study. TL and EMW developed the data extraction form. EMW and SMN extracted the data. EMW analyzed the data and drafted the manuscript. EMW, SMN, RSC, and TL contributed to interpreting the results, reviewed the manuscript, provided critical revisions, and approved the manuscript for publication.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

EMW, SMN, and TL have no financial or other competing interests. RSC chairs the refractive error PPP panel and is a member of the AAO PPP steering committee.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Search strategies for identifying eyes and vision systematic reviews. (DOCX 42 kb)

Additional file 2:

Data extraction form. (PDF 676 kb)

Additional file 3:

Review characteristics. (XLSX 14 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Mayo-Wilson, E., Ng, S.M., Chuck, R.S. et al. The quality of systematic reviews about interventions for refractive error can be improved: a review of systematic reviews. BMC Ophthalmol 17, 164 (2017). https://doi.org/10.1186/s12886-017-0561-9

DOI: https://doi.org/10.1186/s12886-017-0561-9