Abstract

Background

Countries with high TB burden have expanded access to molecular diagnostic tests. However, their impact on reducing delays in TB diagnosis and treatment has not been assessed. Our primary aim was to summarize the quantitative evidence on the impact of nucleic acid amplification tests (NAAT) on diagnostic and treatment delays compared to that of the standard of care for drug-sensitive and drug-resistant tuberculosis (DS-TB and DR-TB).

Methods

We searched MEDLINE, EMBASE, Web of Science, and the Global Health databases (from their inception to October 12, 2020) and extracted time delay data for each test. We then analysed the diagnostic and treatment initiation delay separately for DS-TB and DR-TB by comparing smear vs Xpert for DS-TB and culture drug sensitivity testing (DST) vs line probe assay (LPA) for DR-TB. We conducted random effects meta-analyses of differences of the medians to quantify the difference in diagnostic and treatment initiation delay, and we investigated heterogeneity in effect estimates based on the period the test was used in, empiric treatment rate, HIV prevalence, healthcare level, and study design. We also evaluated methodological differences in assessing time delays.

Results

A total of 45 studies were included in this review (DS-TB = 26; DR-TB = 20; one study contributed data to both). We found considerable heterogeneity in the definition and reporting of time delays across the studies. For DS-TB, the use of Xpert reduced diagnostic delay by 1.79 days (95% CI −0.27 to 3.85), a difference that was not statistically significant, and treatment initiation delay by 2.55 days (95% CI 0.54–4.56) in comparison to sputum microscopy. For DR-TB, the use of LPAs reduced diagnostic delay by 40.09 days (95% CI 26.82–53.37) and treatment initiation delay by 45.32 days (95% CI 30.27–60.37) in comparison to any culture DST methods.

Conclusions

Our findings indicate that the use of World Health Organization-recommended diagnostics for TB reduced delays in diagnosing and initiating TB treatment. Future studies evaluating the performance and impact of diagnostics should consider reporting time delay estimates based on the standardized reporting framework.

Introduction

In the last two decades, there has been a global push to end the tuberculosis (TB) epidemic by setting aggressive targets with the End TB Strategy [1]. Nonetheless, in 2020, there were an estimated 9.9 million TB cases and 1.3 million deaths, of which an estimated 40% went undiagnosed [2]. These missed diagnoses, made worse by the ongoing COVID-19 pandemic, perpetuate transmission and present significant challenges in ending TB [2]. Implementing diagnostic tools that improve detection and reduce diagnostic and treatment delays is critical in overcoming these gaps in TB care [3, 4].

GeneXpert MTB/RIF® and MTB/RIF Ultra® (Xpert) and line probe assays (LPA) are commercial nucleic acid amplification tests (NAATs) that have good diagnostic accuracy and the capacity to diagnose drug-sensitive TB (DS-TB) and drug-resistant TB (DR-TB) within 1–2 days of sample processing [5, 6]. Anticipating improvements in accurate and timely TB diagnosis, the World Health Organization (WHO) recommended these NAATs [7, 8]. Since then, National Tuberculosis Programs (NTPs) across the globe have made unprecedented efforts to scale up these tests and include them in routine TB diagnostic algorithms [9,10,11]. These NAATs have proven to have high accuracy, and research has increasingly focused on studying their actual clinical impact [10, 12,13,14,15,16]. While there are systematic reviews on the diagnostic accuracy of Xpert and LPAs [6, 17, 18], and others that separately describe diagnostic and treatment delays experienced by TB patients [19], no study has summarized the impact of NAATs on reducing time delays in the diagnosis and treatment of TB.

Therefore, the main objective of our systematic review was to summarize the available quantitative evidence on the impact of NAATs on diagnostic and treatment delays compared to that of the standard of care for DS-TB and DR-TB. As a secondary objective, we investigated potential sources of heterogeneity in the effect estimates, including the period the tests were used (pre-2015 vs. post-2015), empiric treatment rate, HIV prevalence, healthcare level, and type of study design (randomized controlled trial vs. observational study design). We also describe methodological areas of concern in assessing time delays, an aspect that has not been adequately addressed in previous systematic reviews of diagnostic delays in TB.

Methods

Study selection criteria and operational definitions

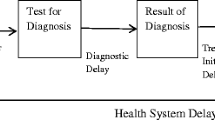

Prior to the review, we developed a conceptual framework for the classification of essential time delay components and definitions [20, 21] (Fig. 1). This framework standardized time delays and provided structural guidance in assessing the time delays reported in the studies included in this review. We defined diagnostic delay as the time from initial patient contact with a clinic, or from sputum collection, to the reporting of results. Treatment delay was defined as the time between results and initiation of anti-TB treatment. The combination of diagnostic delay and treatment delay was referred to as treatment initiation delay.
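The relationship between the three delay components defined above can be illustrated with a minimal sketch. The function name, argument names, and dates below are hypothetical and chosen only for illustration; they are not taken from the review or from any included study.

```python
from datetime import date


def delay_components(first_contact: date, result_reported: date,
                     treatment_start: date) -> dict:
    """Split one patient's care pathway into the three delays, in days.

    first_contact: initial clinic contact or sputum collection (assumed input)
    result_reported: date the test result was reported
    treatment_start: date anti-TB treatment was initiated
    """
    diagnostic = (result_reported - first_contact).days
    treatment = (treatment_start - result_reported).days
    return {
        "diagnostic_delay": diagnostic,
        "treatment_delay": treatment,
        # Treatment initiation delay is the sum of the two components.
        "treatment_initiation_delay": diagnostic + treatment,
    }


# Hypothetical patient: sputum collected 2 March, result reported 4 March,
# treatment started 9 March 2020.
example = delay_components(date(2020, 3, 2), date(2020, 3, 4), date(2020, 3, 9))
```

Stating the start and end points explicitly, as the arguments do here, is what allows delay estimates from different studies to be mapped onto the same component.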

Our review focused on the impact of the World Health Organization (WHO)-recommended rapid diagnostics (WRD), specifically the Xpert® MTB/RIF and MTB/RIF Ultra assays (Xpert) and GenoType MTBDRplus and Inno-LiPA RifTB (both hereafter referred to as LPA), because of their rapid uptake at the global level [2]. Several other tests have been recommended since 2020, but we did not include them in our systematic review because data are still limited [22].

We included only peer-reviewed studies that assessed time delays in the process of diagnosis and treatment of DS-TB and DR-TB with the index test as NAAT and a respective comparator test (e.g., smear for Xpert and culture DST for LPAs). We did not restrict our studies based on geography, settings, language, or type of study design. We excluded studies if they: (1) did not include primary data; (2) did not report all data necessary for meta-analysis; (3) were reviews or modelling studies; (4) only reported ‘run-time’ or turnaround time of the test (e.g., “2 h to run” Xpert test); and (5) focused on childhood or extra-pulmonary TB. For conference abstracts, we contacted the authors to see if there was a manuscript in preparation to obtain relevant data. Similarly, we requested original data from the authors when a study did not report time delay estimates as per our study requirements.

Study search strategy, study selection, and data extraction

The present systematic review is an update to the systematic review published in the lead author’s (HS) doctoral thesis in 2016 [23]. The original and updated search were undertaken on January 31, 2015, and October 12, 2020, respectively. We identified eligible studies from MEDLINE, EMBASE, Web of Science, and the Global Health databases that included terms associated with time, like “delay” and “time to treatment” (see Additional file 1 for the complete search strategy). We also consulted references of included articles and previous systematic reviews focusing on the diagnostic accuracy of NAATs, and experts in the fields of TB diagnostics to identify additional studies not included in the database search. After removing duplicates, two reviewers (SGC, ZZQ, or HS—original review; JSL, JHL, or TG—updated review) independently screened titles and abstracts, followed by full-text review for inclusion (HS, SGS—original review; JSL, JHL—updated review). Any discrepancies were resolved by consensus or, in case of the updated review, a third reviewer (HS, TG).

Google Forms (Google LLC, Mountain View, CA, USA) was used for the initial review, but in the updated review, this data was incorporated into Covidence (Veritas Health Innovation, Melbourne, Australia) to manage the review and extract data [24]. The data extraction tools were pilot tested, using five studies in the full text review pool, prior to conducting full data extraction. A set of reviewers (HS—original review; JL, JHL—updated review) extracted the data before it was examined by separate reviewers (SGS—original review, TG—updated review) to resolve any discrepancies in the extracted data. We extracted data on study design, geographic setting, operational context, time delays for both the index and comparator tests, and delay definitions. Units of time were converted into the number of days. An example data extraction tool is available in Additional file 3.

Quality assessment of time delay estimates

Unlike quality assessment tools for diagnostic accuracy studies, there is currently no established method or checklist that can be used to assess the quality of studies investigating time delays or time to event study outcomes [25]. Therefore, we developed a matrix of key methodologic and contextual information necessary to determine the usefulness and comparability of the time delay reported. These included (1) provision of a clear definition of measuring time delay and reporting the time delay estimates (“delay definition”); (2) use of appropriate statistical methods to report and assess changes in time delays (“statistical methods”); (3) evaluating time estimates alongside patient-important outcomes (“patient important outcomes”), which included culture conversion, TB treatment outcomes, infection control and/or contact tracing.

The provision of a clear delay definition was a binary variable with “Yes” and “No” options, where “Yes” indicated that the time delay term was clearly defined, with its start and end time points stated alongside the delay estimate. The other two quality indicators were ranked on a high–medium–low scale. For the statistical methods assessment, high-quality studies evaluated the distribution of time delay and used proper statistical methods [randomized controlled trial (RCT) or propensity score method for observational studies] that adjust estimates for proper comparison, with a measure of variance, to assess time delays between the index and the comparator test. Medium-quality studies evaluated the distribution of time delay with uncertainty estimates but did not use appropriate statistical methods for comparative assessment of time delays. Low-quality studies neither evaluated the distribution nor compared the time delay. For patient-important outcomes, high-quality studies analysed the relative risk or odds of improvement in culture conversion with the amount of time saved in TB treatment initiation. Medium-quality studies reported time estimates alongside patient-important outcomes but without direct analysis, and low-quality studies did not consider patient-important outcomes at all.

Data synthesis and meta-analysis

We calculated overall medians and IQRs of diagnostic and treatment initiation delay for each diagnostic test (Xpert vs. smear, LPA vs. any culture DST methods) from the medians and means reported by the individual studies. Additionally, using the extracted raw data, we applied the Mann–Whitney U test on overall medians to determine the statistical significance of the median time estimates between the index and comparator tests. We assumed no confounding in the primary studies.

We then conducted a meta-analysis using the quantile estimation (QE) method developed by McGrath et al. to assess the absolute reduction in diagnostic and treatment initiation delay using NAATs [26]. The method involves estimating the variance of the difference of medians of each study and pooling them using the standard inverse variance method. Time to event data are non-normally distributed variables that are primarily reported in medians and IQRs. As units of delay measurements (days) were uniform across all studies, the effect size was chosen to be the raw difference of medians in time delay for both diagnostic and treatment initiation delays. We used a random effects model because the studies differed importantly in characteristics that may lead to variations in the effect size [27, 28]. Between-study heterogeneity was estimated by the method of restricted maximum likelihood. Since this method requires complete data from median (or mean), IQR (or SD), and sample size, studies that did not report all the data points were excluded for the analysis.
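The inverse-variance pooling step described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the analysis code used in the review: it takes each study's difference of medians and its variance as given (the QE variance estimation itself is not reproduced), and it uses the DerSimonian-Laird estimator of between-study variance as a simpler stand-in for restricted maximum likelihood. The function name and input values are hypothetical.

```python
import math


def pool_random_effects(effects, variances):
    """Pool per-study differences of medians under a random effects model.

    effects: per-study difference of medians, in days (assumed given)
    variances: per-study variance of that difference (assumed given)
    Between-study variance (tau^2) uses the DerSimonian-Laird estimator,
    swapped in here for the REML estimator named in the text.
    """
    k = len(effects)
    w = [1.0 / v for v in variances]                      # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]        # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return {
        "pooled": pooled,
        "se": se,
        "ci95": (pooled - 1.96 * se, pooled + 1.96 * se),
        "tau2": tau2,
    }


# Hypothetical inputs, not data from the review: two studies reporting
# reductions of 2 and 4 days, each with variance 1.
result = pool_random_effects([2.0, 4.0], [1.0, 1.0])
```

Adding tau^2 to each study's variance flattens the weights, so that large, precise studies dominate the pooled estimate less than they would under a fixed-effect model.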

Given the multifactorial nature of the studies, we also evaluated heterogeneity using the I-squared statistic, where a value greater than 75% is taken to indicate considerable heterogeneity [28, 29]. We conducted subgroup analyses to identify possible sources of heterogeneity and to assess key factors (pre-2015 vs. post-2015, RCT vs. observational, etc.) that could influence the magnitude of our effect size estimate. We chose 2015 as our cut-off time point not only because this was the cut-off for the original systematic review but also because enough time had passed since the recommendation to see the effects of the implementation of NAATs in research studies. Further, we assessed “small study effects” and publication bias with funnel plots, followed by Egger’s test to assess their symmetry. We managed and analysed the data using Microsoft Excel 16 (Microsoft Corporation, USA) and R version 4.1.1 (R Foundation for Statistical Computing, Austria).
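As a rough illustration of the I-squared statistic and the 75% threshold mentioned above, the sketch below computes it from Cochran's Q using the standard formula I² = max(0, (Q − df) / Q). The function names and input values are hypothetical and are not drawn from the included studies.

```python
def cochran_q(effects, variances):
    """Cochran's Q: weighted squared deviations from the fixed-effect mean."""
    weights = [1.0 / v for v in variances]
    fixed = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))


def i_squared(effects, variances):
    """I-squared as a percentage of total variation not explained by chance."""
    q = cochran_q(effects, variances)
    df = len(effects) - 1
    if df <= 0 or q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# Hypothetical inputs, not data from the review.
moderate = i_squared([2.0, 4.0], [1.0, 1.0])      # Q = 2 with df = 1
extreme = i_squared([1.0, 10.0], [0.01, 0.01])    # precise but discordant studies
considerable = extreme > 75.0                     # threshold used in the text
```

The second example shows why very precise studies with discordant estimates can push I-squared close to 100%: Q grows with the weights while the degrees of freedom stay fixed.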

Results

Search results

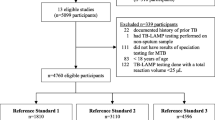

After removing duplicates, we identified 14,776 (original review—7995; updated review—6781) titles and abstracts eligible for title and abstract screening. Of these, 323 were selected for full text review during screening. A total of 45 studies (26 DS-TB and 20 DR-TB) with relevant time delay estimates were ultimately included in this review (Fig. 2).

Fig. 2 PRISMA diagram. DS-TB drug-sensitive tuberculosis, DR-TB drug-resistant tuberculosis. *One study [30] reported data for both DS-TB and DR-TB

Description of included studies

Of the 45 studies included in this review, 21 (81%) DS-TB and 15 (75%) DR-TB studies were conducted in low- and middle-income countries (LMICs) (Tables 1 and 2). One study had estimates for both DS-TB and DR-TB [30]. Overall, half of the studies (17 DS-TB, 7 DR-TB) were conducted in the African region, with over two thirds of those in South Africa (n = 15). HIV prevalence was reported by 31 (19 DS-TB, 12 DR-TB) studies, of which about half (16 DS-TB, 4 DR-TB) reported an HIV prevalence of over 50%. Amongst the DS-TB studies, 7 studies (27%) implemented Xpert as a point-of-care testing (POCT) program, and 15 studies (58%) implemented Xpert on-site, within walking distance of a primary care program or a laboratory.

Quality assessment of time delay estimates

The studies had considerable methodological heterogeneity in the definitions of time delays. When classifying reported time delays according to our operational definitions and by study design, no study reported all sub-components of time delay. All studies evaluating treatment delay used TB treatment initiation time as the end point, but the start and end points for diagnostic delay varied across studies (Tables 1 and 2). Overall, 13 of the 45 studies did not provide a clear definition of the time delay estimates reported (Table 3). Amongst studies included in the DS-TB analysis, 6 (23%) studies employed a randomized controlled trial (RCT) design, and 2 studies (8%) were quasi-experimental, using pre- and post-implementation study designs. One study (4%) was a single-arm interventional pilot study, and the remaining 15 studies (58%) were observational. All the studies in the DR-TB analysis were observational. In the use of proper statistical methods for measurement and reporting of delay estimates, 18 studies ranked high, 23 ranked medium, and 2 ranked low. In the evaluation of time estimates alongside patient-important outcomes, 7 ranked high, 18 ranked medium, and 18 ranked low.

In all funnel plots (Additional file 2), several studies fell outside the 95% CI, contributing to the visible asymmetry. This may be due to the considerable heterogeneity (I² > 99%) of the studies. However, Egger’s tests, used to assess whether there are systematic differences between high- and low-precision studies, demonstrated no clear evidence of “small study effects” (p = 0.085–0.462).

Impact of NAATs on delay

For the DS-TB analysis, 12 studies were included in the primary analysis for diagnostic delay, and 18 studies were included for treatment initiation delay. The overall median diagnostic delays for smear and Xpert were 3 days and 1.04 days, respectively. The overall median treatment initiation delays for smear and Xpert were 6 days and 4.5 days, respectively. A random effects meta-analysis of the difference of medians showed that, compared to smear, the use of Xpert did not produce a statistically significant reduction in diagnostic delay [1.79 days (95% CI −0.27 to 3.85)] but did produce a statistically significant reduction in treatment initiation delay of 2.55 days (95% CI 0.54–4.56) (Figs. 3 and 4).

For the DR-TB analysis, 13 studies were included for diagnostic delay and 12 studies were included for treatment initiation delay. The overall median diagnostic delays for culture DST and LPA were 54 days and 11 days, respectively. The overall median treatment initiation delays for culture DST and LPA were 78 days and 28 days, respectively. A random effects meta-analysis of the difference of medians showed that, in comparison with culture DST, the use of LPA significantly reduced diagnostic delay by 40.09 days (95% CI 26.82–53.37) and treatment initiation delay by 45.32 days (95% CI 30.27–60.37) (Figs. 5 and 6). I² values of 99.79% and 97.22% for diagnostic and treatment initiation delay, respectively, indicated considerable heterogeneity.

Comparing the studies from the two different phases of the review (pre-/post-2015), we found no statistically significant reduction in diagnostic delay but did find a statistically significant reduction in treatment initiation delay, with a median difference of 2.54 days (95% CI 0.45–4.62) for post-2015 studies and 5.04 days (95% CI 0.09–9.99) for pre-2015 studies. Similarly, subgroup analysis based on study design showed a statistically significant reduction in treatment initiation delay in the RCT group [2.85 days (95% CI 1.16–4.55)] but not in the observational group [1.67 days (95% CI −1.70 to 5.05)]. When classifying studies by healthcare system level, Xpert did not provide a meaningful reduction in treatment initiation delay regardless of where it was placed: 1.27 days (95% CI −1.45 to 4.00) for primary health care centres and 5.27 days (95% CI −1.06 to 11.60) for tertiary hospitals. When grouped by POCT status, Xpert implemented as a POCT service showed statistically significant reductions in treatment initiation delay compared to non-POCT programs. All sub-group analyses with more than two studies showed I² values greater than 89%, suggesting considerable heterogeneity (Tables 4 and 5).

Discussion

Principal findings

While there are several patient-important impact measures for new diagnostic tests [31], time delay estimates provide a direct measure of the timeliness of TB care. To our knowledge, our systematic review of 45 studies is the first to comparatively synthesize and quantify reductions in delays in the diagnosis and treatment of DS-TB and DR-TB when the WHO-recommended NAATs are used instead of smear (DS-TB) or culture DST (DR-TB). Our random effects meta-analysis of the differences of median times showed that the use of NAATs improved treatment initiation delay for patients investigated for both DS-TB and DR-TB; however, this benefit was not seen for diagnostic delay for DS-TB (Xpert vs. smear). We also found that the degree of benefit in reducing delays when using NAATs for TB care was highly variable and dependent on how the tests were implemented (e.g., laboratory-based vs. POCT), on differences in the study designs used to evaluate the impact of NAATs on TB care delays, and on large variations in how delays were defined and quantified.

In principle, Xpert and smear are both “same-day” tests; therefore, the expected reduction in diagnostic delay with Xpert may be limited. Accordingly, our meta-analysis did not find a significant reduction in diagnostic delay when using Xpert compared to smear [1.79 days (95% CI −0.27 to 3.85)]. For treatment initiation delay, our analysis of 18 studies showed that Xpert reduced delays for DS-TB by 2.55 days (95% CI 0.54–4.56) compared to smear, but the size of this effect was highly variable depending on how and where Xpert was deployed within the health care system. In particular, our sub-group analysis found that the use of Xpert as a non-POCT (at any level of the health system) did not show meaningful improvement in DS-TB treatment initiation delay. Moreover, the ‘hub-and-spokes’ model for Xpert testing evaluated in earlier studies, in which patient samples from several community health centres (spokes) are referred to a centralized laboratory (hub), has shown limited impact on improving and optimizing the timeliness of TB care due to operational barriers causing further delays [32,33,34], de-prioritization of Xpert use as an initial test in the national algorithms [35, 36], and continued high empiric treatment rates in certain settings [37, 38].

In contrast to DS-TB, the use of LPA resulted in large reductions in delays for DR-TB care. Our meta-analysis found that the use of LPA reduced treatment initiation delay for DR-TB by 45.32 days (95% CI 30.27–60.37). This was mainly due to the prolonged delays associated with conventional DR-TB diagnostics (culture DST), which take weeks to diagnose DR-TB and start patients on treatment. However, the reduction of these delays was not solely due to the implementation of the technology. In the earlier phases of LPA implementation in South Africa, the use of LPA for DR-TB care was restricted and centralized at higher levels of the health and laboratory system, which caused treatment initiation delays of more than 50 days [39, 40]. DR-TB care delays gradually improved to 28 days (IQR: 16–40) through the 3-year DR-TB care decentralization program (2009–2011), which included streamlining LPA testing in clinical practice. Moreover, studies from settings with more established healthcare infrastructure (e.g., China and South Korea) also found that operational challenges diminished the potential benefit of rapid molecular testing in improving DR-TB care delays [41,42,43].

Strengths and limitations

For the meta-analysis, we used the Quantile Estimation (QE) method because it had excellent performance in simulation studies that were motivated by our systematic review [26]. One advantage compared to more traditional approaches based on meta-analysing the difference of means is that the QE method uses an effect size that is typically reported by the primary studies (i.e., the difference of medians) rather than one that must be estimated from the summary data of the primary studies (i.e., the difference of means). However, our meta-analysis results should be interpreted with caution because considerable statistical power was lost when restricting to studies that presented all the necessary data for estimating the variance of the difference of medians. Also, the high level of clinical (e.g., participants, outcomes) and methodological (e.g., study design, defining and reporting of time delays) heterogeneity in the studies included in our review translated into high I² values in all of our meta-analysis results, making generalized interpretation of our summary estimates difficult. We also advise caution in the interpretation of our subgroup analyses because confounders often complicate their interpretation and can lead to wrong conclusions [44].

Delays in TB care occur due to a wide range of patient and health systems risk factors [46, 48]. Studies included in our review did not comparatively assess and adjust for risk factors associated with time delays for both the index (Xpert or LPA) and the comparator (smear or culture DST) tests. This may be because time delay estimates were not the primary outcomes in most of the studies, which therefore lacked proper analytical assessment of these outcome measures. Therefore, we were limited to sub-group analyses on key study-level attributes (e.g., HIV prevalence, empiric treatment rate, Xpert placement strategy, and study design), which were highly heterogeneous and, in many cases, inconclusive in showing that Xpert improved delays in TB care. Moreover, our findings are subject to potential confounding at both the health systems level (e.g., differences in healthcare system infrastructure, TB care practices, implementation strategies of the index tests) and the patient level (e.g., symptom levels, age, care-seeking behaviours), which may bias our effect estimates (number of days reduced in diagnostic and treatment initiation delays) towards or away from the null. For these reasons, the generalizability of our findings may be limited. Likewise, our review underscores a need for more research investigating health systems and patient factors that can impact delays in TB care during and after the implementation of diagnostic tests and strategies that aim to improve the timeliness and quality of TB care. Lastly, despite carrying out comprehensive searches and considering non-English studies, we may have missed some studies in our review. Therefore, we cannot rule out potential publication bias.

In our study, we also investigated consistency in the defining and reporting of time delays across studies, using a framework developed as part of our study (Fig. 1). In our quality assessment of the studies reporting time delay estimates (Table 3), we found considerable heterogeneity in how time delays were defined, and close to 30% of studies (13) reported delay estimates without providing clear definitions. Many of the studies included in our review used the same terms to define different components of the delay. For instance, some studies used “turnaround time”, “time to detection”, and “laboratory processing time” to describe the time from specimen receipt by the lab to the test result at the lab, while others employed these same terms to define diagnostic delay, that is, the time from specimen collection to notifying the clinic of the test result. In addition, several studies included in our review did not report uncertainty ranges or reported them inappropriately (e.g., no IQRs, or means reported with IQRs). As time data may be highly skewed, standardizing the practice of reporting delay estimates as medians with their variances or other measures of spread (e.g., IQR or range) can help facilitate the synthesis of these studies. Many of these issues have been previously reported by other systematic reviews on TB care delays, and our findings re-emphasize the importance of standardizing how TB care delays are defined, measured, and reported [20, 45,46,47,48].

Conclusions

The global rollout of NAATs has dramatically changed the landscape of TB diagnosis in high TB burden settings, with improvements in the TB diagnostic infrastructure and the quality of TB prevention and care programs. Our systematic review findings suggest that the implementation of NAATs has resulted in a noticeable reduction in delays for TB treatment compared to conventional methods. However, these improvements did not fully realize the potential benefits of NAATs because of health system limitations [49]. Additionally, we identified methodological concerns in the reporting of time delay estimates and emphasize the need to standardize and promote their consistent reporting.

Availability of data and materials

All data generated or analysed during this systematic review are included in this published article and the additional files.

Abbreviations

- DS-TB: Drug-sensitive tuberculosis
- DR-TB: Drug-resistant tuberculosis
- LPA: Line probe assay
- NAAT: Nucleic acid amplification test
- POCT: Point-of-care test
- QE: Quantile estimation
- TB: Tuberculosis
- WHO: World Health Organization

References

World Health Organization. The end TB strategy. 2015. https://www.who.int/teams/global-tuberculosis-programme/the-end-tb-strategy.

World Health Organization. Global tuberculosis report 2021. Geneva: World Health Organization; 2021. https://apps.who.int/iris/handle/10665/346387.

Uys PW, Warren RM, van Helden PD. A threshold value for the time delay to TB diagnosis. PLoS ONE. 2007;2: e757. https://doi.org/10.1371/journal.pone.0000757.

Dye C, Scheele S, Dolin P, Pathania V, Raviglione MC, for the WHO Global Surveillance and Monitoring Project. Global burden of tuberculosis: estimated incidence, prevalence, and mortality by country. JAMA. 1999;282:677–86. https://doi.org/10.1001/jama.282.7.677.

Ling DI, Zwerling AA, Pai M. GenoType MTBDR assays for the diagnosis of multidrug-resistant tuberculosis: a meta-analysis. Eur Respir J. 2008;32:1165–74. https://doi.org/10.1183/09031936.00061808.

Steingart KR, Schiller I, Horne DJ, Pai M, Boehme CC, Dendukuri N. Xpert® MTB/RIF assay for pulmonary tuberculosis and rifampicin resistance in adults. Cochrane Database Syst Rev. 2014. https://doi.org/10.1002/14651858.CD009593.pub3.

Policy statement: automated real-time nucleic acid amplification technology for rapid and simultaneous detection of tuberculosis and rifampicin resistance: Xpert MTB/RIF system. Geneva: World Health Organization; 2011. http://www.ncbi.nlm.nih.gov/books/NBK304235/.

World Health Organization. Automated real-time nucleic acid amplification technology for rapid and simultaneous detection of tuberculosis and rifampicin resistance: Xpert MTB/RIF assay for the diagnosis of pulmonary and extrapulmonary TB in adults and children: policy update. Geneva: World Health Organization; 2013. https://apps.who.int/iris/handle/10665/112472.

Acknowledgements

We acknowledge the Welch Medical Library at the Johns Hopkins University School of Medicine and the Schulich Library of Physical Sciences, Life Sciences, and Engineering at McGill University for their help with the database search and in locating the full texts of the articles.

Funding

HS was supported by the New Faculty Start-up Fund from Seoul National University. TG was supported by the Fulbright-Nehru Master’s Fellowship. SM acknowledges support from the National Science Foundation Graduate Research Fellowship Program under Grant No. DGE1745303. The views and information presented are our own. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

Conception and design: HS, MP, SGS. Data acquisition and analysis: JHL, TG, JL, SM, LR, SGS, AB, ZZQ, GG, HS. Interpretation of data: JHL, TG, SM, AB, HS. Writing (original draft): JHL, TG, HS. Writing (review and editing): JHL, TG, JL, SM, LR, SGS, AB, ZZQ, GG, MP, HS. HS supervised the systematic review and, as guarantor, takes responsibility for the overall content. JHL and TG contributed equally to this work. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

SS reports working for the Foundation for Innovative New Diagnostics (FIND).

Supplementary Information

Additional file 1. Systematic review search strategy. The detailed search strategy for each database searched for this review.

Additional file 2. Funnel plots. Outputs from the analysis of risk of bias.

Additional file 3. Data extraction tool. The template for the data extraction tool.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lee, J.H., Garg, T., Lee, J. et al. Impact of molecular diagnostic tests on diagnostic and treatment delays in tuberculosis: a systematic review and meta-analysis. BMC Infect Dis 22, 940 (2022). https://doi.org/10.1186/s12879-022-07855-9
