Abstract

Objectives

The main objective of this study is to evaluate the methodological quality and reporting quality of living systematic reviews (LSRs) on Coronavirus disease 2019 (COVID-19), while the secondary objective is to investigate potential factors that may influence the overall quality of COVID-19 LSRs.

Methods

Six representative databases, namely Medline, Excerpta Medica Database (Embase), Cochrane Library, China National Knowledge Infrastructure (CNKI), Wanfang Database, and China Science and Technology Journal Database (VIP), were systematically searched for COVID-19 LSRs. Two authors independently screened articles, extracted data, and then assessed the methodological and reporting quality of COVID-19 LSRs using the "A Measurement Tool to Assess systematic Reviews-2" (AMSTAR-2) tool and the "Preferred Reporting Items for Systematic reviews and Meta-Analyses" (PRISMA) 2020 statement, respectively. Univariate and multivariate linear regression were used to explore eight potential factors that might affect the methodological quality and reporting quality of COVID-19 LSRs.

Results

A total of 64 COVID-19 LSRs were included. The AMSTAR-2 evaluation showed that the number of "yes" responses per COVID-19 LSR was 13 ± 2.68 (mean ± standard deviation). Of these LSRs, 21.9% were rated "high", 4.7% "moderate", 23.4% "low", and 50% "critically low". The PRISMA 2020 evaluation showed that the sections with poor adherence were methods, results and other information. The number of "yes" responses per COVID-19 LSR was 21 ± 4.18 (mean ± standard deviation). The number of included studies and registration were associated with better methodological quality; the number of included studies and funding were associated with better reporting quality.

Conclusions

Improvement is needed in the methodological and reporting quality of COVID-19 LSRs. Researchers conducting COVID-19 LSRs should take note of the quality-related factors identified in this study to generate higher-quality evidence.

What is already known on this topic?

Despite the increasing number of published COVID-19 LSRs, no studies have assessed their methodological and reporting quality, both of which are crucial for informing clinical practice and policy-making.

What this study adds?

Our study aimed to evaluate the methodological and reporting quality of published COVID-19 LSRs and to identify factors that could affect their quality.

What is the implication?

Low-quality COVID-19 LSRs may undermine the confidence of clinicians and policymakers in the evidence, thereby hindering its translation into practice. This study serves as a reference for future research on COVID-19 LSRs.

Introduction

The 2019 Coronavirus Disease (COVID-19) pandemic is still a major public health problem on a global scale. Evidence has confirmed that the disease is caused by a novel coronavirus, initially referred to as 2019-novel coronavirus (2019-nCoV) by the World Health Organization (WHO) [1]. The WHO officially named the disease COVID-19 on February 11, 2020 [2]. As of September 19, 2022, there have been 610,393,563 confirmed cases of COVID-19 and 6,508,521 deaths reported to WHO [3]. Researchers worldwide are working diligently to understand COVID-19 as soon as possible. However, amidst the massive amount of published evidence, "false information" and "false conclusions" have emerged, forming an "infodemic" that can increase clinicians' workload and hinder problem-solving efforts [4,5,6].

Systematic reviews (SRs) and meta-analyses (MAs) are the results of a rigorous scientific process consisting of several well-defined steps, including a systematic literature search, an evaluation of the quality of each included study and a quantitative or narrative synthesis of the results obtained [7]. SRs and MAs are often considered the highest level of evidence in evidence-based medicine, as they can bridge the gap between clinical research and clinical practice [8, 9]. Healthcare decision-makers in search of reliable information increasingly turn to SRs for the best summary of the evidence [10, 11]. However, traditional SRs are either not updated or updated only at long intervals (Cochrane SRs require updates every two years), which is inadequate for the rapidly evolving COVID-19 pandemic [12, 13]. Failure to keep reviews current during the COVID-19 pandemic may lead to significant inaccuracy [14].

Living systematic reviews (LSRs), proposed by Elliott and colleagues in 2014, are a unique type of SR that is continually updated as new evidence becomes available [14, 15]. Research questions arising during the COVID-19 pandemic meet all three conditions for conducting an LSR: (1) The review question is a particular priority for decision-making; (2) There is an important level of uncertainty in the existing evidence; (3) There is likely to be emerging evidence that will impact on the conclusions of the LSR [14, 16]. Therefore, LSRs have become increasingly important during the COVID-19 pandemic.

Well-conducted SRs provide an excellent snapshot of evidence [17]; conversely, poor methodological and reporting quality may reduce the confidence of clinicians and policymakers in the conclusions of SRs [18, 19]. Therefore, it is necessary to assess the quality of SRs before applying their conclusions to clinical or public health practice [20]. The same holds true for LSRs, a unique kind of SR that is even more important during the COVID-19 pandemic. Although there have been studies assessing the methodological and reporting quality of COVID-19 SRs [21,22,23], to our knowledge, none have yet evaluated the quality of COVID-19 LSRs.

Therefore, the main objective of this study is to evaluate the methodological quality and reporting quality of LSRs on COVID-19, while the secondary objective is to investigate potential factors that may influence the overall quality of COVID-19 LSRs. The findings of this study will provide useful insights for the development of future COVID-19 LSRs.

Methods

A cross-sectional study was conducted. Given the continued spread of COVID-19 and the rapid development of LSRs, we did not publish a protocol before conducting this study. This study was reported according to the Strengthening the Reporting of Observational studies in Epidemiology (STROBE) guidelines [24] (Supplementary material Appendix I).

Search strategy

Six databases, namely Medline, Excerpta Medica Database (Embase), Cochrane Library, China National Knowledge Infrastructure (CNKI), Wanfang Database and China Science and Technology Journal Database (VIP), were systematically searched. We searched the databases from their inception until December 9, 2021, and conducted an updated search on May 13, 2022. The primary search terms included living systematic review, living meta-analysis, etc. (Supplementary material Appendix II). The sample comprised all eligible studies.

Given that preprints are not peer-reviewed and their results may still change, we did not search preprint databases [25]. Because COVID-19 LSRs may have multiple versions due to regular updates, we gave priority to the version that provided more information.

Inclusion and exclusion criteria

Inclusion criteria: (1) The study type is an SR; (2) The title or abstract clearly identifies it as a “living systematic review” (using this or similar terminology); (3) The clinical topic of the systematic review is related to COVID-19.

Exclusion criteria: (1) Unavailable articles; (2) Withdrawn COVID-19 LSRs; (3) Living evidence maps.

Selection and information extraction

The retrieved articles were imported into EndNote X8 (Thomson Corporation, Thomson ResearchSoft, USA) for duplicate removal and selection. Two review authors (Jiefeng Luo and Zheng Liu) independently screened articles in duplicate, with any disagreements resolved by a third author (Zhe Chen). The article selection process involved two steps: first, we screened out obviously irrelevant articles based on their titles and abstracts; then, we assessed the remaining articles by reading their full texts.

The data extraction was conducted independently and in duplicate by the review authors (Jiefeng Luo and Zheng Liu), with any disagreements being resolved by a third author (Zhe Chen). The data extraction form was designed in advance based on the pre-extracted data from ten COVID-19 LSRs. The data extraction form included title, first author, year of publication, country and region of publication, journal of publication and eight factors that might affect overall quality of COVID-19 LSRs. These factors include impact factor (IF), number of authors, number of institutions, number of included studies, whether there were international collaborations (yes or no), whether authors stated their funding sources (yes or no), whether the study was pre-registered in any registration platform (yes or no), and whether authors reported compliance with the PRISMA statement (yes or no).

Methodological quality assessment

The methodological quality of COVID-19 LSRs was assessed independently and in duplicate by the review authors (Jiefeng Luo and Zheng Liu), with any disagreements resolved by a third author (Zhe Chen). Although there are numerous tools available for assessing the methodological quality of SRs, we opted for AMSTAR-2 due to its widespread usage and established validity and reliability [19, 26, 27]. AMSTAR-2 consists of 16 domains, of which Domain 2, Domain 4, Domain 7, Domain 9, Domain 11, Domain 13, and Domain 15 are critical domains. Answers for each domain include three options: "yes", "partial yes", and "no". The methodological quality of SRs was divided into four levels according to the following criteria: high (no or one non-critical weakness), moderate (more than one non-critical weakness), low (one critical flaw with or without non-critical weaknesses) and critically low (more than one critical flaw with or without non-critical weaknesses). It is worth noting that Domains 11, 12 and 15 do not apply if no meta-analysis has been performed. Considering that multiple non-critical weaknesses may reduce confidence in the review, we rated any LSR with more than four non-critical weaknesses as "low".
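The rating rules above can be sketched as a small function. This is an illustrative sketch, not the authors' scoring procedure: the function name `rate_amstar2`, the dictionary input format, and the choice to count only a "no" answer (not "partial yes") as a weakness or flaw are assumptions made for the example.

```python
# Critical domains and meta-analysis-only domains as listed in the text.
CRITICAL_DOMAINS = {2, 4, 7, 9, 11, 13, 15}
META_ANALYSIS_ONLY = {11, 12, 15}


def rate_amstar2(answers, has_meta_analysis=True):
    """Return the overall AMSTAR-2 level for one review.

    answers maps each domain number (1..16) to "yes", "partial yes" or "no".
    Assumption: only "no" counts as a weakness (non-critical) or flaw (critical).
    """
    critical_flaws = 0
    noncritical_weaknesses = 0
    for domain in range(1, 17):
        # Domains 11, 12 and 15 do not apply without a meta-analysis.
        if not has_meta_analysis and domain in META_ANALYSIS_ONLY:
            continue
        if answers[domain] == "no":
            if domain in CRITICAL_DOMAINS:
                critical_flaws += 1
            else:
                noncritical_weaknesses += 1

    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"
    # Study-specific rule: more than four non-critical weaknesses is "low".
    if noncritical_weaknesses > 4:
        return "low"
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"


def all_yes():
    """Helper for the examples: a review answering "yes" in every domain."""
    return {domain: "yes" for domain in range(1, 17)}


example = all_yes()
example[3] = "no"
example[10] = "no"
print(rate_amstar2(example))  # two non-critical weaknesses, no critical flaw
```

Checking critical flaws first means a single critical flaw caps the rating at "low" regardless of how few non-critical weaknesses a review has.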

Reporting quality assessment

The reporting quality of COVID-19 LSRs was assessed independently and in duplicate by the review authors (Jiefeng Luo and Zheng Liu), with any disagreements resolved by a third author (Zhe Chen). The PRISMA statement was used to assess the reporting quality of included COVID-19 LSRs. PRISMA aims to guide complete reporting of SRs and to improve their transparency and reporting quality [18]. While there are various PRISMA statement extensions available to facilitate reporting on different types or aspects of SRs, we chose the PRISMA 2020 statement as the assessment tool for the reporting quality of COVID-19 LSRs [28]. This decision was made because different versions of the PRISMA statement might yield items that are not comparable.

The PRISMA 2020 statement includes seven sections (title, abstract, introduction, methods, results, discussion and other information) with 27 items, and each item was assessed as "yes", "partial yes", or "no" based on the degree of compliance with the reporting criteria. We calculated the number of "yes" responses for each COVID-19 LSR and treated a larger number of "yes" responses as indicating better reporting quality.
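As a minimal sketch of this scoring rule, the snippet below counts "yes" responses across the 27 items and expresses them as a share of all items. The function names and the example ratings are hypothetical and are not taken from any included LSR.

```python
PRISMA_2020_ITEMS = 27  # number of items stated in the text


def count_yes(item_ratings):
    """Count items rated "yes"; "partial yes" and "no" do not count."""
    if len(item_ratings) != PRISMA_2020_ITEMS:
        raise ValueError("expected one rating per PRISMA 2020 item")
    return sum(1 for rating in item_ratings if rating == "yes")


def yes_share(item_ratings):
    """Percentage of the 27 items rated "yes", rounded to one decimal."""
    return round(100 * count_yes(item_ratings) / PRISMA_2020_ITEMS, 1)


# Hypothetical ratings for a single LSR (illustration only).
example_ratings = ["yes"] * 21 + ["partial yes"] * 4 + ["no"] * 2
print(count_yes(example_ratings), yes_share(example_ratings))
```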

Statistical analysis

Excel 2019 (Microsoft Corporation, WA, USA) was used to quantitatively analyze and qualitatively describe the included COVID-19 LSRs. For all categorical variables such as AMSTAR-2 levels, international collaborations (yes or no), funding (yes or no), pre-registration (yes or no), and PRISMA statement (yes or no), we used frequencies and percentages. For all continuous variables, including the number of "yes" responses of PRISMA 2020 statement, the number of "yes" responses of AMSTAR-2, IF, number of institutions, number of authors, and number of included studies, we used mean, median, standard deviation (SD), and range.
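The same summaries can be reproduced outside a spreadsheet. The sketch below uses Python's standard library as an equivalent illustration, not the authors' workflow; the function names and the sample values are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not say which variant was used.

```python
from collections import Counter
from statistics import mean, median, stdev


def describe_continuous(values):
    """Mean, median, sample SD and range for a continuous variable."""
    return {
        "mean": mean(values),
        "median": median(values),
        "sd": stdev(values),  # assumption: sample SD (n - 1 denominator)
        "range": (min(values), max(values)),
    }


def describe_categorical(labels):
    """Frequency and percentage (one decimal) for each category."""
    counts = Counter(labels)
    total = len(labels)
    return {label: (n, round(100 * n / total, 1)) for label, n in counts.items()}


# Hypothetical values for four LSRs (illustration only).
yes_counts = [13, 21, 27, 19]
funding = ["yes", "yes", "no", "yes"]

print(describe_continuous(yes_counts))
print(describe_categorical(funding))
```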

To investigate factors that could potentially affect the methodological quality and reporting quality of COVID-19 LSRs, we conducted linear regression analysis on the eight factors described above. We conducted the analysis in two steps: first, we performed univariate linear regression on the eight factors; subsequently, we performed multiple linear regression on those factors that were statistically significant in the univariate analysis. We defined factors that remained statistically significant in multiple linear regression as determinants of quality [29]. We used the variance inflation factor (VIF) to assess multicollinearity among study features, and a VIF ≥ 5 was considered to indicate high correlation [30].
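To make the multicollinearity check concrete: the VIF of predictor j is 1 / (1 − R_j²), where R_j² comes from regressing predictor j on the remaining predictors. The following is a minimal standard-library sketch under that definition. The helper names and the sample data are hypothetical, and the code covers only the VIF step, not the significance tests of the two-step regression.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ols_r_squared(columns, y):
    """Fit y on the predictor columns plus an intercept; return R squared."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(design[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)]
           for i in range(k)]
    xty = [sum(design[r][i] * y[r] for r in range(n)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in design]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def vif(columns, index):
    """Variance inflation factor of columns[index] given the other columns."""
    others = [col for i, col in enumerate(columns) if i != index]
    return 1.0 / (1.0 - ols_r_squared(others, columns[index]))


# Hypothetical predictors for six LSRs (illustration only).
authors = [3, 5, 8, 10, 12, 20]
institutions = [1, 2, 4, 5, 6, 11]
included_studies = [10, 40, 5, 60, 25, 30]
predictors = [authors, institutions, included_studies]

for name, j in (("authors", 0), ("institutions", 1), ("included studies", 2)):
    flag = "high" if vif(predictors, j) >= 5 else "acceptable"
    print(name, flag)
```

Solving the normal equations directly is adequate for a handful of predictors; a statistics package would normally be used for the full regression, including p-values.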

Results

Study selection

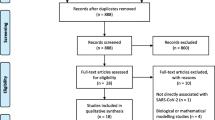

A total of 1,132 articles were initially identified, and 1,043 remained after duplicates were removed with EndNote X8. After obviously irrelevant articles were excluded on the basis of title and abstract, 156 articles remained. Finally, 64 COVID-19 LSRs were included after full-text review [31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94]. The flow diagram of the screening process is presented in Fig. 1. The titles of excluded studies and the reasons for their exclusion are presented in Appendix VI of the Supplementary Material.

Study characteristics

Table 1 summarizes the characteristics of the 64 included COVID-19 LSRs, with additional details available in Appendix III-V. Most COVID-19 LSRs were published in high-impact Science Citation Index (SCI) journals, with 23% having an IF greater than 10. LSRs involved multiple institutions and authors, with an average of 9.27 institutions and 14.53 authors per LSR. The number of included studies in LSRs ranged from 0 [37, 73] to 728 [67]. Many COVID-19 LSRs followed the PRISMA statement (78.1%), involved collaboration across multiple countries (59.4%), and were funded (81.3%). Additionally, a majority of the COVID-19 LSRs were registered (81.3%).

Methodological quality

The AMSTAR-2 evaluation of the 64 COVID-19 LSRs yielded an average of 13 "yes" responses, with a median of 11, a range between 6 and 16, and a standard deviation of 2.68. Overall, 50% of COVID-19 LSRs were rated "critically low", 23.4% "low", 4.7% "moderate", and only 21.9% "high". Figure 2 displays the distribution of methodological quality levels.
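As a quick arithmetic check, the reported percentages can be converted back into whole numbers of LSRs. The counts below are back-calculated from the percentages and the total of 64; they are not stated in the text itself.

```python
TOTAL_LSRS = 64

# Percentages as reported for each AMSTAR-2 level.
reported_percent = {
    "high": 21.9,
    "moderate": 4.7,
    "low": 23.4,
    "critically low": 50.0,
}

# Back-calculated counts (an inference, not values given in the text).
counts = {level: round(p / 100 * TOTAL_LSRS)
          for level, p in reported_percent.items()}

# Share rated "low" or "critically low", rounded to one decimal.
low_or_worse = round(
    100 * (counts["low"] + counts["critically low"]) / TOTAL_LSRS, 1
)

print(counts, sum(counts.values()), low_or_worse)
```

The counts sum to 64, and the combined share of "low" and "critically low" ratings comes to 73.4%.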

Domain 1, Domain 4, Domain 5, Domain 8, Domain 9, Domain 11 and Domain 16 were met by more than 90% of COVID-19 LSRs. Domain 10 had the worst compliance, with only 31.25% of COVID-19 LSRs meeting it. Figure 3 shows a heatmap of the AMSTAR-2 assessment results for each domain in the 64 included COVID-19 LSRs. The figure shows that adherence to critical domains was better than adherence to non-critical domains.

Reporting quality

The evaluation results of the 64 COVID-19 LSRs based on the PRISMA 2020 statement had an average of 21 "yes" responses, with a median of 21, a range between 13 and 27, and a standard deviation of 4.18. Figure 4 displays the PRISMA evaluation results for each item of the COVID-19 LSRs, presented as the percentage of "yes" responses.

More than 90% of the COVID-19 LSRs fully reported 9 items (Item 1, Item 3, Item 4, Item 5, Item 6, Item 8, Item 17, Item 23, and Item 26), whereas less than 50% fully reported 5 items (Item 14, Item 15, Item 16, Item 21, and Item 22). The "Title", "Rationale", "Objectives", and "Information sources" had the best reporting quality, with 98% of COVID-19 LSRs fully reporting them. On the other hand, the "Certainty of evidence" had the worst reporting quality, with only 41% of COVID-19 LSRs fully reporting it. Figure 5 shows a heatmap of the PRISMA 2020 assessment results for each item in the 64 included COVID-19 LSRs. The figure shows that the sections with poor adherence were methods, results and other information.

Results of correlation analyses

The results of univariate and multivariate analyses of the correlation between the eight factors and the overall quality of COVID-19 LSRs are shown in Table 2.

Table 2 shows that the number of included studies and registration were associated with AMSTAR-2 levels, and these variables explained a total of 19.2% of the variation in AMSTAR-2 levels. The number of included studies and funding were associated with the number of "yes" responses to the PRISMA 2020 statement, and these variables explained a total of 14.2% of the variation in that number.

Discussion

The concept of the LSR was proposed by Elliott and colleagues more than nine years ago, but interest in LSRs remained tepid until the outbreak of COVID-19, which triggered a surge in related research [95]. At present, the research methods of LSRs are still being explored. Therefore, it is of great significance to summarize and analyze the quality of existing LSRs and to determine potential influencing factors. To our knowledge, this is the first study to assess the quality of COVID-19 LSRs and attempt to identify potential influencing factors. We believe that this is crucial in guiding the implementation of future COVID-19 LSRs.

The methodological quality of 73.4% of COVID-19 LSRs was assessed as low or critically low. In Domain 10 and Domain 12, the compliance rates were below 50%. Domain 10 asks whether SR authors report the funding information of the included studies, and its compliance rate was only 31.25%. Studies funded by corporations may be more biased towards the sponsor. Therefore, it is helpful for SR authors to extract and report the funding information of the included studies so that readers can judge its influence on the SR. We recommend that future COVID-19 LSR authors and journal editors adhere to the requirements of Domain 10. Domain 12 assesses whether SR authors used risk of bias (ROB) assessments to evaluate the potential influence of individual studies on the results. The compliance rate for this domain was 31.25%, indicating that a significant proportion of the included COVID-19 LSRs did not meet this criterion. When authors include RCTs of varying quality, Domain 12 becomes particularly crucial, as RCTs with a high risk of bias can distort the findings and reduce the credibility of the evidence [96]. Therefore, we recommend that authors of COVID-19 LSRs employ regression analysis to assess the impact of bias on the results when including RCTs of different quality, or restrict the analysis to studies with a low risk of bias to test the stability of the results.

On the other hand, the average number of "yes" responses to the PRISMA 2020 statement per COVID-19 LSR was 21, which accounted for only 77.8% of all items, with Items 14, 21, and 22 having compliance rates below 50%. Item 14 and Item 21 concern whether the authors report "reporting biases" in the methods and results, respectively. We speculate that the compliance rate for these two items was low because, in the early stages of conducting a COVID-19 LSR, the number of included studies was small, so the authors did not consider reporting biases (the Cochrane Handbook recommends using a funnel plot to test for reporting biases when including more than 10 studies). We recommend that authors specify the method for testing "reporting biases" in the protocol before conducting a COVID-19 LSR. When the number of included studies is too small, the reason for not testing "reporting biases" should be explained in the results. Item 22 asks authors to present assessments of the certainty or confidence in the body of evidence for each assessed outcome. Currently, the Grading of Recommendations, Assessment, Development and Evaluations (GRADE) tool is widely used to grade the quality of evidence for each outcome. Murad suggested that GRADE rating results can be used as triggers for retiring LSRs from the living mode [97]. Therefore, we strongly recommend that authors of COVID-19 LSRs use the GRADE tool to grade the quality of evidence for each outcome.

Through linear regression, we identified factors associated with the quality of COVID-19 LSRs, namely the number of included studies, funding, and registration (all of which were positively correlated with quality). Given that including more studies may be associated with higher-quality COVID-19 LSRs, we recommend that authors conduct a more comprehensive search and make every effort to include all eligible studies. Existing evidence has confirmed the high academic value of gray literature during the COVID-19 pandemic [98]. Therefore, authors may consider including eligible gray literature, as appropriate, to enhance the quality of COVID-19 LSRs. Funding may mean a more diverse author team (e.g. methodologists, informaticians), and sustaining LSRs requires funding [99], so we suggest that authors seek adequate funding for their research. Many studies have shown that registration is positively correlated with SR quality [100,101,102], as it helps to prevent authors from selectively reporting findings that favor publication. Therefore, we recommend that all authors of COVID-19 LSRs register their reviews and make their protocols available on websites or in journals.

Surprisingly, claiming adherence to the PRISMA statement did not improve the reporting quality of COVID-19 LSRs. We speculate that this may be due to almost half of the LSRs (46.9%) following the PRISMA 2009 statement (as the PRISMA 2020 statement was released in March 2021), which has significant differences from the PRISMA 2020 statement.

The potential association among international cooperation, number of authors, and number of institutions (i.e., more authors means more institutions, which necessarily implies more international collaboration) may be one of the reasons why they do not show a correlation with the quality of COVID-19 LSRs.

Similar to Zheng's findings [95], our study found that over 89% of COVID-19 LSRs were published in SCI journals (this figure was 76.8% in Zheng's findings), with more than 64% of these journals having an IF greater than 5. This reflects the importance that high-impact journals place on COVID-19 LSRs and facilitates the wide dissemination of evidence [22]. In Zheng's findings, over 97% of LSRs were published in English. Similarly, in this study, all the COVID-19 LSRs were published in English. This suggests that LSR authors prioritize international communication of their findings, which could help overcome language barriers in translating clinical evidence into practice across different countries.

Despite conducting a comprehensive search, we did not identify any studies evaluating the quality of COVID-19 LSRs. Only one study, published in 2023, that evaluated the quality of different versions of LSRs was included for comparison in this study. A. Akl and his colleagues assessed the methodological and reporting quality of 64 LSRs (base version) published from February 2013 to April 2021 using AMSTAR-2 and the PRISMA 2009 statement, respectively [103]. The methodological quality findings of the two studies were generally consistent, except for domains 4, 8, 9, 10 and 12. A comparison of the proportion of "yes" responses in each AMSTAR-2 domain between this study and A. Akl's study is presented in Fig. 6. We speculate that these variations arise from significant differences in the inter-rater reliability of AMSTAR, which is influenced by the pairing of reviewers. Additionally, only 63% of the LSRs included in A. Akl's study were COVID-19 related. Due to the incomparability between the PRISMA 2009 statement and the PRISMA 2020 statement, we did not compare the reporting quality results of this study with those of A. Akl's study. The primary objective of A. Akl's study was not to evaluate the quality of LSRs but rather to describe their characteristics and understand their life cycles. Consequently, several important data points, such as the methodological and reporting quality results for each individual LSR, were not accessible. Therefore, A. Akl's study does not provide prescriptive guidance for LSR authors. In contrast, our study focuses specifically on COVID-19-related LSRs, and its findings can offer valuable insights for future authors of COVID-19 LSRs.

Strengths and limitations

Our study has several advantages. Firstly, we believe that we are the first to evaluate the methodological and reporting quality of COVID-19 LSRs. Secondly, our study identifies potential factors that could impact the quality of COVID-19 LSRs, which could inform future development of such studies. Thirdly, we conducted a systematic search, including an updated search in May 2022, to ensure all eligible COVID-19 LSRs were included. However, our study also has limitations. Firstly, the PRISMA 2020 statement acknowledges that applying it to LSRs presents some challenges, such as reporting key data during the production process (e.g., search frequency, screening frequency, update frequency). Secondly, we used the AMSTAR-2 tool to assess all included COVID-19 LSRs, but this tool is designed for assessing healthcare intervention SRs and is not suitable for evaluating the "living" domain in the production of COVID-19 LSRs.

Conclusion

Improvement is needed in the methodological and reporting quality of COVID-19 LSRs. Researchers conducting COVID-19 LSRs should take note of the quality-related factors identified in this study to generate higher-quality evidence.

Availability of data and materials

The data used to support the findings of this study are included within the supplementary information.

References

Lu H, Stratton CW, Tang YW. Outbreak of pneumonia of unknown etiology in Wuhan, China: The mystery and the miracle. J Med Virol. 2020;92(4):401–2.

WHO[homepage on the Internet]. Naming the coronavirus disease (COVID-19) and the virus that causes it. 2020–02–11; Available from:https://www.who.int/emergencies/diseases/novel-coronavirus-2019/technical-guidance/naming-the-coronavirus-disease-(covid-2019)-and-the-virus-that-causes-it, 2022–05–09.

World Health Organization[homepage on the Internet]. WHO Coronavirus Disease (COVID-19) Dashboard. 2022; Available from:https://covid19.who.int/. Accessed 03–09, 2022.

Zarocostas J. How to fight an infodemic. Lancet (London, England). 2020;395(10225):676.

Casigliani V, De Nard F, De Vita E, et al. Too much information, too little evidence: is waste in research fuelling the covid-19 infodemic? BMJ. 2020;370: m2672.

Gazendam A, Ekhtiari S, Wong E, et al. The “Infodemic” of journal publication associated with the novel coronavirus disease. J Bone Joint Surg Am. 2020;102(13): e64.

The Cochrane Library[homepage on the Internet]. Systematic Reviews. Available from:https://swiss.cochrane.org/resources/systematic-reviews, 2022–05–11.

Caldwell PH, Bennett T. Easy guide to conducting a systematic review. J Paediatr Child Health. 2020;56(6):853–6.

Wormald R, Evans J. What makes systematic reviews systematic and why are they the highest level of evidence? Ophthalmic Epidemiol. 2018;25(1):27–30.

Institute of Medicine. Finding What Works in Health Care: Standards for Systematic Reviews. Washington, DC: The National Academies Press; 2011.

Bero LA, Jadad AR. How consumers and policymakers can use systematic reviews for decision making. Ann Intern Med. 1997;127(1):37–42.

Garner P, Hopewell S, Chandler J, et al. When and how to update systematic reviews: consensus and checklist. BMJ. 2016;354: i3507.

Jadad AR, Cook DJ, Jones A, et al. Methodology and reports of systematic reviews and meta-analyses: a comparison of Cochrane reviews with articles published in paper-based journals. JAMA. 1998;280(3):278–80.

Elliott JH, Turner T, Clavisi O, et al. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 2014;11(2): e1001603.

Elliott JH, Synnot A, Turner T, et al. Living systematic review: 1. Introduction-the why, what, when, and how. J Clin Epidemiol. 2017;91:23–30.

Cochrane Collaboration [homepage on the Internet]. Guidance for the production and publication of Cochrane living systematic reviews: Cochrane Reviews in living mode. 2019; Available from:https://community.cochrane.org/sites/default/files/uploads/inline-files/Transform/201912_LSR_Revised_Guidance.pdf. Accessed 03–09, 2022.

D’Souza R, Malhamé I, Shah PS. Evaluating perinatal outcomes during a pandemic: a role for living systematic reviews. Acta Obstet Gynecol Scand. 2022;101(1):4–6.

Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339: b2700.

Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358: j4008.

Shea BJ, Bouter LM, Peterson J, et al. External validation of a measurement tool to assess systematic reviews (AMSTAR). PLoS ONE. 2007;2(12): e1350.

do Borges Nascimento IJ, O’Mathúna DP, von Groote TC, et al. Coronavirus disease (COVID-19) pandemic: an overview of systematic reviews. BMC Infect Dis. 2021;21(1):525.

Li Y, Cao L, Zhang Z, et al. Reporting and methodological quality of COVID-19 systematic reviews needs to be improved: an evidence mapping. J Clin Epidemiol. 2021;135:17–28.

Chen Y, Li L, Zhang Q, et al. Epidemiology, methodological quality, and reporting characteristics of systematic reviews and meta-analyses on coronavirus disease 2019: a cross-sectional study. Medicine. 2021;100(47): e27950.

Cuschieri S. The STROBE guidelines. Saudi J Anaesth. 2019;13(Suppl 1):S31–S34.

Iannizzi C, Dorando E, Burns J, et al. Methodological challenges for living systematic reviews conducted during the COVID-19 pandemic: a concept paper. J Clin Epidemiol. 2021;141:82–9.

Lorenz RC, Matthias K, Pieper D, et al. A psychometric study found AMSTAR 2 to be a valid and moderately reliable appraisal tool. J Clin Epidemiol. 2019;114:133–40.

Perry R, Whitmarsh A, Leach V, Davies P. A comparison of two assessment tools used in overviews of systematic reviews: ROBIS versus AMSTAR-2. Syst Rev. 2021;10(1):273.

Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ (Clinical Research Ed). 2021;372: n71.

Zhu Y, Fan L, Zhang H, et al. Is the best evidence good enough: quality assessment and factor analysis of meta-analyses on depression. PLoS ONE. 2016;11(6): e0157808.

Akinwande O, Dikko HG, Agboola S. Variance inflation factor: as a condition for the inclusion of suppressor variable(s) in regression analysis. Open J Stat. 2015;05:754–67.

Allotey J, Chatterjee S, Kew T, et al. SARS-CoV-2 positivity in offspring and timing of mother-to-child transmission: living systematic review and meta-analysis. BMJ. 2022;376: e067696.

Allotey J, Stallings E, Bonet M, et al. Clinical manifestations, risk factors, and maternal and perinatal outcomes of coronavirus disease 2019 in pregnancy: living systematic review and meta-analysis. BMJ. 2020;370: m3320.

Amer YS, Titi MA, Godah MW, et al. International alliance and AGREE-ment of 71 clinical practice guidelines on the management of critical care patients with COVID-19: a living systematic review. J Clin Epidemiol. 2022;142:333–70.

Amorim Dos Santos J, Normando AGC, da Carvalho Silva RL, et al. Oral manifestations in patients with covid-19: a 6-month update. J Dent Res. 2021;100(12):1321–9.

Ansems K, Grundeis F, Dahms K, et al. Remdesivir for the treatment of COVID-19. Cochrane Database Syst Rev. 2021;8(8):CD014962.

Asiimwe IG, Pushpakom S, Turner RM, Kolamunnage-Dona R, Jorgensen AL, Pirmohamed M. Cardiovascular drugs and COVID-19 clinical outcomes: a living systematic review and meta-analysis. Br J Clin Pharmacol. 2021;87(12):4534–45.

Baladia E, Pizarro AB, Ortiz-Muñoz L, Rada G. Vitamin C for COVID-19: a living systematic review. Medwave. 2020;20(6): e7978.

Bartoszko JJ, Siemieniuk RAC, Kum E, et al. Prophylaxis against covid-19: living systematic review and network meta-analysis. BMJ. 2021;373: n949.

Bell V, Wade D. Mental health of clinical staff working in high-risk epidemic and pandemic health emergencies a rapid review of the evidence and living meta-analysis. Soc Psychiatry Psychiatr Epidemiol. 2021;56(1):1–11.

Bonardi O, Wang Y, Li K, et al. Effects of COVID-19 mental health interventions among children, adolescents, and adults not quarantined or undergoing treatment due to COVID-19 infection: a systematic review of randomised controlled trials. Can J Psychiatry. 2022;67(5):336–50.

Brümmer LE, Katzenschlager S, Gaeddert M, et al. Accuracy of novel antigen rapid diagnostics for SARS-CoV-2: a living systematic review and meta-analysis. PLoS Med. 2021;18(8): e1003735.

Buitrago-Garcia D, Egli-Gany D, Counotte MJ, et al. Occurrence and transmission potential of asymptomatic and presymptomatic SARS-CoV-2 infections: a living systematic review and meta-analysis. PLoS Med. 2020;17(9): e1003346.

Bwire GM, Njiro BJ, Mwakawanga DL, Sabas D, Sunguya BF. Possible vertical transmission and antibodies against SARS-CoV-2 among infants born to mothers with COVID-19: a living systematic review. J Med Virol. 2021;93(3):1361–9.

Cares-Marambio K, Montenegro-Jiménez Y, Torres-Castro R, et al. Prevalence of potential respiratory symptoms in survivors of hospital admission after coronavirus disease 2019 (COVID-19): a systematic review and meta-analysis. Chron Respir Dis. 2021;18:14799731211002240.

Centeno-Tablante E, Medina-Rivera M, Finkelstein JL, et al. Transmission of SARS-CoV-2 through breast milk and breastfeeding: a living systematic review. Ann N Y Acad Sci. 2021;1484(1):32–54.

Ceravolo MG, Andrenelli E, Arienti C, et al. Rehabilitation and COVID-19: rapid living systematic review by Cochrane Rehabilitation Field - third edition. Update as of June 30th, 2021. Eur J Phys Rehabil Med. 2021;57(5):850–7.

Davidson M, Menon S, Chaimani A, et al. Interleukin-1 blocking agents for treating COVID-19. Cochrane Database Syst Rev. 2022;1(1):CD015308.

Deeks JJ, Dinnes J, Takwoingi Y, et al. Antibody tests for identification of current and past infection with SARS-CoV-2. Cochrane Database Syst Rev. 2020;6(6):CD013652.

Dong F, Liu HL, Dai N, Yang M, Liu JP. A living systematic review of the psychological problems in people suffering from COVID-19. J Affect Disord. 2021;292:172–88.

Dzinamarira T, Nkambule SJ, Hlongwa M, et al. Risk factors for COVID-19 infection among healthcare workers. A first report from a living systematic review and meta-analysis. Saf Health Work. 2022;13:263.

Elvidge J, Summerfield A, Nicholls D, Dawoud D. Diagnostics and treatments of COVID-19: a living systematic review of economic evaluations. Value Health. 2022;25:773.

Ghosn L, Chaimani A, Evrenoglou T, et al. Interleukin-6 blocking agents for treating COVID-19: a living systematic review. Cochrane Database Syst Rev. 2021;3(3):CD013881.

Gómez-Ochoa SA, Franco OH, Rojas LZ, et al. COVID-19 in health-care workers: a living systematic review and meta-analysis of prevalence, risk factors, clinical characteristics, and outcomes. Am J Epidemiol. 2021;190(1):161–75.

Griesel M, Wagner C, Mikolajewska A, et al. Inhaled corticosteroids for the treatment of COVID-19. Cochrane Database Syst Rev. 2022;3(3):CD015125.

Harder T, Koch J, Vygen-Bonnet S, et al. Efficacy and effectiveness of COVID-19 vaccines against SARS-CoV-2 infection: interim results of a living systematic review, 1 January to 14 May 2021. Euro Surveill. 2021;26(28):2100563.

Harder T, Külper-Schiek W, Reda S, et al. Effectiveness of COVID-19 vaccines against SARS-CoV-2 infection with the Delta (B.1.617.2) variant: second interim results of a living systematic review and meta-analysis, 1 January to 25 August 2021. Euro Surveill. 2021;26(41):2100920.

Helfand M, Fiordalisi C, Wiedrick J, et al. Risk for reinfection after SARS-CoV-2: a living, rapid review for American College of Physicians practice points on the role of the antibody response in conferring immunity following SARS-CoV-2 infection. Ann Intern Med. 2022;175:547.

Hernandez AV, Roman YM, Pasupuleti V, Barboza JJ, White CM. Hydroxychloroquine or chloroquine for treatment or prophylaxis of COVID-19: a living systematic review. Ann Intern Med. 2020;173(4):287–96.

Hussain S, Riad A, Singh A, et al. Global prevalence of COVID-19-associated mucormycosis (CAM): living systematic review and meta-analysis. J Fungi (Basel). 2021;7(11):985.

John A, Eyles E, Webb RT, et al. The impact of the COVID-19 pandemic on self-harm and suicidal behaviour: update of living systematic review. F1000Res. 2020;9:1097.

Juul S, Nielsen EE, Feinberg J, et al. Interventions for treatment of COVID-19: a living systematic review with meta-analyses and trial sequential analyses (The LIVING Project). PLoS Med. 2020;17(9): e1003293.

Kirkham AM, Monaghan M, Bailey AJM, et al. Mesenchymal stem/stromal cell-based therapies for COVID-19: first iteration of a living systematic review and meta-analysis: MSCs and COVID-19. Cytotherapy. 2022;24:639.

Korang SK, von Rohden E, Veroniki AA, et al. Vaccines to prevent COVID-19: a living systematic review with trial sequential analysis and network meta-analysis of randomized clinical trials. PLoS ONE. 2022;17(1): e0260733.

Kreuzberger N, Hirsch C, Chai KL, et al. SARS-CoV-2-neutralising monoclonal antibodies for treatment of COVID-19. Cochrane Database Syst Rev. 2021;9(9):CD013825.

Langford BJ, So M, Raybardhan S, et al. Bacterial co-infection and secondary infection in patients with COVID-19: a living rapid review and meta-analysis. Clin Microbiol Infect. 2020;26(12):1622–9.

Mackey K, King VJ, Gurley S, et al. Risks and impact of angiotensin-converting enzyme inhibitors or angiotensin-receptor blockers on SARS-CoV-2 infection in adults: a living systematic review. Ann Intern Med. 2020;173(3):195–203.

Maguire BJ, McLean ARD, Rashan S, et al. Baseline results of a living systematic review for COVID-19 clinical trial registrations. Wellcome Open Res. 2020;5:116.

Melo AKG, Milby KM, Caparroz A, et al. Biomarkers of cytokine storm as red flags for severe and fatal COVID-19 cases: a living systematic review and meta-analysis. PLoS ONE. 2021;16(6): e0253894.

Michelen M, Manoharan L, Elkheir N, et al. Characterising long COVID: a living systematic review. BMJ Glob Health. 2021;6(9):e005427.

Mikolajewska A, Fischer AL, Piechotta V, et al. Colchicine for the treatment of COVID-19. Cochrane Database Syst Rev. 2021;10(10):CD015045.

O’Byrne L, Webster KE, MacKeith S, Philpott C, Hopkins C, Burton MJ. Interventions for the treatment of persistent post-COVID-19 olfactory dysfunction. Cochrane Database Syst Rev. 2021;7(7):CD013876.

Qiu X, Nergiz AI, Maraolo AE, Bogoch II, Low N, Cevik M. The role of asymptomatic and pre-symptomatic infection in SARS-CoV-2 transmission – a living systematic review. Clin Microbiol Infect. 2021;27(4):511–9.

Rada G, Corbalán J, Rojas P. Cell-based therapies for COVID-19: a living, systematic review. Medwave. 2020;20(11): e8079.

Rocha APD, Atallah ÁN, Pinto A, et al. COVID-19 and patients with immune-mediated inflammatory diseases undergoing pharmacological treatments: a rapid living systematic review. Sao Paulo Med J. 2020;138(6):515–20.

Salameh JP, Leeflang MM, Hooft L, et al. Thoracic imaging tests for the diagnosis of COVID-19. Cochrane Database Syst Rev. 2020;9:CD013639.

Schlesinger S, Neuenschwander M, Lang A, et al. Risk phenotypes of diabetes and association with COVID-19 severity and death: a living systematic review and meta-analysis. Diabetologia. 2021;64(7):1480–91.

Schünemann HJ, Khabsa J, Solo K, et al. Ventilation techniques and risk for transmission of coronavirus disease, including COVID-19: a living systematic review of multiple streams of evidence. Ann Intern Med. 2020;173(3):204–16.

Siemieniuk RA, Bartoszko JJ, Díaz Martinez JP, et al. Antibody and cellular therapies for treatment of covid-19: a living systematic review and network meta-analysis. BMJ. 2021;374: n2231.

Siemieniuk RA, Bartoszko JJ, Ge L, et al. Drug treatments for covid-19: living systematic review and network meta-analysis. BMJ. 2020;370: m2980.

Silveira FM, Mello ALR, da Silva FL, et al. Morphological and tissue-based molecular characterization of oral lesions in patients with COVID-19: a living systematic review. Arch Oral Biol. 2022;136: 105374.

Soto-Cámara R, García-Santa-Basilia N, Onrubia-Baticón H, et al. Psychological impact of the COVID-19 pandemic on out-of-hospital health professionals: a living systematic review. J Clin Med. 2021;10(23):5578.

Stroehlein JK, Wallqvist J, Iannizzi C, et al. Vitamin D supplementation for the treatment of COVID-19: a living systematic review. Cochrane Database Syst Rev. 2021;5(5):CD015043.

Tleyjeh IM, Kashour Z, Riaz M, Hassett L, Veiga VC, Kashour T. Efficacy and safety of tocilizumab in COVID-19 patients: a living systematic review and meta-analysis, first update. Clin Microbiol Infect. 2021;27(8):1076–82.

Valk SJ, Piechotta V, Chai KL, et al. Convalescent plasma or hyperimmune immunoglobulin for people with COVID-19: a rapid review. Cochrane Database Syst Rev. 2020;5(5):CD013600.

Verdejo C, Vergara-Merino L, Meza N, et al. Macrolides for the treatment of COVID-19: a living, systematic review. Medwave. 2020;20(11): e8074.

Verdugo-Paiva F, Acuña MP, Solá I, Rada G. Remdesivir for the treatment of COVID-19: a living systematic review. Medwave. 2020;20(11): e8080.

Verdugo-Paiva F, Izcovich A, Ragusa M, Rada G. Lopinavir-ritonavir for COVID-19: a living systematic review. Medwave. 2020;20(6): e7967.

Wagner C, Griesel M, Mikolajewska A, et al. Systemic corticosteroids for the treatment of COVID-19. Cochrane Database Syst Rev. 2021;8(8):CD014963.

Webster KE, O’Byrne L, MacKeith S, Philpott C, Hopkins C, Burton MJ. Interventions for the prevention of persistent post-COVID-19 olfactory dysfunction. Cochrane Database Syst Rev. 2021;7(7):CD013877.

Wilt TJ, Kaka AS, MacDonald R, Greer N, Obley A, Duan-Porter W. Remdesivir for adults with COVID-19: a living systematic review for American College of Physicians practice points. Ann Intern Med. 2021;174(2):209–20.

Wynants L, Van Calster B, Collins GS, et al. Prediction models for diagnosis and prognosis of covid-19: systematic review and critical appraisal. BMJ. 2020;369: m1328.

Xu W, Li X, Dong Y, et al. SARS-CoV-2 transmission in schools: an updated living systematic review (version 2; November 2020). J Glob Health. 2021;11:10004.

Yang J, D’Souza R, Kharrat A, et al. Coronavirus disease 2019 pandemic and pregnancy and neonatal outcomes in general population: a living systematic review and meta-analysis (updated Aug 14, 2021). Acta Obstet Gynecol Scand. 2022;101(1):7–24.

Zhang X, Shang L, Fan G, et al. The efficacy and safety of Janus kinase inhibitors for patients with COVID-19: a living systematic review and meta-analysis. Front Med. 2021;8: 800492.

Zheng Q, Xu J, Gao Y, et al. Past, present and future of living systematic review: a bibliometrics analysis. BMJ Glob Health. 2022;7(10):e009378.

Harvey LA, Dijkers MP. Should trials that are highly vulnerable to bias be excluded from systematic reviews? Spinal Cord. 2019;57(9):715–6.

Murad MH, Wang Z, Chu H, Lin L, El Mikati IK, Khabsa J, Akl EA, Nieuwlaat R, Schuenemann HJ, Riaz IB. Proposed triggers for retiring a living systematic review. BMJ Evid Based Med. 2023:bmjebm-2022-112100.

Kousha K, Thelwall M, Bickley M. The high scholarly value of grey literature before and during Covid-19. Scientometrics. 2022;127(6):3489–504.

Cochrane Collaboration [homepage on the Internet]. Guidance for the production and publication of Cochrane living systematic reviews: Cochrane Reviews in living mode. 2019; Available from: https://community.cochrane.org/sites/default/files/uploads/inline-files/Transform/201912_LSR_Revised_Guidance.pdf. Accessed 03–09, 2022.

Helliwell JA, Thompson J, Smart N, Jayne DG, Chapman SJ. Duplication and nonregistration of COVID-19 systematic reviews: bibliometric review. Health Sci Rep. 2022;5(3): e541.

Sideri S, Papageorgiou SN, Eliades T. Registration in the international prospective register of systematic reviews (PROSPERO) of systematic review protocols was associated with increased review quality. J Clin Epidemiol. 2018;100:103–10.

Ge L, Tian JH, Li YN, et al. Association between prospective registration and overall reporting and methodological quality of systematic reviews: a meta-epidemiological study. J Clin Epidemiol. 2018;93:45–55.

Akl EA, El Khoury R, Khamis AM, et al. The life and death of living systematic reviews: a methodological survey. J Clin Epidemiol. 2023;156:11–21.

Acknowledgements

Not applicable.

Funding

This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Author information

Contributions

Jiefeng Luo: Conceptualization, Data curation, Formal analysis, Software, Writing – original draft. Zhe Chen: Conceptualization, Validation, Writing – review & editing, Methodology. Dan Liu: Writing – review & editing, Supervision, Validation. Hailong Li: Writing – review & editing, Supervision, Data curation. Siyi He: Writing – review & editing. Linan Zeng: Writing – review & editing, Methodology, Resources. Mengting Yang: Investigation, Data curation. Zheng Liu: Investigation, Data curation. Xue Xiao: Project administration, Resources, Supervision, Writing – review & editing. Lingli Zhang: Project administration, Resources, Supervision, Writing – review & editing.

Ethics declarations

Ethics approval and consent to participate

This study involved no study participants and was exempt from institutional review. All data analysed in this study came from previously published articles.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Supplementary Information

Additional file 1: Appendix I. STROBE Checklists. Appendix II. Search strategies. Appendix III. PRISMA statement 2020 assessment results. Appendix IV. AMSTAR-2 assessment results. Appendix V. Characteristics of the included studies. Appendix VI. List of the excluded studies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Luo, J., Chen, Z., Liu, D. et al. Methodological quality and reporting quality of COVID-19 living systematic review: a cross-sectional study. BMC Med Res Methodol 23, 175 (2023). https://doi.org/10.1186/s12874-023-01980-y
