Abstract

Background

Multi-center studies can generate robust and generalizable evidence, but privacy considerations and legal restrictions often make it challenging or impossible to pool individual-level data across data-contributing sites. With binary outcomes, privacy-protecting distributed algorithms to conduct logistic regression analyses have been developed. However, the risk ratio often provides a more transparent interpretation of the exposure-outcome association than the odds ratio. Modified Poisson regression has been proposed to directly estimate adjusted risk ratios and produce confidence intervals with the correct nominal coverage when individual-level data are available. There are currently no distributed regression algorithms to estimate adjusted risk ratios while avoiding pooling of individual-level data in multi-center studies.

Methods

By leveraging the Newton-Raphson procedure, we adapted the modified Poisson regression method to estimate multivariable-adjusted risk ratios using only summary-level information in multi-center studies. We developed and tested the proposed method using both simulated and real-world data examples. We compared its results with the results from the corresponding pooled individual-level data analysis.

Results

Our proposed method produced the same adjusted risk ratio estimates and standard errors as the corresponding pooled individual-level data analysis without pooling individual-level data across data-contributing sites.

Conclusions

We developed and validated a distributed modified Poisson regression algorithm for valid and privacy-protecting estimation of adjusted risk ratios and confidence intervals in multi-center studies. This method allows computation of a more interpretable measure of association for binary outcomes, along with valid construction of confidence intervals, without sharing of individual-level data.

Similar content being viewed by others

Background

In studies where the outcome variable is binary, a logistic regression model is commonly used for convenient estimation of the adjusted (i.e., conditional on measured covariates) odds ratio comparing exposed to unexposed individuals [1, 2]. However, the odds ratio is not easily interpretable because, unlike the risk ratio, it is not a direct measure of a ratio of probabilities, often of primary interest to patients and clinicians [3]. Although the odds ratio approximates the risk ratio under the rare disease assumption (e.g., odds of the outcome < 10% in all exposure and confounder categories) [4], it can be quite different from the risk ratio and produce misleading results when this assumption is not met [3, 5,6,7,8].

Log-binomial regression can directly estimate adjusted risk ratios without requiring the rare disease assumption, but it is susceptible to non-convergence issues when the maximum likelihood estimators lie near the boundary of the parameter space [9, 10]. Poisson regression is another approach to estimating adjusted risk ratios and does not have any known convergence problems in its parameter space. This approach provides consistent estimates of adjusted risk ratios but incorrect estimates of the variance because it relies on a Poisson distributed, rather than binomially distributed, outcome. In practice, standard implementation of Poisson regression tends to produce conservative confidence intervals [11]. As a solution to these challenges, Zou [12] proposed a modified Poisson regression approach that allows direct estimation of adjusted risk ratios even when the rare disease assumption is not met. This approach avoids the convergence issues typically observed in log-binomial regression and, unlike conventional Poisson regression, provides consistent variance estimates and confidence intervals with the correct nominal coverage.

A growing number of studies are now conducted within multi-center distributed data networks [13]. Such collaborations combine data from multiple sources to generate more reliable evidence using larger and more representative samples. Within these networks, each data-contributing site (i.e., data partner) maintains physical control of their data and may not always be able or willing to share individual-level data for analysis. For example, the Sentinel System is a national program funded by the U.S. Food and Drug Administration to proactively monitor the safety of regulated medical products using electronic healthcare data from multiple data partners [14]. In multi-center studies like those conducted within the Sentinel System, it is often crucial to minimize sharing of sensitive individual-level data to protect patient privacy. The development and applications of analytic methods that enable valid statistical analysis without pooling individual-level data are therefore increasingly important.

Privacy-protecting distributed algorithms to conduct logistic regression analyses have been previously developed [15,16,17,18]. To our knowledge, there are currently no distributed algorithms to estimate adjusted risk ratios via modified Poisson regression while avoiding pooling of individual-level data across data partners. In this paper, we propose such an algorithm and provide example R [19] code to implement the algorithm. We also illustrate in simulated and real-world data examples that our algorithm produces adjusted risk ratio estimates and standard errors equivalent to those obtained from the corresponding pooled individual-level data analysis.

Methods

Theory of modified Poisson regression for pooled individual-level data

We begin by describing the general theory of modified Poisson regression in single-database studies. Let X be a vector of covariates, E a binary exposure indicator (E = 1 if exposed and E = 0 if unexposed), and Y the binary outcome variable (Y = 1 if the outcome occurs and Y = 0 otherwise).

Let Z be a vector of information on the exposure and covariates. Specifically, Z = (1, g(E, XT))T where g(E, XT) is a vector containing a specified function of E and X. Assume the risk of the outcome conditional on E and X can be written as

where β is an unknown vector of parameters. An example of model 1 is \( P\left(Y=1|E,\boldsymbol{X}\right)=\exp \left({\beta}_0+{\beta}_E\ E+{\boldsymbol{\beta}}_{\boldsymbol{X}}^T\boldsymbol{X}\right) \). In this special case, the risk ratio of the outcome comparing the exposed to unexposed and adjusting for covariates X is given by P(Y = 1| E = 1, X)/P(Y = 1| E = 0, X) = exp(βE).

Alternatively, we might assume a more flexible model that allows interactions between E and X: \( P\left(Y=1|\boldsymbol{X},E\right)=\exp \left({\beta}_0+{\beta}_E\ E+{\boldsymbol{\beta}}_{\boldsymbol{X}}^T\boldsymbol{X}+{\boldsymbol{\beta}}_{E\boldsymbol{X}}^TE\boldsymbol{X}\right) \). Under this model, the risk ratio of the outcome comparing the exposed to unexposed and adjusting for covariates X is given by \( P\left(Y=1|E=1,\boldsymbol{X}\right)/P\left(Y=1|E=0,\boldsymbol{X}\right)=\exp \left({\beta}_E+{\boldsymbol{\beta}}_{E\boldsymbol{X}}^T\boldsymbol{X}\right) \), which depends on the value of X.

Suppose we have an independent and identically distributed sample of size n. For each individual i, the following variables are measured: Let Xi be a vector of covariates, Ei a binary exposure indicator (Ei = 1 if exposed and Ei = 0 if unexposed), Yi the binary outcome variable (Yi = 1 if the outcome occurs and Yi = 0 otherwise), and Zi a vector of information on the exposure and covariates, i.e., Zi = (1, g(Ei, XiT))T.

Zou [12] provided the theoretical justification for his proposed approach in the setting of a 2 by 2 table (a binary exposure and no covariates). The justification for this approach can be established more generally using the theory of unbiased estimating equations [20]. Provided model 1 is correctly specified, we have E[{Y − exp(ZTβ)}Z] = 0, which leads to the unbiased estimating equation

Solving (2) for β gives \( \hat{\boldsymbol{\beta}} \), a consistent and asymptotically normal estimator for the true β.

A consistent estimator of the variance of \( \hat{\boldsymbol{\beta}} \) is then given by the sandwich variance estimator [20]

where \( \boldsymbol{H}\left(\hat{\boldsymbol{\beta}}\right)=-\sum \limits_{i=1}^n\exp \left({{\boldsymbol{Z}}_i}^T\hat{\boldsymbol{\beta}}\right){\boldsymbol{Z}}_i{{\boldsymbol{Z}}_i}^T \) and \( \boldsymbol{B}\left(\hat{\boldsymbol{\beta}}\right)=\sum \limits_{i=1}^n{\left\{{Y}_i-\exp \left({{\boldsymbol{Z}}_i}^T\hat{\boldsymbol{\beta}}\right)\right\}}^2{\boldsymbol{Z}}_i{{\boldsymbol{Z}}_i}^T \). Zou [12] referred to this procedure as modified Poisson regression because (2) is equivalent to the score equation for the Poisson likelihood but the variance estimator does not rely on the Poisson distribution assumption (clearly unreasonable for binary outcomes).

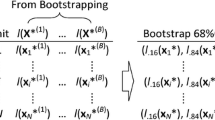

Distributed algorithm for conducting modified Poisson regression in multi-center studies

Suppose the n individuals’ data are physically stored in K data partners that are unable to share their individual-level data with the analysis center. For k = 1, …, K, let Ωk denote the set of indexes of individuals who are members of the k th data partner.

When the individual-level data are available to the analysis center, the estimator \( \hat{\boldsymbol{\beta}} \) and its corresponding variance estimator can be obtained with off-the-shelf statistical software. However, when the individual-level data are not available, (2) cannot be directly solved for β to obtain \( \hat{\boldsymbol{\beta}} \), and \( \hat{\mathit{\operatorname{var}}}\left(\hat{\boldsymbol{\beta}}\right) \) cannot be directly calculated using (3). Here we describe a distributed algorithm that produces identical \( \hat{\boldsymbol{\beta}} \) and \( \hat{\mathit{\operatorname{var}}}\left(\hat{\boldsymbol{\beta}}\right) \) in multi-center studies where individual-level data are not pooled.

We leverage the Newton-Raphson method such that \( \hat{\boldsymbol{\beta}} \) can be obtained using an iteration-based procedure with only summary-level information being shared between the data partners and the analysis center in each iteration. The r th iterated estimate of β using the Newton-Raphson method is

where β(r − 1) is the (r − 1) th iterated estimate of β, \( {\boldsymbol{S}}^{(r)}=\sum \limits_{i=1}^n\left\{{Y}_i-\exp \left({{\boldsymbol{Z}}_i}^T{\boldsymbol{\beta}}^{\left(\mathrm{r}-1\right)}\right)\right\}{\boldsymbol{Z}}_i \), and \( {\boldsymbol{H}}^{(r)}=-\sum \limits_{i=1}^n\exp \left({{\boldsymbol{Z}}_i}^T{\boldsymbol{\beta}}^{\left(\mathrm{r}-1\right)}\right){\boldsymbol{Z}}_i{{\boldsymbol{Z}}_i}^T. \)

We observe that S(r) and H(r) can be re-written as summation of site-specific quantities:

where

and

where

Therefore, to calculate S(r) and H(r), each data partner k = 1, …, K only needs to calculate and share with the analysis center the summary-level information Sk(r) and Hk(r).

Next, consider estimation of the variance of \( \hat{\boldsymbol{\beta}} \). We observe that

where

and

where

To calculate \( \boldsymbol{H}\left(\hat{\boldsymbol{\beta}}\right) \) and \( \boldsymbol{B}\left(\hat{\boldsymbol{\beta}}\right) \), each data partner k = 1, …, K only needs to calculate and share the summary-level information \( {\boldsymbol{H}}_k\left(\hat{\boldsymbol{\beta}}\right) \) and \( {\boldsymbol{B}}_k\left(\hat{\boldsymbol{\beta}}\right) \) after receiving the value of \( \hat{\boldsymbol{\beta}} \) from the analysis center. Unlike in the estimation of β, no iterations are needed in the sandwich variance estimation.

We summarize our distributed algorithm for conducting modified Poisson regression in multi-center studies below.

Point estimation

Step 0 (Determination of starting values)

The analysis center specifies the starting values for the components of β(0) and sends these values to all data partners. Then, for each iteration r until the convergence criteria are met, the following two steps are repeated:

Step 1 (r th iteration of data partners)

Each data partner k = 1, …, K calculates Sk(r) and Hk(r) using (6) and (8), respectively, based on β(r − 1) received from the analysis center. All data partners then share the values of Sk(r) and Hk(r) with the analysis center.

Step 2 (r th iteration of the analysis center)

The analysis center calculates S(r) and H(r) using (5) and (7), respectively. The analysis center then calculates β(r) using (4) and shares the value of β(r) with all data partners.

The iteration procedure is considered to have converged when the change in the estimates between iterations is within a user-specified tolerance value. In numerical studies to be presented later, we considered a convergence criterion to be met at the (R + 1) th iteration if \( \underset{l}{\max}\left|{\delta_l}^{\left(\mathrm{R}+1\right)}\right|<{10}^{-8} \), where δl(R + 1) = βl(R + 1) − βl(R) if ∣βl(R) ∣ < 0.01 and δl(R + 1) = (βl(R + 1) − βl(R))/βl(R) otherwise, and βl(R) is the l th element of β(R). Once achieving convergence, the analysis center shares the final estimate \( \hat{\boldsymbol{\beta}} \) with all data partners.

Variance estimation

Step 1 (Calculation of summary-level information by data partners)

Each data partner k = 1, …, K calculates \( {\boldsymbol{H}}_k\left(\hat{\boldsymbol{\beta}}\right) \) and \( {\boldsymbol{B}}_k\left(\hat{\boldsymbol{\beta}}\right) \) using (10) and (12), respectively, and then shares the values of \( {\boldsymbol{H}}_k\left(\hat{\boldsymbol{\beta}}\right) \) and \( {\boldsymbol{B}}_k\left(\hat{\boldsymbol{\beta}}\right) \) with the analysis center.

Step 2 (Calculation of the variance estimate by the analysis center)

The analysis center calculates \( \boldsymbol{H}\left(\hat{\boldsymbol{\beta}}\right) \) and \( \boldsymbol{B}\left(\hat{\boldsymbol{\beta}}\right) \) using (9) and (11), respectively, and then calculates the estimated variance \( \hat{\mathit{\operatorname{var}}}\left(\hat{\boldsymbol{\beta}}\right) \) using (3).

Due to mathematical equivalence, the above procedure would provide the same point estimates and sandwich variance estimates as the analysis that uses individual-level data pooled across data partners.

Results

Analysis of simulated data

We considered a simulation design that enabled us to assess the performance of the proposed summary-level modified Poisson method in the presence of multiple data partners, multiple covariates (including but not limited to data source indicators), and differences in exposure prevalence and outcome incidence across data partners. Although modified Poisson regression is broadly applicable with rare and common outcomes, here we considered a scenario with common outcomes, where logistic regression would provide biased estimates of adjusted risk ratios.

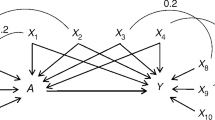

Specifically, we simulated a distributed network with three (i.e., K = 3) data partners and n = 10000 individuals with 5000, 2000, and 3000 individuals contributing data from the first, second, and third data partners, respectively. We considered five covariates X1, X2, X3, X4 and X5. We generated X1 as a Bernoulli variable with a mean (i.e., P(X1 = 1)) of 0.6, X2 as a continuous variable following the standard uniform distribution, X3 as a continuous variable following the unit exponential distribution, X4 as an indicator that an individual contributed data from the first data partner, and X5 as an indicator that an individual contributed data from the second data partner.

The exposure E was generated from a Bernoulli distribution with the probability of being exposed (E = 1) defined as 1/{1 + exp(0.73 − X1 − X2 + X3 − 0.2X4 + 0.2X5)}, indicating a non-randomized study. This setting led to different exposure prevalences across data partners. The resulting exposure prevalence was approximately 40% overall, 43% for the first data partner, 34% for the second data partner, and 38% for the third data partner. The outcome Y was generated from a Bernoulli distribution with the probability of having the outcome (Y = 1) defined as exp(ZTβ) = exp(−0.1 − 0.5E − 0.4X1 − 0.6X2 − 0.5X3 − 0.1X4 + 0.1X5) such that the true adjusted risk ratio comparing the exposed to unexposed was exp(−0.5) = 0.61. The resulting outcome incidence (i.e., risk) varied across the three data partners. This incidence was about 30% for the entire pooled data, 27% for the first data partner, 35% for the second data partner, and 31% for the third data partner.

As the reference, we first fit a modified Poisson regression model using pooled individual-level data (Table 1). We then implemented our proposed distributed algorithm that did not require sharing of individual-level data to estimate β. Based on the starting value β(0) = 0, the analysis took seven iterations to converge. The individual-level and summary-level methods produced identical point estimates and sandwich variance-based standard errors (Table 1). The Additional file 1 provides the summary-level information shared between the data partners and the analysis center during each iteration.

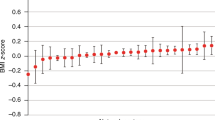

Analysis of real-world data

To further illustrate our method, we analyzed a dataset created from the IBM® Health MarketScan® Research Databases, which contain de-identified individual-level healthcare claims information from employers, health plans, hospitals, and Medicare and Medicaid programs fully compliant with U.S. privacy laws and regulations (e.g., Health Insurance Portability and Accountability Act). The study dataset included 9736 patients aged 18–79 years who received sleeve gastrectomy or Roux-en-Y gastric bypass between 1/1/2010 and 9/30/2015. The outcome of interest was any hospitalization during the 2-year follow-up period after surgery. The exposure variable was set to 1 if the patient received sleeve gastrectomy and 0 if the patient received Roux-en-Y gastric bypass. We estimated the risk ratio of hospitalization comparing sleeve gastrectomy with Roux-en-Y gastric bypass using the pooled individual-level data analysis and the summary-level information approach, adjusting for the following covariates identified during the 365-day period prior to the surgery: age; sex; Charlson/Elixhauser combined comorbidity score; diagnosis of asthma, atrial fibrillation, atrial flutter, coronary artery disease, deep vein thrombosis, gastroesophageal reflux disease, hypertension, ischemic stroke, myocardial infarction, pulmonary embolism, and sleep apnea; use of anticoagulants, assistive walking device, and home oxygen; unique drug classes dispensed and unique generic medications dispensed.

Of the 9736 patients in the study dataset, 7877 (81%) patients underwent the sleeve gastrectomy procedure and 1859 (19%) patients had the Roux-en-Y gastric bypass procedure. The outcome event was not rare in the study, with 1485 (19%) sleeve gastrectomy patients and 608 (33%) Roux-en-Y gastric bypass patients having at least one hospitalization during the two-year follow-up period. We randomly partitioned the dataset into three smaller datasets with 2000, 3000 and 4736 patients to create a “simulated” distributed data network. As the reference, the pooled individual-level data analysis produced \( {\hat{\beta}}_E=-0.4632219 \) with a standard error 0.0422368, and a 95% confidence interval: − 0.5460061, − 0.3804377. These results corresponded to an adjusted risk ratio of \( \exp \left({\hat{\beta}}_E\right)=0.63 \) with a 95% confidence interval: 0.58, 0.68. Based on the starting value β(0) = 0, the proposed summary-level modified Poisson method took seven iterations to converge and produced point estimates and sandwich variance-based standard errors identical to those observed in the corresponding pooled individual-level data analysis (Table 2). The adjusted odds ratio from logistic regression was 0.53. As expected, interpreting the estimated adjusted odds ratio as an estimate of the adjusted risk ratio amplified the protective effect of sleeve gastrectomy compared to Roux-en-Y gastric bypass, resulting in an effect estimate that was further from the null (suggesting a 10% greater relative protective effect) than the modified Poisson regression estimate.

As expected, we had difficulty fitting a log-binomial regression model within this bariatric surgery dataset. Under the starting value β(0) = 0, the first iterated estimate β(1) could not be calculated because the formula for β(1) under the log-binomial regression model includes 1 − exp(ZiTβ(0)) in the denominator, which takes the value 0 when β(0) = 0. We also considered three non-zero starting values. We first set the starting values for all parameters to 0.05, but the analysis stopped at the second iteration due to matrix singularity. We then let the starting values be the estimates obtained from a logistic regression model fit using the entire bariatric surgery dataset, and the analysis converged with \( {\hat{\beta}}_E=-0.7369683 \). Finally, we specified the starting values as the estimates obtained from the modified Poisson regression fit using the entire bariatric surgery dataset, and the analysis converged with \( {\hat{\beta}}_E=-0.4544135 \). These results illustrated the convergence problems of log-binomial regression and the sensitivity of this method to starting values. In comparison, the modified Poisson analysis had no convergence problems and its estimates remained the same as those presented in Table 2 when using these alternative starting values.

Discussion

In this paper, we proposed and demonstrated – in both simulated and real-world data – a method that adapts the modified Poisson approach to directly estimate adjusted risk ratios in multi-center studies where sharing of individual-level data is not always feasible or preferred. Our method produced the same risk ratio estimates and sandwich variance estimates as the corresponding pooled individual-level data analysis without pooling individual-level data across data partners. The required summary-level information does not contain any potentially identifiable individual-level data and therefore offers better privacy protection. Analytic methods like the one we proposed here complement appropriate governance and data use agreements to enable the conduct of multi-center studies, especially when sharing of individual-level data is challenging.

In terms of privacy protection, the proposed summary-level modified Poisson method serves as an intermediate approach between meta-analysis of site-specific effect estimates from modified Poisson analyses and modified Poisson analysis using pooled individual-level data. Compared to meta-analysis of site-specific effect estimates, our method requires more granular information, but the shared information is summary-level without detailed individual-level data. Unlike meta-analysis that generally only produces approximate results, our method produces results identical to those obtained from the corresponding pooled individual-level data analysis.

Compared to the pooled individual-level analysis, however, our method requires multiple file transfers between the data partners and the analysis center. Although this need for information exchange at each iteration means that our proposed method is more labor-intensive to implement in practice, recent advancements in bioinformatics now allow semi-automated or fully-automated file transfers between data partners and the analysis center [17, 21,22,23,24,25,26,27]. For general users who may not have access to such technical infrastructure, we have developed R code that allows manual implementation of our proposed method. This R code (available in Additional file 2) illustrates the analysis of the simulated data from this study but can be easily modified to accommodate different numbers of covariates or different numbers of participating data partners.

We assumed the outcome occurrence between individuals to be independent in our analysis. In some real-world situations, this independence assumption may be violated. In our case, it is possible that individuals who seek care in the same delivery system have correlated outcomes. To account for correlated data, Zou and Donner [28] extended the modified Poisson approach to settings with correlated binary outcomes in single-database studies. Future work will extend the proposed summary-level modified Poisson method to analyze correlated data in multi-center distributed data environments.

Conclusions

In conclusion, we proposed a privacy-protecting approach to directly estimate adjusted risk ratios using modified Poisson regression analysis for multi-center studies. This approach does not require sharing of individual-level data across data partners but produces results that are identical to those obtained from the corresponding pooled individual-level data analysis.

Availability of data and materials

Replication R code for generating and analyzing the simulated data example is available online and can be easily modified by interested readers for their own multi-center analyses. The real-world data created from the IBM® MarketScan® Research Databases are currently not available for public sharing.

References

Prentice RL, Pyke R. Logistic disease incidence models and case-control studies. Biometrika. 1979;66(3):403–11.

Hosmer DW, Lemeshow S, Sturdivant RX. Applied logistic regression. 3rd ed. Hoboken: Wiley; 2013.

Norton EC, Dowd BE, Maciejewski ML. Odds ratios - current best practice and use. JAMA. 2018;320(1):84–5.

Greenland S. Interpretation and choice of effect measures in epidemiologic analyses. Am J Epidemiol. 1987;125(5):761–8.

Altman DG, Deeks JJ, Sackett DL. Odds ratios should be avoided when events are common. BMJ. 1998;317(7168):1318.

Holcomb WL Jr, Chaiworapongsa T, Luke DA, Burgdorf KD. An odd measure of risk: use and misuse of the odds ratio. Obstet Gynecol. 2001;98(4):685–8.

Knol MJ, Le Cessie S, Algra A, Vandenbroucke JP, Groenwold RH. Overestimation of risk ratios by odds ratios in trials and cohort studies: alternatives to logistic regression. CMAJ. 2012;184(8):895–9.

Tajeu GS, Sen B, Allison DB, Menachemi N. Misuse of odds ratios in obesity literature: an empirical analysis of published studies. Obesity. 2012;20(8):1726–31.

Wacholder S. Binomial regression in glim: estimating risk ratios and risk differences. Am J Epidemiol. 1986;123(1):174–84.

Skove T, Deddens J, Petersen MR, Endahl L. Prevalence proportion ratios: estimation and hypothesis testing. Int J Epidemiol. 1998;27(1):91–5.

McNutt LA, Wu C, Xue X, Hafner JP. Estimating the relative risk in cohort studies and clinical trials of common outcomes. Am J Epidemiol. 2003;157(10):940–3.

Zou G. A modified Poisson regression approach to prospective studies with binary data. Am J Epidemiol. 2004;159(7):702–6.

Toh S, Platt R, Steiner JF, Brown JS. Comparative-effectiveness research in distributed health data networks. Clin Pharmacol Ther. 2011;90(6):883–7.

Ball R, Robb M, Anderson SA, Dal PG. The FDA's sentinel initiative - a comprehensive approach to medical product surveillance. Clin Pharmacol Ther. 2016;99(3):265–8.

Fienberg SE, Fulp WJ, Slavkovic AB, Wrobel TA. “Secure” log-linear and logistic regression analysis of distributed databases. In: Domingo-Ferrer J, Franconi L, editors. Privacy in Statistical Databases. PSD 2006. Lecture notes in computer science, vol 4302. Berlin, Heidelberg: Springer; 2006.

Karr AF, Fulp WJ, Vera F, Young SS, Lin X, Reiter JP. Secure, privacy-preserving analysis of distributed databases. Technometrics. 2007;49(3):335–45.

Jiang W, Li P, Wang S, Wu Y, Xue M, Ohno-Machado L, et al. WebGLORE: a web service for grid LOgistic REgression. Bioinformatics. 2013;29(24):3238–40.

El Emam K, Samet S, Arbuckle L, Tamblyn R, Earle C, Kantarcioglu M. A secure distributed logistic regression protocol for the detection of rare adverse drug events. J Am Med Inform Assoc. 2013;20(3):453–61.

R Core Team. R: A Language and Environment for Statistical Computing. Vienna. URL https://www.R-project.org/: R Foundation for Statistical Computing; 2018.

Stefanski LA, Boos DD. The calculus of M-estimation. Am Stat. 2002;56(1):29–38.

Her QL, Malenfant JM, Malek S, Vilk Y, Young J, Li L, et al. A query workflow design to perform automatable distributed regression analysis in large distributed data networks. eGEMs. 2018;6(1):11.

Jiang X, Wu Y, Marsolo K, Ohno-Machado L. Development of a web service for analysis in a distributed network. eGEMs. 2014;2(1):22.

Wolfson M, Wallace SE, Masca N, Rowe G, Sheehan NA, Ferretti V, et al. DataSHIELD: resolving a conflict in contemporary bioscience--performing a pooled analysis of individual-level data without sharing the data. Int J Epidemiol. 2010;39(5):1372–82.

Wu Y, Jiang X, Kim J, Ohno-Machado L. Grid binary LOgistic REgression (GLORE): building shared models without sharing data. J Am Med Inform Assoc. 2012;19(5):758–64.

Lu CL, Wang S, Ji Z, Wu Y, Xiong L, Jiang X, et al. WebDISCO: a web service for distributed cox model learning without patient-level data sharing. J Am Med Inform Assoc. 2015;22(6):1212–9.

Narasimhan B, Rubin DL, Gross SM, Bendersky M, Lavori PW. Software for distributed computation on medical databases: a demonstration project. J Stat Softw. 2017;77(13):22.

Meeker D, Jiang X, Matheny ME, Farcas C, D’Arcy M, Pearlman L, et al. A system to build distributed multivariate models and manage disparate data sharing policies: implementation in the scalable national network for effectiveness research. J Am Med Inform Assoc. 2015;22(6):1187–95.

Zou G, Donner A. Extension of the modified Poisson regression model to prospective studies with correlated binary data. Stat Methods Med Res. 2013;22(6):661–70.

Acknowledgements

The authors thank Qoua Her at the Harvard Pilgrim Health Care Institute for his help with the creation of the real-world dataset. The authors also thank Xiaojuan Li and Jenna Wong at the Harvard Pilgrim Health Care Institute for their comments on an earlier draft of this manuscript.

Funding

Dr. Toh was funded in part by the Patient-Centered Outcomes Research Institute (ME-1403-11305), the National Institute of Biomedical Imaging and Bioengineering (U01 EB023683), and a Harvard Pilgrim Health Care Institute Robert H. Ebert Career Development Award. The funding bodies had no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Author information

Authors and Affiliations

Contributions

The draft was written by DS, JGY and ST. DS conceived the idea, wrote the R program and performed all data analyses. JGY and ST helped supervise the presentation of methodology, and the design and interpretations of data analyses. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

This study, including the secondary data analysis using the IBM® MarketScan® Research Databases that contain de-identified individual-level healthcare claims information, was approved as a non-human subject research project by the Institutional Review Board at Harvard Pilgrim Health Care.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Reports the shared summary-level information in the simulated data example.

Additional file 2.

Provides replication R code for the data generation and analysis of the simulated data example.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Shu, D., Young, J.G. & Toh, S. Privacy-protecting estimation of adjusted risk ratios using modified Poisson regression in multi-center studies. BMC Med Res Methodol 19, 228 (2019). https://doi.org/10.1186/s12874-019-0878-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12874-019-0878-6