Abstract

Background

Nanoscale connectomics, which aims to map the fine connections between neurons with synaptic-level detail, has attracted increasing attention in recent years. Automated reconstruction algorithms for electron microscopy volumes are currently in great demand. Most existing methods reconstruct cellular and subcellular structures independently, although exploiting the inter-relationships between these structures can benefit image analysis. The primary goal of this research is to construct a joint optimization framework that improves the accuracy and efficiency of neural structure reconstruction algorithms.

Results

In this investigation, we introduce the concept of connectivity consensus between cellular and subcellular structures based on biological domain knowledge for neural structure agglomeration problems. We propose a joint graph partitioning model for solving ultrastructural and neuronal connections to overcome the limitations of connectivity cues at different levels. The advantage of the optimization model is the simultaneous reconstruction of multiple structures in one optimization step. The experimental results on several public datasets demonstrate that the joint optimization model outperforms existing hierarchical agglomeration algorithms.

Conclusions

We present a joint optimization model based on connectivity consensus to solve the neural structure agglomeration problem and demonstrate its superiority to existing methods. The intention of introducing connectivity consensus between different structures is to build a suitable optimization model that makes the reconstruction goals more consistent with biological plausibility and domain knowledge. This idea can inspire other researchers to optimize existing reconstruction algorithms and can be applied to other areas of biological data analysis.

Background

Connectomics, a concept proposed by Sporns [1], aims to comprehensively map the structure of neuronal networks in the nervous system to improve our understanding of how the brain works. Connectomics can be conducted at different spatial scales corresponding to the observational scale of brain imaging; these scales can be roughly divided into the microscale, mesoscale, and macroscale [2]. Nanoscale connectomics, which aims to map the fine connections between neurons with synaptic-level detail, has attracted widespread interest from researchers. Electron microscopy (EM) is currently the only imaging technique with the required synapse-scale resolution and the ability to acquire data sets large enough to encompass a significant number of local neural circuits. Since Sydney Brenner and colleagues began manually mapping the complete connectome of C. elegans, an effort that lasted over a decade [3], the throughput of EM imaging has increased by several orders of magnitude [4, 5], substantially advancing the field of connectomics. Automated analysis of EM data is crucial because of the vast data volume. However, automated image analysis is not yet sufficient: the main bottleneck is that automatic reconstruction results still require heavy manual editing [6,7,8]. The primary goal of this research is to improve the accuracy and efficiency of neural structure reconstruction algorithms.

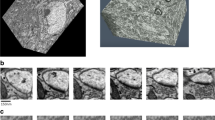

As shown in Fig. 1, the goal of image analysis in connectomics is to reconstruct cellular and subcellular structures in three dimensions (3D), i.e., to perform instance segmentation. The sparse distribution of subcellular structures, also known as ultrastructures, including synapses and mitochondria, reduces the reconstruction difficulty to some extent. Because these structures are well isolated, most reconstruction algorithms adopt a bottom-up approach and emphasize obtaining the semantic segmentation [9,10,11,12,13]. To obtain the reconstruction result, Li et al. [14] propose a coarse-to-fine 3D connection algorithm based on intersection-over-union (IoU) to assign the segmentation labels. Two straightforward and efficient connection algorithms, a watershed-based method and connected component labeling, are introduced in the MitoEM challenge [15]. Despite satisfactory results, the generalization ability of these methods in complex cases remains unknown, especially in practical applications with random factors such as sample wrinkles and imaging damage.

Compared with ultrastructures, neurons are more challenging to reconstruct because they are densely intertwined, irregularly shaped, and not differentially stained. Existing methods mainly distinguish them by membrane boundaries, but minor local detection errors may lead to severe merge errors that are time-consuming to correct. Therefore, the prevailing workflow typically consists of two stages [16,17,18,19]. Semantic segmentation of the membrane boundary is performed first, followed by generating over-segmented fragments and determining the connection relationships between the fragments [17]. Membrane boundary detectors provide satisfactory results thanks to recent advances in convolutional neural networks (CNNs) [20,21,22,23]. Agglomeration algorithms for determining the connections between fragments have been studied extensively. Most works greedily merge the segments with the highest probability of similarity [18, 24,25,26]. Others regard agglomeration as a graph partitioning problem with global mathematical constraints [16, 27,28,29,30]. Another type of reconstruction algorithm, flood-filling networks [31], starts from seed points and uses a recurrent neural network to grow each neuron iteratively by moving the receptive field.

Overview of the proposed method. Most current reconstruction pipelines consist of two steps and ignore the joint connectivity of the different hierarchies. We extend this pipeline by introducing the connectivity consensus to create an overall optimization framework. Consecutive steps from left to right: given the semantic input maps of the three structures, we perform segmentation to obtain the 2D instances and construct the graphs. Then we combine the hierarchy graphs and build the joint optimization model to obtain the connectivity and the reconstruction map simultaneously.

We focus on agglomeration algorithms in this paper. In this scope, the multicut algorithm [28] is widely used because of its clear mathematical formulation and elegant properties [16, 27]. As stated in [32], multicut yields better results than greedy algorithms [24] because it optimizes a global objective and needs no external stopping criterion. On the other hand, since solving multicut is NP-hard, several works rely on local search algorithms to produce high-quality solutions quickly for large-volume data in connectomics, including the greedy additive edge contraction (GAEC) solver, the Kernighan–Lin solver [33], the Fusion-Move solver [34], and the Block-Wise solver [35]. Agglomerative clustering with average linkage criteria (GASPavg) [25] and mutex watershed (MWS) [30] are signed graph partitioning algorithms and two well-known neuron agglomeration algorithms in connectomics. Specifically, GASPavg uses average linkage as the update criterion for newly formed edge weights, replacing the sum linkage criterion in GAEC, while MWS uses absolute maximum linkage. As pointed out in [25], the choice of edge update criterion has a significant impact on the results.

Neural structures are highly correlated, and how to combine multi-level information in the reconstruction process has recently attracted great attention. In particular, Krasowski et al. [32] incorporate sparse biological priors and boundaries to extend the existing agglomeration algorithms. On this basis, Pape et al. [36] introduce a more general approach that leverages domain-specific knowledge to improve the segmentation result. Recently, Wolf et al. [37] proposed the semantic mutex watershed for joint graph partitioning and labeling. Outside connectomics, correlation co-clustering (Co-Clustering) [38] uses both low-level and high-level cues to jointly address trajectory-level motion segmentation and multiple object tracking, which is similar to our method. Levinkov et al. [39] define a combinatorial optimization problem, a generalization of the lifted multicut problem, whose solutions provide both a decomposition and a node labeling. Furthermore, several studies use prior knowledge in a post-processing step to improve existing results. Some publications emphasize the morphological characteristics of the reconstructed results [40,41,42], whereas others focus on sub-compartment category attributes [43] or specific labeled membrane data [44].

Nevertheless, the above approaches share a major drawback: they lack joint connectivity across the different hierarchies. In other words, each structure is reconstructed independently, and no overall optimization framework is formed. In the nervous system, one of the most complex systems known, neural structures do not exist in isolation but have specific affiliations. Specifically, mitochondria, which are responsible for energy production and always lie within a neuron, are commonly used to estimate the neuronal activity level. Meanwhile, synapses, which transmit information between neurons, quantify the neuronal connectivity strength. Since these cues are complementary, it is reasonable and desirable to jointly optimize the reconstruction results based on connectivity priors at different levels.

To address this issue, we propose a joint optimization model for neural structure agglomeration in connectomics. The model can inherently integrate the connectivity consensus of both types of structures through constraints while yielding significant advantages in optimality (for an overview see Fig. 2). More precisely, the contributions of this paper are threefold:

- First, to the best of our knowledge, we introduce the concept of connectivity consensus and demonstrate its effectiveness for neural agglomeration in connectomics for the first time.

- Second, we propose a novel and bio-inspired graph partitioning model for joint optimization of neuron and ultrastructure reconstructions.

- Third, two structural linkage patterns, including mitochondria and synapses, are explicitly encoded and readily extended to other subcellular structures.

Methods

Motivation

Connectomics aims to reconstruct the fine connections between the neural structures of biological tissues. The image content is rich at the nanoscale, where fine subcellular structures such as mitochondria and synapses are clearly visible, while neurons are spread throughout the image and distinguished by membrane boundaries (as shown in Fig. 1). Exploring the inter-relationships between structures will help EM image analysis. The core idea of this paper is to jointly optimize neuronal segments with boundary cues and ultrastructural regions with structural cues, so that each type of cue compensates for the limitations of the other. The flowchart of the method is shown in Fig. 3. The rationale for joint optimization is that a single local connectivity cue is not always reliable because of uncertainties such as unclear imaging or poor alignment of EM images, whereas inter-relationships allow the reconstruction results to be optimized jointly from multiple cues.

Connectivity consensus

For neural reconstruction, we introduce the concept of connectivity consensus among structures. Specifically, each mitochondrion, as an organelle of cellular energy supply, lies within a single cell. Therefore, besides the connection relationships among the mitochondrial regions themselves, the additional prior is that all regions of a 3D mitochondrion instance are located in the same neuron. The synapse acts as a hub for information transmission between different cells, where the pre-synapse is the axon terminal of the previous neuron and the post-synapse is usually the cell body or dendrite of the next neuron. Likewise, in addition to the connection relationships of the synapse itself, the extra connection information is that the neurons connected by a synapse are different instances. One benefit of connectivity consensus is that the connectivity of sparse ultrastructures is often more accurate than that of dense neuronal structures.

Two link patterns are defined according to the connectivity consensus. Neuron-Mitochondria reflects the link pattern between neuronal fragments and 2D mitochondria regions. Neuron-Synapse represents the link pattern between neuronal fragments and 2D pre- and post-synapses. Existing methods tend to be sensitive to inaccurate local edge weights, while the entire graph contains richer global link and not-link information, allowing the feasible solution to minimize partitioning errors caused by inaccurate local edge weights.

Based on the connectivity consensus, we define two link patterns, shown in Fig. 4, to represent the neuron-mitochondria and neuron-synapse hierarchical relationships. Note that we categorize synapses into pre-synapses and post-synapses according to [12], which is in line with the connectome as well as the proposed method (see Fig. 1). In Fig. 4, green, blue, orange and grey circles indicate neuron fragments, mitochondria, pre-synapses and post-synapses, respectively. The dashed green lines indicate edges between neuron fragment nodes. The dashed yellow lines indicate edges connecting ultrastructure nodes to neuronal nodes. The dashed blue and purple lines indicate edges between mitochondria nodes and between synapse nodes, respectively. The connectivity consensus among the various structures implies that the final reconstruction results must satisfy the connectivity consistency described above. As illustrated in Fig. 4, if two of the three original neuron edges have inaccurate weights (a common situation that is unknown in advance), existing methods often fail to produce the ideal partition because they rely heavily on local edge cues. In contrast, the graph partitioning result produced by our method remains plausible in this case, thanks to the connectivity consensus among the structures. Although the two link patterns have similar forms, their feasible solutions differ significantly: mitochondria bring link information, whereas synapses additionally contain not-link information. Note that although the blue circles all represent mitochondria, they may come from different neurons, in which case they should be partitioned into different classes according to the connectivity consensus. Two mitochondria are assigned to the same class only when they are located within the same neuron.

Optimization model

One of the contributions of this study is a way to express the hierarchy of ultrastructures (mitochondria and synapses) and cellular structures (neurons) in EM images, enabling joint optimization of different neural structures. Based on connectivity consensus, we define the graphs of neurons, mitochondria and synapses as \(G^{n}=(V^{n}, E^{n})\), \(G^{m}=(V^{m}, E^{m})\) and \(G^{s}=(V^{s}, E^{s})\), respectively, where \(V^{n}\) is the set of nodes composed of neuronal fragments, and \(V^{m}\) and \(V^{s}\) are the sets of segmentation regions of the mitochondria and synapses, respectively. An edge indicates that the two connected nodes potentially belong to the same instance. Each edge carries a cost \(w \in R\) that reflects its strength. The detailed graph construction is described below.

We further define the entire graph \(G=(V, E)\) containing the above structures, with \(V= V^{n}\cup V^{m}\cup V^{s}\) and \(E= E^{n}\cup E^{m}\cup E^{s} \cup E^{a}\), where \(E^{a}\) is an additional edge set whose elements \(\left\{ u,v \right\} \in E^a\) connect a neuron fragment \(u\in V^{n}\) with an ultrastructure region \(v\in V^{m}\cup V^{s}\), indicating the affiliation between them, i.e., that they belong to the same object. Note that we aim to construct a joint optimization model that obtains the connectivity relationships of both structures in a single optimization. To integrate the connectivity consensus and the hierarchy graphs, the optimization problem is expressed as the following integer linear program:
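In the standard multicut form, and using only the symbols defined in this section, the model can be sketched as follows. This is a reconstruction from the surrounding definitions under that assumption, with equation numbers omitted, and not necessarily the authors' exact typesetting:

```latex
\begin{aligned}
\min_{x}\quad & \lambda_{n}\sum_{e\in E^{n}} w_{e}x_{e}
              + \lambda_{m}\sum_{e\in E^{m}} w_{e}x_{e}
              + \lambda_{s}\sum_{e\in E^{s}} w_{e}x_{e}
              + \lambda_{a}\sum_{e\in E^{a}} w_{e}x_{e} \\
\text{s.t.}\quad & x_{e} \le \sum_{e'\in C\setminus\{e\}} x_{e'}
              \qquad \forall C\in \mathrm{cycles}(G),\ \forall e\in C, \\
            & x_{e}\in\{0,1\} \qquad \forall e\in E
\end{aligned}
```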

where \(x_{e}\) is the binary indicator variable of each edge \(e\in E\) in the final partition (1 means cut and 0 means join), and the weight \(w_{e}\in R\) of each edge is the cost reflecting its attractive or repulsive strength. The objective function (2) is composed of four parts: the graphs of neuron fragments, mitochondria and synapses, and the affiliation edges connecting them. \(\lambda _{n},\lambda _{m},\lambda _{s},\lambda _{a}\in [0,1]\) are hyper-parameters derived from domain knowledge that balance the reconstruction confidence levels. \({\rm{cycles}}(G)\) denotes the set of all cycles in G. Inequality (2) constrains the solution to be consistent, without “dangling edges”, i.e., to be a valid partition [45]. A feasible solution of the optimization problem is a decomposition of the graph G, where all nodes in a component of the final solution belong to the same class.
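To make the four-part objective concrete, the following minimal sketch evaluates it for a candidate node labeling. A node labeling always induces a valid partition, because an edge is cut exactly when its endpoints carry different labels, so no cycle can contain a single cut edge. The function name `joint_objective`, the edge encoding, and the toy weights are illustrative assumptions, not part of the original method description.

```python
def joint_objective(edges, labels, lambdas):
    """Evaluate the weighted cut cost of a node labeling.

    edges   : dict mapping (u, v) -> (kind, weight), with kind in
              {"n", "m", "s", "a"} for neuron, mitochondria, synapse
              and affiliation edges (hypothetical encoding).
    labels  : dict mapping node -> component label.
    lambdas : dict mapping kind -> balancing hyper-parameter.
    """
    total = 0.0
    for (u, v), (kind, weight) in edges.items():
        x = 1 if labels[u] != labels[v] else 0  # 1 = cut, 0 = join
        total += lambdas[kind] * weight * x
    return total


# Toy graph: two neuron fragments, two mitochondria regions.
edges = {
    ("n1", "n2"): ("n", -0.2),  # weak (unreliable) boundary cue favouring a cut
    ("m1", "m2"): ("m", 2.0),   # the two regions look like one mitochondrion
    ("n1", "m1"): ("a", 3.0),   # m1 lies inside n1
    ("n2", "m2"): ("a", 3.0),   # m2 lies inside n2
}
lambdas = {"n": 1.0, "m": 1.0, "s": 1.0, "a": 1.0}

merged = {"n1": 0, "n2": 0, "m1": 0, "m2": 0}
split = {"n1": 0, "m1": 0, "n2": 1, "m2": 1}

print(joint_objective(edges, merged, lambdas))
print(joint_objective(edges, split, lambdas))
```

In this toy case the neuron edge alone would favour a cut, but cutting it also forces the attractive mitochondria edge to be cut, so the merged labeling has the lower joint cost.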

The primary difference between the proposed method and previous approaches, such as asymmetric cuts [32, 46], correlation co-clustering [38] and semantic mutex watershed [37], is that the proposed model considers the connectivity of the ultrastructures themselves, i.e., edges are added between ultrastructure nodes. This design has two advantages: it prevents errors in node assignment, and it overcomes the limitations of relying on a single type of connectivity. In other words, neuron reconstruction no longer relies too heavily on local boundary cues, and ultrastructure reconstruction no longer relies too heavily on local spatial information.

Graph construction method

This section describes the construction of the graphs \(G^{n}=(V^{n}, E^{n})\), \(G^{m}=(V^{m}, E^{m})\) and \(G^{s}=(V^{s}, E^{s})\) and the definition of the affiliation edge set \(E^{a}\). Figure 5 shows examples of the graph construction.

Examples of graph construction. Top: neuron graph neighborhood of a single node with local plane-edges (red lines) and cutting-edges (green lines); the edge weights are mainly derived from the membrane boundaries. Bottom: example of the ultrastructure graph; nodes are ultrastructural regions, edges are represented by red lines, and the edge weights are derived from the segmentation overlap.

The construction method of neuron graph

The graph \(G^{n}=(V^{n}, E^{n})\) is defined as the region adjacency graph (RAG) of the neuron superpixels, where \(V^{n}\) is the set of neuronal fragments and \(E^{n}\subset V^{n}\times V^{n}\) is the set of edges connecting adjacent nodes. For each edge \(e^n :=\left\{ u,v \right\} \in E^n\), a weight \(w_{e^n}\in R\) is assigned to represent the strength of similarity between fragments u and v, which is usually obtained from the similarity \(p_{e^n}\in [0,1]\) via a negative log-likelihood function, defined as:

where the bias hyper-parameter \(\beta \in [0,1]\) controls the degree of over-segmentation. If \(\beta > 0.5\), the edge weight \(w_{e^n}\) decreases; if \(\beta < 0.5\), it increases. In terms of the objective function (2), more negative edge weights mean stronger repulsion between nodes u and v and thus a tendency toward over-segmentation; conversely, more positive weights lead to under-segmentation. Usually, \(\beta\) takes a default value of 0.5 and is adjusted according to the similarity \(p_{e^n}\).
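The behaviour described above can be sketched with a log-odds transform. The exact expression is an assumption chosen to match the stated properties: a high similarity gives a positive (attractive) weight, and \(\beta > 0.5\) lowers every weight. The name `edge_weight` and the clamping constant `eps` are illustrative.

```python
import math


def edge_weight(p, beta=0.5, eps=1e-6):
    """Map a similarity p in [0, 1] to a signed edge cost (assumed form).

    Positive result: attractive (cutting the edge is penalised).
    Negative result: repulsive (cutting the edge is rewarded).
    """
    # Clamp to avoid log(0) at the ends of the interval.
    p = min(max(p, eps), 1.0 - eps)
    beta = min(max(beta, eps), 1.0 - eps)
    return math.log(p / (1.0 - p)) + math.log((1.0 - beta) / beta)


print(edge_weight(0.9))            # attractive
print(edge_weight(0.1))            # repulsive
print(edge_weight(0.9, beta=0.7))  # same similarity, lower weight
```

With `beta=0.5` the bias term vanishes and the sign of the weight is decided by whether the similarity is above or below 0.5.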

Generally, there are two ways to calculate the similarity \(p_{e^n}\). It can be obtained from the mean affinity of the membrane prediction, under the assumption that the quality of the convolutional network output is satisfactory [26]. The other, more complex method requires multiple steps [36]. First, several descriptors of each edge are extracted from the raw images, the boundary predictions and the corresponding filtered images (obtained with Gaussian, Hessian and Laplacian filters). Specifically, the feature set is the same as described in [16], where the extracted features include boundary appearance features, region statistical features and shape topology features. Subsequently, the extracted feature vectors are fed into a classifier to predict whether the contact between two superpixels belongs to the same neuron or not. The difference between the two methods is that the similarity learned by the classifier is more accurate and reliable than the mean affinity value. However, the classifier-based method requires more time for feature extraction and is more suitable for data with low cutting-axis continuity.

The construction method of ultrastructure graph

The definition of the ultrastructure graph must respect the connectivity consensus; otherwise, additional errors may be introduced, leading to model failure. For example, a cell may contain several mitochondria, so it is impossible to determine whether the connectivity between different mitochondria is consistent with the connectivity between neuronal fragments. Since the 3D image stacks are aligned, we design a method for calculating the edge weights from strong contextual cues. Specifically, for graph \(G^{m}=(V^{m}, E^{m})\), we only add edges between regions that potentially belong to the same mitochondrion instance. For graph \(G^{s}=(V^{s}, E^{s})\), we expect the edges between pre-synapses and post-synapses to be repulsive, whereas the edges within the pre-synapses or within the post-synapses are attractive.

Here we take \(G^{m}\) as an example; the synapse graph \(G^{s}\) is built in a similar way. Formally, we assume the image volume has M sequential slices. For every pair of segmentation regions \(u,v\in V^{m}\) in slices \(M_u\) and \(M_v\) with \(\left| M_u-M_v \right| \le 2\), we define the probability \(p_{uv}\in [0,1]\) that they belong to the same object, based on the IoU of their planar bounding boxes \(d_u, d_v\) and the overlap of their segmentation areas \(s_u, s_v\), as:

where \(D_{uv}=\frac{d_u\cap d_v}{d_u\cup d_v}\) measures the similarity of the spatial position, \(S_{uv}=\frac{s_u\cap s_v}{s_u\cup s_v}\) characterizes the invariance of the segment shape, and \(\lambda \ge 0\) is a regularization parameter that balances the box and segment terms. We define the edge set as \(E^{m}: =\left\{ (u,v)~|~p_{uv}> 0, \left| M_u-M_v \right| \le 2 \right\}\), which means that only ultrastructures in close contact are considered as potential links. Then, for each edge \(e^m :=\left\{ u,v \right\} \in E^m\), the edge cost \(w_{e^m}\) is derived from \(p_{uv}\) via the negative log-likelihood function, as in Eq. (4). The default value of the bias hyper-parameter \(\beta\) is 0.5. If the image volume is highly anisotropic, \(\beta\) should be decreased. Note that more sophisticated methods have been proposed to characterize the ultrastructural similarity [14], but they have higher computational complexity. The experimental results show that the proposed similarity formula is sufficient to bring about performance improvements thanks to the constraints of the other edges.
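The two IoU terms can be computed directly from pixel sets, as in the sketch below. How \(D_{uv}\) and \(S_{uv}\) are combined into \(p_{uv}\) is not recoverable from the text here, so the normalised weighted average in `link_probability` is purely an assumption, as are all function names.

```python
def bbox(region):
    """Axis-aligned bounding box (r0, c0, r1, c1) of a set of (row, col) pixels."""
    rows = [r for r, _ in region]
    cols = [c for _, c in region]
    return min(rows), min(cols), max(rows), max(cols)


def bbox_iou(a, b):
    """D_uv: IoU of two inclusive pixel bounding boxes."""
    height = min(a[2], b[2]) - max(a[0], b[0]) + 1
    width = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(0, height) * max(0, width)
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def mask_iou(u, v):
    """S_uv: IoU of two pixel sets projected onto the same plane."""
    return len(u & v) / len(u | v)


def link_probability(u, v, lam=1.0):
    """Assumed combination: weighted average of D_uv and S_uv, kept in [0, 1]."""
    d = bbox_iou(bbox(u), bbox(v))
    s = mask_iou(u, v)
    return (d + lam * s) / (1.0 + lam)


def is_candidate_edge(slice_u, slice_v, p_uv):
    """Edge rule from the text: p_uv > 0 and slices at most two apart."""
    return p_uv > 0 and abs(slice_u - slice_v) <= 2


# Two 4x4 regions in nearby slices, shifted by two rows.
u = {(r, c) for r in range(0, 4) for c in range(4)}
v = {(r, c) for r in range(2, 6) for c in range(4)}
p = link_probability(u, v)
print(p, is_candidate_edge(10, 11, p))
```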

The construction method of affiliation edges

For every neuronal segment \(u\in V^n\) and every ultrastructure region \(v\in V^m\cup V^s\), we define the affiliation edge set \(E^a\) based on the maximum overlap area as:

where \(A(\cdot )\) denotes the number of pixels in the overlap area and \(A_t\) is the area threshold. In other words, we only retain affiliation edges with a certain confidence level, i.e., those whose maximum overlap area exceeds \(A_t\). Since this step may filter out some small regions, we believe it reduces the invalid information resulting from detection errors, which is important for graph construction. It also decreases the model’s complexity because the graph contains fewer nodes and edges.
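The selection rule can be illustrated on a single slice. The sketch assumes that the maximum is taken per ultrastructure region over all neuron fragments it overlaps, and that label 0 marks background; `affiliation_edges` and the toy label images are hypothetical.

```python
from collections import Counter


def affiliation_edges(neuron_seg, ultra_seg, area_threshold):
    """Return {(neuron_label, ultra_label): overlap_area} for retained edges.

    neuron_seg, ultra_seg : 2D lists of integer labels of equal shape,
                            with 0 treated as background (assumption).
    """
    overlap = Counter()
    for n_row, u_row in zip(neuron_seg, ultra_seg):
        for n, u in zip(n_row, u_row):
            if n != 0 and u != 0:
                overlap[(n, u)] += 1

    # For each ultrastructure region keep only its maximum-overlap fragment.
    best = {}
    for (n, u), area in overlap.items():
        if u not in best or area > best[u][1]:
            best[u] = (n, area)

    # Retain the edge only if the maximum overlap exceeds the threshold A_t.
    return {(n, u): area for u, (n, area) in best.items() if area > area_threshold}


neuron_seg = [[1, 1, 2, 2],
              [1, 1, 2, 2]]
ultra_seg = [[0, 7, 7, 7],
             [0, 7, 7, 0]]
print(affiliation_edges(neuron_seg, ultra_seg, area_threshold=2))
```

Region 7 overlaps fragment 1 on two pixels and fragment 2 on three pixels, so only the edge to fragment 2 survives, and it is dropped altogether once the threshold reaches three.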

Let \(P^i|_{i=1}^{M}\) be the sequence of ultrastructure probability maps. We calculate the probability \(p_{uv}\in [0,1]\) reflecting the strength of the affiliation as:

where t is the overlap region and \(M_v\) denotes the corresponding slice in the probability map sequence. That is, the probability \(p_{uv}\) is defined as the mean response of the network output in t. Then, for each edge \(e^a :=\left\{ u,v \right\} \in E^a\), the edge weight \(w_{e^a}\) is also obtained from \(p_{uv}\) by Eq. (4). Similarly, the bias parameter \(\beta\) depends on the segmentation performance of the ultrastructure and has a default value of 0.5. Intuitively, if the segmentation quality of the ultrastructure is satisfactory, then \(\beta > 0.5\); otherwise, \(\beta < 0.5\). Note that all of the above parameters are hyper-parameters, which can be tuned through a grid search on the validation dataset.
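The mean response over the overlap region can be computed as in this short sketch for one slice. The function name, the 2D list representation, and the toy values are assumptions made for illustration.

```python
def affiliation_probability(prob_map, neuron_seg, ultra_seg, n_label, u_label):
    """Mean of the ultrastructure probability map over the overlap region t.

    All three inputs are 2D lists of equal shape for the same slice.
    Returns 0.0 when the two regions do not overlap.
    """
    values = [
        p
        for p_row, n_row, u_row in zip(prob_map, neuron_seg, ultra_seg)
        for p, n, u in zip(p_row, n_row, u_row)
        if n == n_label and u == u_label
    ]
    return sum(values) / len(values) if values else 0.0


neuron_seg = [[1, 1, 2, 2],
              [1, 1, 2, 2]]
ultra_seg = [[0, 7, 7, 7],
             [0, 7, 7, 0]]
prob_map = [[0.1, 0.8, 0.9, 0.7],
            [0.1, 0.6, 0.8, 0.2]]
print(affiliation_probability(prob_map, neuron_seg, ultra_seg, 2, 7))
```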

Greedy solver

After the above steps, we have constructed multiple undirected graphs and established an optimization problem; the next step is to solve the model. Since the number of cycle constraints increases exponentially with the number of nodes, finding the optimal solution of the optimization problem is NP-hard. Most existing solvers use greedy heuristics that generate feasible solutions of good quality, although they establish neither lower bounds nor approximation certificates [33, 35]. In this paper, we employ the greedy additive edge contraction (GAEC) solver [29], which is a fast local search heuristic even though it operates directly on the nodes without any local pre-clustering. The solver runs only once and produces a deterministic feasible solution, thus keeping the computational cost low even for large-volume data. GAEC always makes the greedy choice that most decreases the objective function (2). Specifically, in each iteration GAEC selects the edge with the highest weight, contracts it to shrink the graph, and re-computes the costs of the affected edges with sum linkage. These steps are repeated until all edges in the contracted graph have a negative value, at which point the algorithm terminates. We implement this procedure with a priority queue, and the time complexity of the solution is \(O(|V|^2\log |V|)\).
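The contraction loop described above can be sketched with a binary heap and lazy deletion of stale entries. This is a compact illustration rather than the authors' implementation: it assumes edge weights are already scaled by the corresponding \(\lambda\), that positive weights are attractive, that node identifiers are mutually comparable (for example, all strings), and it stops when no positive edge remains.

```python
import heapq


def gaec(nodes, weights):
    """Greedy additive edge contraction on a signed graph.

    nodes   : iterable of node identifiers.
    weights : dict {(u, v): w}; positive = attractive, negative = repulsive.
    Returns a dict mapping every node to the representative of its cluster.
    """
    adj = {u: {} for u in nodes}
    for (u, v), w in weights.items():
        adj[u][v] = adj[u].get(v, 0.0) + w
        adj[v][u] = adj[v].get(u, 0.0) + w
    members = {u: [u] for u in adj}

    # Max-heap via negated weights; only attractive edges are candidates.
    heap = [(-w, u, v) for u in adj for v, w in adj[u].items() if w > 0]
    heapq.heapify(heap)

    while heap:
        neg_w, u, v = heapq.heappop(heap)
        # Skip stale entries: an endpoint was contracted or the weight changed.
        if u not in adj or v not in adj[u] or adj[u][v] != -neg_w:
            continue
        # Contract v into u.
        members[u].extend(members.pop(v))
        del adj[u][v]
        for t, w in adj.pop(v).items():
            if t == u:
                continue
            del adj[t][v]
            new_w = adj[u].get(t, 0.0) + w  # sum linkage
            adj[u][t] = adj[t][u] = new_w
            if new_w > 0:
                heapq.heappush(heap, (-new_w, u, t))

    return {x: rep for rep, group in members.items() for x in group}


nodes = ["n1", "n2", "m1", "m2"]
weak = {("n1", "n2"): -0.2, ("m1", "m2"): 2.0, ("n1", "m1"): 3.0, ("n2", "m2"): 3.0}
strong = dict(weak)
strong[("n1", "n2")] = -5.0
print(gaec(nodes, weak))
print(gaec(nodes, strong))
```

In the first toy graph the weakly repulsive neuron edge is outweighed by the mitochondria link after contraction, so everything merges; in the second, the strongly repulsive neuron edge keeps the two neurons, and their mitochondria, apart.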

Post-processing method

After obtaining the edge states in the entire graph G, we assign a unique label to each connected component, so that all nodes of a component share the same label. We then map the node labels to the subgraphs \(G^n\), \(G^m\), and \(G^s\) correspondingly. The proposed model may partition different instances of an ultrastructure into the same category according to the high-level prior information of the neurons, which facilitates the detection of specific neural structures such as multi-contact synapses [47]. Since other research focuses on 3D instances of the ultrastructure itself, separating such merged ultrastructures is necessary for the final reconstruction results in that case. We design a simple post-processing strategy to distinguish ultrastructures without edge connections in graph \(G^m\) or \(G^s\). Specifically, if two nodes in \(G^m\) are in the same partition in the final solution of G, but there is no path connecting them in graph \(G^m\), a new label is assigned to one of the components. This procedure is iterated until there are no more violations of the node assignments. Using this approach, incorrectly merged ultrastructures can be effectively partitioned into several classes based on the primary edge priors.
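One way to realise this splitting rule in a single pass is to intersect the joint labels with the connected components of the ultrastructure graph. The sketch below does this with a small union-find; the function name and the fresh integer labels it produces are assumptions for illustration.

```python
def split_unlinked(labels, ultra_edges):
    """Split ultrastructure nodes that share a joint label but are not
    connected by any path in the ultrastructure graph.

    labels      : dict node -> label from the joint solution on G.
    ultra_edges : iterable of (u, v) edges of G^m (or G^s).
    Returns a dict node -> new integer label (numbering is arbitrary).
    """
    parent = {v: v for v in labels}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    for u, v in ultra_edges:
        parent[find(u)] = find(v)

    new_ids = {}
    result = {}
    for v in labels:
        # Same final label only if same joint label AND same component in G^m.
        key = (labels[v], find(v))
        result[v] = new_ids.setdefault(key, len(new_ids) + 1)
    return result


joint_labels = {"m1": 5, "m2": 5, "m3": 5, "m4": 9}
print(split_unlinked(joint_labels, [("m1", "m2")]))
```

Here `m3` lies in the same neuron as `m1` and `m2` but has no path to them in the mitochondria graph, so it receives its own instance label.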

Experiments and results

Datasets

We use the Harris dataset and Snemi dataset for comparison, as described below:

The Harris dataset comes from the hippocampus of adult rats with a resolution of \(2\times 2\times 50~\mathrm{nm}^{3}\) and includes the apical dendrite dataset and the spine dataset [48]. Because of the background region and incomplete annotation, we only select the region with neuron groundtruth labeling to facilitate the evaluation of algorithm performance. For the apical dendrite dataset, we cut a subvolume of \(1536 \times 1536 \times 100\) voxels and divided the data into a training set (sections 1–50) and a test set (sections 51–100). For the spine dataset, we cut a subvolume of \(904\times 865 \times 42\) voxels and divided the data into a training set (sections 1–21) and a test set (sections 22–42).

The Snemi dataset was created by Kasthuri et al. [7] and contains labeled subvolumes from the mouse somatosensory cortex. The dataset contains 100 consecutive slices for training and 100 slices for testing. Each slice has a size of \(1024\times 1024\) and a resolution of \(6\times 6\times 30~\mathrm{nm}^{3}\).

Error metrics

Two common error metrics are used to evaluate the reconstruction performance: variation of information (VI) [49] and adapted rand error (ARE) [50]. A lower value corresponds to higher segmentation quality in both metrics. The background was ignored during evaluation. The VI is expressed as follows:

\(VI(S,T)=H(S|T)+H(T|S)\)

where S and T are the automatic reconstruction and the gold standard, respectively, \(H(\cdot |\cdot )\) represents the conditional entropy, and H(S|T) and H(T|S) quantify over- and under-segmentation, respectively.
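The two conditional entropies can be computed from the label co-occurrence counts, with VI being their sum, \(H(S|T)+H(T|S)\). The sketch below works on flattened label sequences; the base-2 logarithm and the use of ground-truth label 0 as background are assumptions, and the function name is illustrative.

```python
import math
from collections import Counter


def variation_of_information(seg, gt, ignore_label=0):
    """Return (H(S|T), H(T|S)) for flattened label sequences.

    H(S|T) grows with split errors (over-segmentation),
    H(T|S) grows with merge errors (under-segmentation).
    Voxels whose ground-truth label equals ignore_label are skipped.
    """
    pairs = [(s, t) for s, t in zip(seg, gt) if t != ignore_label]
    n = len(pairs)
    joint = Counter(pairs)
    count_s = Counter(s for s, _ in pairs)
    count_t = Counter(t for _, t in pairs)

    h_s_given_t = -sum(c / n * math.log2(c / count_t[t]) for (s, t), c in joint.items())
    h_t_given_s = -sum(c / n * math.log2(c / count_s[s]) for (s, t), c in joint.items())
    return h_s_given_t, h_t_given_s


gt = [1, 1, 1, 1]
over_segmented = [1, 1, 2, 2]  # one true neuron split into two halves
split, merge = variation_of_information(over_segmented, gt)
print(split, merge, split + merge)
```

Splitting one object into two equal halves costs exactly one bit of split error and no merge error under these assumptions.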

Baseline methods

We evaluate the reconstruction metrics from two aspects. For comparing the neuron reconstruction results, we adopt six representative methods with the same input data and graphs, including:

- MC: the standard multicut algorithm [28] with the same GAEC solver is used as a baseline. It is a well-known agglomeration algorithm that does not need the number of clusters to be specified, but it relies only on boundary cues.

- GASPavg: agglomerative clustering with average linkage criteria [25] uses average linkage as the update criterion for newly formed edge weights, which is more robust to inaccurate edges.

- MWS: mutex watershed [30] is an efficient algorithm for signed graph partitioning. It adopts absolute maximum linkage and encodes both attractive and repulsive cues with nearly linearithmic complexity.

- MC w/ KL: the standard multicut algorithm with the Kernighan–Lin solver [33], which starts from any initial clustering and moves nodes between clusters in each iteration to reduce the decomposition cost.

- MC w/ FM: the standard multicut algorithm with the Fusion-Move solver [34], which iteratively fuses the current and the proposal solutions and empirically reaches lower objective values for the multicut problem.

- Co-Clustering: correlation co-clustering [38], a model that can combine multi-level information but ignores the domain knowledge.

All methods have been used for the partitioning of signed graphs [27,28,29, 33, 34] and are widely used in neuron reconstruction [16, 25, 30]. For the proposed method (Ours), we also report two ablated versions, one without mitochondria information (Ours w/o mito) and one without synapse information (Ours w/o syn), to demonstrate the effectiveness of integrating the various connectivity relationships.

To compare the ultrastructure reconstruction results, we employ the popular 3D connected component labeling (CC labeling) [15]. In particular, we propose a baseline (Ours w/o neuron): when \(\lambda _{n}=\lambda _{a}=0\) in Eq. (2) and \(G^{n}=\emptyset\), the objective function reduces to a special case that solves only the partition problem of the ultrastructure.

Qualitative comparison of the neuron reconstruction performance from serial patches on two datasets. Left and middle: patches from the Harris dataset. Right: patches from the Snemi dataset. The first column displays the groundtruth of the neuron. The last four columns show the results generated by Ours, MC, GASPavg, and Co-Clustering, respectively. The split errors are highlighted in the red boxes, and the merge errors are highlighted in the yellow boxes.

Experiments on Harris dataset

Experimental setting

In this experiment, we investigate the effect of the proposed model on the Harris dataset. The semantic maps of neurons, mitochondria and synapses are a prerequisite for applying the joint optimization model of the neural structure. We train a 3D U-net for membrane/non-membrane pixel-wise semantic segmentation, and use manually annotated masks as ultrastructural semantic maps on the Harris dataset. The network architecture is similar to [26] and differs from the standard 3D U-net in three ways: first, a residual module is employed to improve the representation capability of the network; second, the feature maps of different levels are fused by element-wise summation instead of concatenation; third, the network never downsamples along the z-axis, in order to adapt to anisotropic data. For the neuron membrane, the output is the nearest-neighbor affinities as described in [18, 51]. We use binary cross-entropy as the network loss function and adopt the Adam optimizer for stochastic optimization. The obtained probability map is then smoothed by a Gaussian function, and its local minima serve as seeds for the watershed algorithm, which generates the superpixels, i.e., the over-segmented neuron fragments. Due to the anisotropy of the data, the superpixels are generated separately for each 2D image in the stack. From the above steps, we set up the optimization problem as follows: we build the region adjacency graph \(G^n\) from the superpixels, where edges are also introduced between superpixels in adjacent slices. We train two random forest classifiers to calculate the edge weights based on edge and region appearance features, following [16]. One classifier learns the plane-axis edges (edges between superpixels in the same slice) and the other learns the cutting-axis edges (edges between superpixels in adjacent slices), as shown in Fig. 5.
Since the classifiers are trained in a supervised manner, edge labels are required for training: an edge is labeled 0 if the two regions it connects belong to the same neuron in the groundtruth, and 1 otherwise. The features and the corresponding labels are then fed into the random forest classifiers. The hyper-parameters of the classifiers are determined by classification accuracy via grid search. After training, the random forest classifiers are used to infer the similarities \(p_{e^n}\) on the test dataset.
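The graph construction and edge labeling described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation. It assumes the superpixels are stored as an integer array of shape (z, y, x) whose labels are unique across the whole stack (each label lives in exactly one slice), and that two superpixels in adjacent slices are linked as soon as they overlap in at least one pixel. The names `build_rag_edges`, `edge_labels` and `gt_of_superpixel` are hypothetical.

```python
import numpy as np


def build_rag_edges(superpixels):
    """Return (plane_edges, cutting_edges) of a region adjacency graph.

    superpixels: int array of shape (z, y, x); labels are assumed to be
    unique across slices because superpixels are generated per 2D image.
    """
    plane_edges, cutting_edges = set(), set()

    # Plane-axis edges: neighbouring pixels along y or x with different labels.
    for a, b in ((superpixels[:, :-1, :], superpixels[:, 1:, :]),
                 (superpixels[:, :, :-1], superpixels[:, :, 1:])):
        boundary = a != b
        for u, v in zip(a[boundary].tolist(), b[boundary].tolist()):
            plane_edges.add((min(u, v), max(u, v)))

    # Cutting-axis edges: superpixels that overlap in consecutive slices.
    lower, upper = superpixels[:-1].ravel(), superpixels[1:].ravel()
    for u, v in zip(lower.tolist(), upper.tolist()):
        if u != v:  # guard in case labels are not unique per slice
            cutting_edges.add((min(u, v), max(u, v)))

    return sorted(plane_edges), sorted(cutting_edges)


def edge_labels(edges, gt_of_superpixel):
    """Label an edge 0 if both superpixels share a groundtruth neuron, else 1."""
    return [0 if gt_of_superpixel[u] == gt_of_superpixel[v] else 1
            for u, v in edges]
```

The two edge lists would then be paired with their edge and region features and passed to two separate classifiers, one per edge type.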

Since this dataset contains few ultrastructures, we use ImageJ software [52] to annotate the binary masks in order to clarify the effect of the joint optimization idea. We annotate 45 mitochondria and 50 synapses in the apical dataset, and 12 mitochondria and 8 synapses in the spine dataset. We use 2D connected component labeling of the binary masks to obtain the ultrastructure node sets. Then, the graphs \(G^m\) and \(G^s\) are constructed. The affiliation edge set \(E^a\) is derived from the binary masks with an area threshold \(A_t = 50\).
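One plausible reading of the area threshold is sketched below: an ultrastructure component is affiliated with a neuron superpixel when the two overlap in at least \(A_t\) pixels. This overlap-based interpretation is an assumption made for illustration, since the exact construction of \(E^a\) is defined elsewhere in the paper. The function name `affiliation_edges` and the convention that label 0 marks background are also hypothetical.

```python
import numpy as np


def affiliation_edges(superpixels, ultra_labels, area_threshold=50):
    """Return (ultrastructure_id, superpixel_id) pairs with enough overlap.

    superpixels:  int array, neuron superpixel label per pixel.
    ultra_labels: int array of the same shape, connected-component label of
                  the ultrastructure mask per pixel (0 = background, assumed).
    """
    foreground = ultra_labels > 0
    if not foreground.any():
        return []
    # One row per foreground pixel: (ultrastructure id, superpixel id).
    pairs = np.stack([ultra_labels[foreground], superpixels[foreground]], axis=1)
    unique_pairs, overlap_area = np.unique(pairs, axis=0, return_counts=True)
    return [(int(m), int(n))
            for (m, n), area in zip(unique_pairs, overlap_area)
            if area >= area_threshold]
```

With this reading, small accidental overlaps at region borders do not create affiliation edges, which is presumably the purpose of the threshold.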

Results

We compare the neuron reconstruction results and ultrastructure reconstruction results separately. We record the quantitative analysis of neuron reconstruction in Table 1. The best results are highlighted in bold. Overall, introducing additional ultrastructural connectivity constraints yields a clear improvement in performance over the previous methods. Specifically, our method outperforms the standard MC by \(0.90\%\) (for the VI metric) on the apical dataset (0.7307 versus 0.7373) and by \(5.66\%\) on the spine dataset (0.4084 versus 0.4329). Compared with other popular signed graph optimization strategies, our method performs better in most cases. Although the KL and FM approximate solvers empirically yield lower objective values in the multicut problem, this outcome does not always correspond to better reconstruction performance for the neuron task, e.g., for the apical dataset. The main reason may be inaccurate edge weights, since existing methods are sensitive to edge weights. In the spine dataset, the VI of the KL solver is lower than that of the GAEC solver (0.4156 versus 0.4329), but our model can compensate for the discrepancy caused by the approximate solution error and produces better reconstruction performance. The results of the ablation studies integrating different structures show that neuron reconstruction performance improves steadily with the introduction of synapses, mitochondria and the full structure. Note that the results of Ours w/o syn and Ours are consistent in the spine dataset, which may be attributed to two factors: (1) different ultrastructures may be related to the same neuron nodes, resulting in the same performance gains, and (2) the edge weights of the synapses in this dataset fail to accurately reflect the connection strength. Unlike Co-Clustering, the proposed model adds a confidence factor derived from domain knowledge to balance the connection strength of each hierarchy.
The error metrics confirm that the confidence factor is beneficial to the reconstruction results.
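The percentages quoted above are relative reductions of the VI with respect to the MC baseline. As a quick check of the arithmetic, the short sketch below recomputes them from the VI values given in the text; the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline, ours):
    """Relative reduction of an error metric, in percent of the baseline."""
    return 100.0 * (baseline - ours) / baseline


# VI values quoted in the text (MC baseline versus the proposed method).
apical = relative_reduction(baseline=0.7373, ours=0.7307)
spine = relative_reduction(baseline=0.4329, ours=0.4084)

print(f"apical: {apical:.2f}%")  # apical: 0.90%
print(f"spine:  {spine:.2f}%")   # spine:  5.66%
```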

As shown in Fig. 6, we further qualitatively analyze the cases in which our model benefits neuron reconstruction. We observe that our model produces fewer connection errors than the other methods. In the patches from the spine dataset, the structural information of the mitochondria prevents split errors. In the patches from the apical dataset, although this neuron does not contain ultrastructure, the nearby mitochondria are constrained in the optimization model, resulting in a reasonable overall result. More examples of neuron segmentation are given in Fig. 7, where each row shows the visualization results of the proposed method in 9 consecutive slices. Table 2 illustrates the advantages of the algorithm, where the metric improvement is measured separately in regions with and without ultrastructure. The joint optimization model contributes significant improvements over the baseline in regions with ultrastructure, whereas the other regions exhibit limited improvement, which stems from the global optimization. Moreover, the robustness of the model to hyper-parameters on the Harris dataset is shown in Fig. 8. We record the VI and ARE of the proposed method and of MC under different parameters. In most cases, the proposed model outperforms the baseline model.

The ultrastructure results under the same hyper-parameters are shown in Table 3. Note that we use manually labeled ultrastructural data in the form of 2D binary masks, and our goal is to obtain the 3D labels, which is consistent with our original intention. All error metrics are low because only connection errors exist in the reconstruction results. The result of CC labeling is close to that of the proposed method, but CC labeling is prone to introducing merge errors for the mitochondria and split errors for the synapses, as indicated by VI-Merge and VI-Split in Table 3. The reason is that CC labeling is more sensitive to changes along the cutting-axis and in morphology, because it determines whether binary regions belong to the same instance by 26-neighborhood connectivity. In addition, the performance of the proposed baseline (i.e. Ours w/o neuron) is comparable to that of the joint optimization (i.e. Ours).
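To make the 26-neighborhood rule concrete, the following sketch labels a 3D binary mask by breadth-first search over all 26 neighbors of each voxel. It is a plain illustration of generic CC labeling and is not taken from the authors' code; the function name `label_26` is hypothetical. It shows why the result depends so strongly on the cutting-axis: two regions in adjacent slices are joined only if they come within one voxel of each other, and they are always joined if they do.

```python
from collections import deque
from itertools import product

import numpy as np


def label_26(mask):
    """Connected-component labeling of a 3D mask with 26-connectivity.

    Returns (labels, number_of_components); background voxels get label 0.
    """
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    # All offsets in {-1, 0, 1}^3 except the voxel itself: 26 neighbors.
    offsets = [o for o in product((-1, 0, 1), repeat=3) if o != (0, 0, 0)]
    current = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # already reached from an earlier seed
        current += 1
        labels[start] = current
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= c < s for c, s in zip(n, mask.shape))
                if inside and mask[n] and not labels[n]:
                    labels[n] = current
                    queue.append(n)
    return labels, current
```

For example, voxels at (0, 0, 0) and (1, 1, 1) receive the same label because they touch diagonally, while a voxel at (2, 3, 3) starts a new component. A local shift between slices can therefore split one object, and two nearby objects can be merged.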

Experiments on the Snemi dataset

Experimental setting

This experiment investigates the robustness and practicability of the proposed method when the ultrastructure is segmented automatically. As for the Harris dataset, we use the same method to generate the superpixels and the RAG \(G^n\). The neuronal fragment probability \(p_{e^n}\) is the mean affinity value derived from the network output. For the ultrastructure, we utilize the same network architecture as in membrane segmentation to obtain the probability maps, except for slight differences in the settings. For the mitochondria, we add the 2D instance contour as a target output to distinguish the instances within each plane, following [15]. For the synapses, we train a semantic segmentation network with a three-channel target, following [12]. The three channels are the pre-synaptic region, the post-synaptic region, and the synaptic region (the union of the first two channels). After obtaining the semantic maps, we transform them into binary images with a binarization threshold and obtain the initial segmentation result using 2D connected component labeling. In this way, we obtain the pre/post synapses. The input size of all the above networks is \(256\times 256\times 8\), the optimizer is Adam, the training phase uses sufficient data augmentation to improve the generalization performance, and each model is trained for 20,000 iterations on 2 NVIDIA Tesla V100 GPUs with a batch size of 8. The graphs \(G^m\) and \(G^s\) are constructed as in the experiments on the Harris dataset, except that the binary mask is generated by binarization of the network output. The affiliation edges \(E^a\) are constructed with an area threshold of \(A_t = 50\).
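A mean-affinity edge weight of the kind mentioned above could be computed as in the following sketch. The array layout is an assumption made for illustration: `affinities[c]` is taken to hold, for every voxel, the affinity to its predecessor along axis `c`, and an edge weight is the mean of all affinities that cross the boundary between two superpixels. The function name `mean_affinity_per_edge` is hypothetical, and how the resulting value is mapped to the merge or cut probability used in the optimization is not specified here.

```python
from collections import defaultdict

import numpy as np


def mean_affinity_per_edge(superpixels, affinities):
    """Average the affinities that cross each superpixel boundary.

    superpixels: int array of shape (z, y, x).
    affinities:  float array of shape (3, z, y, x); affinities[c][p] is the
                 affinity between voxel p and its predecessor along axis c
                 (assumed convention).
    Returns a dict {(u, v): mean affinity} with u < v.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for axis in range(3):
        lo = [slice(None)] * 3
        hi = [slice(None)] * 3
        lo[axis] = slice(None, -1)  # predecessor voxels
        hi[axis] = slice(1, None)   # successor voxels
        u = superpixels[tuple(lo)]
        v = superpixels[tuple(hi)]
        aff = affinities[axis][tuple(hi)]
        boundary = u != v
        for a, b, w in zip(u[boundary].tolist(),
                           v[boundary].tolist(),
                           aff[boundary].tolist()):
            key = (min(a, b), max(a, b))
            sums[key] += w
            counts[key] += 1
    return {edge: sums[edge] / counts[edge] for edge in sums}
```

Averaging over the whole contact surface makes the weight less sensitive to a few noisy boundary voxels than taking a minimum or maximum would be.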

Results

We summarize three experiments on neuron reconstruction in Table 4, including (i) the performance of the six representative methods, (ii) the results of the ablation studies on different ultrastructural connectivity constraints, and (iii) the robustness of the potential improvements. Table 4 indicates differences in the neuron reconstruction performance of the six unsigned graph agglomeration methods. In particular, MWS has the lowest time complexity \(O(V\log V)\), but its results are not ideal. GASPavg, which adopts new edge update criteria to increase the robustness of the weights, unsurprisingly performs well. Compared to the baseline MC, integrating synapses (Ours w/o mito), mitochondria (Ours w/o syn) and the full information (Ours) lowers the VI metric by \(1.25\%\), \(2.79\%\) and \(3.60\%\), respectively, demonstrating the effectiveness of joint connectivity cues. Furthermore, as shown in Table 5, when only the fragments containing ultrastructures are considered, the VI of our method is \(9.70\%\) lower than that of the baseline. Ours also outperforms Co-Clustering, whose VI is only \(6.54\%\) lower than that of the baseline. The qualitative comparison of the reconstruction results in Fig. 6 illustrates the main performance gains of the proposed method. In the patches from the Snemi dataset, we find that the not-link-information of the synapses reduces merge errors, demonstrating the superiority of our method. The neuron segmentation performance of the proposed method for 9 consecutive slices on the Snemi dataset can be seen in Fig. 7. The 3D rendering results of the different algorithms at the synaptic connections of two neurons are depicted in Fig. 9, which intuitively indicates that our method is the closest to the groundtruth.
More 3D rendering examples of other regions in the Kasthuri dataset [7] are available in Fig. 10. We also compare the error metrics of our method with different confidence factors (in steps of 0.05), as shown in Fig. 8. Our method results in lower errors than the baseline in most cases, indicating the robustness of the proposed method.

Meanwhile, the quantitative results of ultrastructure reconstruction are recorded in Table 3. Unlike in the Harris dataset, the ultrastructure is predicted by a CNN in order to assess the applicability and robustness of our method in real scenarios. According to Table 3, the reconstruction performance differs greatly between the mitochondria and the synapses. In most cases, the results of our algorithm and of the proposed baseline are not inferior to those of CC labeling. Figure 12 gives a visualization of the differences. Specifically, the proposed method prevents a split error in synapse reconstruction that arises when imaging damage causes a local shift in the EM image. Notably, the proposed baseline (i.e. Ours w/o neuron) is inferior to CC labeling for synapse reconstruction. We attribute this to its sensitivity to edge weights; fine-tuning the bias hyper-parameter may improve the performance. Meanwhile, the joint optimization approach (i.e. Ours) outperforms CC labeling owing to the neuron's connectivity constraint.

Note that the error metrics in Table 3 include both pixel-wise errors and connection errors. In particular, the reconstruction performance for mitochondria is significantly higher than that for synapses, owing to their distinct regional features and shape invariance. We find that the worse VI of synapse reconstruction mainly comes from pixel-wise errors, i.e., some synapses are not detected or their boundaries are segmented inaccurately, as illustrated in Fig. 12. We conclude that ultrastructure reconstruction is still subject to errors, but these errors do not prevent the improvement in neuron reconstruction. This is based on the assumption that reconstructing subcellular structures is less difficult than reconstructing cellular structures. Moreover, our method relies only on the connection information extracted from the ultrastructure and is therefore insensitive to pixel-level errors. In other words, for the same image quality, we assume that the connectivity information of the ultrastructure is more reliable than that of neurons, which makes it necessary to increase the confidence factor of the ultrastructure. We confirm this assumption in Fig. 11, where better results are obtained when the confidence factor of the ultrastructural connectivity features is higher than that of the neurons.
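For readers unfamiliar with the VI-Split and VI-Merge columns, the sketch below shows one common way to compute them from a predicted label array and a groundtruth label array. It is a generic illustration, not the evaluation code used for the tables. Two choices are assumptions: entropies are measured in bits (base-2 logarithm), and the split term is taken as the conditional entropy of the prediction given the groundtruth, with the merge term as the reverse. Background handling is omitted. The function name `vi_split_merge` is hypothetical.

```python
import numpy as np


def _entropy(counts):
    """Shannon entropy in bits of a distribution given by positive counts."""
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())


def vi_split_merge(seg, gt):
    """Return (VI-Split, VI-Merge) for two label arrays of equal shape.

    VI-Split = H(seg | gt): grows when one groundtruth object is split.
    VI-Merge = H(gt | seg): grows when several groundtruth objects are merged.
    The total variation of information is the sum of both terms.
    """
    seg = np.asarray(seg)
    gt = np.asarray(gt)
    if seg.shape != gt.shape:
        raise ValueError("seg and gt must have the same shape")
    seg, gt = seg.ravel(), gt.ravel()
    _, c_seg = np.unique(seg, return_counts=True)
    _, c_gt = np.unique(gt, return_counts=True)
    _, c_joint = np.unique(np.stack([seg, gt], axis=1), axis=0,
                           return_counts=True)
    h_seg, h_gt, h_joint = _entropy(c_seg), _entropy(c_gt), _entropy(c_joint)
    return h_joint - h_gt, h_joint - h_seg
```

For instance, if a single groundtruth object is cut into two equal halves, the split term is 1 bit and the merge term is 0; the opposite holds when two equally sized objects are merged into one.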

Discussion and conclusion

In this paper, we investigate the joint optimization of ultrastructural and neuronal connectivity. We introduce the concept of connectivity consensus based on biological domain knowledge for 3D agglomeration. We propose a joint graph partitioning model to determine the ultrastructural connectivity and the membrane boundary connectivity. This method overcomes the limitations of using single connectivity cues. The optimization model has a single, well-defined mathematical objective and produces the reconstruction of multiple structures in one optimization step. We have demonstrated the advantage of joint optimization on several public datasets.

The goal of image analysis in connectomics is the 3D reconstruction of neural structures, and the agglomeration algorithm is a fundamental step. However, the reconstruction of neural structures in electron microscopic images is a very challenging task. The main factors affecting the reconstruction performance include imaging resolution, z-axis resolution, data volume, acquisition region and imaging quality, so the reconstruction performance may vary greatly between datasets. Existing methods are limited in that single connectivity features are not always reliable. The primary purpose of introducing connectivity consensus between different structures is to build a suitable optimization model that makes the reconstruction goals more consistent with biological plausibility and domain knowledge.

Compared with voxel-based methods, superpixel-based methods are less sensitive to local defects at the membrane boundary. The proposed solution is robust to the over-segmentation issue. Specifically, if under-segmentation occurs in the over-segmented neuron fragments, existing agglomeration algorithms are unable to correct such errors, so they are generally avoided at the watershed stage by adjusting hyper-parameters. If mitochondria are under-segmented, performance degrades only if the two neurons in the plane to which the mitochondria originally belong are consequently identified as one, because this introduces additional merge errors of neurons. However, as in Eqs. (2) and (3), we add the reconstruction confidence parameter \(\lambda\) for different structures, which allows us to easily assign confidence levels based on the initial segmentation performance, as discussed in Fig. 11. In addition, we add the 2D instance contour to the ultrastructure segmentation, which further reduces the possibility of under-segmentation. Note that the additional connectivity information used in this paper comes from mitochondria and synapses, which contain link-information and not-link-information, respectively, and this information can be extended to other similar structures, such as cell bodies (same as mitochondria) and axon-dendrites (same as synapses). In the future, we plan to analyze the model's performance for other biological structures and examine its generalization ability. Another important research direction is to extend the optimization model to integrate non-adjacent node relationships, i.e., long-range cues, as in the lifted multicut algorithm.

Availability of data and materials

The public datasets supporting the findings are available at the following links. Harris dataset: https://neurodata.io/data/kharris15/ Snemi dataset: https://lichtman.rc.fas.harvard.edu/vast/AC3AC4Package.zip

Abbreviations

- EM: Electron microscopy
- 3D: Three-dimensional
- CNN: Convolutional neural network
- IoU: Intersection-over-union
- RAG: Region adjacency graph
- GAEC: Greedy additive edge contraction
- VI: Variation of information
- ARE: Adapted rand error
- MC: Multicut
- GASPavg: Agglomerative clustering with average linkage criteria
- MWS: Mutex watershed
- KL: Kernighan–Lin solver
- FM: Fusion-Move solver
- CC: Connected component

References

Sporns O, Tononi G, Kötter R. The human connectome: a structural description of the human brain. PLoS Comput Biol. 2005;1(4):42.

Morgan JL, Lichtman JW. Why not connectomics? Nat Methods. 2013;10(6):494–500.

White JG, Southgate E, Thomson JN, Brenner S, et al. The structure of the nervous system of the nematode caenorhabditis elegans. Philos Trans R Soc Lond B Biol Sci. 1986;314(1165):1–340.

Zheng Z, Lauritzen JS, Perlman E, Robinson CG, Nichols M, Milkie D, Torrens O, Price J, Fisher CB, Sharifi N, et al. A complete electron microscopy volume of the brain of adult drosophila melanogaster. Cell. 2018;174(3):730–43.

Yin W, Brittain D, Borseth J, Scott ME, Williams D, Perkins J, Own CS, Murfitt M, Torres RM, Kapner D, et al. A petascale automated imaging pipeline for mapping neuronal circuits with high-throughput transmission electron microscopy. Nat Commun. 2020;11(1):1–12.

Lichtman JW, Pfister H, Shavit N. The big data challenges of connectomics. Nat Neurosci. 2014;17(11):1448–54.

Kasthuri N, Hayworth KJ, Berger DR, Schalek RL, Conchello JA, Knowles-Barley S, Lee D, Vázquez-Reina A, Kaynig V, Jones TR, et al. Saturated reconstruction of a volume of neocortex. Cell. 2015;162(3):648–61.

Motta A, Berning M, Boergens KM, Staffler B, Beining M, Loomba S, Hennig P, Wissler H, Helmstaedter M. Dense connectomic reconstruction in layer 4 of the somatosensory cortex. Science. 2019;366(6469):eaay3134.

Xiao C, Chen X, Li W, Li L, Wang L, Xie Q, Han H. Automatic mitochondria segmentation for em data using a 3d supervised convolutional network. Front Neuroanat. 2018;12:92.

Heinrich L, Funke J, Pape C, Nunez-Iglesias J, Saalfeld S. Synaptic cleft segmentation in non-isotropic volume electron microscopy of the complete drosophila brain. In: International conference on medical image computing and computer-assisted intervention, Springer 2018;317–25 .

Hong B, Liu J, Li W, Xiao C, Xie Q, Han H. Fully automatic synaptic cleft detection and segmentation from em images based on deep learning. In: International conference on brain inspired cognitive systems, Springer 2018;64–74.

Lin Z, Wei D, Jang W-D, Zhou S, Chen X, Wang X, Schalek R, Berger D, Matejek B, Kamentsky L, et al. Two stream active query suggestion for active learning in connectomics. In: Computer Vision–ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XVIII 16, Springer 2020;103–20.

Turner NL, Lee K, Lu R, Wu J, Ih D, Seung HS. Synaptic partner assignment using attentional voxel association networks. In: 2020 IEEE 17th international symposium on biomedical imaging (ISBI), IEEE 2020;1–5.

Li W, Liu J, Xiao C, Deng H, Xie Q, Han H. A fast forward 3d connection algorithm for mitochondria and synapse segmentations from serial em images. BioData Min. 2018;11(1):1–15.

Wei D, Lin Z, Franco-Barranco D, Wendt N, Liu X, Yin W, Huang X, Gupta A, Jang W-D, Wang X, et al. Mitoem dataset: Large-scale 3d mitochondria instance segmentation from em images. In: International conference on medical image computing and computer-assisted intervention, Springer 2020;66–76.

Beier T, Pape C, Rahaman N, Prange T, Berg S, Bock DD, Cardona A, Knott GW, Plaza SM, Scheffer LK, et al. Multicut brings automated neurite segmentation closer to human performance. Nat Methods. 2017;14(2):101–2.

Lee K, Turner N, Macrina T, Wu J, Lu R, Seung HS. Convolutional nets for reconstructing neural circuits from brain images acquired by serial section electron microscopy. Curr Opin Neurobiol. 2019;55:188–98.

Parag T, Tschopp F, Grisaitis W, Turaga SC, Zhang X, Matejek B, Kamentsky L, Lichtman JW, Pfister H. Anisotropic em segmentation by 3d affinity learning and agglomeration 2017. arXiv preprint arXiv:1707.08935

Xie Q, Chen X, Shen L, Li G, Ma H, Hua H. Micro reconstruction system for brain. Syst Eng-Theory Pract. 2017;37(11):3006–17.

Ciresan D, Giusti A, Gambardella L, Schmidhuber J. Deep neural networks segment neuronal membranes in electron microscopy images. Adv Neural Inf Process Syst. 2012;25:2843–51.

Ishii S, Lee S, Urakubo H, Kume H, Kasai H. Generative and discriminative model-based approaches to microscopic image restoration and segmentation. Microscopy. 2020;69(2):79–91.

He J, Xiang S, Zhu Z. A deep fully residual convolutional neural network for segmentation in em images. Int J Wavelets Multiresolut Inf Process. 2020;18(03):2050007.

Wang Z, Liu J, Chen X, Li G, Han H. Sparse self-attention aggregation networks for neural sequence slice interpolation. BioData Min. 2021;14(1):1–19.

Nunez-Iglesias J, Kennedy R, Parag T, Shi J, Chklovskii DB. Machine learning of hierarchical clustering to segment 2d and 3d images. PLoS ONE. 2013;8(8):71715.

Bailoni A, Pape C, Wolf S, Beier T, Kreshuk A, Hamprecht FA. A generalized framework for agglomerative clustering of signed graphs applied to instance segmentation 2019. arXiv preprint arXiv:1906.11713

Lee K, Zung J, Li P, Jain V, Seung HS. Superhuman accuracy on the snemi3d connectomics challenge 2017. arXiv preprint arXiv:1706.00120

Andres B, Kroeger T, Briggman KL, Denk W, Korogod N, Knott G, Koethe U, Hamprecht FA. Globally optimal closed-surface segmentation for connectomics. In: European conference on computer vision, Springer 2012;778–91.

Kappes JH, Speth M, Andres B, Reinelt G, Schnörr C. Globally optimal image partitioning by multicuts. In: International workshop on energy minimization methods in computer vision and pattern recognition, Springer 2011;31–44.

Keuper M, Levinkov E, Bonneel N, Lavoué G, Brox T, Andres B. Efficient decomposition of image and mesh graphs by lifted multicuts. In: Proceedings of the IEEE international conference on computer vision, 2015;1751–9.

Wolf S, Bailoni A, Pape C, Rahaman N, Kreshuk A, Köthe U, Hamprecht FA. The mutex watershed and its objective: efficient, parameter-free graph partitioning. IEEE Trans Pattern Anal Mach Intell. 2020;43(10):3724–38.

Januszewski M, Kornfeld J, Li PH, Pope A, Blakely T, Lindsey L, Maitin-Shepard J, Tyka M, Denk W, Jain V. High-precision automated reconstruction of neurons with flood-filling networks. Nat Methods. 2018;15(8):605–10.

Krasowski N, Beier T, Knott G, Köthe U, Hamprecht FA, Kreshuk A. Neuron segmentation with high-level biological priors. IEEE Trans Med Imaging. 2017;37(4):829–39.

Levinkov E, Kirillov A, Andres B. A comparative study of local search algorithms for correlation clustering. In: German conference on pattern recognition, Springer 2017;103–14.

Beier T, Hamprecht FA, Kappes JH. Fusion moves for correlation clustering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015;3507–16.

Pape C, Beier T, Li P, Jain V, Bock DD, Kreshuk A. Solving large multicut problems for connectomics via domain decomposition. In: Proceedings of the IEEE International conference on computer vision workshops, 2017;1–10.

Pape C, Matskevych A, Wolny A, Hennies J, Mizzon G, Louveaux M, Musser J, Maizel A, Arendt D, Kreshuk A. Leveraging domain knowledge to improve microscopy image segmentation with lifted multicuts. Front Comput Sci. 2019;1:6.

Wolf S, Li Y, Pape C, Bailoni A, Kreshuk A, Hamprecht FA. The semantic mutex watershed for efficient bottom-up semantic instance segmentation. In: European conference on computer vision, Springer 2020;208–24.

Keuper M, Tang S, Andres B, Brox T, Schiele B. Motion segmentation & multiple object tracking by correlation co-clustering. IEEE Trans Pattern Anal Mach Intell. 2018;42(1):140–53.

Levinkov E, Uhrig J, Tang S, Omran M, Insafutdinov E, Kirillov A, Rother C, Brox T, Schiele B, Andres B. Joint graph decomposition & node labeling: problem, algorithms, applications. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017;6012–20.

Meirovitch Y, Matveev A, Saribekyan H, Budden D, Rolnick D, Odor G, Knowles-Barley S, Jones TR, Pfister H, Lichtman JW, et al. A multi-pass approach to large-scale connectomics 2016. arXiv preprint arXiv:1612.02120

Rolnick D, Meirovitch Y, Parag T, Pfister H, Jain V, Lichtman JW, Boyden ES, Shavit N. Morphological error detection in 3d segmentations 2017. arXiv preprint arXiv:1705.10882

Matejek B, Haehn D, Zhu H, Wei D, Parag T, Pfister H. Biologically-constrained graphs for global connectomics reconstruction. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019;2089–98.

Li H, Januszewski M, Jain V, Li PH. Neuronal subcompartment classification and merge error correction. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer 2020;88–98.

Liu J, Hong B, Chen X, Xie Q, Tang Y, Han H. An effective ai integrated system for neuron tracing on anisotropic electron microscopy volume. Biomed Signal Process Control. 2021;69:102829.

Chopra S, Rao MR. The partition problem. Math Program. 1993;59(1):87–115.

Kroeger T, Kappes JH, Beier T, Koethe U, Hamprecht FA. Asymmetric cuts: joint image labeling and partitioning. In: German conference on pattern recognition, Springer 2014;199–211.

Liu J, Qi J, Chen X, Li Z, Hong B, Ma H, Li G, Shen L, Liu D, Kong Y, Zhai H, Xie Q, Han H, Yang Y. Fear memory-associated synaptic and mitochondrial changes revealed by deep learning-based processing of electron microscopy data. Cell Rep. 2022;40(5):111151.

Harris KM, Spacek J, Bell ME, Parker PH, Lindsey LF, Baden AD, Vogelstein JT, Burns R. A resource from 3d electron microscopy of hippocampal neuropil for user training and tool development. Sci data. 2015;2(1):1–19.

Meilă M. Comparing clusterings by the variation of information, 2003;173–87.

Arganda-Carreras I, Turaga SC, Berger DR, Cireşan D, Giusti A, Gambardella LM, Schmidhuber J, Laptev D, Dwivedi S, Buhmann JM, et al. Crowdsourcing the creation of image segmentation algorithms for connectomics. Front Neuroanat. 2015;9:142.

Turaga SC, Murray JF, Jain V, Roth F, Helmstaedter M, Briggman K, Denk W, Seung HS. Convolutional networks can learn to generate affinity graphs for image segmentation. Neural Comput. 2010;22(2):511–38.

Schneider CA, Rasband WS, Eliceiri KW. Nih image to imagej: 25 years of image analysis. Nat Methods. 2012;9(7):671–5.

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable comments and insightful suggestions.

Funding

This work was supported in part by National Science and Technology Innovation 2030 Major Program (2021ZD0204503, 2021ZD0204500), National Natural Science Foundation of China (32171461, 61673381), Strategic Priority Research Program of Chinese Academy of Sciences (XDB32030208, XDA16021104), and Program of Beijing Municipal Science & Technology Commission (Z201100008420004).

Author information

Contributions

BH designed and conducted the study. BH and LJ wrote the manuscript with the help of HH and LJS. HZ and JZL contributed programming and 3D visualization. XC and QWX helped to revise the manuscript. HH and LJS supervised the ideas and experiments. All authors contributed to the discussion and interpretation of the results and edited the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hong, B., Liu, J., Zhai, H. et al. Joint reconstruction of neuron and ultrastructure via connectivity consensus in electron microscope volumes. BMC Bioinformatics 23, 453 (2022). https://doi.org/10.1186/s12859-022-04991-6
