Abstract

Background

Quantitative structure-activity relationship (QSAR) is a computational modeling method for revealing relationships between structural properties of chemical compounds and biological activities. QSAR modeling is essential for drug discovery, but it has many constraints. Ensemble-based machine learning approaches have been used to overcome constraints and obtain reliable predictions. Ensemble learning builds a set of diversified models and combines them. However, the most prevalent approach random forest and other ensemble approaches in QSAR prediction limit their model diversity to a single subject.

Results

The proposed ensemble method consistently outperformed thirteen individual models on 19 bioassay datasets and demonstrated superiority over other ensemble approaches that are limited to a single subject. The comprehensive ensemble method is publicly available at http://data.snu.ac.kr/QSAR/.

Conclusions

We propose a comprehensive ensemble method that builds multi-subject diversified models and combines them through second-level meta-learning. In addition, we propose an end-to-end neural network-based individual classifier that can automatically extract sequential features from a simplified molecular-input line-entry system (SMILES). The proposed individual models did not show impressive results as a single model, but it was considered the most important predictor when combined, according to the interpretation of the meta-learning.

Similar content being viewed by others

Background

Quantitative structure-activity relationship (QSAR) is a computational or mathematical modeling method to reveal relationships between biological activities and the structural properties of chemical compounds. The underlying principle is that variations in structural properties cause different biological activities [1]. Structural properties refer to physico-chemical properties, and biological activities correspond to pharmacokinetic properties such as absorption, distribution, metabolism, excretion, and toxicity.

QSAR modeling helps prioritize a large number of chemicals in terms of their desired biological activities as an in silico methodology and, as a result, significantly reduces the number of candidate chemicals to be tested with in vivo experiments. QSAR modeling has served as an inevitable process in the pharmaceutical industry, but many constraints are involved [2, 3]. QSAR data may involve a very large number of chemicals (more than hundreds of thousands); each chemical can be represented by a variety of descriptors; commonly used fingerprints are very sparse (most of the values are zero), and some features are highly correlated; it is assumed that the dataset contains some errors because relationships are assessed through in situ experiments.

Due to these constraints, it has become difficult for QSAR-based model prediction to achieve a reliable prediction score. Consequently, machine learning approaches have been applied to QSAR prediction. Linear regression models [4] and Bayesian neural networks [5–7] have been used for QSAR prediction. Random forest (RF) [8, 9] is most commonly used algorithm with a high level of predictability, simplicity, and robustness. RF is a kind of ensemble method based on multiple decision trees that can prevent the overfitting from a single decision tree. RF is considered to be the gold standard in this field [2]; thus, newly proposed QSAR prediction methods ofen have their performance compared to RF.

The Merck Kaggle competition in 2012 turned people’s attentions to neural networks. The winning team used multi-task neural networks (MTNNs) [10]. The fundamental learning structure is based on plain feed-forward neural networks; it avoids overfitting by learning multiple bioassays simultaneously. The team obtained results that consistently outperformed RF. Despite achieving high performance using a multi-task neural network, the team ultimately used an ensemble that combined different methods.

Both RF and the aforementioned technique from the Kaggle competition used ensemble learning, a technique which builds a set of learning models and combines multiple models to produce final predictions. Theoretically and empirically, it has been shown that the predictive power of ensemble learning surpasses that of a single individual learner if the individual algorithms are accurate and diverse [11–14]. Ensemble learning manages the strengths and weaknesses of individual learners, similar to how people consider diverse opinions when faced with critical issues.

Ensemble methods, including neural network ensemble based on bootstrap sampling in QSAR (data sampling ensemble) [15]; ensemble against different learning methods for drug-drug interaction [16], Bayesian ensemble model with different QSAR tools (method ensemble) [7], ensemble learning based qualitative and quantitative SAR models [17], Hybrid QSAR prediction model with various learning methods [18], ensembles with different boosting methods [19], Hybridizing feature selection and feature learning in QSAR modeling [20], and ensemble against diverse chemicals for carcinogenicity prediction (representation ensembles) [21] have been extensively used in drug (chemical) research. However, these ensemble approaches limit model diversity to a single subject, such as data sampling, method, and input representation (drug-specific).

To overcome this limitation, we propose a multi-subject comprehensive ensemble with a new type of individual classifier based on 1D-CNNs and RNNs. The detailed key characteristics and contributions of our proposed methods are as follows:

-

Instead of limiting ensemble diversity to a single subject, we combine multi-subject individual models comprehensively. This ensemble is used for combinations of bagging, methods, and chemical compound input representations.

-

We propose a new type of individual QSAR classifier that is an end-to-end neural network model based on one-dimensional convolutional neural networks (1D-CNNs) and recurrent neural networks (RNNs). It automatically extracts sequential features from a simplified molecular-input line-entry system (SMILES).

-

We combine a set of models using second-level combined learning (meta-learning) and provide an interpretation regarding the importance of individual models through their learned weights.

To validate our proposed method, we tested 19 bioassays specified in [10]. In our experiments, we confirmed the superiority of our proposed method by comparing individual models, limited ensemble approaches, and other combining techniques. Further, we identified the importance of the proposed end-to-end individual classifier through an interpretation of second-level meta-learning.

Results

Experimental setup

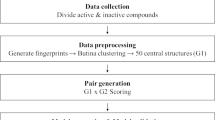

Dataset

A bioassay is a biochemical test to determine or estimate the potency of a chemical compound on targets and has been used for a variety of purposes, including drug development, and environmental impact analysis. In our experiment, we used 19 bioassays downloaded from the PubChem open chemistry database [22], which are listed in Table 1. All bioassays are those specified in [10]. The purpose of the paper was to address multi-task effects; thus, a number of experimental assays are closely related, such as the 1851, 46321*, 48891*, and 6517** series.

From each bioassay, we extracted a PubChem chemical ID and activity outcome (active or inactive). We only used duplicate chemicals once, and we excluded inconsistent chemicals that had both active and inactive outcomes. A class imbalance ratio between active and inactive ranged from 1:1.1 to 1:4.2 depending on the dataset; most bioassays are imbalanced, with an average ratio of 1:2.

Representation of chemical compounds

In our experiment, we used three types of molecular fingerprints PubChem [22], ECFP [23], MACCS [24], and string type SMILES [25]. Because SMILES is a sequential string type descriptor, it is not a proper form for conventional learning methods. We used an end-to-end 1D-CNN and RNN which are capable of handling a sequential forms. On the other hand, a binary vector type fingerprint consists of 1’s and 0’s in a form of non-sequential form. Thus, conventional machine learning approaches such as plain feed-forward neural network are used.

The SMILES and PubChem fingerprint were retrieved from the preprocessed chemical IDs using PubChemPy [26], and ECFP and MACCS fingerprints were retrieved from SMILES using RDKit [27].

Experimental configuration and environment

We followed the same experimental settings and performance measures as described for the multi-task neural network [10]. We randomly divided the dataset into two parts: 75% of the dataset was used as a training set, and the other 25% was used as a testing set. The training dataset was also randomly partitioned into five portions: one for validation, and the remaining four for training (5-fold cross-validation). The prediction probabilities from the 5-fold validations were concatenated as P, and were then used as inputs for the second-level learning.

We ran our experiments on Ubuntu 14.04 (3.5GHz Intel i7-5930K CPU and GTX Titan X Maxwell(12GB) GPU). We used the Keras library package (version 2.0.6) for neural network implementation, the Scikit-learn library package (version 0.18) for conventional machine learning methods, and PubChemPy (version 1.0.3) and RDKit (version 1.0.3) for input representation preparation of the chemical compounds.

Performance comparison with other approaches

Performance comparison with individual models

We compared our comprehensive ensemble method with 13 individual models: the 12 models from the combination of three types of fingerprints (PubChem, ECFP, and MACCS) and four types of learning methods (RF, SVM, GBM, and NN), and a SMILES-NN combination.

As shown in Table 2, the comprehensive ensemble showed the best performance across all datasets, followed by ECFP-RF and PubChem-RF. We can see that the top-3 AUCs (represented in bold) are dispersed across the chemical compound representations and learning methods, except for PubChem-SVM, ECFP-GBM, and MACCS-SVM. The individual SMILES-NN models were within the top-3 ranks of the three datasets. In terms of learning methodology, RF showed the highest number of top-3 AUC values followed by NN, GBM, and SVM. In terms of chemical compound representation, ECFP showed the highest number of top-3 AUC values followed by PubChem, SMILES (compared proportionally), and MACCS. In terms of the averaged AUC, the comprehensive ensemble showed the best performance (0.814), followed by ECFP-RF (0.798) and PubChem-RF (0.794). The MACCS-SVM combination showed the lowest AUC value (0.736). Aside from the best (proposed ensemble) and the worst (MACCS-SVM) methods, all average AUC values were less than 0.80. Predictability depends on the combination of learning method and input representation. Although SVM showed better performance than GBM in ECFP, GBM showed better performance than SVM in MACCS.

Statistical analysis with paired t-tests was performed to evaluate differences between the means of paired outcomes. The AUC scores of the comprehensive ensembles were compared with the top-scored AUC from the individual classifier in each dataset from the five fold cross-validation. Assuming that two output scores y1 and y2 follow normal distributions, the difference between these two scores should also follow a normal distribution. The null hypothesis of no difference between the means of two output scores, calculated as d=y1−y2, indicates that the distribution of this difference has mean 0 and variance \(\sigma ^{2}_{d}\). The comprehensive ensemble achieved an AUC score exceeding the top-scored AUC from an individual classifier in 16 out of 19 PubChem bioassays as shown in Table 3. Let \(\bar {d}, s_{d}\), n denote the mean difference, the standard deviation of the differences, and the number of samples, respectively. The results are significant at a p-value of 8.2×10−7, where the t value is calculated by \(t_{d} = \frac {\bar {d}} {\frac {s_{d}}{\sqrt {n}}} \sim t_{n-1}.\)

Performance comparison with other ensemble approaches

In addition to a comparison with individual models, we compared the proposed ensemble method with other ensemble approaches based on the ensemble subject and combining technique, as shown in Table 4.

The first three columns showe the method ensemble, which combines predictions from RF, SVM, GBM, and NN by fixing them to a particular chemical representation. The ensembles based on PubChem, ECFP, and MACCS showed AUC values of 0.793, 0.796, and 0.784, which are 0.016, 0.015, and 0.018 higher than the average AUC value for the four individual methods based on those representations, respectively. The next five columns show the representation ensembles, which combine the PubChem, ECFP, and MACCS molecular representations by fixing them to a particular learning method. As with the method ensembles, the representation ensembles outperformed the average results from the individual representation models based on their learning methods. In particular, the NN-based individual models showed lower AUCs values than the RF-based models, but the NN-based combined representation ensemble showed a higher AUC value than the RF-based ensemble.

Bagging is an easy-to-develop and powerful technique for class imbalance problems [28]. Figure 1a shows the effectiveness of bagging by comparing a plain neural network (NN) with a bootstrap aggregated neural network (NN-bagging) and a neural network-based representation ensemble (NN-representation ensemble). As shown in Fig. 1a, bagging improved the AUC in both ensemble techniques. As shown in Fig. 1b, the improved AUC by bagging was correlated with the imbalance ratio of the dataset (Pearson’s r=0.69, p-value= 1.1×10−3). The results showed greater improvement with a higher imbalance ratio.

Ensemble effects on class-imbalanced datasets. a Improved average AUC value produced by neural network bagging (NN-bagging) and neural network-based representation ensemble (NN-representation ensemble) over three fingerprints. b Pearson’s correlation (r=0.69, p-value=1.1x 10−3) between the improved AUC values from NN-bagging and the class imbalance ratio. The class imbalance ratio was calculated from the number of active and inactive chemicals, as shown in Table 1

The proposed multi-subject comprehensive ensemble combines all models regardless of learning method or representation: 12 models consisting of the unique combinations of representations (PubChem, ECFP, and MACCS) and learning methods (RF, SVM, GBM, and NN) and the newly proposed SMILES-NN model. All ensembles except for the last column combined the various models by uniform averaging. The comprehensive ensemble outperformed all limited ensemble approaches based on average combining.

In terms of the combination technique, we compared simple uniform averaging with the proposed meta-learning techniques in both comprehensive ensembles. The results of the comprehensive ensemble from Table 2 are presented in the second to the last column of Table 4. The last column in Table 4 shows the performance comparison between meta-learning and the comprehensive ensemble. The multi-task neural networks [10] achieved state-of-the-art performance on 19 PubChem bioassays with performance measurement of the AUC. As shown in Table 5, our approach outperformed multi-task learning in 13 out of 19 PubChem bioassays. From “Convolutional and recurrent neural networks” section, this result was statistically significant at a p-value of 3.9×10−8 in 13 out of 19 datasets and resulted in a higher mean AUC value for the meta-learning network than for the multi-task network.

Performance comparison on other dataset

The Drug Therapeutics Program (DTP) AIDS Antiviral Screen developed an HIV dataset for over 40,000 compounds. These results are categorized into three groups: confirmed inactive (CI), confirmed active (CA) and confirmed moderately active (CM). Following previous research [29], we also combined the latter two labels (CA and CM), resulting it a classification task to discriminate inactive and active.

We evaluated our meta-learning neural network on the HIV dataset following identical experimental settings as described in MoleculeNet [29]. The HIV dataset was divided by scaffold-based splitting into training, validation, and test sets at a ratio of 80:10:10. Scaffold-based splitting separates structurally different molecules into different subgroups [29]. For the performance metrics, we used AU-ROC, accuracy, Matthews correlation coefficient (MCC), and F1-score. Accuracy, MCC, and F1-score were defined as follows:

where TP, FP, FN, and TN represent the number of true positives, false positives, false negatives, and true negatives, respectively. Table 6 shows the results for the comparison between multi-task [10] and meta-learning on the various performance metrics. For meta-learning, we applied our neural networks described in Section 2.3.4 to the multi-task neural network. We repeated the experiments 100 times and calculated the mean test score. In terms of AU-ROC, both neural networks performed similarly, however, meta-learning outperformed multi-task learning in other metrics.

Meta-learning and interpretation of model importance

We made a final decision through meta-learning using the predictions from independent first-level models as input. Any learning algorithm could be used as a meta-learner. We used SVM, which achieved the highest average AUC value in further experiments compared with NN, RF, GBM, and ordinary regression.

We interpreted the importance of the models through their learned weights. In the process of meta-learning, a weight is assigned to each model, and this weight could be interpreted as the model importance. As shown in Fig. 2, the degree of darkness for each method is slightly different depending on the dataset, just as the best prediction method and representation depends on the datasets (Table 2). A darker color indicates a higher weight and importance. PubChem-SVM, ECFP-GBM, and MACCS-SVM showed low importance, while SMILES-NN and ECFP-RF showed high importance throughout the dataset. The SMILES-NN model did not show as high a performance as an individual model, but it was regarded as the most important model.

Discussion

Ensemble learning can improve predictability, but it requires a set of diversified hypotheses; bagging requires a set of randomly sampled datasets, a method ensemble needs to exploit diverse learning methods, and a representation ensemble needs to prepare diversified input representations. A comprehensive ensemble requires diversified datasets, methods, and representations across multi-subjects; thus, it has difficulties in preparation and learning efficiency for these hypotheses.

Diversity is a crucial condition for ensemble learning. RF was superior to NN among the individual models, but NN outperformed RF in the representation ensemble. This is presumably due to model variation diversities caused by random initialization and random dropout of the neural network. In addition to model variation diversity, SMILES seems to contribute to ensemble representation diversity. The SMILES-based model did not show impressive results as an individual model, but it was considered the most important predictor when combined.

The proposed comprehensive ensemble exploits diversities across multi-subjects and exhibits improved predictability compared to the individual models. In particular, the neural network and SMILES contribute to diversity and are considered important factors when combined. However, the proposed ensemble approach has difficulties associated with these diversities.

Conclusions

We proposed a multi-subject comprehensive ensemble due to the difficulties and importance of QSAR problems. In our experiments, the proposed ensemble method consistently outperformed all individual models, and it exhibited superiority over limited subject ensemble approaches and uniform averaging. As part of our future work, we will focus on analyzing as few hypotheses as possible or combinations of hypotheses while maintaining the ensemble effect.

Methods

Ensemble learning

Ensemble learning builds a set of diversified models and combines them. Theoretically and empirically, numerous studies have demonstrated that ensemble learning usually yields higher accuracy than individual models [11, 12, 30–32]; a collection of weak models (inducers) can be combined to produce a single strong ensemble model.

Framework

Ensemble learning can be divided into independent and dependent frameworks for building ensembles [33]. In the independent framework, also called the randomization-based approach, individual inducers can be trained independently in parallel. On the other hand, in the dependent framework (also called the boosting-based approach), base inducers are affected sequentially by previous inducers. In terms of individual learning, we used both independent and dependent frameworks, e.g., RF and gradient boosting, respectively. In terms of combining learning, we treated the individual inducers independently.

Diversity

Diversity is well known as a crucial condition for ensemble learning [34, 35]. Diversity leads to uncorrelated inducers, which in turn improves the final prediction performance [36]. In this paper, we focus on the following three types of diversity.

-

Dataset diversity

The original dataset can be diversified by sampling. Random sampling with replacement (bootstrapping) from an original dataset can generate multiple datasets with different levels of variation. If the original and bootstrap datasets are the same size (n), the bootstrap datasets are expected to have (\(1-\frac {1}{e}\)) (≈63.2% for n) unique samples in the original data, with the remainder being duplicated. Dataset variation results in different prediction, even with the same algorithm, which produces homogeneous base inducers. Bagging (bootstrap aggregating) belongs to this category and is known to improve unstable or relatively large variance-error factors [37].

-

Learning method diversity

Diverse learning algorithms that produce heterogeneous inducers yield different predictions for the same problem. Combining the predictions from heterogeneous inducers leads to improved performance that is difficult to achieve with a single inducer. Ensemble combining of diverse methods is prevalently used as a final technique in competitions, that presented in [10]. We attempted to combine popular learning methods, including random forest (RF) [8, 38], support vector machine (SVM) [39], gradient boosting machine (GBM) [40], and neural network (NN).

-

Input representation diversity

Drugs (chemical compounds) can be expressed with diverse representations. The diversified input representations produce different types of input features and lead to different predictions. [21] demonstrated improved performance by applying ensemble learning to a diverse set of molecular fingerprints. We used diverse representations from PubChem [22], ECFP [23], and MACCS [24] fingerprints and from a simplified molecular input line entry system (SMILES) [25].

Combining a set of models

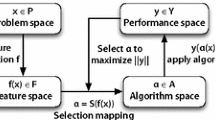

For the final decision, ensemble learning should combine predictions from multiple inducers. There are two main combination methods: weighting (non-learning) and meta-learning. Weighting method, such as majority voting and averaging, have been frequently used for their convenience and are useful for homogeneous inducers. Meta-learning methods, such as stacking [41], are a learning-based methods (second-level learning) that use predictions from first-level inducers and are usually employed in heterogeneous inducers. For example, let fθ be a classifier of an individual QSAR classifier with parameter θ, trained for a single subject (drug-specific task) p(X) with dataset X that outputs y given an input x. The optimal θ can be achieved by

Then, the second-level learning will learn to maximize output y by learning how to update the individual QSAR classifier \(\phantom {\dot {i}\!}f_{\theta ^{*}}\). “First-level: individual learning” section details the first-level learning and, “Second-level: combined learning” section details the second-level learning.

Chemical compound representation

Chemical compounds can be expressed with various types of chemical descriptors that represent their structural information. One representative type of chemical compound descriptor is a molecular fingerprint. Molecular fingerprints are encoded representations of a molecular structure as a bit-string; these have been studied and used in drug discovery for a long time. Depending on the transformation to a bit-string, there are several types of molecular fingerprints: structure key-based, topological or path-based, circular, and hybrid [42]. Structure key-based fingerprints, such as PubChem [22] and MACCS [24], encode molecular structures based on the presence of substructures or features. Circular fingerprints, such as ECFP [23], encode molecular structures based on hashing fragments up to a specific radius.

Another chemical compound representation is the simplified molecular-input line-entry system (SMILES) [25], which is a string type notation expressing a chemical compound structure with characters, e.g., C,O, or N for atoms, = for bonds, and (,) for a ring structure. SMILES is generated by the symbol nodes encountered in a 2D structure in a depth-first search in terms of a graph-based computational procedure. The generated SMILES can be reconverted into a 2D or 3D representation of the chemical compound.

Examples of SMILES and molecular fingerprints of leucine, which is an essential amino acid for hemoglobin formation, are as follows:

-

SMILES string: CC(C)CC(C(=O)O)N

-

PubChem fingerprint: 1,1,0,0,0,0,0,0,0,1,1,0,0,0,1,0,⋯

-

ECFP fingerprint: 0,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,⋯

-

MACCS fingerprint: 0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,⋯

(Most values in this molecular fingerprint are zero).

Figure 3 shows the two-levels of learning procedure. First-level learning is an individual learning level from diversified learning algorithms and chemical compound representations. The prediction probabilities produced from first-level learning models are used as inputs for second-level learning. Second-level learning makes the final decision by learning the importance of individual models produced from the first-level predictions.

Learning procedure of the proposed comprehensive ensemble. The individual i-th learning algorithm \(\mathcal {L}_{i}\) outputs its prediction probability Pi for the training dataset through 5-fold cross-validation. The n diverse learning algorithms produce n prediction probabilities (P1,P2,⋯,Pn). The probabilities are concatenated and then used as input to the second-level learning algorithm \(\boldsymbol {\mathcal {L}}\), which makes a final decision \(\hat {y}\). a First-level learning. b Second-level learning

Notation

The notation used in our paper is as follows:

-

x: preprocessed chemical compound-representation input, where x can be a particular type of molecular fingerprints or SMILES.

-

h: hidden representation

-

\(\mathcal {L}\): first-level individual learning algorithm (\(\mathcal {L}_{i}\): i-th algorithm, i={1,⋯,n})

-

\(\boldsymbol {\mathcal {L}}\): second-level learning algorithm

-

P: predicted probability from the individual model (Pi: predicted probability from the \(\mathcal {L}_{i}\))

-

\(\hat {y}\): final predicted decision from the second-level learning

-

σ: activation function (σs: sigmoid, σr: rectified linear unit (ReLU), and σt: hyperbolic tangent)

-

n: total number of individual algorithms

First-level: individual learning

With a combination of learning algorithms and chemical compound input representations, we generated thirteen kinds of individual learning models: nine models from conventional machine learning methods, three models from a plain feed-forward neural network, and one model from the 1D-CNN and RNN-based newly proposed neural network model.

Conventional machine learning methods

Among the conventional machine learning methods, we used SVM, RF, and GBM with three types of molecular fingerprints, resulting in nine combination models consisting of all unique pairs of learning algorithms (SVM, RF, and GBM) and fingerprints (PubChem, ECFP, and MACCS). We set the penalty parameter to 0.05 for the linear SVM, and the number of estimators was set to 100 for RF and GBM based on a grid search and experimental efficiency. The prediction probabilities from these learning methods are used as inputs for second-level learning. However, SVM outputs a signed distance to the hyperplane rather than a probability. Thus, we applied a probability calibration method to convert the SVM results into probabilistic outputs.

Plain feed-forward neural network

We used a plain feed-forward neural network (NN) for the vector-type fingerprints: PubChem-NN, ECFP-NN, and MACCS-NN. The neural network structure consists of three fully connected layers (Fcl) with 512, 64, and 1 units in each layer and using, the ReLU, tanh, and sigmoid activation functions, respectively,

The sigmoid activation function outputs a probability for binary classification. We used the Adam optimizer [43] with binary cross-entropy loss (learning rate: 0.001, epoch: 30, and mini-batch size: 256).

Convolutional and recurrent neural networks

To learn key features through end-to-end neural network learning automatically, we used a SMILES string as input and exploited the neural network structures of the 1D-CNNs and RNNs. A CNN is used to recognize the short-term dependencies, and an RNN is used as the next layer to learn long-term dependencies from the recognized local patterns.

As illustrated in Fig. 4 of the preprocessing step, the input SMILES strings were preprocessed with one-hot encoding [44–46], which sets only the corresponding symbol to 1 and others to 0. The input is truncated/padded to a maximum length of 100. We only consider the most frequent nine characters in SMILES and treat the remaining symbols as OTHERS, thus the encoding dimension was reduced to 10.

As illustrated in Fig. 4 of the neural networks step, the preprocessed input x was fed into the CNN layer without pooling (CNN filter length: 17, number of filters: 384). Then, the outputs from the CNN were fed into the GRU layer (dimension: 9, structure: many-to-many).

where h is the output of GRU layer, σr is the ReLU, and σt is the hyperbolic tangent. The output h was flattened and then fed into a fully connected neural network.

where P is the output probability from the sigmoid activation function for binary classification. The output P is subsequently used for second-level learning as in the last step in Fig. 4.

We used dropout for each layer (CNN: 0.9, RNN: 0.6, first Fcl: 0.6) and an Adam optimizer (learning rate: 0.001, epoch: 120, mini-batch size: 256) with binary cross-entropy. Most of these hyperparameters were empirically determined.

Second-level: combined learning

We combined the first-level predictions generated from the set of individual models to obtain the final decision.

We have n individual learning algorithms \(\mathcal {L}_{i}\), where i={1,⋯,n}, and the i-th model outputs the prediction probability Pi for a given x. We can determine the final prediction \(\hat {y}\) by weighting, wi:

where if the weight wi=1/n,∀i indicates, uniform averaging.

As another technique, we can combine the first-level output predictions through meta-learning. The performance of individual methods varies depending on each dataset as shown in “Performance comparison with individual models” section; there is no invincible universal method. The learned weights from the individual models are applied to the corresponding datasets. Thus, we use learning based combining methods (meta-learning) rather than simple averaging or voting.

where \(\boldsymbol {\mathcal {L}}\) is a second-level learning algorithm, and any machine learning method can be applied this level. All Pi, where i={1,2,⋯,n} are concatenated and used as inputs. The model importance imposes a weight wi on Pi and is determined through meta-learning.

Availability of data and materials

The datasets generated and/or analyzed during the current study are available at http://data.snu.ac.kr/QSAR/.

Abbreviations

- 1D-CNNs:

-

One-dimensional convolutional neural networks

- AU-PRC:

-

Area under the curve of the receiver operating characteristic curve

- AUC:

-

Area under the curve

- GBM:

-

Gradient boosting machine

- GRU:

-

Gated recurrent units

- HTS:

-

High throughput screening

- MTNN:

-

Multi-task neural networks

- NN:

-

Neural network

- QSAR:

-

Quantitative structure-activity relationship

- RF:

-

Random forest

- RNNs:

-

Recurrent neural network

- SMILES:

-

simplified molecular-input line-entry system

- SVM:

-

Support vector machine

References

Verma J, Khedkar VM, Coutinho EC. 3d-qsar in drug design-a review. Curr Top Med Chem. 2010; 10(1):95–115.

Ma J, Sheridan RP, Liaw A, Dahl GE, Svetnik V. Deep neural nets as a method for quantitative structure–activity relationships. J Chem Inf Model. 2015; 55(2):263–74.

Golbraikh A, Wang XS, Zhu H, Tropsha A. Predictive qsar modeling: methods and applications in drug discovery and chemical risk assessment. Handb Comput Chem. 2016:1–48. https://doi.org/10.1007/978-94-007-6169-8_37-3.

Luco JM, Ferretti FH. Qsar based on multiple linear regression and pls methods for the anti-hiv activity of a large group of hept derivatives. J Chem Inf Comput Sci. 1997; 37(2):392–401.

Burden FR, Winkler DA. Robust qsar models using bayesian regularized neural networks. J Med Chem. 1999; 42(16):3183–7.

Burden FR, Ford MG, Whitley DC, Winkler DA. Use of automatic relevance determination in qsar studies using bayesian neural networks. J Chem Inf Comput Sci. 2000; 40(6):1423–30.

Pradeep P, Povinelli RJ, White S, Merrill SJ. An ensemble model of qsar tools for regulatory risk assessment. J Cheminformatics. 2016; 8(1):48.

Svetnik V, Liaw A, Tong C, Culberson JC, Sheridan RP, Feuston BP. Random forest: a classification and regression tool for compound classification and qsar modeling. J Chem Inf Comput Sci. 2003; 43(6):1947–58.

Zakharov AV, Varlamova EV, Lagunin AA, Dmitriev AV, Muratov EN, Fourches D, Kuz’min VE, Poroikov VV, Tropsha A, Nicklaus MC. Qsar modeling and prediction of drug–drug interactions. Mol Pharm. 2016; 13(2):545–56.

Dahl GE, Jaitly N, Salakhutdinov R. Multi-task neural networks for qsar predictions. arXiv preprint. 2014. arXiv:1406.1231.

Dietterich TG. Ensemble methods in machine learning In: Goos G, Hartmanis J, Van Leeuwen JP, editors. International Workshop on Multiple Classifier Systems. Springer: 2000. p. 1–15.

Hansen LK, Salamon P. Neural network ensembles. IEEE Trans Pattern Anal Mach Intell. 1990; 12(10):993–1001.

Ju C, Bibaut A, van der Laan M. The relative performance of ensemble methods with deep convolutional neural networks for image classification. J Appl Stat. 2018; 45(15):2800–18.

Ezzat A, Wu M, Li X, Kwoh C-K. Computational prediction of drug-target interactions via ensemble learning. In: Computational Methods for Drug Repurposing. Springer: 2019. p. 239–54. https://doi.org/10.1007/978-1-4939-8955-3_14.

Agrafiotis DK, Cedeno W, Lobanov VS. On the use of neural network ensembles in qsar and qspr. J Chem Inf Comput Sci. 2002; 42(4):903–11.

Thomas P, Neves M, Solt I, Tikk D, Leser U. Relation extraction for drug-drug interactions using ensemble learning. Training. 2011; 4(2,402):21–425.

Basant N, Gupta S, Singh KP. Predicting human intestinal absorption of diverse chemicals using ensemble learning based qsar modeling approaches. Comput Biol Chem. 2016; 61:178–96.

Wang W, Kim MT, Sedykh A, Zhu H. Developing enhanced blood–brain barrier permeability models: integrating external bio-assay data in qsar modeling. Pharm Res. 2015; 32(9):3055–65.

Afolabi LT, Saeed F, Hashim H, Petinrin OO. Ensemble learning method for the prediction of new bioactive molecules. PloS ONE. 2018; 13(1):0189538.

Ponzoni I, Sebastián-Pérez V, Requena-Triguero C, Roca C, Martínez MJ, Cravero F, Díaz MF, Páez JA, Arrayás RG, Adrio J, et al.Hybridizing feature selection and feature learning approaches in qsar modeling for drug discovery. Sci Rep. 2017; 7(1):2403.

Zhang L, Ai H, Chen W, Yin Z, Hu H, Zhu J, Zhao J, Zhao Q, Liu H. Carcinopred-el: Novel models for predicting the carcinogenicity of chemicals using molecular fingerprints and ensemble learning methods. Sci Rep. 2017; 7(1):2118.

Wang Y, Xiao J, Suzek TO, Zhang J, Wang J, Bryant SH. Pubchem: a public information system for analyzing bioactivities of small molecules. Nucleic Acids Res. 2009; 37(suppl 2):623–33.

Morgan H. The generation of a unique machine description for chemical structures-a technique developed at chemical abstracts service. J Chem Doc. 1965; 5(2):107–13.

Durant JL, Leland BA, Henry DR, Nourse JG. Reoptimization of mdl keys for use in drug discovery. J Chem Inf Comput Sci. 2002; 42(6):1273–80.

Weininger D. Smiles, a chemical language and information system. 1. introduction to methodology and encoding rules. In: Proc. Edinburgh Math. SOC: 1970. p. 1–14. https://doi.org/10.1021/ci00057a005.

Swain M. PubChemPy: a way to interact with PubChem in Python. 2014.

Landrum G. Rdkit: Open-source cheminformatics. 2006. https://pubchempy.readthedocs.io/en/latest/. Accessed 4 Mar 2012.

Galar M, Fernandez A, Barrenechea E, Bustince H, Herrera F. A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans Syst Man Cybern Part C (Appl Rev). 2012; 42(4):463–84.

Wu Z, Ramsundar B, Feinberg EN, Gomes J, Geniesse C, Pappu AS, Leswing K, Pande V. Moleculenet: a benchmark for molecular machine learning. Chem Sci. 2018; 9(2):513–30.

Wei L, Wan S, Guo J, Wong KK. A novel hierarchical selective ensemble classifier with bioinformatics application. Artif Intell Med. 2017; 83:82–90.

Huang M-W, Chen C-W, Lin W-C, Ke S-W, Tsai C-F. Svm and svm ensembles in breast cancer prediction. PloS ONE. 2017; 12(1):0161501.

Xiao Y, Wu J, Lin Z, Zhao X. A deep learning-based multi-model ensemble method for cancer prediction. Comput Methods Prog Biomed. 2018; 153:1–9.

Rokach L. Ensemble-based classifiers. Artif Intell Rev. 2010; 33(1-2):1–39.

Tumer K, Ghosh J. Error correlation and error reduction in ensemble classifiers. Connect Sci. 1996; 8(3-4):385–404.

Krogh A, Vedelsby J. Neural network ensembles, cross validation, and active learning. In: NIPS: 1995. p. 231–8.

Hu X. Using rough sets theory and database operations to construct a good ensemble of classifiers for data mining applications. In: Data Mining, 2001. ICDM 2001, Proceedings IEEE International Conference On. IEEE: 2001. p. 233–40. https://doi.org/10.1109/icdm.2001.989524.

Breiman L. Bagging predictors. Mach Learn. 1996; 24(2):123–40.

Breiman L. Random forests. Mach Learn. 2001; 45(1):5–32.

Vapnik V. The nature of statistical learning theory. 2013. https://doi.org/10.1007/978-1-4757-3264-1.

Friedman JH. Greedy function approximation: a gradient boosting machine. Ann Stat. 2001; 29:1189–232.

Wolpert DH. Stacked generalization. Neural Netw. 1992; 5(2):241–59.

Cereto-Massagué A, Ojeda MJ, Valls C, Mulero M, Garcia-Vallvé S, Pujadas G. Molecular fingerprint similarity search in virtual screening. Methods. 2015; 71:58–63.

Kingma D, Ba J. Adam: A method for stochastic optimization. arXiv preprint. 2014. arXiv:1412.6980.

Winter R, Montanari F, Noé F, Clevert D-A. Learning continuous and data-driven molecular descriptors by translating equivalent chemical representations. Chem Sci. 2019; 10(6):1692–701.

Peric B, Sierra J, Martí E, Cruañas R, Garau MA. Quantitative structure–activity relationship (qsar) prediction of (eco) toxicity of short aliphatic protic ionic liquids. Ecotoxicol Environ Saf. 2015; 115:257–62.

Choi J-S, Ha MK, Trinh TX, Yoon TH, Byun H-G. Towards a generalized toxicity prediction model for oxide nanomaterials using integrated data from different sources. Sci Rep. 2018; 8(1):6110.

Acknowledgments

The authors would like to thank the anonymous reviewers of this manuscript for their helpful comments and suggestions.

Funding

Publication costs were funded by Seoul National University. This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (Ministry of Science and ICT) [2014M3C9A3063541, 2018R1A2B3001628], the Brain Korea 21 Plus Project in 2018, and the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health and Welfare, Republic of Korea [HI15C3224]. The funding bodies did not play any roles in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Author information

Authors and Affiliations

Contributions

SK and HB designed and carried out experiments, performed analysis, and wrote the manuscript. JJ participated in experiments and editing the manuscript. SY conceived and supervised the research and edited the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Sunyoung Kwon and Ho Bae are equal contributors.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Kwon, S., Bae, H., Jo, J. et al. Comprehensive ensemble in QSAR prediction for drug discovery. BMC Bioinformatics 20, 521 (2019). https://doi.org/10.1186/s12859-019-3135-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12859-019-3135-4