Abstract

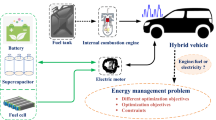

The new energy vehicle plays a crucial role in green transportation, and the energy management strategy of hybrid power systems is essential for ensuring energy-efficient driving. This paper presents a state-of-the-art survey and review of reinforcement learning-based energy management strategies for hybrid power systems. Additionally, it envisions the outlook for autonomous intelligent hybrid electric vehicles, with reinforcement learning as the foundational technology. First of all, to provide a macro view of historical development, the brief history of deep learning, reinforcement learning, and deep reinforcement learning is presented in the form of a timeline. Then, the comprehensive survey and review are conducted by collecting papers from mainstream academic databases. Enumerating most of the contributions based on three main directions—algorithm innovation, powertrain innovation, and environment innovation—provides an objective review of the research status. Finally, to advance the application of reinforcement learning in autonomous intelligent hybrid electric vehicles, future research plans positioned as “Alpha HEV” are envisioned, integrating Autopilot and energy-saving control.

Similar content being viewed by others

1 Introduction

The future transportation system revolves around two major themes: Autopilot and energy-saving driving. New energy vehicles in China, including electric vehicles (EVs), plug-in hybrid electric vehicles (PHEVs), and fuel cell vehicles (FCVs), stand as the core carrier. Positioned at the forefront of automotive advancements, new energy vehicles pave the way toward clean, green, and sustainable transportation [1].

EVs are powered by batteries as primary energy sources, with motors converting electrical energy into kinetic energy to propel the vehicle. As a result, the research focus lies on the advancement of motors, batteries, and electronic control systems [2]. While EVs hold immense potential, there are ongoing endeavors to address challenges such as enhancing driving range, developing fast charging solutions, ensuring safety measures, and establishing recycling and cascade utilization methods. Furthermore, the widespread promotion necessitates the development of infrastructure to support integration into daily life. FCVs utilize propulsion systems equipped with fuel cells and power batteries. Operating on hydrogen, fuel cell systems generate electricity through the electrochemical reaction between hydrogen and oxygen, emitting water as a byproduct. This electro-electric coupling positions FCVs as an environmentally friendly solution for the future [3]. Notably, FCVs present advantages for long-distance passenger or freight transportation, addressing the limitations of driving range in PEVs. Their quick refueling time, coupled with their eco-friendly nature and cleanliness, makes them an ideal choice for various applications, holding the promise of transforming transportation. However, FCVs are still facing challenges, including infrastructure, cost, and durability of fuel cells, hydrogen storage and distribution, hydrogen production, and recycling issues, resulting in the status not being ideal [4]. Although the above two types of cars have multiple sources of energy, only the electric motor serves as the power source, converting electrical energy into mechanical energy to propel the vehicle forward.

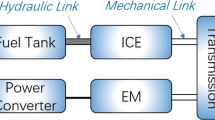

Hybrid electric vehicles (HEVs) represent an innovation integrating both gasoline and batteries as energy sources. By harnessing the power of internal combustion engines (ICEs) and motors, HEVs offer an efficient approach to propulsion. Moreover, the advent of rechargeable batteries has led to the development of PHEVs allowing for longer electric driving capabilities [5]. One of the mechanisms employed in HEVs to achieve energy savings lies in optimizing the operation of the engine within the high-efficiency range. Simultaneously, the motor mainly serves a key role in regenerative braking, converting braking energy into usable electricity. Nowadays, Hybrid powertrains can be mainly classified into three types: series, parallel, and hybrid, each offering unique advantages to suit diverse conditions [6]. Series HEVs can be likened to that of PEVs with a range extender. The ICE connects to the generator, converting mechanical energy into electric energy, and it allows the ICE to work within a high-efficiency range, and the motor serves as the sole power source for propulsion and regenerative braking. Parallel HEVs offer more complex and adaptable driving modes and can be subdivided into P0-P4 configurations based on the different positions of motors. Treating the P2 as an example, both the ICE and the motor can function independently to propel the HEV. When the demand is big, these two power sources can simultaneously deliver power through a mechanical structure. Hybrid HEVs stand as an excellent achievement of engineering with their sophisticated structure. Its primary essence lies in the power-split mechanism, engineered with planetary gears, with the Prius standing as a quintessential Hybrid HEV. One notable feature involves the integration of motors and generators, enabling simultaneous operations in driving and charging. Due to the intricate structure and technological challenges, only a handful of manufacturers have successfully achieved the proficiency required to develop Hybrid HEVs [7].

The design of the HEV needs a multi-faceted endeavor, encompassing configuration screening, parameter matching, and energy management [8]. The configuration design shapes the dynamic interplay among each power and transmission component, considering factors like technical foundations, potential challenges, and user requirements. The parameter matching needs an extreme balance, as it not only influences the performance of the vehicle but also has implications for manufacturing costs [9]. Moreover, it is essential to ensure that the dynamic can satisfy minimum requirements while considering various scenes, including extreme environments. This attention to detail guarantees the capability of HEVs to perform exceptionally across various conditions. The energy management strategy (EMS) plays a core role in enhancing energy-saving performance. It efficiently distributes power flow while adhering to constraints, leading to optimizations in fuel economy, exhaust emissions, battery characteristics, and other objectives [10, 11]. Now, three types of EMS have been summarized and proposed: rule-based, optimization-based, and learning-based EMS [12]. Rule-based EMS relies on a series of experiences to determine power distribution among various power sources. It is computationally efficient and often implemented in real controllers. Rule-based EMS can be further categorized into deterministic rules and fuzzy rules. However, one main limitation is the requirement of extensive experimental data, as well as limited adaptability to random scenes. Optimization-based EMS transforms the EMS into an optimization problem. By defining an objective function and considering system constraints, these strategies determine the control sequence that corresponds to the target within the given environment, such as fuel consumption and lifespan. Optimization-based EMSs are divided into global and instantaneous optimization. Global optimization-based EMSs adopt solvers such as dynamic programming (DP) [13] and Pontryagin’s minimum principle (PMP) [14], and the instantaneous optimization-based EMS use algorithms like equivalent consumption minimum strategy (ECMS) [15] and model predictive control (MPC) [16].

The birth of the learning-based EMS benefits from the development of artificial intelligence (AI), especially deep learning (DL) and reinforcement learning (RL). While some reviews have offered insights by categorizing algorithms and contributions belonging to RL-based EMSs [12, 17,18,19,20,21,22,23,24,25,26,27]. By contrast, especially for rule-based and optimization-based EMSs, many reviews have comprehensively revealed the novel research status. Because of the abundance of existing literature, this paper actively avoids the repetitive content of traditional EMSs, and the latest survey and review focuses on the reinforcement learning (RL)-based EMS and aims to present a thorough and up-to-date review by enumerating all contributions and drawing from research experiences. Given the relatively short development time, the total number of literature within an acceptable range makes it feasible to list and summarize the achievements of all RL-based EMSs.

The main contributions and the remainder of the paper are organized as follows. For the macroscopic grasp of historical development, Section 2 summarizes a brief history, famous scholars, and important achievements of DL, RL, and deep reinforcement learning (DRL) in the form of a timeline, and this is also the first time that the development process is fully displayed in the form of figures. Section 3 summarizes all contributions of RL-based EMSs for hybrid power systems and provides a comprehensive review. It collects 266 papers from databases such as Web of Science, IEEE Xplore, and ScienceDirect, focusing on EV, energy management, and RL as keywords. The state-of-the-art status is analyzed based on innovations in algorithms, powertrains, and environments for further discussion. Section 4 envisions future research aimed at developing an autonomous intelligent HEV, with "Alpha HEV" as the ultimate goal. Section 5 concludes with key opinions and insights.

2 Brief Development History of DL/RL/DRL

In this section, the timeline in Figure 1 presents a brief history of DL, RL, and DRL, including the significant achievements of notable scholars and forming a historical perspective that enhances comprehension of the evolution.

2.1 The Development History of DL

DL takes a leading position in the realm of machine learning (ML), representing a groundbreaking methodology aimed at uncovering intricate patterns and representations concealed within extensive datasets. Its objective is to replicate human-like analytical and learning capabilities, enabling machines to learn and grasp diverse forms of data, such as text, images, and sounds [29]. As shown in Figure 1, the roots of DL can be traced back to the 1940s when W.S. McCulloch and W. Pitts sought to simulate a neural reaction within the human brain when processing information. They developed a simplified artificial neuron model known as MCP [30]. It encompassed the fundamental functions of basic neurons: linear weighting, summation, and non-linear activation. Expanding upon the foundational model, in 1958, Frank Rosenblatt proposed the Perceptron [31], a two-layer feedforward network based on the MCP. The Perceptron can be employed to classify binary linear problems by mapping input matrices to output values and making decisions based on thresholds and weights. By adopting the loss minimization and gradient descent, training could yield a linear plane for classification. However, it was proven that the Perceptron was limited to linear problems. In 1982, John J. Hopfield designed the Hopfield Network [32], considered the earliest recurrent neural network (RNN). It links the output of each neuron to the input of other neurons and forms an innovation that has been critical for the future. Another breakthrough came from backpropagation, which led to the development of a multilayer feedforward network known as Back Propagation (BP). Geoffrey Everest Hinton proposed the BP in 1986 [33]. It involved signal propagation, error backpropagation, and weight updates. The BP network addressed the limitations of Perceptron, enabling nonlinear classification and becoming the milestone in DL. Two other key contributions were attributed to the Elman network [34] and the LeNet network [35]. The Elman network proposed by Jeffrey Elman in 1990, functioned as a feedforward network with local memory units, local feedback connections, and a multilayer structure, and it aims for speech recognition. The LeNet network, proposed by Yann LeCun in 1998, was the first convolutional neural network (CNN). Although effects were limited by data and computing power, the LeNet can successfully recognize handwritten fonts. Long short-term memory (LSTM) [36], proposed by Sepp Hochreiter in 1997, solved the vanishing gradient and long-term dependencies and became a basic model for processing and forecasting events in time series data. The core elements of an LSTM cell consist of three gates: the input gate, the forget gate, and the output gate. These gates regulate the flow of information, allowing it to retain key information over long sequences. In 2000, a feedforward neural language model (NML) [37] was proposed by Yoshua Bengio which employs neural networks to model the probability distribution of the natural text. It plays a key role in the field of natural language processing (NLP), particularly in tasks involving language generation and language understanding. Hence, Geoffrey Hinton, Yann Le Cun, and Yoshua Bengio are recognized as the "Big Three of Deep Learning."

In recent years, many amazing achievements have been witnessed, and the generative model ChatGPT, developed by OpenAI, is regarded as the most famous product. Before that, the generative adversarial network (GAN) [38], proposed by Ian Goodfellow in 2014, utilizes the two-module framework consisting of one generator and one discriminator to achieve impressive outputs through mutual learning. The training of GAN becomes a confrontation process: the generator and the discriminator will engage in competition with each other to improve their capabilities. Simply speaking, the generator tries to generate more realistic data, while discriminators try to distinguish real data from generated data. Eventually, the performance of the generator steadily enhances, resulting in generated samples that resemble the distribution of real data. Therefore, the GAN performs well in many tasks, including image generation, image super-resolution, style transfer, etc. In the same year, Kyunghyun Cho proposed a gated recurrent unit (GRU) [39], a simplified LSTM with fewer parameters. It solves the challenges of long-term memory and gradients by employing gating units. The model incorporates reset and update gates, determining how data combines with previous memory and how much memory will be retained. Compared to the LSTM, the GRU is demonstrated that it is beneficial in improving training efficiency and is favored. Additionally, in 2017, Ashish Vaswani from Google Brain published and proposed the Transformer [40], which is a network structure with significant influence. The Transformer has profoundly influenced NLP by introducing the self-attention mechanism, enabling it to capture long-range dependencies effectively. Its performance across diverse NLP tasks, propagation of pre-training and fine-tuning methods, and expansion into domains beyond NLP highlight its wide-reaching influence on DL and its practical applications. Compared with RNNs and CNNs, the self-attention mechanism enables the model to process sequence data by simultaneously considering all positions in the input, while the ability of parallel computing makes it feasible to handle lengthy information.

DL comprises three elements in Figure 2: algorithms, data, and computing power. As the global academic community continues to propose advanced algorithms and networks, a notable contribution has been made by ImageNet, introduced by Prof. Feifei Li. The ImageNet serves as a visualization database for object recognition, encompassing over 20000 categories and more than 14 million annotated images [41]. ImageNet large-scale visual recognition challenge (ILSVRC) becomes one of the most esteemed competitions in computer vision (CV). Many outstanding networks, including AlexNet, ZFNet, VGG, GoogLeNet, and ResNet, have emerged, with 2010 acknowledged as the dawn of DL. The champion of ILSVRC in 2012 was AlexNet, a collaborative effort by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton [42]. The core contributions lie in introducing the CNN composed of convolutional layers and fully connected layers, pioneering the adoption of ReLU as the activation function, devising the Dropout method to mitigate overfitting, adopting mini-batch gradient descent with momentum for convergence, utilizing data augmentation to combat overfitting, and employing the parallel computing of the NVIDIA graphics processing unit (GPU) to accelerate the training. In 2013, the champion ZFNet [43] mainly made modifications to the size, number, and convolutional strides of the kernel, and the next year witnessed an influence on the champion GoogLeNet [44] and the runner-up VGG [45]. GoogLeNet proposed the Inception structure, retaining more features within the input data. By eliminating the first two fully connected layers of AlexNet and employing average pooling, GoogLeNet reduced its parameter count to 5 million, a 12-fold reduction compared to AlexNet. Then, GoogLeNet designed auxiliary classifiers in intermediate layers to solve the vanishing gradient. VGG, proposed by a Visual Geometry Group at Oxford University, abandoned the large-scale kernels such as 11×11 and 5×5, instead using some smaller 3×3 kernels to achieve a larger receptive field. Moreover, VGG eliminated a local response normalization (LRN) used by AlexNet. Generally, a deeper neural network enables the extraction of more sophisticated features. However, the increase in the number of layers leads to flaws such as a vast number of parameters and the risk of overfitting. In 2015, the ILSVRC championship ResNet [46], proposed by Kaiming He, addressed these challenges with a key contribution: the residual module. The core idea lies in introducing "skip connections," where the input is directly added to the output port, preserving the original information and facilitating the gradient flow during backpropagation. Moreover, it introduced batch normalization to combat the vanishing gradient, mitigating reliance on initialization. At the same time, a unique initialization method was proposed specifically for the activation function ReLU.

Three elements of deep learning [28]

For computing power, AI computing has followed several trends. Firstly, the widespread adoption of specialized hardware accelerators such as GPUs and Tensor Processing Units (TPUs) has significantly improved the computational efficiency of tasks. Secondly, the heterogeneous computing platforms integrating different processors have enabled more efficient computation. Additionally, major cloud computing providers offer specialized cloud services, such as Amazon and Google, which provide flexible computing resources and high-performance hardware infrastructure. Of course, GPU is currently the most commonly used and popular. The GPU has contributed to the rise in computing power. Under the background of developing DL, GPUs serve as indispensable tools akin to shovels in a gold rush. NVIDIA defined GPUs in 1999, and under the leader Jensen Huang, the specialized processors were defined for computationally intensive tasks. Compared to central processing units (CPUs), GPUs offer advantages in parallel computing and performance, and they have revolutionized the gaming market, redefined computer graphics, and transformed parallel computing. Consequently, GPUs are widely adopted, specifically in game engines and rendering, allowing for rapid calculations of elements such as geometry, light, and shadows, facilitating the creation of more realistic visual effects. In the realm of DL, there are a large number of matrix computations and tensor operations involved. The parallel computing of GPUs can significantly accelerate the training process and make it possible to deal with large-scale datasets and models. Nowadays, the latest NVIDIA DGX equipped GH200, A100 or H100 provides solutions for large-scale AI infrastructure, and the first DGX was donated to Open AI, the artificial intelligence team that developed ChatGPT. NVIDIA DGX SuperPOD has become a one-stop AI platform that can cope with challenging AI and high-performance workloads. In this era, computing power has become the engine to promote the development of AI.

2.2 The Development History of RL

The basic process of RL in Figure 3 contains two basic modules: the Agent and the Environment, along with three variables: state, action, and reward. A basic process of learning can be described as when the Agent, guided by the current strategy, outputs an action based on the state of the Environment, the Environment executes the action, transitions to the next state, and generates a related reward. Relying on an instant reward, the Agent determines the loss and the gradient to update the current strategy. Through the iterative trial and error to carry out the above process, the Agent struggles for the optimal action corresponding to each state. When the convergence of the mean reward to its maximum, it means the acquisition of the optimal policy within the current environment [47]. After decades of development, the system of RL can be classified into three types based on the scope of application: DP, Monte Carlo (MC), and Temporal Difference (TD).

The DP-based RL belongs to the model-based and offline learning category, and it can in some cases be used to solve problems in discrete state and action spaces, where the agent tries to learn the policy to make optimal decisions in a given environment. The DP proposed by Richard Bellman [48] in 1954 aims to decompose complex large-scale problems into subproblems and combine subsolutions to construct the final optimal solution for the original problem. MC-based RL [49], falling under the model-free and offline learning category, was proposed by Stanislaw Ulam in 1949. The MC relies on data description, with a large of samples forming an accurate reflection. MC-based Reinforce [50], proposed by Ronald J. Williams in 1987, introduced the gradient descent to update policies. Faced with a large number of model-free tasks from the real world, it is difficult to solve them using DP, and the MC-based method heavily relying on sampling necessitates the completion of each episode before learning, making it challenging to satisfy the efficiency in applications. Richard Sutton proposed the TD algorithm in 1988 [51], a model-free and online learning-based category. Based on the indicator of on-policy and off-policy, TD-based RL contains SARSA (on-policy) [52] and Q-Learning (off-policy) [53], and a core difference lies in how to calculate the target prediction when updating the value function. SARSA was proposed by Gavin Adrian Rummery in 1994, and officially renamed by Sutton in 1996. Q-Learning originated from the work of Watkins, who proposed the TD-based Q-Learning algorithm and the multi-step TD in 1989 and analyzed the convergence in 1992 [54]. Q-learning has become the core algorithm in RL and serves as a foundational achievement for the development of RL. Furthermore, the experience replay, employed in various algorithms, was proposed by Lin in 1992 [55]. Subsequently, research shifted towards function approximation. Leemon Baird [56] and John Tsitsiklis [57] delved into related studies in 1995 and 1996, respectively. Richard Sutton published the book "Reinforcement Learning: An Introduction" in 1998, which was regarded as the bible of RL, and then he analyzed the policy gradient integrated with function approximation in 1999 [58]. Additionally, Inverse RL (IRL) was introduced by Andrew Ng and Stuart Russell in 2000 [59] and is usually utilized to define the reward function, with an apprenticeship architecture published in 2004 [60]. In 2006, the Monte Carlo Search Tree (MCST) was proposed by Rémi Coulom [61], influencing the creation of AlphaGo.

The above are classic examples of traditional RL, laying a theoretical and algorithmic foundation for the development and application of DRL algorithms.

2.3 The Development History of DRL

For RL, early-stage limitations in data and computing power hindered the progress, and RL has to deal with stability and reliability problems. In the meantime, the trial-and-error of RL agents led to models getting stuck or failing to converge to optimal solutions, and the uncertainty and unreliability made RL challenging for applications. On the other hand, the table-based RL had severe limitations, such as the "Curse of Dimensionality" and "Discretization Error".

In recent years, there have been significant advancements, driven by the efforts of teams like DeepMind and OpenAI. DeepMind, founded by Demis Hassabis in 2010 and later acquired by Google in 2014, has played a crucial role in RL. It proposed various DRL algorithms suitable for different tasks. In 2013, Volodymyr Mnih from DeepMind proposed the first DRL algorithm called Deep Q-Network (DQN) [62]. The improved version with target networks was officially in 2015 [63], demonstrating superior control in Atari 2600. In the improved DQN, the main improvement is to first use the neural network to parametrically fit the original value table, and then suppress the instability in the training through the target network, and also use experience replay to effectively break the correlation problem between each training sample. Next, more algorithms were successively proposed, such as deep deterministic policy gradient (DDPG) by Timothy P. Lillicrap [64], prioritized experience replay (PRE) by Tom Schauul [65], trust region policy optimization (TRPO) by John Schulman [66], and deep recurrent q-network (DRQN) by Matthew Hausknecht [67]. In 2016, DeepMind made a breakthrough in the game of Go named Alpha Go [68]. By integrating DL, RL, and MCST, Alpha Go defeated Fan Hui, Lee Sedol, and Ke Jie. More improved versions of AlphaGo Zero [69] and AlphaZero [70] obtained greater achievement in mastering board games. Other classic DRL algorithms or improvement measures have also been proposed, including Double DQN (DDQN) by Hasselt [71], dueling network by Wang [72], asynchronous advantage actor-critic (A3C) by Mnih [73], proximal policy optimization (PPO) by Schulman [74], soft actor-critic (SAC) by Haarnoja [75], twin delayed deep deterministic policy gradient (TD3) by Fujimoto [76], noise network by Fortunato [77], and rainbow by Hessel [78].

DRL has also been applied to other fields. In 2019, Oriol Vinyals proposed the AlphaStar achieved grandmaster-level performance in StarCraft II [79]. AlphaStar is a remarkable achievement that has captivated the world of Esports. This groundbreaking AI has mastered the control strategies of all races in the game. In 2020, MuZero [80] proposed by Julian Schrittwieser grasps considerable effects in Atari and board games such as Go, Chess, and Shogi. Recent advancements include AlphaFold proposed by John Jumper [81] in 2021, for predicting protein structures, as well as the application in the Gran Turismo (GT) Sport defeating top players on the PlayStation by Sony, named GT Sophy [82], and successfully controlling superheated plasma in nuclear fusion reactors under the collaboration of DeepMind and Swiss Federal Institute of Technology in Lausanne [83]. In 2023, scholars from Tsinghua University, Cao Zhong and Feng Shuo, made contributions to autopolit [84] and safety testing [85] relying on DRL algorithms.

3 The Survey and Review of RL-Based EMSs

3.1 The State-of-the-Art Survey of Research Status

As of July 21, 2023, a state-of-the-art survey was completed in major academic databases such as Web of Science, IEEE Xplore, and ScienceDirect, and a total of 266 papers have been searched about the keywords: electric vehicle, energy management, and RL. According to all current literature, the comprehensive survey about RL-based EMSs contains the universities and institutions, and contributions published in conference and journal papers. Through sorting and analysis, all contributions can be classified into algorithm innovation, powertrain innovation, and environmental innovation. Due to the length of the paper and the large amount of data, the most detailed content in the form of tables is uploaded at https://github.com/KaysenC/Reinforcement-Learning-based-Energy-Management-for-Hybrid-Power-Systems.

The detailed tables mainly include the following contents:

-

(1)

Statistics on the earliest time and the number of results for RL-based EMSs for universities and institutions.

-

(2)

Statistics on authors, powertrains, algorithms, and contributions of conference papers.

-

(3)

Statistics on authors, powertrains, algorithms, and contributions of journal papers (algorithm innovation, powertrain innovation, and environmental innovation).

Moreover, the VISIO file containing the timeline depicted in Figure 1 has been uploaded, and we encourage scholars to contribute enhancements and rectifications.

Within the collection, there are 71 conference papers [19, 86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155] and 195 research papers [12, 17, 18, 20,21,22,23,24,25,26,27, 156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228,229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257,258,259,260,261,262,263,264,265,266,267,268,269,270,271,272,273,274,275,276,277,278,279,280,281,282,283,284,285,286,287,288,289,290,291,292,293,294,295,296,297,298,299,300,301,302,303,304,305,306,307,308,309,310,311,312,313,314,315,316,317,318,319,320,321,322,323,324,325,326,327,328,329,330,331,332,333,334,335,336,337,338,339]. Figure 4 reveals the representation of the publication number over the years, highlighting the development of RL-based EMSs for hybrid power systems. An obvious aspect is that the origin application of RL can be traced back to as early as 2012, and it commenced its significant development in 2018. Figure 5 reveals the top 15 journals with the number of papers, and the pioneering work of introducing RL into EMSs was completed by scholars from National Chiayi University, who focused on the hybrid electric bicycle [158]. Since then, this revolutionary field has expanded to other universities such as the University of Southern California, the University of Michigan, the University of California, the Beijing Institute of Technology, and Chongqing University, with their team developing a unique set of technical routes.

It is key to note that the achievements of RL in other fields like Egames and Autopilot, have yielded numerous notable results published in prestigious journals such as Nature and Science. Therefore, there is potential for RL-based EMSs of hybrid power systems, with contributions extending beyond optimization, adaptability, and generalization. More scholars are investigating the RL-based EMS for FCVs, HEVs, EVs equipped with supercapacitors or hybrid battery systems, (HEBs), hybrid electric tracked vehicles (HETVs), etc., and Q-learning is considered the most popular. Subsequently, Qi et al. [169] introduced the use of DRL and defined the third category of learning-based EMSs. Liu [164,165,166,167, 177] made numerous contributions and proposed RL-based EMSs that minimize fuel consumption across various conditions with the help of mathematical theories like transition probability matrix (TPM) and Kullback-Leibler (KL) divergence. He et al. [165] also proposed a predictive EMS, combining speed prediction and RL, and the proposed strategy was validated by hardware-in-the-loop (HIL). Qi et al. [95] employed the DQN to learn EMS based on historical mileage information, while Li et al. [162] used an actor-critic (AC) architecture for continuous state and action spaces. In the next few years, classic algorithms and improved approaches like Q-Learning and experience replay have usually been adopted, as have popular algorithms like DQN, Double DQN, Dueling DQN, DDPG, and priority experience replay [161]. More recently, some improved algorithms such as SAC, PPO, TD3, A3C, and transfer learning (TL) have been tentatively applied. Additionally, AMSGrad, Fast Q-Learning, NAG-Adam, and Munchausen SAC have been tried and utilized to improve efficiency. Meanwhile, multi-agent reinforcement learning (MARL) gained more attention. For some typical scenarios like car following and traffic flow, MARL, like multi-agent deep deterministic policy gradient (MADDPG), facilitated cruising driving and energy management. Scholars also tried to combine RL agents with rule-based or optimization-based EMSs. Relying on the stability of PMP/ECMS, researchers begin to employ RL to adjust adaptive parameters such as the co-state or equivalent factor (EF), as well as LSTM and learning vector quantization (LVQ) networks are utilized to improve accuracy and efficiency within the MPC algorithm.

According to statistics on the current status, the year 2018 marked the end of the initial stage and the beginning of the development stage of learning-based EMSs. The following content summarizes and reviews all of the contributions after 2018, focusing on journal papers.

3.2 The Comprehensive Review of Research Status

Between 2019 and July 2023, a total of 166 journal papers were published, focusing on contributions categorized into algorithm, powertrain, and environment, and Tables 1, 2, and 3 present representative papers for each of these categories.

3.2.1 Algorithm Innovation

Algorithm innovation often plays a pivotal role throughout, indicating that when improving efficiency and addressing inherent flaws.

First of all, emerging researchers made contributions by employing various RL algorithms. Subsequently, individuals delved beyond Q-Learning, exploring alternative algorithms like SARSA, Dyna-H, and DDPG. Furthermore, challenges posed by the "curse of dimensionality" and "discretization error" prompted scholars to pivot towards DRL algorithms. The quest for algorithmic innovation represents a significant advancement in the field, fostering a dynamic and vibrant research environment. For instance, Fast Q-Learning in Ref. [174], DDPG in Ref. [176], Dyna-H in Ref. [177], Dueling structure in Ref. [178], distributed DRL with A3C and DPPO in Ref. [198], TD3 in Ref. [196], SAC in Ref. [211], and Nash Q-Learning of MARL in Ref. [246]. Techniques like PER have gained more attention, and the adoption of TL has commenced. These contributions aim to enhance the training efficiency, solve flaws like overestimation, and achieve more efficient nonlinear fitting of value functions. For the current research, Guo et al. [183], Lee et al. [185], Lian et al. [187], Wang et al. [221], and Xu et al. [223] designed TL-based EMSs, and Lian et al. [187] analyzed the transfer process in detail for four hybrid power systems. Scholars have used the latest algorithms more frequently, meaning the advantages such as TD3 and MARL are grasped. Furthermore, when the reward function contains multiple items, Lv et al. [214] used IRL to determine the suitable weight of each item.

Moreover, one branch is dedicated to enhancing efficiency through self-designed methods. For example, Li et al. [176] improved the exploration by storing optimal results based on DP-based EMSs in the experience pool. Many researchers have utilized heuristic experience to guide the RL agent in the action space, like the brake-specific fuel consumption (BSFC) curve [186] and battery characteristics, or focused on updating the TPM by discriminative mechanisms, like KL divergence [174, 213, 216, 229], and induced matrix norm (IMN) [201] for modeling the environment and triggering the update. Some results have been achieved by combining rule-based and learning-based policies, capitalizing on strengths, and compensating for limitations. Tang et al. [220] merged learning-based EMSs with the rule-based engine start-stop, controlling the working period of the engine and enabling it to work efficiently when required. Xu et al. [199] adopted the ECMS-based EMS and heuristic control to pre-initialize the Q-table as the warm start, and Wu et al. [244] utilized a rule-based mode control to eliminate unreasonable exploration. The above are all auxiliary improvements to the RL agent in the control process.

Then, RL is regarded as a controller for key parameters in traditional EMSs. It involves selecting the co-state for PMP-based EMSs and the EF for ECMS-based EMSs to promote adaptability in stochastic environments. Guo et al. [182], Lee et al. [193], and Hu et al. [209, 210] made main contributions similar to the above idea. Various problems in the simulation have been pointed out. Hu et al. [209] identified several main challenges like deployment inefficiency, safety constraints, and the gap between virtual simulation and the real world, and they are incorporating data from both real and simulated environments to guide RL agents.

In addition, the specific parameters and settings that affect the training process are analyzed. Xu et al. [223] discuss the impact of introducing noise in action and parameter spaces. Other scholars explore novel optimizers like AMSgrad [181] or delve into hyperparameters [190] such as discretization, and experience pool. Wang et al. [243] provide a comparison of 13 RL-based EMSs, analyzing various aspects like reward functions, computational efficiency, and convergence.

Finally, the safety of RL has received significant attention. During the training, RL agents learn the optimal strategy by trial and error while balancing the exploration-exploitation. Empirical evidence suggests that RL overlooks the dynamic of powertrains when generating actions, resulting in abrupt changes in actions. To address this problem, measures such as the penalty term for unreasonable actions [196], designing the coach mechanism to ensure training safety [202], and the rule-based controller to eliminate unreasonable distribution have been employed to constrain control actions [244]. Zhou et al. [204] and Hu et al. [210, 231] are taking a heuristic rule to eliminate irrational allocation and ensure safe exploration. Wang et al. [221] adopt the action masking technology to prevent unreasonable actions. Therefore, it is crucial to give enough attention to the safety of RL to ensure their practical deployment, as achievements realized at the simulation level may not effectively translate into real-world environments.

3.2.2 Powertrain Innovation

Powertrain innovation means promoting the diversification of powertrains and the realization of modeling schemes. This goes beyond the traditional focus on fuel economy and SOC, and the target aims at achieving multi-objective optimization, such as efficiency, temperature, and lifespan.

Firstly, as current research progresses, literature reflects significant diversity in terms of hybrid power systems, such as EVs with hybrid battery systems (high power battery and high energy battery) or supercapacitors, FCVs with fuel cells and batteries, and the three-energy system with fuel cells, batteries, and supercapacitors. Therefore, power distribution can also appear in EVs and FCVs, which means that EMSs are not only suitable for gasoline-electric hybrid systems. As to some special-purpose vehicles, some also regard them as targets, such as the rail transit adopted by Yang et al. [255], hybrid construction vehicles (HCVs) targeted by Zhang et al. [272], and electric-hydraulic HEVs targeted by Zhang et al [293, 294]. Deng et al. [258] proposed the RL-based EMS that minimizes hydrogen consumption and fuel cell aging costs for the unique fuel cell railway vehicle. In addition, for many researchers from the Beijing Institute of Technology, HETVs from special vehicles and series/parallel HEVs from public transportation are studied, and the difficulty of control is also significantly increased [280].

Another item lies in focusing on temperature and lifespan. Many efforts have been dedicated to alleviating degradation and extending life through the utilization of various models. When the capacity decays to 80% of the initial capacity, the battery is treated as scrapped. Li et al. [252] built equivalent circuit models, electro-thermal models, and aging models for hybrid battery systems equipped with high-energy and high-power batteries. Zhang et al. [292] focused on the lithium-plating suppressed effect and designed the hybrid particle swarm optimization to complete the parameter identification. Haskara et al. [262] and Deng et al. [279] took temperature as the main goal and realized the temperature management of the cabin by adding heating ventilation air conditioning. Wu et al. [253] introduced the over-temperature and multi-stress-driven degradation costs.

Next, some scholars merged their expertise from unique domains and introduced specialized models, which made the modeling method closer to actual components and reflected many effects. Zhang et al. [256, 271] performed research on the dedicated dual-mode combustion engine with the spark ignition (SI) and homogeneous charge compression ignition (HCCI) modes. Wang et al. [267] took a waste heat recovery system based on the organic Rankine cycle. Hong et al. [281] and Zhang et al. [294] completed a training process of power distribution and mode switching strategy by using the RL for electro-hydraulic hybrid power systems.

Finally, some scholars make partial contributions based on improving the dynamic model, which is also the essential direction that is currently lacking in the EMS. Han et al. [280] added the lateral dynamics of the vehicle and introduced the steering resistance into the design of the EMS, resulting in the vehicle model getting rid of the status of just focusing on the longitudinal dynamics in the past. In this regard, there is still a lot of work to be done. For a real car, an ideal strategy, the high-fidelity dynamics model, an experienced driver, and a smooth driving environment are all factors to focus on.

3.2.3 Environmental Innovation

Environmental innovation represents the advancement of EMSs, involving the integration of technologies from more fields to enhance eco-driving. The development of autopilot and communication paves the way for energy-saving control for intelligent connected HEVs (ICHEVs) [340].

First, SOC planning and velocity prediction are primarily revolved. By using historical data or so-called connected information such as vehicle-to-vehicle (V2V) or vehicle-to-infrastructure (V2I), to gain insights into the environment, the future short-term SOC and velocity trajectories could be planned. For SOC planning, there are local SOC trajectories designed by Guo et al. [295] and the space-domain-indexed SOC trajectory obtained by Li et al. [296] through a history of cumulative trip information. Similarly, Zhang et al. [301] are trying to employ GPS to complete the global planning of the SOC. Another item is the short-term prediction of speed, which not only allows RL to grasp future information but also researchers to integrate RL with the MPC framework. For speed prediction, Chen et al. [299], Yang et al. [322], and Wang et al. [334] adopted the multi-step Markov chain as predictors, and Liu et al. [329] and Kim et al. [317] utilized LSTM as the predictive tool. Studies have also demonstrated the advantages of the LSTM in velocity prediction, ensuring both accuracy and efficiency.

Additionally, the hierarchical structure under connected environments starts to be focused on, and more factors like driving conditions and driving styles reflecting randomness and personalized influences, are usually taken into account. Zhang et al. [302] researched eco-driving with route planning in the environment and energy management in the system. Li et al. [307] assumed that DDPG is used in the connected traffic environment to realize the reference speed planning in the car-following scene, and the A-ECMS is utilized for energy management. Peng et al. [331, 332] and Zhang et al. [338] both aimed at eco-driving and combined adaptive cruise control (ACC) with EMSs based on RL to achieve co-optimization in terms of velocity and power distribution. Moreover, in the car-following scenario, maintaining a safe following distance and maintaining driving comfort has also become one of the main goals.

Another area is the construction of featured driving cycles. While most EMSs use standard driving cycles, the features in real-world scenarios, influenced by many factors such as traffic signals, traffic flow, pavement properties, and weather, are overlooked. Therefore, research has begun constructing featured driving cycles based on real data, using techniques such as principal component analysis (PCA) and K-means, which provide a realistic and intuitive reflection of velocity. He et al. [304] constructed the traffic environment containing information on surrounding vehicles and signal lights in the SUMO. Chang et al. [310], Tang et al. [320], and Huang et al. [327] adopted PCA and clustering algorithms to form the featured driving cycle. For the ramp scenario, Lin et al. [318] proposed a DDPG-based merging controller. Yan et al. [321] proposed DRL-based launch control to select the appropriate start time for reducing frequent starting and stopping through the traffic intersection. Moreover, Chen et al. [324] employed a traffic-in-the-loop simulator under various urban scenarios.

Next, more AI and ML technologies have been integrated. For instance, object detection algorithms, you only look once (YOLO), are utilized to identify traffic signals and estimate traffic flow according to the number of surrounding cars by Wang et al. [309], and Tang et al. [319] employ the YOLO to detect the leading car and measure the following distance in the car-following scene. In addition, considering the impact of different road surfaces on driving safety, Chen et al. [311] trained the VGG16 neural network to identify and estimate the optimal slip rate for safe braking. Chen et al. [323] also built a lane-level map through the route and geographic data from Google Maps and Google Earth and adopted multiple DRL agents to achieve integrated control of the ICHEV. Moreover, driving condition recognition is often mentioned, and learning vector quantization (LVQ)-based recognition is employed by Chang et al. [310], Fang et al. [312], and Liu et al. [328], while Yang et al. [322] employees probabilistic neural networks for pattern recognition.

Finally, MARL algorithms and cloud and edge computing platforms have emerged as the burgeoning direction. Wang et al. [335] used independent SAC belonging to the MARL to research eco-driving and EMSs. Peng et al. [331] proposed a similar idea and used MARL to achieve the ACC and EMS tasks for eco-driving. Furthermore, Both Hu et al. [306] and Li et al. [308] proposed a training concept of cloud platforms and edge computing, which will be an inevitable method in the future. For a generalized strategy, large-scale computing devices on cloud platforms can satisfy the requirements for speed and computing power, and for personalized strategies, edge devices assigned to individuals, such as NVIDIA Jetson, are ideal training machines.

3.3 Discussion on RL-Based EMSs

Figure 6 summarizes the majority of the research contributions, forming the most intuitive expression, which is beneficial for researchers to quickly grasp the mainstream.

However, the enhancement of research popularity and the increase in the number of literature could only represent the positive aspect of the development of RL-based EMSs, but there are also some difficulties worth discussing. Next, the discussion on RL-based EMSs for hybrid power systems will also be carried out from three aspects: algorithm, data, and computing power.

-

(1)

Algorithm: In terms of algorithm improvements, TD3, SAC, and MARL are currently the most popular modules. They are committed to refining the training process of RL by enhancing various improvements to networks, amplifying both exploration and exploitation, and expanding the scale of training modes. Meanwhile, targeting online scenarios, the on-policy-based PPO that does not rely on experience pools has also achieved significant accomplishments. In fact, if we liken RL agents to students, researchers act as teachers responsible for educating neural networks. Currently, most literature focuses on gradually improving training schemes in offline simulation and overcoming inherent flaws. This is like saying that teachers should designate different plans for different teaching contents in daily classes, and they should match the abilities and personalities of different students to achieve better guidance. Therefore, for the development of RL-based EMSs, several challenges should be encountered:

-

a)

Selecting the appropriate algorithm for different tasks.

-

b)

Adjusting neural network structures, state spaces, action spaces, reward functions, and hyperparameters.

-

c)

Verifying the generalization, safety, and robustness of trained agents in offline scenarios.

-

d)

Bridging the gap between simulation environments and the real world during offline training.

-

e)

Addressing the limitation of depending solely on offline simulation for modeling and training, the research aims to accomplish the ongoing updating of RL-based EMSs in the complex real-world while ensuring control safety.

-

f)

The most debated topic in the AI community revolves around the necessity for trained models to comply with human morals and law. Otherwise, it may result in the emergence of "Terminators" rooted in silicon-based life forms as agents evolve.

Nowadays, progress is focused on addressing stages such as generalization and safety, online learning, and handling gaps. If RL is going to be deployed on real HEVs, there is still much work to be finished.

-

a)

-

(2)

Data: As mentioned earlier, RL is one of the subsets of ML, distinct from DL in that it does not heavily manipulate labeling. It relies solely on a defined reward to assess the quality of outputs relative to inputs. Therefore, both DL and RL as sample-driven forms strive to fit complex and abstract relationships between all inputs and outputs. Regarding RL-based EMSs, the most controversial aspects lie in the degree of modeling of HEVs in offline training environments and the effectiveness of simulating driving conditions. Similar to autonomous driving, the environment in which hybrid power systems operate is complex, dynamic, and subject to various influencing factors such as temperature, aging, wear and tear, and potential accidents. Thus, if RL agents are to be trained for HEVs or autonomous driving, the dynamic nature of the environment and the "long tail" scenarios that cannot be exhaustively traversed pose significant challenges in training data collection. Currently, researchers from the University of Zurich have for the first time applied DRL to real unmanned aerial vehicles [341] and achieved championship-level effect in drone races against humans. They utilized residual models of dynamics to compensate for the samples in the simulation environment. However, it is noted that trained DRL agents fail when faced with different lighting or collisions leading to drone crashes, indicating a lack of robustness comparable to human drivers.

-

(3)

Computing power: Although MathWorks has released a toolkit for RL, Python-based frameworks like TensorFlow and PyTorch remain the primary modeling environments for DRL agents. By operating in the form of tensor on GPUs, training efficiency can be significantly enhanced. In practice, for RL-based EMSs, the demand for GPU computing power is not particularly evident, as the main architecture typically consists of fully connected networks, and the input state is represented as tensor-form data. Instead, the reliance is more on Simulink-based powertrains and rendered 3D scenes of driving environments like CARLA and NVIDIA Driven sim. However, achieving end-to-end energy-saving autonomous driving with vehicle visualization will be a challenge for RL. Drawing from developments in robotics, training multiple agents in a 3D training environment will require substantial computing power. The NVIDIA Omniverse platform and the NVIDIA Isaac Sim provide tools for robot simulation and data generation, offering realistic and physically accurate virtual environments for developing, testing, and managing robots. Additionally, creating physically accurate large-scale simulations will enable the development of real-world digital twins, necessitating extensive support, such as NVIDIA OVX to accelerate AI-enabled workloads.

Finally, as stated in the tenets of the two AI teams, Deep Mind, and OpenAI: "Solve intelligence. Use it to make the world a better place" and DRL is the essential key that opens the door to the future era of AI.

4 The Future of Autonomous Intelligent HEVs

The advancement of autonomous driving and RL algorithms presents an enticing opportunity for developing autonomous intelligent HEVs, which may be named the "Alpha HEV", and we have forecasted the dependable technical strategy to attain complete control through RL.

Firstly, it completely gets rid of the backward simulation, the crude method of calculating the demand power and the dynamic, and easy models of engines and batteries. Not only to strengthen the modeling of each component, such as the engine, motor, battery, gearbox, clutch, shaft, brake, etc. but also to improve the vehicle model as a whole. Incorporating a forward simulation that includes a driver, the vehicle body should also be equipped with more degrees of freedom, and essential parts such as suspensions, tires, and body must be included. These enhancements enable the vehicle to respond realistically to external environments and internal systems, resulting in accurate and reliable simulations. Furthermore, the modeling method of road surfaces becomes necessary. Beginning with basic features like slope, curvature, and road signs, more advanced factors should be involved like road materials, road aging, and dynamic variations influenced by weather. These elements not only impact energy-efficient driving but also play a key role in safety, comfort, and other aspects, as depicted in Figure 7.

Then, achieving intelligent control through RL requires a fusion of multi-modal information. Commands in Figure 8 for the car encompass a wide range of functionalities, including acceleration, braking, steering, and managing the engine and gearbox within a powertrain. The intricate control facilitates efficient driving and the integration of various components for better performance. Drawing inspiration from an analogy of DQN in the Atari 2600, HEVs can also benefit from visual perception and high-definition maps from autopilot systems. This involves adding vehicle vision to RL agents, allowing them to navigate the path forward. Relying on vehicle vision and HD maps is reliable for perceiving surrounding vehicles and obtaining path information, and the ultimate goal is to achieve integrated control by using mult-agents, effectively allowing RL to fully take over all the controlled components.

Finally, there are more strict requirements for perception, decision-making, planning [342], software calibration, and hardware computing power. Taking inspiration from the Full Self-Driving (FSD) of Tesla, a three-dimensional perception space is constructed from real-time images captured by eight cameras, allowing for a comprehensive understanding of the surroundings in Figure 9, and this perception space is extended into the temporal dimension to ensure accurate perception of temporarily obscured objects. Then, a path is determined and planned, and the vehicle is controlled to track the route while the powertrain is managed at the same time. As an even more ambitious idea, a large DRL agent that acts as both a driver and an engineer is trained to handle all tasks solely from real-time images, and the perception and decision-making about autonomous driving will become cognition about the driving environment and passenger needs. While this notion presents significant challenges, it holds the potential to revolutionize autonomous driving. In conclusion, the deployment of RL agents in the "Alpha HEV" hinges on end-to-end autopilot functionality, resilient perception systems, and sophisticated decision-making algorithms, requiring collaboration among industry leaders to achieve safe and energy-saving autopilot.

5 Conclusions

This paper provides a state-of-the-art survey and review of the status in the field of RL-based EMSs for hybrid power systems. Firstly, it begins by tracing the development history of DL, RL, and DRL and highlighting many milestones and famous scholars. As the painter, we very much welcome and thank all subsequent scholars for their corrections and more comprehensive additions to this timeline in Figure 1. Then, the focus shifts to shifts to RL-based EMSs, where a total of 266 papers have been collected as of July 21, 2023. The detailed tables summarizing all of the content have been uploaded at https://github.com/KaysenC/Reinforcement-Learning-based-Energy-Management-for-Hybrid-Power-Systems based on scholars, years, target powertrains, algorithms, and contributions. Moreover, statistical information is presented to illustrate the annual growth of research papers, providing valuable insights into the evolving interest in the field. Then, a comprehensive review of the current status is completed, with a novel emphasis on algorithm innovation, powertrain innovation, and environmental innovation. At the same time, difficulties that may arise in the subsequent development stages of RL-based EMSs are discussed from the aspects of algorithms, data, and computing power.

The ultimate goal is named "Alpha HEV," an autonomous intelligent HEV, and three main directions are highlighted: enhancing modeling, full takeover by DRL, and cognitive-oriented energy-saving autonomous driving.

In all, this paper summarizes the latest research status and presents the promising outlook for DRL-based HEVs. The pursuit of autonomous intelligent HEVs holds great potential to revolutionize the automotive industry, leading to efficient and environmentally friendly vehicles.

Data availability

The data used in this study are described in detail within this manuscript and are available upon request in electronic format. The datasets are referenced within the text and corresponding citations are provided for reader access. Readers are encouraged to utilize these data for further research and analysis within reasonable bounds, while maintaining appropriate citation and data usage standards. (https://github.com/KaysenC/Reinforcement-Learningbased-Energy-Management-for-Hybrid-Power-Systems) For further inquiries regarding data availability or access to datasets, please contact the authors.

Abbreviations

- A3C:

-

Asynchronous advantage actor-critic

- AC:

-

Actor-critic

- ACC:

-

Adaptive cruise control

- ADVISOR:

-

Advanced vehicle simulation

- AI:

-

Artificial intelligence

- BP:

-

Back propagation

- BSFC:

-

Brake-specific fuel consumption curve

- CNN:

-

Convolutional neural network

- CPU:

-

Central processing unit

- CV:

-

Computer vision

- DDPG:

-

Deep deterministic policy gradient

- DDQN:

-

Double DQN

- DL:

-

Deep learning

- DP:

-

Dynamic programming

- DQL:

-

Deep Q-learning

- DQN:

-

Deep Q-network

- DRL:

-

Deep reinforcement learning

- DRQN:

-

Deep recurrent Q-network

- ECMS:

-

Equivalent consumption minimum strategy

- EF:

-

Equivalent factor

- EMS:

-

Energy management strategy

- ERDEV:

-

Extended-range delivery electric vehicles

- FCV:

-

Fuel cell vehicle

- FSD:

-

Full self-driving

- GAN:

-

Generative adversarial network

- GPS:

-

Global positioning system

- GPU:

-

Graphics processing unit

- GRU:

-

Gated recurrent unit

- GT:

-

Gran turismo

- HCCI:

-

Homogeneous charge compression ignition

- HEB:

-

Hybrid electric bus

- HETV:

-

Hybrid electric tracked vehicle

- HEV:

-

Hybrid electric vehicle

- HIL:

-

Hardware-in-the-loop

- ICE:

-

Internal combustion engine

- ICHEV:

-

Intelligent connected HEV

- ILSVRC:

-

ImageNet large-scale visual recognition challenge

- IMN:

-

Induced matrix norm

- IRL:

-

Inverse RL

- KL:

-

Kullback-Leibler

- LSTM:

-

Long short-term memory

- LVQ:

-

Learning vector quantization

- MADDPG:

-

Multi-agent deep deterministic policy gradient

- MARL:

-

Multi-agent reinforcement learning

- MC:

-

Monte Carlo

- MCST:

-

Monte Carlo Search Tree

- ML:

-

Machine learning

- MPC:

-

Model predictive control

- NLP:

-

Natural language processing

- NML:

-

Neural language model

- PCA:

-

Principal component analysis

- PEV:

-

Pure electric vehicle

- PHEV:

-

Plug-in hybrid electric vehicle

- PMP:

-

Pontryagin’s minimum principle

- PPO:

-

Proximal policy optimization

- PRE:

-

Prioritized experience replay

- RL:

-

Reinforcement learning

- RNN:

-

Recurrent neural network

- SAC:

-

Soft actor-critic

- SI:

-

Spark ignition

- TD:

-

Temporal difference

- TD3:

-

Twin delayed deep deterministic policy gradient

- TL:

-

Transfer learning

- TPM:

-

Transition probability matrix

- TRPO:

-

Trust region policy optimization

- V2I:

-

Vehicle-to-infrastructure

- V2V:

-

Vehicle-to-vehicle

- YOLO:

-

You only look once

References

Z Liu, H Hao, X Cheng, et al. Critical issues of energy efficient and new energy vehicles development in China. Energy Policy, 2018, 115: 92-97.

H He, F Sun, Z Wang, et al. China’s battery electric vehicles lead the world: Achievements in technology system architecture and technological breakthroughs. Green Energy and Intelligent Transportation, 2022: 100020.

X Zhao, L Wang, Y Zhou, et al. Energy management strategies for fuel cell hybrid electric vehicles: Classification, comparison, and outlook. Energy Conversion and Management, 2022, 270: 116179.

Z Li, A Khajepour, J Song. A comprehensive review of the key technologies for pure electric vehicles. Energy, 2019, 182: 824-839.

M F M Sabri, K A Danapalasingam, M F Rahmat. A review on hybrid electric vehicles architecture and energy management strategies. Renewable and Sustainable Energy Reviews, 2016, 53: 1433-1442.

D D Tran, M Vafaeipour, Baghdadi M El, et al. Thorough state-of-the-art analysis of electric and hybrid vehicle powertrains: Topologies and integrated energy management strategies. Renewable and Sustainable Energy Reviews, 2020, 119: 109596.

Y Cao, M Yao, X Sun. An overview of modelling and energy management strategies for hybrid electric vehicles. Applied Sciences, 2023, 13(10): 5947.

H Pei, X Hu, Y Yang, et al. Designing multi-mode power split hybrid electric vehicles using the hierarchical topological graph theory. IEEE Transactions on Vehicular Technology, 2020, 69(7): 7159-7171.

X Hu, J Han, X Tang, et al. Powertrain design and control in electrified vehicles: A critical review. IEEE Transactions on Transportation Electrification, 2021, 7(3): 1990-2009.

B HomChaudhuri, R Lin, P Pisu. Hierarchical control strategies for energy management of connected hybrid electric vehicles in urban roads. Transportation Research Part C: Emerging Technologies, 2016, 62: 70-86.

M A Hannan, F A Azidin, A Mohamed. Hybrid electric vehicles and their challenges: A review. Renewable and Sustainable Energy Reviews, 2014, 29: 135-150.

A S Mohammed, S M Atnaw, A O Salau, et al. Review of optimal sizing and power management strategies for fuel cell/battery/super capacitor hybrid electric vehicles. Energy Reports, 2023, 9: 2213-2228.

J Peng, H He, R Xiong. Rule based energy management strategy for a series–parallel plug-in hybrid electric bus optimized by dynamic programming. Applied Energy, 2017, 185: 1633-1643.

S Zhang, X Hu, S Xie, et al. Adaptively coordinated optimization of battery aging and energy management in plug-in hybrid electric buses. Applied Energy, 2019, 256: 113891.

H Li, A Ravey, A N’Diaye, et al. Online adaptive equivalent consumption minimization strategy for fuel cell hybrid electric vehicle considering power sources degradation. Energy Conversion and Management, 2019, 192: 133-149.

F Zhang, X Hu, R Langari, et al. Energy management strategies of connected HEVs and PHEVs: Recent progress and outlook. Progress in Energy and Combustion Science, 2019, 73: 235-256.

X Hu, T Liu, X Qi, et al. Reinforcement learning for hybrid and plug-in hybrid electric vehicle energy management: Recent advances and prospects. IEEE Industrial Electronics Magazine, 2019, 13(3): 16-25.

R Ostadian, J Ramoul, A Biswas, et al. Intelligent energy management systems for electrified vehicles: Current status, challenges, and emerging trends. IEEE Open Journal of Vehicular Technology, 2020, 1: 279-295.

Q Feiyan, L Weimin. A review of machine learning on energy management strategy for hybrid electric vehicles. 2021 6th Asia Conference on Power and Electrical Engineering (ACPEE), 2021: 315–319.

T Liu, W Tan, X Tang, et al. Driving conditions-driven energy management strategies for hybrid electric vehicles: A review. Renewable and Sustainable Energy Reviews, 2021, 151: 111521.

C Song, K Kim, D Sung, et al. A review of optimal energy management strategies using machine learning techniques for hybrid electric vehicles. International Journal of Automotive Technology, 2021, 22: 1437-1452.

A H Ganesh, B Xu. A review of reinforcement learning based energy management systems for electrified powertrains: Progress, challenge, and potential solution. Renewable and Sustainable Energy Reviews, 2022, 154: 111833.

R Venkatasatish, C Dhanamjayulu. Reinforcement learning based energy management systems and hydrogen refuelling stations for fuel cell electric vehicles: An overview. International Journal of Hydrogen Energy, 2022, 47(64): 27646-27670.

M Al-Saadi, M Al-Greer, M Short. Reinforcement learning-based intelligent control strategies for optimal power management in advanced power distribution systems: A survey. Energies, 2023, 16(4): 1608.

J Gan, S Li, C Wei, et al. Intelligent learning algorithm and intelligent transportation-based energy management strategies for hybrid electric vehicles: A review. IEEE Transactions on Intelligent Transportation Systems, 2023.

D Qiu, Y Wang, W Hua, et al. Reinforcement learning for electric vehicle applications in power systems: A critical review. Renewable and Sustainable Energy Reviews, 2023, 173: 113052.

D Xu, C Zheng, Y Cui, et al. Recent progress in learning algorithms applied in energy management of hybrid vehicles: A comprehensive review. International Journal of Precision Engineering and Manufacturing-Green Technology, 2023, 10(1): 245-267.

NVIDIA. DGX Platform. Available: https://www.nvidia.com/en-us/data-center/dgx-platform/.

Y LeCun, Y Bengio, G Hinton. Deep learning. Nature, 2015, 521(7553): 436-444.

W S McCulloch, W Pitts. A logical calculus of the ideas immanent in nervous activity. The Bulletin of Mathematical Biophysics, 1943, 5: 115-133.

F Rosenblatt. The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review, 1958, 65(6): 386.

J J Hopfield. Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences, 1982, 79(8): 2554-2558.

D E Rumelhart, G E Hinton, R J Williams. Learning representations by back-propagating errors. Nature, 1986, 323(6088): 533-536.

J L Elman. Finding structure in time. Cognitive Science, 1990, 14(2): 179-211.

Y LeCun, L Bottou, Y Bengio, et al. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 1998, 86(11): 2278-2324.

S Hochreiter, J Schmidhuber. Long short-term memory. Neural Computation, 1997, 9(8): 1735-1780.

Y Bengio, R Ducharme, P Vincent, et al. A Neural Probabilistic Language Model. Journal of Machine Learning Research, 2003, 3: 1137-1155.

I Goodfellow, J Pouget-Abadie, M Mirza, et al. Generative adversarial networks. Communications of the ACM, 2020, 63(11): 139-144.

K Cho, Merriënboer B Van, D Bahdanau, et al. On the properties of neural machine translation: Encoder-decoder approaches. 2014. arXiv preprint https://arxiv.org/abs/1409.1259

A Vaswani, N Shazeer, N Parmar, et al. Attention is All You Need. Advances in Neural Information Processing, 2017, 30: 5998–6008.

J Deng, W Dong, R Socher, et al. Imagenet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009: 248-255.

A Krizhevsky, I Sutskever, G E Hinton. ImageNet classification with deep convolutional neural networks. Communications of the ACM, 2017, 60(6): 84-90.

M D Zeiler, R Fergus. Visualizing and understanding convolutional networks. Computer Vision–ECCV 2014: 13th European Conference, 2014: 818-833.

C Szegedy, W Liu, Y Jia, et al. Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015: 1–9. https://doi.org/10.48550/arXiv.1409.4842.

K Simonyan, A Zisserman. Very deep convolutional networks for large-scale image recognition. 2014. arXiv preprint https://arxiv.org/abs/1409.1556

K He, X Zhang, S Ren, et al. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016: 770-778.

K Arulkumaran, M P Deisenroth, M Brundage, et al. Deep reinforcement learning: A brief survey. IEEE Signal Processing Magazine, 2017, 34(6): 26-38.

R Bellman. The theory of dynamic programming. Bulletin of the American Mathematical Society, 1954, 60(6): 503-515.

N Metropolis, S Ulam. The monte carlo method. Journal of the American Statistical Association, 1949, 44(247): 335-341.

R J Williams. Reinforcement-learning connectionist systems (Technical Report NU-CCS-87–3). Boston, MA: Northeastern University, College of Computer Science, 1987.

R S Sutton. Learning to predict by the methods of temporal differences. Machine Learning, 1988, 3: 9-44.

G ARummery, M Niranjan. On-line Q-learning using connectionist systems. Cambridge, 1994.

C J C H Watkins. Learning from delayed rewards. Dissertation, Cambridge University, 1989.

C J C H Watkins, P Dayan. Q-learning. Machine Learning, 1992, 8: 279-292.

L J Lin. Reinforcement learning for robots using neural networks. Carnegie Mellon University, 1992.

L Baird. Residual algorithms: Reinforcement learning with function approximation. Machine Learning Proceedings 1995, 1995: 30-37.

J Tsitsiklis, Roy B Van. Analysis of temporal-diffference learning with function approximation. Advances in Neural Information Processing Systems, 1996, 9.

R S Sutton, D McAllester, S Singh, et al. Policy gradient methods for reinforcement learning with function approximation. Advances in Neural Information Processing Systems, 1999, 12.

A Y Ng, S Russell. Algorithms for inverse reinforcement learning. ICML, 2000, 1: 2.

P Abbeel, A Y Ng. Apprenticeship learning via inverse reinforcement learning. Proceedings of the Twenty-First International Conference on Machine Learning, 2004:1, https://doi.org/10.1145/1015330.1015430.

R Coulom. Efficient selectivity and backup operators in Monte-Carlo tree search. International Conference on Computers and Games, 2006: 72-83.

V Mnih, K Kavukcuoglu, D Silver, et al. Playing atari with deep reinforcement learning. 2013. https://arxiv.org/abs/1312.5602

V Mnih, K Kavukcuoglu, D Silver, et al. Human-level control through deep reinforcement learning. Nature, 2015, 518(7540): 529-533.

T P Lillicrap, J J Hunt, A Pritzel, et al. Continuous control with deep reinforcement learning. 2015. arXiv preprint https://arxiv.org/abs/1509.02971

T Schaul, J Quan, I Antonoglou, et al. Prioritized experience replay. 2015. arXiv preprint https://arxiv.org/abs/1511.05952

J Schulman, S Levine, P Abbeel, et al. Trust region policy optimization. International Conference on Machine Learning, 2015: 1889-1897.

M Hausknecht, P Stone. Deep recurrent q-learning for partially observable mdps. 2015 AAAI Fall Symposium Series, 2015.

D Silver, A Huang, C J Maddison, et al. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587): 484-489.

D Silver, J Schrittwieser, K Simonyan, et al. Mastering the game of go without human knowledge. Nature, 2017, 550(7676): 354-359.

D Silver, T Hubert, J Schrittwieser, et al. A general reinforcement learning algorithm that masters chess, shogi, and go through self-play. Science, 2018, 362(6419): 1140-1144.

Hasselt H Van, A Guez, D Silver. Deep reinforcement learning with double q-learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2016, 30(1), https://doi.org/10.1609/aaai.v30i1.10295.

Z Wang, T Schaul, M Hessel, et al. Dueling network architectures for deep reinforcement learning. International Conference on Machine Learning, 2016: 1995-2003.

V Mnih, A P Badia, M Mirza, et al. Asynchronous methods for deep reinforcement learning. International Conference on Machine Learning, 2016: 1928-1937.

J Schulman, F Wolski, P Dhariwal, et al. Proximal policy optimization algorithms. 2017. arXiv preprint https://arxiv.org/abs/1707.06347

T Haarnoja, A Zhou, P Abbeel, et al. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. International Conference on Machine Learning, 2018: 1861-1870.

S Fujimoto, H Hoof, D Meger. Addressing function approximation error in actor-critic methods. International Conference on Machine Learning, 2018: 1587-1596.

M Fortunato, M G Azar, B Piot, et al. Noisy networks for exploration. 2017. arXiv preprint https://arxiv.org/abs/1706.10295

M Hessel, J Modayil, Hasselt H Van, et al. Rainbow: Combining improvements in deep reinforcement learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2018, 32(1), https://doi.org/10.1609/aaai.v32i1.11796.

O Vinyals, I Babuschkin, W M Czarnecki, et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature, 2019, 575(7782): 350-354.

J Schrittwieser, I Antonoglou, T Hubert, et al. Mastering atari, go, chess and shogi by planning with a learned model. Nature, 2020, 588(7839): 604-609.

J Jumper, R Evans, A Pritzel, et al. Highly accurate protein structure prediction with AlphaFold. Nature, 2021, 596(7873): 583-589.

P R Wurman, S Barrett, K Kawamoto, et al. Outracing champion Gran Turismo drivers with deep reinforcement learning. Nature, 2022, 602(7896): 223-228.

J Degrave, F Felici, J Buchli, et al. Magnetic control of tokamak plasmas through deep reinforcement learning. Nature, 2022, 602(7897): 414-419.

Z Cao, K Jiang, W Zhou, et al. Continuous improvement of self-driving cars using dynamic confidence-aware reinforcement learning. Nature Machine Intelligence, 2023, 5(2): 145-158.

S Feng, H Sun, X Yan, et al. Dense reinforcement learning for safety validation of autonomous vehicles. Nature, 2023, 615(7953): 620-627.

R Abdelhedi, A Lahyani, A C Ammari, et al. Reinforcement learning-based power sharing between batteries and supercapacitors in electric vehicles. 2018 IEEE International Conference on Industrial Technology (ICIT), 2018: 2072-2077.

H Chaoui, H Gualous, L Boulon, et al. Deep reinforcement learning energy management system for multiple battery based electric vehicles. 2018 IEEE Vehicle Power and Propulsion Conference (VPPC), 2018: 1-6.

Y Fang, C Song, B Xia, et al. An energy management strategy for hybrid electric bus based on reinforcement learning. The 27th Chinese Control and Decision Conference (2015 CCDC), 2015: 4973-4977.

R C Hsu, S M Chen, W Y Chen, et al. A reinforcement learning based dynamic power management for fuel cell hybrid electric vehicle. 2016 Joint 8th International Conference on Soft Computing and Intelligent Systems (SCIS) and 17th International Symposium on Advanced Intelligent Systems (ISIS), 2016: 460-464.

S A Kouche-Biyouki, S M A Naseri-Javareshk, A Noori, et al. Power management strategy of hybrid vehicles using sarsa method. Electrical Engineering (ICEE), 2018: 946-950.

X Lin, Y Wang, P Bogdan, et al. Reinforcement learning based power management for hybrid electric vehicles. 2014 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), 2014: 33-38.

C Liu, Y L Murphey. Power management for plug-in hybrid electric vehicles using reinforcement learning with trip information. 2014 IEEE Transportation Electrification Conference and Expo (ITEC), 2014: 1-6.

C Liu, Y L Murphey. Analytical greedy control and Q-learning for optimal power management of plug-in hybrid electric vehicles. 2017 IEEE Symposium Series on Computational Intelligence (SSCI), 2017: 1-8.

T Liu, C Yang, C Hu, et al. Reinforcement learning-based predictive control for autonomous electrified vehicles. 2018 IEEE Intelligent Vehicles Symposium (IV), 2018: 185-190.

X Qi, Y Luo, G Wu, et al. Deep reinforcement learning-based vehicle energy efficiency autonomous learning system. 2017 IEEE Intelligent Vehicles Symposium (IV), 2017: 1228-1233.

S Yue, Y Wang, Q Xie, et al. Model-free learning-based online management of hybrid electrical energy storage systems in electric vehicles. IECON 2014-40th Annual Conference of the IEEE Industrial Electronics Society, 2014: 3142-3148.