Abstract

Background

Swine production is economically important and holds a prominent position in the world market. However, it is also among the activities with the greatest impact on water resources. Studies estimate swine manure production in Brazil at 105.6 million m³/year, which yields a piggery wastewater rich in solids, nutrients, heavy metals, and pathogens. Moreover, water consumption for swine production is approximately 15 L/animal/day in southern Brazil, which places an unsustainable demand on water resources. This study therefore assesses the viability of two parallel stabilization reservoirs as a technology for polishing treated piggery wastewater. This technology has been shown to be effective in reducing organic matter, nutrients, and pathogens when treating effluents with low or high organic loading rates. The reservoirs can improve effluent quality with simple operation and minimal energy costs. The technique would add value to the effluent through its reuse for agricultural irrigation. The study was conducted at a farm in the city of Braço do Norte, Santa Catarina, in southern Brazil; this region has one of the highest pig densities in the world, which causes serious environmental problems.

Results

The effluent monitoring program covered operation during both the cold seasons (period I) and the warm seasons (period II). The performance of the reservoirs improved continuously during the cold seasons, with the removal efficiencies of total biochemical oxygen demand (BOD5), total Kjeldahl nitrogen (TKN), and Escherichia coli reaching 52%, 64%, and 99.9%, respectively, with an effluent concentration of 144 mg·L−1 for BOD5 and 256 mg·L−1 for TKN. During the warm seasons, the BOD5, TKN, and E. coli removal efficiencies were 85%, 77%, and 99.9%, respectively, with an effluent concentration of 52 mg·L−1 for BOD5 and 136 mg·L−1 for TKN, which indicates that seasonal factors greatly influence the removal of these variables. E. coli was no longer detected in the stabilization reservoirs by the end of either period.

Conclusions

The results of this study confirmed that the stabilization reservoirs are effective experimental units for improving the quality of the treated effluent. A seasonal influence was evident. The results demonstrated that the effluent is a good alternative for unrestricted irrigation. The microbiological quality complies with the World Health Organization recommendations. The reuse of this treated effluent can reduce the impacts of pig manure on the environment and water resources.

Introduction

Freshwater resources and population densities are unevenly distributed worldwide, and water demands already exceed the available supply in many regions of the world. Agricultural irrigation is the largest single use of water (Food and Agriculture Organization (FAO) 2003). In addition to conventional resources, non-conventional water sources offer complementary supplies that can partially relieve water shortages in regions where renewable water resources are extremely scarce (Oweis et al. 2004) and in regions with frequent drought periods, such as southern Brazil (Quadros and Lourenço 2002).

Wastewaters from urban and agricultural sources have great potential for reuse as sources of water, organic matter, nutrients, and soil conditioning agents. However, the use of wastewater can also have negative effects, such as increased soil salinity, excessive leaching of nutrients and heavy metals, and human health risks from exposure to pathogens (Qadir and Oster 2004). Piggery wastewater contains high concentrations of nitrogen, phosphorus, and organic matter in both soluble and particulate forms. The volume of wastewater produced and its composition mainly depend on specific animal nutrition and farming practices. Direct piggery wastewater discharge can cause eutrophication of water bodies or soil contamination due to nitrate infiltration (Flotats et al. 2009). Brazil's water reuse regulations are not clearly established; the water quality standards in the literature are mostly related to the reuse of effluent from sewage treatment plants, and these standards are based on or supplemented by worldwide recommendations and regulations (Blum 2003). However, agricultural wastewater reuse is a common practice even without specific guidelines.

Farms in Brazil generally use biodigesters and stabilization ponds for the treatment of piggery wastewater because such systems are natural biological treatment processes with a high potential for managing manure. However, the high concentration of algal biomass, nutrients, organic matter, and pathogens in the effluent requires final polishing (Belli Filho et al. 2001). In this context, the development of low-cost technologies that promote the additional removal of these pollutants and allow the reuse of treated effluent is essential for the establishment of sustainable farming practices.

Storing wastewater in reservoirs can improve microbiological quality and significantly reduce the high concentrations of organic matter and nutrients with simple operation and minimal energy costs. These systems are regarded as a key component in the treatment of agricultural wastewater preceding its reuse in irrigation (Friedler et al. 2003). Given these considerations, the aim of this study is to verify the viability of treated piggery wastewater for reuse in agricultural irrigation.

Methods

Experimental site

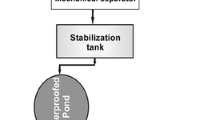

The experiment was conducted at a farm in the city of Braço do Norte, Santa Catarina, in southern Brazil (latitude 28°15′30″ and longitude 49°10′30″), in the region of the country with the highest swine density. The farm had approximately 3,000 full-cycle animals, including 200 breeding sows, all kept in a confined system. The site has rugged topography, which makes disposal on agricultural land difficult. The daily flow rate of produced piggery wastewater was 20 m³·day−1; 15 m³·day−1 was sent to the treatment system, and the remaining 5 m³·day−1 was stored in a settling pond for use in agricultural irrigation as a nutrient source. The farm's treatment plant consisted of a biodigester followed by an anaerobic pond, a facultative pond, and a maturation pond; a rock filter was used for effluent polishing (Figure 1).

Experimental units and operational conditions

The stabilization reservoirs (R1 and R2) used in this research were two identical circular fiberglass tanks (Figure 2A) operated in parallel as a batch system, with the following physical characteristics: a total volume of 10 m³ each, a depth of 2.5 m, a base diameter of 2.1 m, and a surface diameter of 2.4 m. The reservoirs were fed with treated piggery wastewater taken from the maturation pond of the tertiary stabilization ponds. The reservoirs were operated in batch cycles over two distinct periods: period I covered the cold seasons, and period II covered the warm seasons. Samples for monitoring the performance of the reservoirs were taken weekly from R1 and R2 between 10:00 and 11:00 am. The samples were collected from the central point of each reservoir at depths of 0.15, 1.15, and 2.00 m from the liquid surface (Figure 2B) in order to check for stratification of the water column. Stratification of the water column determines the availability of light and nutrients for phytoplankton growth, which affects the removal efficiencies of the reservoirs, and it influences phytoplankton dynamics, including losses by sedimentation. A total of 45 samples (n) were collected from each reservoir during period I; during period II, 66 samples were taken from R1 and 42 samples were taken from R2. The main characteristics of the raw manure and the treated piggery wastewater used in this study are presented in Table 1.
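The stated tank dimensions can be cross-checked with a short calculation. The sketch below assumes each tank is a frustum of a right circular cone; this shape is an assumption, since the text gives only the two diameters and the depth, and the function names are illustrative.

```python
import math

def frustum_volume(d_base, d_top, depth):
    """Volume (m3) of a conical frustum from its two diameters and depth (m)."""
    r_base, r_top = d_base / 2, d_top / 2
    return math.pi * depth / 3 * (r_base**2 + r_base * r_top + r_top**2)

def surface_area(d_top):
    """Liquid surface area (m2) of a circular tank of the given top diameter (m)."""
    return math.pi * (d_top / 2) ** 2

# Dimensions reported for R1 and R2: base 2.1 m, surface 2.4 m, depth 2.5 m.
volume = frustum_volume(2.1, 2.4, 2.5)
area = surface_area(2.4)
print(f"volume = {volume:.2f} m3, surface area = {area:.2f} m2")
```

Under this assumption the geometric volume comes out just under 10 m³ and the surface area close to 4.5 m², in line with the values given in the text.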

Analytical methods

The samples were analyzed for pH, temperature, dissolved oxygen (DO), total chemical and total biochemical oxygen demand (COD and BOD5, respectively), total dissolved solids (TDS), total Kjeldahl nitrogen (TKN), total phosphorus (TP), chlorophyll a, total coliforms, Escherichia coli (E. coli), and the sodium adsorption ratio (SAR). The dissolved oxygen concentration, pH, and temperature inside the reservoirs were measured online with a multiparameter sonde (YSI 6600; YSI Inc., OH, USA). All analyses were conducted according to Standard Methods (American Public Health Association (APHA) 2005). The COD concentration was determined by the closed reflux, colorimetric method (Standard Method (SM) 5220 D). The BOD5 was determined using the manometric method (SM 5210 D), in which the sample was incubated for 5 days on a shaker base at 20 ± 1°C. The TDS were determined using SM 2540 B, in which the samples were centrifuged at 4,000 rpm for 20 min and dried to a constant weight at 105°C. TKN was determined using acid digestion and distillation followed by back titration of the boric acid distillates using 0.02 N sulfuric acid (SM 4500-N org B). Chlorophyll a was determined by the colorimetric method (Nush 1980) with extraction in 80% ethanol. The samples were filtered within 12 h of collection, and the filter was frozen prior to extraction. Total coliform and E. coli analyses were performed using a chromogenic medium (Colilert-IDEXX®; IDEXX Laboratories, Inc., ME, USA). The samples were analyzed using the Quanti-Tray®/2000 (IDEXX Laboratories, Inc.) method and were incubated at 37°C for 24 h. Yellow wells indicated total coliforms, and yellow/fluorescent wells indicated the presence of E. coli. The evaluation of potential risks associated with soil permeability, such as salinity and sodicity, was based on the diagram by the US Salinity Laboratory Staff (Ayers and Westcot 1985) of the relationship between electrical conductivity (EC) and the SAR in the effluent.
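For readers unfamiliar with the SAR, the sketch below shows the conventional definition, with sodium, calcium, and magnesium expressed in meq·L−1. The text does not report the individual ion concentrations, so the input values here are hypothetical placeholders and the helper names are illustrative.

```python
import math

# Equivalent weights (mg per meq): atomic mass divided by ionic charge.
EQUIVALENT_WEIGHT = {"Na": 22.99, "Ca": 20.04, "Mg": 12.15}

def mg_per_l_to_meq_per_l(ion, mg_per_l):
    """Convert an ion concentration from mg/L to meq/L."""
    return mg_per_l / EQUIVALENT_WEIGHT[ion]

def sodium_adsorption_ratio(na_meq, ca_meq, mg_meq):
    """SAR = Na / sqrt((Ca + Mg) / 2), all concentrations in meq/L."""
    return na_meq / math.sqrt((ca_meq + mg_meq) / 2)

# Hypothetical concentrations in mg/L (placeholders, not measurements from this study).
na = mg_per_l_to_meq_per_l("Na", 230.0)
ca = mg_per_l_to_meq_per_l("Ca", 40.0)
mg = mg_per_l_to_meq_per_l("Mg", 24.0)
print(f"SAR = {sodium_adsorption_ratio(na, ca, mg):.1f}")
```

The resulting SAR is then read against the EC of the same sample on the salinity-sodicity diagram.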

Statistical analysis

To check for stratification along the depth of the reservoirs, an analysis of variance (ANOVA) was performed with STATISTICA® 7.0 software. The Tukey test at a 5% significance level was used to compare the mean values of the variables among the three sampling depths.
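As an illustration of the comparison among depths, the sketch below computes the one-way ANOVA F statistic by hand. It is not the STATISTICA® procedure used in the study, and the readings are made-up placeholder numbers rather than measurements; the function name is illustrative.

```python
def one_way_anova_f(groups):
    """Return (F statistic, df between groups, df within groups) for one-way ANOVA."""
    n_total = sum(len(g) for g in groups)
    k = len(groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Placeholder readings for the three sampling depths (0.15, 1.15, and 2.00 m).
depth_0_15 = [1.0, 2.0, 3.0]
depth_1_15 = [2.0, 3.0, 4.0]
depth_2_00 = [3.0, 4.0, 5.0]
f_stat, df_b, df_w = one_way_anova_f([depth_0_15, depth_1_15, depth_2_00])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

The F statistic is compared with the critical value for the chosen significance level; in practice a statistics package would supply the p-value and the pairwise Tukey comparisons.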

Results and discussion

The statistical analysis showed no significant difference among the values obtained at the three sampling depths (Figure 2B). The results presented in this study are therefore based on the average values of the sampling layers. Despite the large distance between sampling layers (approximately 1.0 m), the small surface area of each reservoir (approximately 4.5 m²) may have contributed to the absence of thermal stratification. In addition, the placement of the reservoirs above ground, rather than buried, may also have contributed to the absence of stratification; the walls of the reservoirs are fully exposed to sunlight, which allows a homogeneous distribution of heat throughout the reservoirs' depth.

In situ measured variables and algal biomass

During the 11 months of monitoring, two distinct periods were clearly differentiated based on seasonal influences: period I included the cold seasons, and period II included the warm seasons. Table 2 shows the dissolved oxygen concentration, pH, and temperature results.

During period I, the temperatures in both reservoirs averaged 17°C, with a decrease from 19°C during the fall season to 16°C during the winter season. Average DO increased during this period from 1.0 to 1.5 mg·L−1. Period II, which included the warm seasons, had temperatures that ranged from 22°C to 27°C (with an average of 25°C). In the early spring, the rising solar radiation intensity resulted in rising temperatures, which allowed for a high rate of photosynthesis. This increase can be verified by the chlorophyll a concentrations (Figure 3), which remained in the range of 200 to 300 μg·L−1 during this period. Afshar and Saadatpour (2009) investigated the eutrophication of deep reservoirs and reported that the concentration of chlorophyll a within a reservoir is highly dependent on the total nutrient load and climatic conditions, such as temperature and solar radiation. Moreover, they obtained results that were similar to those of the present study and reported that as the temperature or total nutrient load drops, the algal growth rate diminishes.

The high rate of photosynthesis verified at the beginning of period II (warm seasons) led to an increase in DO concentrations. This increase was most pronounced at the beginning of period II, when DO concentrations over 3.0 mg·L−1 were recorded, which contributed to the greater efficiency of physical-chemical removal during this period (Figures 4 and 5). The pH values averaged 7.9 ± 0.2 throughout the monitoring period. The reservoirs were found to be an effective buffer unit for maintaining an effluent with a pH of approximately 8.

Physical-chemical removal performance

The average concentrations of total COD, total BOD5, TKN, and TP in the influent entering the reservoirs were 2,340, 280, 700, and 74 mg·L−1 during the cold seasons and 1,800, 403, 540, and 72 mg·L−1 during the warm seasons, respectively. The quality of the effluent was optimal at the end of the warm seasons when the reservoir contents had relatively low concentrations of COD, BOD5, TKN, and TP, and the removal efficiencies of the physical-chemical variables were all at their highest.

Figure 4 shows the concentrations of COD and BOD5 for both reservoirs during the two distinct seasonal periods. During period I, the performance of the reservoirs improved continuously, and after 120 days of storage, the COD removal efficiency for R1 and R2 (Figure 4A) reached 58% and 70%, respectively, with an effluent concentration of 941 mg·L−1 for R1 and 563 mg·L−1 for R2. The main difference between the reservoirs was that R1 was used during fall and winter, while R2 was operated mostly during winter with low temperatures and a less concentrated influent. The results from period II show that during the warm season (Figure 4A), the COD removal efficiency for R1 after 190 days of storage increased to 78%. For R2, after 130 days of storage, the concentration remained in the same range as in period I, with a removal of 66%. During period II, the reservoirs produced an average effluent COD concentration of 479 and 485 mg·L−1 for R1 and R2, respectively. These results match those verified by Barthel et al. (2008), who reported an effluent concentration of 435 mg·L−1 (COD) using maturation ponds to treat piggery wastewater with an organic surface loading rate of 32 kg COD·ha−1·day−1 and a hydraulic retention time of 70 days.

The average BOD5 concentrations (Figure 4B) in the R1 effluent ranged from 131 mg·L−1 in period I to 66 mg·L−1 in period II, which corresponded to removal efficiencies of 52% and 86%, respectively. Effluent concentrations for R2 varied from 144 mg·L−1 in period I to 52 mg·L−1 in period II, which corresponded to efficiencies of 46% and 85%, respectively. The quality of the effluent was optimal at the end of the warm seasons in both units. This result was likely due to the long storage time (190 days for R1), suitable temperature (25°C on average), the nutrient conditions, and higher biomass concentrations (Figure 3) during this period. Costa et al. (2006) studied a maturation pond for treating piggery wastewater with an organic surface loading rate of 35 kg BOD·ha−1·day−1 and observed that the organic matter concentrations decreased to 788 mg·L−1 (COD) and 307 mg·L−1 (BOD5) with removal efficiencies of 24% and 18%, respectively.
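The removal efficiencies quoted in this section follow the usual percentage definition based on influent and effluent concentrations. The sketch below applies it to the period-average influent BOD5 values and the R1 effluent values given in the text; the function name is illustrative, and pairing period averages in this way is an assumption about how the figures relate.

```python
def removal_efficiency(c_in, c_out):
    """Percentage removal between influent and effluent concentrations (same units)."""
    if c_in <= 0:
        raise ValueError("influent concentration must be positive")
    return (c_in - c_out) / c_in * 100.0

# Average influent BOD5: 280 mg/L (cold seasons) and 403 mg/L (warm seasons).
# Average R1 effluent BOD5: 131 mg/L (period I) and 66 mg/L (period II).
bod_cold_r1 = removal_efficiency(280, 131)
bod_warm_r1 = removal_efficiency(403, 66)
print(f"period I: {bod_cold_r1:.1f}%, period II: {bod_warm_r1:.1f}%")
```

The values computed from period averages land within a few percentage points of the reported 52% and 86%; the small differences presumably arise because the reported efficiencies rest on each batch's own initial concentration rather than on the period average.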

Figure 5 shows the concentrations of TKN and TP during the storage periods. The reservoirs differed in their capacity to remove nitrogen and phosphorus, as was observed for organic matter removal. This behavior could be explained by the storage period, which in this study was dependent on the seasonal influence. The temporal variation of TKN concentrations in the reservoirs during the two periods is presented in Figure 5A. These concentrations decreased throughout the storage periods, with an average effluent concentration that was higher during the cold seasons (250 mg·L−1 for R1 and 256 mg·L−1 for R2) than in the warm seasons (136 mg·L−1 for R1 and 121 mg·L−1 for R2). These concentrations corresponded to removal efficiencies of approximately 65% and 63% during period I for R1 and R2, respectively, and 77% and 74% during period II for R1 and R2, respectively. These results are in agreement with those obtained by Costa et al. (2006), who showed a removal efficiency of 68% for TKN, with an effluent concentration of 112 mg·L−1. Barthel et al. (2008) also showed a removal efficiency in the range of 74%, with an effluent concentration of 114 mg·L−1. The primary mechanisms for the removal of nitrogenous nutrients were likely algal assimilation and sedimentation, as the registered pH values (<9.0) would not stimulate the volatilization of ammonium nitrogen. The effluent temperatures were in the range of 20°C to 30°C, with a pH of 8.0, which resulted in percentages of non-ionized ammonia between 3.82% and 7.46%.
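The share of non-ionized ammonia at a given pH and temperature can be estimated from the ammonium dissociation equilibrium. The sketch below uses the widely cited temperature correlation pKa = 0.09018 + 2729.92/T (T in kelvin); the text does not state which expression the authors applied, so this choice is an assumption and the function name is illustrative.

```python
def unionized_ammonia_percent(ph, temp_c):
    """Percentage of total ammonia present as non-ionized NH3."""
    t_kelvin = temp_c + 273.15
    # Temperature-dependent pKa of the NH4+/NH3 pair (assumed correlation).
    pka = 0.09018 + 2729.92 / t_kelvin
    return 100.0 / (1.0 + 10 ** (pka - ph))

# Conditions reported for the effluent: pH 8.0 and 20 to 30 degrees Celsius.
nh3_at_20 = unionized_ammonia_percent(8.0, 20.0)
nh3_at_30 = unionized_ammonia_percent(8.0, 30.0)
print(f"20 C: {nh3_at_20:.2f}%, 30 C: {nh3_at_30:.2f}%")
```

With this correlation the estimate is roughly 3.8% at 20°C and 7.4% at 30°C, close to the range reported above, which supports the point that little ammonia was available for volatilization at pH 8.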

Figure 5B shows TP concentrations for the reservoirs. R1 showed variability in TP concentrations during the cold seasons, with an average of 56 mg·L−1, which resulted in a low removal efficiency of approximately 43%. During the warm seasons, the TP removal efficiency increased (70% for R1 and 68% for R2), and R2 exhibited lower concentrations in the effluent of approximately 13 mg·L−1. According to El Halouani et al. (1993), TP can be removed by assimilation of the biomass (bacterial and algal) and by chemical precipitation, and pH values of greater than 7.6 could result in a removal efficiency of greater than 75% due to the precipitation of calcium phosphate.

Bacterial removal performance

Figure 6 shows the coliform die-off in the reservoirs. Bacterial indicators of fecal contamination were present in high concentrations in the influents, and the reservoirs reduced the density of fecal microorganisms by 90% to 99.9%. Total coliform values decreased gradually to concentrations lower than 10³ MPN·100 mL−1. E. coli was no longer detected in R1 within 60 and 30 days in periods I and II, respectively. R2 showed the same result within 30 days in both periods. According to Keraita et al. (2008), the fecal coliform die-off exhibits an exponential decrease in stabilization ponds, where the rates are higher during the initial days of storage and then decrease during the remainder of the monitoring period. These two phases can be clearly observed in the die-off of E. coli presented in Figures 6A,B, with an exponentially decreasing phase early in the storage period and a slower or stable phase during the rest of the period.

During period I, total coliform concentrations were reduced by two orders of magnitude, and by three orders of magnitude during period II. E. coli removal reached four orders of magnitude in both periods (I and II); these values of bacterial removal are comparable to the range of 3 to 4 log units reported by Macauley et al. (2006). The results of that study, which used chlorine and ozone for the disinfection of piggery wastewater stabilization ponds, showed final coliform concentrations ranging from 10³ to 10⁴ MPN·100 mL−1. The main mechanisms of the coliform die-off were the long storage periods with no effluent input during monitoring, as well as the solar radiation and water temperature, which remained in the same range throughout the depth of the reservoirs. These reservoirs were not buried, and their walls were completely exposed, which promoted homogeneous heat distribution. Cirelli et al. (2009) used similar conditions in a study of E. coli concentrations in a wastewater reservoir. They reported significant correlations between the die-off coefficient and the solar radiation intensity and water temperature. These authors also observed that E. coli removal was faster during batch operations of the reservoirs.
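Bacterial removal is quoted here both as percentages and as orders of magnitude (log units), and the two scales are easy to convert. The sketch below shows the conversion; the counts passed to `log_removal` are hypothetical placeholders, not data from this study, and the function names are illustrative.

```python
import math

def log_removal(n_in, n_out):
    """Log10 reduction between influent and effluent counts (same units)."""
    return math.log10(n_in / n_out)

def percent_from_log(log_units):
    """Percentage removal equivalent to a given number of log units."""
    return (1 - 10 ** (-log_units)) * 100

# Two, three, and four orders of magnitude expressed as percentages.
for units in (2, 3, 4):
    print(f"{units} log units = {percent_from_log(units):.2f}% removal")

# Hypothetical counts in MPN per 100 mL (placeholders only).
example_log = log_removal(1e7, 1e3)
print(f"example reduction: {example_log:.1f} log units")
```

On this scale 90% and 99.9% removal correspond to 1 and 3 log units, while four orders of magnitude correspond to 99.99%.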

Risks in soil salinity-sodicity

Table 3 summarizes the variables relevant to water reuse in irrigation and shows the risks of changes in soil salinity-sodicity. An increase in the soil sodium concentration relative to the soil concentrations of calcium and magnesium (soil sodicity) can seal the soil. This condition, which is associated with an increase in soil salinity, affects the water infiltration rate in the soil (Blum 2003).

The decrease in TDS concentration changed the risk of soil sodicity for R2 during period II. The lowest average values of TDS (1,833 ± 202 and 2,157 ± 225 mg·L−1) were obtained in period II, which resulted in lower EC values; therefore, fewer restrictions on the effluent's reuse were found during the warm seasons. According to Costa et al. (1995), piggery wastewater has a high TDS concentration, which can result in high levels of EC (2,000 to 5,000 μS·cm−1).

The recommendations for agricultural irrigation in Brazil (Bernardo 1995), based on the diagram by the US Salinity Laboratory Staff (Ayers and Westcot 1985), report that an effluent with very high salinity (from 2,250 to 5,000 μS·cm−1) can be used in well-drained soils and with crops that have a high salt tolerance. An effluent with a slight sodicity risk can be used in most soils. However, irrigation using effluent with moderate sodicity risk requires organic soil that has good permeability, and the irrigation reuse of this effluent type should be avoided for fine-textured soils.
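The salinity classes of the US Salinity Laboratory diagram can be expressed as a simple lookup on EC. In the sketch below only the very high class boundary (2,250 μS·cm−1) comes from the text; the 250 and 750 μS·cm−1 boundaries are the conventional limits of that diagram and are stated here as an assumption, and the function name is illustrative.

```python
def salinity_class(ec_us_cm):
    """Salinity hazard class for irrigation water from its EC in microsiemens/cm."""
    if ec_us_cm < 250:       # assumed conventional boundary
        return "low"
    if ec_us_cm < 750:       # assumed conventional boundary
        return "medium"
    if ec_us_cm < 2250:      # boundary of the very high class given in the text
        return "high"
    return "very high"

# EC values quoted in this section (microsiemens/cm).
for ec in (781, 840, 2250, 5000):
    print(ec, salinity_class(ec))
```

An EC of 781 μS·cm−1 falls in the high class under these limits, and values from 2,250 μS·cm−1 upward fall in the very high class, consistent with the ranges cited in this section. The sodicity class depends jointly on SAR and EC and is not covered by this lookup.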

Benevides et al. (2008) studied wastewater stabilization ponds for agricultural irrigation and reported an EC value of 781 μS·cm−1 and an average SAR value of 5.3, indicating a slight risk of soil sodicity and a high risk of soil salinity. Leal et al. (2009), using treated wastewater for sugar cane irrigation in the city of Lins, São Paulo, Brazil, reported a SAR value of 10.9 and an EC of 840 μS·cm−1. These authors found an increase in soil sodicity with continuous use of effluent irrigation. Nevertheless, they obtained good results for sugar cane crop growth and concluded that treated sewage irrigation should not be used to supply 100% of the water for crops.

Comin et al. (2007) evaluated the effects of using piggery wastewater and mineral fertilizer (urea) on the soil quality and crop yields of oat and corn. The authors observed the occurrence of soil acidification with the use of the chemical fertilizer (urea). Moreover, they verified that the crop yield of oats was not influenced by the difference between the types of fertilizers. Additionally, the yield of corn crop that was treated with piggery wastewater irrigation was similar to that of the chemical fertilizer treatment, and both treatments showed a higher corn crop yield than the unfertilized treatment.

Conclusions

The results of this study confirmed that the stabilization reservoirs are experimental units capable of reaching satisfactory removal efficiencies, improving the quality of the treated effluent, and decreasing organic matter, nutrient, and pathogen concentrations. A seasonal influence was evident, and higher removal efficiencies were observed with greater effluent stabilization during the warm seasons.

At the end of the storage periods, the results pointed to the effluent as a good alternative for unrestricted irrigation, which allows farmers and consumers to have free access to the crops. The microbiological quality complies with the WHO (2006) recommendations, which advise that E. coli concentrations should be lower than 10³ MPN·100 mL−1 to present no health risk from direct contact with the effluent. The salinity-sodicity risks allow the effluent to be reused for irrigation, provided that it is not applied continuously.

The reuse of this treated effluent can reduce pig manure impacts on the environment and water resources. Furthermore, this practice promotes the sustainable use of water sources: substituting treated effluent for part of the irrigation water would reduce water consumption, which would be especially valuable during periods of drought.

Authors' information

VFV is a sanitary and environmental engineer and a doctoral student at the Federal University of Santa Catarina, Brazil. RAM is a biologist and a post-doctoral student at the same university. PBF is also a sanitary and environmental engineer, industrial chemistry PhD, an expert in wastewater treatment and atmospheric pollution, and professor and researcher at the Federal University of Santa Catarina. RHRC is a civil engineer, treatment and water quality PhD, expert in treatment and water quality and wastewater treatment, and professor and researcher at the same university.

References

Afshar A, Saadatpour M: Reservoir eutrophication modeling, sensitivity analysis, and assessment: application to Karkheh reservoir, Iran. Environ Eng Sci 2009,26(7):1227–1238.

American Public Health Association (APHA): Standard methods for the examination of water and wastewater. 21st edition. APHA, AWWA (American Water Works Association), and WEF (Water Environment Federation), Washington DC; 2005.

Ayers RS, Westcot DW: Water quality for agriculture. Irrigation and drainage paper number 29. FAO, Rome; 1985.

Barthel L, Oliveira PAV, Costa RHR: Plankton biomass in secondary ponds treating piggery waste. Braz Arch Biol Technol 2008,51(6):1287–1298. 10.1590/S1516-89132008000600025

Belli Filho P, Castilhos AB Jr, Costa RHR, Soares SR, Perdomo CC: Tecnologias para o tratamento de dejetos de suínos (Technologies for the treatment of pig slurry). Braz J Agri Environ Eng 2001,5(1):166–170.

Benevides RM, Mota FSB, Aquino MD: Aspectos sanitários e agronômicos do uso de esgotos tratados na irrigação do capim tanzânia (health aspects and agricultural use of treated wastewater for irrigation of guinea grass). In 13th Luso-Brazilian symposium of sanitary and environmental engineering. Belém do Pará, Pará, Brazil; 2008. 9–13 March 2008

Bernardo S: Manual de irrigação (irrigation manual). 6th edition. Federal University of Viçosa, Minas Gerais; 1995.

Blum JRC: Critérios e padrões de qualidade da água (criteria and standards for water quality). In Reúso de água (water reuse). Edited by: Mancuso PCS, Santos HF. Manole, São Paulo; 2003:125–174.

Cirelli GL, Consoli S, Juanico M: Modelling Escherichia coli concentration in a wastewater reservoir using an operational parameter MRT%FE and first order kinetics. J Environ Manag 2009, 90: 604–614. 10.1016/j.jenvman.2007.12.015

Comin JJ, Dortzbach D, Sartor LR, Belli Filho P: Adubação prolongada com dejetos suínos e os efeitos em atributos químicos e físicos do solo na produtividade em plantio direto sem agrotóxicos (Long-term pig manure fertilization and its effects on soil chemical and physical attributes and on crop productivity with no-till, no-pesticide practices). Brazilian Journal of Agroecology 2007,2(20):1540–1543.

Costa RHR, Silva FCM, Oliveira PAV: Preliminary studies on the use of lagoons in the treatment of hog waste products. In 3rd IAWQ international specialist conference and workshop. João Pessoa, Paraíba, Brazil; 1995. 27–31 March 1995

Costa RHR, Araujo IS, Belli Filho P: Aerated facultative pond and maturation pond in-series for treatment of piggery wastes. In 7th IAWQ international specialist conference on waste stabilization ponds. Bangkok, Thailand; 2006. 25–27 September 2006

El Halouani H, Picot B, Casellas C, Pena G, Bontoux J: Elimination de l'azote et du phosphore dans un lagunage à haut rendement. Revue des Sciences de l'eau 1993, 6: 47–61.

Food and Agriculture Organization (FAO): Review of world water resources by country. Water reports 23. FAO, Rome, Italy; 2003.

Flotats X, Bonmati A, Fernandez B, Magri A: Manure treatment technologies: on farm versus centralized strategies. NE Spain as case study. Bioresource Technol 2009,100(22):5519–5526.

Friedler E, Juanico M, Shelef G: Simulation model of wastewater stabilization reservoirs. Ecol Eng 2003,20(2):121–145. 10.1016/S0925-8574(03)00009-0

Keraita B, Drechsel P, Konradsen F: Using on-farm sedimentation ponds to improve microbial quality of irrigation water in urban vegetable farming in Ghana. Water Sci Technol 2008,57(4):519–525. 10.2166/wst.2008.166

Leal RMP, Herpin U, Fonseca AF, Firme LP, Montes CR, Melfi AJ: Sodicity and salinity in a Brazilian Oxisol cultivated with sugarcane irrigated with wastewater. Agr Water Manage 2009,96(2):307–316. 10.1016/j.agwat.2008.08.009

Macauley JJ, Qiang Z, Adams CD, Surampalli R, Mormile MR: Disinfection of swine wastewater using chlorine, ultraviolet light and ozone. Water Res 2006,40(10):2017–2026. 10.1016/j.watres.2006.03.021

Nush EA: Comparison of different methods for chlorophyll and phaeopigment determination. Arch Hydrobiology 1980, 14: 14–36.

Oweis TY, Hachum A, Bruggeman A: Indigenous water-harvesting systems in west Asia and North Africa. International Center for Agricultural Research in the Dry Areas (ICARDA), Aleppo; 2004:173.

Qadir M, Oster JD: Crop and irrigation management strategies for saline–sodic soils and waters aimed at environmentally sustainable agriculture. Sci Total Environ 2004,323(1–3):1–19.

Quadros MFL, Lourenço MCM: Estudo da estiagem no oeste catarinense em 2001/2002. Avaliação do desempenho da previsão climática do CPTEC (study of drought in western Santa Catarina in 2001/2002. Performance evaluation of climate prediction at CPTEC). In XII Brazilian congress of meteorology. Foz de Iguaçu, Paraná, Brazil; 2002. 25–30 November 2002

WHO: Wastewater use in agriculture: guidelines for the safe use of wastewater, excreta and grey water. WHO, Geneva; 2006.

Acknowledgments

The authors wish to thank the National Council of Technological and Scientific Development (CNPq), the Foundation for Research and Innovation of Santa Catarina (Fapesc), and Petrobrás Ambiental for their financial support of this research. We are also grateful to the Coordination for the Improvement of Higher Education Personnel (Capes) for providing the master's degree scholarship for VFV.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

VFV carried out the acquisition, analysis, and interpretation of data, and drafted the manuscript. RAM helped in the acquisition and interpretation of data, and performed the statistical analysis. RHRC conceived of the study, participated in its design and coordination, and revised the manuscript critically for important intellectual content. PBF participated in the design of the study and helped draft the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Velho, V.F., Mohedano, R.A., Filho, P.B. et al. The viability of treated piggery wastewater for reuse in agricultural irrigation. Int J Recycl Org Waste Agricult 1, 10 (2012). https://doi.org/10.1186/2251-7715-1-10

DOI: https://doi.org/10.1186/2251-7715-1-10