Abstract

When nonlinear Volterra integral equations are discretized by a numerical method such as collocation, the resulting algebraic systems are nonlinear. Applying the quasilinearization technique to the nonlinear Volterra integral equation gives rise to a sequence of linear Volterra integral equations. The solutions of these equations form a sequence of functions that converges quadratically to the solution of the nonlinear equation. We then use a collocation method based on Chebyshev polynomials and a modified Clenshaw-Curtis quadrature to obtain a numerical solution. An error analysis is performed, and the method is applied to three numerical examples.

Introduction

The method of quasilinearization was introduced by Bellman and Kalaba [1], generalized by Lakshmikantham [2, 3], and applied to a variety of problems in [4–7]. Based on the idea of quasilinearization [1], the generalized quasilinear technique introduced in [8] and later extended in [2] offers two monotonic sequences of linear iterations that converge uniformly and quadratically to the unique solution of the initial value problem:

Consider the nonlinear Volterra integral equation:

Applying iterative processes to solve this equation, when k(t,s,u) is nondecreasing with respect to u and satisfies a Lipschitz condition, the successive approximations method [9] yields a monotonic sequence that converges uniformly to the solution of Equation (1). However, the iterations that define the sequence are all nonlinear, and the rate of convergence is linear. The iterations employed in the monotone iterative technique [10, 11] are linear, and so is their convergence rate. The method of quasilinearization was developed in [12] to solve Equation (1) by the iterative scheme:

for p=1,2,⋯, where u0(t) is a lower solution of Equation (1), defined below. This scheme is linear; under monotonicity (nondecreasing) and convexity conditions on k(t,s,u), it converges quadratically to the unique solution of Equation (1) and is therefore faster than the successive processes in [9–11]. The purpose of this paper is to employ numerical methods to approximate the solution of the linear integral equations (2) in a piecewise continuous polynomial space and thereby generate a sequence of approximate solutions that converges to the unique solution of the nonlinear integral equation (1) under the conditions on k(t,s,u) mentioned above. For the numerical integration, we employ Chebyshev polynomials and a modified Clenshaw-Curtis quadrature, together with its error analysis.
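The quasilinearization idea described above can be sketched in a few lines. The sketch below is only an illustration under stated assumptions: it replaces the collocation method of this paper with the much simpler trapezoidal rule on a uniform grid, and the test problem u(t) = 1 + ∫_0^t u(s)² ds on [0, 0.5] (exact solution 1/(1−t), lower solution v0(t) = 1) is a hypothetical example that does not appear in the paper. The function name quasilinearize and its parameters are likewise illustrative.

```python
def quasilinearize(k, k_u, g, v0, T, n, iterations):
    """Iterate v_p = g + int_0^t [k(v_{p-1}) + k_u(v_{p-1}) (v_p - v_{p-1})] ds.

    Each iterate solves a LINEAR Volterra equation, discretized here by the
    trapezoidal rule (an assumption; the paper uses collocation instead).
    """
    h = T / n
    t = [i * h for i in range(n + 1)]
    prev = [v0(ti) for ti in t]
    history = [prev]
    for _ in range(iterations):
        cur = [g(t[0])]
        for i in range(1, n + 1):
            # Contribution of nodes j < i, where cur[j] is already known.
            known = 0.0
            for j in range(i):
                weight = 0.5 if j == 0 else 1.0
                lin = k(t[i], t[j], prev[j]) + k_u(t[i], t[j], prev[j]) * (cur[j] - prev[j])
                known += weight * lin
            # Node j = i carries the unknown cur[i]; the equation is linear in it.
            a = k_u(t[i], t[i], prev[i])
            const = k(t[i], t[i], prev[i]) - a * prev[i]
            cur.append((g(t[i]) + h * known + 0.5 * h * const) / (1.0 - 0.5 * h * a))
        history.append(cur)
        prev = cur
    return t, history


# Hypothetical test problem: k(t, s, u) = u^2 is convex and nondecreasing for u >= 0.
t, history = quasilinearize(
    k=lambda t, s, u: u * u,
    k_u=lambda t, s, u: 2.0 * u,
    g=lambda t: 1.0,
    v0=lambda t: 1.0,
    T=0.5, n=200, iterations=6,
)
errors = [max(abs(v - 1.0 / (1.0 - ti)) for v, ti in zip(it, t)) for it in history]
```

The list errors holds the maximum error of each iterate against 1/(1−t); it should fall rapidly over the first few iterations and then level off at the accuracy of the trapezoidal discretization.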

This paper is organized as follows. Section “Volterra integral inequalities and generalized quasilinearization” contains a general framework for the idea of quasilinearization used to solve the integral equations. Section “Piecewise polynomials space and collocation method” is devoted to the derivation of a step-by-step collocation method in a piecewise polynomial space to approximate the solution of the integral equations. In section “Fully discretizing using quadrature formulae and their errors”, the full discretization of the algebraic system is obtained by using a modified Clenshaw-Curtis quadrature, and its error analysis is given. In section “Convergence of the fully discretized solution”, the convergence of the method is discussed, and the numerical examples are given in section “Numerical examples”.

Volterra integral inequalities and generalized quasilinearization

For and T>0, let J=[0,T] and D={(t,s)∈J×J:s≤t}, consider

where and .

Definition 1. A function is called a lower solution of Equation (3) on J if

and an upper solution if the reversed inequality holds.

It is shown in [12] that if v0(t) and w0(t) in are lower and upper solutions of Equation (3), respectively, then v0(t)≤w0(t) holds on J when k(t,s,u) is nondecreasing in u for each fixed (t,s)∈D and satisfies the one-sided Lipschitz condition

provided v0(0)≤w0(0). If this holds, there exists a solution u(t) of Equation (3) such that

and this solution is unique.

Now, for and v0(t)≤u≤w0(t) on J, let

and ∥u∥ = max_{t∈J} |u(t)|. By defining two iterative schemes as two linear integral equations

and

for p=1,2,⋯, and , lower and upper solutions of Equation (3), respectively, the following theorem in [12] shows the quadratic convergence of the two sequences {v_p(t)} and {w_p(t)} derived from Equations (4) and (5) to the unique solution of Equation (3).

Theorem 1. Assume that , v0(t)≤w0(t) on J are lower and upper solutions of Equation (3) on J, respectively.

for (t,s,u)∈Ω.

Then, the two iterative schemes (4) and (5) define a nondecreasing sequence {v_p(t)} and a nonincreasing sequence {w_p(t)} in such that v_p→u and w_p→u uniformly on J, and the following quadratic convergence estimates hold:

Also, these two sequences satisfy the relation

The following two lemmas from [13] and [14] will be used in this work. Throughout, ∥·∥ denotes the maximum norm of functions or matrices.

Lemma 1. Suppose that A is a matrix such that ∥A∥<1. Then, the matrix (I−A) is nonsingular and ∥(I−A)^{−1}∥ ≤ 1/(1−∥A∥).
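As a quick numerical illustration of Lemma 1, the sketch below checks the standard bound ∥(I−A)^{−1}∥ ≤ 1/(1−∥A∥) in the maximum (row-sum) norm for one small matrix. The 2×2 matrix A and the helper names inf_norm and inverse_2x2 are illustrative assumptions and are not taken from [13].

```python
def inf_norm(M):
    """Maximum norm of a matrix: the largest absolute row sum."""
    return max(sum(abs(x) for x in row) for row in M)


def inverse_2x2(M):
    """Explicit inverse of a 2x2 matrix (assumes a nonzero determinant)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical matrix with norm 0.5 < 1.
A = [[0.2, -0.3], [0.1, 0.4]]
I_minus_A = [[1.0 - A[0][0], -A[0][1]], [-A[1][0], 1.0 - A[1][1]]]

lhs = inf_norm(inverse_2x2(I_minus_A))   # norm of (I - A)^{-1}
rhs = 1.0 / (1.0 - inf_norm(A))          # bound stated in Lemma 1
```

For this matrix lhs stays below rhs, as the lemma predicts; the same bound is what later guarantees that the collocation systems are uniquely solvable once h is small enough.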

Lemma 2. (Discrete Gronwall Inequality) Let for all the following inequality be satisfied:

Then, for all

Piecewise polynomials space and collocation method

We set the partition {0=τ_0<τ_1<⋯<τ_N=T} on J and put h_n=τ_n−τ_{n−1} with h=max_n{h_n}, and we denote this partition by J_h. In the theory of interpolation, it is well known that the minimum value of the remainder term is obtained when the polynomial interpolation is carried out on the zeros of Chebyshev polynomials [15]. The first-kind Chebyshev polynomials are defined by the relation

and the zeros of these polynomials in an increasing arrangement are as

We define the mapping δ_n:[−1,1]↦[τ_{n−1},τ_n] with the relation
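The ingredients just introduced can be sketched as follows. The formulas used are the standard ones and are assumptions here, since the displayed relations are not reproduced in this text: T_m(z) = cos(m arccos z), zeros z_k = −cos((2k−1)π/(2m)) for k=1,…,m (which lists them in increasing order), and the affine map δ_n(z) = (h_n/2) z + (τ_{n−1}+τ_n)/2. The function names are illustrative.

```python
import math


def chebyshev_zeros(m):
    """Zeros of T_m(z) = cos(m arccos z), returned in increasing order."""
    return [-math.cos((2 * k - 1) * math.pi / (2 * m)) for k in range(1, m + 1)]


def delta(z, tau_prev, tau_next):
    """Affine map from [-1, 1] onto [tau_prev, tau_next]."""
    return 0.5 * (tau_next - tau_prev) * z + 0.5 * (tau_next + tau_prev)


def collocation_points(tau, m):
    """Images of the m Chebyshev zeros in every subinterval of the partition tau."""
    zeros = chebyshev_zeros(m)
    return [[delta(z, tau[n - 1], tau[n]) for z in zeros] for n in range(1, len(tau))]


# Example: a uniform partition of [0, 1] into N = 4 subintervals, m = 3 points each.
points = collocation_points([0.0, 0.25, 0.5, 0.75, 1.0], 3)
```

Because the zeros lie strictly inside (−1,1), none of the mapped points coincides with a mesh point τ_n.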

Now, consider the linear Volterra integral equation (4). It may be shown in the form of

where

and

Definition 2. Suppose that J h is a given partition on J. The piecewise polynomial space with μ≥0, −1≤d≤μ is defined by

where σ_n=(τ_{n−1},τ_n] and Π_μ denotes the space of polynomials of degree not exceeding μ, and it is easy to see that is a linear vector space.

With this definition, we select the Lagrange polynomials constructed on the zeros of the Chebyshev polynomial T_m(z) as a basis for Π_{m−1} on the subinterval σ_n and approximate the solution of the integral equation (6) in the piecewise polynomial space using the collocation method. Then, by letting t_{n,k}=δ_n(z_k) and taking Y_h={t_{n,k}: n=1,…,N, k=1,…,m} as the collocation points, the collocation solution in the subinterval σ_n may be written as

for p=1,2,…, where and L_k(z) are the Lagrange polynomials defined by the relation
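A minimal sketch of this local basis, assuming the usual product formula L_k(z) = Π_{j≠k} (z−z_j)/(z_k−z_j) on the Chebyshev zeros (the displayed relation is not reproduced in this text). The function names and the sample quadratic are hypothetical.

```python
import math


def chebyshev_zeros(m):
    """Zeros of T_m in increasing order."""
    return [-math.cos((2 * k - 1) * math.pi / (2 * m)) for k in range(1, m + 1)]


def lagrange_basis(k, z, nodes):
    """Value at z of the k-th Lagrange polynomial built on `nodes` (0-based k)."""
    value = 1.0
    for j, zj in enumerate(nodes):
        if j != k:
            value *= (z - zj) / (nodes[k] - zj)
    return value


def interpolate(values, z, nodes):
    """Evaluate sum_k values[k] * L_k(z), the local polynomial of degree < len(nodes)."""
    return sum(v * lagrange_basis(k, z, nodes) for k, v in enumerate(values))


# With m = 3 nodes, any quadratic is reproduced exactly (up to rounding).
nodes = chebyshev_zeros(3)
sample = lambda z: 2.0 * z * z - z + 1.0
approx = interpolate([sample(z) for z in nodes], 0.3, nodes)
```

Since L_k(z_j) equals 1 when j=k and 0 otherwise, the coefficients of the collocation solution in this basis are simply its values at the collocation points.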

We observe that this collocation solution need not be continuous on J. Hence, we let d=−1 and . For t∈σ_i, Equation (6) may be written as

where v_{p,i}(z)=v_p(δ_i(z)). The collocation equation is defined by replacing the exact solution v_p(t) with the collocation solution in Equation (6) at the collocation points Y_h.

Then, using Equation (10) for t_{i,k}∈Y_h, the collocation equation has the form

for k=1,…,m, where we have used Equation (9). Introducing the following vectors and matrices,

Equation (11) is transformed to the linear system

where I_m is the identity matrix of dimension m.

Fully discretizing using quadrature formulae and their errors

The fundamental theorem of orthonormal Fourier expansions in the space C[−1,1] implies that for any f∈C[−1,1], there exists an expansion of Chebyshev polynomials as follows:

where the prime denotes that the first term is halved. Integration of both sides of this expansion gives:

Then, we approximate the integral (14) by the first M+1 terms of the series:

and the coefficients a_i are also approximated by the closed Gauss-Chebyshev rule. This is the Clenshaw-Curtis quadrature for estimating the integral (14). We modify this rule and apply it to the components of the matrices , , , and with the open Gauss-Chebyshev rule, because this rule does not use the end points of the interval and uses one node fewer than the closed Gauss-Chebyshev rule while retaining the same accuracy. The M-node open Gauss-Chebyshev rule for approximating a_r has the form

and we use the following equation as an approximation for the integral (14):

For the components of the matrices and , we use the change of variable z=θ_k(w):[−1,1]↦[−1,z_k] defined by
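The quadrature described above can be sketched as follows, under assumptions that are stated here because the displayed formulas are not reproduced in this text: the coefficients are approximated by a_r ≈ (2/M) Σ_{l=1}^{M} f(cos θ_l) cos(r θ_l) with θ_l = (2l−1)π/(2M), the series is integrated term by term using ∫_{−1}^{1} T_r(z) dz = 2/(1−r²) for even r and 0 for odd r, and the change of variable is taken to be the affine map θ_k(w) = ((z_k+1)w + z_k − 1)/2. The function names are illustrative.

```python
import math


def chebyshev_coefficients(f, M):
    """Approximate a_0, ..., a_M with the M-node open Gauss-Chebyshev rule."""
    thetas = [(2 * l - 1) * math.pi / (2 * M) for l in range(1, M + 1)]
    values = [f(math.cos(th)) for th in thetas]
    return [2.0 / M * sum(v * math.cos(r * th) for v, th in zip(values, thetas))
            for r in range(M + 1)]


def integrate(f, M):
    """Approximate the integral of f over [-1, 1] from the truncated expansion."""
    a = chebyshev_coefficients(f, M)
    total = a[0]                      # halved first term times int T_0 = 2
    for r in range(2, M + 1, 2):      # odd-degree T_r integrate to zero
        total += 2.0 * a[r] / (1 - r * r)
    return total


def integrate_to(f, z_k, M):
    """Approximate the integral of f over [-1, z_k] via the affine change of variable."""
    half = 0.5 * (z_k + 1.0)
    return half * integrate(lambda w: f(half * w + 0.5 * (z_k - 1.0)), M)


full = integrate(math.exp, 8)                      # compare with e - 1/e
partial = integrate_to(lambda z: z * z, 0.5, 4)    # compare with 0.375
```

Only interior nodes cos θ_l are evaluated, so the integrand is never sampled at the end points of the interval, which is the property motivating the modification.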

For the error analysis of this quadrature formula using the equality (14), we have

To compute , we use the results in [16], which give the equality

Moreover, the coefficients c_{r,l} depend on the properties of the function f(z)T_r(z), as described by the following lemma, which follows from results in [17].

Lemma 3. Let f(z)∈C^p[−1,1], and suppose that f^{(p+1)}(z) exists and is continuous in [−1,1] except possibly for a finite number of finite discontinuities. Then, for some C_f and all i>0, the Fourier coefficients a_i satisfy the relation

Substituting this result into (17), we find

where is related to the function f(z)T_r(z), and we have used the following relation from [18]

Applying Equation (18) to (16) and using Lemma 3 again for , we obtain the bound

where the constant C_f is related to the function f(z).

According to this numerical quadrature, we define fully discretized matrices related to the matrices (12) as

and rewrite the linear system (13) in terms of them

where . Then, we define the fully discretized solution on σ_i by the relation

Theorem 2. There exists an such that for any partition J_h with , the linear system (20) has a unique solution for 1≤i≤N and p=1,2,….

Proof. We first give a bound for and then use Lemma 1. The matrix has the components

Then,

Now, by Lemma 1, the matrix is nonsingular if we choose

and for this selection

and the linear system (20) has the unique solution

It is easy to see that with this choice of h, the linear system (13) has a unique solution because of

For the complete error analysis in the next section, we need the following bounds, which follow from the results obtained so far:

The last error bounds are obtained by applying Equation (19) to the entries of the matrices , , , and .
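To make the structure of the fully discretized scheme concrete, the following is a minimal sketch for a generic linear equation v(t) = G(t) + ∫_0^t H(t,s) v(s) ds on a uniform partition. It is not the paper's implementation: the names G, H, and collocate, the hand-written Gaussian elimination, and the test problem v(t)=e^t (G=1, H=1) are assumptions for illustration, and the matrices are assembled directly rather than in the notation of (12) and (20). Each subinterval leads to an m×m system whose right-hand side collects the integrals over earlier subintervals.

```python
import math


def cheb_zeros(m):
    return [-math.cos((2 * k - 1) * math.pi / (2 * m)) for k in range(1, m + 1)]


def lagrange(k, z, nodes):
    out = 1.0
    for j, zj in enumerate(nodes):
        if j != k:
            out *= (z - zj) / (nodes[k] - zj)
    return out


def quad(f, lo, hi, M):
    """Integrate f over [lo, hi]: Chebyshev series with open Gauss-Chebyshev coefficients."""
    thetas = [(2 * l - 1) * math.pi / (2 * M) for l in range(1, M + 1)]
    vals = [f(0.5 * (hi - lo) * math.cos(th) + 0.5 * (lo + hi)) for th in thetas]
    total = 0.0
    for r in range(0, M, 2):
        a_r = 2.0 / M * sum(v * math.cos(r * th) for v, th in zip(vals, thetas))
        total += a_r if r == 0 else 2.0 * a_r / (1 - r * r)
    return 0.5 * (hi - lo) * total


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= factor * aug[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][j] * x[j] for j in range(r + 1, n))) / aug[r][r]
    return x


def collocate(G, H, T, N, m, M):
    """Step-by-step collocation; V[i][k] approximates v at the k-th point of subinterval i."""
    z, h = cheb_zeros(m), T / N
    tau = [n * h for n in range(N + 1)]
    V = []
    for i in range(N):
        A, rhs = [[0.0] * m for _ in range(m)], []
        for k in range(m):
            t = tau[i] + 0.5 * h * (z[k] + 1.0)
            acc = G(t)
            for n in range(i):  # history terms with already computed coefficients
                def past(s, n=n):
                    w = 2.0 * (s - tau[n]) / h - 1.0
                    return H(t, s) * sum(V[n][j] * lagrange(j, w, z) for j in range(m))
                acc += quad(past, tau[n], tau[n + 1], M)
            rhs.append(acc)
            for j in range(m):  # current subinterval: integral from tau_i to t
                def cur(s, j=j):
                    return H(t, s) * lagrange(j, 2.0 * (s - tau[i]) / h - 1.0, z)
                A[k][j] = (1.0 if j == k else 0.0) - quad(cur, tau[i], t, M)
        V.append(solve(A, rhs))
    return tau, z, V


# Hypothetical test problem: v(t) = 1 + int_0^t v(s) ds, exact solution e^t.
tau, z, V = collocate(lambda t: 1.0, lambda t, s: 1.0, 1.0, 4, 3, 6)
err = max(abs(V[i][k] - math.exp(tau[i] + 0.125 * (z[k] + 1.0)))
          for i in range(4) for k in range(3))
```

In a quasilinearization loop, G and H would be rebuilt from the previous iterate at every step p, so this routine would be called once per iteration.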

Convergence of the fully discretized solution

To analyze the error of the fully discretized solution, we employ the Cauchy remainder for polynomial interpolation in σ_i

where . The error function on σ_i satisfies the equation

with . Then,

where . Now, we need a bound for . Substituting Equation (23) into Equation (10) for t=t_{i,k} and applying the notation (12), we obtain

for k=1,…,m. The fully discretized version of this equation is

From these two equations, we obtain

where and . This is equivalent to the linear system

and . With the selection , this system has the unique solution

We use this equation to find a bound for . Taking norms of both sides yields

and employing the bounds (22), we get

but from Equation (21), satisfies the inequality

Then, we can apply Lemma 2 to this inequality and, after simplification, deduce

From this relation, we get

and a bound for is obtained using Equation (26) as follows

Substituting these two results into Equation (27), simplifying, and noting that h K u φ α h =α h −1, we have

Applying Lemma 2 again to this inequality, we conclude

Calculating the sums separately

and setting in Equation (19), we get

and using the following relations

Recalling the value of , this yields

Setting this bound in Equation (25), we obtain the main result as follows

In this error bound, the behavior of and as N increases must be specified. For simplicity, we assume that the partition J_h is uniform, that is . With this choice, for large N

and when N increases, we deduce that the error bound is asymptotically equal to

Having derived the fully discretized solution , an approximation to the solution v_p(t) of the linear integral equation (6), we can write the general error bound as follows:

The first term of this general error bound is due to the quasilinearization, which was shown in Theorem 1 to be quadratically convergent. The second term is due to the collocation, which has convergence order O(h^m), and the third term is due to the quadrature formula, which converges with order O((2M)^{−p−1}).

Numerical examples

In what follows, the method presented in this paper is applied to solve three numerical examples of the integral equation (3).

Example 1. The following integral equation is discussed in [19]:

where −1≤t≤1, and k(t,s,u(s))=(t−2s)u²(s) is convex and nondecreasing with respect to u for u≥0. The exact solution is u(t)=1−t², and v0(t)=0 is a lower solution. The iterative scheme is

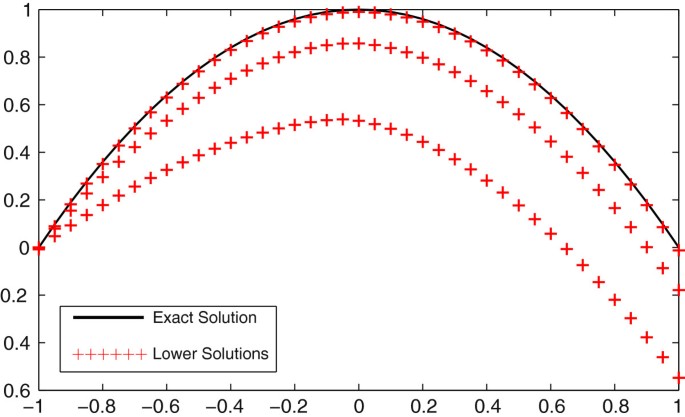

We employed the presented method for N=4, m=3, and M=4. We obtained the absolute values of the errors for Equation (29), which are shown in Table 1 and Figure 1; these results verify the convergence of the sequence to the exact solution.

Example 2. The second example,

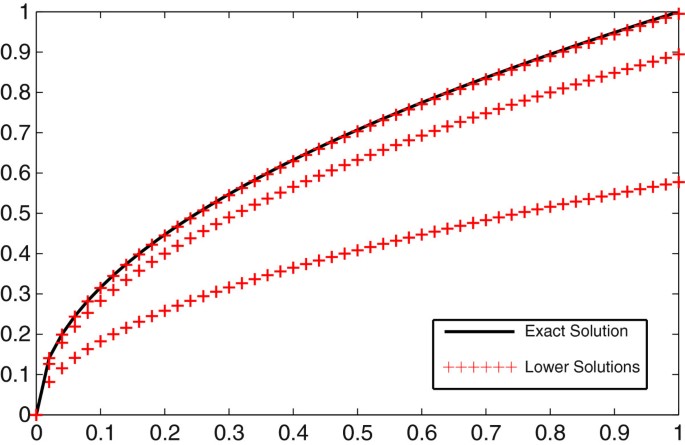

has the exact solution and the lower solution . The absolute values of the errors are presented in Table 2 and Figure 2.

Example 3. The third example is the following integral equation:

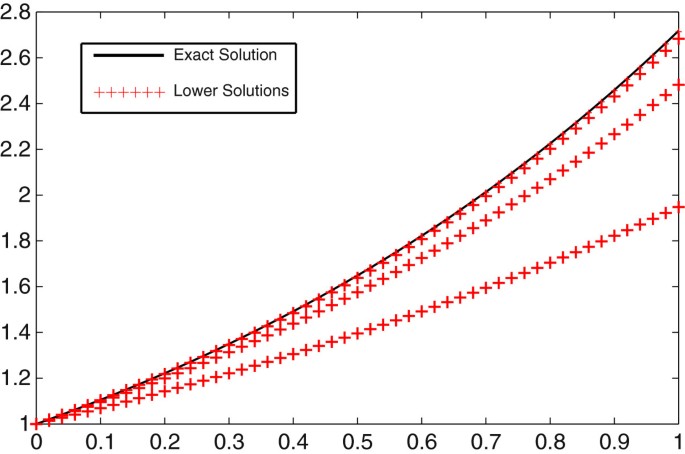

This equation has the exact solution u(t)=e^t and the lower solution v0(t)=1+t. Table 2 shows the absolute values of the errors, and the convergence of the sequence to the exact solution is shown in Figure 3.

Conclusions

In this paper, we derived an error bound for the numerical solution of nonlinear Volterra integral equations that separates the quasilinearization, collocation, and numerical quadrature errors, and we showed the effects of the collocation approximation and the numerical quadrature on the accuracy of the approximate solution. The numerical examples provided confirm our results.

References

Bellman R, Kalaba RE: Quasilinearization and Nonlinear Boundary Value Problems. American Elsevier Publishing Co., New York;

Lakshmikantham V, Leela S, Sivasundaram S: Extensions of the method of quasilinearization. J. Opt. Th. Appl 5: 315–321.

Lakshmikantham V: Further improvement of generalized quasilinearization. Nonlinear Analysis 27: 315–321.

Drici Z, McRae FA, Vasundhara Devi J: Quasilinearization for functional differential equations with retardation and anticipation. Nonlinear Analysis 70: 1763–1775.

Cabada A, Nieto JJ, Pita-da-Veiga R: A note on rapid convergence of approximate solutions for an ordinary Dirichlet problem. Dynamics of Continuous, Discrete and Impulsive Systems 4: 23–30.

Lakshmikantham V, Leela S, McRae FA: Improved generalized quasilinearization method. Nonlinear Analysis 24: 1627–1637.

Nieto JJ: Generalized quasilinearization method for a second order ordinary differential equation with Dirichlet boundary conditions. Proc. Amer. Math. Soc 125: 2599–2604.

Lakshmikantham V, Malek S: Generalized quasilinearization. Nonlinear World 1: 59–63.

Walter W: Differential and Integral Equations. Springer, Berlin;

Ladde GS, Lakshmikantham V, Vatsala AS: Monotone Iterative Techniques for Nonlinear Differential Equations. Pitman, Boston;

Ladde GS, Lakshmikantham V, Pachpatte BG: The method of upper and lower solutions and Volterra integral equations. J. Integral Eqs 4: 353–360.

Pandit SG: Quadratically converging iterative schemes for nonlinear Volterra integral equations and an application. J. AMSA 7: 169–178.

Datta B: Numerical Linear Algebra and Applications. Brooks/Cole Publishing Company, Kentucky;

Agarwal RP: Difference Equations and Inequalities. Marcel Dekker Inc., New York;

Davis PJ: Interpolation and Approximation. Dover Publications, New York;

Delves LM, Mohamed JL: Computational methods for integral equations. Cambridge University Press, New York;

Bain M, Delves LM: The convergence rates of expansions in Jacobi polynomials. Numer Math 27: 219–225.

Delves LM, Freeman TL: Analysis of Global Expansion Methods: Weakly Asymptotically Diagonal Systems. Academic, London;

Maleknejad K, Sohrabi S, Rostami Y: Numerical solution of nonlinear Volterra integral equations of the second kind by using Chebyshev polynomials. Appl. Math. Comput 188: 123–128.

Acknowledgements

The authors thank the referee for the good recommendations.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors conceived the study and participated in its design and coordination, drafted the manuscript, and participated in the sequence alignment. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Rashidinia, J., Najafi, E. & Arzhang, A. An iterative scheme for numerical solution of Volterra integral equations using collocation method and Chebyshev polynomials. Math Sci 6, 60 (2012). https://doi.org/10.1186/2251-7456-6-60

DOI: https://doi.org/10.1186/2251-7456-6-60