Abstract

Introduction

Optimal practice and feedback elements are essential requirements for maximal motor recovery in patients with motor deficits due to central nervous system lesions.

Methods

A virtual environment (VE) was created that incorporates practice and feedback elements necessary for maximal motor recovery. It permits varied and challenging practice in a motivating environment that provides salient feedback.

Results

The VE gives the user knowledge of results feedback about motor behavior and knowledge of performance feedback about the quality of pointing movements made in a virtual elevator. Movement distances are related to length of body segments.

Conclusion

We describe an immersive and interactive experimental protocol developed in a virtual reality environment using the CAREN system. The VE can be used as a training environment for the upper limb in patients with motor impairments.

Background

Stroke, the third leading cause of death in Western countries, contributes significantly to disabilities and handicaps. Up to 85% of patients have an initial arm sensorimotor dysfunction, with impairments persisting for more than 3 months [1, 2]. Several principles guide motor recovery. In animal stroke models, experience-dependent plasticity is driven through salient, repetitive and intensive practice [3, 4]. However, in humans, unguided practice of reaching without feedback about the movement patterns used, even if enhanced or intensive, may reinforce compensatory movement strategies instead of encouraging recovery of pre-morbid movement patterns [5, 6]. While compensation may be desirable for some patients with severe impairment and poor prognosis, for others it may limit the potential for recovery [7–10].

Levin and colleagues have shown that recovery of pre-morbid movement patterns after repetitive reaching training is facilitated when either compensatory trunk movements are restricted [11] or information about missing motor elements is provided [6, 12]. This suggests that more salient, task-relevant feedback may result in greater motor gains after stroke. Virtual reality (VR) technologies provide adaptable media to create environments for assessment and training of arm motor deficits using enhanced feedback [13]. This paper describes a virtual environment (VE) that incorporates practice and feedback elements necessary for maximal motor recovery. It introduces: 1) originality and motivation to the task; 2) varied and challenging practice of high-level motor control elements; and 3) optimal, multimodal feedback about movement performance and outcome.

Methods

A VE simulating elevator buttons was developed to practice pointing movements (Fig. 1). Target placement challenges individuals to reach into different workspace areas, and feedback about motor performance provides motivation. Peripherals are connected to a PC (Dual Xeon 3.06 GHz, 2 GB RAM, 160 GB hard drive) running a CAREN (Computer Assisted Rehabilitation Environment; Motek BV) platform providing 'real-time' integration of 3D hand, arm and body position data with the VE. The system includes a head-mounted display (HMD, Kaiser XL50, resolution 1024 × 768, frequency 60 Hz), an Optotrak Motion Capture System (Northern Digital), a CyberGlove® (Immersion), and a dual-head Nvidia Quadro FX3000 graphics card (70 Hz) providing high-speed stereoscopic representation of the environment created in SoftImage XSI.

The 3D visual scene displayed through the HMD promotes a sense of presence in the VE [14]. To simulate stereovision, two images of the same environment are generated, one for each HMD camera position, with an offset corresponding to inter-ocular distance. The Optotrak system tracks movement in the virtual space via infrared emitting diodes (IREDs) placed on body segments. Optotrak provides higher sampling rates and shorter latencies for acquiring positional data than other systems (e.g., electromagnetic). Longer latencies may be associated with cybersickness. Head and hand position are determined by tracking rigid bodies on the HMD and CyberGlove respectively.

Presence is enhanced with the 22-sensor CyberGlove, permitting the user to see a realistic reproduction of his/her hand in the VE. Haptic feedback is not provided (i.e., force feedback on button depression). Hand position from Optotrak tracking is relayed to CyberGlove software, which calculates palm and finger position/orientation. Final fingertip position determines target acquisition with accuracy adjusted to the participant's ability.
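As a rough illustration of how final fingertip position might determine target acquisition with an adjustable accuracy requirement, the following sketch tests whether the fingertip lies inside a box around the target. The function name, the depth margin and the `tolerance_cm` parameter are hypothetical and are not taken from the CAREN or CyberGlove software; only the 6 cm target width comes from the setup described in this paper.

```python
def is_target_hit(fingertip_cm, target_center_cm,
                  half_width_cm=3.0, depth_margin_cm=2.0, tolerance_cm=0.0):
    """Return True if the fingertip lies inside the acquisition box.

    fingertip_cm, target_center_cm: (x, y, z) positions in cm.
    half_width_cm: half the side of a 6 cm x 6 cm target.
    depth_margin_cm: assumed half-depth of the box in front of the wall.
    tolerance_cm: assumed extra margin, widened for less able participants.
    """
    dx, dy, dz = (abs(f - t) for f, t in zip(fingertip_cm, target_center_cm))
    in_plane_limit = half_width_cm + tolerance_cm
    depth_limit = depth_margin_cm + tolerance_cm
    return dx <= in_plane_limit and dy <= in_plane_limit and dz <= depth_limit
```

Raising `tolerance_cm` loosens the precision requirement, and lowering it tightens the requirement, which is one simple way to adjust accuracy to a participant's ability.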

Experimental Setup

The system permits repetitive training of goal-directed arm movements to improve arm motor function. In the current setup, six elevator buttons (targets), each 6 cm × 6 cm, are displayed in 2 rows of 3 (Fig. 2). They are arranged on a virtual wall spanning the ipsilateral and contralateral arm workspace, so that successful pointing requires different combinations of arm joint movements. Center-to-center distance between adjacent targets is 26 cm (Fig. 2A). Targets are displayed at a standardized distance equal to the participant's arm length (Fig. 2B) to facilitate collision detection. Middle targets are aligned with the sternum, with the mid-point between rows at shoulder height.
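The target layout can be sketched as a small coordinate table. In this minimal example the origin is the center of the grid, so x = 0 lies on the sternum line and y = 0 at shoulder height. The function name is hypothetical, and two details are assumptions rather than facts from the setup: that targets are numbered left to right with 1 to 3 in the top row, and that the 26 cm spacing also applies between rows.

```python
def target_grid_cm(spacing_cm=26.0, n_rows=2, n_cols=3):
    """Return {target_number: (x, y)} in cm, origin at the grid center.

    x is lateral (positive to the right), y is vertical (positive up).
    Numbering (assumed): 1-3 along the top row, 4-6 along the bottom row.
    """
    targets = {}
    for row in range(n_rows):
        for col in range(n_cols):
            number = row * n_cols + col + 1
            x = (col - (n_cols - 1) / 2) * spacing_cm
            y = ((n_rows - 1) / 2 - row) * spacing_cm
            targets[number] = (x, y)
    return targets
```

With the default arguments the middle targets (2 and 5) fall at x = 0, and the two rows sit 13 cm above and below shoulder height.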

A global system axis is calibrated using a grid of physical targets having the exact size and relative position as those in the VE, with its origin at the center of the target grid (Fig. 3). Extreme right and left target distances (1,4,3,6) are corrected for arm's length by offsetting target depth along the sagittal plane (Fig. 4) so that they can be reached without trunk displacement.
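One geometric reading of this depth correction is sketched below: the lateral targets are brought closer so that their straight-line distance from the shoulder equals arm length. This is an assumption about the correction, not the documented CAREN implementation, and the function name and shoulder-centered coordinates are hypothetical.

```python
import math


def corrected_depth_cm(arm_length_cm, lateral_cm, vertical_cm):
    """Depth (cm, forward of the shoulder) that keeps a target at arm's length.

    lateral_cm, vertical_cm: target offset from the shoulder in the plane of
    the virtual wall. Solves lateral^2 + vertical^2 + depth^2 = arm_length^2.
    """
    in_plane_sq = lateral_cm ** 2 + vertical_cm ** 2
    if in_plane_sq > arm_length_cm ** 2:
        raise ValueError("target is beyond arm's length at any depth")
    return math.sqrt(arm_length_cm ** 2 - in_plane_sq)
```

Under this reading, a target directly in front of the shoulder stays at full arm length, while a target displaced 30 cm sideways for a 50 cm arm would be placed at a depth of 40 cm.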

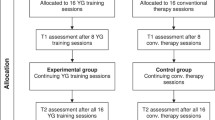

Based on findings that improvement in movement time of a reaching task occurred after 25–35 trials in patients with mild-to-moderate hemiparesis [7], the initial training protocol includes 72 trials. This represents twice the number needed for motor learning and is considered intensive. Trials are equally and randomly distributed across targets. Twelve trials per target are recorded, 3 blocks of 24 movements each, separated by rest periods. Recording time and intertrial intervals are adjusted according to subject ability. Task difficulty is progressed by manipulating movement speed and precision requirements.
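A trial schedule with these properties could be generated as in the sketch below. The source states only that trials are equally and randomly distributed across targets; balancing each block at 4 trials per target is an added assumption, and the function name and `seed` parameter are hypothetical.

```python
import random


def make_schedule(n_targets=6, trials_per_target=12, n_blocks=3, seed=None):
    """Return a list of blocks, each a shuffled list of target numbers.

    Assumes every block holds the same number of trials per target.
    """
    if trials_per_target % n_blocks != 0:
        raise ValueError("trials_per_target must divide evenly into blocks")
    rng = random.Random(seed)
    per_block = trials_per_target // n_blocks
    blocks = []
    for _ in range(n_blocks):
        block = [t for t in range(1, n_targets + 1) for _ in range(per_block)]
        rng.shuffle(block)  # randomize target order within the block
        blocks.append(block)
    return blocks
```

With the defaults this yields 3 blocks of 24 movements, 72 trials in total, with each of the 6 targets cued 12 times.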

Feedback

Effects of different types of feedback on motor learning can be studied. Feedback is provided as knowledge of results (KR) and knowledge of performance (KP). Auditory and visual feedback about movement speed and precision (KR) and about motor performance (joint movement patterns, KP) is provided to enhance motor learning [6, 12]. Subjects are cued to reach to a target both verbally and by a change in target color (yellow, Fig. 5A,B). Subjects receive positive feedback (KR) in the form of a 'ping' sound and a change in target color (green) when the movement is both within the stipulated time and area. Negative feedback (buzzer sound) is provided if the movement is not rapid or precise enough. Finally, the subject receives KP in the form of a 'whoosh' sound and a red-colored target if trunk displacement exceeds an adjustable default value of 5 cm. According to previous studies, non-disabled subjects use up to 1.7 ± 1.6 cm of trunk movement to reach similarly placed targets [15].
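The feedback rules above can be summarized in a short decision function. This is a sketch only: the names, the time and error thresholds, and the choice to report KR and KP independently on the same trial are assumptions, since the text does not specify how the two cues are combined. The sounds, colors and the 5 cm default trunk limit are those described above.

```python
from typing import List, NamedTuple, Optional


class Feedback(NamedTuple):
    kind: str                    # "KR" or "KP"
    sound: str                   # auditory cue
    target_color: Optional[str]  # visual cue, None if not specified


def trial_feedback(movement_time_s, endpoint_error_cm, trunk_displacement_cm,
                   max_time_s, max_error_cm, trunk_limit_cm=5.0) -> List[Feedback]:
    """Return the cues for one trial (thresholds are hypothetical inputs)."""
    cues = []
    fast_enough = movement_time_s <= max_time_s
    precise_enough = endpoint_error_cm <= max_error_cm
    if fast_enough and precise_enough:
        cues.append(Feedback("KR", "ping", "green"))
    else:
        # The source names only a buzzer for negative KR, no color change.
        cues.append(Feedback("KR", "buzzer", None))
    if trunk_displacement_cm > trunk_limit_cm:
        cues.append(Feedback("KP", "whoosh", "red"))
    return cues
```

For example, a fast and accurate reach made with 6 cm of trunk displacement would produce both a positive KR cue and the KP trunk cue under these assumptions.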

Elevator scenes: A. Spheres represent marker positions on the subject's arm and trunk and the cube in front of Target 1 is the offset added to detect collision between the fingertip and the target. B. The virtual environment as it appears to the subject in the head-mounted display. The subject is cued to reach Target 3. The participant's score is indicated on the top right of each panel.

Preliminary Results

We compared motor performance and movement patterns made to the 6 targets between the VE and a physical environment (PE) (Fig. 6) in 15 patients with hemiparesis and 8 age-matched non-disabled controls. Position data (x, y, z) from the finger, arm and trunk were interpolated and filtered, and trajectories were calculated. The kinematic measures were endpoint velocity, pointing error and trajectory smoothness. Peak endpoint velocity was determined from the magnitude of the tangential velocity obtained by differentiation of index marker positional data. Endpoint error was calculated as the root-mean-square error of endpoint position with respect to the target. Trajectory smoothness was computed as the curvature index, defined as the ratio of actual endpoint path length to the length of a straight line joining the starting and end positions, such that a straight line has an index of 1 and a semicircle has an index of 1.57 [16].
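The three kinematic measures can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, differentiation uses central differences via `numpy.gradient`, the RMS error is taken over trials, and the interpolation and filtering steps are omitted.

```python
import numpy as np


def peak_endpoint_velocity(positions, sample_rate_hz):
    """Peak tangential speed from an (n_samples, 3) array of marker positions."""
    positions = np.asarray(positions, dtype=float)
    velocity = np.gradient(positions, 1.0 / sample_rate_hz, axis=0)
    return float(np.linalg.norm(velocity, axis=1).max())


def endpoint_rms_error(endpoints, target):
    """RMS distance between final positions (n_trials, 3) and the target."""
    diffs = np.asarray(endpoints, dtype=float) - np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diffs ** 2, axis=1))))


def curvature_index(positions):
    """Path length divided by the straight-line start-to-end distance."""
    positions = np.asarray(positions, dtype=float)
    path_length = np.linalg.norm(np.diff(positions, axis=0), axis=1).sum()
    chord = np.linalg.norm(positions[-1] - positions[0])
    return float(path_length / chord)
```

A densely sampled semicircular path gives a curvature index close to π/2 ≈ 1.57 and a straight path gives 1, matching the reference values quoted above.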

Fig. 6 shows mean endpoint trajectories for one patient with moderate hemiparesis (A) and one non-disabled subject (B) reaching to the 3 lower targets in both environments. The non-disabled subject made movements twice as fast as the patient. In both subjects, movement speed was lower in the VE. Endpoint precision was comparable, ranging from 257 to 356 mm in the PE and 275 to 370 mm in the VE for the non-disabled subject, and from 263 to 363 mm in the PE and 275 to 379 mm in the VE for the patient. Movements tended to be less precise and more curved in the VE compared to the PE (curvature index: non-disabled-PE: 1.02–1.03; VE: 1.04–1.05; patient-PE: 1.15–1.22; VE: 1.16–1.32). Results suggest some differences in movement performance in a VE compared to a PE of similar physical dimensions. From a usability standpoint, only 2 patients of those screened could not use the HMD. Of those who participated, all reported that the VE was more enjoyable and motivating than the PE and that it encouraged them to do more practice.

Conclusion

A VR system was developed to study effects of enhanced feedback on motor learning and arm recovery in patients with neurological dysfunction. Effects will be contrasted with those from practice in similarly constructed PEs using different types of feedback.

References

1. Carod-Artal J, Egido JA, Gonzalez JL, Varela de Seijas E: Quality of life among stroke survivors evaluated 1 year after stroke: experience of a stroke unit. Stroke 2000, 31: 2995-3000.

2. Olsen TS: Arm and leg paresis as outcome predictors in stroke rehabilitation. Stroke 1990, 21: 247-251.

3. Teasell R, Bayona NA, Bitensky J: Plasticity and reorganization of the brain post stroke. Top Stroke Rehabil 2005, 12: 11-26.

4. Nudo RJ, Milliken G: Reorganization of movement representations in primary motor cortex following focal ischemic infarcts in adult squirrel monkeys. J Neurophysiol 1996, 75: 2144-2149.

5. Cirstea MC, Levin MF: Compensatory strategies for reaching in stroke. Brain 2000, 123: 940-953. 10.1093/brain/123.5.940

6. Cirstea MC, Ptito A, Levin MF: Effect of type of feedback and cognitive impairment in arm motor skill re-acquisition in stroke. Stroke 2006, 37: 1237-1242. 10.1161/01.STR.0000217417.89347.63

7. Allred RP, Maldonado MA, Hsu JE, Jones TA: Training the "less-affected" forelimb after unilateral cortical infarcts interferes with functional recovery of the impaired forelimb in rats. Restor Neurol Neurosci 2005, 23: 297-302.

8. Taub E, Miller NE, Novack TA, et al.: Technique to improve chronic motor deficit after stroke. Arch Phys Med Rehab 1993, 74(4): 347-354.

9. Ada L, Canning C, Carr JH, Kilbreath SL, Shepherd RB: Task specific training of reaching and manipulation. In Insights into Grasp and Reach Movements. Edited by: Bennett KMB, Castiello U. Cambridge: Elsevier; 1994: 239-265.

10. Levin MF: Should stereotypic movement synergies seen in hemiparetic patients be considered adaptive? Behav Brain Sci 1997, 19: 79-80.

11. Michaelsen SM, Dannenbaum R, Levin MF: Task-specific training with trunk restraint on arm recovery in stroke: randomized control trial. Stroke 2006, 37: 186-192. 10.1161/01.STR.0000196940.20446.c9

12. Cirstea MC, Levin MF: Improvement in arm movement patterns and endpoint control depends on type of feedback during practice in stroke survivors. Neurorehabil Neural Repair 2007, 21: 1-14. 10.1177/15459683071112010206

13. Stanton D, Foreman N, Wilson PN: Uses of virtual reality in clinical training: developing the spatial skills of children with mobility impairments. Stud Health Technol Informatics 1998, 58: 219-232.

14. McNeill MDJ, Pokluda L, McDonough SM, Crosbie J: Immersive virtual reality for upper limb rehabilitation following stroke. Proceedings of IEEE International Conference on Systems, Man and Cybernetics 2004.

15. Levin MF, Cirstea MC, Michaelsen SM, Roby-Brami A: Use of trunk for reaching targets placed within and beyond the reach in adult hemiparesis. Exp Brain Res 2002, 143: 171-180. 10.1007/s00221-001-0976-6

16. Archambault P, Pigeon P, Feldman AG, Levin MF: Recruitment and sequencing of different degrees of freedom during pointing movements involving the trunk in healthy and hemiparetic subjects. Exp Brain Res 1999, 126(1): 55-67. 10.1007/s002210050716

Acknowledgements

Supported by Canadian Institutes of Health Research (CIHR) and Canadian Foundation for Innovation (CFI). Thanks to Eric Johnstone and Christian Beaudoin for construction of the PE and VE respectively and to participants of preliminary experiments. Consent obtained from LAK for Fig. 1.

Rights and permissions

Open Access. This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Subramanian, S., Knaut, L.A., Beaudoin, C. et al. Virtual reality environments for post-stroke arm rehabilitation. J NeuroEngineering Rehabil 4, 20 (2007). https://doi.org/10.1186/1743-0003-4-20
