Abstract

The problem of blind source separation (BSS) of convolved acoustic signals is of great interest for many classes of applications. Due to the convolutive mixing process, the source separation is performed in the frequency domain, using independent component analysis (ICA). However, frequency domain BSS involves several major problems that must be solved. One of these is the permutation problem. The permutation ambiguity of ICA needs to be resolved so that each separated signal contains the frequency components of only one source signal. This article presents a class of methods for solving the permutation problem based on information theoretic distance measures. The proposed algorithms have been tested on different real-room speech mixtures with different reverberation times in conjunction with different ICA algorithms.

1 Introduction

Blind source separation (BSS) is a technique for recovering source signals using only observed mixtures when both the mixing process and the sources are unknown. Owing to its many applications, for example in medical and speech signal processing, BSS has gained considerable attention. This article considers BSS for acoustic signals observed in a real environment, i.e., convolutive mixtures, focusing in particular on speech signals. In recent years, the problem has been widely studied and a number of different approaches have been proposed [1, 2]. Many state-of-the-art unmixing methods for acoustic signals are based on independent component analysis (ICA) in the frequency domain, where the convolutions of the source signals with the room impulse responses are reduced to multiplications with the corresponding transfer functions. Thus, an individual instantaneous ICA problem arises for each frequency bin [2].

Because ICA recovers the independent components only up to an arbitrary ordering (and scaling), obtaining a consistent ordering of the recovered signals is highly unlikely. In the case of frequency-domain source separation, this means that the ordering of the outputs may change from one frequency bin to the next. In order to estimate the source signals correctly in the time domain, all separated frequency bins need to be put in a consistent order. This is known as the permutation problem.

Several classes of algorithms exist for solving the permutation problem. The approaches presented in [3–6] try to find the permutations by considering cross statistics (such as cross-correlations or cross-cumulants) of the spectral envelopes of adjacent frequency bins. In [7], algorithms were proposed that make use of the spectral distance between neighboring bins and try to keep the impulse responses of the mixing filters short, which corresponds to smooth transfer functions of the mixing system in the frequency domain. The algorithm proposed by Kamata et al. [8] solves the problem using the continuity in power between adjacent frequency components of the same source. A similar method was presented by Pham et al. [9]. Baumann et al. [10] proposed a solution that compares the directivity patterns resulting from the estimated demixing matrix in each frequency bin. Similar algorithms were presented in [11–13]. In [14], it was suggested to solve the problem using the direction of arrival (DOA) of the source signals, determined from the estimated mixing matrices. The approach in [15] exploits the continuity of the frequency response of the mixing filter. Similar approaches were presented in [16], using the minimum of the L1-norm of the resulting mixing filter, and in [17], using the minimum distance between adjacent filter coefficients. In [18], the authors suggest using the cosine between the demixing coefficients of different frequencies as a cost function. Sawada et al. [19] proposed an approach based on clustering the basis vectors of the normalized estimated mixing matrices. In [20], a hybrid approach combines spectral continuity, temporal envelope and beamforming alignment with a psychoacoustic post-filter, and in [21] the permutation problem was solved using a maximum-likelihood ratio between adjacent frequency bins.

However, the complexity of the solution grows with the number of independent components. This is due not only to the factorial increase in the number of permutations to be considered, but also to the degradation of ICA performance. Consequently, not all of the approaches mentioned above perform equally well as the number of sources increases.

The goal of this article is to investigate the usefulness of information theoretic distance measures for resolving the permutation ambiguity. For this purpose, it is assumed that the amplitudes of the estimated independent signals follow a Rayleigh distribution [22] and that the logarithms of the amplitudes follow a generalized Gaussian distribution (GGD). The approach in [23] is based on a similar assumption, namely that the extracted signals are generalized Gaussian distributed; its authors handle the problem by comparing the GGD parameters of each frequency bin. However, the resulting algorithm solves the permutation problem only partially and must be combined with another approach, for instance [24] (endnote a). In contrast, the algorithms proposed in this article handle the problem in a self-contained way and need not be complemented by other approaches.

The resulting approaches will be tested on different speech mixtures recorded in real environments with different reverberation times in combination with different ICA algorithms, such as JADE [25], INFOMAX [4, 26], and FastICA [27, 28].

2 Problem formulation

This section introduces the problem of blind separation of acoustic signals.

First, a general situation is considered. In a reverberant (real) room, N acoustic signals s(t) = [s1(t), ..., sN(t)] are simultaneously active (t represents the time index). The source vector s(t) is recorded with M microphones placed in the room, resulting in an observation vector x(t) = [x1(t), ..., xM(t)]. Due to propagation delays and signal reflections, the mixture x(t) is the result of a convolution of the source signals s(t) with an unknown filter tensor $\mathcal{H} = [\mathbf{H}_1, \ldots, \mathbf{H}_K]$, where $\mathbf{H}_k$ is the k-th (k ∈ [1...K]) M × N matrix of filter coefficients and K is the filter length. This problem can be summarized by

$$\mathbf{x}(t) = \sum_{k=1}^{K} \mathbf{H}_k\, \mathbf{s}(t-k+1) + \mathbf{n}(t). \qquad (1)$$

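The term n(t) denotes the additive sensor noise. The problem is now to find a demixing filter $\mathcal{W} = [\mathbf{W}_1, \mathbf{W}_2, \ldots]$ such that, by applying it to the observation vector x(t), the source signals can be estimated via

$$\mathbf{y}(t) = \sum_{k} \mathbf{W}_k\, \mathbf{x}(t-k+1). \qquad (2)$$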

In other words, for the estimated vector y(t) and the source vector s(t), y(t) ≈ s(t) should hold.

This problem is also known as the cocktail-party problem. A common way to deal with it is to reduce it to a set of instantaneous separation problems, for which efficient approaches exist.

For this purpose, the time-domain observation vectors x(t) are transformed into a frequency-domain time series by means of the short-time Fourier transform (STFT)

$$\mathbf{X}(\Omega,\tau) = \sum_{t} \mathbf{x}(t)\, w(t-\tau R)\, e^{-j\Omega t}, \qquad (3)$$

where Ω is the angular frequency, w(t) is a window function (e.g., a Hanning window) of length NFFT, τ is the frame index, which corresponds to the time shift of the window, and R is the shift size, in samples, between successive windows [29]. Transforming Equation (1) into the frequency domain (approximately) reduces the convolutions to multiplications with the corresponding transfer functions, so that for each frequency bin an individual instantaneous ICA problem

$$\mathbf{X}(\Omega,\tau) = \mathbf{A}(\Omega)\,\mathbf{S}(\Omega,\tau) + \mathbf{N}(\Omega,\tau) \qquad (4)$$

arises. Here, A(Ω) is the mixing matrix in the frequency domain, S(Ω, τ) = [S1(Ω, τ), ..., SN(Ω, τ)] represents the source signals, X(Ω, τ) = [X1(Ω, τ), ..., XM(Ω, τ)] denotes the observed signals, and N(Ω, τ) is the frequency-domain representation of the additive sensor noise. To reconstruct the source signals, an unmixing matrix $\mathbf{W}(\Omega) \approx \mathbf{P}^{-1}(\Omega)\,\mathbf{A}^{-1}(\Omega)$ is derived using complex-valued ICA, so that

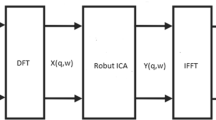

holds. Here, Ŷ(Ω, τ) = [Ŷ1(Ω, τ), ..., ŶN(Ω, τ)] is the time-frequency representation of the permuted ICA outputs. To solve the permutation problem, a correction matrix P(Ω) has to be found for each frequency bin, which is the main topic of this article. The data flow of the whole application is shown in Figure 1.
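As an illustration of Equation (3), the following is a minimal Python sketch, not the implementation used in this article. It assumes a periodic Hann window, keeps only the bins k = 0, ..., NFFT/2 of a real signal, measures time relative to the start of each frame (this changes only the phase of X(Ω, τ), not its magnitude), and uses a direct DFT instead of an FFT for readability. The function names `hann` and `stft` are hypothetical.

```python
import cmath
import math


def hann(n_fft):
    """Periodic Hann window of length n_fft."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * t / n_fft) for t in range(n_fft)]


def stft(x, n_fft, hop):
    """Return frames[tau][k] = sum_t x(tau*hop + t) w(t) exp(-j 2 pi k t / n_fft).

    Only the non-negative frequency bins k = 0 .. n_fft // 2 are kept (x is real),
    and only frames that fit completely into x are computed.
    """
    w = hann(n_fft)
    frames = []
    for start in range(0, len(x) - n_fft + 1, hop):
        seg = [x[start + t] * w[t] for t in range(n_fft)]
        frames.append([
            sum(seg[t] * cmath.exp(-2j * math.pi * k * t / n_fft) for t in range(n_fft))
            for k in range(n_fft // 2 + 1)
        ])
    return frames


# A cosine at exactly bin 4 of a 16-point frame, analysed with a hop of 4 samples.
x = [math.cos(2.0 * math.pi * 4 * t / 16) for t in range(64)]
S = stft(x, 16, 4)
```

Each entry `S[tau][k]` plays the role of X(Ωk, τ) for one microphone; collecting the M microphones per bin gives the input of the bin-wise ICA problem described above. For NFFT = 1,024, an FFT routine would be used in practice.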

3 Permutation correction

This section gives an overview of the applied permutation correction methods. To resolve the permutations, the probability density functions (pdfs) of the magnitudes, or of the logarithms of the magnitudes, of the resulting frequency bins are compared. This is based on the assumption that adjacent frequency bins of the same source signal possess similar distributions.

3.1 Speech density modeling

3.1.1 Distribution of the speech magnitudes

As shown in [22], the distribution of the magnitudes of the spectral components of speech signals can be described by the Rayleigh distribution. The pdf of the Rayleigh distribution of a random variable x is given by

$$f(x) = \frac{x}{\sigma^{2}} \exp\left(-\frac{x^{2}}{2\sigma^{2}}\right), \quad x \geq 0, \qquad (6)$$

where σ > 0 is the scale parameter, which can be estimated, e.g., with the maximum likelihood estimator [30].
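A minimal sketch of Equation (6) and its maximum likelihood estimator, assuming that the magnitudes of one separated signal in one frequency bin are given as a list of samples over the frames τ. The helper names are hypothetical; the estimator σ̂² = (1/2T) Σ x² over the T frames is the standard Rayleigh ML estimate.

```python
import math
import random


def rayleigh_pdf(x, sigma):
    """Rayleigh density f(x) = (x / sigma^2) exp(-x^2 / (2 sigma^2)) for x >= 0, else 0."""
    if x < 0:
        return 0.0
    return x / sigma**2 * math.exp(-x * x / (2.0 * sigma**2))


def rayleigh_sigma_ml(samples):
    """Maximum likelihood estimate of sigma: sigma_hat^2 = sum_t x_t^2 / (2T)."""
    return math.sqrt(sum(v * v for v in samples) / (2.0 * len(samples)))


# Magnitudes of a circular complex Gaussian with per-component std 1.5 are Rayleigh(1.5).
rng = random.Random(0)
mags = [abs(complex(rng.gauss(0.0, 1.5), rng.gauss(0.0, 1.5))) for _ in range(20000)]
sigma_hat = rayleigh_sigma_ml(mags)
```

With 20,000 synthetic frames the estimate lies close to the true value 1.5; real spectral magnitudes follow Equation (6) only approximately, particularly at low frequencies.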

For the vector of random variables x = (x1, x2,... x N ), the multivariate Rayleigh distribution can be written as follows

where

is the symmetric positive definite covariance matrix of x,

is a vector of the decorrelated random variables and is the shape parameter for the signal [31, 32] (endnote b).

3.1.2 Distribution of the logarithms of the speech magnitudes

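For the approximation of the logarithms of the speech magnitudes, the GGD is applied. The pdf of the GGD of a random variable x is given by

$$f(x) = \frac{\beta}{2a\,\Gamma(1/\beta)} \exp\left(-\left(\frac{|x-\mu|}{a}\right)^{\beta}\right), \qquad (10)$$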

where μ is the mathematical expectation of x. The scale parameter a is obtained by

$$a = \sigma \sqrt{\frac{\Gamma(1/\beta)}{\Gamma(3/\beta)}}, \qquad (11)$$

and the Gamma function is given by

$$\Gamma(z) = \int_{0}^{\infty} t^{z-1} e^{-t}\, dt. \qquad (12)$$

The parameter β describes the shape of the distribution, and σ is the standard deviation of x. Since β is unknown, it must be estimated, e.g., with the maximum likelihood estimator [33] or the moment estimator [34, 35].
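The GGD of Equations (10) and (11), together with a simple moment-matching estimate of β, can be sketched as follows. The estimator is in the spirit of the moment estimators [34, 35]: it matches the sample ratio (E|x − μ|)² / E[(x − μ)²] to its theoretical value Γ(2/β)² / (Γ(1/β) Γ(3/β)), which increases monotonically with β. Solving this by bisection is an assumption of this sketch; endnote e states that the experiments used the inverse-function approximation of [34] instead. All function names are hypothetical.

```python
import math
import random


def ggd_scale(sigma, beta):
    """Equation (11): a = sigma * sqrt(Gamma(1/beta) / Gamma(3/beta)), via log-gamma."""
    return sigma * math.exp(0.5 * (math.lgamma(1.0 / beta) - math.lgamma(3.0 / beta)))


def ggd_pdf(x, mu, sigma, beta):
    """Equation (10), parameterized by the mean mu, standard deviation sigma and shape beta."""
    a = ggd_scale(sigma, beta)
    return beta / (2.0 * a * math.gamma(1.0 / beta)) * math.exp(-(abs(x - mu) / a) ** beta)


def moment_ratio(beta):
    """(E|x - mu|)^2 / E[(x - mu)^2] of a GGD; increases monotonically with beta."""
    return math.exp(2.0 * math.lgamma(2.0 / beta) - math.lgamma(1.0 / beta) - math.lgamma(3.0 / beta))


def ggd_beta_moment(samples, lo=0.1, hi=10.0, iters=60):
    """Estimate beta by matching the sample moment ratio, solved with bisection on [lo, hi]."""
    n = len(samples)
    mu = sum(samples) / n
    m1 = sum(abs(v - mu) for v in samples) / n
    m2 = sum((v - mu) ** 2 for v in samples) / n
    target = m1 * m1 / m2
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if moment_ratio(mid) < target:
            lo = mid  # model ratio too small: the true beta is larger
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Gaussian data should give beta close to 2, Laplacian data beta close to 1.
rng = random.Random(1)
gaussian = [rng.gauss(0.0, 1.0) for _ in range(20000)]
laplacian = [rng.expovariate(1.0) * rng.choice((-1.0, 1.0)) for _ in range(20000)]
beta_gauss, beta_laplace = ggd_beta_moment(gaussian), ggd_beta_moment(laplacian)
```

For β = 2, Equation (10) reduces to the normal density with standard deviation σ, which makes a convenient sanity check.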

For the vector of random variables x = (x1, x2,... x N ), the multivariate generalized Gaussian PDF can be written as follows

where Σ is the covariance matrix of x and is a vector of the decorrelated random variables (Equation (9)) [33].

3.2 Distance measures

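Suppose the pdfs of the magnitudes in two adjacent frequency bins,

$$f_{k} = f\big(|\hat{\mathbf{Y}}(\Omega_k,\tau)|\big) \qquad (14)$$

and

$$f_{k+1} = f\big(|\hat{\mathbf{Y}}(\Omega_{k+1},\tau)|\big), \qquad (15)$$

of the separated speech signals Ŷ(Ω, τ) are known. To solve the permutation ambiguity problem, a pairwise similarity measure d(·, ·) between two pdfs must be defined, so that the overall dependence (distance) results in

$$D\big(\hat{\mathbf{Y}}^{P}(\Omega_k,\tau), \hat{\mathbf{Y}}(\Omega_{k+1},\tau)\big) = \sum_{n=1}^{N} d\big(\hat{Y}^{P}_{n}(\Omega_k,\tau), \hat{Y}_{n}(\Omega_{k+1},\tau)\big), \qquad (16)$$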

where k ∈ [1, NFFT - 1] is the frequency index,

$$\hat{\mathbf{Y}}^{P}(\Omega_k,\tau) = \pi\big(\hat{\mathbf{Y}}(\Omega_k,\tau)\big) \qquad (17)$$

is a permutation of Ŷ(Ωk, τ), π(x) denotes a permutation of the components of the vector x, and N is the number of separated signals. The total distance D between a permuted vector of frequency bins, ŶP(Ωk, τ), and the reference vector in bin k + 1 is the sum of the distances between each pair $\hat{Y}^{P}_{n}(\Omega_k,\tau)$ and $\hat{Y}_{n}(\Omega_{k+1},\tau)$.

Below, several information theoretic similarity measures that appear suitable for resolving the permutation ambiguity are considered. First, however, a definition of entropy, or "self-information", is needed.

The generalized formulation of entropy was given by Rényi and is known in information theory as the Rényi entropy [36, 37]. The Rényi differential entropy of order α, where α ≥ 0 and α ≠ 1, of a random variable with a pdf f(x) whose support is a set $\mathcal{S}$ is defined as

$$H_{\alpha}(f(x)) = \frac{1}{1-\alpha} \log \int_{\mathcal{S}} f^{\alpha}(x)\, dx. \qquad (18)$$

It can be shown that, in the limit α → 1, Hα(f(x)) converges to the Shannon entropy [37, 38],

$$H_{1}(f(x)) = -\int_{\mathcal{S}} f(x) \log f(x)\, dx. \qquad (19)$$

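Similarly to the marginal entropy above, the joint entropy of a vector of random variables x = (x1, x2, ..., xN) is defined as

$$H_{\alpha}(f(\mathbf{x})) = \frac{1}{1-\alpha} \log \int f^{\alpha}(\mathbf{x})\, d\mathbf{x}, \qquad (20)$$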

where f(x) is the multivariate pdf.
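Equations (18) and (19) can be checked numerically with the trapezoidal rule, the integration rule mentioned in Appendix 1. The sketch below assumes a univariate pdf given as a Python function and a finite interval that covers essentially all of its mass; `renyi_entropy` is a hypothetical helper. For a standard Gaussian, the known closed form Hα = ½ log(2π) + log α / (2(α − 1)) makes the convergence to the Shannon entropy ½ log(2πe) visible.

```python
import math


def trapezoid(ys, h):
    """Composite trapezoidal rule for samples ys on a uniform grid with spacing h."""
    return h * (sum(ys) - 0.5 * (ys[0] + ys[-1]))


def renyi_entropy(pdf, lo, hi, alpha, n=4001):
    """Differential Renyi entropy H_alpha of `pdf` on [lo, hi]; alpha == 1 gives Shannon."""
    h = (hi - lo) / (n - 1)
    fs = [pdf(lo + i * h) for i in range(n)]
    if alpha == 1:
        # Equation (19): -integral of f log f; points with f == 0 contribute nothing.
        return -trapezoid([f * math.log(f) if f > 0 else 0.0 for f in fs], h)
    # Equation (18)
    return math.log(trapezoid([f**alpha for f in fs], h)) / (1.0 - alpha)


def std_normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


# Renyi entropies of N(0, 1) for several orders; alpha = 0.999 is already close to alpha = 1.
entropies = {a: renyi_entropy(std_normal_pdf, -10.0, 10.0, a) for a in (0.5, 0.999, 1, 2)}
```

The values decrease with growing α, and the entry for α = 0.999 differs from the Shannon value only in the fourth decimal place.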

At this point it is possible to introduce the necessary dependence measures that will be used as the pairwise similarity measure d(·, ·) in Equation (16):

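- Rényi generalized divergence between two distributions f(x) and g(x) of order α, where α ≥ 0, is defined [36] as

$$d_{\alpha}(f(x)\,\|\,g(x)) = \frac{1}{\alpha-1} \log \int f^{\alpha}(x)\, g^{1-\alpha}(x)\, dx. \qquad (21)$$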

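Special cases of Equation (21) [39] are the

  - Bhattacharyya coefficient (α = 0.5)

$$d_{0.5}(f(x)\,\|\,g(x)) = -2 \log \int \sqrt{f(x)\, g(x)}\, dx, \qquad (22)$$

  - Kullback-Leibler divergence (α → 1)

$$d_{1}(f(x)\,\|\,g(x)) = \int f(x) \log \frac{f(x)}{g(x)}\, dx, \qquad (23)$$

  - log distance (α = 2)

$$d_{2}(f(x)\,\|\,g(x)) = \log E\left[\frac{f(x)}{g(x)}\right], \qquad (24)$$

    where E[·] denotes the statistical expectation according to f(x),

  - and log of the maximum ratio (α → ∞)

$$d_{\infty}(f(x)\,\|\,g(x)) = \log \sup_{x} \frac{f(x)}{g(x)}. \qquad (25)$$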

Rényi's divergence describes the similarity between two distributions: the smaller the Rényi divergence, the more similar the distributions are. Its main advantage is its low computational cost. A drawback is that the measure is neither symmetric, i.e., in general dα(f(x)||g(x)) ≠ dα(g(x)||f(x)), nor bounded, so infinite values can arise.

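- Mutual information for a vector of random variables X = (X1, X2, ..., XK) is defined as the Kullback-Leibler divergence between the multivariate distribution fX(x) and the product of the marginal distribution functions,

$$\begin{aligned} I(\mathbf{X}) &= d_{1}\Big(f_{\mathbf{X}}(\mathbf{x})\,\Big\|\,\prod_{i=1}^{K} f_{X_i}(x_i)\Big) && (26)\\ &= \int f_{\mathbf{X}}(\mathbf{x}) \log \frac{f_{\mathbf{X}}(\mathbf{x})}{\prod_{i=1}^{K} f_{X_i}(x_i)}\, d\mathbf{x} && (27)\\ &= -H_{1}(f_{\mathbf{X}}(\mathbf{x})) - \sum_{i=1}^{K} \int f_{X_i}(x_i) \log f_{X_i}(x_i)\, dx_i && (28)\\ &= \sum_{i=1}^{K} H_{1}(f_{X_i}(x_i)) - H_{1}(f_{\mathbf{X}}(\mathbf{x})), && (29) \end{aligned}$$

where $H_{1}(f_{X_i}(x_i))$ is the marginal entropy of Xi and H1(fX(x)) is the joint entropy of X.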

Mutual information quantifies the amount of information shared by the random variables in X. Since its computation takes the joint pdf fX(x) into account, i.e., the dependencies between the variables are considered, mutual information is a stronger cost function than the Rényi divergence, and better results are to be expected when it is used to resolve the permutations.

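- The Jensen-Rényi divergence of the vector of random variables X = (X1, X2, ..., XK) of order α, where α ≥ 0, is defined [40] as

$$JR_{\alpha}\big(f_{X_1}, \ldots, f_{X_K}\big) = H_{\alpha}\Big(\frac{1}{K}\sum_{i=1}^{K} f_{X_i}(x)\Big) - \frac{1}{K}\sum_{i=1}^{K} H_{\alpha}\big(f_{X_i}(x)\big). \qquad (30)$$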

The Jensen-Rényi divergence is based on the Kullback-Leibler divergence and can be seen as an extension of it, with the difference that it is symmetric (endnote c) and always finite. On the other hand, because the distributions of the random variables are compared indirectly through their average, the Jensen-Rényi divergence can be seen as an alternative to mutual information [41]. In fact, as shown in [42], both measures exhibit similar characteristics.

- The modified Jensen-Rényi divergence. The Jensen-Rényi divergence of Equation (30) measures the distance between two distributions fX(x) and fY(x) with respect to a third point in the distribution space. Here, the third point is chosen as the average of the two distributions. This choice is justified by the concavity of the entropy in the distribution space,

$$H_{\alpha}\Big(\frac{f_X(x) + f_Y(x)}{2}\Big) \geq \frac{1}{2}\Big(H_{\alpha}\big(f_X(x)\big) + H_{\alpha}\big(f_Y(x)\big)\Big). \qquad (31)$$

In principle, the distance can be defined with respect to any other point for which the concavity assumption holds. Such a point can be chosen as an average over the random variables whose distributions are currently being analyzed.

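For the entropy of a random variable X,

$$H_{\alpha}(f_X) = \frac{\alpha}{1-\alpha} \log \|f_X\|_{\alpha} \qquad (32)$$

holds, and for the entropy of the sum of two independent random variables X and Y [38, 43],

$$H_{\alpha}(f_{X+Y}) = \frac{\alpha}{1-\alpha} \log \|f_X * f_Y\|_{\alpha}, \qquad (33)$$

where ||·||α denotes the α-norm operator and ∗ stands for convolution. Using the entropy power inequality [38] for the case α = 1, and extending Young's inequality [44] to the case α ≠ 1, it can be shown [45] that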

Since

holds, the inequality in Equation (34) can be rewritten as

At this point, a modification of the Jensen-Rényi divergence is therefore proposed. This distance measure for the vector of random variables X = (X1, X2, ..., XK) of order α, where α ≥ 0, is defined as

where $\bar{X} = \frac{1}{K}\sum_{i=1}^{K} X_i$ is the mean of the random variables. In the way the modified Jensen-Rényi divergence is used here, this distance measure describes the amount of new information added to a spectrogram when an adjacent frequency bin Ŷ(Ωk+1, τ) is included: the less new information is added, the closer the frequency bins are. This modification has a lower computational burden than the classical Jensen-Rényi divergence, since only one pdf, that of $\bar{X}$, has to be calculated instead of K in the Jensen-Rényi divergence. Furthermore, an analytical solution exists for this entropy, which improves the accuracy of the results.
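To make the Rényi family of Equation (21), its asymmetry and the symmetric Jensen-Rényi alternative more concrete, the following Python sketch compares two Rayleigh pdfs. It is an illustration under stated assumptions rather than the code used for the experiments: integrals are evaluated with the trapezoidal rule on a fixed grid x ∈ [0, 30] (adequate only for σ up to roughly 3), the Jensen-Rényi divergence is shown only for α = 1 with K = 2 and equal weights, and the closed form in `renyi_div_rayleigh` was derived for this example by integrating f^α g^(1−α) directly. Mutual information and the modified divergence of Equation (37) are not covered. All function names are hypothetical.

```python
import math

GRID_STEP = 1e-3
GRID = [i * GRID_STEP for i in range(30001)]  # x in [0, 30]


def rayleigh_pdf(x, s):
    return x / s**2 * math.exp(-x * x / (2.0 * s**2)) if x >= 0 else 0.0


def trapezoid(ys, h=GRID_STEP):
    return h * (sum(ys) - 0.5 * (ys[0] + ys[-1]))


def renyi_div(f, g, alpha):
    """Numerical Renyi divergence d_alpha(f || g), Equation (21), for alpha != 1."""
    ys = []
    for x in GRID:
        fx, gx = f(x), g(x)
        # Rayleigh densities share the support x > 0; grid points where either
        # density is zero (x = 0 or floating-point underflow) are skipped.
        ys.append(fx**alpha * gx ** (1.0 - alpha) if fx > 0 and gx > 0 else 0.0)
    return math.log(trapezoid(ys)) / (alpha - 1.0)


def renyi_div_rayleigh(s1, s2, alpha):
    """Closed form of d_alpha(Rayleigh(s1) || Rayleigh(s2)) for alpha != 1."""
    denom = alpha * s2**2 + (1.0 - alpha) * s1**2
    if denom <= 0:
        return math.inf  # the integral diverges
    return math.log(s1 ** (2 - 2 * alpha) * s2 ** (2 * alpha) / denom) / (alpha - 1.0)


def kl_rayleigh(s1, s2):
    """Kullback-Leibler divergence (alpha -> 1, Equation (23)) between Rayleigh pdfs."""
    return math.log(s2**2 / s1**2) + s1**2 / s2**2 - 1.0


def shannon(p):
    return -trapezoid([v * math.log(v) if v > 0 else 0.0 for v in map(p, GRID)])


def jensen_renyi_1(f, g):
    """Equation (30) with alpha = 1, K = 2 and equal weights."""
    return shannon(lambda x: 0.5 * f(x) + 0.5 * g(x)) - 0.5 * (shannon(f) + shannon(g))


f = lambda x: rayleigh_pdf(x, 1.0)
g = lambda x: rayleigh_pdf(x, 1.5)
```

For σ = 1 and σ = 1.5, the numerical and closed-form values agree, the two Kullback-Leibler directions differ, and the Jensen-Rényi value is identical for both argument orders and stays below log 2, which illustrates the symmetry and boundedness discussed above.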

3.3 The Permutation correction algorithm

In this section, the actual permutation correction algorithm is discussed. As mentioned before, it is assumed that subsequent frequency bins of the same source signal possess similar distributions. The similarity between the frequency bins is measured by applying the measures given in Equations (21), (29), (30), and (37) in the optimization of Equation (16).

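However, as mentioned in [14], using only one frequency bin as the reference for the correction risks misaligning the algorithm, since an error in one bin is propagated to all subsequently corrected bins. To avoid this problem, the approach presented in [5] uses an average of the already corrected frequency bins. Accordingly, Equation (16) is redefined as

$$D\Big(\hat{\mathbf{Y}}^{P}(\Omega_k,\tau), \frac{1}{L}\sum_{l=1}^{L} \hat{\mathbf{Y}}(\Omega_{k+l},\tau)\Big) = \sum_{n=1}^{N} d\Big(\hat{Y}^{P}_{n}(\Omega_k,\tau), \frac{1}{L}\sum_{l=1}^{L} \hat{Y}_{n}(\Omega_{k+l},\tau)\Big), \qquad (38)$$

where L is the number of already corrected frequency bins used for the averaging. The correction algorithm can then be implemented as described in Algorithm 1.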

Algorithm 1

1. Initialization: start with the frequency bin k = NFFT/2 (endnote d).
2. Estimate the parameters of the Rayleigh distribution of |Ŷ(Ωk, τ)| and of the average of the L already corrected bins, using Equations (6)-(9).
3. Calculate D as defined in Equation (38) for all possible permutations of |Ŷ(Ωk, τ)|.
4. Choose the permutation π+(|Ŷ(Ωk, τ)|) with the most dependent value of D, i.e., the smallest distance or, for mutual information, the largest value (endnote f).
5. Reorder the current frequency bin according to the best permutation π+(|Ŷ(Ωk, τ)|).
6. Decrement k and, if k ≠ 0, go to Step 2.
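A compact Python sketch of Algorithm 1, written under simplifying assumptions. Each bin holds the magnitude sequences of the N separated signals; bins are indexed 0, ..., K − 1 and the top bin serves as the reference order (cf. endnote d). The pairwise measure d(·, ·) is a stand-in: the symmetrised Kullback-Leibler divergence between the maximum likelihood Rayleigh fits, which uses only σ and therefore ignores the temporal structure discussed in Appendix 2. It is not the mutual information or modified Jensen-Rényi divergence that performed best in the experiments. `correct_permutations`, `sym_kl_rayleigh` and their parameters are hypothetical.

```python
import itertools
import math
import random


def sigma_ml(mags):
    """Rayleigh ML fit: sigma^2 = sum(x^2) / (2T)."""
    return math.sqrt(sum(m * m for m in mags) / (2.0 * len(mags)))


def sym_kl_rayleigh(s1, s2):
    """Stand-in for d(.,.): KL(s1||s2) + KL(s2||s1) between Rayleigh fits."""
    r = (s1 / s2) ** 2
    return r + 1.0 / r - 2.0


def correct_permutations(bins, L=3, dist=sym_kl_rayleigh):
    """Greedy correction in the spirit of Algorithm 1.

    bins[k][n] is the magnitude sequence |Y_n(Omega_k, tau)| over the frames tau.
    The highest bin keeps its order (the reference); lower bins are aligned one by
    one with the average of up to L already corrected higher bins (cf. Eq. (38)).
    """
    K, N = len(bins), len(bins[0])
    out = [None] * K
    out[K - 1] = list(bins[K - 1])
    perms = [None] * K
    perms[K - 1] = tuple(range(N))
    for k in range(K - 2, -1, -1):
        refs = out[k + 1 : k + 1 + L]
        T = len(bins[k][0])
        avg = [[sum(r[n][t] for r in refs) / len(refs) for t in range(T)] for n in range(N)]
        ref_sig = [sigma_ml(a) for a in avg]
        cur_sig = [sigma_ml(m) for m in bins[k]]
        # Exhaustive search over all N! permutations; p[n] is the input assigned to output n.
        best = min(
            itertools.permutations(range(N)),
            key=lambda p: sum(dist(cur_sig[p[n]], ref_sig[n]) for n in range(N)),
        )
        out[k] = [bins[k][i] for i in best]
        perms[k] = best
    return out, perms


# Synthetic check: two sources with Rayleigh scales 1 and 3, randomly swapped per bin.
rng = random.Random(0)
K_BINS, FRAMES = 8, 400
bins = []
for k in range(K_BINS):
    mags = [[abs(complex(rng.gauss(0, s), rng.gauss(0, s))) for _ in range(FRAMES)]
            for s in (1.0, 3.0)]
    swapped = k < K_BINS - 1 and rng.random() < 0.5
    bins.append(mags[::-1] if swapped else mags)
corrected, chosen = correct_permutations(bins)
```

Here `chosen[k]` plays the role of π+ for bin k. Other distances on the fitted σ values can be passed as `dist`; measures that need joint statistics, such as mutual information, would instead have to receive the magnitude sequences themselves.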

The same scheme can be applied to the logarithms of the spectral magnitudes of the signals, log |Ŷ(Ωk, τ)|, instead of |Ŷ(Ωk, τ)|, using generalized Gaussian instead of Rayleigh distributions. This results in Algorithm 2.

Algorithm 2

1. Initialization: start with the frequency bin k = NFFT/2.
2. Estimate the GGD parameters of log |Ŷ(Ωk, τ)| and of the average of the L already corrected bins, using Equations (10)-(13) (endnote e).
3. Calculate D as defined in Equation (38) for all possible permutations of log |Ŷ(Ωk, τ)|.
4. Choose the permutation π+(log |Ŷ(Ωk, τ)|) with the most dependent value of D, i.e., the smallest distance or, for mutual information, the largest value.
5. Reorder the current frequency bin according to the best permutation π+(log |Ŷ(Ωk, τ)|).
6. Decrement k and, if k ≠ 0, go to Step 2.
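For Algorithm 2, only the feature and the model change. The Python sketch below shows the permutation choice for a single bin in the log-magnitude domain. As a simplifying assumption it fixes β = 2, so each GGD fit reduces to a Gaussian (mean and variance of log |Ŷ|) and the stand-in distance is the symmetrised Kullback-Leibler divergence between Gaussians; the article instead estimates β (endnote e). `best_log_permutation`, `gauss_fit` and `eps` are hypothetical.

```python
import itertools
import math


def gauss_fit(values):
    """Mean and variance of the samples (GGD fit with beta fixed to 2)."""
    n = len(values)
    mu = sum(values) / n
    var = sum((v - mu) ** 2 for v in values) / n
    return mu, max(var, 1e-12)  # floor avoids division by zero for constant input


def sym_kl_gauss(p, q):
    """KL(p||q) + KL(q||p) for Gaussians p = (mu1, var1), q = (mu2, var2)."""
    (m1, v1), (m2, v2) = p, q
    d2 = (m1 - m2) ** 2
    return (v1 + d2) / (2.0 * v2) + (v2 + d2) / (2.0 * v1) - 1.0


def best_log_permutation(current, reference, eps=1e-12):
    """Permutation p (output n takes input p[n]) that best matches `reference`.

    Both arguments are lists of N magnitude sequences; the logarithms are taken
    here, with eps guarding against log(0).
    """
    cur = [gauss_fit([math.log(m + eps) for m in seq]) for seq in current]
    ref = [gauss_fit([math.log(m + eps) for m in seq]) for seq in reference]
    n = len(current)
    return min(
        itertools.permutations(range(n)),
        key=lambda p: sum(sym_kl_gauss(cur[p[i]], ref[i]) for i in range(n)),
    )
```

In a full implementation, this choice would be repeated for every bin from k = NFFT/2 downwards, with `reference` built from the L already corrected bins.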

Algorithms 1 and 2 are used in the following sections for the experimental comparison of the distance measures given in Equations (21), (29), (30), and (37).

4 Experiments and results

4.1 Conditions

For the evaluation of the proposed approaches, two different sets of recordings were used. For the first data set, different audio files from the TIDigits database [46] were used, and mixtures of up to four speakers were recorded under real room conditions. The distance between the speakers and the center of a linear microphone array was varied between 0.9 and 2 m. The second data set was recorded by Sawada [47] and also contains mixtures of up to four speakers. All mixtures were recorded with the same number of microphones as speakers (M = N); that is, each mixture poses a determined problem, so that classical ICA algorithms for source separation can be applied. The experimental setups are shown schematically in Figure 2, and the experimental conditions are summarized in Tables 1, 2, 3, and 4.

4.2 Parameter settings

The algorithms were tested on all recordings, which were first transformed to the frequency domain at a resolution of NFFT = 1,024. For calculating the spectrogram, the signals were divided into overlapping frames using a Hanning window with an overlap of 3/4 · NFFT, i.e., a frame shift of R = NFFT/4 = 256 samples.
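These frame settings translate into a few derived quantities, computed by the small Python helper below. The sampling rate is not stated in this section (the recording conditions are listed in Tables 1-4), so the 16 kHz used in the example is an assumption for illustration only; `stft_layout` is a hypothetical helper.

```python
def stft_layout(n_fft, overlap_fraction, fs_hz, n_samples):
    """Return (hop R, number of bins, bin spacing in Hz, number of full frames)."""
    hop = round(n_fft * (1.0 - overlap_fraction))  # R = NFFT - overlap
    n_bins = n_fft // 2 + 1                         # bins 0 .. NFFT/2 of a real signal
    bin_hz = fs_hz / n_fft                          # spacing between adjacent bins
    n_frames = 0 if n_samples < n_fft else 1 + (n_samples - n_fft) // hop
    return hop, n_bins, bin_hz, n_frames


# NFFT = 1,024 with 3/4 overlap; 5 s of audio at an assumed 16 kHz.
print(stft_layout(1024, 0.75, 16000, 5 * 16000))  # → (256, 513, 15.625, 309)
```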

4.3 ICA performance measurement

To assess the effectiveness of the proposed algorithms, the improvement Δ SIR in the signal-to-interference ratio

was used as a measure of the separation performance, and the signal-to-distortion ratio (SDR)

as a measure of the signal quality. Here, $y_{ij}(t)$ is the i-th separated signal when only the source sj is active, and $x_{kj}(t)$ is the observation obtained by microphone k when only sj is active. The parameters α and δ are chosen to optimally compensate for the amplitude and phase differences between these two signals [19].

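For measuring the performance of the proposed algorithms over all speakers present in a mixture recording, the average Δ SIR

$$\overline{\Delta\mathrm{SIR}} = \frac{1}{N}\sum_{i=1}^{N} \Delta\mathrm{SIR}_{i} \qquad (41)$$

and SDR

$$\overline{\mathrm{SDR}} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{SDR}_{i} \qquad (42)$$

were used, where N is the number of speakers in the considered mixture.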
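The evaluation measures can be sketched in Python as follows. The sketch assumes the definitions commonly used in the literature following [19], which may differ in detail from Equations (39) and (40): Δ SIR_i = SIR_out − SIR_in, with SIR_out = 10 log10(Σ_t y_ii(t)² / Σ_t (Σ_{j≠i} y_ij(t))²) and SIR_in computed in the same way from the microphone signals x_kj(t); and SDR_i = 10 log10(Σ_t x_ki(t)² / min over α, δ of Σ_t (x_ki(t) − α y_ii(t − δ))²), where the gain α is fitted by least squares and the integer delay δ is searched over a small range. All function names and the `max_delay` parameter are hypothetical.

```python
import math


def energy(sig):
    return sum(v * v for v in sig)


def sir_db(target, interferers):
    """10 log10 of target energy over the energy of the summed interferers."""
    interference = [sum(vals) for vals in zip(*interferers)]
    return 10.0 * math.log10(energy(target) / energy(interference))


def delta_sir_db(y, x, i, k):
    """Delta SIR of output i, using microphone k as the input reference.

    y[i][j]: output i when only source j is active; x[k][j]: microphone k when only
    source j is active. All signals are equally long lists of samples.
    """
    others = [j for j in range(len(y[i])) if j != i]
    sir_out = sir_db(y[i][i], [y[i][j] for j in others])
    sir_in = sir_db(x[k][i], [x[k][j] for j in others])
    return sir_out - sir_in


def sdr_db(y_ii, x_ki, max_delay=10):
    """SDR with a least-squares gain and an integer delay in [-max_delay, max_delay]."""
    best_err = math.inf
    for d in range(-max_delay, max_delay + 1):
        # Delayed copy y_d(t) = y_ii(t - d), zero outside the available samples.
        yd = [y_ii[t - d] if 0 <= t - d < len(y_ii) else 0.0 for t in range(len(x_ki))]
        den = energy(yd)
        if den == 0.0:
            continue
        gain = sum(a * b for a, b in zip(x_ki, yd)) / den
        best_err = min(best_err, sum((a - gain * b) ** 2 for a, b in zip(x_ki, yd)))
    if best_err == math.inf:
        raise ValueError("y_ii contains no signal energy")
    return math.inf if best_err == 0.0 else 10.0 * math.log10(energy(x_ki) / best_err)
```

The averages in Equations (41) and (42) then follow as the mean of `delta_sir_db(y, x, i, k)` and `sdr_db(y[i][i], x[k][i])` over all N outputs.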

4.4 Experimental results

In this section the experimental results of the signal separation will be compared. All the mixtures from Tables 1, 2, 3, and 4 were separated by JADE, INFOMAX, and the FastICA algorithm and the permutation problem was solved using either Algorithm 1 or 2 from Section 3.3 and distance measures from Equations (21), (29), (30), and (37). For each result the performance is calculated using Equations (39) and (40).

Figures 3 and 4 show the behavior of three different approaches in terms of Δ SIR and SDR (Equations (41) and (42)) over the mixtures, for the INFOMAX algorithm.

In Tables 5 and 6, the separation results are averaged for each distance measure for the mixtures of 2, 3, and 4 signals separately. M2 in Tables 5 and 6 contains the average Δ SIR/SDR of the separation results of Mix. 1, Mix. 4, Mix. 7 and Mix. 10, cf. Tables 1, 2, 3, and 4. Similarly, M3 contains the separation results of Mix. 2, Mix. 5, Mix. 8 and Mix. 11, and M4 those of Mix. 3, Mix. 6, Mix. 9 and Mix. 12.

4.5 Discussion

The results show the usefulness of the proposed method for permutation correction, although not all of the applied distance measures perform equally well. The best results were achieved using mutual information and the modified Jensen-Rényi divergence, while the results obtained using the generalized Rényi divergence are rather poor, especially for α = 2. Of all the applied distance measures based on the generalized Rényi divergence, the best performance was achieved with the Bhattacharyya coefficient, i.e., α = 0.5. A similar tendency can be seen for the "classical" Jensen-Rényi divergence, where the best results were achieved using α = 1. In contrast, correction based on mutual information and the modified Jensen-Rényi divergence provides consistently good results.

The poor performance of the generalized Rényi divergence can be explained by the fact that the assumed models of the probability distributions of the amplitudes and log amplitudes are not exact enough for this measure to solve the permutation problem successfully. As can be seen in Figure 5, the distribution of the lower frequency bins of a speech signal can be modeled only roughly with a Rayleigh pdf, whereas the higher frequencies are well described as Rayleigh distributed variables. This causes a high error rate in the permutation correction at lower frequencies when the generalized Rényi divergence is used. Since, as shown in [48], the lower frequencies play a more significant role in BSS of speech signals than the higher frequencies, the low SIR and SDR values are explainable. The same holds for the distribution of the log amplitudes. This problem could be solved using a more exact model of the pdf of speech signals, such as Rayleigh mixture models (RMM) for the amplitudes or Gaussian mixture models (GMM) for the log amplitudes, and is the subject of future study.

Figure 5. Comparison of the assumed pdfs and the histograms of the speech signal at different frequencies. Histograms of the amplitudes of a clean speech signal (white bars) and the Rayleigh probability density functions (black lines) calculated for the clean speech data at (a) 1,000 Hz, (b) 2,000 Hz, (c) 3,000 Hz, and (d) 4,000 Hz.

Furthermore, the effects of different reverberation times on the performance of the various distance measures will be studied in the future. As can be seen in Figures 3 and 4, the performance of the separation system decreases with growing reverberation time; the effect of reverberation time on the different methods of permutation correction, however, should be analyzed more thoroughly.

On the other hand, the assumption of the Rayleigh distribution and the GGD is good enough for permutation correction with mutual information and the modified Jensen-Rényi divergence, since these distance measures are not as sensitive to inter-frequency-bin pdf perturbations as the generalized Rényi divergence. Furthermore, in this case an analytical solution exists for the modified Jensen-Rényi divergence, which reduces the computational burden of the algorithm and improves the accuracy of the solution.

As can be seen in Tables 5 and 6, the separation performance of Algorithm 1 is slightly better than that of Algorithm 2. One possible explanation is that only one parameter has to be estimated for the Rayleigh pdf, instead of two for the GGD. Furthermore, estimating the GGD parameters is more complicated than estimating σ of the Rayleigh distribution. These factors may introduce uncertainties and errors into the permutation correction.

Next, the four best proposed methods were compared with some of the other approaches from Section 1. The results of the comparison are shown in Table 7. As can be seen, the proposed method (Algorithm 1 with the modified Jensen-Rényi divergence, α = 2) performs better than the other algorithms in terms of Δ SIR in most cases.

5 Conclusions

In this article, a method for permutation correction in convolutive source separation has been presented. The approach is based on the assumption that the magnitudes of speech signals follow a Rayleigh distribution and that the logarithms of the magnitudes can be modeled by a GGD. The assumption of Rayleigh or GG distributed signals allows the use of information theoretic similarity measures, which are used to detect similarities between subsequent frequency bins after bin-wise source separation, in order to group the frequency bins belonging to the same source. Besides the existing information theoretic distance measures, a modification of the Jensen-Rényi divergence has been proposed. This modified distance measure shows very good results for the considered problem.

The proposed method has been tested on different reverberant speech mixtures in connection with different ICA algorithms. The experimental results and the comparison with current state-of-the-art approaches for permutation correction show the usefulness of the proposed method. Furthermore, the experimental results have shown that the method performs best with either the mutual information or the modified Jensen-Rényi divergence criterion (Tables 5 and 6). This may be explained, at least partially, by the ability of the Jensen-Rényi divergence and the mutual information to utilize the temporal dependence structure, which puts these two criteria ahead of the Rényi generalized divergence and its special cases, the Kullback-Leibler divergence and the log maximum ratio, which we considered as alternatives.

Appendix 1

To calculate the distance measures from Equations (21), (29), (30), and (37), an integral has to be solved in most cases. The Rényi differential entropy (Equation (18)) of the Rayleigh distribution is calculated as

where σ is the scale parameter of the Rayleigh distribution and

Setting α → 1, Equation (44) becomes

$$H_{1}(f(x)) = 1 + \log\frac{\sigma}{\sqrt{2}} + \frac{\gamma}{2}, \qquad (45)$$

where γ ≈ 0.57722 is the Euler-Mascheroni constant.

For the GG distribution, the entropies can be computed as

and

The solution of Equation (46) is given in [38], and the solutions of Equations (44) and (48) were derived using Mathematica. For the distance measures without an analytical solution, the trapezoidal rule for numerical integration was applied [49].
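For both models, the Rényi entropies have simple closed forms, implemented in the Python sketch below. They were derived for this example by direct integration (substituting u = αx²/(2σ²) in the Rayleigh case) and may be written differently from Equations (44)-(48): for the Rayleigh pdf, Hα = log σ − ½ log 2 + [log Γ((α + 1)/2) − ((α + 1)/2) log α] / (1 − α), whose α → 1 limit is 1 + log(σ/√2) + γ/2; for the GGD with scale a and shape β, Hα = log(2aΓ(1/β)/β) − log α / (β(1 − α)), whose α → 1 limit is log(2aΓ(1/β)/β) + 1/β. The function names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329


def renyi_entropy_rayleigh(sigma, alpha):
    """Renyi entropy of a Rayleigh(sigma) density; alpha == 1 returns the Shannon limit."""
    if alpha == 1:
        return 1.0 + math.log(sigma / math.sqrt(2.0)) + EULER_GAMMA / 2.0
    num = math.lgamma((alpha + 1) / 2.0) - (alpha + 1) / 2.0 * math.log(alpha)
    return math.log(sigma) - 0.5 * math.log(2.0) + num / (1.0 - alpha)


def renyi_entropy_ggd(a, beta, alpha):
    """Renyi entropy of a GGD with scale a and shape beta (it does not depend on mu)."""
    base = math.log(2.0 * a * math.gamma(1.0 / beta) / beta)
    if alpha == 1:
        return base + 1.0 / beta
    return base - math.log(alpha) / (beta * (1.0 - alpha))
```

Two quick consistency checks: for α = 2 the Rayleigh expression reduces to log(4σ/√π), and a GGD with β = 2 and a = √2 is the standard normal distribution, whose Shannon entropy is ½ log(2πe).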

Appendix 2

Since information theoretic similarity measures use only the pdfs of the signals, the question may arise whether the temporal dependence structure of the signals is utilized at all in the suggested framework. The temporal structure is taken into account indirectly in the applied similarity measures, since each of them contains a term in which either the joint probability, the pdf of the mean value of the random variables (Equation (37)), the mean of the pdfs, or a quotient of the pdfs is considered. These are the terms in which the values of the distribution functions produced in the same time-domain window are "compared".

To demonstrate this, the following example was constructed. We compare the signal U1(τ), which contains the amplitudes of a speech signal at the frequency f = 3,219.2 Hz; U2(τ), which is the same signal as U1(τ) but time delayed; and U3(τ), which contains the amplitudes of the same speech signal as U1(τ) at the frequency f = 3,230 Hz, i.e., the next frequency bin, with additional Gaussian noise (see Figure 6).

Figure 6. Constructed signals for demonstration. (a) Signal U1(τ) is a speech signal at the frequency f = 3,219.2 Hz, (b) U2(τ) is the same signal as U1(τ) but time delayed, and (c) signal U3(τ) contains the amplitudes of the same speech signal as U1(τ) at the frequency f = 3,230 Hz (the next frequency bin) with additional Gaussian noise.

For each signal pair 〈U1(τ), U2(τ)〉, 〈U1(τ), U3(τ)〉, 〈log(U1(τ)), log(U2(τ))〉 and 〈log(U1(τ)), log(U3(τ))〉, the similarity measures from Equations (21), (29), (30), and (37) were applied. The results of the signal comparison can be found in Tables 8 and 9.

As can be seen, for this example each similarity measure considered in this article rates U1(τ) as more similar to U3(τ) than to U2(τ) (endnote f), which implies that the temporal dependencies and correlations were not ignored during the computation of the probability distribution functions.

In contrast to the other measures, the Rényi generalized divergence defined in Equation (21) and its special cases, the Kullback-Leibler divergence and the log maximum ratio, cannot take the time dependency into account in this manner. Still, these similarity measures can be used for permutation correction, since the situation considered in the example above is rather artificial and cannot be expected in realistic situations with two speech signals as the desired sources.

Endnotes

a In the cases where no permutation correction by means of the comparison of the GGD parameters is possible, the problem is handled by applying the correlation-based permutation correction approach.

b Equation (7) is a special case of the multivariate Weibull distribution with α = 1 and ci = 2 [32, Equation (14)].

c E.g. .

d The proposed algorithm solves the permutation problem starting with the higher frequency bins. The first frequency bin in this case is the bin with k = NFFT/2 + 1. Since there is no other definition of the correct order of the signals, the signal order in frequency bin k = NFFT/2 + 1 is assumed to be correct.

e For the experiments in Section 4, the parameter β was calculated using the approximation of the inverse function proposed in [34].

f The more dependent the signals are, the higher the value of the mutual information in Equation (29) becomes, while the values of the similarity measures in Equations (21), (30), and (37) decrease.

References

Mansour A, Kawamoto M: ICA papers classified according to their applications and performances. IEICE Trans. Fundam. 2003, E86-A(3):620-633.

Pedersen MS, Larsen J, Kjems U, Parra LC: Convolutive blind source separation methods. In Springer Handbook of Speech Processing and Speech Communication. Springer Verlag, Berlin/Heidelberg; 2008:1065-1094.

Anemüller J, Kollmeier B: Amplitude modulation decorrelation for convolutive blind source separation. In Proc. ICA 2000. Helsinki; 2000:215-220.

Mejuto C, Dapena A, Castedo L: Frequency-domain infomax for blind separation of convolutive mixtures. In Proc. ICA 2000. Helsinki; 2000:315-320.

Murata N, Ikeda S, Ziehe A: An approach to blind source separation based on temporal structure of speech signals. Neurocomputing 2001, 41(1-4):1-24.

Reju VG, Koh SN, Soon IY: Partial separation method for solving permutation problem in frequency domain blind source separation of speech signals. Neurocomputing 2008, 71: 2098-2112.

Parra L, Spence C, De Vries B: Convolutive blind source separation based on multiple decorrelation. In Proc. IEEE NNSP Workshop. Cambridge, UK; 1998:23-32.

Kamata K, Hu X, Kobatake H: A new approach to the permutation problem in frequency domain blind source separation. In Proc. ICA 2004. Granada, Spain; 2004:849-856.

Pham D-T, Servière C, Boumaraf H: Blind separation of speech mixtures based on nonstationarity. IEEE Signal Processing and Its Applications, Proceedings of the Seventh International Symposium 2003, 73-76.

Baumann W, Kolossa D, Orglmeister R: Maximum likelihood permutation correction for convolutive source separation. ICA 2003 2003, 373-378.

Kurita S, Saruwatari H, Kajita S, Takeda K, Itakura F: Evaluation of frequency-domain blind signal separation using directivity pattern under reverberant conditions. ICASSP2000 2000, 3140-3143.

Ikram M, Morgan D: A beamforming approach to permutation alignment for multichannel frequency-domain blind speech separation. ICASSP02 2002, 881-884.

Mitianoudis N, Davies M: Permutation alignment for frequency domain ICA using subspace beamforming methods. Proc. ICA 2004, LNCS 3195 2004, 669-676.

Sawada H, Mukai R, Araki S, Makino S: A robust approach to the permutation problem of frequency-domain blind source separation. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2003) 2003, V: 381-384.

Pham D-T, Servière C, Boumaraf H: Blind separation of convolutive audio mixtures using nonstationarity. Proc. ICA2003 2003, 981-986.

Sudhakar P, Gribonval R: A sparsity-based method to solve permutation indeterminacy in frequency-domain convolutive blind source separation. In Independent Component Analysis and Signal Separation: 8th International Conference, ICA 2009, Proceedings. Paraty, Brazil; 2009.

Baumann W, Köhler B-U, Kolossa D, Orglmeister R: Real time separation of convolutive mixtures. In Independent Component Analysis and Blind Signal Separation: 4th International Symposium, ICA 2001, Proceedings. San Diego, USA; 2001.

Asano F, Ikeda S, Ogawa M, Asoh H, Kitawaki N: Combined approach of array processing and independent component analysis for blind separation of acoustic signals. IEEE Trans. Speech Audio Proc 2003, 11(3):204-215.

Sawada H, Araki S, Mukai R, Makino S: Blind extraction of a dominant source from mixtures of many sources using ICA and time-frequency masking. Proc. ISCAS 2005 2005, 5882-5885.

Wang W, Chambers JA, Sanei S: A novel hybrid approach to the permutation problem of frequency domain blind source separation. In Proc. 5th International Conference on Independent Component Analysis and Blind Signal Separation, ICA 2004. Granada, Spain; 2004:530-537.

Mitianoudis N, Davies ME: Audio source separation of convolutive mixtures. IEEE Trans. Audio Speech Process 2003, 11(5):489-497.

Ephraim Y, Malah D: Speech enhancement using a minimum mean square error log-spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process 1985, 33: 443-445.

Mazur R, Mertins A: Solving the permutation problem in convolutive blind source separation. Proc. ICA 2007, LNCS 4666 2007, 512-519.

Ikeda S, Murata N: A method of blind separation based on temporal structure of signals. Proc. Int. Conf. on Neural Information Processing 1998, 737-742.

Cardoso J-F: High order contrasts for independent component analysis. Neural Comput 1999, 11: 157-192.

Bell A, Sejnowski T: An information-maximization approach to blind separation and blind deconvolution. Neural Comput 1995, 7: 1129-1159.

Hyvärinen A, Oja E: A fast fixed-point algorithm for independent component analysis. Neural Comput 1997, 9: 1483-1492.

Mitianoudis N, Davies M: New fixed-point solutions for convolved mixtures. In Proc. ICA2001. San Diego, CA; 2001:633-638.

Allen JB, Rabiner LR: A unified approach to short-time Fourier analysis and synthesis. Proc. IEEE 1977, 65: 1558-1564.

Lee KR, Kapadia CH, Brock DB: On estimating the scale parameter of the Rayleigh distribution from doubly censored samples. Statistische Hefte 1980, 21(1):14-29.

Hoffman WC: The joint distribution of n successive outputs of a linear detector. J. Appl. Phys 1954, 25: 1006-1007.

Darbellay GA, Vajda I: Entropy expressions for multivariate continuous distributions. IEEE Trans. Inf. Theory 2000, 46(2):709-712.

Boubchir L, Fadili JM: Multivariate statistical modeling of images with the curvelet transform. IEEE Signal Processing and Its Applications, 2005. Proc. of the Eighth International Symposium 2005, 2: 747-750.

Dominguez-Molina JA, Gonzalez-Farias G, Rodriguez-Dagnino RM: A practical procedure to estimate the shape parameter in the generalized Gaussian distribution. CIMAT Tech. Rep. I-01-18_eng.pdf [Online] [http://www.cimat.mx/reportes/enlinea/I-01-18_eng.pdf]

Prasad R: Fixed-point ICA based speech signal separation and enhancement with generalized Gaussian model. PhD Thesis 2005. [http://citeseer.ist.psu.edu/prasad05fixedpoint.html]

Rényi A: On measures of entropy and information. In Selected Papers of Alfred Rényi. Volume 2. Akaemia Kiado, Budapest; 1976:565-580.

Principe JC, Xu D, Fisher JW III: Information-theoretic learning. In Unsupervised Adaptive Filtering. Edited by: Haykin S. Wiley, New York; 2000:265-319.

Cover TM, Thomas JA: Elements of Information Theory. Wiley, New York; 1991.

Hero AO, Ma B, Michel O, Gorman JD: Alpha divergence for classification, indexing and retrieval. Technical Report 328, Comm. and Sig. Proc. Lab., Dept. EECS, Univ. Michigan 2001.

Hamza AB, Krim H: Jensen-Rényi divergence measure: theoretical and computational perspectives. In Proc. ISlT 2003. Yokohama, Japan; 2003.

Martins AFT, Figueiredo MAT, Aguiar PMQ, Smith NA, Xing EP: Nonextensive entropic kernels. ICML 08: Proc. of the 25th International Conference on Machine Learning, ACM 2008, 307: 640-647.

He Y, Hamza AB, Krim H: A generalized divergence measure for robust image registration. IEEE Trans. Signal Process 2003, 51(5):1211-1220.

Arndt C: Information measures: information and its description in science and engineering. In Signals and Communication Technology. 2nd edition. Springer, Berlin; 2004.

Barthe F: Optimal Youngs inequality and its converse: a simple proof. Geom. Funct. Anal 1998, 8(2):234-242.

Bercher JF, Vignat C: A Renyi entropy convolution inequality with application. In Proc. EUSIPCO. Tolouse, France; 2002.

Leonard RG: A Database for speaker-independent digit recognition. Proc. ICASSP 84 1984, 3: 42.11.

Sawada H[http://www.kecl.ntt.co.jp/icl/signal/sawada/demo/bss2to4/index.html]

Jafari MG, Plumbley MD: The role of high frequencies in convolutive blind source separation of speech signals. In Proc. 7th Int. Conf. on Independent Component Analysis and Signal Separation, ICA 2007. London, UK; 2007.

Schwarz HR: Numerische Mathematik. B.G. Teubner, Stuttgart; 1997.

Hoffmann E, Kolossa D, Orglmeister R: A batch algorithm for blind source separation of acoustic signals using ICA and time-frequency masking. In Proc. 7th Int. Conf. on Independent Component Analysis and Signal Separation, ICA 2007. London, UK; 2007.

Sawada H, Araki S, Makino S: Measuring dependence of bin-wise separated signals for permutation alignment in frequency-domain BSS. Circuits and Systems, 2007. ISCAS 2007. IEEE International Symposium on (2007) 2007, 3247-3250.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Hoffmann, E., Kolossa, D., Köhler, BU. et al. Using information theoretic distance measures for solving the permutation problem of blind source separation of speech signals. J AUDIO SPEECH MUSIC PROC. 2012, 14 (2012). https://doi.org/10.1186/1687-4722-2012-14

DOI: https://doi.org/10.1186/1687-4722-2012-14