Abstract

This paper deals with the asymptotic stability and boundedness of solutions of a time-varying impulsive Volterra integro-dynamic system on time scales in which the coefficient matrix is not necessarily stable. We extend to arbitrary time scales some known results on asymptotic behavior and boundedness from the continuous case.


1 Introduction

Impulsive differential systems represent a natural framework for the mathematical modelling of several processes in the applied sciences [1–4]. Basic qualitative and quantitative results about impulsive Volterra integro-differential equations have been studied in the literature (see [5–8]). Volterra-type equations (integral and integro-dynamic) on time scales were studied in [9–15]. In [16] the authors presented a theory for linear impulsive dynamic systems on time scales, and more recently, in [17], various results concerning the asymptotic stability and boundedness of Volterra integro-dynamic equations on time scales were developed. Motivated by these papers, we generalize these results to impulsive integro-dynamic systems on time scales.

2 Preliminaries

In this paper we assume that the reader is familiar with the basic calculus of time scales. Let be the space of n-dimensional column vectors with a norm . Also, with the same symbol we denote the corresponding matrix norm in the space of matrices. If , then we denote by its conjugate transpose. We recall that and the following inequality holds for all and . A time scale is a nonempty closed subset of ℝ. The set of all rd-continuous functions will be denoted by .
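The distinction between right-dense and right-scattered points matters here because the impulse instants of system (1) are required to be right-dense. As a minimal sketch, assuming a time scale given as a finite union of closed intervals and isolated points (the particular 𝕋 = [0, 1] ∪ {2, 3} ∪ [4, 5] below is a hypothetical example, and the function names are ours), the forward jump operator σ(t) = inf{s ∈ 𝕋 : s > t} and the graininess μ(t) = σ(t) − t can be computed as follows:

```python
# Hypothetical time scale T = [0, 1] ∪ {2, 3} ∪ [4, 5], stored as disjoint closed
# intervals in increasing order; an isolated point p is the interval (p, p).
T = [(0, 1), (2, 2), (3, 3), (4, 5)]


def sigma(T, t):
    """Forward jump operator sigma(t) = inf{s in T : s > t}, with sigma(max T) = max T."""
    for i, (a, b) in enumerate(T):
        if a <= t <= b:
            if t < b:
                return t              # interior of an interval: no gap to the right
            if i + 1 < len(T):
                return T[i + 1][0]    # right endpoint: jump to the next component
            return t                  # t = max T
    raise ValueError(f"{t} is not a point of the time scale")


def mu(T, t):
    """Graininess mu(t) = sigma(t) - t."""
    return sigma(T, t) - t


def is_right_dense(T, t):
    """t is right-dense if t < sup T and sigma(t) = t."""
    return t < T[-1][1] and sigma(T, t) == t


for t in (0.5, 1, 2, 3, 4.5, 5):
    print(t, sigma(T, t), mu(T, t), is_right_dense(T, t))
```

On a purely discrete time scale such as hℤ every point is right-scattered, so impulses placed at right-dense points cannot occur there.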

The notations , , and so on, denote time scale intervals such as , where . Also, for any , let and .

We denote by ℛ (respectively ) the set of all regressive (respectively positively regressive) functions from to ℝ. The space of all rd-continuous and regressive functions from to ℝ is denoted by . Also,

We denote by the set of all functions that are differentiable on with its delta-derivative . The set of rd-continuous (respectively rd-continuous and regressive) functions is denoted by (respectively by ). We recall that a matrix-valued function A is said to be regressive if is invertible for all , where I is the identity matrix. For a comprehensive treatment of time scales, we refer the reader to [18] and [19].
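The regressivity conditions recalled above are pointwise checks involving the graininess μ. A minimal sketch (the helper names are hypothetical, and the matrix case is restricted to 2×2 matrices to keep it dependency-free): a scalar p is regressive at a point with graininess μ if 1 + μp ≠ 0 and positively regressive if 1 + μp > 0, while a matrix A is regressive if I + μA is invertible.

```python
def is_regressive(p, mu):
    """Scalar p is regressive at a point with graininess mu if 1 + mu*p != 0."""
    return 1 + mu * p != 0


def is_positively_regressive(p, mu):
    """Scalar p is positively regressive at a point with graininess mu if 1 + mu*p > 0."""
    return 1 + mu * p > 0


def is_regressive_matrix(A, mu):
    """A 2x2 matrix A is regressive at a point with graininess mu if I + mu*A is invertible."""
    m = [[1 + mu * A[0][0], mu * A[0][1]],
         [mu * A[1][0], 1 + mu * A[1][1]]]
    return m[0][0] * m[1][1] - m[0][1] * m[1][0] != 0


# On T = Z (graininess 1): p = -1 is not regressive, p = -2 is regressive but not
# positively regressive, and p = -0.5 is positively regressive.
print(is_regressive(-1, 1), is_regressive(-2, 1), is_positively_regressive(-2, 1))
print(is_positively_regressive(-0.5, 1))
# A = diag(-1, 2) fails at a point with mu = 1 but is regressive where mu = 0.
A = [[-1, 0], [0, 2]]
print(is_regressive_matrix(A, 1), is_regressive_matrix(A, 0))
```

At right-dense points (μ = 0) every function is regressive, so these conditions only restrict the coefficients at right-scattered points.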

Lemma 2.1 ([18, Theorem 2.38])

Let . Then .

Lemma 2.2 ([18, Theorem 6.2])

Let with . Then

Theorem 2.3 ([13, Theorem 7])

Let with and assume that is integrable on . Then

It is easy to verify that the above result holds for .
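On 𝕋 = hℤ the delta integral reduces to a sum, ∫_a^b f(t)Δt = Σ h f(t) over the grid points t ∈ [a, b), which makes it easy to test a change of order of iterated delta integrals of the kind used in the proof of Theorem 3.1. The sketch below assumes that Theorem 2.3 has the standard form ∫_a^b ∫_a^η f(η, ξ)Δξ Δη = ∫_a^b ∫_{σ(ξ)}^b f(η, ξ)Δη Δξ (our reading, since the display is not reproduced here) and checks it numerically for a hypothetical integrand. Note the lower limit σ(ξ), not ξ, in the inner integral on the right.

```python
def delta_integral(g, a, b, h):
    """Delta integral of g over [a, b) on T = h*Z (a, b on the grid): sum of h*g(t)."""
    n = round((b - a) / h)
    return sum(h * g(a + k * h) for k in range(n))


def iterated_lhs(f, a, b, h):
    # int_a^b ( int_a^eta f(eta, xi) Delta xi ) Delta eta
    return delta_integral(
        lambda eta: delta_integral(lambda xi: f(eta, xi), a, eta, h), a, b, h)


def iterated_rhs(f, a, b, h):
    # int_a^b ( int_{sigma(xi)}^b f(eta, xi) Delta eta ) Delta xi, with sigma(xi) = xi + h
    return delta_integral(
        lambda xi: delta_integral(lambda eta: f(eta, xi), xi + h, b, h), a, b, h)


# Hypothetical integrand; both sides sum h*h*f(eta, xi) over grid pairs a <= xi < eta < b.
f = lambda eta, xi: (eta - xi) ** 2 + eta * xi
print(iterated_lhs(f, 0.0, 2.0, 0.25), iterated_rhs(f, 0.0, 2.0, 0.25))
```

Both sides enumerate the same grid pairs with ξ < η, which is why σ(ξ) appears: on a discrete time scale the diagonal η = ξ carries no weight in the left-hand side.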

Lemma 2.4 ([16, Lemma 2.1])

Let , , and , . Then

implies

Consider the Volterra time-varying impulsive integro-dynamic system

where A (not necessarily stable) is an matrix function and F is an n-vector function, which is piecewise continuous on , K is an matrix function, which is piecewise continuous on , , , with and the impulsive points are right-dense. Note that represents the left limit of at and represents the right limit of at .
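To make the structure of (1) concrete, the following sketch assumes the usual scalar form x^Δ(t) = a(t)x(t) + ∫_{t0}^t k(t, s)x(s)Δs + F(t) (our reading of (1), whose display is not reproduced here) and integrates it on 𝕋 = t0 + hℤ, where x^Δ(t) = (x(t + h) − x(t))/h. Since every point of hℤ is right-scattered, no impulse condition at right-dense points is active, so the sketch covers only the non-impulsive part of (1). The function name and the test data are hypothetical.

```python
def solve_volterra_hZ(a, k, F, x0, t0, h, n_steps):
    """Forward recursion for the scalar Volterra integro-dynamic equation
        x^Delta(t) = a(t) x(t) + int_{t0}^{t} k(t, s) x(s) Delta s + F(t)
    on T = t0 + h*Z (all points right-scattered, so no impulses occur).
    Returns the list of times and the list of values x(t)."""
    ts = [t0 + j * h for j in range(n_steps + 1)]
    xs = [x0]
    for j in range(n_steps):
        t = ts[j]
        # delta integral over [t0, t): a sum over the already computed past values
        memory = sum(h * k(t, ts[i]) * xs[i] for i in range(j))
        xs.append(xs[j] + h * (a(t) * xs[j] + memory + F(t)))
    return ts, xs


# No memory: x^Delta = -x, so x(t) = (1 - h) ** ((t - t0) / h).
print(solve_volterra_hZ(lambda t: -1, lambda t, s: 0, lambda t: 0, 1.0, 0.0, 0.5, 4))
# Pure memory term with k = 1: every step feeds in the whole past of x.
print(solve_volterra_hZ(lambda t: 0, lambda t, s: 1, lambda t: 0, 1, 0, 1, 4))
```

With a ≡ −1, k ≡ 0 and F ≡ 0 the recursion reproduces the time-scale exponential on hℤ, while a nonzero kernel makes each step depend on all earlier values, which is what makes (1) a Volterra (memory) system.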

The rest of the paper is organized as follows. In Section 3, we investigate the asymptotic behavior of solutions of system (1), generalizing the continuous version () of [8, Theorem 2.5]. In Section 4, we discuss the uniform boundedness of solutions of (1) by constructing a Lyapunov functional. Further results on boundedness, uniform boundedness and stability of solutions are also developed.

3 Asymptotic stability

Our first result in this section presents a system, equivalent to (1), that involves an arbitrary matrix function.

Theorem 3.1 Let be an continuously differentiable matrix function with respect to s on with for each . Then (1) is equivalent to the system

where

and

Proof Let be any solution of (1) on . If we take , then for , we have

and by (1) it follows that

Integration from to t yields

Using Theorem 2.3, we obtain

By a change of variables in the double integral term, we have

Using (3) and (4), we obtain

From (1), we have

For , we obtain

Hence, is a solution of (2).

Conversely, let be any solution of (2) on . We shall show that it satisfies (1). Consider

Then by (2) and (3) we have

Using (4), we obtain

Again by Theorem 2.3, we have

Now, by setting , then for , we get

Integrating (6) from to t yields

and therefore, we have

Since , substituting (7) in (5), we obtain

which implies , by the uniqueness of solutions of Volterra integral equations [12] and the fact that . Hence is a solution of (1). □
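On a discrete time scale the uniqueness used here is elementary: in x(t) = f(t) + ∫_{t0}^t k(t, s)x(s)Δs on 𝕋 = t0 + hℤ, the delta integral over [t0, t) involves only earlier values of x, so x is determined step by step. A minimal sketch with hypothetical names and data (the result on arbitrary time scales is the one cited from [12]):

```python
def solve_volterra_integral_hZ(f, k, t0, h, n):
    """Solve x(t) = f(t) + int_{t0}^t k(t, s) x(s) Delta s on the first n points of
    T = t0 + h*Z. The delta integral over [t0, t) uses only x at earlier points,
    so each value is forced by the previous ones (hence the solution is unique)."""
    ts = [t0 + j * h for j in range(n)]
    xs = []
    for j, t in enumerate(ts):
        xs.append(f(t) + sum(h * k(t, ts[i]) * xs[i] for i in range(j)))
    return xs


# A zero forcing term yields only the zero solution.
print(solve_volterra_integral_hZ(lambda t: 0, lambda t, s: 1, 0, 1, 5))
# f = 1, k = 1, h = 1: x(j) = 1 + x(0) + ... + x(j - 1).
print(solve_volterra_integral_hZ(lambda t: 1, lambda t, s: 1, 0, 1, 4))
```

In particular, a zero forcing term forces the zero solution, which is the form in which uniqueness is applied at the end of the proof above.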

For our next result we assume that the matrix B commutes with its integral, so that B also commutes with its matrix exponential, that is, , [20].
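A simple class of matrix functions that commute with their integrals is B(t) = b(t)M with a fixed matrix M. The sketch below, a hypothetical 2×2 example on 𝕋 = hℤ, builds the time-scale matrix exponential as the ordered product e_B(t, t0) = (I + hB(t − h)) ⋯ (I + hB(t0)), which follows from e_B(σ(t), t0) = (I + μ(t)B(t))e_B(t, t0), and checks numerically that B(t) commutes with it. The function names and the matrix M are our own choices.

```python
def matmul(X, Y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(X[i][r] * Y[r][j] for r in range(2)) for j in range(2)] for i in range(2)]


def matrix_exp_hZ(B, t0, t, h):
    """Time-scale matrix exponential e_B(t, t0) on T = h*Z for t >= t0, built from
    e_B(sigma(t), t0) = (I + h B(t)) e_B(t, t0): newer factors multiply from the left."""
    E = [[1.0, 0.0], [0.0, 1.0]]
    for j in range(round((t - t0) / h)):
        Bt = B(t0 + j * h)
        step = [[(1.0 if i == m else 0.0) + h * Bt[i][m] for m in range(2)] for i in range(2)]
        E = matmul(step, E)
    return E


# Hypothetical example B(t) = (1 + t) M: all values of B are multiples of one matrix,
# so B commutes with its integral and with every factor I + h B(tau).
M = [[0.0, 1.0], [-2.0, -3.0]]
B = lambda t: [[(1 + t) * M[i][j] for j in range(2)] for i in range(2)]

E = matrix_exp_hZ(B, 0.0, 2.0, 0.5)
left, right = matmul(B(2.0), E), matmul(E, B(2.0))
print(max(abs(left[i][j] - right[i][j]) for i in range(2) for j in range(2)))
```

For a matrix function whose values do not commute with one another, the factors of the product need not commute and the identity can fail, which is why the commutativity assumption is imposed.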

Theorem 3.2 Let and . Assume that the matrix B commutes with its integral. If

then every solution of (1) satisfies

where .

Proof Let be the solution of (2) and define . Then

Substituting for from (2) and integrating from to t, we obtain

Using Theorem 2.3 and applying the semigroup property of exponential functions [18, Theorem 2.36], we obtain

For , we have

Hence, using (8) and applying the norm on (10), we obtain (9), which completes the proof. □
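The semigroup property invoked in the proof of Theorem 3.2 can be checked directly on 𝕋 = hℤ, where the scalar exponential is e_p(t, s) = ∏ (1 + hp(τ)) over the grid points τ ∈ [s, t). The sketch below (hypothetical function p and helper names) checks e_p(t, r)e_p(r, s) = e_p(t, s) for s ≤ r ≤ t, and also the identity e_{⊖p}(t, s) = 1/e_p(t, s) with (⊖p)(t) = −p(t)/(1 + μ(t)p(t)), which is convenient for expressing exponential decay: for a constant λ > 0, e_{⊖λ}(t, s) = (1 + hλ)^{−(t−s)/h} tends to zero as t → ∞.

```python
def exp_hZ(p, s, t, h):
    """Scalar time-scale exponential e_p(t, s) on T = h*Z for t >= s:
    the product of (1 + h p(tau)) over grid points tau in [s, t)."""
    out = 1.0
    for j in range(round((t - s) / h)):
        out *= 1 + h * p(s + j * h)
    return out


def circle_minus(p, h):
    """(ominus p)(t) = -p(t) / (1 + mu(t) p(t)), with mu = h on h*Z."""
    return lambda t: -p(t) / (1 + h * p(t))


h = 0.25
p = lambda t: 0.5 + t          # hypothetical regressive function
s, r, t = 0.0, 1.0, 3.0

print(exp_hZ(p, r, t, h) * exp_hZ(p, s, r, h), exp_hZ(p, s, t, h))   # semigroup property
print(exp_hZ(circle_minus(p, h), s, t, h) * exp_hZ(p, s, t, h))       # e_{ominus p} * e_p
# For a constant lam > 0, e_{ominus lam}(tt, 0) = (1 + h*lam) ** (-tt / h) decays to 0.
lam = 1.0
print([exp_hZ(circle_minus(lambda _: lam, h), 0.0, tt, h) for tt in (1.0, 5.0, 10.0)])
```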

In the next theorem we present sufficient conditions for asymptotic stability.

Theorem 3.3 Let be an continuously differentiable matrix function with respect to s on Ω such that

(a) the assumptions of Theorem 3.2 hold,

(b) ,

(c) ,

(d) and

(e) , where : as ,

where , , λ are positive real constants.

If , then every solution of (1) tends to zero exponentially as .

Proof In view of Theorem 3.1 and the fact that satisfies (a), it is enough to show that every solution of (2) tends to zero as . From (a) and using (9), we obtain

Since

by Lemma 2.1 and the fact that , we obtain

Using (11), (b), (c) and (d), we have

From Theorem 3.2, we have

which implies

Lemma 2.4 yields that

Using [18, Theorem 2.36], (e) and the fact that , we obtain

where [18, Exercise 2.28]. By Lemma 2.2, we have , so we obtain

Hence, in view of (e) and the fact that , we obtain the required result. □

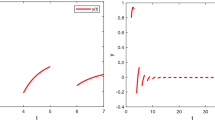

Example 3.4 Let us consider the Volterra integro-dynamic equation

where , , and the impulsive points . Now consider so then . The matrix function given in (4) becomes

In the following, we check the assumptions of Theorem 3.3 when .

Let . Then we have

and

Here the constants are and . From (15) it follows that

Then from (16) we obtain that is a positive function, and

from which it follows that

and

Since and , we have . Therefore, since all the assumptions of Theorem 3.3 hold for system (14), the solution of (14) tends to zero exponentially as .

If , then all points are right-scattered and there is no impulse condition, so it follows from [17, Example 3.7] that the solution of (14) tends to zero exponentially as .

Theorem 3.5 Let such that for and

(i) assumptions (a), (b), (d) and (e) of Theorem 3.3 hold,

(ii) and ,

(iii) for ,

(iv) for some ,

where , , , δ and θ are positive real numbers such that , .

If , then every solution of (1) tends to zero exponentially as .

Proof From (4), we obtain

which implies

Since , , it follows from (i), (ii) and (iii) that (17) becomes

and

Integrating the above inequality and using (iv), we obtain

Substituting (19) in (11), we obtain

Lemma 2.4 yields that

Using [18, Theorem 2.36], (e) and the fact that , we obtain

Then by Lemma 2.2, we have

Hence, in view of (i) and , we obtain the required result. □

Corollary 3.6 Let be an continuously differentiable matrix function with respect to s on with for each . Then (1) is equivalent to the impulsive dynamic system

where

and

Proof The proof follows an argument similar to that in Theorem 3.1 with . □

4 Boundedness

In the first result of this section, we give sufficient conditions to ensure that (1) has bounded solutions. Our results apply to (1) whether is stable, identically zero, or completely unstable, and require neither to be constant nor to be a convolution kernel. Let and be continuous matrices, . Let and assume that is an regressive matrix. The unique matrix solution of the initial value problem

is called the impulsive transition matrix (at s) and is denoted by (see [16, Corollary 3.1]). Also, if is an regressive matrix satisfying

then

Theorem 4.1 Let be the solution of (23), and suppose that there are positive constants N, J and M such that

(i) ,

(ii) ,

(iii) .

Then all the solutions of (1) are uniformly bounded, and the zero solution of the corresponding homogeneous equation of (1) is uniformly stable with the initial condition .

Proof Consider the following functional

The derivative of along a solution of (1) satisfies

From [18, Theorem 1.117], we obtain

or

By using (25), [18, Theorem 1.75] and [16, Theorem 3.4], we have the following expression:

which implies that

where . Substituting (28) in (27) yields

From (24) and (26), we have

and it is easy to see that

Thus

where

Let . Then by (25), (ii) is precisely . By (29) and (i)-(iii),

If for some constant, and if , then by (26) we obtain

Now, either there exists such that for all , and thus is uniformly bounded, or there exists a monotone sequence tending to infinity such that and as , and by (ii) and (30) we have

a contradiction. This completes the proof. □
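The proof of Theorem 4.1 differentiates an integral whose integrand depends on t. On 𝕋 = hℤ one can check the Leibniz-type rule for such integrals, (∫_a^t f(t, τ)Δτ)^Δ = ∫_a^t f^{Δ_t}(t, τ)Δτ + f(σ(t), t), where f^{Δ_t} denotes the delta-derivative in the first variable. A rule of this type is what the proof uses when it cites [18, Theorem 1.117]; the sketch below, with a hypothetical integrand and helper names, only verifies the discrete case.

```python
def delta_integral(g, a, b, h):
    """Delta integral of g over [a, b) on T = h*Z (a, b on the grid): sum of h*g(t)."""
    return sum(h * g(a + k * h) for k in range(round((b - a) / h)))


def leibniz_check(f, a, t, h):
    """Return both sides of
        (int_a^t f(t, tau) Delta tau)^Delta = int_a^t f^{Delta_t}(t, tau) Delta tau + f(sigma(t), t)
    on T = h*Z, where sigma(t) = t + h."""
    F = lambda u: delta_integral(lambda tau: f(u, tau), a, u, h)
    lhs = (F(t + h) - F(t)) / h                          # delta-derivative of F at t
    df = lambda tau: (f(t + h, tau) - f(t, tau)) / h     # partial delta-derivative in t
    rhs = delta_integral(df, a, t, h) + f(t + h, t)
    return lhs, rhs


f = lambda t, tau: t * t + 3 * tau * t - tau     # hypothetical integrand
print(leibniz_check(f, 0.0, 2.0, 0.5))
```

The extra term f(σ(t), t) comes from the new grid point t that enters the integral when t advances to σ(t); on 𝕋 = ℝ it reduces to the familiar boundary term f(t, t).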

In the second part of this section, we consider system (1) with bounded and suppose that

is defined and continuous on Ω. The matrix on is defined by

Then (1) is equivalent to the system

Theorem 4.2 Let and . Assume that commutes with its integral. If

then every solution of (1) with satisfies

where .

Proof Let be the solution of (1) and define . Then

Substituting for from (33) and integrating from to t, we obtain

Applying integration by parts [18, Theorem 1.77] to the second term on the right-hand side and using the semigroup property of exponential functions [18, Theorem 2.36], we obtain

For , we have

Hence, using (34) and applying the norm on (36), we obtain (35), which completes the proof. □

For the next results, we assume that the hypotheses of Theorem 4.2 hold.

Theorem 4.3 Let be a solution of (1). If on for some , is bounded and , with β sufficiently small, then is bounded.

Proof For the given and bounded , there is with

Substituting (37) in (35), we obtain

Let β be chosen so that . Then

Let and . If is not bounded, then there exists a first with , and then

a contradiction. This completes the proof. □

Theorem 4.4 If in (1), on for some , and for β sufficiently small, then the zero solution of (1) with the initial condition is uniformly stable.

Proof Let be given. We wish to find such that , , and implies . Let with δ yet to be determined. If , then . From (35) with ,

First take β so that and δ so that . If and if there exists with , we have

a contradiction. Thus the zero solution is uniformly stable. The proof is complete. □

Example 4.5 Let us consider the following system:

where and . It is easy to check that

By using (31) and (39), we obtain

This implies that and

Finally, by taking the supremum over t in (40), over , we obtain

Since , we can choose such that for β sufficiently small. It follows that all the assumptions of Theorem 4.3 are satisfied; hence all the solutions of (38) are bounded. Moreover, Theorem 4.4 yields that the zero solution of (38) is uniformly stable on an arbitrary time scale.

References

[1] Bainov DD, Kostadinov SI: Abstract Impulsive Differential Equations. World Scientific, New Jersey; 1995.

[2] Bainov DD, Simeonov PS: Impulsive Differential Equations: Periodic Solutions and Applications. Longman, New York; 1993.

[3] Lakshmikantham V, Bainov DD, Simeonov PS: Theory of Impulsive Differential Equations. World Scientific, Singapore; 1989.

[4] Samoilenko AM, Perestyuk NA: Impulsive Differential Equations. World Scientific, Singapore; 1995.

[5] Akca H, Berezansky L, Braverman E: On linear integro-differential equations with integral impulsive conditions. Z. Anal. Anwend. 1996, 15: 709-727. 10.4171/ZAA/724

[6] Akhmetov MU, Zafer A, Sejilova RD: The control of boundary value problems for quasilinear impulsive integro-differential equations. Nonlinear Anal. 2002, 48: 271-286. 10.1016/S0362-546X(00)00186-3

[7] Grossman SI, Miller RK: Perturbation theory for Volterra integrodifferential system. J. Differ. Equ. 1970, 8: 457-474. 10.1016/0022-0396(70)90018-5

[8] Rao MRM, Sathananthan S, Sivasundaram S: Asymptotic behavior of solutions of impulsive integrodifferential systems. Appl. Math. Comput. 1989, 34(3):195-211. 10.1016/0096-3003(89)90104-5

[9] Adivar M: Principal matrix solutions and variation of parameters for Volterra integro-dynamic equations on time scales. Glasg. Math. J. 2011, 53(3):463-480. 10.1017/S0017089511000073

[10] Adivar M: Function bounds for solutions of Volterra integro dynamic equations on time scales. Electron. J. Qual. Theory Differ. Equ. 2010, 7: 1-22.

[11] Grace SR, Graef JR, Zafer A: Oscillation of integro-dynamic equations on time scales. Appl. Math. Lett. 2013, 26(4):383-386. 10.1016/j.aml.2012.10.001

[12] Kulik T, Tisdell CC: Volterra integral equations on time scales: basic qualitative and quantitative results with applications to initial value problems on unbounded domains. Int. J. Differ. Equ. 2008, 3(1):103-133.

[13] Karpuz B: Basics of Volterra integral equations on time scales. arXiv:1102.5588v1 [math.CA], 28 Feb 2011.

[14] Xing Y, Han M, Zheng G: Initial value problem for first order integro-differential equation of Volterra type on time scales. Nonlinear Anal. 2005, 60(3):429-442.

[15] Li Y, Zhang H: Extremal solutions of periodic boundary value problems for first-order impulsive integrodifferential equations of mixed-type on time scales. Bound. Value Probl. 2007, 2007: Article ID 073176.

[16] Lupulescu V, Zada A: Linear impulsive dynamic systems on time scales. Electron. J. Qual. Theory Differ. Equ. 2010, 11: 1-30.

[17] Lupulescu V, Ntouyas SK, Younus A: Qualitative aspects of a Volterra integro-dynamic system on time scales. Electron. J. Qual. Theory Differ. Equ. 2013, 5: 1-35.

[18] Bohner M, Peterson A: Dynamic Equations on Time Scales. An Introduction with Applications. Birkhäuser, Boston; 2001.

[19] Bohner M, Peterson A: Advances in Dynamic Equations on Time Scales. Birkhäuser, Boston; 2003.

[20] DaCunha JJ: Transition matrix and generalized matrix exponential via the Peano-Baker series. J. Differ. Equ. Appl. 2005, 11(15):1245-1264. 10.1080/10236190500272798

Acknowledgements

The authors would like to thank the anonymous referees of this paper for very helpful comments and suggestions.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Agarwal, R.P., Awan, A.S., O’Regan, D. et al. Linear impulsive Volterra integro-dynamic system on time scales. Adv Differ Equ 2014, 6 (2014). https://doi.org/10.1186/1687-1847-2014-6


DOI: https://doi.org/10.1186/1687-1847-2014-6