Abstract

This paper presents an algorithm to accelerate the Halpern fixed point algorithm in a real Hilbert space. To this end, we first apply the Halpern algorithm to the smooth convex minimization problem, which is an example of a fixed point problem for a nonexpansive mapping, and show that the Halpern algorithm is based on the steepest descent method for solving the minimization problem. Next, we formulate a novel fixed point algorithm using the ideas of conjugate gradient methods, which can accelerate the steepest descent method. We show that, under certain assumptions, our algorithm strongly converges to a fixed point of a nonexpansive mapping. We numerically compare our algorithm with the Halpern algorithm and show that it dramatically reduces the running time and the number of iterations needed to find a fixed point.

MSC: 47H10, 65K05, 90C25.


1 Introduction

Fixed point problems for nonexpansive mappings [[1], Chapter 4], [[2], Chapter 3], [[3], Chapter 1], [[4], Chapter 3] arise in many practical applications, including convex feasibility problems [5], [[1], Example 5.21], convex optimization problems [[1], Corollary 17.5], problems of finding the zeros of monotone operators [[1], Proposition 23.38], and monotone variational inequalities [[1], Subchapter 25.5].

Fixed point problems can be solved with fixed point algorithms such as the Krasnosel’skiĭ-Mann algorithm [[1], Subchapter 5.2], [[6], Subchapter 1.2], [7, 8], the Halpern algorithm [[6], Subchapter 1.2], [9, 10], and the hybrid method [11]. Meanwhile, for practical systems and networks (see, e.g., [12–15] and references therein) to be stable and reliable, the fixed point must be found quickly. That is, we need a new algorithm that approximates the fixed point faster than the conventional ones. In this paper, we focus on the Halpern algorithm and present an algorithm that accelerates the search for a fixed point of a nonexpansive mapping.

To achieve the main objective of this paper, we first apply the Halpern algorithm to the smooth convex minimization problem, which is an example of a fixed point problem for a nonexpansive mapping, and indicate that the Halpern algorithm is based on the steepest descent method [[16], Subchapter 3.3] for solving the minimization problem.

A number of iterative methods [[16], Chapters 5-19] have been proposed to accelerate the steepest descent method. In particular, conjugate gradient methods [[16], Chapter 5] are widely used as efficient accelerations of gradient methods. Here, we focus on conjugate gradient methods and devise an algorithm that combines them with the Halpern algorithm.

Our main contribution is a novel algorithm for finding a fixed point of a nonexpansive mapping, built on the ideas of accelerated conjugate gradient methods for optimization over the fixed point set [17, 18], together with a proof that the algorithm converges to a fixed point in the strong (norm) topology of a real Hilbert space. To demonstrate the effectiveness and fast convergence of our algorithm, we numerically compare it with the Halpern algorithm. Numerical results show that it dramatically reduces the running time and the number of iterations needed to find a fixed point compared with the Halpern algorithm.

This paper is organized as follows. Section 2 gives the mathematical preliminaries. Section 3 devises the acceleration algorithm for solving fixed point problems and presents its convergence analysis. Section 4 applies the proposed and conventional algorithms to a concrete fixed point problem and provides numerical examples comparing them.

2 Mathematical preliminaries

Let $H$ be a real Hilbert space with the inner product $\langle \cdot, \cdot \rangle$ and its induced norm $\|\cdot\|$, and let $\mathbb{N}$ be the set of all nonnegative integers, i.e., the positive integers together with zero.

2.1 Fixed point problem

Suppose that $C \subset H$ is nonempty, closed, and convex. A mapping $T \colon C \to C$ is said to be nonexpansive [[1], Definition 4.1(ii)], [[2], (3.2)], [[3], Subchapter 1.1], [[4], Subchapter 3.1] if

$$\|T(x) - T(y)\| \le \|x - y\| \quad (x, y \in C).$$

The fixed point set of $T \colon C \to C$ is denoted by

$$\mathrm{Fix}(T) := \{x \in C : T(x) = x\}.$$

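The metric projection onto $C$ [[1], Subchapter 4.2, Chapter 28] is denoted by $P_C$. It is defined by $P_C(x) \in C$ and $\|x - P_C(x)\| = \inf_{y \in C} \|x - y\|$ ($x \in H$). $P_C$ is nonexpansive with $\mathrm{Fix}(P_C) = C$ [[1], Proposition 4.8, (4.8)].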

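Proposition 2.1 Suppose that $C \subset H$ is nonempty, closed, and convex, $T \colon C \to C$ is nonexpansive, and $x \in H$. Then

(i) [[1], Corollary 4.15], [[2], Lemma 3.4], [[3], Proposition 5.3], [[4], Theorem 3.1.6] $\mathrm{Fix}(T)$ is closed and convex.

(ii) [[1], Theorem 3.14] $z = P_C(x)$ if and only if $z \in C$ and $\langle x - z, y - z \rangle \le 0$ ($y \in C$).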
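As a concrete check of Proposition 2.1(ii), the Python sketch below computes the metric projection onto a closed ball, one of the few sets with a closed-form $P_C$, and tests the inequality $\langle x - z, y - z \rangle \le 0$ at randomly sampled points $y$ of the ball. The ball, the test point, and the helper names `project_ball` and `satisfies_vi` are illustrative assumptions; random sampling can only support the characterization, not prove it.

```python
import math
import random

def project_ball(x, center, radius):
    """Metric projection of x onto the closed ball with the given center and radius."""
    diff = [xi - ci for xi, ci in zip(x, center)]
    dist = math.sqrt(sum(d * d for d in diff))
    if dist <= radius:
        return list(x)  # x already lies in C, so P_C(x) = x
    return [ci + radius * d / dist for ci, d in zip(center, diff)]

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def satisfies_vi(x, z, points, tol=1e-12):
    """Check <x - z, y - z> <= 0 for every sampled y (Proposition 2.1(ii))."""
    xz = [a - b for a, b in zip(x, z)]
    return all(inner(xz, [a - b for a, b in zip(y, z)]) <= tol for y in points)

random.seed(0)
center, radius = [1.0, -2.0], 1.5
x = [4.0, 3.0]
z = project_ball(x, center, radius)

# Rejection-sample points y of the ball C.
samples = []
while len(samples) < 1000:
    y = [random.uniform(-1.0, 3.0), random.uniform(-4.0, 0.0)]
    if math.dist(y, center) <= radius:
        samples.append(y)

print(z, satisfies_vi(x, z, samples))
```

Replacing `z` by any other point of the ball (for example, its center) makes the check fail for some sampled `y`, in line with the "only if" direction.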

Proposition 2.1(i) guarantees that if $\mathrm{Fix}(T) \neq \emptyset$, then $P_{\mathrm{Fix}(T)}(x)$ exists for all $x \in H$.

This paper discusses the following fixed point problem.

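Problem 2.1 Suppose that $T \colon H \to H$ is nonexpansive with $\mathrm{Fix}(T) \neq \emptyset$. Then

$$\text{find } x^\star \in H \text{ such that } T(x^\star) = x^\star.$$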

2.2 The Halpern algorithm and our algorithm

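The Halpern algorithm generates the sequence $\{x_n\}_{n \in \mathbb{N}}$ [[6], Subchapter 1.2], [9, 10] as follows: given $x, x_0 \in H$ and $\{\alpha_n\}_{n \in \mathbb{N}} \subset (0,1)$ satisfying $\lim_{n \to \infty} \alpha_n = 0$, $\sum_{n=0}^{\infty} \alpha_n = \infty$, and $\sum_{n=0}^{\infty} |\alpha_{n+1} - \alpha_n| < \infty$,

$$x_{n+1} := \alpha_n x + (1 - \alpha_n) T(x_n) \quad (n \in \mathbb{N}). \tag{1}$$

Algorithm (1) strongly converges to $P_{\mathrm{Fix}(T)}(x)$ ($\in \mathrm{Fix}(T)$) [[6], Theorem 6.17], [9, 10].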
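A minimal sketch of the Halpern iteration $x_{n+1} := \alpha_n x + (1-\alpha_n)T(x_n)$, assuming vectors in $\mathbb{R}^2$ stored as Python lists. The mapping $T$ is taken, purely for illustration, to be the projection onto the closed unit ball (nonexpansive, with $\mathrm{Fix}(T)$ equal to that ball), and $\alpha_n := 1/(n+2)$ is one hypothetical choice satisfying the three step-size conditions. For the anchor $x = (3, 4)$, the iterates should approach $P_{\mathrm{Fix}(T)}(x) = (0.6, 0.8)$.

```python
import math

def halpern(T, anchor, x0, alpha, n_iter):
    """Halpern iteration x_{n+1} = alpha(n) * anchor + (1 - alpha(n)) * T(x_n)."""
    x = list(x0)
    for n in range(n_iter):
        a = alpha(n)
        tx = T(x)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, tx)]
    return x

def T(x):
    """Hypothetical nonexpansive mapping: projection onto the closed unit ball of R^2."""
    r = math.hypot(*x)
    return list(x) if r <= 1.0 else [xi / r for xi in x]

anchor = [3.0, 4.0]   # the point x in (1)
x0 = [-5.0, 0.5]
x_final = halpern(T, anchor, x0, alpha=lambda n: 1.0 / (n + 2), n_iter=20000)
print(x_final)        # close to P_Fix(T)(anchor) = anchor / ||anchor||
```

In this example the distance to the limit decays roughly like $1/n$, which is the slow behavior that motivates the acceleration studied in this paper.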

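Here, we discuss Problem 2.1 when $\mathrm{Fix}(T)$ is the set of all minimizers of a convex, continuously Fréchet differentiable functional $f$ over $H$, and show that algorithm (1) is based on the steepest descent method [[16], Subchapter 3.3] to minimize $f$ over $H$. Suppose that the gradient of $f$, denoted by $\nabla f$, is Lipschitz continuous with a constant $L > 0$, and define $T \colon H \to H$ by

$$T := \mathrm{Id} - \lambda \nabla f, \tag{2}$$

where $\lambda \in (0, 2/L]$ and $\mathrm{Id}$ stands for the identity mapping. Accordingly, $T$ satisfies the nonexpansivity condition (see, e.g., [[12], Proposition 2.3]) and

$$\mathrm{Fix}(T) = \{x \in H : \nabla f(x) = 0\} = \mathrm{argmin}_{x \in H} f(x).$$

Therefore, algorithm (1) with $T := \mathrm{Id} - \lambda \nabla f$ can be expressed as follows:

$$x_{n+1} := \alpha_n x + (1 - \alpha_n)\left(x_n + \lambda d_n^f\right), \quad d_n^f := -\nabla f(x_n) \quad (n \in \mathbb{N}). \tag{3}$$

This implies that algorithm (3) uses the steepest descent direction $d_n^f := -\nabla f(x_n)$ [[16], Subchapter 3.3] of $f$ at $x_n$, and hence algorithm (3) is based on the steepest descent method.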
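The equivalence between the Halpern step with $T := \mathrm{Id} - \lambda\nabla f$ and a steepest descent step can be sketched numerically. The code below assumes a hypothetical separable quadratic $f(x) = \frac{1}{2}(2x_1^2 + 0.5x_2^2) - x_1 - x_2$, whose gradient is Lipschitz with $L = 2$, sets $\lambda := 2/L$, and runs $x_{n+1} := \alpha_n x + (1-\alpha_n)(x_n + \lambda d_n)$ with $d_n := -\nabla f(x_n)$ and $\alpha_n := 1/(n+2)$.

```python
import math

A_DIAG = [2.0, 0.5]   # hypothetical f(x) = 0.5 * sum(a_i * x_i^2) - sum(b_i * x_i)
B = [1.0, 1.0]
L = max(A_DIAG)       # Lipschitz constant of grad f
LAM = 2.0 / L         # lambda at the upper end of (0, 2/L]

def grad_f(x):
    return [a * xi - bi for a, xi, bi in zip(A_DIAG, x, B)]

def T(x):
    """T := Id - LAM * grad f."""
    return [xi - LAM * gi for xi, gi in zip(x, grad_f(x))]

def halpern_as_steepest_descent(anchor, x0, n_iter):
    """x_{n+1} = a_n * anchor + (1 - a_n) * (x_n + LAM * d_n) with d_n = -grad f(x_n)."""
    x = list(x0)
    for n in range(n_iter):
        a = 1.0 / (n + 2)
        d = [-g for g in grad_f(x)]                      # steepest descent direction
        step = [xi + LAM * di for xi, di in zip(x, d)]   # equals T(x_n)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, step)]
    return x

x_star = [b / a for a, b in zip(A_DIAG, B)]   # unique minimizer, grad f(x_star) = 0
x_n = halpern_as_steepest_descent(anchor=[3.0, -1.0], x0=[0.0, 0.0], n_iter=2000)
print(x_n, x_star)
```

With $\lambda = 2/L$ here, the plain iteration $x_{n+1} = T(x_n)$ sends the first coordinate $x_1$ to $1 - x_1$ and therefore oscillates, while the anchored iteration above still approaches the minimizer $(0.5, 2)$.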

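Meanwhile, conjugate gradient methods [[16], Chapter 5] are popular accelerations of the steepest descent method. The conjugate gradient direction of $f$ at $x_{n+1}$ ($n \in \mathbb{N}$) is $d_{n+1}^f := -\nabla f(x_{n+1}) + \beta_{n+1} d_n^f$, where $d_0^f := -\nabla f(x_0)$ and $\beta_{n+1} \in [0, \infty)$, which, together with (2), implies that

$$d_{n+1}^f = \frac{1}{\lambda}\left(T(x_{n+1}) - x_{n+1}\right) + \beta_{n+1} d_n^f. \tag{4}$$

Therefore, by replacing $d_n^f := -\nabla f(x_n)$ in algorithm (3) with $d_n^f$ defined by (4), we can formulate a novel algorithm for solving Problem 2.1.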
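The point of rewriting the conjugate gradient direction through $T$ is that it can then be evaluated without access to $\nabla f$. The sketch below checks numerically, for a hypothetical quadratic $f$ with $\nabla f(x) = (2x_1 - 1, 0.5x_2 - 1)$ and $\lambda := 1/L$, that $-\nabla f(x_{n+1}) + \beta_{n+1} d_n$ and $(T(x_{n+1}) - x_{n+1})/\lambda + \beta_{n+1} d_n$ agree; the test point, previous direction, and $\beta$ value are arbitrary.

```python
import math

A_DIAG = [2.0, 0.5]        # hypothetical quadratic: grad f(x) = A x - b, A diagonal
B = [1.0, 1.0]
LAM = 1.0 / max(A_DIAG)    # any lambda in (0, 2/L] would do

def grad_f(x):
    return [a * xi - bi for a, xi, bi in zip(A_DIAG, x, B)]

def T(x):
    """T := Id - LAM * grad f."""
    return [xi - LAM * gi for xi, gi in zip(x, grad_f(x))]

def cg_direction_from_gradient(x_next, d_prev, beta):
    """d_{n+1} = -grad f(x_{n+1}) + beta_{n+1} d_n (needs the gradient)."""
    return [-g + beta * d for g, d in zip(grad_f(x_next), d_prev)]

def cg_direction_from_T(x_next, d_prev, beta):
    """d_{n+1} = (T(x_{n+1}) - x_{n+1}) / lambda + beta_{n+1} d_n (needs only T)."""
    return [(t - x) / LAM + beta * d for t, x, d in zip(T(x_next), x_next, d_prev)]

x_next, d_prev, beta = [0.3, -1.2], [0.7, 0.1], 0.25
g1 = cg_direction_from_gradient(x_next, d_prev, beta)
g2 = cg_direction_from_T(x_next, d_prev, beta)
print(g1, g2)
```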

Before presenting the algorithm, we state the following propositions, which are used to prove the main theorem.

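Proposition 2.2 [[6], Lemmas 1.2 and 1.3]

Let $\{a_n\}_{n \in \mathbb{N}} \subset [0, \infty)$, $\{\alpha_n\}_{n \in \mathbb{N}} \subset (0,1)$, $\{b_n\}_{n \in \mathbb{N}} \subset \mathbb{R}$, and $\{c_n\}_{n \in \mathbb{N}} \subset [0, \infty)$ be sequences with $a_{n+1} \le (1 - \alpha_n) a_n + \alpha_n b_n + c_n$ ($n \in \mathbb{N}$). Suppose that $\sum_{n=0}^{\infty} \alpha_n = \infty$, $\limsup_{n \to \infty} b_n \le 0$, and $\sum_{n=0}^{\infty} c_n < \infty$. Then $\lim_{n \to \infty} a_n = 0$.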

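Proposition 2.3 [[19], Lemma 1]

Suppose that $\{x_n\}_{n \in \mathbb{N}} \subset H$ weakly converges to $\hat{x} \in H$ and $y \neq \hat{x}$. Then $\liminf_{n \to \infty} \|x_n - \hat{x}\| < \liminf_{n \to \infty} \|x_n - y\|$.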

3 Acceleration of the Halpern algorithm

We present the following algorithm.

Algorithm 3.1

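Step 0. Choose $\mu > 0$, $x \in H$, and $x_0 \in H$ arbitrarily, and set $\{\alpha_n\}_{n \in \mathbb{N}} \subset (0,1)$, $\{\beta_n\}_{n \in \mathbb{N}} \subset [0, \infty)$. Compute $d_0 := (T(x_0) - x_0)/\mu$.

Step 1. Given $x_n, d_n \in H$, compute $y_n, x_{n+1} \in H$ by

$$y_n := x_n + \mu d_n, \qquad x_{n+1} := \alpha_n x + (1 - \alpha_n) y_n.$$

Compute $d_{n+1} \in H$ as follows:

$$d_{n+1} := \frac{1}{\mu}\left(T(x_{n+1}) - x_{n+1}\right) + \beta_{n+1} d_n.$$

Put $n := n + 1$, and go to Step 1.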
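Algorithm 3.1 can be sketched as follows, under the reading $d_0 := (T(x_0) - x_0)/\mu$, $y_n := x_n + \mu d_n$, $x_{n+1} := \alpha_n x + (1-\alpha_n) y_n$, and $d_{n+1} := (T(x_{n+1}) - x_{n+1})/\mu + \beta_{n+1} d_n$. This is an illustrative Python sketch, not the authors' Java code; the mapping $T$ (projection onto the closed unit ball of $\mathbb{R}^2$) and the choices $\mu := 1$, $\alpha_n := 1/(n+2)$, $\beta_n := \alpha_n^2$ are assumptions made only for the example.

```python
import math

def algorithm_3_1(T, anchor, x0, mu, alpha, beta, n_iter):
    """Sketch of Algorithm 3.1 for a nonexpansive T on R^k (vectors as lists).

    alpha(n) in (0, 1) and beta(n) >= 0 are caller-supplied step-size rules.
    """
    x = list(x0)
    d = [(t - xi) / mu for t, xi in zip(T(x), x)]                      # Step 0: d_0
    for n in range(n_iter):
        y = [xi + mu * di for xi, di in zip(x, d)]                     # y_n = x_n + mu d_n
        a = alpha(n)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, y)]         # x_{n+1}
        b = beta(n + 1)
        d = [(t - xi) / mu + b * di for t, xi, di in zip(T(x), x, d)]  # d_{n+1}
    return x

def project_unit_ball(x):
    """Hypothetical nonexpansive T: projection onto the closed unit ball."""
    r = math.hypot(*x)
    return list(x) if r <= 1.0 else [xi / r for xi in x]

alpha = lambda n: 1.0 / (n + 2)
beta = lambda n: alpha(n) ** 2
x_final = algorithm_3_1(project_unit_ball, anchor=[3.0, 4.0], x0=[-5.0, 0.5],
                        mu=1.0, alpha=alpha, beta=beta, n_iter=20000)
print(x_final)  # should approach P_Fix(T)(anchor) = (0.6, 0.8)
```

The step-size rules are passed as functions so that other choices of $\{\alpha_n\}$ and $\{\beta_n\}$ can be tried without modifying the loop.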

We can check that Algorithm 3.1 coincides with the Halpern algorithm (1) when $\beta_n := 0$ ($n \in \mathbb{N}$) and $\mu := 1$.
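The reduction to the Halpern algorithm can be checked numerically. The sketch below, which assumes a rotation of $\mathbb{R}^2$ as the nonexpansive mapping and $\alpha_n := 1/(n+2)$ (both hypothetical choices), runs the Halpern iteration and Algorithm 3.1 with $\beta_n := 0$ and $\mu := 1$, so that $y_n = x_n + (T(x_n) - x_n) = T(x_n)$, from the same starting data, and reports the largest distance between the two trajectories, which should be at the level of floating-point rounding.

```python
import math

def rotate(x, theta=2.0):
    """Hypothetical T: rotation of R^2 by theta radians (an isometry, Fix(T) = {0})."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * x[0] - s * x[1], s * x[0] + c * x[1]]

def halpern_path(T, anchor, x0, alpha, n_iter):
    x, path = list(x0), [list(x0)]
    for n in range(n_iter):
        a = alpha(n)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, T(x))]
        path.append(x)
    return path

def algorithm_3_1_path(T, anchor, x0, mu, alpha, beta, n_iter):
    x, path = list(x0), [list(x0)]
    d = [(t - xi) / mu for t, xi in zip(T(x), x)]                      # d_0
    for n in range(n_iter):
        y = [xi + mu * di for xi, di in zip(x, d)]                     # y_n
        a = alpha(n)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, y)]         # x_{n+1}
        path.append(x)
        b = beta(n + 1)
        d = [(t - xi) / mu + b * di for t, xi, di in zip(T(x), x, d)]  # d_{n+1}
    return path

alpha = lambda n: 1.0 / (n + 2)
h = halpern_path(rotate, [1.0, 2.0], [-3.0, 0.5], alpha, 200)
g = algorithm_3_1_path(rotate, [1.0, 2.0], [-3.0, 0.5], 1.0, alpha, lambda n: 0.0, 200)
max_gap = max(math.dist(p, q) for p, q in zip(h, g))
print(max_gap)
```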

Throughout this section, we make the following assumption.

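Assumption 3.1 The sequences $\{\alpha_n\}_{n \in \mathbb{N}}$ and $\{\beta_n\}_{n \in \mathbb{N}}$ satisfy (see the Endnote)

(C1) $\lim_{n \to \infty} \alpha_n = 0$,

(C2) $\sum_{n=0}^{\infty} \alpha_n = \infty$,

(C3) $\sum_{n=0}^{\infty} |\alpha_{n+1} - \alpha_n| < \infty$,

(C4) $\beta_n \le \alpha_n^2$ ($n \in \mathbb{N}$).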

Moreover, $\{x_n\}_{n \in \mathbb{N}}$ in Algorithm 3.1 satisfies

(C5) $\{T(x_n) - x_n\}_{n \in \mathbb{N}}$ is bounded.

We now analyze the convergence of Algorithm 3.1.

Theorem 3.1 Under Assumption 3.1, the sequence $\{x_n\}_{n \in \mathbb{N}}$ generated by Algorithm 3.1 strongly converges to $P_{\mathrm{Fix}(T)}(x)$.

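Algorithm 3.1 with $\beta_n := 0$ ($n \in \mathbb{N}$) is the Halpern algorithm defined by

$$x_{n+1} := \alpha_n x + (1 - \alpha_n) T(x_n) \quad (n \in \mathbb{N}).$$

Hence, the nonexpansivity of $T$ ensures that, for all $p \in \mathrm{Fix}(T)$ and for all $n \in \mathbb{N}$,

$$\|x_{n+1} - p\| \le \alpha_n \|x - p\| + (1 - \alpha_n)\|x_n - p\| \le \max\{\|x - p\|, \|x_n - p\|\}. \tag{5}$$

Fix $p \in \mathrm{Fix}(T)$. From (5), we have $\|x_1 - p\| \le \max\{\|x - p\|, \|x_0 - p\|\}$. Assume that $\|x_m - p\| \le \max\{\|x - p\|, \|x_0 - p\|\}$ for some $m \in \mathbb{N}$. Then (5) implies that $\|x_{m+1} - p\| \le \max\{\|x - p\|, \|x_0 - p\|\}$. Hence, induction guarantees that

$$\|x_n - p\| \le \max\{\|x - p\|, \|x_0 - p\|\} \quad (n \in \mathbb{N}).$$

Therefore, $\{x_n\}_{n \in \mathbb{N}}$ is bounded. Moreover, since the nonexpansivity of $T$ ensures that $\{T(x_n)\}_{n \in \mathbb{N}}$ is also bounded, (C5) holds, and (C4) holds trivially. Accordingly, Theorem 3.1 says that if $\{\alpha_n\}_{n \in \mathbb{N}}$ satisfies (C1)-(C3), Algorithm 3.1 with $\beta_n := 0$ ($n \in \mathbb{N}$) (i.e., the Halpern algorithm) strongly converges to $P_{\mathrm{Fix}(T)}(x)$. This means that Theorem 3.1 generalizes the convergence analysis of the Halpern algorithm.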
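The bound $\|x_n - p\| \le \max\{\|x - p\|, \|x_0 - p\|\}$ for the Halpern iterates can be observed numerically. The sketch assumes, for illustration only, that $T$ is a rotation of $\mathbb{R}^2$ by 2 radians (an isometry with $\mathrm{Fix}(T) = \{0\}$, so $p = 0$) and that $\alpha_n := 1/(n+2)$, and records $\|x_n - p\|$ along the iterates.

```python
import math

def rotate(x, theta=2.0):
    """Hypothetical T: rotation of R^2 by theta radians, an isometry with Fix(T) = {0}."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * x[0] - s * x[1], s * x[0] + c * x[1]]

def halpern_distances(T, anchor, x0, p, n_iter):
    """Run the Halpern iteration and record ||x_n - p|| for n = 0, ..., n_iter."""
    x, dists = list(x0), [math.dist(x0, p)]
    for n in range(n_iter):
        a = 1.0 / (n + 2)
        x = [a * u + (1.0 - a) * v for u, v in zip(anchor, T(x))]
        dists.append(math.dist(x, p))
    return dists

anchor, x0, p = [1.0, 2.0], [-3.0, 0.5], [0.0, 0.0]
bound = max(math.dist(anchor, p), math.dist(x0, p))
dists = halpern_distances(rotate, anchor, x0, p, n_iter=500)
print(max(dists) <= bound + 1e-12, dists[-1])
```

The small tolerance only absorbs floating-point rounding; the inequality itself follows from the convex-combination estimate, not from the particular choice of rotation.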

3.1 Proof of Theorem 3.1

We first show the following lemma.

Lemma 3.1 Suppose that Assumption 3.1 holds. Then $\{d_n\}_{n \in \mathbb{N}}$, $\{x_n\}_{n \in \mathbb{N}}$, and $\{y_n\}_{n \in \mathbb{N}}$ are bounded.

Proof We have from (C1) and (C4) that . Accordingly, there exists such that for all . Define . Then (C5) implies that . Assume that for some . The triangle inequality ensures that

which means that for all , i.e., is bounded.

The definition of () implies that

The nonexpansivity of T and (6) imply that, for all and for all ,

Therefore, we find that, for all and for all ,

which, together with (C4) and (), means that, for all and for all ,

Induction guarantees that, for all and for all ,

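Therefore, $\{x_n\}_{n \in \mathbb{N}}$ is bounded.

The definition of $y_n$ ($n \in \mathbb{N}$) and the boundedness of $\{x_n\}_{n \in \mathbb{N}}$ and $\{d_n\}_{n \in \mathbb{N}}$ imply that $\{y_n\}_{n \in \mathbb{N}}$ is also bounded. This completes the proof. □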

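Lemma 3.2 Suppose that Assumption 3.1 holds. Then

(i) $\lim_{n \to \infty} \|x_{n+1} - x_n\| = 0$.

(ii) $\lim_{n \to \infty} \|x_n - T(x_n)\| = 0$.

(iii) $\limsup_{n \to \infty} \langle x - x^\star, y_n - x^\star \rangle \le 0$, where $x^\star := P_{\mathrm{Fix}(T)}(x)$.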

Proof (i) Equation (6), the triangle inequality, and the nonexpansivity of T imply that, for all ,

which, together with () and (C4), implies that, for all ,

On the other hand, from and (), we have that, for all ,

We also find that, for all ,

where . Hence, (7) and (8) ensure that, for all ,

Proposition 2.2, (C1), (C2), and (C3) lead us to

(ii) From (), (C1) means that . Since the triangle inequality ensures that (), we find from (9) that

From the definition of (), we have, for all ,

Since Equation (10) and guarantee that the right-hand side of the above inequality converges to 0, we find that

-

(iii)

From the limit superior of , there exists () such that

(12)

Moreover, since is bounded, there exists () which weakly converges to some point (∈H). Equation (10) guarantees that weakly converges to .

We shall show that . Assume that , i.e., . Proposition 2.3, (11), and the nonexpansivity of T ensure that

This is a contradiction. Hence, . Hence, (12) and Proposition 2.1(ii) guarantee that

This completes the proof. □

Now, we are in a position to prove Theorem 3.1.

Proof of Theorem 3.1 The inequality (), (6), and the nonexpansivity of T imply that, for all ,

where () and . We thus have that, for all ,

Proposition 2.2, (C1), (C2), and Lemma 3.2(iii) lead one to deduce that

This guarantees that $\{x_n\}_{n \in \mathbb{N}}$ generated by Algorithm 3.1 strongly converges to $P_{\mathrm{Fix}(T)}(x)$. □

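Suppose that $\mathrm{Fix}(T)$ is bounded. Then we can choose a bounded, closed convex set $C$ ($\supset \mathrm{Fix}(T)$) such that $P_C$ can be computed within a finite number of arithmetic operations (e.g., $C$ is a closed ball with a large enough radius). Hence, we can compute

$$x_{n+1} := P_C\left(\alpha_n x + (1 - \alpha_n) y_n\right) \tag{13}$$

instead of $x_{n+1} := \alpha_n x + (1 - \alpha_n) y_n$ in Algorithm 3.1. Since $x_n \in C$ ($n \ge 1$), the boundedness of $C$ means that $\{x_n\}_{n \in \mathbb{N}}$ is bounded. The nonexpansivity of $T$ guarantees that $\|T(x_n) - p\| \le \|x_n - p\|$ ($p \in \mathrm{Fix}(T)$), which means that $\{T(x_n)\}_{n \in \mathbb{N}}$ is bounded. Therefore, (C5) holds. We can prove that Algorithm 3.1 with (13) strongly converges to a point in $\mathrm{Fix}(T)$ by following the proof of Theorem 3.1.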
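The projected variant in which $x_{n+1} := P_C(\alpha_n x + (1-\alpha_n) y_n)$ can be sketched by adding one projection to the loop of Algorithm 3.1. Here $C$ is assumed to be the closed ball of radius 2 centered at the origin, which contains $\mathrm{Fix}(T)$ for the hypothetical choice $T :=$ projection onto the closed unit ball; the anchor $x = (3, 4)$ lies outside $C$, so the projection is active in the early iterations. The step sizes $\mu := 1$, $\alpha_n := 1/(n+2)$, $\beta_n := \alpha_n^2$ are illustrative assumptions.

```python
import math

def project_ball(x, center, radius):
    """Metric projection onto the closed ball with the given center and radius."""
    diff = [xi - ci for xi, ci in zip(x, center)]
    dist = math.sqrt(sum(v * v for v in diff))
    if dist <= radius:
        return list(x)
    return [ci + radius * v / dist for ci, v in zip(center, diff)]

def projected_algorithm_3_1(T, c_center, c_radius, anchor, x0, mu, alpha, beta, n_iter):
    """Algorithm 3.1 with x_{n+1} := P_C(alpha_n x + (1 - alpha_n) y_n), C a ball."""
    x = list(x0)
    d = [(t - xi) / mu for t, xi in zip(T(x), x)]                      # d_0
    max_norm = 0.0
    for n in range(n_iter):
        y = [xi + mu * di for xi, di in zip(x, d)]                     # y_n
        a = alpha(n)
        z = [a * u + (1.0 - a) * v for u, v in zip(anchor, y)]
        x = project_ball(z, c_center, c_radius)                        # x_{n+1} stays in C
        max_norm = max(max_norm, math.dist(x, c_center))
        b = beta(n + 1)
        d = [(t - xi) / mu + b * di for t, xi, di in zip(T(x), x, d)]  # d_{n+1}
    return x, max_norm

T = lambda x: project_ball(x, [0.0, 0.0], 1.0)   # hypothetical T, Fix(T) = unit ball
x_final, max_norm = projected_algorithm_3_1(
    T, [0.0, 0.0], 2.0, [3.0, 4.0], [-5.0, 0.5], 1.0,
    lambda n: 1.0 / (n + 2), lambda n: 1.0 / (n + 2) ** 2, 20000)
print(x_final, max_norm)
```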

Let us consider the case where $\mathrm{Fix}(T)$ is unbounded. In this case, we cannot choose a bounded $C$ satisfying $\mathrm{Fix}(T) \subset C$. Although Algorithm 3.1 can still be executed, the boundedness of $\{T(x_n) - x_n\}_{n \in \mathbb{N}}$ required by (C5) must then be verified. The Halpern algorithm (1), in contrast, can be applied to this case without any problem. However, it may converge slowly because it is based on the steepest descent method (see Section 1). Hence, in this case, it would be desirable to run both the Halpern algorithm and Algorithm 3.1.

4 Numerical examples and conclusion

Let us apply the Halpern algorithm (1) and Algorithm 3.1 to the following convex feasibility problem [5], [[1], Example 5.21].

Problem 4.1 Given a nonempty, closed convex set (),

where one assumes that .

Define a mapping by

where () stands for the metric projection onto . Since () is nonexpansive, T defined by (14) is also nonexpansive. Moreover, we find that

Therefore, Problem 4.1 coincides with Problem 2.1 with T defined by (14).

The experiment used an Apple MacBook Air with a 1.30 GHz Intel(R) Core(TM) i5-4250U CPU and 4 GB DDR3 memory. The Halpern algorithm (1) and Algorithm 3.1 were written in Java. The operating system of the computer was Mac OS X version 10.8.5.

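We set , , (), and () in Algorithm 3.1 and compared Algorithm 3.1 with the Halpern algorithm (1) with (). In the experiment, we set each $C_i$ as a closed ball with center $c_i$ and radius $r_i > 0$. Thus, the metric projection $P_i$ onto $C_i$ can be computed with

$$P_i(x) := c_i + \frac{r_i}{\|c_i - x\|}(x - c_i) \quad \text{if } \|c_i - x\| > r_i,$$

or $P_i(x) := x$ if $\|c_i - x\| \le r_i$.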
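The ball projection formula translates directly into code. To obtain a nonexpansive mapping whose fixed point set is the intersection of several balls (whenever that intersection is nonempty), the sketch below uses the average $T := \frac{1}{m}\sum_{i=1}^m P_i$; this averaged mapping is an assumption made for illustration and is not necessarily the mapping defined by (14). The centers and radii are hypothetical, chosen so that the balls share a common point, and nonexpansivity is only spot-checked on random pairs.

```python
import math
import random

def project_ball(x, c, r):
    """P_i for the closed ball with center c and radius r."""
    dist = math.dist(x, c)
    if dist <= r:
        return list(x)
    return [ci + r / dist * (xi - ci) for xi, ci in zip(x, c)]

def make_T(centers, radii):
    """Hypothetical nonexpansive T := (1/m) * sum_i P_i (an average of projections)."""
    m = len(centers)
    def T(x):
        ps = [project_ball(x, c, r) for c, r in zip(centers, radii)]
        return [sum(p[k] for p in ps) / m for k in range(len(x))]
    return T

centers = [[0.0, 0.0], [1.0, 0.0], [0.5, 0.8]]
radii = [1.0, 1.0, 1.0]
T = make_T(centers, radii)

common = [0.5, 0.3]   # lies in all three balls, hence should be a fixed point of T
print(T(common))

random.seed(1)
ok = True
for _ in range(1000):
    u = [random.uniform(-5, 5) for _ in range(2)]
    v = [random.uniform(-5, 5) for _ in range(2)]
    ok &= math.dist(T(u), T(v)) <= math.dist(u, v) + 1e-12
print(ok)
```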

We set , , (), and . The experiment used random vectors () generated by the java.util.Random class so as to satisfy . We also used the java.util.Random class to set a random initial point in .

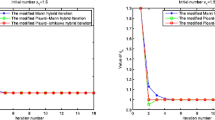

Figure 1 describes the behaviors of for the Halpern algorithm (1) and Algorithm 3.1 (proposed). The x-axis and y-axis represent the elapsed time and value of . The results show that compared with the Halpern algorithm, Algorithm 3.1 dramatically reduces the time required to satisfy . We found that the Halpern algorithm took 850 iterations to satisfy , whereas Algorithm 3.1 took only six.

This paper presented an algorithm that accelerates the Halpern algorithm for finding a fixed point of a nonexpansive mapping in a real Hilbert space, together with its convergence analysis. The analysis guarantees that, under certain assumptions, the proposed algorithm strongly converges to a fixed point of the mapping. We numerically compared the proposed and Halpern algorithms on a concrete fixed point problem. The results showed that the proposed algorithm needed far less running time and far fewer iterations than the Halpern algorithm.

Endnote

Examples of and satisfying (C1)-(C4) are and (), where .

References

Bauschke HH, Combettes PL: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, Berlin; 2011.

Goebel K, Kirk WA: Topics in Metric Fixed Point Theory. Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge; 1990.

Goebel K, Reich S: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Dekker, New York; 1984.

Takahashi W: Nonlinear Functional Analysis. Yokohama Publishers, Yokohama; 2000.

Bauschke HH, Borwein JM: On projection algorithms for solving convex feasibility problems. SIAM Rev. 1996, 38(3):367–426. 10.1137/S0036144593251710

Berinde V: Iterative Approximation of Fixed Points. Springer, Berlin; 2007.

Krasnosel’skiĭ MA: Two remarks on the method of successive approximations. Usp. Mat. Nauk 1955, 10: 123–127.

Mann WR: Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4: 506–510. 10.1090/S0002-9939-1953-0054846-3

Halpern B: Fixed points of nonexpansive maps. Bull. Am. Math. Soc. 1967, 73: 957–961. 10.1090/S0002-9904-1967-11864-0

Wittmann R: Approximation of fixed points of nonexpansive mappings. Arch. Math. 1992, 58(5):486–491. 10.1007/BF01190119

Nakajo K, Takahashi W: Strong convergence theorems for nonexpansive mappings and nonexpansive semigroups. J. Math. Anal. Appl. 2003, 279: 372–379. 10.1016/S0022-247X(02)00458-4

Iiduka H: Iterative algorithm for solving triple-hierarchical constrained optimization problem. J. Optim. Theory Appl. 2011, 148: 580–592. 10.1007/s10957-010-9769-z

Iiduka H: Fixed point optimization algorithm and its application to power control in CDMA data networks. Math. Program. 2012, 133: 227–242. 10.1007/s10107-010-0427-x

Iiduka H: Iterative algorithm for triple-hierarchical constrained nonconvex optimization problem and its application to network bandwidth allocation. SIAM J. Optim. 2012, 22(3):862–878. 10.1137/110849456

Iiduka H: Fixed point optimization algorithms for distributed optimization in networked systems. SIAM J. Optim. 2013, 23: 1–26. 10.1137/120866877

Nocedal J, Wright SJ: Numerical Optimization. 2nd edition. Springer Series in Operations Research and Financial Engineering. Springer, Berlin; 2006.

Iiduka H: Acceleration method for convex optimization over the fixed point set of a nonexpansive mapping. Math. Program. 2014. 10.1007/s10107-013-0741-1

Iiduka H, Yamada I: A use of conjugate gradient direction for the convex optimization problem over the fixed point set of a nonexpansive mapping. SIAM J. Optim. 2009, 19(4):1881–1893. 10.1137/070702497

Opial Z: Weak convergence of the sequence of successive approximation for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73: 591–597. 10.1090/S0002-9904-1967-11761-0

Acknowledgements

We are sincerely grateful to the associate editor Lai-Jiu Lin and the two anonymous reviewers for helping us improve the original manuscript.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sakurai, K., Iiduka, H. Acceleration of the Halpern algorithm to search for a fixed point of a nonexpansive mapping. Fixed Point Theory Appl 2014, 202 (2014). https://doi.org/10.1186/1687-1812-2014-202

DOI: https://doi.org/10.1186/1687-1812-2014-202