Abstract

When estimating channel parameters in linearly modulated communication systems, the iterative expectation-maximization (EM) algorithm can be used to exploit the signal energy associated with the unknown data symbols. At each EM iteration, the channel estimation requires the a posteriori probabilities (APPs) of these data symbols, resulting in a high computational complexity when channel coding is present. In this paper, we present a new approximation of the APPs of trellis-coded symbols, which is less complex and requires less memory than alternatives from the literature. By means of computer simulations, we show that the Viterbi decoder that uses the EM channel estimate resulting from this APP approximation experiences a negligible degradation in frame error rate (FER) performance, as compared to using the exact APPs in the channel estimation process.

1 Introduction

When the channel between a source and destination node is not known, it is essential for the destination to estimate this channel in order to decode the transmitted information. Typically, the source assists the destination with this task by transmitting known pilot symbols along with the unknown data symbols. Making use of only these pilot symbols, the destination is able to estimate the channel. The drawback of this pilot-aided method is that the channel information contained in the data part of the signal is not exploited during the estimation. Hence, in order to obtain an accurate channel estimate, a large number of pilot symbols should be present, yielding a substantial reduction of both power and bandwidth efficiency.

To address these problems, the iterative expectation-maximization (EM) algorithm [1, 2] can be used to also exploit the signal energy associated with the unknown data symbols during the channel estimation; this way, far fewer pilot symbols are needed to achieve a given estimation accuracy. Application of the EM algorithm requires that at each iteration the a posteriori probabilities (APPs) of these data symbols be calculated. When using a trellis code to map the information bits onto the data symbols, the Viterbi algorithm [3] minimizes the frame error rate (FER) by performing maximum likelihood (ML) sequence detection. The exact APPs of the trellis-coded data symbols are obtained by means of the Bahl-Cocke-Jelinek-Raviv (BCJR) algorithm [4], which, however, is roughly three times as complex as the Viterbi algorithm [5].

Several low-complexity approximations of the BCJR algorithm have been proposed in the literature, mainly in the context of iterative soft decoding of concatenated codes, referred to as turbo decoding. Among them are the max-log maximum a posteriori probability (MAP) algorithm [5] and the soft-output Viterbi algorithm (SOVA) [6], which are roughly twice as complex as the Viterbi algorithm [7], and the soft-output M-algorithm (SOMA) [8], which reduces complexity by considering only the M most likely states at each trellis section. Some improvements of the SOVA in the context of turbo decoding have been presented in [9–13]. Whereas these referenced papers make use of the approximate APPs inside the iterative decoder, we focus on using the approximate APPs only in the iterative estimation algorithm and use the standard Viterbi algorithm (which does not need symbol APPs) for decoding. Because an accurate approximation of the true APPs is less critical for the proper operation of the EM algorithm, we propose a simpler approximation of the APP computation with roughly half the complexity of max-log MAP and with substantially lower memory requirements. We compare the resulting EM algorithm, in terms of estimation accuracy and FER of the Viterbi decoder, with the cases where the EM estimator uses either the true APPs, or the APPs resulting from SOMA, or the APPs that are computed under the simplifying assumption of uncoded transmission.

1.1 Notations

All vectors are row vectors and are written in boldface. The Hermitian transpose, statistical expectation, the $m$th element, the first $m$ elements, and the estimate of the row vector $\mathbf{x}$ are denoted by $\mathbf{x}^H$, $E[\mathbf{x}]$, $x_m$, $\mathbf{x}_{1:m}$, and $\hat{\mathbf{x}}$, respectively.

2 System description

A source transmits to the destination a frame consisting of a sequence of $K_p$ pilot symbols $\mathbf{c}_p$ and a sequence of $K$ data symbols $\mathbf{c}$; the latter is obtained by applying $K_b$ information bits $\mathbf{b}$ to a trellis encoder [5]. We assume that the pilot symbols have a constant magnitude $\sqrt{E_s}$ and that the data symbols satisfy $E[|c_m|^2] = E_s$, with $E_s$ denoting the average symbol energy at the transmitter. Considering a channel that is characterized by a channel gain $h$ that is constant over the frame and a noise contribution $(\mathbf{n}_p, \mathbf{n})$, the signal received by the destination, $\mathbf{r}_t = (\mathbf{r}_p, \mathbf{r})$, is defined by

$$\mathbf{r}_p = h\,\mathbf{c}_p + \mathbf{n}_p, \tag{1}$$

$$\mathbf{r} = h\,\mathbf{c} + \mathbf{n}. \tag{2}$$

The elements of $\mathbf{n}_p$ and $\mathbf{n}$ are independent zero-mean circularly symmetric complex Gaussian random variables with variance $N_0$. The destination produces a channel gain estimate $\hat{h}$ and uses a Viterbi decoder to obtain the ML information bit sequence decision $\hat{\mathbf{b}}$, with $\hat{\mathbf{b}}$ belonging to the set of all information bit sequences of length $K_b$. If the estimate $\hat{h}$ were equal to the actual channel gain $h$, the Viterbi decoder would minimize the FER given by $\Pr[\hat{\mathbf{b}} \neq \mathbf{b}]$.

As the operation of the Viterbi decoder is well documented in the literature [3], we only briefly recall its main features. The decoder makes use of the fact that the trellis encoder can be described as a finite-state machine. At the start of the $m$th symbol interval, the encoder accepts a vector $\mathbf{u}_m$ of $N_b$ information bits (the information bit sequence $\mathbf{b}$ is partitioned as $\mathbf{b} = (\mathbf{u}_1, \cdots, \mathbf{u}_K)$) and outputs a coded data symbol $c_m$, which is given by the output equation $c_m = g(S_m, \mathbf{u}_m)$; here, $S_m$ denotes the encoder state at the start of the $m$th symbol interval. At the end of the $m$th symbol interval, the encoder has reached the state $S_{m+1}$ given by the state equation $S_{m+1} = f(S_m, \mathbf{u}_m)$. For any $m$, $S_m$ belongs to the set $\Sigma = \{\sigma_1, \cdots, \sigma_L\}$, with $L$ denoting the number of encoder states. The resulting trellis code has a rate of $N_b$ information bits per coded symbol. The Viterbi algorithm recursively computes the sequences $\hat{\mathbf{c}}_{1:m}(\sigma_l)$ and their log-likelihood for all $\sigma_l \in \Sigma$ and $m = 1, \cdots, K$; here, $\hat{\mathbf{c}}_{1:m}(\sigma_l)$ is the ML sequence consisting of $m$ data symbols that yields $S_{m+1} = \sigma_l$. The log-likelihood of $\hat{\mathbf{c}}_{1:m}(\sigma_l)$ is given by $-\Lambda_m(\sigma_l)/N_0$, where the path metric $\Lambda_m(\sigma_l)$ satisfies the following recursion:

$$\Lambda_m(\sigma_l) = \min_{(S_m,\, c_m)} \left[ \Lambda_{m-1}(S_m) + \lambda_m(c_m) \right], \tag{3}$$

where $S_m \in \Sigma$, and $(S_m, c_m)$ must be consistent with the encoder operation (i.e., a bit vector $\mathbf{u}_m$, satisfying both $c_m = g(S_m, \mathbf{u}_m)$ and $\sigma_l = f(S_m, \mathbf{u}_m)$, must exist). The quantity $\lambda_m(c_m)$ denotes the branch metric corresponding to $c_m$, which is given by

$$\lambda_m(c_m) = \left| r_m - \hat{h}\, c_m \right|^2. \tag{4}$$

The value of $c_m$ that results from the minimization in (3) is $\hat{c}_m(\sigma_l)$, the last element of $\hat{\mathbf{c}}_{1:m}(\sigma_l)$. The recursion starts from $\Lambda_0(\sigma_l)$, which is determined by the a priori distribution of the initial state $S_1$. The ML data sequence decision is given by $\hat{\mathbf{c}} = \hat{\mathbf{c}}_{1:K}(\hat{\sigma})$, where $\hat{\sigma} = \arg\min_{\sigma_l \in \Sigma} \Lambda_K(\sigma_l)$. The ML decision $\hat{\mathbf{b}}$ is the information bit sequence consistent with $\hat{\mathbf{c}}$ and the encoder operation. The Viterbi decoder operation requires the storage of $L$ data symbol sequences of length $K$. The above is straightforwardly extended to (i) multidimensional trellis coding, where a transition from state $S_m$ to state $S_{m+1}$ gives rise to multiple data symbols, and (ii) the presence of termination bits at the encoder input to impose a known final state $S_{K+1}$.
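The recursion described above can be illustrated with a minimal sketch. It is not the decoder used in the simulations: it assumes a hypothetical toy encoder with four states and octal generators (5,7), Gray-mapped to unit-energy 4-QAM, and a known initial state 0. The names `next_state`, `output`, and `viterbi` are illustrative only.

```python
import math

SQRT2 = math.sqrt(2.0)
NUM_STATES = 4  # toy 4-state encoder (assumption), not the 8-state code of Section 5


def next_state(s, u):
    """State equation S_{m+1} = f(S_m, u_m); the state holds the last two input bits."""
    return 2 * u + (s >> 1)


def output(s, u):
    """Output equation c_m = g(S_m, u_m): generators (5,7) octal, Gray-mapped 4-QAM."""
    u1, u2 = s >> 1, s & 1          # previous bit and the bit before it
    v1, v2 = u ^ u2, u ^ u1 ^ u2    # the two coded bits
    return complex(1 - 2 * v1, 1 - 2 * v2) / SQRT2


def viterbi(r, h_hat):
    """Return the ML symbol sequence and its path metric, assuming initial state 0."""
    metrics = [0.0] + [math.inf] * (NUM_STATES - 1)
    survivors = [[] for _ in range(NUM_STATES)]
    for r_m in r:
        new_metrics = [math.inf] * NUM_STATES
        new_survivors = [[] for _ in range(NUM_STATES)]
        for s in range(NUM_STATES):
            if math.isinf(metrics[s]):
                continue  # state not reachable yet
            for u in (0, 1):
                c = output(s, u)
                t = next_state(s, u)
                # path metric recursion with branch metric |r_m - h_hat * c|^2
                cand = metrics[s] + abs(r_m - h_hat * c) ** 2
                if cand < new_metrics[t]:
                    new_metrics[t] = cand
                    new_survivors[t] = survivors[s] + [c]
        metrics, survivors = new_metrics, new_survivors
    best = min(range(NUM_STATES), key=lambda s: metrics[s])
    return survivors[best], metrics[best]
```

Note that the sketch stores one survivor sequence per state, which reflects the storage of L symbol sequences mentioned above.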

3 Estimation strategy

As mentioned before, the source transmits pilot symbols $\mathbf{c}_p$ to assist the destination with the channel estimation process. The ML pilot-based estimate of $h$ that uses only $\mathbf{r}_p$ is given by [14]

$$\hat{h} = \frac{\mathbf{r}_p \mathbf{c}_p^H}{K_p E_s}. \tag{5}$$

The estimate (5) gives rise to a mean-squared estimation error (MSE) that is equal to

$$E\left[ |\hat{h} - h|^2 \right] = \frac{N_0}{K_p E_s}. \tag{6}$$

Hence, for given Es, the estimation accuracy is improved by increasing Kp.
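As an illustration, the pilot-based estimate and its MSE can be checked numerically. The sketch below assumes pilots of magnitude $\sqrt{E_s}$ and complex noise of variance $N_0$ (i.e., $N_0/2$ per real dimension); the function names are hypothetical.

```python
import math
import random


def pilot_estimate(r_p, c_p, Es):
    """Pilot-based estimate r_p c_p^H / (Kp * Es)."""
    return sum(r * c.conjugate() for r, c in zip(r_p, c_p)) / (len(c_p) * Es)


def complex_noise(rng, n0):
    """Zero-mean circularly symmetric complex Gaussian sample with variance n0."""
    sigma = math.sqrt(n0 / 2)
    return complex(rng.gauss(0, sigma), rng.gauss(0, sigma))


def empirical_mse(h, Kp, Es, n0, trials, seed=0):
    """Monte-Carlo estimate of E[|h_hat - h|^2] for the pilot-only estimator."""
    rng = random.Random(seed)
    c_p = [complex(math.sqrt(Es), 0)] * Kp
    total = 0.0
    for _ in range(trials):
        r_p = [h * c + complex_noise(rng, n0) for c in c_p]
        total += abs(pilot_estimate(r_p, c_p, Es) - h) ** 2
    return total / trials
```

With enough trials, `empirical_mse(h, Kp, Es, n0, trials)` should approach `n0 / (Kp * Es)`, independently of the value of `h`.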

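When also exploiting the data part of the received signal, i.e., $\mathbf{r}$, the ML estimate of $h$ is given by

$$\hat{h}_{\mathrm{ML}} = \arg\max_{\tilde{h}} \sum_{\mathbf{c}} p(\mathbf{r}_p, \mathbf{r} \mid \mathbf{c}, \tilde{h}), \tag{8}$$

where the summation runs over all valid, equally likely data symbol sequences $\mathbf{c}$.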

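Because of the summation over all possible data sequences, obtaining the ML estimate from (8) is computationally complex for large $K_b$. Fortunately, the EM algorithm [1] allows the ML estimate to be computed iteratively. For the problem at hand, the EM channel estimate during the $i$th iteration is obtained as

$$\hat{h}^{(i)} = \frac{\mathbf{r}_p \mathbf{c}_p^H + \sum_{m=1}^{K} r_m \left( \mu_m^{(i-1)} \right)^*}{K_p E_s + \sum_{m=1}^{K} \rho_m^{(i-1)}}, \tag{9}$$

where $\mu_m^{(i-1)}$ and $\rho_m^{(i-1)}$ denote the a posteriori mean of $c_m$ and $|c_m|^2$, respectively:

$$\mu_m^{(i-1)} = \sum_{\alpha} \alpha \, \Pr\left[ c_m = \alpha \mid \mathbf{r}, \hat{h}^{(i-1)} \right], \tag{10}$$

$$\rho_m^{(i-1)} = \sum_{\alpha} |\alpha|^2 \, \Pr\left[ c_m = \alpha \mid \mathbf{r}, \hat{h}^{(i-1)} \right], \tag{11}$$

with $\alpha$ ranging over the constellation points.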

When the data symbols have a constant magnitude, the denominator of (9) reduces to $(K_p + K)E_s$. The iterations are initialized with the pilot-based estimate from (5), which we denote as $\hat{h}^{(0)}$.
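A single EM update can be sketched as follows. The representation of the APPs as one dictionary per data symbol (constellation point to probability) and the names `em_update`, `mu`, and `rho` are assumptions made for illustration; any APP computation, exact or approximate, can supply the `app` argument.

```python
def em_update(r_p, c_p, r, app, Es):
    """One EM channel-gain update from pilots, data observations, and symbol APPs.

    app[m] maps each constellation point alpha to the (approximate) APP of c_m = alpha.
    """
    num = sum(x * c.conjugate() for x, c in zip(r_p, c_p))  # pilot correlation
    den = len(c_p) * Es                                     # pilot energy Kp * Es
    for r_m, probs in zip(r, app):
        mu = sum(p * a for a, p in probs.items())             # posterior mean of c_m
        rho = sum(p * abs(a) ** 2 for a, p in probs.items())  # posterior mean of |c_m|^2
        num += r_m * mu.conjugate()
        den += rho
    return num / den
```

When the APPs are concentrated on the transmitted symbols and there is no noise, the update returns the true channel gain; with uniform APPs over a zero-mean constellation, the data part contributes nothing to the correlation in the numerator while its energy still enters the denominator.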

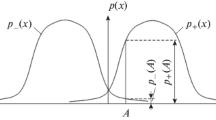

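The APP of $c_m$ (for notational convenience, we drop the iteration index) can be expressed as

$$\Pr\left[ c_m = \alpha \mid \mathbf{r}, \hat{h} \right] \propto \sum_{\mathbf{c}:\, c_m = \alpha} p(\mathbf{r} \mid \mathbf{c}, \hat{h}), \tag{12}$$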

where $\propto$ means equal within a normalization factor, and the summation is over all valid codewords with $c_m$ equal to $\alpha$. Making use of the finite-state description of the encoder, the APPs (12) can be computed efficiently for $m = 1, \cdots, K$ by means of the BCJR algorithm [4]. However, its complexity is still about three times that of the Viterbi algorithm [5]. Hence, assuming that the EM algorithm converges after $I$ iterations, the BCJR algorithm must be applied $I$ times, after which the Viterbi algorithm (with $\hat{h} = \hat{h}^{(I)}$) is used to detect the information bit sequence. The resulting complexity is $3I + 1$ times that of a single use of the Viterbi decoder.

The MSE resulting from (8) cannot be obtained in closed form. Therefore, we resort to the modified Cramer-Rao bound (MCRB) [15], which is a fundamental lower bound on the MSE performance of any unbiased estimate. For the observation model (1)-(2), the MCRB reduces to

$$E\left[ |\hat{h} - h|^2 \right] \geq \mathrm{MCRB} = \frac{N_0}{(K_p + K) E_s}. \tag{13}$$

Comparison of (6) with (13) indicates the possibility of substantially reducing the MSE when also including the data portion r of the observation in the estimation process, especially when K ≫ Kp.
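To give a feeling for the size of this potential gain, the ratio of the pilot-only MSE $N_0/(K_p E_s)$ to the bound $N_0/((K_p + K)E_s)$ can be evaluated directly. The helper below is a hypothetical illustration; the frame parameters in the usage line are those of Section 5.

```python
import math


def mse_gain_db(Kp, K):
    """Ratio (in dB) of the pilot-only MSE to the MCRB, i.e. 10*log10((Kp + K) / Kp)."""
    return 10 * math.log10((Kp + K) / Kp)


gain = mse_gain_db(5, 200)  # about 16.1 dB for Kp = 5, K = 200
```

The ratio depends only on $K_p$ and $K$, since $N_0$ and $E_s$ cancel.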

4 Complexity reduction

In order to avoid the computational complexity associated with the BCJR algorithm (or the max-log MAP or SOMA approximations), we consider two reduced-complexity approximations for computing the APPs. In the first algorithm (A1), we do not exploit the code properties and compute the APPs as if the transmission were uncoded. The second algorithm (A2), which exploits the path metrics from the Viterbi decoder to approximate the APPs, represents our main contribution.

4.1 Algorithm A1

A trivial low-complexity approximation is obtained by simply ignoring the code constraint during the EM iterations. More specifically, it is assumed that the data symbols are statistically independent, which implies that the received samples $r_k$ with $k = 1, \ldots, K$ and $k \neq m$ do not contain any information about $c_m$. In (12), we can thus replace $\mathbf{r}$ by $r_m$. This yields

$$\Pr\left[ c_m = \alpha \mid r_m, \hat{h} \right] \propto p(r_m \mid c_m = \alpha, \hat{h}) \propto \exp\left( -\frac{\lambda_m(\alpha)}{N_0} \right),$$

where $\lambda_m(\alpha)$ follows from (4).
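A minimal sketch of algorithm A1 for a single received sample is given below. The function name `a1_apps` and the list-based constellation argument are assumptions; the smallest branch metric is subtracted before exponentiation purely for numerical stability, which does not change the normalized result.

```python
import math


def a1_apps(r_m, h_hat, n0, constellation):
    """Symbol APPs under the uncoded assumption: proportional to exp(-|r_m - h_hat*alpha|^2 / n0)."""
    metrics = [abs(r_m - h_hat * a) ** 2 for a in constellation]  # branch metrics
    ref = min(metrics)                                            # avoids underflow at high SNR
    weights = [math.exp(-(lam - ref) / n0) for lam in metrics]
    total = sum(weights)
    return {a: w / total for a, w in zip(constellation, weights)}
```

The returned dictionary sums to one and is largest for the constellation point closest to $r_m / \hat{h}$.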

4.2 Algorithm A2

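Here, we present a new low-complexity approximation to the APP computation that makes use only of the Viterbi decoder metrics $\{\Lambda_m(\sigma_l)\}$ of the surviving paths. The APP approximation makes use of the following simplifications:

(i) We ignore future observations. More specifically, we approximate the APP of a symbol $c_m$ by conditioning on only the past and present observations $\mathbf{r}_{1:m}$. This APP is obtained by simply replacing in the right-hand side of (12) the vectors $\mathbf{r}$ and $\mathbf{c}$ by $\mathbf{r}_{1:m}$ and $\mathbf{c}_{1:m}$, respectively:

$$\Pr\left[ c_m = \alpha \mid \mathbf{r}_{1:m}, \hat{h} \right] \propto \sum_{\mathbf{c}_{1:m}:\, c_m = \alpha} p(\mathbf{r}_{1:m} \mid \mathbf{c}_{1:m}, \hat{h}). \tag{16}$$

(ii) From all paths yielding $S_{m+1} = \sigma_l$ ($l = 1, 2, \ldots, L$), we only keep the most likely path, which corresponds to the symbol sequence $\hat{\mathbf{c}}_{1:m}(\sigma_l)$; the likelihood of the other, non-surviving, paths is assumed to be zero. This yields the approximation

$$\Pr\left[ c_m = \alpha \mid \mathbf{r}_{1:m}, \hat{h} \right] \propto \sum_{l:\, \hat{c}_m(\sigma_l) = \alpha} p(\mathbf{r}_{1:m} \mid \hat{\mathbf{c}}_{1:m}(\sigma_l), \hat{h}). \tag{18}$$

(iii) We replace in (18) the summation over the valid symbol sequences by a maximization and finally obtain the approximation

$$\Pr\left[ c_m = \alpha \mid \mathbf{r}_{1:m}, \hat{h} \right] \propto \max_{l:\, \hat{c}_m(\sigma_l) = \alpha} p(\mathbf{r}_{1:m} \mid \hat{\mathbf{c}}_{1:m}(\sigma_l), \hat{h}). \tag{19}$$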

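Hence, from all surviving sequences $\hat{\mathbf{c}}_{1:m}(\sigma_l)$ with $\hat{c}_m(\sigma_l) = \alpha$, only the sequence with the highest likelihood contributes to the APP approximation (19). Taking into account the relation between the Viterbi decoder path metric $\Lambda_m(\sigma_l)$ from (3) and the likelihood of $\hat{\mathbf{c}}_{1:m}(\sigma_l)$, we obtain from (19) the approximation

$$\Pr\left[ c_m = \alpha \mid \mathbf{r}_{1:m}, \hat{h} \right] \propto \exp\left( -\frac{\Lambda_m^{(\alpha)}}{N_0} \right), \tag{20}$$

with

$$\Lambda_m^{(\alpha)} = \min_{l:\, \hat{c}_m(\sigma_l) = \alpha} \Lambda_m(\sigma_l). \tag{21}$$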

Hence, when the surviving path with the largest likelihood at the end of the $m$th trellis section has $c_m = \alpha$, our APP approximation for the symbol $c_m$ is largest for $c_m = \alpha$. Approximating the APPs for $m = 1, \cdots, K$ using (20) and (21) yields a complexity similar to that of the Viterbi algorithm. Hence, assuming that the EM algorithm converges after $I$ iterations, the complexity relative to a single use of the Viterbi decoder is $I + 1$ for algorithm A2, whereas it is $3I + 1$ when the APPs are computed by means of the BCJR algorithm. Note that, unlike the Viterbi algorithm, the computation of the APP approximation of $c_m$ does not require storing the data symbol decisions for $n < m$, so that algorithm A2 uses considerably less memory than the Viterbi algorithm does.
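The following sketch illustrates algorithm A2 under stated assumptions: it uses a hypothetical toy encoder with four states and octal generators (5,7), Gray-mapped to unit-energy 4-QAM, with known initial state 0, rather than the code of Section 5. At each trellis section, it keeps only the path metric and the most recent symbol of each survivor, takes the smallest metric among survivors ending in each symbol value, and normalizes the resulting exponentials.

```python
import math

SQRT2 = math.sqrt(2.0)
NUM_STATES = 4  # toy encoder (assumption), not the 8-state code of Section 5


def next_state(s, u):
    """State equation: the state holds the last two input bits."""
    return 2 * u + (s >> 1)


def output(s, u):
    """Output equation: generators (5,7) octal, Gray-mapped unit-energy 4-QAM."""
    u1, u2 = s >> 1, s & 1
    return complex(1 - 2 * (u ^ u2), 1 - 2 * (u ^ u1 ^ u2)) / SQRT2


def a2_apps(r, h_hat, n0):
    """Approximate APPs from survivor path metrics, using past and present samples only."""
    metrics = [0.0] + [math.inf] * (NUM_STATES - 1)  # known initial state 0
    apps = []
    for r_m in r:
        new_metrics = [math.inf] * NUM_STATES
        last_symbol = [None] * NUM_STATES  # only the newest symbol of each survivor is kept
        for s in range(NUM_STATES):
            if math.isinf(metrics[s]):
                continue
            for u in (0, 1):
                c, t = output(s, u), next_state(s, u)
                cand = metrics[s] + abs(r_m - h_hat * c) ** 2
                if cand < new_metrics[t]:
                    new_metrics[t], last_symbol[t] = cand, c
        best = {}  # smallest survivor metric per symbol value alpha
        for t in range(NUM_STATES):
            c = last_symbol[t]
            if c is not None and new_metrics[t] < best.get(c, math.inf):
                best[c] = new_metrics[t]
        ref = min(best.values())  # subtracted for numerical stability only
        weights = {c: math.exp(-(lam - ref) / n0) for c, lam in best.items()}
        total = sum(weights.values())
        apps.append({c: w / total for c, w in weights.items()})
        metrics = new_metrics
    return apps
```

Symbol values that do not terminate any surviving path are simply absent from the returned dictionaries, which corresponds to an approximate APP of zero. No survivor sequences are stored, in line with the memory argument above.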

Whereas simplifications similar to (ii) and (iii) have also been applied to APP algorithms from the literature (e.g., max-log MAP), this is not the case for simplification (i). As the APP algorithms from the literature also make use of future observations, the APP of $c_m$ requires updating each time future observations $r_{m+1}, r_{m+2}, \ldots$ become available, yielding a higher computational complexity and higher memory requirements. Hence, approximation (i) is crucial for obtaining a very simple APP computation.

5 Numerical results

We consider a trellis encoder consisting of an eight-state rate-1/2 $(15,17)_8$ convolutional encoder with known initial and final states, followed by Gray mapping of the convolutional encoder output bits to 4-QAM symbols. Each frame contains $K_p = 5$ pilot symbols and $K = 200$ data symbols (including four termination symbols). We consider both an additive white Gaussian noise (AWGN) channel with $h = 1$ and a Rayleigh fading channel with $E[|h|^2] = 1$. We investigate the performance of the estimator and the Viterbi decoder by means of Monte-Carlo simulations, in terms of MSE and FER, respectively. The EM algorithm has essentially converged after only one iteration, i.e., $I = 1$; for $I = 1$, the complexity reduction obtained by computing the APPs using the new algorithm A2 instead of the BCJR algorithm is about a factor of 2. In the following, we consider the APP computation according to the BCJR algorithm, the SOMA ($M = 4$) version of the exact APP algorithm from [16], and the above A1 and A2 algorithms.
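The factor of 2 follows from the complexity counts given earlier: $I$ APP passes plus one final Viterbi decoding. A small sketch, with the hypothetical helper `relative_complexity` and per-pass costs expressed in units of one Viterbi pass (about 3 for BCJR and about 1 for A2, as stated in the text):

```python
def relative_complexity(iterations, app_cost):
    """Total cost in units of one Viterbi pass: APP passes plus the final Viterbi decoding."""
    return iterations * app_cost + 1


bcjr_total = relative_complexity(1, 3)  # 3I + 1 = 4 for I = 1
a2_total = relative_complexity(1, 1)    # I + 1 = 2 for I = 1
reduction = bcjr_total / a2_total       # 2.0
```

For larger $I$, the ratio $(3I + 1)/(I + 1)$ grows toward 3.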

The MSE performance for the AWGN channel and the Rayleigh fading channel is depicted in Figures 1 and 2, where the MCRB (13) is used as benchmark. The MSE performance of the EM-based channel estimators (a) converges to the MCRB with increasing signal-to-noise ratio (SNR) and (b) outperforms the system where only pilot symbols are used to estimate h. Ranking the APP algorithms according to the resulting MSE, we see that BCJR (which computes exact APPs) performs best, SOMA (which takes past, present, and future observations into account) is a very close second, and A2 (which ignores future observations) is only slightly worse than BCJR and SOMA; A1 (which uses only the present observation) is considerably worse than BCJR, SOMA, and A2 for low and medium SNRs.

Figures 3 and 4 show the FER of the Viterbi decoder for the AWGN channel and the Rayleigh fading channel as a function of $E_b/N_0$, with $E_b$ denoting the energy per information bit. Hence,

$$\frac{E_b}{N_0} = \frac{K_p + K}{N_b \left( K - K_{\mathrm{ter}} \right)} \, \frac{E_s}{N_0}, \tag{22}$$

where $K_{\mathrm{ter}}$ is the number of termination symbols. As benchmark, we use the FER of a reference system with $(K, K_p) = (200, 0)$ where the channel coefficient $h$ is known to the receiver. Hence, as compared to this reference system, the system with $(K, K_p) = (200, 5)$ suffers from an irreducible power efficiency loss of $10 \log_{10}(205/200) \approx 0.11$ dB because of the presence of pilot symbols; the actual degradation will exceed 0.11 dB because of channel estimation errors. We observe the following: (a) The A2, SOMA, and BCJR algorithms yield essentially the same FER performance and require, for a given FER, about 0.11 dB more $E_b/N_0$ than the reference system; for these algorithms, the channel estimation is sufficiently accurate so that the degradation is mainly determined by the power efficiency loss caused by the pilot symbol insertion. (b) The A1 algorithm performs worse than the A2, SOMA, and BCJR algorithms because ignoring the code constraints when computing the APPs yields less accurate channel estimates. (c) The FER performance is worst when only pilot symbols are used to estimate $h$. Hence, from a computational complexity and memory requirement point of view, it is advantageous to compute the trellis-coded symbol APPs in the EM algorithm by means of the new algorithm A2 (which ignores future observations) rather than the considered APP algorithms from the literature (which also take future observations into account).
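The pilot-induced loss quoted above can be reproduced with a few lines. The helper names are hypothetical, and `eb_over_es` assumes that the frame energy $(K_p + K)E_s$ is shared among $N_b (K - K_{\mathrm{ter}})$ information bits.

```python
import math


def eb_over_es(K, Kp, K_ter, Nb):
    """Ratio Eb/Es when (Kp + K) symbols carry Nb * (K - K_ter) information bits."""
    return (Kp + K) / (Nb * (K - K_ter))


def pilot_loss_db(K, Kp):
    """Power efficiency loss (dB) relative to the same frame without pilot symbols."""
    return 10 * math.log10((K + Kp) / K)


loss = pilot_loss_db(200, 5)  # about 0.107 dB, i.e. the 0.11 dB quoted in the text
```

The termination symbols cancel in this comparison because both systems use the same $K$ and $K_{\mathrm{ter}}$.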

6 Conclusions

EM-based channel estimation in the presence of trellis-coded modulation requires the use of the BCJR algorithm to efficiently compute the exact symbol APPs. As the computational complexity of the BCJR algorithm is about three times that of the Viterbi algorithm which we use for decoding, we have proposed a new approximation to the APP computation that outperforms the main APP algorithms from the literature in terms of computational complexity and memory requirements. By means of computer simulations, we have shown that when using the new APP computation instead of the exact APPs from the BCJR algorithm, the resulting Viterbi decoder FER performances are essentially the same. This motivates the use of the new APP approximation in the context of EM channel estimation for trellis-coded modulation.

So far, we have limited our attention to a time-invariant channel, i.e., the channel gain takes a constant value $h$ during a frame. For time-varying channels, a similar approximation of the symbol APPs can be derived. Denoting by $h_m$ the channel gain associated with the data symbol $c_m$, the branch metric corresponding to $c_m$ becomes

$$\lambda_m(c_m) = \left| r_m - \hat{h}_m c_m \right|^2. \tag{23}$$

Note that (23) is obtained by replacing in (4) $\hat{h}$ by $\hat{h}_m$, which denotes the estimate of $h_m$. Collecting the channel gain estimates at the data symbol positions into the vector $\hat{\mathbf{h}}$, the likelihood $p(\mathbf{r} \mid \mathbf{c}, \hat{\mathbf{h}})$ can be decomposed as

$$p(\mathbf{r} \mid \mathbf{c}, \hat{\mathbf{h}}) = \prod_{m=1}^{K} p(r_m \mid c_m, \hat{h}_m), \tag{24}$$

where $p(r_m \mid c_m, \hat{h}_m) \propto \exp\left( -\lambda_m(c_m)/N_0 \right)$, with $\lambda_m(c_m)$ given by (23). Hence, the symbol APP approximations for time-varying channels are simply obtained by computing the quantity $\lambda_m(c_m)$ in the APP approximations A1 and A2 from the right-hand side of (23) instead of (4). The resulting approximated symbol APPs can be used in, for instance, a MAP EM channel estimation algorithm that exploits the correlation between the time-varying channel gains [17].

References

[1] Dempster A, Laird N, Rubin DB: Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Stat. Soc 1977, 39(1):1-38.

[2] Choi J: Adaptive and Iterative Signal Processing in Communications. Cambridge University Press, New York; 2006.

[3] Viterbi AJ: Error bounds for convolutional codes and an asymptotically optimum decoding algorithm. IEEE Trans. Inform. Theor 1967, 13: 260-269.

[4] Bahl L, Cocke J, Jelinek F, Raviv J: Optimal decoding of linear codes for minimizing symbol error rate. IEEE Trans. Inform. Theor 1974, IT-20(2):284-287.

[5] Lin S, Costello D: Error Control Coding. Pearson Education Inc., Upper Saddle River; 2004.

[6] Hagenauer J: A Viterbi algorithm with soft-decision output and its applications. GLOBECOM 1989, 3: 1680-1686.

[7] Mansour M, Bahai A: Complexity based design for iterative joint equalization and decoding. Proc. IEEE VTC 2002, 4: 1699-1704.

[8] Mehlan R, Meyr H: Soft output M-algorithm equalizer and trellis-coded modulation for mobile radio communication. Proc. IEEE VTC 1992, 2: 586-591.

[9] Papaharalabos S, Sweeney P, Evans BG, Mathiopoulos PT: Improved performance SOVA turbo decoder. IEE Proc. Commun 2006, 153(5):586-590. 10.1049/ip-com:20050247

[10] Hamad AA: Performance enhancement of SOVA based decoder in SCCC and PCCC schemes. SciRes. Wireless Engineering and Technology 2013, 4: 40-45. 10.4236/wet.2013.41006

[11] Motwani R, Souvignier T: Reduced-complexity soft-output Viterbi algorithm for channels characterized by dominant error events. In IEEE Global Telecommunications Conference. Miami, FL, USA; 6–10 Dec 2010:1-5.

[12] Yue DW, Nguyen HH: Unified scaling factor approach for turbo decoding algorithms. IET Commun 2010, 4(8):905-914. 10.1049/iet-com.2009.0125

[13] Huang CX, Ghrayeb A: A simple remedy for the exaggerated extrinsic information produced by SOVA algorithm. IEEE Trans. Wireless Commun 2006, 5(5):996-1002.

[14] Proakis JG: Digital Communications. McGraw-Hill, New York; 1995.

[15] D'Andrea AN, Mengali U, Reggiannini R: The modified Cramer-Rao bound and its applications to synchronization problems. IEEE Trans. Commun 1994, 42(2/3/4):1391-1399.

[16] Lee L: Real-time minimum-bit-error probability decoding of convolutional codes. IEEE Trans. Commun 1974, COM-22: 146-151.

[17] Aerts N, Moeneclaey M: SAGE-based estimation algorithms for time-varying channels in amplify-and-forward cooperative networks. In International Symposium on Information Theory and its Applications (ISITA). Honolulu, HI, USA; 28–31 Oct 2012:672-676.

Acknowledgements

This research has been funded by the Interuniversity Attraction Poles Programme initiated by the Belgian Science Policy Office and is also supported by the FWO project G.0022.11 ‘Advanced multi-antenna systems, multi-band propagation models and MIMO/cooperative receiver algorithms for high data rate V2X communications’.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Aerts, N., Avram, I. & Moeneclaey, M. Low-complexity a posteriori probability approximation in EM-based channel estimation for trellis-coded systems. J Wireless Com Network 2014, 153 (2014). https://doi.org/10.1186/1687-1499-2014-153

DOI: https://doi.org/10.1186/1687-1499-2014-153