Abstract

Background

Prior research has found that television viewing is associated with poor diet quality, though little is known about its long-term impact on diet, particularly during adolescence. This study examined the associations between television viewing behavior and dietary intake five years later.

Methods

Survey data, which included television viewing time and food frequency questionnaires, were analyzed for 564 middle school students (younger cohort) and 1366 high school students (older cohort) who had complete data available at Time 1 (1998–1999) and five years later at Time 2 (mean age at Time 2, 17.2 ± 0.6 and 20.5 ± 0.8 years, respectively). Regression models examined longitudinal associations between Time 1 television viewing behavior and Time 2 dietary intake adjusting for sociodemographic characteristics, Time 1 dietary intake, and Time 2 total daily energy intake.

Results

Respondents were categorized as limited television viewers (<2 hours/day), moderately high television viewers (2–5 hours/day), and heavy television viewers (≥5 hours/day). Among the younger cohort, Time 1 heavy television viewers reported lower fruit intake and higher sugar-sweetened beverage consumption than the other two groups. Among the older cohort, watching five or more hours of television per day at Time 1 predicted lower intakes of fruits, vegetables, whole grains, and calcium-rich foods, and higher intakes of trans fat, fried foods, fast food menu items, snack products, and sugar-sweetened beverages (products commonly advertised on television) five years later.

Conclusion

Television viewing in middle and high school predicted poorer dietary intake five years later. Adolescents are primary targets of advertising for fast food restaurants, snack foods, and sugar-sweetened beverages, which may influence their food choices. Television viewing, especially during high school, may have long-term effects on eating choices and contribute to poor eating habits in young adulthood.

Background

Obesity and related health problems are increasing in adolescents in the United States. In the mid-1960s, less than 5% of 12–19 year olds were overweight [1]. Forty years later, national survey data indicate that 17% of adolescents are obese and another 17% are overweight [2]. On average, adolescents aged 14–18 years in the United States watch over 18 hours of television per week [3] and previous research has suggested that high rates of television viewing may be linked to obesity and adiposity [4]. During the time spent watching television, not only do adolescents expend little energy [5], but they are exposed to numerous advertisements that can influence the type and amount of food desired, requested, and consumed [4, 6]. Fast foods, high-fat, high-sugar foods, and sugar-sweetened beverages are heavily advertised during prime time programs that target all age groups, including adolescents [7]. Exposure to food and beverage advertisements may foster unhealthy perceptions about food and nutrition [8], contribute to a significant proportion of total daily energy consumed while watching television [9], and promote weight gain [4].

The relationship between the sedentary behavior of television viewing and dietary behaviors has been explored in children and adolescents; however, most of this work has been cross-sectional in nature. Cross-sectional studies have found that television viewing behavior is associated with less than optimal eating behaviors, including lower fruit and vegetable intake [10, 11], higher sugar-sweetened beverage consumption [11, 12], and higher fast food intake [11]. Little research has examined the longitudinal relationship between television viewing and diet. Two studies using data from Planet Health, a 19-month obesity reduction intervention for middle school students, found that each additional hour of television beyond the baseline number of hours watched was associated with a reduction of almost one fruit and vegetable serving per week [13] and with increased servings of baked sweet goods, candy, fast food, fried potatoes, salty snacks, and sugar-sweetened beverages (foods commonly advertised on television) [14]. We are unaware of any longitudinal studies that have examined the relationship between television viewing and diet in older adolescents.

The current study explores whether time spent watching television during middle school and high school is associated with eating behaviors five years later, as youth transition from middle school into high school and from high school to the post-high school years or young adulthood. The middle school and high school years are crucial developmental periods, as adolescents may form behaviors that continue throughout their adult lives. We hypothesized that adolescents who watched more television when they were younger would report poorer eating behaviors five years later.

Methods

Study design and population

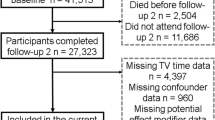

Project EAT (Eating Among Teens) is a longitudinal study of socioenvironmental, personal, and behavioral determinants of dietary intake and weight status in adolescents. In Project EAT-I, middle and high school students (n = 4746) completed in-class surveys and anthropometric measures during the 1998–1999 academic year (Time 1). Five years later (2003–2004; Time 2), Project EAT-II re-surveyed respondents by mail as middle school students progressed from early adolescence to middle adolescence and entered high school (younger cohort), and the high school students progressed from middle adolescence to late adolescence and entered young adulthood (older cohort). Of the original Project EAT-I sample, 2516 participants completed surveys at Time 2; 32.0% (n = 805) were in the younger cohort (middle school students at Time 1 and high school students at Time 2) and 68.0% (n = 1711) were in the older cohort (high school students at Time 1 and young adults at Time 2). Only respondents who reported on their television viewing and dietary behaviors at both Time 1 and Time 2 were retained for the current analysis. The final samples were ethnically and socioeconomically diverse groups of 564 middle adolescents (younger cohort) and 1366 young adults (older cohort) for a total of 1930 participants (Table 1). The mean age of the younger cohort was 12.8 ± .7 years at Time 1 and 17.2 ± .6 years at Time 2. The mean age of the older cohort was 15.9 ± .8 years at Time 1 and 20.5 ± .8 years at Time 2. Study protocols for both waves of Project EAT were approved by the University of Minnesota's Institutional Review Board Human Subjects Committee.

Measures

Time spent watching television at time 1

Items adapted from the Planet Health study were used to assess usual time spent watching television and videos [15]. Respondents were asked, "In your free time on an average weekday (Monday-Friday), how many hours do you spend watching TV & videos?" Response categories were 0, 1/2, 1, 2, 3, 4, and 5+ hours. A similar question was asked regarding weekend (Saturday or Sunday) television and video viewing habits. In Project EAT-I, two-week test-retest correlations for these survey items ranged from .66 to .80 [16]. The validation of similar items has been described in detail elsewhere [17]. Categorical responses were summed, weighted by weekday and weekend, and averaged to represent the average number of hours per day spent watching television and videos. To allow comparison with national guidelines on television viewing, respondents were categorized as limited television viewers (<2 hours/day), moderately high television viewers (2–5 hours/day), or heavy television viewers (≥5 hours/day). These cut points were based on American Academy of Pediatrics guidelines for daily television [18] and the Kaiser Family Foundation's definition of heavy television use [3]. Throughout the manuscript, this variable is referred to as television viewing, although it also includes video watching.
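The scoring described above can be sketched in Python. This is only an illustration, not the study's actual code: the 5:2 weekday-to-weekend weighting, the coding of the "5+" response as 5 hours, the assignment of exactly 5 hours/day to the heavy category, and all names below are assumptions made for the example.

```python
# Hypothetical mapping from survey response categories to hours.
# Coding "5+" as 5.0 hours is an assumption for illustration.
RESPONSE_HOURS = {
    "0": 0.0, "1/2": 0.5, "1": 1.0, "2": 2.0, "3": 3.0, "4": 4.0, "5+": 5.0,
}


def average_daily_hours(weekday: str, weekend: str) -> float:
    """Average hours/day, weighting weekdays 5/7 and weekend days 2/7 (assumed)."""
    return (5 * RESPONSE_HOURS[weekday] + 2 * RESPONSE_HOURS[weekend]) / 7


def viewing_category(hours_per_day: float) -> str:
    """Apply the cut points from the text: <2, 2 to <5, and >=5 hours/day."""
    if hours_per_day < 2:
        return "limited"
    if hours_per_day < 5:
        return "moderately high"
    return "heavy"
```

For example, a respondent answering "2" for weekdays and "4" for weekends would average about 2.6 hours/day under these assumptions and fall in the moderately high category.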

Dietary intake and fast food intake at time 2

Dietary intakes (servings/day) of fruits, vegetables (excluding french fries), whole grains (excluding chips), snack foods, fried foods, sugar-sweetened beverages, and calcium-rich foods in the past year were measured using the Youth/Adolescent Food Frequency Questionnaire (YAQ). The reliability and validity of the YAQ have been examined in prior studies that concluded the tool provides acceptable estimates of dietary intake for adolescents [19–21]. Total calories and the percentages of total energy intake from total fat, saturated fat, and trans fat were also assessed using the YAQ. Past week frequency of eating from a fast food restaurant was assessed on the Project EAT survey. Respondents were asked, "In the past week, how often did you eat something from a fast food restaurant (like McDonald's, Burger King, Hardee's, etc)?" This assessment of fast food intake has not been rigorously validated, but is comparable to the measures used by several other studies to assess fast food intake in adolescents and adults [22–24]. Response options (never, 1–2, 3–4, 5–6, 7, or more than 7 times/week) were recoded (0, 1.5, 3.5, 5.5, 7, or 9 times/week) to create a score that represented the number of times eaten from a fast food restaurant in the past week.
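The fast food recoding can be written as a simple lookup. The numeric values are those stated in the text; the dictionary keys and the function name are hypothetical labels chosen for this sketch.

```python
# Recoded values (times/week) as stated in the text; keys are hypothetical labels.
FAST_FOOD_TIMES_PER_WEEK = {
    "never": 0.0,
    "1-2": 1.5,
    "3-4": 3.5,
    "5-6": 5.5,
    "7": 7.0,
    "more than 7": 9.0,
}


def fast_food_score(response: str) -> float:
    """Return the recoded number of fast food occasions in the past week."""
    return FAST_FOOD_TIMES_PER_WEEK[response]
```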

Anthropometric variables

At Time 2, height (m) and weight (kg) were self-reported by survey respondents; self-reported values are highly correlated with objectively measured values [25]. Body mass index (BMI) was calculated as kg/m².
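As a worked illustration of the BMI formula (weight in kilograms divided by the square of height in meters), a minimal sketch follows; the function name and the input check are assumptions added for the example.

```python
def body_mass_index(weight_kg: float, height_m: float) -> float:
    """Body mass index in kg/m^2 from weight (kg) and height (m)."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return weight_kg / height_m ** 2
```

A respondent reporting 70 kg and 1.75 m would have a BMI of roughly 22.9 kg/m².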

Demographic variables

Respondents self-reported their age, gender, and race/ethnicity. Socioeconomic status (SES) was assessed by an algorithm based primarily on highest level of parental educational attainment, and also accounted for family eligibility for public assistance, eligibility for free or reduced-cost school meals, and parental employment status [26]. Data from Time 1 were used to define the demographic variables.

Statistical analysis

All analyses were run separately for the younger and older cohorts. Multivariate regression models adjusted for gender, race/ethnicity, SES, Time 1 dietary intake, and Time 2 total daily energy intake were used to examine the association of Time 1 television viewing with Time 2 dietary intake outcomes. P-values from tests for linear trend across the three television viewing categories are presented in Tables 2 and 3, with an alpha level of 5% used to determine statistical significance. Statistically significant differences in outcomes between categories of television viewing are indicated by differing superscripts, using an alpha level of 1% to partially adjust for multiple comparisons. All tests are 2-sided. Results from regression models that additionally adjusted for Time 2 BMI did not differ from those of models that did not adjust for BMI; therefore, only data unadjusted for BMI are presented. Because the samples are racially and economically diverse, post-hoc analyses were completed to examine potential racial/ethnic and socioeconomic status interactions with Time 1 television use. Because of the post-hoc nature of these tests, a more conservative alpha level of 1% was used. All analyses were conducted using SAS version 9.1 (2003, SAS Institute, Cary, NC) and were weighted using the response propensity method [27] so that the Project EAT-II sample resembled the Project EAT-I sample. With this method, the inverse of the estimated probability that an individual responded at Time 2 was used as the weight. Use of response propensity weights brings the estimates closer to those that would have been obtained had all of the students who participated at Time 1 completed surveys at Time 2. Concern regarding the missing-at-random assumption was lessened by the finding that, after weighting and adjustment for demographic characteristics, baseline television viewing and dietary intake among responders were not significantly different from those among nonresponders of Project EAT-II.
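The response propensity weighting described above amounts to inverse-probability weighting. The sketch below assumes the response probabilities have already been estimated (the study does not describe that model here); the function names and the weighted mean helper are illustrative, not the SAS procedures actually used.

```python
def propensity_weights(response_probabilities):
    """Weight each responder by the inverse of the estimated probability
    of responding at Time 2 (probabilities are assumed to be given)."""
    weights = []
    for p in response_probabilities:
        if not 0 < p <= 1:
            raise ValueError("probabilities must be in (0, 1]")
        weights.append(1.0 / p)
    return weights


def weighted_mean(values, weights):
    """Mean of values in which each responder counts in proportion to its weight."""
    total_weight = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total_weight
```

Under this scheme, a responder with an estimated 25% chance of responding counts four times, standing in for similar Time 1 participants who did not respond at Time 2.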

Results

Among the younger cohort (middle school students at Time 1), 31.2% reported limited television use (<2 hours/day), 47.9% moderately high use (2–5 hours/day), and 20.9% heavy use (≥5 hours/day) at Time 1. Time 1 heavy television viewers reported lower fruit intake at Time 2 than Time 1 limited television viewers and higher sugar-sweetened beverage consumption than Time 1 moderately high television viewers. No other differences in dietary intake by television viewing categories were found among the younger cohort.

Among the older cohort (high school students at Time 1), 35.6% were limited, 48.6% were moderately high, and 15.8% were heavy television viewers at Time 1. Television viewing while in high school was associated with the consumption of several foods five years later (Table 3). Time 1 heavy television viewers reported lower intakes of fruits and vegetables, and higher consumption of snack foods, as compared to Time 1 limited and moderately high television viewers. Time 1 heavy television viewers reported fewer servings of calcium-rich foods, a greater percentage of total calories from trans fat, and more servings of sugar-sweetened beverages at Time 2, as compared to Time 1 limited television viewers. Additionally, participants who watched 2 or more hours of television per day while in high school reported consuming fewer servings of whole grains and more servings of fried foods, and ate more times per week at a fast food restaurant, than participants who watched <2 hours of television per day at Time 1.

Post-hoc analyses revealed that race/ethnicity, but not socioeconomic status, interacted with the relationship between Time 1 television use and Time 2 dietary intake for participants in the older cohort. Black and Hispanic participants who watched more than five hours of television when they were in high school reported significantly greater total energy intake than white participants (2683 kcal and 2748 kcal, respectively, compared to 1908 kcal; p = .01). Black participants who watched fewer than 2 hours of television while in high school reported a higher calcium-rich food intake than white participants (2.9 servings compared to 2.2 servings; p = .006). Among participants in the younger cohort, neither race/ethnicity nor socioeconomic status interacted with the relationship between Time 1 television use and any of the dietary behavior outcomes.

Discussion

The current study examined the longitudinal relationship between television viewing of adolescents in middle school and high school and their dietary intake five years later. Among participants in the older cohort, those who watched five or more hours of television per day when they were in high school reported less healthful eating habits (lower intakes of fruits, vegetables, whole grains, and calcium-rich foods, and higher intakes of snack foods, fried foods, fast food, sugar-sweetened beverages, and trans fat). In contrast, television viewing in middle school predicted only lower fruit and greater sugar-sweetened beverage consumption five years later. Thus, television viewing during adolescence longitudinally predicted poorer dietary intake patterns five years later, with stronger and more consistent patterns seen during the transition from high school to young adulthood than during the transition from middle school to high school. Both time periods are critical developmental periods for adolescents, in which they are forming lifelong behaviors. However, behaviors exhibited in high school may more strongly influence behaviors reported in subsequent years than behaviors exhibited during middle school. Additionally, there may be environmental influences for which the current study did not have data, such as food and beverage television advertising, which may influence the eating behaviors of the cohorts differently.

Several cross-sectional studies have found an inverse association between television viewing and diet quality among students in preschool [28], elementary school [11, 29, 30], middle and high school [12, 31, 32], and college [33, 34]. However, only two studies, both using data from Planet Health, which engaged middle school students in an obesity prevention intervention, have reported longitudinal relationships between greater amounts of television viewing and lower fruit and vegetable intake [13] and higher intake of sweet and salty snack foods, fast food, and sugar-sweetened beverages [14], results similar to those found in the current study. Nevertheless, there are substantive differences between the Planet Health studies and the current study. Planet Health was an intervention that targeted reducing television viewing; the current study explored the influence of previous television viewing behavior over a longer period of time (5 years compared to 19 months); and the current study examined the transition from high school to the post-high school years, which, to the authors' knowledge, has not been examined by any other research.

Among both cohorts, categories of food and beverages (snack foods, sugar-sweetened beverages, and fast foods) commonly advertised on television [7] were associated with previous television viewing behavior in the current study. Healthy foods, such as fruits, vegetables, whole grains, milk, and low-fat items, are rarely advertised on television [6]. Repeated exposure to advertisements for high calorie, low nutrient foods may increase desire for these foods and, subsequently, purchases and consumption of advertised products. Adolescents who watch too much television become adults who watch too much television [35], and thus continue to be exposed to advertisements for unhealthy foods. Although young people may be aware that many foods advertised on television are not healthy, they may choose to ignore the consequences, or may not fully realize them, because the actors they see advertising and eating the foods in the commercials are usually not overweight [36].

Post-hoc analyses in the older cohort showed there was an interaction with race/ethnicity in the observed relationship between television viewing and dietary intake. The stronger influence found in minority, especially black, participants may reflect the types of advertisements or program content most likely to reach these racial/ethnic groups. More food advertisements that focus on fast food, candy, and soda are shown during programs targeted to black Americans than programs targeted to general audiences [37].

There is also the issue of eating while viewing television. Studies have found that for some adolescents, a significant proportion of their total daily energy intake is consumed while viewing television [30, 38]. Viewing television while eating may cause a distraction resulting in a lack of awareness of actual food consumption or overlooking satiety cues [33, 39], which may lead to overconsumption. If these habits are formed at a younger age, they may continue as adolescents become older.

To our knowledge, this is the first study to examine the long-term longitudinal association between television viewing and dietary intake during the transition through the adolescent years into young adulthood. The strengths of this study are a prospective design; use of large, socioeconomically and racially diverse samples; and the examination of multiple dietary behaviors in two age cohorts. A limitation of the current study is that specific mechanisms through which television viewing may be influencing dietary behavior cannot be examined with these data. For example, the type and content of commercials watched by the participants are unknown. Future research is needed to explore these issues in more detail. Although the longitudinal nature of the current study allows for the assessment of temporality of the association between television viewing and dietary behavior, it does not allow for an assessment of causality. Television use may be overestimated in this study because the measure used to assess television use also included video use. It is unknown whether television viewing and video viewing influence dietary behaviors differently. However, among adolescents the proportion of total screen time spent watching videos is small compared to the proportion of time spent watching television [3].

Conclusion

Findings from this study indicate that television viewing during adolescence, especially viewing during the high school years, predicts future eating habits. The research presented in this article begins to explore the television-diet paradigm of adolescents as they transition into young adulthood. However, the current analyses cannot determine whether the relationship is causal or the extent to which specific mechanisms are driving the association. Previous work in this area has proposed that the mechanisms through which television viewing influences obesity are displacement of physical activity, promotion of increased energy intake while watching television, promotion of increased energy intake through advertising, and reduction in resting metabolic rate [40]. However, further examination has indicated that the association between television viewing and physical activity is weak [41]. Increasingly, researchers have begun to propose that television advertising and foods eaten in front of the television may be the primary underlying mechanisms driving this relationship [28, 30, 42].

Adolescents are one of the primary targets of food and beverage advertising and television continues to be the main form of media for advertising to the general public [4]. There are potential negative impacts of advertising and marketing campaigns on dietary quality and food purchasing behavior during adolescence. Adolescence is a critical developmental period when individuals begin to make independent decisions about how to feed themselves. In particular, during the transition from high school to young adulthood youth begin to assume much greater responsibility for preparing or purchasing their own food, and potentially establish long-term adult eating behaviors. While much of the scientific discussion in this area, to date, has focused on short-term influences of television on dietary behavior, our work suggests that these associations are real and may, in fact, be sustained over the long-term. In addition to devising interventions to reduce television viewing time in adolescents, health professionals may need to develop interventions focusing on the promotion of healthy food choices, in general and while watching television, and overcoming media influences.

References

Ogden CL, Flegal KM, Carroll MD, Johnson CL: Prevalence and trends in overweight among US children and adolescents, 1999–2000. JAMA. 2002, 288 (14): 1728-1732. 10.1001/jama.288.14.1728.

Ogden CL, Carroll MD, Curtin LR, McDowell MA, Tabak CJ, Flegal KM: Prevalence of Overweight and Obesity in the United States, 1999–2004. JAMA. 2006, 295 (13): 1549-1555. 10.1001/jama.295.13.1549.

Roberts D, Foehr UG, Rideout V: Generation M: media in the lives of 8–18 year-olds. 2005, Menlo Park: Kaiser Family Foundation, 1-145.

Institute of Medicine: Food marketing to children and youth: threat or opportunity?. 2006, Washington, DC: The National Academies Press

Klesges RC, Shelton ML, Klesges LM: Effects of Television on Metabolic Rate: Potential Implications For Childhood Obesity. Pediatrics. 1993, 91: 281-286.

Gantz W, Schwartz N, Angelini JR, Rideout V: Food for thought: Television food advertising to children in the United States. 2007, Menlo Park: Kaiser Family Foundation, 1-55.

Institute of Medicine: Preventing Childhood Obesity: Health in the Balance. 2005, Washington, DC: The National Academies Press

Byrd-Bredbenner C, Grasso D: Health, medicine, and food messages in television commercials during 1992 and 1998. Journal of School Health. 2000, 70 (2): 61.

Matheson DM, Wang Y, Klesges LM, Beech BM, Kraemer HC, Robinson TN: African-American girls' dietary intake while watching television. Obesity research. 2004, 12 (Suppl): 32S-37S. 10.1038/oby.2004.266.

Lowry R, Wechsler H, Galuska DA, Fulton JE, Kann L: Television viewing and its associations with overweight, sedentary lifestyle, and insufficient consumption of fruits and vegetables among US high school students: differences by race, ethnicity, and gender. The Journal of school health. 2002, 72 (10): 413-421.

Utter J, Scragg R, Schaaf D: Associations between television viewing and consumption of commonly advertised foods among New Zealand children and young adolescents. Public health nutrition. 2006, 9 (5): 606-612. 10.1079/PHN2005899.

Kremers SP, van der Horst K, Brug J: Adolescent screen-viewing behaviour is associated with consumption of sugar-sweetened beverages: The role of habit strength and perceived parental norms. Appetite. 2007, 48 (3): 345-350. 10.1016/j.appet.2006.10.002.

Boynton-Jarrett R, Thomas TN, Peterson KE, Wiecha J, Sobol AM, Gortmaker SL: Impact of television viewing patterns on fruit and vegetable consumption among adolescents. Pediatrics. 2003, 112 (6 Pt 1): 1321-1326. 10.1542/peds.112.6.1321.

Wiecha JL, Peterson KE, Ludwig DS, Kim J, Sobol A, Gortmaker SL: When children eat what they watch: impact of television viewing on dietary intake in youth. Archives of pediatrics & adolescent medicine. 2006, 160 (4): 436-442. 10.1001/archpedi.160.4.436.

Gortmaker SL, Peterson K, Wiecha J, Sobol AM, Dixit S, Fox MK, Laird N: Reducing obesity via a school-based interdisciplinary intervention among youth: Planet Health. Arch Pediatr Adolesc Med. 1999, 153 (4): 409-418.

Utter J, Neumark-Sztainer D, Jeffery R, Story M: Couch potatoes or french fries: are sedentary behaviors associated with body mass index, physical activity, and dietary behaviors among adolescents?. J Am Diet Assoc. 2003, 103 (10): 1298-1305. 10.1016/S0002-8223(03)01079-4.

Schmitz KH, Harnack L, Fulton JE, Jacobs DR, Gao S, Lytle LA, Van Coevering P: Reliability and validity of a brief questionnaire to assess television viewing and computer use by middle school children. J Sch Health. 2004, 74 (9): 370-377.

American Academy of Pediatrics: Children, adolescents, and television. Pediatrics. 2001, 107 (2): 423-426. 10.1542/peds.107.2.423.

Perks SM, Roemmich JN, Sandow-Pajewski M, Clark PA, Thomas E, Weltman A, Patrie J, Rogol AD: Alterations in growth and body composition during puberty. IV. Energy intake estimated by the youth-adolescent food-frequency questionnaire: validation by the doubly labeled water method. The American journal of clinical nutrition. 2000, 72 (6): 1455-1460.

Rockett HR, Breitenbach M, Frazier AL, Witschi J, Wolf AM, Field AE, Colditz GA: Validation of a youth/adolescent food frequency questionnaire. Preventive medicine. 1997, 26 (6): 808-816. 10.1006/pmed.1997.0200.

Rockett HR, Wolf AM, Colditz GA: Development and reproducibility of a food frequency questionnaire to assess diets of older children and adolescents. J Am Diet Assoc. 1995, 95 (3): 336-340. 10.1016/S0002-8223(95)00086-0.

French SA, Harnack L, Jeffery RW: Fast food restaurant use among women in the Pound of Prevention study: dietary, behavioral and demographic correlates. Int J Obes Relat Metab Disord. 2000, 24 (10): 1353-1359. 10.1038/sj.ijo.0801429.

Niemeier HM, Raynor HA, Lloyd-Richardson EE, Rogers ML, Wing RR: Fast food consumption and breakfast skipping: predictors of weight gain from adolescence to adulthood in a nationally representative sample. J Adolesc Health. 2006, 39 (6): 842-849. 10.1016/j.jadohealth.2006.07.001.

Schmidt M, Affenito SG, Striegel-Moore R, Khoury PR, Barton B, Crawford P, Kronsberg S, Schreiber G, Obarzanek E, Daniels S: Fast-food intake and diet quality in black and white girls: the National Heart, Lung, and Blood Institute Growth and Health Study. Archives of pediatrics & adolescent medicine. 2005, 159 (7): 626-631. 10.1001/archpedi.159.7.626.

Himes JH, Hannan P, Wall M, Neumark-Sztainer D: Factors associated with errors in self-reports of stature, weight, and body mass index in Minnesota adolescents. Ann Epidemiol. 2005, 15 (4): 272-278. 10.1016/j.annepidem.2004.08.010.

Story M, Neumark-Sztainer D, French S: Individual and environmental influences on adolescent eating behaviors. J Am Diet Assoc. 2002, 102 (3 Suppl): S40-51. 10.1016/S0002-8223(02)90421-9.

Little R: Survey nonresponse adjustments for estimates of means. International Statistical Review. 1986, 54 (2): 137-139.

Francis LA, Birch LL: Does Eating during Television Viewing Affect Preschool Children's Intake?. Journal of the American Dietetic Association. 2006, 106 (4): 598-600. 10.1016/j.jada.2006.01.008.

Coon KA, Goldberg J, Rogers BL, Tucker KL: Relationships between use of television during meals and children's food consumption patterns. Pediatrics. 2001, 107 (1): E7. 10.1542/peds.107.1.e7.

Matheson DM, Killen JD, Wang Y, Varady A, Robinson TN: Children's food consumption during television viewing. The American journal of clinical nutrition. 2004, 79 (6): 1088-1094.

Scully M, Dixon H, White V, Beckmann K: Dietary, physical activity and sedentary behaviour among Australian secondary students in 2005. 2007, 22: 236-245.

Snoek HM, van Strien T, Janssens JM, Engels RC: The effect of television viewing on adolescents' snacking: individual differences explained by external, restrained and emotional eating. J Adolesc Health. 2006, 39 (3): 448-451. 10.1016/j.jadohealth.2005.12.020.

Blass EM, Anderson DR, Kirkorian HL, Pempek TA, Price I, Koleini MF: On the road to obesity: Television viewing increases intake of high-density foods. Physiology & behavior. 2006, 88 (4–5): 597-604. 10.1016/j.physbeh.2006.05.035.

Stroebele N, de Castro JM: Television viewing is associated with an increase in meal frequency in humans. Appetite. 2004, 42 (1): 111-113. 10.1016/j.appet.2003.09.001.

Foster JA, Gore SA, West DS: Altering TV viewing habits: an unexplored strategy for adult obesity intervention?. American journal of health behavior. 2006, 30 (1): 3-14.

Byrd-Bredbenner C, Finckenor M, Grasso D: Health related content in prime-time television programming. 2003, Taylor & Francis, 8: 329-341.

Henderson VR, Kelly B: Food advertising in the age of obesity: content analysis of food advertising on general market and african american television. J Nutr Educ Behav. 2005, 37 (4): 191-196. 10.1016/S1499-4046(06)60245-5.

Matheson DM, Wang Y, Klesges LM, Beech BM, Kraemer HC, Robinson TN: African-American girls' dietary intake while watching television. Obesity research. 2004, 12 (Suppl): 32S-37S. 10.1038/oby.2004.266.

Bellisle F, Dalix AM, Slama G: Non food-related environmental stimuli induce increased meal intake in healthy women: comparison of television viewing versus listening to a recorded story in laboratory settings. Appetite. 2004, 43 (2): 175-180. 10.1016/j.appet.2004.04.004.

Robinson TN: Television viewing and childhood obesity. Pediatric clinics of North America. 2001, 48 (4): 1017-1025. 10.1016/S0031-3955(05)70354-0.

Biddle SJ, Gorely T, Stensel DJ: Health-enhancing physical activity and sedentary behaviour in children and adolescents. Journal of sports sciences. 2004, 22 (8): 679-701. 10.1080/02640410410001712412.

Matheson DM, Wang Y, Klesges LM, Beech BM, Kraemer HC, Robinson TN: African-American girls' dietary intake while watching television. Obesity research. 2004, 12 (Suppl): 32S-37S. 10.1038/oby.2004.266.

Acknowledgements

DJBA would like to thank Peter J. Hannan, M. Stat., for his assistance with data analysis. This study was supported by grant R40-MC00319 (PI: Neumark-Sztainer) from the Maternal and Child Health Bureau (Title V, Social Security Act), Health Resources and Services Administration, Department of Health and Human Services, and by the Adolescent Health Protection Research Training grant number T01-DP000112 (PI: Bearinger) from the Centers for Disease Control and Prevention, Department of Health and Human Services.

Authors' contributions

DJBA conceived the current study, completed the data analysis, and drafted the manuscript. NIL assisted in the acquisition of the data and helped to draft and revise the manuscript. MCN helped to draft and revise the manuscript. DNS designed the larger studies (Project EAT I & II), acquired the data, and helped to revise the manuscript. MS assisted in the design of the larger studies (Project EAT I & II), assisted in the acquisition of the data, and helped to revise the manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Barr-Anderson, D.J., Larson, N.I., Nelson, M.C. et al. Does television viewing predict dietary intake five years later in high school students and young adults?. Int J Behav Nutr Phys Act 6, 7 (2009). https://doi.org/10.1186/1479-5868-6-7

DOI: https://doi.org/10.1186/1479-5868-6-7