Abstract

Background

The validity of research synthesis is threatened if published studies comprise a biased selection of all studies that have been conducted. We conducted a meta-analysis to ascertain the strength and consistency of the association between study results and formal publication.

Methods

The Cochrane Methodology Register Database, MEDLINE and other electronic bibliographic databases were searched (to May 2009) to identify empirical studies that tracked a cohort of studies and reported the odds of formal publication by study results. Reference lists of retrieved articles were also examined for relevant studies. Odds ratios were used to measure the association between formal publication and significant or positive results. Included studies were separated into subgroups according to starting time of follow-up, and results from individual cohort studies within the subgroups were quantitatively pooled.

Results

We identified 12 cohort studies that followed up research from inception, four that included trials submitted to a regulatory authority, 28 that assessed the fate of studies presented as conference abstracts, and four cohort studies that followed manuscripts submitted to journals. The pooled odds ratio of publication of studies with positive results, compared to those without positive results (publication bias) was 2.78 (95% CI: 2.10 to 3.69) in cohorts that followed from inception, 5.00 (95% CI: 2.01 to 12.45) in trials submitted to regulatory authority, 1.70 (95% CI: 1.44 to 2.02) in abstract cohorts, and 1.06 (95% CI: 0.80 to 1.39) in cohorts of manuscripts.

Conclusion

Dissemination of research findings is likely to be a biased process. Publication bias appears to occur early, mainly before the presentation of findings at conferences or submission of manuscripts to journals.


Background

Synthesis of published research is increasingly important in providing relevant and valid research evidence to inform clinical and health policy decision making. However, the validity of research synthesis based on published literature is threatened if published studies comprise a biased selection of all studies that have been conducted [1].

The observation that many studies are never published was termed "the file-drawer problem" by Rosenthal in 1979 [2]. The importance of this problem depends on whether or not the published studies are representative of all studies that have been conducted. If the published studies are a random sample of all studies that have been conducted, there will be no bias and the average estimate based on the published studies will be similar to that based on all studies. If the published studies comprise a biased sample of all studies that have been conducted, the results of a literature review will be misleading [3]. For example, the efficacy of a treatment will be exaggerated if studies with positive results are more likely to be published than those with negative results.

Publication bias is defined as "the tendency on the parts of investigators, reviewers, and editors to submit or accept manuscripts for publication based on the direction or strength of the study findings" [4]. The existence of publication bias was first suspected by Sterling in 1959, after observing that 97% of studies published in four major psychology journals provided statistically significant results [5]. In 1995, the same author concluded that the practices leading to publication bias had not changed over a period of 30 years [6].

Evidence of publication bias can be classified as direct or indirect [7]. Direct evidence includes the acknowledgement of bias by those involved in the publication process (investigators, referees or editors), comparison of the results of published and unpublished studies, and the follow-up of cohorts of registered studies [8]. Indirect evidence includes the observation of a disproportionately high percentage of positive findings in the published literature, and of larger effect sizes in small studies than in large studies. This evidence is indirect because factors other than publication bias may also lead to the observed disparities.

In a Health Technology Assessment (HTA) report published in 2000, we presented a comprehensive review of studies that provided empirical evidence of publication and related biases [8]. The review found that studies with significant or favourable results were more likely to be published, or were likely to be published earlier, than those with non-significant or unimportant results. There was limited and indirect evidence indicating the possibility of full publication bias, outcome reporting bias, duplicate publication bias, and language bias. Considering that the spectrum of the accessibility of research results (dissemination profile) ranges from completely inaccessible to easily accessible, it was suggested that a single term 'dissemination bias' could be used to denote all types of publication and related biases [8].

Since then, many new empirical studies on publication and related biases have been published. For example, Egger et al provided further empirical evidence on publication bias, language bias, grey literature bias, and MEDLINE index bias [9], and Moher et al evaluated language bias in meta-analyses of randomised controlled trials [10]. Recently, more convincing evidence on outcome reporting bias has been published [11–13]. In addition, a large number of studies of publication bias in conference abstracts have been published [14]. As this new empirical evidence may strengthen or contradict the empirical evidence included in the previous review, we have updated our review of dissemination bias, by synthesizing findings from newly and previously identified studies [15]. This paper focuses on findings from a review of cohort studies that provided direct evidence on dissemination bias by investigating the association between publication and study results.

Methods

Criteria for inclusion

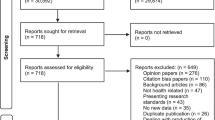

This review included any studies that tracked a cohort of studies and reported the rate of publication by study results. The relevant empirical studies may have tracked a cohort of protocols approved by research ethics committees, registered by research funding bodies, submitted to regulatory authorities, presented at conferences, or submitted to journals. Primary studies included in such cohorts could be clinical trials, observational studies or basic research. We separated the cohorts into four subgroups according to stages in a simplified pathway of research publication from inception to journal publication (Figure 1). A study that followed up a cohort of research from the beginning (even if retrospectively) was termed an inception cohort study. A regulatory cohort study refers to a study that examined formal publication of clinical trials submitted to regulatory authorities (such as the US Food and Drug Administration or similar). An abstract cohort study investigated the subsequent full publication of abstracts presented at conferences. A manuscript cohort study followed the publication fate of manuscripts submitted to journals. Studies that did not provide sufficient data to compare the rates of publication between studies with different results were excluded.

Literature Search strategy

The identification of cohort studies for this review was conducted as part of a comprehensive search for empirical and methodological studies on research dissemination bias. We searched MEDLINE, the Cochrane Methodology Register Database (CMRD), EMBASE, AMED and CINAHL to August 2008 (see Additional file 1). PubMed, PsycINFO and OpenSIGLE were searched in May 2009 to locate more recently published studies. References (titles with or without abstracts) identified by the MEDLINE and CMRD searches were examined independently by two reviewers, while those from other databases were assessed by one reviewer. The reference lists of retrieved studies and reviews were examined to identify additional studies.

Data Extraction and Synthesis

Data extraction included type of cohort, clinical speciality, design of studies, duration of follow-up, definition of study results, and the rate of publication by study results. One reviewer extracted data directly into tables, which were checked by a second reviewer.

The outcome, study publication, was usually defined as full publication in a journal, but study results were often categorised differently between included cohorts. We used the classifications 'statistically significant (p ≤ 0.05)' versus 'non-significant (p > 0.05)' or 'positive' versus 'non-positive'. Positive results included results that were considered to be 'significant', 'positive', 'favourable', 'important', 'striking', 'showed effect', or 'confirmatory', while non-positive results were labelled as being 'negative', 'non-significant', 'less or not important', 'invalidating', 'inconclusive', 'questionable', 'null', or 'neutral'.
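As an illustration of how the two classification schemes above could be applied consistently during data extraction, the sketch below maps reported result labels onto the positive/non-positive categories and dichotomises p-values at the 0.05 cut-off stated here. The set and function names are hypothetical; matching is exact after trimming and lower-casing, so a label not listed in the Methods raises an error instead of being guessed.

```python
# Result labels taken from the Methods; the containers themselves are hypothetical.
POSITIVE_LABELS = {
    "significant", "positive", "favourable", "important",
    "striking", "showed effect", "confirmatory",
}
NON_POSITIVE_LABELS = {
    "negative", "non-significant", "less or not important", "invalidating",
    "inconclusive", "questionable", "null", "neutral",
}


def classify_result(label: str) -> str:
    """Map a cohort's reported result label to 'positive' or 'non-positive'."""
    key = label.strip().lower()
    if key in POSITIVE_LABELS:
        return "positive"
    if key in NON_POSITIVE_LABELS:
        return "non-positive"
    # Unlisted wording needs a reviewer's judgement, so refuse to guess.
    raise ValueError(f"unrecognised result label: {label!r}")


def classify_p_value(p: float, alpha: float = 0.05) -> str:
    """Dichotomise a p-value: significant if p <= alpha, otherwise non-significant."""
    return "significant" if p <= alpha else "non-significant"
```

Keeping the label lists as explicit sets makes the coding decisions auditable, which matters because the looser 'positive' definition involves some subjectivity.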

The validity of the included cohort studies was not formally assessed in this review, due to a lack of reliable tools for assessment of methodological studies. However, we tried to identify and summarise the main methodological limitations in the included studies.

Data from the included studies were first summarised in narrative form. The odds ratio was used as the outcome statistic to measure the association between study results and subsequent publication. In existing reviews of cohort studies of publication bias, results from different studies have been quantitatively combined [14, 16, 17], although significant heterogeneity across individual studies has led to some controversy [18]. We felt it helpful to provide pooled estimates after separating the included cohort studies into appropriate subgroups, in order to improve statistical power and generalisability. Results from individual studies were quantitatively pooled using random-effects meta-analysis. Heterogeneity within each subgroup was measured using the I² statistic, considering heterogeneity to be moderate or high when I² is greater than 50% [19]. Rucker et al pointed out that, given the same between-study variance, the value of I² will increase rapidly as the sample size of individual studies increases [20]. Therefore, clinical and methodological relevance were the most important considerations when deciding whether the results from individual studies could be quantitatively combined in meta-analysis.
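A minimal sketch of the pooling step follows, assuming each cohort study supplies a 2×2 table of published and unpublished studies by result category. The review does not say which random-effects estimator was used; the DerSimonian-Laird moment estimator is assumed here as a common choice, and adding 0.5 to every cell when a table contains a zero is likewise an assumption. Function names and the example counts are hypothetical.

```python
import math


def log_odds_ratio(pub_pos, unpub_pos, pub_neg, unpub_neg):
    """Log odds of publication for positive vs non-positive results, with its variance.

    Adds 0.5 to every cell if any cell is zero (an assumed continuity correction).
    """
    cells = [pub_pos, unpub_pos, pub_neg, unpub_neg]
    if 0 in cells:
        cells = [c + 0.5 for c in cells]
    a, b, c, d = cells
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d  # Woolf variance of the log OR
    return log_or, variance


def random_effects_pool(log_ors, variances):
    """DerSimonian-Laird pooling; returns pooled OR, 95% CI, tau^2 and I^2 (%)."""
    k = len(log_ors)
    w = [1 / v for v in variances]  # inverse-variance (fixed-effect) weights
    fixed = sum(wi * y for wi, y in zip(w, log_ors)) / sum(w)
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, log_ors))  # Cochran's Q
    df = k - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if df > 0 else 0.0  # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * y for wi, y in zip(w_star, log_ors)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return {
        "or": math.exp(pooled),
        "ci": (math.exp(pooled - 1.96 * se), math.exp(pooled + 1.96 * se)),
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical counts: (published_pos, unpublished_pos, published_neg, unpublished_neg)
tables = [(40, 10, 25, 25), (30, 20, 20, 30), (15, 5, 12, 13)]
estimates = [log_odds_ratio(*t) for t in tables]
summary = random_effects_pool([e[0] for e in estimates], [e[1] for e in estimates])
print(summary)
```

Under the 50% rule of thumb cited above, an `i2` above 50 would flag moderate or high heterogeneity; in line with Rucker et al's caution, it is worth reading `tau2` alongside it rather than relying on I² alone.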

Funnel plots were used to assess the association between the point estimates of the log odds ratio (a measure of the extent of publication bias) and their precision (the inverse of the standard error). The visual assessment of these plots was supported by a formal statistical test using the regression method suggested by Peters et al [21].
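The sketch below computes funnel-plot coordinates (log odds ratio against its precision) and a regression in the spirit of Peters et al: the log odds ratio regressed on the inverse of the total sample size, with weights S·F/n. Treating "published" as the event, so that S and F are the numbers of published and unpublished studies in a cohort, is an assumption made for this illustration. The significance test on the slope is omitted because it needs a t distribution, zero cells are not handled, and all function names are hypothetical.

```python
import math


def funnel_points(tables):
    """(log OR, precision) pairs for a funnel plot, where precision = 1 / SE(log OR).

    Each table is (published_pos, unpublished_pos, published_neg, unpublished_neg)
    and is assumed to contain no zero cells.
    """
    points = []
    for a, b, c, d in tables:
        log_or = math.log((a * d) / (b * c))
        se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
        points.append((log_or, 1 / se))
    return points


def weighted_linear_regression(x, y, w):
    """Weighted least-squares fit y = intercept + slope * x; returns (intercept, slope)."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def peters_regression(tables):
    """Regress log OR on 1/n with weights S*F/n (S = published, F = unpublished).

    A slope far from zero suggests funnel asymmetry; no p-value is computed here.
    """
    x, y, w = [], [], []
    for a, b, c, d in tables:
        n = a + b + c + d
        s, f = a + c, b + d
        x.append(1 / n)
        y.append(math.log((a * d) / (b * c)))
        w.append(s * f / n)
    return weighted_linear_regression(x, y, w)
```

Regressing on 1/n rather than on the standard error avoids the built-in correlation between a log odds ratio and its own estimated standard error, which is the motivation Peters et al give for their method.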

Results

Forty-eight cohort studies provided sufficient data to assess the rate of publication by study results. These consisted of 12 inception cohort studies [22–33], four regulatory cohort studies [34–37], 28 abstract cohort studies [38–65], and four manuscript cohort studies [66–69]. Eight inception cohort studies were excluded because they did not provide data on the results of unpublished studies or did not examine the association between publication and study results [12, 13, 70–75].

Main characteristics of included cohort studies

The main characteristics of the included cohort studies are summarised by subgroup in four additional files (Additional files 2, 3, 4 and 5). One inception cohort study [32] and four abstract cohort studies [44, 49, 63, 65] were available only in abstract form. Of the 12 inception cohort studies, which assessed the fate of research from its inception, eight did not restrict the field of research, and four were limited to AIDS/HIV [29], health effects of passive smoking [30], complementary medicine [33], or eye diseases [32]. Of the four cohort studies in the regulatory subgroup, two did not specify clinical fields [34, 36] and two included studies of anti-depressants [35, 37]. All of the cohort studies of conference abstracts were restricted to a specific clinical field, such as emergency medicine, anaesthesiology, perinatal studies, cystic fibrosis, or oncology. Of the four cohorts of journal manuscripts, two examined manuscripts submitted to general medical journals (JAMA, BMJ, Lancet, and Annals of Internal Medicine) [66, 69] while two included manuscripts submitted to the American version of the Journal of Bone and Joint Surgery [67, 68].

Four of the 12 cohort studies in the inception group, all four studies in the regulatory group, and nine of the 28 cohort studies in the abstract group included only clinical trials. The remaining cohort studies included research of different designs, although separate data for clinical trials was available in some of these studies. Follow-up time ranged from 1 to 12 years in the inception cohort studies, and from 2 to 5 years in the regulatory or abstract cohort studies.

Authors of inception cohort studies used postal questionnaires, telephone interviews of investigators, or both to obtain information on the results of unpublished studies. The response rate to the survey of investigators ranged from 69% to 92% (see Additional file 2). Information on study results was already available in all four regulatory cohort studies and in all abstract cohort studies. Bibliographic databases were usually searched to determine publication status.

Study results were categorised as statistically significant (p < 0.05) or non-significant in 19 studies; the remaining studies used a wide range of other categorisations, for example positive versus negative, confirmatory versus inconclusive, or striking versus unimportant.

Pooled estimates of publication bias

Table 1 summarises the main results of the meta-analyses. The formal publication of statistically significant results (p < 0.05) could be compared with that of non-significant results in four inception cohort studies, one regulatory cohort study, 12 abstract cohort studies and two manuscript cohort studies (Figure 2). The rate of publication of studies in the four inception cohorts ranged from 60% to 93% for significant results and from 20% to 86% for non-significant results. The rate of full publication of meeting abstracts ranged from 37% to 81% for statistically significant results, and from 22% to 70% for non-significant results. Heterogeneity across the four cohort studies from the inception subgroup was statistically significant (I² = 61%, p = 0.05). There was no statistically significant heterogeneity within the abstract or manuscript subgroups. The pooled odds ratio for publication bias by statistical significance of results was 2.40 (95% CI: 1.18 to 4.88) for the four inception cohort studies, 1.62 (95% CI: 1.34 to 1.96) for the 12 abstract cohort studies, and 1.15 (95% CI: 0.64 to 2.10) for the two manuscript cohort studies (Figure 2). The only regulatory cohort study included in Figure 2 reported an odds ratio of 11.06 (95% CI: 0.56 to 219.7) for publication of statistically significant vs. non-significant results.
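Odds ratios reported with 95% confidence intervals can be moved back to the log scale, which is how such summaries are usually pooled or compared. A minimal sketch, assuming each interval was computed symmetrically on the log scale with z = 1.96: the implied point estimate is the geometric mean of the two limits, and the implied standard error is the width of the interval on the log scale divided by 2 × 1.96. As a check, the pooled inception estimate above, 2.40 (1.18 to 4.88), has a geometric-mean midpoint of about 2.40. The function names are hypothetical.

```python
import math


def ci_midpoint(lower: float, upper: float) -> float:
    """Odds ratio implied by a log-symmetric CI: the geometric mean of its limits."""
    return math.sqrt(lower * upper)


def se_from_ci(lower: float, upper: float, z: float = 1.96) -> float:
    """Standard error of log(OR) implied by a log-symmetric confidence interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# Pooled inception estimate reported above: 2.40 (95% CI 1.18 to 4.88)
print(ci_midpoint(1.18, 4.88), se_from_ci(1.18, 4.88))
```

A midpoint that disagrees noticeably with the reported odds ratio is a quick signal that an interval was mistyped or was not computed on the log scale.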

To include data from other cohort studies, a positive result was loosely defined as important, confirmatory or significant, while a 'non-positive' result included negative, non-important, inconclusive or non-significant results. This more inclusive definition of positive results allowed the inclusion of all 12 inception cohort studies, four regulatory cohort studies, 27 abstract cohort studies, and four manuscript cohort studies (Figure 3). There was statistically significant heterogeneity across cohort studies within the regulatory (p = 0.04) and abstract subgroups (p < 0.001). Pooled odds ratios consistently indicated that studies with positive results were more likely to be published than studies with non-positive results, except in the cohorts of manuscripts already submitted for publication (Figure 3).

Types of studies included in the cohort studies varied from basic experimental, observational and qualitative research to clinical trials. When the analyses were restricted to clinical trials, the results were similar to those based on all studies, and there was no significant heterogeneity in the extent of publication bias among the included inception cohorts and abstract cohorts of clinical trials (Figure 4 and Figure 5).

Funnel plots constructed separately for the four subgroups of cohort studies were not statistically significantly asymmetric (Figure 6).

Factors associated with publication bias

Some cohort studies have examined the impacts of other factors on biased publication of research. The factors investigated include study design, type of study, sample size, funding source, and investigators' characteristics. However, only a few of the included cohort studies reported findings regarding factors associated with publication bias and findings from different studies were often inconsistent.

Easterbrook et al conducted subgroup analyses to examine susceptibility to publication bias amongst various subgroups of studies. They found that observational, laboratory-based experimental studies and non-randomised trials had a greater risk of publication bias than randomised clinical trials. Factors associated with less bias included a concurrent comparison group, a high investigator rating of study importance and a sample size greater than 20 [28].

Dickersin and colleagues investigated the association between the risk of publication bias and type of study (observational, clinical trial), multi- or single-centre design, sample size, funding source and principal investigators' characteristics (such as sex, degree, rank). They found no statistically significant association between any factors examined and publication bias [26]. In a different inception cohort study, Dickersin and Min reported that the odds ratio for publication bias was significantly different between multi-centre and single-centre studies, and between studies with a female principal investigator and studies with a male principal investigator [27]. They did not find an association between publication bias and the use of randomisation or blinding, having a comparison group or a larger sample size [27].

Stern and Simes found that the risk of publication bias tended to be greater for clinical trials (odds ratio 3.13, 95% CI: 1.76 to 5.58) than other studies (for all quantitative studies odds ratio 2.32, 95% CI: 1.47 to 3.66). When analyses were restricted to studies with a sample size ≥100, publication bias was still evident (hazard ratio 2.00, 95% CI: 1.09 to 3.66) [31].

Discussion

This updated analysis yielded results similar to previous reviews: studies with statistically significant or positive results are more likely to be formally published than those with non-significant or non-positive results [14, 16–18]. In 1997, Dickersin combined the results from four inception cohort studies [26–28, 31] and found that the pooled adjusted odds ratio for publication bias (publication of studies with significant or important results versus those with unimportant results) was 2.54 (95% CI: 1.44 to 4.47) [16]. A recent systematic review of inception cohort studies of clinical trials found evidence of publication bias and outcome reporting bias, although pooled meta-analysis was not conducted due to perceived differences between studies [18]. A Cochrane methodology review of publication bias by Hopewell et al [17] included five inception cohort studies of trials registered before the main results were known [22, 26, 27, 29, 31], in which the pooled odds ratio for publication bias was 3.90 (95% CI: 2.68 to 5.68). In a Cochrane methodology review by Scherer et al, the association between subsequent full publication and study results was examined in 16 of 79 abstract cohort studies [14], finding that the subsequent full publication of conference abstracts was associated with positive results (pooled OR = 1.28, 95% CI: 1.15 to 1.42).

Our review is the first to enable an explicit comparison of results from cohort studies of publication bias with fundamentally different sampling frames. Biased selection for publication may affect research dissemination over the whole process, from before study completion to presentation of findings at conferences, manuscript submission to journals, and formal publication in journals (Figure 1). It seems that publication bias occurs mainly before the presentation of findings at conferences and before the submission of manuscripts to journals (Figure 2 and Figure 3). The subsequent publication of conference abstracts was still biased, but the extent of publication bias tended to be smaller than that observed across all studies conducted. After submission of a manuscript for publication, editorial decisions were not clearly associated with study results. However, publication bias may still be an issue for rejected manuscripts if the likelihood of their resubmission to a different journal is associated with the study results. One excluded cohort study (in which data on publication bias were not available) found that psychological research with statistically significant results was more likely to be submitted for publication than research with non-significant results (74% versus 4%) [72].

Since the acceptance of manuscripts for publication by journal editors did not appear to be determined by the direction or strength of study results, publication bias may be largely due to investigators' biased selection of which studies to submit. This is supported by data from four cohort studies suggesting that a large proportion of submitted papers (72%) show significant or positive results (Table 2). Because authors inevitably consider the possibility of rejection before submission, submitted studies with negative results may be a biased selection of all studies with negative results. In addition, although no conflict of interest was declared in the four cohort studies of submitted manuscripts, this kind of study always needs support or collaboration from journal editors. In prospective studies, editors' decisions on manuscript acceptance may be influenced by their awareness of the ongoing study [69]. Therefore, biased selection for publication by journals cannot be completely ruled out. In Olson et al's cohort study of manuscripts submitted to JAMA, studies with significant results tended to have a higher acceptance rate than studies with non-significant or unclear results (20.4% vs 15.2%, p = 0.07) [69]. In the cohort study by Okike et al, a subgroup analysis of 156 manuscripts with a high level of evidence (level I or II) found that the acceptance rate was significantly higher for studies with positive or neutral results than for studies with negative results (37%, 36% and 5% respectively; p = 0.02) [68].

Important and convincing evidence on the existence of publication bias comes from the inception cohort studies. Study results were defined differently among the empirical studies assessing publication bias. The most objective method would be to classify quantitative results as statistically significant (p < 0.05) or not. However, this was not always possible or appropriate. When other methods were used to classify study results as important or not, bias may be introduced due to inevitable subjectivity.

Large cohort studies on publication bias were often highly diverse in terms of research questions, designs, and other study characteristics. Many factors (e.g., sample size, design, research question, and investigators' characteristics) may confound the association, as they are associated with both study results and the possibility of publication. However, due to insufficient data, it was impossible to exclude the impact of confounding factors on the observed association between study results and formal publication. To improve our understanding of factors associated with publication bias, findings from qualitative research on the process of research dissemination may be helpful [76, 77].

There was statistically significant heterogeneity within the subgroups of inception, regulatory and abstract cohort studies (Table 1), although restricting analyses to clinical trials reduced the heterogeneity (Figures 4 and 5). The observed heterogeneity may be a result of differences in study designs, research questions, how the cohorts were assembled, definitions of study results, and so on. For example, the significant heterogeneity across inception cohort studies was due to one study by Misakian and Bero (Figure 2 and Figure 3) [30]. After excluding this cohort study, there was no longer significant heterogeneity across inception cohort studies (see Notes to Figure 2 and Figure 3). The cohort study by Misakian and Bero included research on health effects of passive smoking, and the impact of statistical significance of results on publication may be different from studies of other research topics [30].
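The sensitivity analysis described here, dropping one study and re-checking heterogeneity, can be sketched as a leave-one-out recomputation of I². This is an illustration only: the study labels and values in the usage line are hypothetical, not data from the included cohorts, and inverse-variance (fixed-effect) weights are assumed when computing Cochran's Q.

```python
def i_squared(log_ors, variances):
    """Higgins' I^2 (%) from Cochran's Q, using inverse-variance weights."""
    w = [1 / v for v in variances]
    mean = sum(wi * y for wi, y in zip(w, log_ors)) / sum(w)
    q = sum(wi * (y - mean) ** 2 for wi, y in zip(w, log_ors))
    df = len(log_ors) - 1
    return max(0.0, (q - df) / q) * 100 if q > 0 else 0.0


def leave_one_out_i2(labels, log_ors, variances):
    """Recompute I^2 with each study omitted in turn; keys are the omitted study."""
    result = {}
    for i, label in enumerate(labels):
        ys = log_ors[:i] + log_ors[i + 1:]
        vs = variances[:i] + variances[i + 1:]
        result[label] = i_squared(ys, vs)
    return result


# Hypothetical example: study "C" is an outlier relative to "A" and "B".
print(leave_one_out_i2(["A", "B", "C"], [0.5, 0.5, 3.0], [0.1, 0.1, 0.1]))
```

In this made-up example, dropping study C returns an I² of 0 while dropping A or B leaves it high, which is the pattern that points to a single influential study rather than diffuse heterogeneity.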

The four cohorts of trials submitted to regulatory authorities showed a greater extent of publication bias than other subgroups of cohort studies (Figure 3) [34–37]. Only 855 primary studies were included in the regulatory cohort studies, and two of the four regulatory cohort studies focused on trials of antidepressants [35, 37]. Therefore, these regulatory cohort studies may be a biased selection of all possible cases.

Studies of publication bias may themselves be as vulnerable as other studies to the selective publication of significant or striking findings [1, 78–80]. In this review, funnel plot asymmetry was non-significant for each of the four subgroups of cohort studies (Figure 6). However, we identified a large number of reports of full publication of meeting abstracts, most of which did not report the association between study results and full publication. It is often unclear whether this association had not been examined, or was not reported because it proved to be non-significant. As an example, Zaretsky and Imrie reported no significant difference (p = 0.53) in the rate of subsequent publication of 57 meeting abstracts between statistically significant and non-significant results, but this study could not be included in the analysis due to insufficient data [65].

Implications

Despite many caveats regarding the available empirical evidence on publication bias, there is little doubt that dissemination of research findings is likely to be a biased process. All funded or approved studies should be prospectively registered and these registers should be publicly accessible. Regulatory authorities should also provide publicly accessible databases of all studies received from the pharmaceutical industry. Investigators should be encouraged and supported to present their studies at conferences. A thorough literature search should be conducted in systematic reviews to identify all relevant studies, including searches of registers of clinical trials and available databases of unpublished studies.

Conclusion

There is consistent empirical evidence that the publication of a study that exhibits statistically significant or 'important' results is more likely to occur than the publication of a study that does not show such results. Indirect evidence indicates that publication bias occurs mainly before the presentation of findings at conferences and the submission of manuscripts to journals.

References

Begg CB, Berlin JA: Publication bias: a problem in interpreting medical data. J R Statist Soc A. 1988, 151: 445-463. 10.2307/2982993.

Rosenthal R: The "file drawer problem" and tolerance for null results. Psychol Bull. 1979, 86 (3): 638-641. 10.1037/0033-2909.86.3.638.

Begg CB, Berlin JA: Publication bias and dissemination of clinical research. J Natl Cancer Inst. 1989, 81: 107-115. 10.1093/jnci/81.2.107.

Dickersin K: The existence of publication bias and risk factors for its occurrence. JAMA. 1990, 263 (10): 1385-1389. 10.1001/jama.263.10.1385.

Sterling T: Publication decisions and their possible effects on inferences drawn from tests of significance - or vice versa. Am Stat Assoc J. 1959, 54: 30-34. 10.2307/2282137.

Sterling TD, Rosenbaum WL, Weinkam JJ: Publication decisions revisited - the effect of the outcome of statistical tests on the decision to publish and vice-versa. American Statistician. 1995, 49 (1): 108-112. 10.2307/2684823.

Sohn D: Publication bias and the evaluation of psychotherapy efficacy in reviews of the research literature. Clin Psychol Rev. 1996, 16 (2): 147-156. 10.1016/0272-7358(96)00005-0.

Song F, Eastwood AJ, Gilbody S, Duley L, Sutton AJ: Publication and related biases. Health Technol Assess. 2000, 4 (10): 1-115. 10.3310/hta4100.

Egger M, Juni P, Bartlett C, Holenstein F, Sterne J: How important are comprehensive literature searches and the assessment of trial quality in systematic reviews? Empirical study. Health Technol Assess. 2003, 7: 1-76.

Moher D, Pham B, Lawson ML, Klassen TP: The inclusion of reports of randomised trials published in languages other than English in systematic reviews. Health Technol Assess. 2003, 7 (41): 1-90.

Chan AW, Altman DG: Identifying outcome reporting bias in randomised trials on PubMed: review of publications and survey of authors. BMJ. 2005, 330 (7494): 753. 10.1136/bmj.38356.424606.8F.

Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG: Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004, 291 (20): 2457-2465. 10.1001/jama.291.20.2457.

Chan AW, Krleza-Jeric K, Schmid I, Altman DG: Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ. 2004, 171 (7): 735-740.

Scherer RW, Langenberg P, von Elm E: Full publication of results initially presented in abstracts. Cochrane Database Syst Rev. 2007, MR000005-2

Song F, Parekh S, Hooper L, Loke Y, Ryder JJ, Sutton AJ, Hing C, Shing C, Pang C, Harvey I: Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010,

Dickersin K: How important is publication bias? A synthesis of available data. AIDS Educ Prev. 1997, 9 (1 SA): 15-21.

Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K: Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009, MR000006-1

Dwan K, Altman DG, Arnaiz JA, Bloom J, Chan AW, Cronin E, Decullier E, Easterbrook PJ, Von Elm E, Gamble C, et al: Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS ONE. 2008, 3 (8): e3081. 10.1371/journal.pone.0003081.

Higgins JP, Thompson SG, Deeks JJ, Altman DG: Measuring inconsistency in meta-analyses. BMJ. 2003, 327 (7414): 557-560. 10.1136/bmj.327.7414.557.

Rucker G, Schwarzer G, Carpenter JR, Schumacher M: Undue reliance on I2 in assessing heterogeneity may mislead. BMC Med Res Methodol. 2008, 8: 79. 10.1186/1471-2288-8-79.

Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L: Comparison of two methods to detect publication bias in meta-analysis. JAMA. 2006, 295 (6): 676-680. 10.1001/jama.295.6.676.

Bardy AH: Bias in reporting clinical trials. Br J Clin Pharmacol. 1998, 46 (2): 147-150. 10.1046/j.1365-2125.1998.00759.x.

Cronin E, Sheldon T: Factors influencing the publication of health research. Int J Technol Assess Health Care. 2004, 20 (3): 351-355. 10.1017/S0266462304001175.

Decullier E, Lheritier V, Chapuis F: Fate of biomedical research protocols and publication bias in France: retrospective cohort study. BMJ. 2005, 331 (7507): 19. 10.1136/bmj.38488.385995.8F.

Decullier E, Chapuis F: Impact of funding on biomedical research: a retrospective cohort study. BMC Public Health. 2006, 6: 165. 10.1186/1471-2458-6-165.

Dickersin K, Min YI, Meinert CL: Factors influencing publication of research results. Follow up of applications submitted to two institutional review boards. JAMA. 1992, 267 (3): 374-378. 10.1001/jama.267.3.374.

Dickersin K, Min YI: NIH clinical trials and publication bias. Online J Curr Clin Trials. 1993, Doc No 50 (3):

Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR: Publication bias in clinical research. Lancet. 1991, 337 (8746): 867-872. 10.1016/0140-6736(91)90201-Y.

Ioannidis J: Effect of the statistical significance of results on the time to completion and publication of randomized efficacy trials. JAMA. 1998, 279 (4): 281-286. 10.1001/jama.279.4.281.

Misakian AL, Bero LA: Publication bias and research on passive smoking: comparison of published and unpublished studies. JAMA. 1998, 280 (3): 250-253. 10.1001/jama.280.3.250.

Stern JM, Simes RJ: Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997, 315 (7109): 640-645.

Wormald R, Bloom J, Evans J, Oldfield K: Publication bias in eye trials [abstract]. Second International Conference Scientific Basis of Health Science and 5th Annual Cochrane Colloquium. 1997, Amsterdam, The Netherlands: Cochrane Collaboration

Zimpel T, Windeler J: [Publications of dissertations on unconventional medical therapy and diagnosis procedures--a contribution to "publication bias"]. Forschende Komplementarmedizin und Klassische Naturheilkunde. 2000, 7 (2): 71-74. 10.1159/000021324.

Lee K, Bacchetti P, Sim I: Publication of clinical trials supporting successful new drug applications: a literature analysis. PLoS Med. 2008, 5 (9): e191. 10.1371/journal.pmed.0050191.

Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B: Evidence b(i)ased medicine--selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ. 2003, 326 (7400): 1171-1173. 10.1136/bmj.326.7400.1171.

Rising K, Bacchetti P, Bero L: Reporting bias in drug trials submitted to the Food and Drug Administration: review of publication and presentation. PLoS Med. 2008, 5 (11): e217. 10.1371/journal.pmed.0050217.

Turner EH, Matthews AM, Linardatos E, Tell RA, Rosenthal R: Selective publication of antidepressant trials and its influence on apparent efficacy. N Engl J Med. 2008, 358 (3): 252-260. 10.1056/NEJMsa065779.

Akbari-Kamrani M, Shakiba B, Parsian S: Transition from congress abstract to full publication for clinical trials presented at laser meetings. Lasers Med Sci. 2008, 23 (3): 295-299. 10.1007/s10103-007-0484-4.

Brazzelli M, Lewis SC, Deeks JJ, Sandercock PAG: No evidence of bias in the process of publication of diagnostic accuracy studies in stroke submitted as abstracts. J Clin Epidemiol. 2009, 62 (4): 425-430. 10.1016/j.jclinepi.2008.06.018.

Callaham ML, Wears RL, Weber EJ, Barton C, Young G: Positive-outcome bias and other limitations in the outcome of research abstracts submitted to a scientific meeting [erratum appears in JAMA 1998 Oct 14;280(14):1232]. JAMA. 1998, 280 (3): 254-257. 10.1001/jama.280.3.254.

Castillo J, Garcia Guasch R, Cifuentes I: Fate of abstracts from the Paris 1995 European Society of Anaesthesiologists meeting. Eur J Anaesthesiol. 2002, 19 (12): 888-893.

Cheng K, Preston C, Ashby D, O'Hea U, Smyth RL: Time to publication as full reports of abstracts of randomized controlled trials in cystic fibrosis. Pediatr Pulmonol. 1998, 26 (2): 101-105. 10.1002/(SICI)1099-0496(199808)26:2<101::AID-PPUL5>3.0.CO;2-P.

DeBellefeuille C, Morrison CA, Tannock IF: The fate of abstracts submitted to a cancer meeting: factors which influence presentation and subsequent publication. Ann Oncol. 1992, 3: 187-191.

Delamere F, Williams H: To what extent are conference abstracts reporting randomised controlled trials of skin diseases published subsequently? [abstract]. 13th Cochrane Colloquium; 2005 Oct 22-26; Melbourne, Australia. 2005, 101.

Eloubeidi MA, Wade SB, Provenzale D: Factors associated with acceptance and full publication of GI endoscopic research originally published in abstract form. Gastrointest Endosc. 2001, 53 (3): 275-282.

Evers JL: Publication bias in reproductive research. Hum Reprod. 2000, 15 (10): 2063-2066. 10.1093/humrep/15.10.2063.

Glick N, MacDonald I, Knoll G, Brabant A, Gourishankar S: Factors associated with publication following presentation at a transplantation meeting. Am J Transplant. 2006, 6 (3): 552-556. 10.1111/j.1600-6143.2005.01203.x.

Ha TH, Yoon DY, Goo DH, Chang SK, Seo YL, Yun EJ, Moon JH, Lee YJ, Lim KJ, Choi CS: Publication rates for abstracts presented by Korean investigators at major radiology meetings. Korean J Radiol. 2008, 9 (4): 303-311. 10.3348/kjr.2008.9.4.303.

Halpern SH, Palmer C, Angle P, Tarshis J: Published abstracts in obstetrical anesthesia: full publication rates and data reliability. Anesthesiology. 2001, 94 (1A): A69.

Harris IA, Mourad M, Kadir A, Solomon MJ, Young JM: Publication bias in abstracts presented to the annual meeting of the American Academy of Orthopaedic Surgeons. J Orthop Surg (Hong Kong). 2007, 15 (1): 62-66.

Harris IA, Mourad MS, Kadir A, Solomon MJ, Young JM: Publication bias in papers presented to the Australian Orthopaedic Association Annual Scientific Meeting. ANZ J Surg. 2006, 76 (6): 427-431. 10.1111/j.1445-2197.2006.03747.x.

Hashkes PJ, Uziel Y: The publication rate of abstracts from the 4th Park City Pediatric Rheumatology meeting in peer-reviewed journals: what factors influenced publication? J Rheumatol. 2003, 30 (3): 597-602.

Kiroff GK: Publication bias in presentations to the Annual Scientific Congress. ANZ J Surg. 2001, 71 (3): 167-171. 10.1046/j.1440-1622.2001.02058.x.

Klassen TP, Wiebe N, Russell K, Stevens K, Hartling L, Craig WR, Moher D: Abstracts of randomized controlled trials presented at the Society for Pediatric Research meeting: an example of publication bias [comment]. Arch Pediatr Adolesc Med. 2002, 156 (5): 474-479.

Krzyzanowska MK, Pintilie M, Tannock IF: Factors associated with failure to publish large randomized trials presented at an oncology meeting. JAMA. 2003, 290 (4): 495-501. 10.1001/jama.290.4.495.

Landry VL: The publication outcome for the papers presented at the 1990 ABA conference. J Burn Care Rehabil. 1996, 17 (1): 23A-26A. 10.1097/00004630-199601000-00002.

Peng PH, Wasserman JM, Rosenfeld RM: Factors influencing publication of abstracts presented at the AAO-HNS Annual Meeting. Otolaryngol Head Neck Surg. 2006, 135 (2): 197-203. 10.1016/j.otohns.2006.04.006.

Petticrew M, Gilbody S, Song F: Lost information? The fate of papers presented at the 40th Society for Social Medicine Conference. J Epidemiol Community Health. 1999, 53 (7): 442-443. 10.1136/jech.53.7.442.

Sanossian N, Ohanian AG, Saver JL, Kim LI, Ovbiagele B: Frequency and determinants of nonpublication of research in the stroke literature. Stroke. 2006, 37 (10): 2588-2592. 10.1161/01.STR.0000240509.05587.a2.

Scherer RW, Dickersin K, Langenberg P: Full publication of results initially presented in abstracts. A meta-analysis. JAMA. 1994, 272: 158-162. 10.1001/jama.272.2.158.

Smith WA, Cancel QV, Tseng TY, Sultan S, Vieweg J, Dahm P: Factors associated with the full publication of studies presented in abstract form at the annual meeting of the American Urological Association. J Urol. 2007, 177 (3): 1084-1088; discussion 1088-1089. 10.1016/j.juro.2006.10.029.

Timmer A, Hilsden RJ, Cole J, Hailey D, Sutherland LR: Publication bias in gastroenterological research - a retrospective cohort study based on abstracts submitted to a scientific meeting. BMC Med Res Methodol. 2002, 2 (1): 7. 10.1186/1471-2288-2-7.

Vecchi S, Belleudi V, Amato L, Davoli M: Full publication of abstracts submitted at a major international conference on drug addiction: a longitudinal study [abstract]. 14th Cochrane Colloquium; 2006 October 23-26; Dublin, Ireland. 2006

Zamakhshary M, Abuznadah W, Zacny J, Giacomantonio M: Research publication in pediatric surgery: a cross-sectional study of papers presented at the Canadian Association of Pediatric Surgeons and the American Pediatric Surgery Association. J Pediatr Surg. 2006, 41 (7): 1298-1301. 10.1016/j.jpedsurg.2006.03.042.

Zaretsky Y, Imrie K: The fate of phase III trial abstracts presented at the American Society of Hematology. Blood. 2002, 100 (11): 185a.

Lee KP, Boyd EA, Holroyd-Leduc JM, Bacchetti P, Bero LA: Predictors of publication: characteristics of submitted manuscripts associated with acceptance at major biomedical journals. Med J Aust. 2006, 184 (12): 621-626.

Lynch JR, Cunningham MRA, Warme WJ, Schaad DC, Wolf FM, Leopold SS: Commercially funded and United States-based research is more likely to be published; good-quality studies with negative outcomes are not. J Bone Joint Surg Am. 2007, 89 (5): 1010-1018. 10.2106/JBJS.F.01152.

Okike K, Kocher MS, Mehlman CT, Heckman JD, Bhandari M: Publication bias in orthopaedic research: an analysis of scientific factors associated with publication in the Journal of Bone and Joint Surgery (American Volume). J Bone Joint Surg Am. 2008, 90 (3): 595-601. 10.2106/JBJS.G.00279.

Olson CM, Rennie D, Cook D, Dickersin K, Flanagin A, Hogan JW, Zhu Q, Reiling J, Pace B: Publication bias in editorial decision making. JAMA. 2002, 287 (21): 2825-2828. 10.1001/jama.287.21.2825.

Blumle A, Antes G, Schumacher M, Just H, von Elm E: Clinical research projects at a German medical faculty: follow-up from ethical approval to publication and citation by others. J Med Ethics. 2008, 34 (9): e20. 10.1136/jme.2008.024521.

Druss BG, Marcus SC: Tracking publication outcomes of National Institutes of Health grants. Am J Med. 2005, 118 (6): 658-663. 10.1016/j.amjmed.2005.02.015.

Cooper H, Charlton K: Finding the missing science: the fate of studies submitted for review by a human subjects committee. Psychological Methods. 1997, 2 (4): 447-452. 10.1037/1082-989X.2.4.447.

Hahn S, Williamson PR, Hutton JL: Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract. 2002, 8 (3): 353-359. 10.1046/j.1365-2753.2002.00314.x.

Pich J, Carne X, Arnaiz J-A, Gomez B, Trilla A, Rodes J: Role of a research ethics committee in follow-up and publication of results. Lancet. 2003, 361 (9362): 1015-1016. 10.1016/S0140-6736(03)12799-7.

von Elm E, Rollin A, Blumle A, Huwiler K, Witschi M, Egger M: Publication and non-publication of clinical trials: longitudinal study of applications submitted to a research ethics committee. Swiss Med Wkly. 2008, 138 (13-14): 197-203.

Dickersin K, Ssemanda E, Mansell C, Rennie D: What do the JAMA editors say when they discuss manuscripts that they are considering for publication? Developing a schema for classifying the content of editorial discussion. BMC Med Res Methodol. 2007, 7: 44. 10.1186/1471-2288-7-44.

Calnan M, Smith GD, Sterne JAC: The publication process itself was the major cause of publication bias in genetic epidemiology. J Clin Epidemiol. 2006, 59 (12): 1312-1318. 10.1016/j.jclinepi.2006.05.002.

Laupacis A: Methodological studies of systematic reviews: is there publication bias? Arch Intern Med. 1997, 157: 357. 10.1001/archinte.157.3.357.

Dubben H-H, Beck-Bornholdt H-P: Systematic review of publication bias in studies on publication bias. BMJ. 2005, 331 (7514): 433-434. 10.1136/bmj.38478.497164.F7.

Song F: Review of publication bias in studies on publication bias: studies on publication bias are probably susceptible to the bias they study. BMJ. 2005, 331 (7517): 637-638. 10.1136/bmj.331.7517.637-c.

Hopewell S, Clarke M, Stewart L, Tierney J: Time to publication for results of clinical trials. Cochrane Database Syst Rev. 2007, MR000011-2

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/9/79/prepub

Acknowledgements

This methodological review was funded by the UK Department of Health HTA Research Methodology Programme (code 06/92/02). The views expressed in this report are those of the authors and do not necessarily reflect those of the funders. The authors take responsibility for any errors. We would like to thank Julie Reynolds for providing secretarial support to this review.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

FS, YKL, LH, JR, AJS and IH developed the review protocol. SP, JR and FS conducted the literature search. SP, FS, LH, YKL, JR and CH extracted and/or checked data from included studies. FS, AJS and IH provided methodological support. FS analysed the data and drafted the manuscript. All authors commented on the final manuscript.

Electronic supplementary material

Additional file 1: Literature search strategies. Strategies used to search MEDLINE and Cochrane Methodology Register for relevant empirical and methodological studies on publication bias. (PDF 4 KB)

Additional file 2: Main characteristics of inception cohort studies. Shows the main characteristics of studies that followed up cohorts of research from the beginning, including research approved by research ethics committees and research registered by research funding bodies. (PDF 17 KB)

Additional file 3: Main characteristics of regulatory cohort studies. Shows the main characteristics of studies that followed up trials submitted to regulatory authorities. (PDF 6 KB)

Additional file 4: Main characteristics of abstract cohort studies. Shows the main characteristics of studies that followed up abstracts presented at conferences. (PDF 22 KB)

Additional file 5: Main characteristics of manuscript cohort studies. Shows the main characteristics of studies that followed up cohorts of manuscripts submitted to journals for publication. (PDF 5 KB)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Song, F., Parekh-Bhurke, S., Hooper, L. et al. Extent of publication bias in different categories of research cohorts: a meta-analysis of empirical studies. BMC Med Res Methodol 9, 79 (2009). https://doi.org/10.1186/1471-2288-9-79
