Abstract

Background

Assessment of disagreement among multiple measurements for the same subject by different observers remains an important problem in medicine. Several measures have been applied to assess observer agreement. However, problems arise when comparing the degree of observer agreement among different methods, populations or circumstances.

Methods

The recently introduced information-based measure of disagreement (IBMD) is a useful tool for comparing the degree of observer disagreement. Since the proposed IBMD assesses disagreement between two observers only, we generalized this measure to include more than two observers.

Results

Two examples (one with real data and the other with hypothetical data) were employed to illustrate the utility of the proposed measure in comparing the degree of disagreement.

Conclusion

The IBMD allows comparison of disagreement on non-negative ratio scales across different populations, and the generalization offers a way to evaluate data with a different number of observers for different cases, an important issue in real situations.

A website for online calculation of IBMD and respective 95% confidence interval was additionally developed. The website is widely available to mathematicians, epidemiologists and physicians to facilitate easy application of this statistical strategy to their own data.

Background

As several measurements in clinical practice and epidemiologic research are based on observations made by health professionals, assessment of the degree of disagreement among multiple measurements for the same subjects under similar circumstances by different observers remains a significant problem in medicine. If the measurement error is assumed to be the same for every observer, independent of the magnitude of the quantity, we can estimate within-subject variability for repeated measurements on the same subject with the within-subject standard deviation, and the increase in variability when different observers are involved using analysis of variance 1. However, this strategy is not appropriate for comparing the degree of observer disagreement among different populations or various methods of measurement. Bland and Altman proposed a technique to compare the agreement between two methods of medical measurement allowing multiple observations per subject 2, and later Schluter proposed a Bayesian approach 3. However, problems arise when comparing the degree of observer disagreement between two different methods, populations or circumstances. For example, one issue is whether, during visual analysis of cardiotocograms, observer disagreement in estimation of the fetal heart rate baseline in the first hour of labor is significantly different from that in the last hour of labor when different observers assess the printed one-hour cardiotocography tracings. Another issue that remains to be resolved is whether interobserver disagreement in head circumference assessment by neonatologists is less than that by nurses. To answer this question, several neonatologists should evaluate the head circumference in the same newborns under similar circumstances, followed by calculation of the measure of interobserver agreement, and the same procedure should be repeated with different nurses. Subsequently, the two interobserver agreement measures should be compared to establish whether interobserver disagreement in head circumference assessment by neonatologists is less than that by nurses.

The intraclass correlation coefficient (ICC), a measure of reliability rather than agreement 4, is frequently used to assess observer agreement in situations with multiple observers, often without regard to the differences between the numerous variations of the ICC 5. Even when the appropriate form is applied to assess observer agreement, the ICC is strongly influenced by variations in the trait within the population in which it is assessed 6. Consequently, comparison of ICC values is not always possible across different populations. Moreover, important inconsistencies can be found when the ICC is used to assess agreement 7.
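The dependence of the ICC on the spread of the trait can be illustrated with a minimal sketch. It assumes the one-way random-effects form ICC(1,1) of Shrout and Fleiss 5 and uses hypothetical data in which two observers always differ by exactly 2 units; the function name and the data are ours, chosen only for illustration.

```python
def icc_one_way(ratings):
    """One-way random-effects ICC(1,1) for a list of per-subject rating lists.

    Assumes every subject is rated by the same number of observers, k.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    subject_means = [sum(row) / k for row in ratings]
    # Between-subject and within-subject mean squares from one-way ANOVA.
    ms_between = k * sum((m - grand_mean) ** 2 for m in subject_means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, subject_means) for x in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical data: in both populations the two observers differ by 2 units.
wide_population = [[t - 1, t + 1] for t in (10, 20, 30, 40)]
narrow_population = [[t - 1, t + 1] for t in (24, 25, 26, 27)]

icc_wide = icc_one_way(wide_population)
icc_narrow = icc_one_way(narrow_population)
print(round(icc_wide, 3), round(icc_narrow, 3))
```

Although the absolute observer differences are identical, the ICC is close to 1 in the heterogeneous population and about 0.25 in the homogeneous one, which is why ICC values from different populations are hard to compare.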

Lin’s concordance correlation coefficient (CCC) is additionally applicable to situations with multiple observers. The CCC is the Pearson coefficient of correlation, which assesses the closeness of the data to the line of best fit, modified by taking into account the distance of this line from the 45-degree line through the origin 8 9 10 11 12 13. Lin objected to the use of ICC as a way of assessing agreement between methods of measurement, and developed the CCC. However, similarities exist between certain specifications of the ICC and CCC measures. Nickerson 14 showed the asymptotic equivalence of the ICC and CCC estimators, and Carrasco and Jover 15 demonstrated the equivalence between the CCC and a specific ICC at the parameter level. Moreover, a number of limitations of the ICC described above, such as comparability of populations and its dependence on the covariance between observers, are also present in the CCC 16. Consequently, using the CCC and ICC to compare observer agreement from different populations is valid only when the measuring ranges are comparable 17.
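As a rough illustration of how the CCC penalizes departure from the 45-degree line, the sketch below computes the coefficient for two observers, assuming Lin's form 2·cov(x, y) / (var(x) + var(y) + (mean(x) − mean(y))²) with 1/n moments 12. The function name and the data are hypothetical.

```python
def lin_ccc(x, y):
    """Lin's concordance correlation coefficient for two paired sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Population (1/n) moments are assumed here.
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    # The squared mean difference in the denominator lowers the coefficient
    # when the readings are shifted away from the 45-degree line.
    return 2 * cov_xy / (var_x + var_y + (mean_x - mean_y) ** 2)


observer_a = [1.0, 2.0, 3.0, 4.0, 5.0]
observer_b = [v + 1.0 for v in observer_a]  # perfectly correlated, shifted by 1

ccc_identical = lin_ccc(observer_a, observer_a)
ccc_shifted = lin_ccc(observer_a, observer_b)
print(ccc_identical, ccc_shifted)
```

The shifted readings have a Pearson correlation of 1, yet the CCC falls to 0.8 because of the constant offset between the observers.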

The recently introduced information-based measure of disagreement (IBMD) provides a useful tool to compare the degree of observer disagreement among different methods, populations or circumstances 18. However, the proposed measure assesses disagreement only between two observers, which presents a significant limitation in observer agreement studies. This type of study generally requires more than just two observers, which constitutes a very small sample set.

Here, we propose a generalization of the information-based measure of disagreement to more than two observers. As in real situations some observers sometimes do not examine all the cases (missing data), our generalized IBMD allows different numbers of examiners for different observations.

Methods

IBMD among more than two observers

A novel measure of disagreement, denoted ‘information-based measure of disagreement’ (IBMD), was proposed 18 on the basis of Shannon’s notion of entropy 19, described as the average amount of information contained in a variable. In this context, the sum over all logarithms of possible outcomes of the variable is a valid measure of the amount of information, or uncertainty, contained in a variable 19. IBMD uses logarithms to measure the amount of information contained in the differences between two observations. This measure is normalized and satisfies the following properties: it is a metric and is scale invariant with differential weighting 18.

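N was defined as the number of cases and $x_{ij}$ as the observation of subject i by observer j. The disagreement between the observations made by observers 1 and 2 was defined as 18:

$$\mathrm{IBMD} = \frac{1}{N}\sum_{i=1}^{N}\log_2\left(\frac{|x_{i1}-x_{i2}|}{\max\{x_{i1},x_{i2}\}}+1\right)$$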
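A minimal sketch of the two-observer measure follows, assuming it is the mean over cases of log2(|a − b| / max(a, b) + 1), with a pair of zeros counted as no disagreement. The function name and the readings are hypothetical; the example only illustrates the scale invariance and differential weighting properties mentioned above.

```python
import math


def ibmd_two_observers(obs1, obs2):
    """Mean of log2(|a - b| / max(a, b) + 1) over paired non-negative readings."""
    total = 0.0
    for a, b in zip(obs1, obs2):
        largest = max(a, b)
        # Assumed convention: two zero readings contribute no disagreement.
        ratio = abs(a - b) / largest if largest > 0 else 0.0
        total += math.log2(ratio + 1.0)
    return total / len(obs1)


first = [120.0, 135.0, 140.0]
second = [125.0, 135.0, 150.0]

base = ibmd_two_observers(first, second)
# Scale invariance: multiplying every reading by 10 leaves the value unchanged.
rescaled = ibmd_two_observers([10 * v for v in first], [10 * v for v in second])
# Differential weighting: a 5-unit gap weighs more near 10 than near 100.
gap_at_small_values = ibmd_two_observers([10.0], [15.0])
gap_at_large_values = ibmd_two_observers([100.0], [105.0])
print(base, rescaled, gap_at_small_values, gap_at_large_values)
```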

We aim to measure the disagreement among measurements obtained by several observers, allowing a different number of observations in each case. Thus, maintaining N as the number of cases, we consider $M_i$, $i = 1,\ldots,N$, as the number of observations in case i.

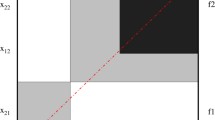

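Therefore, considering N vectors, one for each case, $(x_{11},\ldots,x_{1M_1}),\ldots,(x_{N1},\ldots,x_{NM_N})$, with non-negative components, the generalized information-based measure of disagreement is defined as:

$$\mathrm{IBMD} = \frac{1}{\sum_{i=1}^{N}\binom{M_i}{2}}\sum_{i=1}^{N}\sum_{j=1}^{M_i-1}\sum_{k=j+1}^{M_i}\log_2\left(\frac{|x_{ij}-x_{ik}|}{\max\{x_{ij},x_{ik}\}}+1\right)$$

with the convention $\frac{|0-0|}{\max\{0,0\}} = 0$.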

This coefficient equals 0 when there is no disagreement among the observers, and increases towards 1 as the distance, i.e. the disagreement among the observers, increases.
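The generalized measure can be sketched as follows, assuming it averages log2(|a − b| / max(a, b) + 1) over every pair of readings within each case and divides by the total number of such pairs. The function name and the data are hypothetical; missing readings are assumed to have been dropped beforehand, so each case may hold a different number of values.

```python
import math
from itertools import combinations


def generalized_ibmd(cases):
    """cases[i] holds the M_i non-negative readings available for case i."""
    total = 0.0
    n_pairs = 0
    for readings in cases:
        # Every unordered pair of observers within the same case.
        for a, b in combinations(readings, 2):
            largest = max(a, b)
            # Assumed convention: two zero readings contribute no disagreement.
            ratio = abs(a - b) / largest if largest > 0 else 0.0
            total += math.log2(ratio + 1.0)
            n_pairs += 1
    if n_pairs == 0:
        raise ValueError("at least one case needs two or more readings")
    return total / n_pairs


# Hypothetical ragged data: 3, 2 and 4 observers for the three cases.
cases = [[120.0, 125.0, 130.0], [140.0, 140.0], [110.0, 115.0, 115.0, 120.0]]
value = generalized_ibmd(cases)
print(value)
```

With exactly two readings per case, this reduces to the two-observer measure, since each case then contributes a single pair.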

The standard error and confidence interval were based on the nonparametric bootstrap, by resampling the subjects/cases with replacement, in both the original and generalized IBMD measures. The bootstrap uses the data from a single sample to simulate the results that would be obtained if new samples were drawn repeatedly. Bootstrap samples are created by sampling with replacement from the dataset. A good approximation of the 95% confidence interval can be obtained by computing the 2.5th and 97.5th percentiles of the bootstrap samples. Nonparametric resampling makes no assumptions concerning the distribution of the data. The algorithm for a nonparametric bootstrap is as follows 20:

1. Sample N cases randomly with replacement from the N original cases to obtain a bootstrap data set.
2. Calculate the bootstrap version of IBMD.
3. Repeat steps 1 and 2 B times to obtain an estimate of the bootstrap distribution.

For confidence intervals of 90–95 percent B should be between 1000 and 2000 21 22. In the results the confidence intervals were calculated with B equal to 1000.
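The bootstrap steps can be sketched as below. This is an illustration under stated assumptions rather than the code behind the website: the IBMD form is the pairwise log2 expression assumed for the generalized measure, the percentile bounds use a simple nearest-rank rule, every case is assumed to have at least two readings, and all names and data are hypothetical.

```python
import math
import random
from itertools import combinations


def generalized_ibmd(cases):
    """Assumed form: mean of log2(|a - b| / max(a, b) + 1) over within-case pairs."""
    terms = [
        math.log2((abs(a - b) / max(a, b) if max(a, b) > 0 else 0.0) + 1.0)
        for readings in cases
        for a, b in combinations(readings, 2)
    ]
    return sum(terms) / len(terms)


def bootstrap_ci(cases, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval obtained by resampling whole cases."""
    rng = random.Random(seed)
    n = len(cases)
    estimates = []
    for _ in range(n_boot):  # step 3: repeat B times
        # Step 1: draw N cases with replacement.
        resample = [cases[rng.randrange(n)] for _ in range(n)]
        # Step 2: recompute the statistic on the bootstrap data set.
        estimates.append(generalized_ibmd(resample))
    estimates.sort()
    lower = estimates[math.floor((alpha / 2) * (n_boot - 1))]
    upper = estimates[math.ceil((1 - alpha / 2) * (n_boot - 1))]
    return lower, upper


# Hypothetical readings; each case has at least two observers.
cases = [
    [120.0, 125.0, 130.0], [140.0, 140.0, 142.0], [110.0, 115.0],
    [150.0, 150.0, 150.0], [132.0, 128.0, 135.0], [100.0, 104.0],
]
lower, upper = bootstrap_ci(cases, n_boot=1000)
print(generalized_ibmd(cases), lower, upper)
```

Resampling whole cases, rather than individual readings, keeps the readings of the same subject together, which matches the description of resampling subjects/cases with replacement.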

Software for IBMD assessment

Website

We have developed a website to assist with the calculation of IBMD and respective 95% confidence intervals 23. This site additionally includes computation of the intraclass correlation coefficient (ICC). Lin’s concordance correlation coefficient (CCC) and limits of agreement can also be measured when considering only two observations per subject. The website contains a description of these methods.

PAIRSetc software

PAIRSetc 24 25, a software program that compares matched observations, provides several agreement measures, among them the ICC, the CCC and the 95% limits of agreement. This software is constantly updated with new measures introduced in the scientific literature; in fact, a coefficient of individual equivalence to measure agreement based on replicated readings, proposed in 2011 by Pan et al. 26 27, and the IBMD, published in 2010, are already included.

Examples

Two examples (one with real data and the other with hypothetical data) were employed to illustrate the utility of the IBMD in comparing the degree of disagreement.

A gymnast’s performance is evaluated by a jury according to rulebooks, which include a combination of the difficulty level, execution and artistry. Let us suppose that a new rulebook has been recently proposed and subsequently criticized. Some gymnasts and media argue that disagreement between the jury members in evaluating the gymnastics performance with the new scoring system is higher than that with the old scoring system, and therefore oppose its use. To better understand this claim, consider a random sample of eight judges evaluating a random sample of 20 gymnasts with the old rulebook, and a different random sample of 20 gymnasts with the new rulebook. In this case, each of the 40 gymnasts presented only one performance based on pre-defined compulsory exercises, and all eight judges simultaneously viewed the same performances and rated each gymnast independently, while blinded to their previous medals and performances. Both scoring systems ranged from 0 to 10. The results are presented in Table 1.

Visual analysis of the maternal heart rate during the last hour of labor can be more difficult than that during the first hour. We believe that this is a consequence of the deteriorated signal quality and the increasing irregularity of the heart rate (due to maternal stress). Accordingly, we tested this hypothesis by examining whether, in visual analysis of cardiotocograms, observer disagreement in maternal heart rate baseline estimation in the first hour of labor is lower than that in the last hour of labor when different observers assess printed one-hour cardiotocography tracings. To answer this question, we evaluated the disagreement in maternal heart rate baseline estimation during the first and last hours of labor by three independent observers.

Specifically, the heart rates of 13 mothers were acquired during the initial and last hour of labor, as secondary data collected in Nélio Mendonça Hospital, Funchal, for another study, and printed. Three experienced obstetricians were asked to independently estimate the baseline of the 26 one-hour segments. Results are presented in Table 2. The study procedure was approved by the local Research Ethics Committees and followed the Helsinki declaration. All women who participated in the study gave informed consent.

Results

Hypothetical data example

Using IBMD in the evaluation of the gymnasts, we can compare the observer disagreement, and the respective confidence interval (CI), associated with each scoring system.

The disagreement among judges was assessed as IBMD = 0.090 (95%CI = [0.077;0.104]) with the old rulebook and IBMD = 0.174 (95%CI = [0.154;0.192]) with the new rulebook. Recalling that an IBMD value of 0 means no disagreement (perfect agreement), these confidence intervals clearly indicate significantly higher observer disagreement in performance evaluation using the new scoring system, compared with the old system.

Real data example

The disagreement among obstetricians in baseline estimation, considering the initial hour of labor, was IBMD = 0.048 (95%CI = [0.036;0.071]), and during the last hour of labor, IBMD = 0.048 (95%CI = [0.027;0.075]). The results indicate no significant differences in the degree of disagreement among observers between the initial and last hour of labor.
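One way to read the reported intervals, assuming the comparison rests on whether the two 95% confidence intervals overlap (the criterion is not stated explicitly), is sketched below. The interval bounds are those reported for the two examples; the function and variable names are ours.

```python
def intervals_overlap(ci_a, ci_b):
    """True when two closed intervals (low, high) share at least one point."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]


# Reported 95% confidence intervals.
gymnastics_old = (0.077, 0.104)
gymnastics_new = (0.154, 0.192)
labor_first_hour = (0.036, 0.071)
labor_last_hour = (0.027, 0.075)

rulebooks_overlap = intervals_overlap(gymnastics_old, gymnastics_new)
labor_hours_overlap = intervals_overlap(labor_first_hour, labor_last_hour)
print(rulebooks_overlap, labor_hours_overlap)
```

Non-overlapping intervals are a conservative indication of a difference; overlapping intervals, as in the labor example, do not by themselves prove that the degrees of disagreement are equal.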

Discussion

While comparison of the degree of observer disagreement is often required in clinical and epidemiologic studies, the statistical strategies for comparative analyses are not straightforward.

The intraclass correlation coefficient is often used in this context, although sometimes without care in choosing the correct form. Even when the correct form of ICC is used to assess agreement, its dependence on variance does not always allow the comparability of populations. Other approaches to assess observer agreement have been proposed 28 29 30 31 32 33, but comparative analysis across populations is still difficult to achieve. The recently proposed IBMD is a useful tool to compare the degree of disagreement in non-negative ratio scales 18, and its proposed generalization to several observers overcomes an important limitation of this measure in this type of analysis, where more than two observers are required.

Conclusions

IBMD generalization provides a useful tool to compare the degree of observer disagreement among different methods, populations or circumstances and allows evaluation of data by different numbers of observers for different cases, an important feature in real situations where some data are often missing.

The free software and the available website to compute the generalized IBMD and respective confidence intervals facilitate the broad application of this statistical strategy.

References

Bland M: An introduction to medical statistics. 3rd edition. 2000, Oxford: Oxford University Press

Bland JM, Altman DG: Agreement Between Methods of Measurement with Multiple Observations Per Individual. J Biopharm Stat. 2007, 17 (4): 571-582. 10.1080/10543400701329422.

Schluter PJ: A multivariate hierarchical Bayesian approach to measuring agreement in repeated measurement method comparison studies. BMC Med Res Methodol. 2009, 9: 6. 10.1186/1471-2288-9-6.

De Vet H: Observer Reliability and Agreement. Encyclopedia of Biostatistics. 2005, John Wiley & Sons, Ltd

Shrout PE, Fleiss JL: Intraclass Correlations - Uses in Assessing Rater Reliability. Psychol Bull. 1979, 86 (2): 420-428.

Muller R, Buttner P: A critical discussion of intraclass correlation coefficients. Stat Med. 1994, 13 (23–24): 2465-2476.

Costa-Santos C, Bernardes J, Ayres-de-Campos D, Costa A, Amorim-Costa C: The limits of agreement and the intraclass correlation coefficient may be inconsistent in the interpretation of agreement. J Clin Epidemiol. 2011, 64 (3): 264-269. 10.1016/j.jclinepi.2009.11.010.

Barnhart HX, Haber MJ, Lin LI: An overview on assessing agreement with continuous measurements. J Biopharm Stat. 2007, 17 (4): 529-569. 10.1080/10543400701376480.

Carrasco JL, King TS, Chinchilli VM: The concordance correlation coefficient for repeated measures estimated by variance components. J Biopharm Stat. 2009, 19 (1): 90-105. 10.1080/10543400802527890.

King TS, Chinchilli VM, Carrasco JL: A repeated measures concordance correlation coefficient. Stat Med. 2007, 26 (16): 3095-3113. 10.1002/sim.2778.

Lin L, Hedayat AS, Wu W: A unified approach for assessing agreement for continuous and categorical data. J Biopharm Stat. 2007, 17 (4): 629-652. 10.1080/10543400701376498.

Lin LI: A concordance correlation coefficient to evaluate reproducibility. Biometrics. 1989, 45 (1): 255-268. 10.2307/2532051.

Lin LI, Hedayat AS, Sinha B, Yang M: Statistical methods in assessing agreement: models, issues and tools. J Am Stat Assoc. 2002, 97: 257-270. 10.1198/016214502753479392.

Nickerson C: A Note on "A Concordance Correlation Coefficient to Evaluate Reproducibility". Biometrics. 1997, 53: 1503-1507. 10.2307/2533516.

Carrasco JL, Jover L: Estimating the generalized concordance correlation coefficient through variance components. Biometrics. 2003, 59 (4): 849-858. 10.1111/j.0006-341X.2003.00099.x.

Atkinson G, Nevill A: Comment on the Use of Concordance Correlation to Assess the Agreement between Two Variables. Biometrics. 1997, 53: 775-777.

Lin L, Chinchilli V: Rejoinder to the Letter to the Editor from Atkinson and Nevill. Biometrics. 1997, 53 (2): 777-778.

Costa-Santos C, Antunes L, Souto A, Bernardes J: Assessment of disagreement: a new information-based approach. Ann Epidemiol. 2010, 20 (7): 555-561. 10.1016/j.annepidem.2010.02.011.

Shannon CE: The mathematical theory of communication. 1963. MD Comput. 1997, 14 (4): 306-317.

Carpenter J, Bithell J: Bootstrap confidence intervals: when, which, what? A practical guide for medical statisticians. Stat Med. 2000, 19 (9): 1141-1164. 10.1002/(SICI)1097-0258(20000515)19:9<1141::AID-SIM479>3.0.CO;2-F.

Efron B, Tibshirani RJ: An Introduction to the Bootstrap (Chapman & Hall/CRC Monographs on Statistics & Applied Probability). 1994, Chapman and Hall/CRC

Davison AC, Hinkley DV: Bootstrap Methods and their Application (Cambridge Series in Statistical and Probabilistic Mathematics). 1997, Cambridge University Press

On-line calculator of IBMD: [http://disagreement.med.up.pt]

Abramson J: WINPEPI (PEPI-for-Windows): computer programs for epidemiologists. Epidemiol Perspect Innov. 2004, 1 (1): 6. 10.1186/1742-5573-1-6.

Abramson JH: WINPEPI updated: computer programs for epidemiologists, and their teaching potential. Epidemiol Perspect Innov. 2011, 8 (1): 1. 10.1186/1742-5573-8-1.

Pan Y, Haber M, Barnhart HX: A new permutation-based method for assessing agreement between two observers making replicated binary readings. Stat Med. 2011, 30 (8): 839-853. 10.1002/sim.4136.

Pan Y, Haber M, Gao J, Barnhart HX: A new permutation-based method for assessing agreement between two observers making replicated quantitative readings. Stat Med. 2012, 31 (20): 2249-2261. 10.1002/sim.5323.

Luiz RR, Costa AJ, Kale PL, Werneck GL: Assessment of agreement of a quantitative variable: a new graphical approach. J Clin Epidemiol. 2003, 56 (10): 963-967. 10.1016/S0895-4356(03)00164-1.

Monti K: Folded empirical distribution function curves-mountain plots. Am Stat. 1995, 33: 525-527.

Quan H, Shih WJ: Assessing reproducibility by the within-subject coefficient of variation with random effects models. Biometrics. 1996, 52 (4): 1195-1203. 10.2307/2532835.

Lin LI: Total deviation index for measuring individual agreement with applications in laboratory performance and bioequivalence. Stat Med. 2000, 19 (2): 255-270. 10.1002/(SICI)1097-0258(20000130)19:2<255::AID-SIM293>3.0.CO;2-8.

Escaramis G, Ascaso C, Carrasco JL: The total deviation index estimated by tolerance intervals to evaluate the concordance of measurement devices. BMC Med Res Methodol. 2010, 10: 31. 10.1186/1471-2288-10-31.

Barnhart HX, Kosinski AS, Haber MJ: Assessing individual agreement. J Biopharm Stat. 2007, 17 (4): 697-719. 10.1080/10543400701329489.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/13/47/prepub

Acknowledgements

We acknowledge Paula Pinto from Nélio Mendonça Hospital, Funchal, who allowed us to use the maternal heart rate dataset collected for her PhD studies, approved by the local Research Ethics Committees.

This work was supported by the national science foundation, Fundação para a Ciência e Tecnologia, through FEDER funds through Programa Operacional Fatores de Competitividade – COMPETE, through the project CSI2 with the reference PTDC/EIA-CCO/099951/2008, through the project with the reference PEST-C/SAU/UI0753/2011 and through the PhD grant with the reference SFRH/BD/70858/2010.

Additional information

Competing interests

There are no non-financial competing interests (political, personal, religious, ideological, academic, intellectual, commercial or any other) to declare in relation to this manuscript.

Authors’ contributions

TH, LA and CCS made substantial contributions to article conception and design. They were also involved in the analysis and interpretation of data and drafted the manuscript. JB was involved in article conception and revised the manuscript critically for important intellectual content. MM and DS were responsible for the creation of the software and were also involved in the data analysis. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Henriques, T., Antunes, L., Bernardes, J. et al. Information-based measure of disagreement for more than two observers: a useful tool to compare the degree of observer disagreement. BMC Med Res Methodol 13, 47 (2013). https://doi.org/10.1186/1471-2288-13-47

DOI: https://doi.org/10.1186/1471-2288-13-47