Abstract

We prove new bounds on the average sensitivity of the indicator function of an intersection of $k$ halfspaces. In particular, we prove the optimal bound of $O(\sqrt{n\log(k)})$. This generalizes a result of Nazarov, who proved the analogous result in the Gaussian case, and improves upon a result of Harsha, Klivans and Meka. Furthermore, our result has implications for the runtime required to learn intersections of halfspaces.

AMS Subject Classification

Primary; 52C45

Background

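One of the most important measures of the complexity of a Boolean function $f:\{\pm 1\}^n\to\{0,1\}$ is its average sensitivity, namely

$$\mathbb{AS}(f) := \mathbb{E}_{x\in\{\pm 1\}^n}\left[\#\{i : f(x)\neq f(x^i)\}\right],$$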

where $x^i$ above is $x$ with the $i$th coordinate flipped. The average sensitivity and related measures of noise sensitivity of a Boolean function have found several applications, perhaps most notably to the area of machine learning (see for example [1]). It has thus become important to understand how large the average sensitivity of functions in various classes can be.
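For small $n$ the average sensitivity can be computed exactly by enumerating the hypercube. The following is a minimal sketch, assuming $f$ is given as a Python callable on tuples in $\{\pm 1\}^n$; the names `average_sensitivity` and `halfspace` are illustrative helpers and not part of the paper.

```python
from itertools import product

def average_sensitivity(f, n):
    """Exact average sensitivity of f: {-1,+1}^n -> {0,1} by brute force."""
    total = 0
    for x in product((-1, 1), repeat=n):
        fx = f(x)
        for i in range(n):
            # x^i: x with the i-th coordinate flipped
            xi = x[:i] + (-x[i],) + x[i + 1:]
            if f(xi) != fx:
                total += 1
    return total / 2 ** n

def halfspace(weights, threshold):
    """Indicator of {x : <w, x> >= threshold} (hypothetical helper)."""
    return lambda x: int(sum(w * v for w, v in zip(weights, x)) >= threshold)

majority3 = halfspace((1, 1, 1), 0)   # majority of three bits
dictator = halfspace((1, 0, 0), 0)    # depends only on x_1
print(average_sensitivity(majority3, 3))  # 1.5
print(average_sensitivity(dictator, 3))   # 1.0
```

The enumeration costs on the order of $n2^n$ evaluations of $f$, so it is only meant for sanity checks on small examples.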

Of particular interest is the study of the sensitivity of certain classes of algebraically defined functions. Gotsman and Linial [2] first studied the sensitivity of polynomial threshold functions (i.e. functions of the form $f(x)=\mathrm{sgn}(p(x))$ for $p$ a polynomial of bounded degree). They conjectured exact upper bounds on the sensitivity of polynomial threshold functions of limited degree, but were unable to prove them except in the case of linear threshold functions (when $p$ is required to be of degree 1). Since then, significant progress has been made towards proving this conjecture. The first non-trivial bounds for large degree were proven in [3] by Diakonikolas et al. in 2010, and progress since has been rapid. In [4], the Gaussian analogue of the Gotsman-Linial Conjecture was proved, and in [5] the correct bound on average sensitivity was proved to within a polylogarithmic factor.

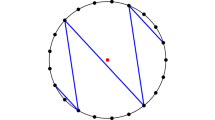

Another potential generalization of the degree-1 case of the Gotsman-Linial Conjecture (which bounds the sensitivity of the indicator function of a halfspace) would be to consider the sensitivity of the indicator function of the intersection of a bounded number of halfspaces. The Gaussian analogue of this question has already been studied. In particular, Nazarov has shown (see [6]) that the Gaussian surface area of an intersection of $k$ halfspaces is at most $O(\sqrt{\log(k)})$. This suggests that the average sensitivity of such a function should be bounded by $O(\sqrt{n\log(k)})$. Although this bound has been believed for some time, attempts to prove it have been unsuccessful. Perhaps the closest attempt thus far was by Harsha, Klivans and Meka, who show in [7] that an intersection of $k$ sufficiently regular halfspaces has noise sensitivity with parameter $\varepsilon$ at most $\log(k)^{O(1)}\varepsilon^{1/6}$. In this paper, we prove that the bound of $O(\sqrt{n\log(k)})$ is in fact correct. In particular, we prove the following Theorem:

Theorem 1.

Let $f$ be the indicator function of an intersection of $k$ half spaces in $n$ variables, then

$$\mathbb{AS}(f) = O\left(\sqrt{n\log(k)}\right).$$

It should also be noted that Nazarov’s bound follows as a Corollary of Theorem 1, by replacing Gaussian random variables with averages of Bernoulli random variables. It is also not hard to show that this bound is tight up to constants. In particular:

Theorem 2.

If $k\le 2^n$, there exists a function $f$ in $n$ variables given by the intersection of at most $k$ half spaces so that

$$\mathbb{AS}(f) = \Omega\left(\sqrt{n\log(k)}\right).$$

Our proof of Theorem 1 actually uses very little information about halfspaces. In particular, we use only the fact that linear threshold functions are monotonic in the following sense:

Definition 1.

We say that a function $f$ on $\{\pm 1\}^n$ is unate if for all $i$, $f$ is either increasing with respect to the $i$th coordinate or decreasing with respect to the $i$th coordinate.
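Unateness can be checked directly from the definition on small examples. The sketch below assumes $f$ is a Python callable on tuples in $\{\pm 1\}^n$; `coordinate_direction` and `is_unate` are hypothetical helper names introduced only for illustration.

```python
from itertools import product

def coordinate_direction(f, n, i):
    """Return +1 if f is increasing in coordinate i, -1 if decreasing,
    0 if f never changes along coordinate i, and None if neither holds."""
    increases = decreases = False
    for x in product((-1, 1), repeat=n):
        if x[i] == 1:
            continue  # visit each edge once, from its x_i = -1 endpoint
        raised = x[:i] + (1,) + x[i + 1:]
        if f(raised) > f(x):
            increases = True
        elif f(raised) < f(x):
            decreases = True
    if increases and decreases:
        return None
    return 1 if increases else (-1 if decreases else 0)

def is_unate(f, n):
    """f is unate if it is monotone (in some direction) in every coordinate."""
    return all(coordinate_direction(f, n, i) is not None for i in range(n))

# A linear threshold function is unate; the direction follows the weight signs.
halfspace = lambda x: int(2 * x[0] - x[1] + 3 * x[2] >= 1)
# Parity of two bits is increasing on some edges and decreasing on others.
parity = lambda x: int(x[0] * x[1] == 1)
print(is_unate(halfspace, 3), is_unate(parity, 2))  # True False
```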

We prove Theorem 1 by means of the following much more general statement:

Proposition 1.

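Let $f_1,\ldots,f_k:\{\pm 1\}^n\to\{0,1\}$ be unate functions and let $F:\{\pm 1\}^n\to\{0,1\}$ be defined as $F(x)=\bigvee_{i=1}^k f_i(x)$. Then

$$\mathbb{AS}(F) = O\left(\sqrt{n\log(k)}\right).$$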

The application of Theorem 1 to machine learning is via a notion of noise sensitivity that differs slightly from the average sensitivity. In particular, we define the noise sensitivity as follows:

Definition 2.

Let $f:\{\pm 1\}^n\to\{0,1\}$ be a Boolean function. For a parameter $\varepsilon\in(0,1)$ we define the noise sensitivity of $f$ with parameter $\varepsilon$ to be

$$\mathbb{NS}_\varepsilon(f) := \Pr\left(f(x)\neq f(y)\right),$$

where $x$ is a uniform random element of $\{\pm 1\}^n$ and $y$ is obtained from $x$ by randomly and independently flipping the sign of each coordinate with probability $\varepsilon$.
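For intuition, the noise sensitivity of a function on a few variables can be computed exactly by summing over all inputs and all flip patterns. This is a sketch under the assumption that the noise sensitivity is the probability that $f(x)\neq f(y)$ for the pair $(x,y)$ described above; the function name `noise_sensitivity` is illustrative.

```python
from itertools import product

def noise_sensitivity(f, n, eps):
    """Exact Pr[f(x) != f(y)], x uniform on {-1,+1}^n and y obtained from x
    by flipping each coordinate independently with probability eps."""
    cube = list(product((-1, 1), repeat=n))
    total = 0.0
    for x in cube:
        fx = f(x)
        for flips in product((0, 1), repeat=n):
            k = sum(flips)
            # probability of this exact flip pattern
            prob = eps ** k * (1 - eps) ** (n - k)
            y = tuple(-v if b else v for v, b in zip(x, flips))
            if f(y) != fx:
                total += prob
    return total / len(cube)

dictator = lambda x: int(x[0] == 1)
# A dictator changes value exactly when its one relevant coordinate flips.
print(abs(noise_sensitivity(dictator, 3, 0.1) - 0.1) < 1e-12)  # True
```

The cost grows like $4^n$, so this is intended only for checking small cases by hand.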

Using this notation, we have that

Corollary 1.

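If $f:\{\pm 1\}^n\to\{0,1\}$ is the indicator function of the intersection of $k$ halfspaces, and $\varepsilon\in(0,1)$ then

$$\mathbb{NS}_\varepsilon(f) = O\left(\sqrt{\varepsilon\log(k)}\right).$$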

Remark 1.

This is false in general for intersections of unate functions, since if $f$ is the tribes function on $n$ variables (which is unate) then $\mathbb{NS}_\varepsilon(f)=\Omega(1)$ so long as $\varepsilon=\Omega(\log^{-1}(n))$.

Finally, using the $L^1$ polynomial regression algorithm of [1], we obtain the following:

Corollary 2.

The concept class of intersections of $k$ halfspaces with respect to the uniform distribution on $\{\pm 1\}^n$ is agnostically learnable with error $\mathrm{opt}+\varepsilon$ in time .

Proofs of the sensitivity bounds

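The proof of Proposition 1 follows by a fairly natural generalization of one of the standard proofs for the case of a single unate function. In particular, if $f:\{\pm 1\}^n\to\{0,1\}$ is unate, we may assume without loss of generality that $f$ is increasing in each coordinate. In such a case, it is easy to show that

$$\mathbb{AS}(f) = \mathbb{E}_x\left[\sum_{i=1}^n x_i\left(f(x)-f(x^i)\right)\right] = 2\,\mathbb{E}_x\left[f(x)\sum_{i=1}^n x_i\right] \le \mathbb{E}_x\left[\left|\sum_{i=1}^n x_i\right|\right] \le \sqrt{n}.$$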

In fact, this technique can be extended to prove bounds on the sensitivity of unate functions with given expectation. In particular, Lemma 1 below provides an appropriate bound. Our proof of Proposition 1 turns out to be a relatively straightforward generalization of this technique. In particular, we show that by adding the $f_i$ one at a time, the change in sensitivity is bounded by a similar function of the change in total expectation.

Lemma 1.

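Let $S:\{\pm 1\}^n\to\{0,1\}$ and let $p=\mathbb{E}_x[S(x)]$, then

$$\mathbb{E}_x\left[\sum_{i=1}^n x_i S(x)\right] = O\left(p\sqrt{n\log(1/p)}\right).$$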

Proof.

Note that:

We now prove Proposition 1.

Proof.

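Let $F_m(x)=\bigvee_{i=1}^m f_i(x)$. Let $S_m(x)=F_m(x)-F_{m-1}(x)$. Let $p_m=\mathbb{E}_x[S_m(x)]$. Our main goal will be to show that $\mathbb{AS}(F_m)\le\mathbb{AS}(F_{m-1})+O\left(p_m\sqrt{n\log(1/p_m)}\right)$, from which our result follows easily.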

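Consider $\mathbb{AS}(F_m)-\mathbb{AS}(F_{m-1})$. We assume without loss of generality that $f_m$ is increasing in every coordinate. We have

$$\mathbb{AS}(F_m)-\mathbb{AS}(F_{m-1}) = \mathbb{E}_x\left[\sum_{i=1}^n \left(|F_m(x)-F_m(x^i)| - |F_{m-1}(x)-F_{m-1}(x^i)|\right)\right],$$

where $x^i$ denotes $x$ with the $i$th coordinate flipped. We make the following claim:

Claim. For each $x,i$,

$$|F_m(x)-F_m(x^i)| - |F_{m-1}(x)-F_{m-1}(x^i)| \le x_i\left(S_m(x)-S_m(x^i)\right). \qquad (1)$$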

Proof. Our proof is based on considering two different cases.

Case 1: $f_m(x)=f_m(x^i)=0$

In this case, $F_m(x)=F_{m-1}(x)$ and $F_m(x^i)=F_{m-1}(x^i)$, and thus both sides of Equation 1 are 0.

Case 2: $f_m(x)=1$ or $f_m(x^i)=1$

Note that replacing $x$ by $x^i$ leaves both sides of Equation 1 the same. We may therefore assume without loss of generality that $x_i=1$. Since $f_m$ is increasing with respect to the $i$th coordinate, $f_m(x)\ge f_m(x^i)$. Since at least one of them is 1, $f_m(x)=1$. Therefore, $F_m(x)=1$. Therefore, since

$$|F_m(x)-F_m(x^i)| = 1-F_m(x^i)$$

and

$$|F_{m-1}(x)-F_{m-1}(x^i)| \ge F_{m-1}(x)-F_{m-1}(x^i),$$

Equation 1 follows.

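By the claim we have that

$$\begin{aligned}
\mathbb{AS}(F_m)-\mathbb{AS}(F_{m-1}) &\le \mathbb{E}_x\left[\sum_{i=1}^n x_i\left(S_m(x)-S_m(x^i)\right)\right]\\
&= \mathbb{E}_x\left[\sum_{i=1}^n x_i S_m(x)\right] - \mathbb{E}_x\left[\sum_{i=1}^n x_i S_m(x^i)\right]\\
&= \mathbb{E}_x\left[\sum_{i=1}^n x_i S_m(x)\right] + \mathbb{E}_y\left[\sum_{i=1}^n y_i S_m(y)\right]\\
&= 2\,\mathbb{E}_x\left[\sum_{i=1}^n x_i S_m(x)\right]\\
&= O\left(p_m\sqrt{n\log(1/p_m)}\right),
\end{aligned}$$

where on the third line above we are letting $y=x^i$ (so that $y_i=-x_i$), and the last line is by Lemma 1.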
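The incremental step can be sanity-checked numerically on small cubes. The sketch below assumes the inequality being tested is $\mathbb{AS}(F_m)-\mathbb{AS}(F_{m-1})\le 2\,\mathbb{E}_x[\sum_i x_i S_m(x)]$ with $F_m=F_{m-1}\vee f_m$, $S_m=F_m-F_{m-1}$ and $f_m$ increasing in every coordinate. Here $F_{m-1}$ is replaced by an arbitrary random 0/1 function and $f_m$ by a halfspace with nonnegative weights; both choices, and all names, are illustrative assumptions.

```python
import random
from itertools import product

def flip(x, i):
    """x with the i-th coordinate flipped."""
    return x[:i] + (-x[i],) + x[i + 1:]

def avg_sens(F, n):
    cube = list(product((-1, 1), repeat=n))
    return sum(F(x) != F(flip(x, i)) for x in cube for i in range(n)) / len(cube)

def check_increment(n, seed):
    """Return (lhs, rhs) of the incremental inequality for one random instance."""
    rng = random.Random(seed)
    cube = list(product((-1, 1), repeat=n))
    # Stand-in for F_{m-1}: an arbitrary 0/1 function on the cube.
    table = {x: rng.randint(0, 1) for x in cube}
    F_prev = lambda x: table[x]
    # f_m: halfspace with nonnegative weights, so increasing in every coordinate.
    w = [rng.random() for _ in range(n)]
    t = rng.uniform(-1, 1) * sum(w) / 2
    f_m = lambda x: int(sum(a * b for a, b in zip(w, x)) >= t)
    F_m = lambda x: max(F_prev(x), f_m(x))
    S_m = lambda x: F_m(x) - F_prev(x)
    lhs = avg_sens(F_m, n) - avg_sens(F_prev, n)
    rhs = 2 * sum(sum(x) * S_m(x) for x in cube) / len(cube)
    return lhs, rhs

for seed in range(5):
    lhs, rhs = check_increment(4, seed)
    print(seed, lhs <= rhs + 1e-9)
```

A passing check on random instances is only evidence, not a proof; the argument in the text is what establishes the bound.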

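Hence, since $\mathbb{AS}(F_0)=0$, we have that

$$\mathbb{AS}(F) = \sum_{m=1}^k \left(\mathbb{AS}(F_m)-\mathbb{AS}(F_{m-1})\right) = O\left(\sqrt{n}\right)\sum_{m=1}^k p_m\sqrt{\log(1/p_m)}.$$

Let $P=\sum_{m=1}^k p_m=\mathbb{E}_x[F(x)]\le 1$. By concavity of the function $x\sqrt{\log(1/x)}$ for $x\in(0,1)$, we have that

$$\mathbb{AS}(F) = O\left(\sqrt{n}\right)\cdot k\cdot\frac{P}{k}\sqrt{\log(k/P)} = O\left(\sqrt{n\log(k)}\right).$$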

This completes our proof.

Theorem 1 follows from Proposition 1 upon noting that 1−f is a disjunction of k linear threshold functions, each of which is unate. Our proof of Theorem 1 shows that the bound can be tight only if a large number of the halfspaces cut off an incremental volume of roughly 1/k. It turns out that this bound can be achieved when we take a random collection of halfspaces with such volumes. Before we begin to prove Theorem 2, we need the following Lemma:

Lemma 2.

For an integer $n$ and $1/2>\varepsilon>2^{-n}$ there exists a linear threshold function $f:\{\pm 1\}^n\to\{0,1\}$ so that

and

Proof.

This is easily seen to be the case if we let f(x) be the indicator function of for t as large as possible so that this event takes place with probability at least ε.

Proof of Theorem 2.

We note that it suffices to show that there is such an $f$ given as the indicator function of a union of at most $k$ half-spaces, as $1-f$ will have the same average sensitivity and will be the indicator function of an intersection. Let $\varepsilon=1/k$, and let $f$ be the function given to us in Lemma 2. We note that if , then $f$ is sufficient and we are done. Otherwise let . For $s\in\{\pm 1\}^n$ let $f_s(x)=f(s_1x_1,\ldots,s_nx_n)$. We note for each $s$ that $f_s(x)$ is a linear threshold function with $\mathbb{E}_x[f_s(x)]=\mathbb{E}_x[f(x)]$ and $\mathbb{AS}(f_s)=\mathbb{AS}(f)$.

Let

$$F(x) = \bigvee_{i=1}^m f_{s_i}(x)$$

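for $s_i$ independent random elements of $\{\pm 1\}^n$. We note that $F(x)$ is always the indicator of a union of at most $k$ half-spaces, but we also claim that

$$\mathbb{E}_{s_1,\ldots,s_m}\left[\mathbb{AS}(F)\right] = \Omega\left(\sqrt{n\log(k)}\right).$$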

This would imply our result for appropriately chosen values of the $s_i$.

We note that $\mathbb{AS}(F)$ is $2^{1-n}$ times the number of pairs of adjacent elements $x,y$ of the hypercube so that $F(x)=1,F(y)=0$. This in turn is at least $2^{1-n}$ times the sum over $1\le i\le m$ of the number of pairs of adjacent elements of the hypercube $x,y$ so that $f_{s_i}(x)=1$, $f_{s_i}(y)=0$ and so that $f_{s_j}(x)=f_{s_j}(y)=0$ for all $j\neq i$.

On the other hand, for each $i$, $2^{1-n}$ times the number of pairs of adjacent elements $x,y$ so that $f_{s_i}(x)=1$, $f_{s_i}(y)=0$ is $\mathbb{AS}(f_{s_i})=\mathbb{AS}(f)$.

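For each of these pairs, we consider the probability over the choice of $s_j$ that $f_{s_j}(x)=1$ or $f_{s_j}(y)=1$ for some $j\neq i$. We note that for each fixed $x$ and $j$ that

$$\Pr_{s_j}\left(f_{s_j}(x)=1\right) = \mathbb{E}_x[f(x)].$$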

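Thus, by the union bound, the probability that either $f_{s_j}(x)=1$ or $f_{s_j}(y)=1$ for some $j\neq i$ is at most 1/2. Therefore, the expected number of adjacent pairs $x,y$ with $f_{s_i}(x)=1$, $f_{s_i}(y)=0$, and $f_{s_j}(x)=f_{s_j}(y)=0$ for all $j\neq i$ is at least $2^{n-2}\,\mathbb{AS}(f)$. Therefore,

$$\mathbb{E}\left[\mathbb{AS}(F)\right] \ge \frac{m}{2}\,\mathbb{AS}(f) = \Omega\left(\sqrt{n\log(k)}\right),$$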

as desired. This completes our proof.

Learning theory application

The proofs of Corollaries 1 and 2 are by what are now fairly standard techniques, but are included here for completeness. The proof of Corollary 1 is by a technique of Diakonikolas et al. in [8] for bounding the noise sensitivity in terms of the average sensitivity.

Proof.

As the noise sensitivity is an increasing function of $\varepsilon$ for $\varepsilon<1/2$, we may round $\varepsilon$ up to $1/\lfloor\varepsilon^{-1}\rfloor$, which is at most $2\varepsilon$, and thus it suffices to consider $\varepsilon=1/m$ for some integer $m$. We note that the pair of random variables $x$, $y$ used to define the noise sensitivity with parameter $\varepsilon$ can be generated in the following way:

1. Randomly divide the $n$ coordinates into $m$ bins.

2. Randomly assign each coordinate a value in $\{\pm 1\}$ to obtain $z$.

3. For each bin randomly pick $b_i\in\{\pm 1\}$. Obtain $x$ from $z$ by multiplying all coordinates in the $i$th bin by $b_i$ for each $i$.

4. Obtain $y$ from $x$ by flipping the sign of all coordinates in a randomly chosen bin.

We note that this produces the same distribution on x and y since x is clearly a uniform element of {±1}n and the ith coordinate of y differs from the corresponding coordinate of x if and only if i lies in the bin selected in step 4. This happens independently and with probability 1/m for each coordinate.
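The four-step sampling procedure can be sketched as follows. This assumes that "randomly divide" means each coordinate is placed independently and uniformly into one of the $m$ bins; the function name `sample_noisy_pair` and its return values are illustrative.

```python
import random

def sample_noisy_pair(n, m, rng):
    """Sample (x, y) with x uniform on {-1,+1}^n and y differing from x
    exactly on one randomly chosen bin, following steps 1-4."""
    # Step 1: place each coordinate in one of m bins, independently and uniformly.
    bins = [rng.randrange(m) for _ in range(n)]
    # Step 2: pick a uniform sign for every coordinate to obtain z.
    z = [rng.choice((-1, 1)) for _ in range(n)]
    # Step 3: pick a sign b_i per bin and multiply bin i of z by b_i to obtain x.
    b = [rng.choice((-1, 1)) for _ in range(m)]
    x = [z[j] * b[bins[j]] for j in range(n)]
    # Step 4: flip every coordinate of x that lies in one random bin to obtain y.
    chosen = rng.randrange(m)
    y = [-x[j] if bins[j] == chosen else x[j] for j in range(n)]
    return x, y, bins, chosen

rng = random.Random(0)
x, y, bins, chosen = sample_noisy_pair(n=10, m=4, rng=rng)
# Coordinates where x and y differ are exactly those in the chosen bin.
print([j for j in range(10) if x[j] != y[j]] == [j for j in range(10) if bins[j] == chosen])
```

Once the bins and $z$ are fixed, $x$ depends only on the $m$ signs $b_i$, which is what lets the noise sensitivity in $n$ variables be compared with an average sensitivity in $m$ variables.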

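Next let $f$ be the indicator function of an intersection of at most $k$ halfspaces. Note that after the bins and $z$ are picked in steps 1 and 2 above, $f(x)$ is given by $g(b)$ where $g$ is the indicator function of an intersection of at most $k$ halfspaces in $m$ variables. In the same notation, $f(y)=g(b')$ where $b'$ is obtained from $b$ by flipping the sign of a single random coordinate. Thus, by definition, $\Pr_{b,b'}\left(g(b)\neq g(b')\right)=\mathbb{AS}(g)/m$. Hence, by Theorem 1,

$$\mathbb{NS}_\varepsilon(f) = \frac{\mathbb{E}\left[\mathbb{AS}(g)\right]}{m} = \frac{O\left(\sqrt{m\log(k)}\right)}{m} = O\left(\sqrt{\varepsilon\log(k)}\right).$$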

This completes our proof.

Corollary 2 will now follow by using this bound to bound the weight of the higher degree Fourier coefficients of such an $f$ and then using the $L^1$ polynomial regression algorithm of [1].

Proof.

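Let $f$ be the indicator function of an intersection of $k$ halfspaces. Let $f$ have Fourier transform given by

$$f(x) = \sum_{S\subseteq\{1,\ldots,n\}} \hat{f}(S)\chi_S(x).$$

It is well known that for $\rho\in(0,1)$,

$$\mathbb{NS}_\rho(f) = 2\sum_{S}\hat{f}(S)^2\left(1-(1-2\rho)^{|S|}\right).$$

Therefore, we have that

$$\sum_{|S|>1/\rho}\hat{f}(S)^2 = O\left(\mathbb{NS}_\rho(f)\right).$$

By Corollary 1, this tells us that

$$\sum_{|S|>1/\rho}\hat{f}(S)^2 = O\left(\sqrt{\rho\log(k)}\right).$$

Setting $\rho=\varepsilon^2/(C\log(k))$ for sufficiently large values of $C$ yields

$$\sum_{|S|>C\log(k)/\varepsilon^2}\hat{f}(S)^2 \le \varepsilon.$$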

Our claim now follows from [1] Remark 4.
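The relation between noise sensitivity and the Fourier spectrum can be checked by brute force on small functions. The sketch below assumes the standard identity $\mathbb{NS}_\rho(f)=2\sum_S\hat{f}(S)^2\left(1-(1-2\rho)^{|S|}\right)$ for $f:\{\pm 1\}^n\to\{0,1\}$, with subsets $S$ encoded as 0/1 indicator tuples; the function names are illustrative.

```python
from itertools import product
from math import prod

def fourier_coefficients(f, n):
    """Map each subset S (as a 0/1 indicator tuple) to E_x[f(x) * chi_S(x)]."""
    cube = list(product((-1, 1), repeat=n))
    coeffs = {}
    for S in product((0, 1), repeat=n):
        # chi_S(x) is the product of x_i over i in S (empty product is 1)
        coeffs[S] = sum(f(x) * prod(v for v, s in zip(x, S) if s) for x in cube) / len(cube)
    return coeffs

def noise_sensitivity_from_spectrum(coeffs, rho):
    """Noise sensitivity via the Fourier-side identity stated in the lead-in."""
    return 2 * sum(c * c * (1 - (1 - 2 * rho) ** sum(S)) for S, c in coeffs.items())

def fourier_tail(coeffs, d):
    """Total squared Fourier weight on sets of size greater than d."""
    return sum(c * c for S, c in coeffs.items() if sum(S) > d)

# Intersection of the two halfspaces {x_1 >= 1} and {x_2 >= 1}.
and2 = lambda x: int(x[0] == 1 and x[1] == 1)
coeffs = fourier_coefficients(and2, 2)
print(noise_sensitivity_from_spectrum(coeffs, 0.1))  # close to 0.095
print(fourier_tail(coeffs, 1))                       # 0.0625
```

For this example all four coefficients equal $1/4$, and the direct computation $2\cdot\frac14\cdot(1-(1-\rho)^2)=\rho-\rho^2/2$ agrees with the spectral formula.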

References

Kalai, AT, Klivans, AR, Mansour, Y, Servedio, RA: Agnostically Learning Halfspaces. In: Proceedings of the 46th Foundations of Computer Science (2005).

Gotsman C, Linial N: Spectral properties of threshold functions. Combinatorica 1994, 14: 35–50. 10.1007/BF01305949

Diakonikolas, I, Raghavendra, P, Servedio, RA, Tan, LY: Bounding the average sensitivity and noise sensitivity of polynomial threshold functions. In: Proceedings of the 42nd ACM Symposium on Theory of Computing. ACM (2010).

Kane, DM: The Gaussian surface area and noise sensitivity of degree-d polynomial threshold functions. In: Proceedings of the 25th Annual Conference on Computational Complexity, pp. 205–210 (2010).

Kane, DM: The Correct Exponent for the Gotsman-Linial Conjecture. In: Proceedings of the 28th Annual Conference on Computational Complexity (2013).

Klivans, AR, O'Donnell, R, Servedio, RA: Learning geometric concepts via Gaussian surface area. In: Proceedings of the 49th Foundations of Computer Science, pp. 541–550 (2008).

Harsha, P, Klivans, AR, Meka, R: An invariance principle for polytopes. In: Proceedings of the 42nd ACM Symposium on Theory of Computing (2010).

Diakonikolas, I, Raghavendra, P, Tan, LY: Average sensitivity and noise sensitivity of polynomial threshold functions. Manuscript available at http://arxiv.org/abs/0909.5011

Acknowledgements

This work was done with the support of an NSF postdoctoral research fellowship.

Competing interests

The author has no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kane, D. The average sensitivity of an intersection of half spaces. Research in the Mathematical Sciences 1, 13 (2014). https://doi.org/10.1186/s40687-014-0013-6
