Abstract

In this contribution, the nonlinear dynamics of a non-autonomous model of two neurons based on the Hopfield neural network is considered. Using activation gradients as bifurcation control parameters, the properties of the model include dissipation with the existence of attractors and equilibrium points with their stability. Using traditional nonlinear analysis tools such as bifurcation diagrams, the graph of the maximum Lyapunov exponent, phase portraits, two-parameter diagrams, and attraction basins, the complex behaviour of the two-dimensional Hopfield neural network has been investigated and several windows of multistability involving the coexistence of up to four coexisting attractors have been found. Besides, the results of our numerical simulation of the multistability have been further supported using some Pspice simulation. The effect of the fractional-order derivative is also explored, and it is found that the route toward chaos is completely different when the order q of the HNN is varied between \(0<q<1\). Finally, a compressive sensing approach is used to compress and encrypt color images based on the sequences of the above-mentioned system. The plain color image is decomposed into Red, Green, and Blue components. The Discrete Wavelet Transform (DWT) is applied to each component to obtain the corresponding sparse components. Confusion keys are obtained from the proposed chaotic system to scramble each sparse component. The measurement matrices obtained from the chaotic sequence are used to compress the confused sparse matrices corresponding to the Red, Green, and Blue components. Each component is quantified and a diffusion step is then applied to improve the randomness and, consequently, the information entropy. Experimental analysis of the proposed method yields a running time (t) of 6.85 ms, a maximum entropy value of 7.9996 for global and 7.9153 for local, an encryption throughput (ET) value of 114.80, and a number of cycles (NC) of 20.90. Analysis of these metrics indicates that the proposed scheme is competitive with some recent literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The artificial Hopfield neural network (AHNN) is a model of artificial neural network that tends to mimic the memory function of the biological brain. It is a particularly intriguing model in that it attempts to replicate the critical mnemonic function in language mastery and the learning process. A significant number of artificial neural network models inspired by the initial model designed by Hopfield in 1984 have been developed to date. Despite its consideration of a large number of neurons, this model has this singularity in that it remains relatively simple. The studies carried out on AHNNs have the advantage that they would allow an understanding of a certain number of pathologies affecting memory function and provide a starting point for their resolution. As a result, dynamic behaviors such as chaos [1,2,3], hyperchaos [4,5,6], multistability [7,8,9], and hidden attractors [4, 5] have piqued the interest of researchers. Recent interesting works published in the literature, such as those of [10,11,12,13,14,15,16], can attest to the interest in the study of systems exhibiting such behaviors. For example, in [10], they investigated a chaotic Lorenz system modified by artificial neural networks, which allowed them to transform its chaotic behavior into motion, then create a chaotic motion video. The authors [11,12,13] applied an adaptive sliding mode control strategy, the passive control technique, and the indirect field control strategy to control the chaotic behavior of the dynamics of a fractional-order Hopfield neural network with memristor, a chaotic system, and a 3-phase induction motor, respectively. [14,15,16] proposed Jerk, memristive, and hyperjerk circuits, whose studies of the systems describing them presented chaotic attractors, periodic attractors, the coexistence of multiple attractors, bubbles of bifurcations, and many other phenomena. [15] has realized a chaos-based random number generator from the new memristive chaotic system they proposed and performed an algorithm for touchless fingerprint encryption has been established.

To facilitate theoretical analysis and experimental implementation, many small HNNs have been intensively studied [1, 3, 5, 17,18,19,20,21,22]. Complex dynamic behaviors such as periodic, chaotic, or coexisting attractors, crisis scenarios [1, 3, 5, 17,18,19,20, 22], hyperchaos [5, 6], relaxation oscillations [21], and hidden attractors [5], to name a few, have generally been revealed in these small HNNs. Most of these small HNNs listed are at least three-order autonomous [1, 3, 5, 17,18,19,20], but there are still other smaller non-autonomous and autonomous second-order ones that have been recently developed. As such, we can list the works of [23,24,25,26] who studied in-depth HNNs models with two neurons and highlighted complex dynamic behaviors. For example, the model investigated in [23] was able to exhibit in its non-autonomous model of HNN with two neurons a certain number of behaviors due to the stimulus. These include limit cycles, chaotic attractors, a bifurcation doubling the period, coexisting modes of bifurcations, and twin attractors. The latter is very useful for applications based on HNN in associative memory [27], image processing, and pattern recognition [28]. [24] subsequently considered a memristor as a synapse of self-connection of the previous non-autonomous model of two-neuron-based HNN studied in [23]. The memristor can remember the total electrical charge that passes through it over time [29]. This unique ability makes it behave like an electrical synapse in neural networks [30,31,32,33]. Several behaviors such as bursting and Hopf busters have been revealed by considering the memristor. [25, 26] constructed autonomous HNNs with ideal and non-ideal memristors, respectively, to emulate the effect of electromagnetic induction between two neurons. In [34], have recently proposed a fuzzy integral sliding mode technique for the synchronization and control of memristive neural networks. This control method combines a fuzzy logic controller with integral sliding mode control to provide fast and smooth results. In recent years, fractional calculus has provided reliable tools for the easy modeling of natural phenomena by the lightest possible equations. Moreover, the form of any real system is fractional. Precision in model formulation and sensitivity in design, require the need to treat neural systems as fractional-order differential equations. For this purpose, the dynamics of fractional order neural networks of Hopfield type have been studied by addressing notions such as stability and multi-stability (coexistence of several different stable states), bifurcations, and chaos [35]. Based on the stability analysis, the authors identified critical fractional-order values for which Hopf bifurcations may occur. The control methods for synchronizing a class of fractional-order neural networks have been devised by [36, 37]. [36] realized an adaptation mechanism that adjusts the parameters of the controller using an appropriate sliding surface, while [37] used the Lyapunov stability theorem. The authors [37] also proposed an algorithm for a new crypto-system based on the designed adaptive method for encryption / decryption of unmanned aerial vehicle color images.

The activation of neurons is most often ensured by a differentiated limited monotonic function, which plays a determining role in the appearance of complex dynamic behaviors in HNN [38]. The hyperbolic tangent function has been used to perform non-linear activation functions in small HNNs, previously named because of its feasibility because of the execution of the circuit [39,40,41]. Recently, Bocheng Bao et al. [18] took into account the effect of the activation gradient of the activation functions of neurons on the dynamics of a three-neuron-based HNN. The activation gradient, also called the gain in scale parameter, somehow translates the response speed of the neuron. The authors have thus demonstrated the implication of the activation gradient, which has hitherto been overlooked in the appearance of complex phonemes in network dynamics. Furthermore, phenomena such as coexisting limit cycle oscillations, attractors coexisting in chaotic spirals, chaotic double scrolls, and scenarios of crisis could thus be exhibited by considering the effect of the activation gradient. But the authors considered that all the activation gradients associated with the activation functions of the different neurons of their HNN were identical and linked. This is closer to the ideal case than the real. For example, a pathology that can considerably affect the balance in the response of the network. Furthermore, in their model, they did not analyze the effect that consideration of activation gradients on the network could have with the presence of a stimulus. It will be interesting for us here to take into account all these considerations in the model proposed by [23]. This model has the advantage that it is small because it consists of two neurons and already involves a stimulus. Therefore, it is extremely essential to study the effects of different activation gradients on the dynamic behavior of this small non-autonomous HNN. In this article, a two-neuron-based non-autonomous HNN with a sinusoidal stimulus involving different activation gradients associated with the activation functions of neurons is presented, in which the dynamic behaviours are related to activation gradients and include limit cycles, chaotic attractors, coexisting of twin attractors, a period-doubling bifurcation, and coexisting bifurcation modes. To get an idea of the effects of the fractional order derivative on its dynamics, the fractional version of the HNN is also considered.

The quick development of communications through the internet and computers has encouraged the design of secure encryption and decryption algorithms for transferred data. In this area of research, chaos-based encryption has received great attention from the scientific community [42]. Yu and collaborators are the first team to demonstrate that chaotic sequences can be exploited to generate a measurement matrix with restricted isometry property [43]. From this work, many authors have been attracted to the areas of chaos and compressive sensing for image compression and encryption. Zhou and collaborators proposed an image encryption scheme using a 1-D chaotic map [44]. Performance analysis indicated a secure algorithm able to withstand various forms of attack, but the encryption time is not acceptable. Ahmad et al. have designed a simple chaos-based permutation substitution encryption method to secure digital images in a cloud-based smart city environment [45]. Experimental results indicated a simple and secure algorithm. Hadi and co-workers proposed and analyzed a new fractional-order hyperchaotic memristor oscillator [46]. The oscillator is then applied to increase the key space of the voice encryption scheme. The proposed algorithm is hardly robust to brute force attacks, but it is observed that the encryption process is solely based on a simple x-or operation. Consequently, any type of attack except brute force attack can tackle the algorithm. Additionally, most of these algorithms are usually limited by the bandwidth saturation of the transmission channel as the data is not compressed at the sending end. Based on the above mentioned drawbacks, it is clear that the field of compressive sensing is not yet mature. Our work will try to solve some of these problems. It is observed that more and more huge data can be compressed and encrypted before transmission at reduced bandwidth. One of the most prominent compression encryption techniques has been demonstrated to be compressive sensing [47]. Zhou and co-workers used hyperchaotic sequences jointly with 2-D compressive sensing to design and analyze a new compression encryption algorithm. Chai and collaborators combined chaotic sequences, cellular automata, and ECA to design a block-based compressive sensing algorithm to compress and encrypt images [48]. The results indicated efficient and secured methods against some current cryptanalysis techniques. However, the key space of these algorithms is limited due to the use of a low-dimensional chaotic system with reduced complexity. Let us recall that in the compressive sensing process, compression is usually exploited to reduce the size of a given dataset with the aim of easing further processing in general or reducing the encryption time in this particular case [49]. In addition, it is well known that the more the signal is sparse, the easier it is to reconstruct. Strong sparse methods, such as local 2-D sparsity in the space domain, nonlocal 3-D sparsity in the transform domain, or a combination of local 2-D sparsity in the space domain and nonlocal 3-D sparsity in the transform domain, have been used by some authors [50]. These authors achieved good reconstruction performances, but the corresponding algorithms usually consume time and power. To solve this difficulty in this paper, we will apply a discrete wavelet transform (DWT) to achieve the sparsity property. Moreover, in compressive sensing, we need to use a measurement matrix that follows the restricted isometry property (RIP) [51]. Many compressive sensing algorithms have been proposed utilizing a variety of measurement matrices, including Gaussian random matrix, partial orthogonal matrix, Hadamard matrix, and circular matrix. These matrices are usually of a large size and cannot be constructed on the receiver side. Therefore, a problem with bandwidth is usual in these cases during the transmission process. To solve this problem, in this work, the measurement matrix of the proposed compressive sensing algorithm is designed using the sequences of the proposed chaotic system. The main contributions of this work can be summarized as:

-

1.

Introduce a two-neuron model based on HNN with variable gradient under the influence of an external alternating current.

-

2.

Use nonlinear analysis tools to show that the introduced HNN is able to exhibit more complex dynamical behaviour compared to the previous model.

-

3.

Use the compressive sensing approach with chaotic sequences to compress and encrypt images. The plain color image is decomposed into R, G, and B components. The DWT is applied to each component to obtain the corresponding sparse components. Confusion keys are obtained from the proposed chaotic system to scramble each sparse component. The chaos-based measurement matrices are used to compress the confused sparse matrices corresponding to the R, G, and B components. Each component is quantified and a diffusion step is then applied to improve the randomness and, consequently, the information entropy.

-

4.

The measurement matrix of the proposed compressive sensing is designed using the sequences of the proposed chaotic system. This method is applied to reduce bandwidth saturation given that most of the methods in the literature use communication channels to send the huge measurement matrix to the receiving/decryption end, thereby inducing saturation of the bandwidth.

The next sections of this article will be organized as follows: In Sect. 2, the connection topology considering the activation gradients and the mathematical model is described. The dissipative and symmetrical nature of the network are detailed there, the equilibrium points are illustrated graphically, and the determination of the characteristic equation from the Jacobian matrix is also presented. In Sect. 3, the dynamic behaviors associated with an activation gradient under the presence of the stimulus are revealed numerically by bifurcation diagrams and dynamic evolution in the parameter space as well as phase portraits. In Sect. 4, analog analyses are performed in Pspice to verify compliance with digital simulations. Section 5 presents compressive sensing-based image compression and encryption. Finally, in Sect. 6, the conclusions of this work are summarized and future directions are provided.

2 Model description

2.1 Proposed mathematical model involving neuron activation gradients

The form of the HNN involving an activation gradient for the two neurons is given in Eq. (1):

Where \(x=\left[ {x_{1} ,x_{2} } \right] ^{T}\) is the state vector of neurons,\(tanh(\beta x)=\left[ {tanh(\beta _{1} x_{1} ),tanh(\beta _{2} x_{2} )} \right] ^{T}\) are the activation functions of hyperbolic tangent-type neurons with adjustable gradients and w represents a synaptic weight matrix \(2\times 2\) describing the strength of the connections between the two neurons of the network and I is the sinusoidal stimulus\(I=I_{m} sin(2\pi F\tau )\).

The new HNN model that we propose is given by Eq. (1). The corresponding connection topology between the two neurons is represented in Fig. 1a. Figure 1b shows the internal connections of one neuron with its neighboring neurons. These internal connections involve the external connections coming from neighboring neurons and the self-connection weights associated with the activation function of the neuron, taking into account the gradient as well as the derivative of the state variable of the neuron considered. In contrast to the model proposed by [18], in this architecture, each neuron has an activation gradient independent of those of the other neurons. This consideration makes this model more flexible and allows the consideration of different electrical activities of neurons. The model considered by [23] can be found at any time, simply by considering the activation gradients of Eq. (1) all identical and equal to one.

The mathematical model corresponding to the configuration of the synaptic weight matrix (2) is given by the following Eq. (3):

2.2 Dissipation and symmetry

The evaluation of the dissipative nature of a system is based on the computation of the rate of contraction of the volume of this system over time [52, 53]. The system (3) can be written in the following vector form:

The divergence of the vector field f is given by the expression (6):

The divergence f measures how the volume changes rapidly under the flow \(\phi _{t} \) of f. Let Vbe a volume of the phase space and \(V(t)=\phi _{t} \left( V \right) \), the image of V by \(\phi _{t} \left( V \right) \). Liouville’s theorem or the divergence theorem states that:

With

For all \(\beta _{j} x_{j} (j=1,2)\), \(0\le \left( {1-tanh^{2}(\beta _{j} x_{j} )} \right) \le 1\), as \(-1\le tanh(\beta _{j} x_{j} )\le 1\) with an appropriate choice of gains \(\beta _{1} \) and \(\beta _{2} \)in (8) the divergence \(\nabla .f\) can be negative, thus the system can be also dissipative. Besides, the volume elements contract after a unit of time, which reduces a volume \(V_{0} \)by one factor \(exp(\nabla .f.t)\). As a result, all orbits in the system are eventually limited to a specific subset with a zero volume and the asymptotic movement settles on an attractor [54]. The system (3) is invariant at the change of coordinate \(\left( {x_{1} ,x_{2} ,\tau } \right) \leftrightarrow \left( {-x_{1} ,-x_{2} ,-\tau } \right) \), it means that it is symmetric. This symmetry will be at the origin of the appearance of paired solutions in the network by a change of sign of the initial conditions [55].

2.3 Equilibrium points and stability related to gradient values

The equilibrium points of system (3) are obtained by making its left-hand member equal to zero at \(\tau =0\), this amounts to solving the system of equations given by expression (9):

After developments and arrangements of the system given in (9), the equilibrium points (trivial and non-trivial) are obtained by expression (10) referred to as the graphical approach [18, 23] applied using MATLAB:

Where \(n\in {\mathrm{N}}\) is the numbering index of equilibrium points \(x_{1e}^{n} \), which corresponds to the graphical intersections with the abscissa of the solution curve represented by Eq. (11):

Equations (10) and (11) depend on the activation gradients (\(\beta _{j=1,2} )\) of the neurons. The curves presented in Fig. 2 represent different electrical activities of the neurons, which are hyperbolic tangent functions. These curves plotted in Fig. 2 imply a standard gradient for \(\beta _{j} =1\) (whose standard gain curve), weak fo r \(\beta _{j} <1\) (whose flat sigmoid gain curve), and high for \(\beta _{j} >1\) (whose step-like gain curve) which corresponds to electrical activities of standard neurons, slow and fast, respectively [18, 56]. For standard gradients (\(\beta _{j=1,2} =1)\), the solution curves are given in Fig. 3a which is in accordance with those obtained by [18] forthree different values of the parameter k. From that figure, there is only one intersection point for \(k>2.1896\), five intersection points for \(k<2.1896\) and three intersections for a critical value \(k=2.1896\) corresponding respectively to one, five, and three equilibrium points. Now let’s consider \(k=2.385\) that corresponding to only one trivial equilibrium point. Figure 3b, c, show the influence of the activation gradients of the first (\(\beta _{1} )\) and second (\(\beta _{2} )\) neurons respectively on the solution curves. The results provided in Fig. 3b, c show that the equilibrium points change from only one to three, then to five when the values of the activation gradients of the first and second neurons are decreased and increased, respectively. These changes are undergone by the solution curves according to the values of the activation gradients of the first and second neurons, which are favorable for the transition from only one to three, then to five intersections of the solution curve with abscissa. From this observation, it is easy to choose which gradient to act on to obtain consequent electrical activity in the network.

The Jacobian matrix derived from Eq. (3), for equilibrium points \(P_{n=0,1,2,3,4} \) is given in (12):

The function curve described by Eq. 11 and their intersection points with respect to gradients \(\beta _{j=1,2} =1\) in (b); equilibrium points varying with respective gradients \(\beta _{1} \) and \(\beta _{2} \) in (c) and (d) respectively

The characteristic equation associated with (12), specified in Eq. (13), is obtained from the MATLAB software:

Where,

With,

The coefficients of the characteristic polynomial (14) are all non-zero. The equilibrium points \(P_{n=0,1,2,3,4} \) will be stable if only if for all the values \(a_{1} >0\) and \(a_{0} >0_{\, }\) according to the Routh-Hurwitz criterion. For the specific case of the trivial equilibrium point \(P_{0} =\left( {0,0} \right) \), we have the stability if the conditions (16) are respect if not \(P_{0} \) will unstable.

The activation gradients will be \(\beta _{2} =1\), \(\beta _{1} =tuneable\) and \(k=2.385\), \(I_{m} =2.5\), \(F=0.1\) in the rest of the document unless otherwise indicated. For this configuration, the trivial equilibrium point \(P_{0} \) will be unstable for \(\beta _{1} <20\) and stable for\(\beta _{1} >20\).

The computation of the corresponding eigenvalues is carried out in Table 1 for some values of the gradient \(\beta _{1} \). This table shows that the nature of stability of the trivial equilibrium varies with respect to \(\beta _{1} \) and is of type Hopf bifurcation point for \(\beta _{1} =20\) because the eigenvalues are pure imaginary.

3 Numerical results

The numerical integrations are based on the 4th order Runge-Kutta algorithm for the precision and speed of convergence with an integration time step \(\Delta \tau =0.005\). The plot of the bifurcation diagram consists of taking the local maxima of the variable during the variation of the activation gradient of the first neuron, performed on the integration time interval \(1500\le \tau \le 2000\) for a time step \(\Delta \tau =0.005\). The plot of the largest Lyapunov exponent diagram is done using the Wolf method in the time interval \(9000\le \tau \le 10,000\) with the time step \(\Delta \tau =0.005\) [57].

3.1 Bifurcation diagrams and phase portraits

The superimposition of the bifurcation diagrams in Fig. 4a shows the effect induced by the variation of the first neuron gradient through electrical activities on the dynamics of the model (3) in the range of \(0.6\le \beta _{1} \le 1.8\). It is easy to see in this diagram the evolution of the activation gradient (\(\beta _{1} )\), the appearance of the zones of periodic behaviours, followed by zones of chaotic behaviours. The Wolf algorithm [57] was used to generate the superimposed diagrams of the largest Lyapunov exponents in Fig. 4b, which confirms the observed bifurcations. The computational method used for those bifurcation diagrams is provided in Table 2. For different discrete values of the gradient, some phase portraits can be represented concerning the limit cycles and chaotic attractors in the \((x_{1} -x_{2} )\) plane.

An enlargement of two of the periodic windows of the bifurcation diagram of Fig. 4a is shown in Fig. 5 for the activation gradient belonging to the ranges of \(1.218\le \beta _{1} \le 1.29\) and \(1.231\le \beta _{1} \le 1.233\). Figure 5a, b depict the coexistence of four bifurcation diagrams that differ in a variety of ways. These diagrams argue for the existence of the phenomenon of multistability in these ranges. The methods and initial conditions for obtaining these diagrams are given in Table 2.

Enlargement of bifurcation diagram Fig. 4a, b exhibiting the coexistence of four different bifurcation diagrams

3.2 Coexistence of attractors and basins of attraction

The notion of multistability, or coexistence of multiple attractors, is a very important phenomenon in chaotic dynamical systems because of the flexibility it offers the system and its adapted applications in information engineering [19, 58, 59]. Multistability has caught the attention of many researchers in recent years [60,61,62,63,64], because it encompasses a diversity of many stable states in a system. The study of the coexistence of attractors in the HNNs would allow an understanding in depth of its dynamic effect on the aspects of the treatment of brain information and cognitive function [19]. We have highlighted through the HNN model given in Eq.(3) the coexistences of periodic and chaotic twin attractors, considering the activation gradient values \(\beta _{1} =1.2182\) and \(\beta _{1} =1.232_{\, }\) respectively, as presented in Figs. 6 and 7. The basins of attraction that enable us to obtain each of the coexisting attractors are shown in Fig. 8. As it can be seen on these attraction basins, four different colors enable us to further support the coexisting attractors presented previously.

These basins of attraction correspond to those of the self-excited attractors because they intercede with the open neighborhood of other equilibrium points. This is not the case for hidden attractors whose basins of attraction do not cross the open neighborhood of the other points of equilibrium. Let us note here that the coexistence of more than two attractors in the small models of HNNs with two neurons was raised with the consideration of the memristor as weight, which increases the complexity of the model. Therefore, the consideration of the gradient makes this small model of HNN with two neurons the simplest in the world, showing on the one hand, the coexistence of more than two attractors and, on the other hand, twin attractors. This demonstrates to our satisfaction that taking unbalanced gradients into account makes the model (3) in terms of behavior more complex and interesting than those already existing, considering gradients identical or not. As a result, the model (3), which allows for the coexistence of two twin attractors (see Figs. 6 and 7), can constitute a storage mechanism with a higher capacity than existing similar small models.

3.3 Gradients in the parameter space

Figure 9 shows the effect of the variation of two different activation gradients of the neurons on the dynamics of the model of HNN (3) exposed in the parameter space in the \(\left( {\beta _{1} ,\beta _{2} } \right) \) plane. We can note from Fig. 9a that the dynamics of the HNN (3) has two main distinct regions, one chaotic in red and the other periodic in blue, where the periodic region is dominant. The coded colors contained in Fig. 9b correspond to values of the maximum Lyapunov exponent \((\lambda _{\max } )\). These values are indicated on the graduations of the color bar of the corresponding figure. In this figure, we can appreciate the effects of the electrical activities in the network (3) via transitions of the zones of periodic behaviours (where \(\lambda _{\max } <0)\) towards areas of chaotic behaviours (where \(\lambda _{\max } >0)\) and vice versa during the variation of activation gradients.

4 Circuit design

In this section, the HNN (3) model will be studied in the form of a circuit or an analog calculator in PSpice. The analog calculator equivalent to the mathematical model (3) is set up essentially by electronic components. This rigorous and inexpensive strategy is employed because it has been used for experimental studies of some existing systems [19, 20] or to emulate other complex systems [1, 65,66,67]. Furthermore, such a theoretical model plays a significant role in practical chaos-based applications such as secure communications, the random number generator, and trajectory planning for autonomous robots [68, 69].

4.1 Analog circuit synthesis for neuron activation function involving gradients

The nonlinear terms involving the two activation gradients of each neuron involved in the model (3) are realized by two hyperbolic tangent functions with adjustable independent gains. The design of the HNN (3) model must necessarily have gone through the setting up of modules realizing the hyperbolic tangent functions with activation gradients (see Eq. 3). Based on the circuit diagram shown in [18], the modules allowing the synthesis of these functions are obtained (see Fig. 10a). This circuit uses a pair of differential transistors, two operational amplifiers, a voltage source, a current source, and eight resistors.

With

Where, \(V_{in} \) is the input voltage of the module, \(V_{T} =26mV\) is the thermal voltage of the transistors, \(R_{\beta _{j} } \) is a variable resistor to adjust the gain \(\beta _{j} (j=1,2)\), \(R=10k\,\Omega \) and \(R_{C} =1k\,\Omega \) fixed resistors. The values of the supply voltages and the constant currents of the modules are \(+Vcc=15V\) and \(I_{0} =1.1mA\) respectively [39]. The resistance values for adjusting the gradients are set in (19):

4.2 Analog circuit synthesis for the HNN-2 model involving gradients

Referring to [18, 23], the analog circuit of the HNN model (3) consists of two integrators involving two inverters in feedback loops and variable gain hyperbolic tangent functions, as shown in Fig. 10b.

Where \(X_{1} \) and \(X_{2} \) denote capacitor voltages \(C_{1} \) and\(C_{2} \) respectively; with \(C_{1} =C_{2} =C=100nF\) and \(R=10k\,\Omega \).

The system of Eq. (20) is equivalent to (3) considering the following equalities:

4.3 Validation by PSpice analog simulation

The implementation of the analog circuits of Fig. 10 in PSPICE has led to the results of Fig. 11. The coexistence of two twin attractors of different types is shown in this figure, in (a) a period-7 twin attractor and in (b) chaotic twin attractors whose initial conditions are \(V(X_{1} (0),X_{2} (0))=(0.1,{+0.1}/ {-0.4})\) and \((0.1,\pm 4.1)\) respectively. These coexisting attractors are similar to the ones obtained following the numerical approach in Fig. 7 [22, 70, 71]. All these results were obtained by considering Final step: 680ms; No-Print Delay: 500ms; Step Ceiling: 2\(\upmu \mathrm{s}\) and setting the variable resistor at \(R_{\beta _{1} } =532.9\Omega \).

Coexistence of two twin attractors obtained in PSPICE with \(R_{\beta _{1} } =532.9\Omega \): a, b shows period-7 twin attractors and chaotic twin attractors respectively. The initial-conditions for these attractors are \(V(X_{1} (0),X_{2} (0))=(0.1,{+0.1}/{-0.4})\) and \((0.1,\pm 4.1)\) respectively

5 Fractional-order of the HNN model

Caputo’s derivative is one of the commonly used methods for solving fractional differential equations, [72] which is defined as

Where \(\Gamma (\bullet )\) is a gamma function with the form of \(\Gamma \left( \alpha \right) =\int _0^{+\infty } {t^{\alpha -1}e^{-t}dt} \) and the simple form of Caputo’s derivative is expressed as follows when the positive integer is \(n=1\) [73]

Using Caputo’s derivative, the fractional-order model of HNN introduced in Eq. (3) is given in Eq.(24)

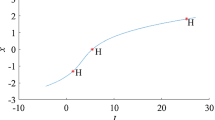

When \(\beta _{2} =1\), \(\beta _{1} =1 \quad k=2.385\), \(I_{m} =2.5\), \(F=0.1\), and \(q=tuneable\) the effect of the variation of the order is explore on the dynamics of the considered HNN as presented in Fig. 12

In that figure, for the considered set of parameters, the HNN model displays a symmetric chaotic attractor when \(q=1\). When q is reduced, the HNN model moves from a symmetric chaotic attractor to an asymmetric chaotic attractor. When that control parameter is further reduced, the HNN model moves from an asymmetric chaotic to a periodic attractor. This transition is further justified by the bifurcation diagram of Fig. 13a.

When Comparing Fig. 13b with Fig. 4a, the modification of the order of the HNN \(\left( {q=0.998} \right) \) allows to change the transition toward chaos observed in the considered model when the order was considered at \(q=1\).

6 Application to compressive sensing based image encryption

6.1 Compressive sensing

Compressive sensing is a prominent sampling-reconstruction technique that achieves compression during the sampling stage. This technique is used when the data meets the sparsity property in some domains. Generally, a given input signal is first represented on an orthogonal basis to meet the sparsity property [74]. Then a measurement matrix is used to sample only the components that best define the input signal. A reconstruction technique is used to recover the original signal.

Let us consider an orthogonal basis \(\psi =[\psi _{1} ,\psi _{2} ...\psi _{n} ]\) where a signal x can be represented as:

Where \(\alpha =\psi ^{T}x\) is the representation of x in the orthogonal basis\(\psi \). If \(\alpha \) contain \(k\left( {k\le n} \right) \) nonzero coefficients then \(\alpha \) is said to be k-sparse. For a sparse signal, the compressive sensing approach is directly applied to the signal. But if the signal is not sparse, a transformation can be applied to achieve the sparsity property. Let us recall that compression is usually used to reduce the size of a given dataset to ease further processing in general or reduce the encryption time in this particular case [49]. In addition, it is well known that, the more the signal is sparse, the easier its reconstruction. Strong sparse methods, such as local 2-D sparsity in the space domain, non-local 3-D sparsity in the transform domain, or a combination of local 2-D sparsity in the space domain and non-local 3-D sparsity in the transform domain, have been used by some authors [50]. These authors achieved good reconstruction performances, but the corresponding algorithms usually consume time and power. In this paper, we will apply the discrete wavelet transform (DWT) to achieve the sparsity property. When this sparsity property is satisfied a vector y of size \(m\times 1\) is linearly obtained from x as follows:

Where \(\Omega \) is the sensor matrix defined as the product of the orthogonal basis \(\psi \) with the measurement matrix \(\Phi \) (of size \(m\times n)\). Note that to recover the original x the sensor matrix \(\Phi \) must satisfy the restricted isometry property as defined in [75]. The main problem of compressive sensing is to recover x from y. to achieve this goal the following optimization problem should be solved:

To solve the problem defined in Eq. (27) reconstruction algorithms are usual including matching pursuit (MP) [76], orthogonal matching pursuit (OMP) [76], subspace pursuit (SP), smooth l\(_{0}\) algorithm (SL\(_{0})\) [77], Newton smoothed l\(_{0}\) norm (NSL\(_{0})\) [77]. In this work, we selected orthogonal matching pursuit (OMP) to recover the signal as it is one of the most efficient reconstruction methods. The measurement matrix is designed using a circular matrix based on the chaotic sequence. This technique is used to avoid bandwidth saturation with the measurement matrix and, consequently, to decrease the overall computational complexity.

6.2 Compression-encryption method

In this section, we deal with the encryption method based on compressive sensing. The plain color image is decomposed into red (R), green (G), and blue (B) components. The discrete wavelet transform is applied to each component to obtain the corresponding sparse components. Confusion keys are obtained from the above proposed chaotic system to scramble each sparse component. The measurement matrices obtained from the chaotic sequence are used to compress the confused sparse matrices corresponding to the R, G, and B components. Each component is quantified and a diffusion step is finally applied to improve the randomness and, consequently, the information entropy. The following steps describe the whole compression encryption scheme. Note that as the proposed scheme is symmetric, the decryption process is the reverse of the encryption scheme.

Step 1: Read the plain color image of size (\(h\times n)\) and decompose to various R, G and B components. Then apply DWT to these components to achieve the sparse matrices R\(_{S}\), G\(_{S,}\) and B\(_{S}\).

Step 2: Using initial seed and parameters as indicated by Fig. 7b solve the system and store chaotic sequences as \(X_{1i} \) and \(X_{2i} \) each with \(h\times n\) elements then permute the sparse matrices as follow:

-

(a)

Sort the elements of sequences \(X_{1i} \) and \(X_{2i} \) in ascending order respectively as \(S_{1i} \,\,=\,\,sort(X_{1i} )\) and \(S_{2i} \,\,=\,\,sort(X_{2i} )\).

-

(b)

Obtain the indexes of each element of sequence \(S_{1i} \) in sequence \(S_{2i} \) as \(I_{i} =index(S_{1i} \,\,in\,\,S_{2i} )\).

-

(c)

Apply swap function to scramble the positions of pixels in the sparse matrices R\(_{S}\), G\(_{S,}\) and B\(_{S}\) as:

$$\begin{aligned} R_{p}= & {} swap(R_{s} ,I) \\ G_{p}= & {} swap(G_{s} ,I) \\ B_{p}= & {} swap(B_{s} ,I) \end{aligned}$$Step 3: Using initial seed and parameters as indicated by Fig. 7b solve the system and store chaotic sequences as \(X_{1i} \) and \(X_{2i} \) each with \(m\times n\) elements where \(m=floor(CR\times h)\) and CR is the compression ratio. Then use these sequences to design the measurement matrix as:

-

(a)

Combine the chaotic sequences \(X_{1i} \) and \(X_{2i} \) to achieve new random sequence \(Y_{i} \) defined as:

$$\begin{aligned} Y_{i} =\frac{X_{1i} +X_{2i} }{2} \end{aligned}$$(28) -

(b)

Apply equidistant sampling on the random sequence \(Y_{i} \) to achieve new random sequence \(Y_{i}^{\,'} \) as:

$$\begin{aligned} Y_{i}^{\,'} =1-2Y_{2+is} \end{aligned}$$(29)Where s is the sampling interval and \(i=0,1,2,.......m\times n\).

-

(c)

The final measurement matrix of size \(m\times n\) is obtained by quantifying the sequence \(Y_{i}^{\,'} \) as:

$$\begin{aligned} \Phi =\sqrt{\frac{2}{h}} \left( {{\begin{array}{*{20}c} {Y_{11}^{\,'} } &{} \cdots &{} {Y_{1n}^{\,'} } \\ \vdots &{} \ddots &{} \vdots \\ {Y_{m1}^{\,'} } &{} \cdots &{} {Y_{mn}^{\,'} } \\ \end{array} }} \right) \end{aligned}$$(30)Where \(\sqrt{\frac{2}{h}} \) is used for normalization.

-

(a)

Step 4: Measure each permuted red (\(R_{p} )\), green (\(G_{p} )\) and blue (\(B_{p} )\) components using the measurement matrix to obtain the measured components \(R_{m} ,\,G_{m} \) and \(B_{m} \) as:

Step 5: Apply quantization on each measured component \(R_{m} ,\,G_{m} \) and \(B_{m} \) as:

Step 6: After quantization the obtained matrices present poor entropies values. To solve the problem the matrices \(R_{m} ,\,G_{m} \) and \(B_{m}\) are confused as:

Step 7: Combine \(R_{c} \), \(G_{c} \) and \(B_{c} \) to get the final cipher image.

6.3 Results and analyses

Four different images are employed to evaluate the proposed compression encryption technique. The working environment is composed of 64 bits laptop equipped with Intel Core\(^{\mathrm{TM}}\) i7-3630QM, 16GB RAM, a 2.6GHz CPU, and provided with MATLAB R2016b. The chaotic system is solved with parameters and initial conditions as in Fig. 7b. The results are presented in Fig. 15.

6.3.1 Performances of compression

In order to evaluate the performance of compression and reconstruction in the compression-encryption scheme under consideration, four different images are considered and the tests are conducted with various compression ratios (CR\(=\)0.2, CR\(=\)0.2, CR\(=\)0.3, CR\(=\)0.4, CR\(=\)0.5, CR\(=\)0.6, CR\(=\)0.7, CR\(=\)0.8, CR\(=\)0.9). Then the Peak Signal-to-Noise Ratio (PSNR) values between the plain image and the reconstructed image are computed in decibels as indicated by Eq. 34.

where P and D represent the pixel values of the plain and the decrypted images, respectively. The results are presented in Table 3 and Fig. 16. From these results, the PSNR values are above 30 dB showing high reconstruction performances. The PSNR value obtained in this work in the particular case of Image 1 for CR\(=\)0.5 is compared to some recent works in the literature (Table 4). The common base of comparison with the selected references is the utilization of chaos/ hyperchaos based pseudorandom sequences in the process. But the particularity of our case is that these sequences are from the dynamics of an adjustable gradient HNN. From this comparison, our algorithm is better than those of Refs [75, 78,79,80] in terms of reconstruction of the compressed image.

6.3.2 Histogram

A histogram test is applied to evaluate the distribution of each pixel in the image [70, 81]. Usually, the histogram of a given plain image is randomly distributed, whereas the histogram of the corresponding cipher is uniformly distributed. To evaluate the robustness of the proposed encryption against histogram-based attacks, one image is selected among the test data (Image 1). The histogram of the said image is computed and represented in Fig. 17 with the corresponding cipher in the red (R), green (G), and blue (B) planes. It is obvious that the uniformly distributed histogram belongs to the cipher image, and consequently, the proposed technique can resist attacks based on histogram analysis. Let us mention that this test can also be conducted for the rest of the test data (Image 2, Image 3, and image 4).

6.3.3 Correlation coefficients

The correlation coefficient of adjacent pixels\(C_{mn} \) is one of the major tools used to evaluate the distribution of a given pixel with its neighbouring pixels in horizontal, vertical, and/or diagonal directions [71, 82]. Usually, the values of the correlation coefficients of adjacent pixels for a given plain image are close to unity in all directions. A good encryption algorithm should produce a cipher image with correlation coefficients of adjacent pixels close to zero in all directions. To evaluate the correlation coefficients\(C_{mn} \) in this work, the following formula is exploited:

Here, \(m_{x} \), \(n_{x} \) refers to the values of adjacent pixels and A refers to the whole amount of nearby pixel pairs. The distribution of correlation coefficients \(C_{mn} \) for red (R) components is plotted in Fig. 18. The adjacent pixels are linearly related in the horizontal, vertical, and diagonal directions for plain components, showing high correlation between adjacent pixels. Whereas random distributions are observed in the case of corresponding ciphers, indicating that no correlation exists between adjacent pixels. The same results have been observed in the green (G) and blue (B) components. Consequently, the proposed algorithm is able to resist attacks based on histogram analysis.

6.3.4 Information entropy

Information entropy is usually applied to investigate the level of randomness in an image [83, 84]. The global entropy of a given image Y is computed as:

\(p(y_{a} )\) is the probability of \(y_{a} \) and b refers the pixel bit level. Local entropy provides more sensitive values of the entropy as the pixels are considered individually. It is well known that a good encryption algorithm should produce ciphers with entropy values near 8 for 8-bit images. Table 5 presents the results of the global and local entropy values of the considered test images and the corresponding cipher. From these results, it is obvious that the proposed algorithm is robust to attacks based on entropy analysis.

6.3.5 NPCR and UACI

The encryption algorithm can also be evaluated by using NPCR (Number of Pixels Change Rate) and the UACI (Unified Average Changing Intensity) computed respectively by [85, 86]:

Where P and C are two encrypted data achieved from encrypted data different in just on pixel. r and s are the size of the data image. For 8-bit images the threshold values of NPCR and UACI are defined given by:

Table 6 provides the results of NPCR and UACI for four test images. It is obvious that the proposed algorithm can resist any differential attack given the presented values are above the threshold values.

6.3.6 Key space analysis

The key space of an encryption/decryption scheme is the set of different keys that can be exploited to encrypt and decrypt the information [87]. In order to resist brute force attacks a well-designed algorithm is required to have a very large key space. The threshold value is \(2^{100}\approx 10^{30}\). In this work the considered chaotic oscillator can be rewritten as:

where the parameters and initial states \(\beta _{1} ,\beta _{2} ,I_{m},k,a,b,c,\tilde{{x}}_{1} ,\tilde{{x}}_{2} \) are the main keys of the algorithm. If we consider \(10^{16}\) as the precision of calculations the total key space of the proposed scheme is computed as \(10^{16\times 9}=10^{144}>10^{30}\) consequently the proposed scheme is resistive to any form of brute force attacks.

6.3.7 Complexity and comparative analyses

One of the most important metrics for analyzing an algorithm is to assess its complexity [87]. Some current metrics of this evaluation include the running time (t) or the Encryption Throughput (ET) and the Number of Cycles (NC). The encryption time (t) is computed using the “tic-toc” MATLAB function, while ET and NC are computed as:

A well-designed algorithm is required to consume less encryption time, less NC, and high ET to ease the implementation. The results of the computations are summarized in Table 7 using test images of size 256*256*3. The working environment is characterized by a 2.4GHz processor, Intel ®core TM i7-3630QM, 16 GB of RAM, and MATLAB R2016b software. It is also important to report the comparative analysis of the proposed methods with some recent literature. The comparison tools include but are not limited to complexity in terms of encryption and decryption time, NPCR, UACI, entropy, and key sequence. This comparative analysis shows that the proposed cryptosystem has high-security issues and is competitive with the fastest chaos-based cryptosystems. This is mainly due to pseudorandom sequences based on the dynamics of an adjustable gradient HNN and the utilization of lightweight techniques like DWT to sparse our input images.

7 Conclusion

A novel compressive sensing image encryption scheme using the dynamics of adjustable gradients HNN was considered in this paper. Firstly, the effects of the activation gradients of neurons are explored. The graphical representation of the solutions showed the influence of first and second neuron activation gradients with respect values. The analysis of the stability reveals that it depends on initial conditions that are associated with the values of activation gradients. Using nonlinear dynamics analysis tools with MATLAB, rich and varied dynamics that depend on the activation gradient of the first neuron have been highlighted. Phenomena showing the complexity of the model, such as the coexistence of two twin attractors, are exhibited, and the representations of the different basins of attraction have shown that they are all self-excited. For engineering applications, it is implemented in PSpice and yields results similar to those obtained from the numerical approach [39, 66,67,68,69, 91, 92]. From all of this, it is clear that an imbalance of neuron gradients that would lead to electrical activity in an HNN network would increase its complexity, give rise to interesting and different behaviors from those known. Finally, the sequences of the chaotic system are applied to design a strong measurement matrix for compressive sensing-based image encryption. In the same line, the sequences are employed in the confusion and diffusion steps. Security analyses indicated a good encryption algorithm.

Data availability

The data used in this research work are available from the authors by reasonably request.

References

Z. Njitacke, J. Kengne, H. Fotsin, A plethora of behaviors in a memristor based Hopfield neural networks (HNNs). International Journal of Dynamics and Control 7(1), 36–52 (2019)

Z. Njitacke et al., Uncertain destination dynamics of a novel memristive 4D autonomous system. Chaos, Solitons & Fractals 107, 177–185 (2018)

Z. Dan, W. zhi Huang, Y. Huang, Chaos and rigorous verification of horseshoes in a class of Hopfield neural networks. Neural Computing and Applications 19(1), 159–166 (2010)

V.-T. Pham et al., Hidden hyperchaotic attractor in a novel simple memristive neural network. Optoelectronics and Advanced Materials, Rapid Communications 8(11–12), 1157–1163 (2014)

V.T. Pham et al., A novel memristive neural network with hidden attractors and its circuitry implementation. Science China Technological Sciences 59(3), 358–363 (2016)

Z.T. Njitacke et al., Extremely rich dynamics from hyperchaotic Hopfield neural network: Hysteretic dynamics, parallel bifurcation branches, coexistence of multiple stable states and its analog circuit implementation. The European Physical Journal Special Topics 229(5), 1133–1154 (2020)

Z. Njitacke et al., Dynamical analysis of a novel 4-neurons based Hopfield neural network: Emergences of antimonotonicity and coexistence of multiple stable states. International Journal of Dynamics and Control 7(3), 823–841 (2019)

Z.T. Njitacke, J. Kengne, H.B. Fotsin, Coexistence of multiple stable states and bursting oscillations in a 4D Hopfield neural network. Circuits, Systems, and Signal Processing 39(6), 3424–3444 (2020)

Z. Njitacke, J. Kengne, Complex dynamics of a 4D Hopfield neural networks (HNNs) with a nonlinear synaptic weight: Coexistence of multiple attractors and remerging Feigenbaum trees. AEU-International Journal of Electronics and Communications 93, 242–252 (2018)

M.E. Cimen et al., Modelling of a Chaotic System Motion in Video with Artiıficial Neural Networks. Chaos Theory and Applications 1(1), 38–50 (2019)

K. Rajagopal et al., Dynamical analysis, sliding mode synchronization of a fractional-order memristor Hopfield neural network with parameter uncertainties and its non-fractional-order FPGA implementation. The European Physical Journal Special Topics 228(7), 2065–2080 (2019)

Y. Adiyaman et al., Dynamical Analysis, Electronic Circuit Design and Control Application of a Different Chaotic System. Chaos Theory and Applications 2(1), 8–14

A.S.K. Tsafack et al, Chaos control using self-feedback delay controller and electronic implementation in IFOC of 3-phase induction motor. Chaos Theory and Applications. 2(1), 40-48

K.G. Honoré et al., Theoretical and experimental investigations of a jerk circuit with two parallel diodes. Chaos Theory and Applications. 2(2), 52-57

Q. Lai et al., Design and implementation of a new memristive chaotic system with application in touchless fingerprint encryption. Chinese Journal of Physics 67, 615–630 (2020)

G.D. Leutcho et al., A modified simple chaotic hyperjerk circuit: coexisting bubbles of bifurcation and mixed-mode bursting oscillations. Zeitschrift für Naturforschung A 75(6), 593–607 (2020)

Z.T. Njitacke, J. Kengne, Nonlinear dynamics of three-neurons-based Hopfield neural networks (HNNs): Remerging Feigenbaum trees, coexisting bifurcations and multiple attractors. Journal of Circuits, Systems and Computers 28(07), 1950121 (2019)

B. Bao et al., Dynamical effects of neuron activation gradient on Hopfield neural network: Numerical analyses and hardware experiments. 29(04), 1930010 (2019)

B. Bao et al., Numerical analyses and experimental validations of coexisting multiple attractors in Hopfield neural network. Nonlinear Dynamics 90(4), 2359–2369 (2017)

B. Bao et al., Coexisting behaviors of asymmetric attractors in hyperbolic-type memristor based Hopfield neural network. Frontiers in Computational Neuroscience 11, 81 (2017)

Y. Zheng, L. Bao, Slow-fast dynamics of tri-neuron Hopfield neural network with two timescales. Communications in Nonlinear Science and Numerical Simulation 19(5), 1591–1599 (2014)

S. DoublaI saac, Z.T. Njitacke, J. Kengne, Effects of Low and High Neuron Activation Gradients on the Dynamics of a Simple 3D Hopfield Neural Network. International Journal of Bifurcation and Chaos 30(8), 2050159 (2020)

Q. Xu et al., Numerical analyses and breadboard experiments of twin attractors in two-neuron-based non-autonomous Hopfield neural network. The European Physical Journal Special Topics 227(6), 777–786 (2018)

Q. Xu et al., Two-neuron-based non-autonomous memristive Hopfield neural network: numerical analyses and hardware experiments. AEU-International Journal of Electronics and Communications 96, 66–74 (2018)

C. Chen et al., Coexisting multi-stable patterns in memristor synapse-coupled Hopfield neural network with two neurons. Nonlinear Dynamics 95(4), 3385–3399 (2019)

C. Chen et al., Non-ideal memristor synapse-coupled bi-neuron Hopfield neural network: Numerical simulations and breadboard experiments. AEU-International Journal of Electronics and Communications 111, 152894 (2019)

J. Yang et al., A novel memristive Hopfield neural network with application in associative memory. Neurocomputing 227, 142–148 (2017)

J.J. Hopfield, Pattern recognition computation using action potential timing for stimulus representation. Nature 376(6535), 33–36 (1995)

L. Chua, Everything You Wish to Know About Memristors but Are Afraid to Ask, in Handbook of Memristor Networks. (Springer, 2019), pp. 89–157

A. Serb et al., Unsupervised learning in probabilistic neural networks with multi-state metal-oxide memristive synapses. Nature Communications 7(1), 1–9 (2016)

Z. Wang et al., Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nature Materials 16(1), 101–108 (2017)

L. Chua, V. Sbitnev, H. Kim, Neurons are poised near the edge of chaos. International Journal of Bifurcation and Chaos 22(04), 1250098 (2012)

Y. Zhang et al., Memristive model for synaptic circuits. IEEE Transactions on Circuits and Systems II: Express Briefs 64(6), 767–771 (2016)

A. Yousefpour, H. Jahanshahi, D. Gan, Fuzzy integral sliding mode technique for synchronization of memristive neural networks, in Mem-Elements for Neuromorphic Circuits with Artificial Intelligence Applications. (Elsevier, 2021), pp. 485–500

E. Kaslik, S. Sivasundaram, Nonlinear dynamics and chaos in fractional-order neural networks. Neural Networks 32, 245–256 (2012)

A. Yousefpour et al., Robust adaptive control of fractional-order memristive neural networks, in Mem-elements for Neuromorphic Circuits with Artificial Intelligence Applications. (Elsevier, 2021), pp. 501–515

M. Roohi, C. Zhang, Y. Chen, Adaptive model-free synchronization of different fractional-order neural networks with an application in cryptography. Nonlinear Dynamics 100(4), 3979–4001 (2020)

A.C. Mathias, P.C. Rech, Hopfield neural network: the hyperbolic tangent and the piecewise-linear activation functions. Neural Networks 34, 42–45 (2012)

S. Duan, X. Liao, An electronic implementation for Liao’s chaotic delayed neuron model with non-monotonous activation function. Physics Letters A 369(1–2), 37–43 (2007)

T. Banerjee, D. Biswas, Theory and experiment of a first-order chaotic delay dynamical system. International Journal of Bifurcation and Chaos 23(06), 1330020 (2013)

V.T. Pham et al., Dynamics and circuit of a chaotic system with a curve of equilibrium points. International Journal of Electronics 105(3), 385–397 (2018)

N. Tsafack et al., A new chaotic map with dynamic analysis and encryption application in internet of health things. IEEE Access 8, 137731–137744 (2020)

L. Yu et al., Compressive sensing with chaotic sequence. IEEE Signal Processing Letters 17(8), 731–734 (2010)

Y. Zhou et al., Cascade chaotic system with applications. IEEE transactions on cybernetics 45(9), 2001–2012 (2014)

A. Alanezi et al., Securing digital images through simple permutation-substitution mechanism in cloud-based smart city environment. Security and Communication Networks, 2021. 2021

H. Jahanshahi et al., A new fractional-order hyperchaotic memristor oscillator: Dynamic analysis, robust adaptive synchronization, and its application to voice encryption. Applied Math and Computation 383, 125310 (2020)

X. Chai et al., Color image compression and encryption scheme based on compressive sensing and double random encryption strategy. Signal Processing 176, 107684 (2020)

X. Chai et al., An efficient chaos-based image compression and encryption scheme using block compressive sensing and elementary cellular automata. Neural Computing and Applications 32(9), 4961–4988 (2020)

M. Qiao et al., Deep learning for video compressive sensing. APL Photonics 5(3), 030801 (2020)

M. Qiao et al., Snapshot Interferometric 3D Imaging by Compressive Sensing and Deep Learning. arXiv preprint arXiv:2004.02633, (2020)

H.-P. Yin et al., Survey of compressed sensing. Control and Decision 28(7), 1441–1445 (2013)

R.C. Hilborn, Chaos and nonlinear dynamics: an introduction for scientists and engineers. (2000): Oxford University Press on Demand

A.H. Nayfeh, B. Balachandran, Applied nonlinear dynamics: analytical, computational, and experimental methods (John Wiley & Sons, 2008)

S. Dadras, H.R. Momeni, A novel three-dimensional autonomous chaotic system generating two, three and four-scroll attractors. Physics Letters A 373(27), 3637–3642 (2009)

S. Strogatz, Nonlinear Dynamics and Chaos (AddisonWesley. Reading, MA, 1994)

J.J. Hopfield, Neurons with graded response have collective computational properties like those of two-state neurons. Proceedings of National Academy of Sciences 81(7), 3088–3092 (1984)

A. Wolf et al., Determining Lyapunov exponents from a time series. Physica D: Nonlinear Phenomena 16(3), 285–317 (1985)

Z. NJITACKE et al., Multistability and its Annihilation in the Chua’s Oscillator with Piecewise-Linear Nonlinearity. Chaos Theory and Applications. 2(2): 77-89

Z. T. Njitacke, et al., Heterogeneous Multistability in a Novel System with Purely Nonlinear Terms. International Journal of Electronics, (2020)

A.N. Negou, J. Kengne, Dynamic analysis of a unique jerk system with a smoothly adjustable symmetry and nonlinearity: Reversals of period doubling, offset boosting and coexisting bifurcations. AEU-International Journal of Electronics and Communications 90, 1–19 (2018)

J. Kengne, S. Njikam, V.F. Signing, A plethora of coexisting strange attractors in a simple jerk system with hyperbolic tangent nonlinearity. Chaos, Solitons & Fractals 106, 201–213 (2018)

G. Leutcho, J. Kengne, L.K. Kengne, Dynamical analysis of a novel autonomous 4-D hyperjerk circuit with hyperbolic sine nonlinearity: chaos, antimonotonicity and a plethora of coexisting attractors. Chaos, Solitons & Fractals 107, 67–87 (2018)

R.M. Tagne, J. Kengne, A.N. Negou, Multistability and chaotic dynamics of a simple Jerk system with a smoothly tuneable symmetry and nonlinearity. International Journal of Dynamics and Control 7(2), 476–495 (2019)

J. Kengne et al., Effects of symmetric and asymmetric nonlinearity on the dynamics of a novel chaotic jerk circuit: Coexisting multiple attractors, period doubling reversals, crisis, and offset boosting. Chaos, Solitons & Fractals 121, 63–84 (2019)

A. Babloyantz, C. Lourenço, Brain chaos and computation. International Journal of Neural Systems 7(04), 461–471 (1996)

L. Fortuna, M. Frasca, A. Rizzo, Chaotic pulse position modulation to improve the efficiency of sonar sensors. IEEE Transactions on Instrumentation and Measurement 52(5), 1809–1814 (2003)

R.L. Filali, M. Benrejeb, P. Borne, On observer-based secure communication design using discrete-time hyperchaotic systems. Communications in Nonlinear Science and Numerical Simulation 19(5), 1424–1432 (2014)

C.K. Volos, I.M. Kyprianidis, I.N. Stouboulos, Image encryption process based on chaotic synchronization phenomena. Signal Processing 93(5), 1328–1340 (2013)

R. Modeste Nguimdo, R. Tchitnga, P. Woafo, Dynamics of coupled simplest chaotic two-component electronic circuits and its potential application to random bit generation. Chaos: An Interdisciplinary Journal of Nonlinear Science 23(4), 043122 (2013)

T. Nestor et al., A multidimensional hyperjerk oscillator: dynamics analysis, analogue and embedded systems implementation, and its application as a cryptosystem. Sensors 20(1), 83 (2020)

N. Tsafack et al., Design and implementation of a simple dynamical 4-D chaotic circuit with applications in image encryption. Information Sciences 515, 191–217 (2020)

M. Caputo, Linear models of dissipation whose Q is almost frequency independent. Annales Geophysicae 19(4), 383–393 (1966)

M.J. Wang et al., Bursting, dynamics, and circuit implementation of a new fractional-order chaotic system with coexisting hidden attractors. Journal of Computational and Nonlinear Dynamics 14(6), 071002 (2019)

R. Heckel, M. Soltanolkotabi. Compressive sensing with un-trained neural networks: Gradient descent finds a smooth approximation. in International Conference on Machine Learning. (2020). PMLR

N. Zhou et al., Image compression-encryption scheme based on hyper-chaotic system and 2D compressive sensing. Optics & Laser Technology 82, 121–133 (2016)

E. Liu, V.N. Temlyakov, The orthogonal super greedy algorithm and applications in compressed sensing. IEEE Transactions on Information Theory 58(4), 2040–2047 (2011)

H. Mohimani, M. Babaie-Zadeh, C. Jutten, A fast approach for overcomplete sparse decomposition based on smoothed \$\(\backslash ell^{0}\)\$ norm. IEEE Transactions on Signal Processing 57(1), 289–301 (2008)

Z. Gan et al., An effective image compression-encryption scheme based on compressive sensing (CS) and game of life (GOL). Neural Computing and Applications 32(13), 14113–14141 (2020)

T. Chen et al., Image encryption and compression based on kronecker compressed sensing and elementary cellular automata scrambling. Optics & Laser Technology 84, 118–133 (2016)

G. Hu et al., An image coding scheme using parallel compressive sensing for simultaneous compression-encryption applications. Journal of Visual Communication and Image Representation 44, 116–127 (2017)

L. Yuan, S. Zheng, Z. Alam, Dynamics analysis and cryptographic application of fractional logistic map. Nonlinear Dynamics 96(1), 615–636 (2019)

Z. T. Njitacke et al., Window of multistability and its control in a simple 3D Hopfield neural network: application to biomedical image encryption. Neural Computing and Applications, p. 1-20 (2020)

B. Abd-El-Atty et al., Optical image encryption based on quantum walks. Optics and Lasers in Engineering 138, 106403 (2021)

L. Chen et al., Chaos in fractional-order discrete neural networks with application to image encryption. Neural Networks 125, 174–184 (2020)

A.A. Abd El-Latif et al., Quantum-inspired blockchain-based cybersecurity: Securing smart edge utilities in iot-based smart cities. Information Processing & Management 58(4), 102549 (2021)

H.S. Alhadawi et al., A novel method of S-box design based on discrete chaotic maps and cuckoo search algorithm. Multimedia Tools and Applications, 1-18 (2020)

H. Karmouni, M. Sayyouri, H. Qjidaa, A novel image encryption method based on fractional discrete Meixner moments. Optics and Lasers in Engineering 137, 106346 (2021)

A.-V. Diaconu, Circular inter-intra pixels bit-level permutation and chaos-based image encryption. Information Sciences 355, 314–327 (2016)

K. Jithin, S. Sankar, Colour image encryption algorithm combining Arnold map, DNA sequence operation, and a Mandelbrot set. Journal of Information Security and Applications 50, 102428 (2020)

L. Liu, Q. Zhang, X. Wei, A RGB image encryption algorithm based on DNA encoding and chaos map. Computers & Electrical Engineering 38(5), 1240–1248 (2012)

S. Duan et al., Small-world Hopfield neural networks with weight salience priority and memristor synapses for digit recognition. Neural Computing and Applications 27(4), 837–844 (2016)

C. Lakshmi et al., Hopfield attractor-trusted neural network: an attack-resistant image encryption. Neural Computing and Applications 32(15), 11477–11489 (2020)

Acknowledgements

This work is partially funded by the Polish National Science Center under the Grant 14 No.2017/27/B/ST8/01330.

Author information

Authors and Affiliations

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Isaac, S.D., Njitacke, Z.T., Tsafack, N. et al. Novel compressive sensing image encryption using the dynamics of an adjustable gradient Hopfield neural network. Eur. Phys. J. Spec. Top. 231, 1995–2016 (2022). https://doi.org/10.1140/epjs/s11734-022-00472-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1140/epjs/s11734-022-00472-2