Abstract

We propose a comprehensive framework able to address both the predictability of the first and of the second kind for high-dimensional chaotic models. For this purpose, we analyse the properties of a newly introduced multistable climate toy model constructed by coupling the Lorenz ’96 model with a zero-dimensional energy balance model. First, the attractors of the system are identified with Monte Carlo Basin Bifurcation Analysis. Additionally, we are able to detect the Melancholia state separating the two attractors. Then, Neural Ordinary Differential Equations are applied to predict the future state of the system in both of the identified attractors.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Understanding the qualitative behaviour and dynamics of high-dimensional chaotic models of complex systems is a challenging task. The Earth’s climate [1,2,3], but also power grids [4], the human brain [5, 6], and perception [7] or gene expression networks [8] all exhibit, in certain range of the system’s parameters, multiple attractors with different basins of attractions. Energy balance climate models exhibit the well known hysteresis behaviour with respect to the solar radiation between a cold snowball earth state and a warmer state corresponding to the present-day climate, with discontinuous transitions taking place at the lower and upper boundary of the region of bistability [1, 2, 9,10,11,12,13]. Recently, it has become apparent that the climate system might indeed feature more than two competing states, associated with a complex partitioning of the phase space in competing basins of attraction [14,15,16,17]. Indeed, multistability also almost always gives rise to a potential abrupt change of the system when a bifurcation point is—adiabatically—reached and one state loses it stability. In the context of geosciences, the crossing of a bifurcation point is usually referred to as tipping point [18]. Studying them is one of the crucial challenges of understanding and hopefully mitigating climate change [19]. The notion of tipping point has been recently widened to accmomodate transitions that are caused specifically by the presence of a non-vanishing rate of change of the parameter of interest or by noise [20, 21]. Far from the tipping point, when the system is a regime of multistability, transitions between the competing states is not possible in the case of autonomous dynamics, as the asymptotic state is determined by the initial condition, depending on which basin of attraction it belongs to. Initial conditions located on the basin boundaries—which have vanishing Lebesgue measure—are, instead, attracted to the edge states, which are saddles located on such basin boundaries [12, 22, 23]. Such saddles determine the global instabilities of the system and, additionally, if the system is, under fairly general conditions, forced with Gaussian noise, they are the gateways for noise-induced transitions between competing metastable states [3, 13, 17, 24].

To characterise multistable systems, one needs to recover information on each competing attractor, so that it is possible to dynamically distinguish them. Indeed, one should be able to compute dynamical properties as, e.g., different Lyapunov exponents or the basin stability [25]. Any approach aimed at performing predictions in potentially multistable systems thus needs to first identify the different attractors and their basins of attractions to be able to make meaningful predictions on either of them. In this article, we will present a two-part framework to approach high-dimensional, spatiotemporally chaotic models, to understand their complex behaviour and their basins of attraction, and then predict future states of each of their attractors. In Lorenz’ terminology, in this paper we try to bundle together a methodology to address both the predictability of the first kind—the model sensitivity to inaccurate initial conditions, hindering infinitely long deterministic predictions—and of the second kind, associated with the uncertainty on the asymptotic state reached by the system [26].

We proceed as follows. The Monte Carlo Basin Bifurcation Analysis (MCBB) [27] is used to identify the basins of attractions with the largest volumes and how the volume changes when control parameters of the system are changed. MCBB makes use of random sampling and clustering techniques to quantify the volume of the basins of attraction and track how they change when control parameters of the system are varies. Based on the MCBB results, we learn which trajectories are asymptotically evolving towards which attractor.

For achieving predictability of the first kind, we apply a hybrid approach that complements potentially incomplete models with data-driven methods. Most numerical models describing a real world system and especially those of Earth system models, can be seen as incomplete in some regard. This can be due to, e.g., neglecting higher order terms or by an unknown external influence. Predicting even only partially known chaotic systems has been successfully done with hybrid approaches that combine knowledge of the governing equation with data-driven methods [28, e.g.]. One particular promising approach are Neural Differential Equation or Universal Differential Equations [29, 30] that enable us to train artificial neural networks (ANNs) inside of the differential equations.

We will test our framework on a new prototypical bistable model that we introduce below. The model is constructed by coupling a zero-dimensional classic energy balance model featuring bistability with the Lorenz96 (L96) model [31], which has gained prominence as prototypical system featuring spatially extended chaos. This model will serve as an ideal testbed for our approach.

The paper is structured as follows: First, we will introduce the Bistable Climate Toy Model, recap the dynamic properties of its key ingredient, the Lorenz96 model, and investigate the basic properties of the full coupled toy model. Subsequently, we will apply our two-part approach by first identifying and tracking the attractors of the model with MCBB, and then demonstrating the Neural Differential Equation approach on the model by replacing its energy balance model with an ANN and making predictions of the model.

2 Bistable climate toy model

The Bistable Climate Toy Model is set up by coupling a Lorenz ‘96 (L96) model [31, 32] to a zero-dimensional energy balance model (EBM). The L96 model describes a highly nontrivial dynamics on a one-dimensional periodic lattice composed of N grid points, and is rapidly becoming a reference for studying non-equilibrium steady states in spatially extended systems. The L96 model features processes of advection, forcing, and dissipation. It can be thought of as representative of the dynamics of the atmosphere along one latitudinal circle [31, 32], even if such correspondence is more metaphorical than actual, because the L96 model does not correspond to a truncated version of any known fluid dynamical system. The L96 model has rapidly gained relevance in many different research areas to study bifurcations [33, 34], to test parametrizations [35,36,37,38], to investigate extreme events [39,40,41], to improve data assimilation schemes [42, 43] and ensemble forecasting techniques [44, 45], to develop new tools for investigating predictability [46, 47], and for addressing basic issues in non-equilibrium statistical mechanics [48,49,50,51]. The L96 model is formulated as follows:

with periodic boundary conditions given by \(X_{j-N}=X_j=X_{j+N}\) \(\forall j=1,\ldots ,N\). The parameter F describes the forcing acting on the model, \(\gamma \) controls the intensity of the dissipation and, thus, of the contraction of phase space volume, and the nonlinear term on the right hand side describes a non-standard advection. The energy of the system \(E=1/2\sum _{n=1}^N X_n^2\) is conserved in the unviscid and unforced limit and acts as generator of the time translation, even though the system is not Hamiltonian. For a detailed analysis of the mechanics and energetics of the L96 model and of a generalisation thereof we refer to [52].

If \(\gamma =1\) (which is the default choice in most studies) and \(N\gg 1\), the model’s attractor is the fixed point \(X_k=F\), \(k=1,\ldots ,N\) for \(0\le F\le 8/9\). This fixed point loses stability as F is increased and, after a complex set of bifurcations [33, 34, 53], the system settles in a chaotic regime for \(F\ge 5.0\) [32]. In the regime of strong forcing and developed turbulence the properties of the L96 model are extensive with respect to the number of nodes N [51, 52], and one can establish power laws that accurately describe how some fundamental properties of the system—such as its energy and Lyapunov exponents—depend of the parameter F [51].

As far as numerical evidence goes, in the turbulent regime the L96 model possesses a unique asymptotic state characterised by a physical measure supported on a compact attractor. To introduce multistability in the L96 model, an efficient strategy is to suitably couple it with a multistable (say, bistable) model, according to the strategy described in [54]. Our simple bistable model of reference is the EBM of the form:

where V(T) is a confining potential (\(V(T)\rightarrow \infty \) sufficiently fast as \(|T|\rightarrow \infty \)) with two local minima separated by a local maximum. We then come to the following coupled L96-EBM model:

where the usual periodic boundary conditions apply (\(X_{j-N}=X_j=X_{j+N}\) \(\forall j=1,\ldots ,N\)), and \({\mathcal {E}}=E/N\). The value used for the different parameters— all intended to be non-negative—can be found in Table 1. The L96 model and the EBM are uncoupled if one sets \(\alpha =\beta =0\). The coupling between the two models can be explained as follows. If the temperature of the EBM is higher (lower) than the reference temperature \({{\tilde{T}}}\), the L96 model receives an enhanced (reduced) forcing, mimicking—in very rough terms—the presence of higher energy in the atmosphere. A negative feedback in the system is introduced as follows. If the energy per site of the L96 component of the model exceeds the average value realised in the uncoupled case \(\bar{{\mathcal {E}}}\approx 0.6 F^{4/3}\) [51], the temperature of the system is accordingly reduced. Note that, according to the framework set in [54], the L96 model is the fast component and the EBM is the slow component of the coupled model, where the fast component acts as an almost stochastic forcing on the slow component, and the slow component modulates the dynamics of the fast component. We remark that since the coupling constants \(\alpha \) and \(\beta \) are \({\mathcal {O}}(1)\), one cannot use asymptotic methods such as averaging or homogenization to obtain a reduced equation for the temperature T; the dynamics of the system is truly high dimensional.

The system possess (at least) two competing attractors, associated with disjoint basins of attraction. Hence, the asymptotic state of the system depends on its initial conditions. We assume that each attractor possesses one physical measure that is practically selected when performing the numerical integration of the model. In absence of stochastic forcing, no transitions can take place between the two competing attractors.

In Fig. 1, we portray the two competing attractors of the model corresponding to the Warm (W) state and Snowball (SB) state state in the reduced phase state constructed by performing a projection on the variables \({\mathcal {M}}=1/N\sum _{j=1}^NX_j\), \({\mathcal {E}}\), and T. One sees clearly that the W state has higher temperature and larger values for the mean and for the intensity of the fluctuations of the dynamic variables with respect to the SB state, in agreement with the actual features of the competing W and SB states of the climate system [3, 10, 55]. We additionally portray the Melancholia (M) state sitting between the two competing attractors. The M state is a saddle embedded in the boundary between the two co-existing basins of attraction and attracts the orbits whose initial conditions are on such basin boundary [12]. The M state, which is the gateway for the noise-induced transitions between the co-existing attractors regardless of the kind of noise included in the system [3, 13], has been constructed using the edge-tracking algorithm [56], and features non-trivial dynamics. Indeed, as in [12], it is a chaotic saddle.

3 Methods

In the following, we outline a two-part framework to analyse and predict spatiotemporally chaotic system such as the Bistable Climate Toy Model. First we demonstrate how the attractors of the system can be can be identified. Based on that knowledge, a method to make predictions of states on both attractors is then introduced.

3.1 Monte Carlo Basin Bifurcation Analysis

Multistability is a universal phenomenon of complex systems and is most likely present in several sub-systems of Earth’s climate [57, 58, 59, e.g.], as well as in the energy balance of the Earth [1,2,3]. When analysing and working with high-dimensional models, knowledge of the largest basins of attractions is instrumental to understanding the model itself. The recently introduced Monte Carlo Basin Bifurcation Analysis (MCBB) [27] is a numerical method tailored for analysing basins of attraction of high-dimensional systems and how they vary when control parameters of the system are changed. The aim of MCBB is to find classes of similar attractors of a high-dimensional system that collectively have the largest basin of attraction with respect to a measure of initial conditions \(\rho _0\) and how these classes of attractors and their basin volumes change when a control parameter p is changed in a range \(I_p\). Conceptually, it is situated in between a thorough bifurcation analysis and a macroscopic order parameter. By utilizing random sampling and clustering techniques, MCBB learns classes of similar attractors \({\mathcal {C}}\) and their basins. To regard two attractors \({\mathcal {A}}\) as part of the same class, MCBB requires a notion of continuity of an invariant measure \(\rho _{\mathcal {A}}\) on the attractor: if for a control parameter p the difference between \(\rho _{\mathcal {A}}(p)\) and \(\rho _{\mathcal {A}}(p+\varDelta p)\) vanishes smoothly for \(\varDelta p\rightarrow 0\), we classify them as similar. Figure 2 illustrates this continuity requirement. Note that if the change in the measure scales linearly with \(\varDelta p\) in the limit of small values of \(\varDelta p\), one can say that linear response theory applies for the measure \(\rho _{\mathcal {A}}\) [60]. By sampling trajectories these classes can be built with a suitable pseudometric on the space of these measures. Directly comparing the high-dimensional trajectories with each other would put us close to a bifurcation analysis, but is potentially prohibitively expensive. We might also not be interested in an in-depth bifurcation analysis, but rather in a coarser definition of similarity. For the case of a climate model, similar climate regimes may for example be of interest.

Therefore, to identify the different classes of attractors, suitable statistics \({\mathcal {S}}_{i}\) are measured on every system dimension k for every trajectory, here, the mean \(E_k\), the variance \(\text {Var}_k\) and the Kullback-Leibler divergence to a normal distribution \(\text {KL}_k\). Using these statistics on each of the system dimension separately, MCBB achieves better scalability with the system dimension N. The N-dimensional trajectory of the i-th of in total \(N_{T}\) trials is \(\mathbf {x}^{(i)}(t)\), then all statistics are measured separately on each dimension of \(\mathbf {x}^{(i)}(t)\). This results in three \((N\times N_{T}\)) matrices, one for each statistic \({\mathcal {S}}_{i}\), so that \(\mathbf {S}_{k,ij} = {\mathcal {S}}_k(x_j^{(i)}(t))\).

A distance matrix of each trajectory to each other is then computed from these statistics with

where \(p^{(i)}\) is the control parameter used to generate the i-th trajectory and \(w_i\) are free parameters of the method.

If one wishes not to distinguish between symmetric configurations, as we also do in the application to the Bistable Climate Toy Model, the weighted difference can also be replaced with a Wasserstein distance of histograms over the statistics. Especially in these cases two different points (x, y) may have \(D(x,y) = 0\), this is why we are referring to D as a pseudometric and not a metric.

Sketch of the notion of continuity of invariant measures \(\rho _{\mathcal {A}}\) that MCBB requires for attractors to belong to the same class of attractors. Solid red lines indicate stable states and dashed lines unstable states. If the difference between \(\rho _{\mathcal {A}}(p)\) and \(\rho _{\mathcal {A}}(p\pm \varDelta p)\) vanishes smoothly for \(\varDelta \rightarrow 0\) they are considered to be within the same class. In the algorithm this is realised through a chain of connection via \(\epsilon _{DB}\) neighbourhoods

After computing the distance matrix \(\mathbf {D}\) of all trials to each other, the classes of attractors can then be identified by applying a clustering algorithm to the matrix. Density-based clustering algorithms such as DBSCAN [61] are ideally suited for this purpose, since DBSCAN relies on a similar notion of continuity as required by MCBB. One sample is connected to another sample if it is within \(\epsilon _{DB}\)-neighbourhood of the sample. A cluster is then formed by all the samples that have a chain of connections to each other. The results of applying DBSCAN to sample trajectories is \(\mathbf {C} = \text {DBSCAN}(\left\{ \mathbf {D}\right\} )\), where each sample trajectory is assigned to one of the \(N_C\) clusters \(C_i\) with \(i\in [1;N_C]\). Note that DBSCAN can also identify samples as outliers. We regard all outliers, if there are any, as the zeroth cluster. The approximate relative basin size of a class of asymptotic states can then be computed by applying a sliding parameter window and normalizing the results with

Here, \(CL_i^{(p)}\) is the number of trials in cluster i at (sliding) parameter window p. This is the most important result from MCBB. \(b_{{\mathcal {C}}_i}(p)\) shows the size of the largest basins of the system and how they react to changes of the control parameter p. In the results we will also see other possibilities to further evaluate the collected results from MCBB. By identifying the attractors, we also know which initial conditions are part of which basin. After achieving information about the attractors of the systems and their basins, we can investigate these individual attractor and apply further methods to predict trajectories on them.

The MCBB method was designed with high dimensional systems in mind. This is why per-dimension statistics are used as inputs for the clustering algorithm instead of the potentially high-dimensional trajectories directly. Nevertheless, the computational complexity of MCBB very much depends on the system in question. The most expensive parts are N times integrating the system and the computation of the distance matrix. For high-dimensional systems, the integration far outweighs the computation of the distance matrix. For more details of MCBB, the reader is referred to [27].

3.2 Neural ordinary differential equations

Most—if not all—numerical models of real-world processes are incomplete in some sense. Be it due to unknown effects that are not modelled, or by omitting higher-order terms of known effects on purpose. A classical example are subgrid-scale processes that are not explicitly resolved in general circulation models of the Earth’s atmosphere and oceans, and hence need to be parametrized [62, 63]. Hybrid modelling methods can remedy deficiencies of incomplete models by combining an incomplete process-based model with data-driven methods that learn to compensate these deficiencies. This approach seems especially promising with climate models as we have easy access to observational data and the climate system is so immensely complex that every model of it is always incomplete in some sense. We will demonstrate the Neural Ordinary Differential Equation (NODE) approach [29, 30], which provides an elegant way to construct and parametrize such hybrid models, on the Bistable Climate Toy model. NODEs integrate universal functions approximators such as artifical neural networks (ANN) directly into differential equation, so that the universal functions approximators become a part of the equation:

where \({\mathcal {N}}(x,t;\varTheta )\) is a data-driven function approximator such as an Artificial Neural Network (ANN) with parameters \(\varTheta \). Integrating the NODE, just as integrating a regular ODE, yields a predicted trajectory \({\hat{x}}(t;\varTheta )\) at discretized time steps \(i_t\). The integration time can be freely set, but our previous results show that for chaotic systems very short integration times are necessary to ensure that the learning process succeeds [64]. Similar to regular ANNs, NODEs are a supervised learning method and their parameters, in our case only the parameters of the ANN, are found by minimizing a loss function, most commonly the least-squares error between observed and model-predicted trajectories:

using a stochastic, adaptive gradient descent with weight decay [65]. Additional regularization of the parameters of the ANN may be added to avoid overfitting. In order to train the model one needs to be able to compute gradients of the loss function with respect to all the parameters of the NODE.

While a regular ANN relies on backpropagation—which is essentially the chain rule—to compute gradients of numerical solutions of differential equations is not as straight forward. However, with methods from adjoint sensitivity analysis and response theory in conjunction with automatic differentiation techniques, such gradients can be computed as well. For a detailed overview of the algorithms used for this purpose, please see [29, 30].

4 Results

4.1 Attractors of the model

We apply MCBB to the Bistable Climate Toy Model by sampling \(N_T=15,000\) trajectories with initial conditions drawn from \({\mathcal {U}}(-7;7)\) for the L96 state variables X and \({\mathcal {U}}(240,300)\) for the temperature T. The solar constant is varied within \(S\in [5;20]\). The trajectories are integrated for 400 time units and the first \(80\%\) of the trajectory are not included in the analysis to avoid transient effects. Only the statistics \(E_k\), \(\text {Var}_k\), \(\text {KL}_k\) of the L96 model are used for the identification of the attractors. In the distance matrix computation according to Eq. (4), the defaults weights of [1, 0.5, 0.25] are chosen. The results are not sensitive to small variations of those weights. Figure 3 shows the approximate relative basin volume estimated by MCBB. Two classes, i.e., clusters, are found. The system is multistable in the interval of around \(S\in [7;15]\) with each of the basins being approximately equal-sized with respect to the distributions of initial conditions chosen. For \(\epsilon _{DB}=0.05\) the clustering algorithm detects several outliers. These are mostly the trajectories that spend long time near the Melancholia state (M), because they are initialised near the basin boundary [66]. As shown in Fig. 5, they exhibit a saddle-like behaviour for the EBM variable. When \(\epsilon _{DB}\) is increased to 0.1 or larger these states are not resolved separately anymore and the algorithm only finds the cold and warm state, as shown in Fig. 3. Further insights can be gained with a sliding-histogram approach. For each sliding parameter window a histogram is fitted to all collected values of the EBM mean for each of the two attractors. Figure 4 clearly shows the two stable branches of the EBM and its respective values of the forcing F. As one can expect the “blue” cluster in Fig. 3 exhibits the much larger values of the forcing (see Fig. 4b) and is thus the warm state of the model and the “red” cluster in Fig. 3 is the cold state of the model. Again, the hysteresis behaviour of the model is evidently shown. For \(S<7\) only the the cold state is stable and for \(S>15\) only the warm state is stable.

Sliding histogram plot of the mean of the EBM variable of the Bistable Climate Toy Model computed with MCBB. The two histogram plots of each of the identified states are joined together to better illustrate the two stable branches of the EBM and their hysteresis behaviour. On the y-axis the value of complete effective forcing term of the L96, so \(F*= F\left( 1+\beta \frac{T-{\tilde{T}}}{\varDelta _T}\right) \), is shown. The relative magnitude of each of these values appearing in the individual sliding histograms is shown in shades of blue and red, however, in this case are mostly all zero or one

4.2 Predicting the model

MCBB is thus able to identify the two attractors of the system. These two attractors will, in general, exhibit different properties, like, e.g., different maximum Lyapunov exponent. The maximum Lyapunov exponents computed with the method of [67] (implementation of DynamicalSystems.jl [68]) are \(\lambda ^{(\text {cold}}_{\text{ max }}\approx 1.04\) for the cold and \(\lambda ^{(\text {warm}}_{\text{ max }}\approx 2.60\) for the warm state. The larger Lyapunov exponent of the warm state shows that, as expected, the L96 model is more chaotic for larger values of the forcing. When we want to predict the model’s behavior, we have to be aware of that and evaluate predictions on both attractors separately. MCBB also classifies all initial conditions that were used by the algorithms to either of the attractors. In that way, we have many possible initial conditions for prediction on these attractors. We demonstrate the capabilities of the NODE approach by “forgetting” the equation of the EBM and replacing it with an ANN. In this case this is an artificial example, but in many observational scenarios and model, one has incomplete models whose deficiencies can be corrected with the NODE approach. In our case replacing the EBM with an ANN is supposed to mirror setups of more realistic models in which one probably has much better knowledge of the governing equations of the atmosphere than the energy balance. In principal it would also be possible to replace the L96 or part of it with an ANN. Setups similar to this, studying the application of NODEs to spatiotemporally chaotic systems have been explored in [64].

For the ANN we combine two different kind of layers: (i) dense layers that apply a nonlinear function, called activation function, to a weighted sum of all its inputs and (ii) convolutional layers that perform a discrete convolution with the convolution filters as learnable parameters. For details on these layers, the reader is referred to standard textbooks such as [69, 70].

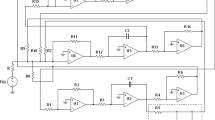

The ANN \({\mathcal {N}}\) is set up to have the same input variables as the EBM in Eq. (3) has arguments: all variables of the L96 model and the EBM itself. Figure 6 shows the ANN used. Due to the spatial input, convolutional layers are best suited for those variables and, therefore, have only the L96 variables as inputs. The forcing, the result of the EBM itself, skips these layers and inputs directly into the dense layers. The swish activation function [71] \(\text {swish}(x)=x/(1+\exp {(-x)})\) is used as an activation function and MaxPooling layers reduce the dimension by downsampling it.

By replacing the EBM the full NODE reads

where \(\varTheta \) are the parameters of all ANN layers. An adaptive stochastic gradient descent with weight decay (AdamW) [65] is used to minimize the loss function:

Similar to earlier results for applying NODE techniques to spatiotemporally chaotic systems [64], we found that integrating the NODE for long time spans does not significantly decrease the loss on neither the training nor the validation set, but increases the computational complexity massively. Therefore, the NODE is only integrated for \(\varDelta t=0.05\) with only one time step saved. Hence, we minimize the one-step-ahead loss of the predicted states \((\hat{\mathbf {X}},{\hat{F}})\) against the training data \((\mathbf {X},F)\). As training data two separated trajectories, each 100 time steps long (at \(\varDelta t=0.05\)), are used. These trajectories are integrated from initial conditions drawn randomly, one from each state within the basins identified by MCBB, i.e., the two shaded area, as shown in Fig. 3. The initial 2000 time steps are discarded to avoid transient dynamics and the following 100 time steps of each of the two trajectories are used as the training set. Subsequent time steps of each of the trajectories are saved as validation set. Thus, the NODE is trained to model the full system with both attractors.

ANN setup used to replace the EBM in the NODE. Convolutional layers with two filters, i.e., channels, a \((3\times 1)\) kernel and a swish activation function are used on the L96 variables, the output of these layers and the old forcing value F are used as inputs of two dense layers. \(N_{in}\) is chosen to have the correct input dimension which depends on the size of the L96 model

To evaluate the predictions made by the NODE, we compute a non-normalized error:

and normalized error

on the L96 variables, where \(<.>_t\) indicates an average over all discrete timesteps \(i_t\). The forecast length or valid time of the NODE is then the first time step \(t_v\), where \(e(i_t) > 0.4\), in accordance with [28]. The valid time might be expressed in terms of the Lyapunov time \(\lambda _{\text{ max }}t = \lambda _{\text{ max }}\varDelta t\cdot i_t\). Similar to how the NODE was trained with data from both attractors, we also predict and evaluate trajectories from both attractors. Figure 7 shows the trajectories of the NODE that were integrated from initial conditions of the first time step outside of the training dataset for both attractors.

As expected the valid time in terms of discrete time steps is much smaller for the warm state than for the cold state as it is less chaotic, i.e., exhibits a smaller \(\lambda _{\text{ max }}\). In terms of the the rescaled valid time in Lyapunov times it is comparable, yet still a little smaller for the warm state. For the cold state the valid time 169 time steps or \(8.52\lambda _{\text{ max }}t\) and for the warm state it is 39 time steps or \(5.02\lambda _{\text{ max }}t\).

5 Discussion

We have presented a framework for addressing the predictability of the first kind and of the second kind in high-dimensional chaotic systems. First, we understand the qualitative properties of the system by discovering the attractors with the largest basins of attractors and evaluate how the volume of these basins changes when control parameters of the system are varied. This might be of great relevance especially in the case several competing asymptotic states, each associated with different basins of attraction, exist. As we gathered knowledge on the attractors of the systems, the NODE approach allows one to predict the evolution of the systems even when the model is only incomplete with respect to the data it is trained with. With the NODE we replaced a sub-module of the model with a data-driven function approximator in the form of an ANN. This approach has the potential to be applied to more complex coupled models in conditions, where only incomplete or no knowledge of a specific part of the model is available. When predicting observational data with models, this could also be used to account for unknown or neglected effects in the model. The Bistable Climate Toy Model introduced here is an ideal testbed for this approach. The model is built by coupling a bistable EBM to the L96 model and exhibits two competing attractors in a vast range of the model’s parameters. These attractors correspond to a cold and a warm state, for which we are able to identify the basins of attractions and define the bifurcations conducive to tipping points. We are also able to identify accurately which trajectories lead to which of the attractors, so that we use these as training data for the NODE. In the subsequent application of the NODE approach, we purposefully forgot a part of the model, the EBM, and replaced it with an ANN. The NODE can model these introduced deficiencies for both attractors at the same time and make accurate prediction even when only presented with very short training datasets for the data-driven part to be trained on. The methods presented are in principle capable of investigating stochastic systems as well which is an exiting avenue of future research with the presented approach. The results on the presented toy model can also be seen as a first step towards analysing and predicting more complex climate models with the presented methods. Especially applying the NODE approach to, e.g., atmospheric models and observational data is a highly promising outlook.

Availability of data and materials

My manuscript has no associated data or the data will not be deposited.

References

M.I. Budyko, The effect of solar radiation variations on the climate of the earth. Tellus 21, 611–619 (1969). https://doi.org/10.3402/tellusa.v21i5.10109

W.D. Sellers, A global climatic model based on the energy balance of the earth-atmosphere system. J. Appl. Meteorol. 8, 392–400 (1969). https://doi.org/10.1175/1520-0450(1969)008<0392:AGCMBO>2.0.CO;2. https://journals.ametsoc.org/jamc/article-pdf/8/3/392/4975545/1520-0450(1969)008_0392_agcmbo_2_0_co_2.pdf

V. Lucarini, T. Bódai, Global stability properties of the climate: Melancholia states, invariant measures, and phase transitions. Nonlinearity 33, R59–R92 (2020) https://doi.org/10.1088/361-6544/aa86cc

J. Machowski, J. Bialek, J. Bumby, Power system dynamics: stability and control, 2nd edn. (Wiley, USA, 2008)

A. Babloyantz, A. Destexhe, Low-dimensional chaos in an instance of epilepsy. Proceedings of the National Academy of Sciences 83, 3513–3517 (1986). https://www.pnas.org/content/83/10/3513. arXiv:https://www.pnas.org/content/83/10/3513.full.pdf

W.W. Lytton, Computer modelling of epilepsy. Nat. Rev. Neurosci. 6 (2008)

J.-L. Schwartz, N. Grimault, J.-M. Hupé, B.C.J. Moore, D. Pressnitzer, Multistability in perception: binding sensory modalities, an overview. Philos. Trans. R. Soc. B. Biol. Sci. 367, 896–905 (2012) . https://doi.org/10.1098/rstb.2011.0254

P. Smole, D. Baxter, J. Byrne, Mathematical modeling of gene networks. Neuron 26, 567–580 (2000)

M. Ghil, Climate stability for a Sellers-type model. J. Atmos. Sci. 33, 3–20 (1976)

V. Lucarini, K. Fraedrich, F. Lunkeit, Thermodynamic analysis of snowball earth hysteresis experiment: efficiency, entropy production, and irreversibility. Quart. J. R. Meterol. Soc. 136, 2–11 (2010)

M. Ghil, V. Lucarini, The physics of climate variability and climate change. Rev. Mod. Phys. 92, 035002 (2020). https://doi.org/10.1103/RevModPhys.92.035002

V. Lucarini, T. Bódai, Edge states in the climate system: exploring global instabilities and critical transitions. Nonlinearity 30, R32–R66 (2017)

V. Lucarini, T. Bódai, Transitions across melancholia states in a climate model: reconciling the deterministic and stochastic points of view. Phys. Rev. Lett. 122, 158701 (2019). https://doi.org/10.1103/PhysRevLett.122.158701

J.P. Lewis, A.J. Weaver, M. Eby, Snowball versus slushball earth: dynamic versus nondynamic sea ice? J. Geophys. Res. Oceans 112, C11014 (2007). https://doi.org/10.1029/2006JC004037

D.S. Abbot, A. Voigt, D. Koll, The jormungand global climate state and implications for neoproterozoic glaciations. J. Geophys. Res. Atmos. 116(2011). https://doi.org/10.1029/2011JD015927

M. Brunetti, J. Kasparian, C. Vérard, Co-existing climate attractors in a coupled aquaplanet. Clim. Dyn. 53, 6293–6308 (2019). https://doi.org/10.1007/s00382-019-04926-7

G. Margazoglou, T. Grafke,, A. Laio, V. Lucarini, Dynamical landscape and multistability of the earth’s climate (2020). arXiv:2010.10374

T.M. Lenton et al. Tipping elements in the earth’s climate system. Proceedings of the National Academy of Sciences 105, 1786–1793 (2008). https://www.pnas.org/content/105/6/1786

T.M. Lenton et al. Climate tipping points—too risky to bet against (2019)

M. Callaway, T.S. Doan, J. S.W. Lamb, M. Rasmussen, The dichotomy spectrum for random dynamical systems and pitchfork bifurcations with additive noise (2013). arXiv:1310.6166

P. Ashwin, S. Wieczorek, R. Vitolo, P. Cox, Tipping points in open systems: bifurcation, noise-induced and rate-dependent examples in the climate system. Philos. Trans. R. Soc. A. Math. Phys. Eng. Sci. 370, 1166–1184 (2012)

C. Grebogi, E. Ott, J.A. Yorke, Fractal basin boundaries, long-lived chaotic transients, and unstable-unstable pair bifurcation. Phys. Rev. Lett. 50, 935–938 (1983). https://doi.org/10.1103/PhysRevLett.50.935

J. Vollmer, T.M. Schneider, B. Eckhardt, Basin boundary, edge of chaos and edge state in a two-dimensional model. N. J. Phys. 11, 013040 (2009). https://doi.org/10.1088/1367-2630/11/013040

R. Graham, A. Hamm, T. Tél, Nonequilibrium potentials for dynamical systems with fractal attractors or repellers. Phys. Rev. Lett. 66, 3089–3092 (1991). https://doi.org/10.1103/PhysRevLett.66.3089

P.J. Menck, J. Heitzig, N. Marwan, J. Kurths, How basin stability complements the linear-stability paradigm. Nat. Phys. 9, 89–92 (2013). https://doi.org/10.1038/nphys2516

E.N. Lorenz, The physical bases of climate and climate modelling. climate predictability. In GARP Publication Series, 132–136 (WMO, 1975)

Maximilian Gelbrecht, F. H., Jürgen Kurths. Monte carlo basin bifurcation analysis. N. J. Phys. 22, 033032 (2020). https://doi.org/10.1088/367-2630/7a05

J. Pathak et al., Hybrid forecasting of chaotic processes: using machine learning in conjunction with a knowledge-based model. Chaos: An Interdiscip. J. Nonl. Sci. 28, 041101 (2018). https://doi.org/10.1063/1.5028373

R. T.Q. Chen, Y. Rubanova, J. Bettencourt, D. Duvenaud, Neural ordinary differential equations (2018). arXiv:1806.07366

C. Rackauckas et al. Universal differential equations for scientific machine learning (2020). arXiv:2001.04385

E. Lorenz, Predictability: a problem partly solved. In Seminar on Predictability, 4-8 September 1995, vol. 1, 1–18. ECMWF (ECMWF, Shinfield Park, Reading, 1995). https://www.ecmwf.int/node/10829

E.N. Lorenz, Designing chaotic models. J. Atmos. Sci. 62, 1574–1587 (2005). https://doi.org/10.1175/JAS3430.1

D.L. van Kekem, A.E. Sterk, Travelling waves and their bifurcations in the lorenz-96 model. Phys. D: Nonl. Phenomena. 367, 38–60 (2018). http://www.sciencedirect.com/science/article/pii/S0167278917301094

D.L. van Kekem, A.E. Sterk, Wave propagation in the lorenz-96 model. Nonl. Processes Geophys. 25, 301–314 (2018). https://npg.copernicus.org/articles/25/301/2018/

D. Wilks, Effects of stochastic parametrizations in the Lorenz ’96 system. Quart. J. R. Meteorol. Soc. 131, 389–407 (2005)

H.M. Arnold, I.M. Moroz, T.N. Palmer, Stochastic parametrizations and model uncertainty in the lorenz system. Philos. Trans. R. Soc. A: Math. Phys. Eng. Sci. 371, 20110479 (2013). https://doi.org/10.1098/rsta.2011.0479

G. Vissio, V. Lucarini, A proof of concept for scale-adaptive parametrizations: the case of the Lorenz 96 model. Quart. J. R. Meteorol. Soc. 144, 63–75 (2018)

A. Chattopadhyay, P. Hassanzadeh, D. Subramanian, Data-driven predictions of a multiscale lorenz 96 chaotic system using machine-learning methods: reservoir computing, artificial neural network, and long short-term memory network. Nonl. Processes Geophys. 27, 373–389 (2020). https://npg.copernicus.org/articles/27/373/2020/

R. Blender, V. Lucarini, Nambu representation of an extended lorenz model with viscous heating. Phys. D: Nonl. Phenomena. 243, 86–91 (2013). http://www.sciencedirect.com/science/article/pii/S0167278912002497

A.E. Sterk, D.L. van Kekem, Predictability of extreme waves in the lorenz-96 model near intermittency and quasi-periodicity. Complexity 2017, 9419024 (2017). https://doi.org/10.1155/2017/9419024

G. Hu, T. Bódai, V. Lucarini, Effects of stochastic parametrization on extreme value statistics. Chaos: An Interdiscip. J. Nonl. Sci. 29, 083102 (2019). https://doi.org/10.1063/1.5095756

A. Trevisan, F. Uboldi, Assimilation of standard and targeted observations within the unstable subspace of the observation-analysis-Forecast cycle system. J. Atmos. Sci. 61, 103–113 (2004)

J. Brajard, A. Carrassi, M. Bocquet, L. Bertino, Combining data assimilation and machine learning to emulate a dynamical model from sparse and noisy observations: A case study with the lorenz 96 model. J. Comput. Sci. 44, 101171 (2020). http://www.sciencedirect.com/science/article/pii/S1877750320304725

D.S. Wilks, Comparison of ensemble-mos methods in the lorenz 96 setting. Meteorol. Appl. 13, 243–256 (2006)

W. Duan, Z. Huo, An approach to generating mutually independent initial perturbations for ensemble forecasts: orthogonal conditional nonlinear optimal perturbations. J. Atmos. Sci. 73, 997–1014 (2016). https://doi.org/10.1175/JAS-D-15-0138.1

S. Hallerberg, D. Pazó, J. López, M. Rodríguez, Logarithmic bred vectors in spatiotemporal chaos: structure and growth. Phys. Rev. E Stat. Nonl. Soft Matter Phys. 81, 1–8 (2010)

M. Carlu, F. Ginelli, V. Lucarini, A. Politi, Lyapunov analysis of multiscale dynamics: the slow bundle of the two-scale lorenz 96 model. Nonl. Processes Geophys. 26, 73–89 (2019). https://npg.copernicus.org/articles/26/73/2019/

R.V. Abramov, A.J. Majda, New approximations and tests of linear fluctuation-response for chaotic nonlinear forced-dissipative dynamical systems. J. Nonl. Sci. 18, 303–341 (2008). https://doi.org/10.1007/s00332-007-9011-9

V. Lucarini, S. Sarno, A statistical mechanical approach for the computation of the climatic response to general forcings. Nonl. Processes Geophys. 18, 7–28 (2011)

V. Lucarini, Stochastic perturbations to dynamical systems: a response theory approach. J. Stat. Phys. 146, 774–786 (2012). https://doi.org/10.1007/s10955-012-0422-0

G. Gallavotti, V. Lucarini, Equivalence of non-equilibrium ensembles and representation of friction in turbulent flows: the Lorenz 96 Model. J. Stat. Phys. 156, 1027–1065 (2014)

Gabriele Vissio, Valerio Lucarini, Mechanics and thermodynamics of a new minimal model of the atmosphere. Eur. Phys. J. Plus 135, 807 (2020). https://doi.org/10.1140/epjp/s13360-020-00814-w

D.L. van Kekem, A.E. Sterk, Symmetries in the lorenz-96 model. Int. J. Bifurcation Chaos 29, 1950008 (2019). https://doi.org/10.1142/S0218127419500081

T. Bódai, V. Lucarini, Rough basin boundaries in high dimension: can we classify them experimentally? Chaos: An Interdiscip. J. Nonl. Sci. 30, 103105 (2020). https://doi.org/10.1063/5.0002577

R.T. Pierrehumbert, D. Abbot, A. Voigt, D. Koll, Climate of the neoproterozoic. Ann. Rev. Earth Plan. Sci. 39, 417 (2011)

J.D. Skufca, J.A. Yorke, B. Eckhardt, Edge of chaos in a parallel shear flow. Phys. Rev. Lett. 96, 174101 (2006)

M. Hirota, M. Holmgren, E.H. Van Nes, M. Scheffer, Global resilience of tropical forest and savanna to critical transitions. Science 334, 232–235 (2011). https://science.sciencemag.org/content/334/6053/232

C. Ciemer et al., Higher resilience to climatic disturbances in tropical vegetation exposed to more variable rainfall. Nat. Geosci. 12, 174–179 (2019). https://doi.org/10.1038/s41561-019-0312-z

R.M. May, Thresholds and breakpoints in ecosystems with a multiplicity of stable states. Nature 269, 471–477 (1977). https://doi.org/10.1038/269471a0

D. Ruelle, A review of linear response theory for general differentiable dynamical systems. Nonlinearity 22(4), 855–870 (2009)

M. Ester, X. Xu, H. peter Kriegel, J. Sander, Density-based algorithm for discovering clusters in large spatial databases with noise. Proceedings Of The Acm Sigkdd International Conference On Knowledge Discovery And Data Mining pages, 226–231 (1996)

J. Berner et al., Stochastic parameterization: toward a new view of weather and climate models. Bull. Am. Meteorol. Soc. 98, 565–588 (2017). https://doi.org/10.1175/BAMS-D-15-00268.1

C.L.E. Franzke, T.J. O’Kane, J. Berner, P.D. Williams, V. Lucarini, Stochastic climate theory and modeling. Wiley Interdiscip. Rev. Clim. Change 6, 63–78 (2015)

M. Gelbrecht, N. Boers, J. Kurths, Neural partial differential equations for chaotic systems. N. J. Phys. 23(2021)

I. Loshchilov, F. Hutter, Decoupled weight decay regularization (2017). arXiv:1711.05101

V. Lucarini, J. Wouters, Response formulae for n-point correlations in statistical mechanical systems and application to a problem of coarse graining. J. Phys. A: Math. Theor. 50, 355003 (2017)

G. Benettin, L. Galgani, A. Giorgilli, J.-M. Strelcyn, Lyapunov characteristic exponents for smooth dynamical systems and for hamiltonian systems; a method for computing all of them. part 2: Numerical application. Meccanica 15, 21–30 (1980). https://doi.org/10.1007/BF02128237

G. Datseris, Dynamicalsystems.jl: A julia software library for chaos and nonlinear dynamics. J. Open Source Softw. 3, 598 (2018). https://doi.org/10.21105/joss.00598

I. Goodfellow, Y. Bengio, A. Courville, Deep Learning (MIT Press, 2016). http://www.deeplearningbook.org

E. Alpaydin, Introduction to Machine Learning. Adaptive Computation and Machine Learning (MIT Press, Cambridge, MA, 2014), 3 edn

P. Ramachandran, B. Zoph, Q.V. Le, Searching for activation functions (2017). arXiv:1710.05941

C. Rackauskas, Q. Nie, Differentialequations.jl–a performant and feature-rich ecosystem for solving differential equations in julia. J. Open Res. Softw. 5(1):15 (2017)

M. Innes, et al. Fashionable modelling with flux. CoRR abs/1811.01457 (2018). arXiv:1811.01457

Acknowledgements

This paper was developed within the scope of the IRTG 1740/TRP 2015/50122-0, funded by the DFG/FAPESP. The authors thank the German Federal Ministry of Education and Research and the Land Brandenburg for supporting this project by providing resources on the high performance computer system at the Potsdam Institute for Climate Impact Research. NB and VL acknowledge funding from the European Union’s Horizon 2020 research and innovation program under Grant Agreement No. 820970 (TiPES). NB acknowledges funding by the Volkswagen foundation. JK acknowledges funding by the Russian Ministry of Education and Science of the Russian Federation Agreement No. 075-15-2020-808.

We wish to acknowledge the authors of the Julia libraries DiffEqFlux.jl [30], DifferentialEquations.jl [72] and Flux.jl [73] that were used for this study.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gelbrecht, M., Lucarini, V., Boers, N. et al. Analysis of a bistable climate toy model with physics-based machine learning methods. Eur. Phys. J. Spec. Top. 230, 3121–3131 (2021). https://doi.org/10.1140/epjs/s11734-021-00175-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1140/epjs/s11734-021-00175-0