Abstract

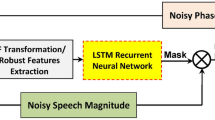

Speech recognition has become a vital technology for seamless human–computer interaction, even in noisy public places. Speech enhancement (SE) techniques play a crucial role in improving the performance of applications such as machine translation, natural language processing, spoken language understanding, and text generation. In this study, we introduce a novel approach, termed the Group Attack Dingo Optimizer (GA-DOA), for optimizing speech enhancement tasks. Our method combines an improved short-time Fourier transform (STFT) with an optimized deep U-Net whose parameters are fine-tuned by GA-DOA. Feature extraction then employs Mel-frequency cepstral coefficients (MFCCs), spectral features, and a one-dimensional convolutional neural network (1D-CNN), and GA-DOA-assisted feature selection retains the most effective features. These selected features are fed into our proposed hybrid model for speech recognition (HMSR), which integrates bidirectional long short-term memory (BiLSTM) with a gated recurrent unit (GRU). Experimental results show that the proposed model achieves superior recognition rates and a significantly lower word error rate (WER), demonstrating improved system performance even in noisy environments.
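For readers unfamiliar with the metric, WER is conventionally defined as the word-level edit distance (substitutions, deletions, and insertions) between the recognized text and the reference transcript, divided by the number of reference words. The following is a minimal sketch of that standard definition; the function name `word_error_rate` and the whitespace tokenization are illustrative assumptions, not the evaluation code used in the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Assumption: words are separated by whitespace and compared exactly;
    real evaluations usually normalize case and punctuation first.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed prefix of ref
    # and the first j words of hyp.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution ("sat" -> "sit") and one deletion ("the") over 6 words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions are counted, WER computed this way can exceed 1.0 when the hypothesis is much longer than the reference.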

Data Availability Statement

This manuscript has associated data in a data repository. [Authors’ comment: The developed ASR model uses speech audio from four publicly available datasets sourced from three different databases: the Multilingual and Code-Switching ASR Challenge dataset, the LibriSpeech ASR corpus, and the Crowdsourced High-Quality Kannada Multi-Speaker Speech Dataset. Datasets 1 and 4 (Multilingual and Code-Switching ASR Challenge datasets): these datasets, obtained from [23], cover three Indian languages: Hindi, Marathi, and Odia. Dataset 2 (LibriSpeech ASR corpus): this dataset [24] is derived from audiobooks selected for the LibriVox project. Dataset 3 (Crowdsourced High-Quality Kannada Multi-Speaker Speech dataset): this dataset [25] comprises recordings from native Kannada speakers located in Karnataka. For the additive noise, we took noise samples from the NOISEX-92 database [39] and mixed different noise types into the speech at different SNR levels.]
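The statement above does not specify how the noise was mixed in. A common convention, sketched below as an assumption rather than the authors' procedure, is to scale the noise so that the ratio of average speech power to average noise power matches the target SNR in decibels, then add it sample by sample. The function name `mix_at_snr` and the use of plain Python lists for waveforms are illustrative choices.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Add `noise` to `speech` after scaling it to the requested SNR in dB.

    Assumptions: both signals are sequences of float samples at the same
    sampling rate, and the noise is at least as long as the speech.
    """
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[: len(speech)]

    # Average power of each signal.
    p_speech = sum(s * s for s in speech) / len(speech)
    p_noise = sum(n * n for n in noise) / len(noise)
    if p_speech == 0 or p_noise == 0:
        raise ValueError("speech and noise must both have nonzero power")

    # SNR_dB = 10 * log10(p_speech / (gain^2 * p_noise)), solved for gain.
    gain = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    return [s + gain * n for s, n in zip(speech, noise)]


if __name__ == "__main__":
    # Synthetic stand-ins for a speech clip and a noise recording.
    speech = [math.sin(0.1 * i) for i in range(200)]
    noise = [((i * 37) % 11 - 5) / 5.0 for i in range(300)]
    for snr in (0, 5, 10):
        noisy = mix_at_snr(speech, noise, snr)
        print(snr, len(noisy))
```

Lower SNR values give a larger noise gain, so sweeping `snr_db` over a set of levels produces progressively harder test conditions from the same clean utterance.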

References

P. Bawa, V. Kadyan, Noise robust in-domain children speech enhancement for automatic Punjabi recognition system under mismatched conditions. Appl. Acoust. 175, 107810 (2021)

G. Thimmaraja Yadava, H.S. Jayanna, Enhancements in automatic Kannada speech recognition system by background noise elimination and alternate acoustic modelling. Int. J. Speech Technol. 23(1), 149–167 (2020)

N. Upadhyay, H.G. Rosales, Bark scaled oversampled WPT based speech recognition enhancement in noisy environments. Int. J. Speech Technol. 23(1), 1–12 (2020)

P. Wang, K. Tan et al., Bridging the gap between monaural speech enhancement and recognition with distortion-independent acoustic modeling. IEEE/ACM Trans. Audio Speech Lang. Process. 28, 39–48 (2019)

C.H. You, M. Bin, Spectral-domain speech enhancement for speech recognition. Speech Commun. 94, 30–41 (2017)

Y. Shao, C.-H. Chang, Bayesian separation with sparsity promotion in perceptual wavelet domain for speech enhancement and hybrid speech recognition. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 41(2), 284–293 (2010)

C. Donahue, B. Li, R. Prabhavalkar, Exploring speech enhancement with generative adversarial networks for robust speech recognition, in: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, pp. 5024–5028 (2018)

G. Kovács, L. Tóth, D. Van Compernolle, Selection and enhancement of Gabor filters for automatic speech recognition. Int. J. Speech Technol. 18(1), 1–16 (2015)

X. Xiao, S. Zhao, D.H. Ha Nguyen, X. Zhong, D.L. Jones, E.S. Chng, H. Li, Speech dereverberation for enhancement and recognition using dynamic features constrained deep neural networks and feature adaptation. EURASIP J. Adv. Signal Process. 2016(1), 1–18 (2016)

J. Novoa, J. Fredes, V. Poblete, N.B. Yoma, Uncertainty weighting and propagation in DNN-HMM-based speech recognition. Comput. Speech Lang. 47, 30–46 (2018)

C. Fan, J. Yi, J. Tao, Z. Tian, B. Liu, Z. Wen, Gated recurrent fusion with joint training framework for robust end-to-end speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 29, 198–209 (2020)

J. Cadore, F.J. Valverde-Albacete, A. Gallardo-Antolín, C. Peláez-Moreno, Auditory-inspired morphological processing of speech spectrograms: applications in automatic speech recognition and speech enhancement. Cogn. Comput. 5(4), 426–441 (2013)

J. Ming, D. Crookes, Speech enhancement based on full-sentence correlation and clean speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 25(3), 531–543 (2017)

B.K. Khonglah, A. Dey, S. Prasanna, Speech enhancement using source information for phoneme recognition of speech with background music. Circuits Syst. Signal Process. 38(2), 643–663 (2019)

N. Moritz, K. Adiloğlu, J. Anemüller, S. Goetze, B. Kollmeier, Multi-channel speech enhancement and amplitude modulation analysis for noise robust automatic speech recognition. Comput. Speech Lang. 46, 558–573 (2017)

J. Xue, T. Zheng, J. Han, Exploring attention mechanisms based on summary information for end-to-end automatic speech recognition. Neurocomputing 465, 514–524 (2021)

L. Chai, J. Du, Q.-F. Liu, C.-H. Lee, A cross-entropy-guided measure (CEGM) for assessing speech recognition performance and optimizing DNN-based speech enhancement. IEEE/ACM Trans. Audio Speech Lang. Process. 29, 106–117 (2020)

Y.-H. Tu, J. Du, C.-H. Lee, Speech enhancement based on teacher-student deep learning using improved speech presence probability for noise-robust speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 27(12), 2080–2091 (2019)

R.A. Ramadan, K. Yadav, Nonlinear acoustic noise cancellation based automatic speech recognition system (NANC-ASR) with convolutional neural networks. Int. J. Speech Technol. 25(3), 605–613 (2022)

S. Lokesh, P. Malarvizhi Kumar, M. RamyaDevi, P. Parthasarathy, C. Gokulnath, An automatic Tamil speech recognition system by using bidirectional recurrent neural network with self-organizing map. Neural Comput. Appl. 31(5), 1521–1531 (2019)

N. Saleem, J. Gao, M.I. Khattak, H.T. Rauf, S. Kadry, M. Shafi, DeepResGRU: residual gated recurrent neural network-augmented Kalman filtering for speech enhancement and recognition. Knowl.-Based Syst. 238, 107914 (2022)

P. Agrawal, S. Ganapathy, Modulation filter learning using deep variational networks for robust speech recognition. IEEE J. Sel. Top. Signal Process. 13(2), 244–253 (2019)

A. Diwan, R. Vaideeswaran, S. Shah, A. Singh, S. Raghavan, S. Khare, V. Unni, S. Vyas, A. Rajpuria, C. Yarra, et al., Multilingual and code-switching ASR challenges for low resource Indian languages, arXiv preprint arXiv:2104.00235 (2021)

V. Panayotov, G. Chen, D. Povey, S. Khudanpur, Librispeech: an ASR corpus based on public domain audio books, in: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, pp. 5206–5210 (2015)

F. He, S.-H. C. Chu, O. Kjartansson, C. Rivera, A. Katanova, A. Gutkin, I. Demirsahin, C. Johny, M. Jansche, S. Sarin, K. Pipatsrisawat, Open-source multi-speaker speech corpora for building Gujarati, Kannada, Malayalam, Marathi, Tamil and Telugu speech synthesis systems, in: Proceedings of the 12th Language Resources and Evaluation Conference (LREC), European Language Resources Association (ELRA), Marseille, France, pp. 6494–6503 (2020). https://www.aclweb.org/anthology/2020.lrec-1.800

J.-W. Hwang, R.-H. Park, H.-M. Park, Efficient audio-visual speech enhancement using deep U-Net with early fusion of audio and video information and RNN attention blocks. IEEE Access 9, 137584–137598 (2021)

H. Zhang, H. Huang, H. Han, Attention-based convolution skip bidirectional long short-term memory network for speech emotion recognition. IEEE Access 9, 5332–5342 (2020)

G. Cybenko, Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2(4), 303–314 (1989)

V. Nair, G.E. Hinton, Rectified linear units improve restricted Boltzmann machines, in: Proceedings of the 27th International Conference on Machine Learning (ICML-10), pp. 807–814 (2010)

J.-R. Cano, Analysis of data complexity measures for classification. Expert Syst. Appl. 40(12), 4820–4831 (2013)

S. Mirjalili, A. Lewis, The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016)

A. Siabi-Garjan, R. Hassanzadeh, A computational approach for engineering optical properties of multilayer thin films: particle swarm optimization applied to Bruggeman homogenization formalism. Eur. Phys. J. Plus 133, 1–11 (2018)

W.-T. Pan, A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl.-Based Syst. 26, 69–74 (2012)

W. Feng, Convergence analysis of whale optimization algorithm. J. Phys.: Conf. Ser. 1757(1), 012008 (2021). https://doi.org/10.1088/1742-6596/1757/1/012008

Q. Zhao, C. Li, Two-stage multi-swarm particle swarm optimizer for unconstrained and constrained global optimization. IEEE Access 8, 124905–124927 (2020)

B. Xing, W.-J. Gao, Fruit fly optimization algorithm, in: Innovative Computational Intelligence: A Rough Guide to 134 Clever Algorithms (Springer, Berlin, 2014)

A.K. Bairwa, S. Joshi, D. Singh, Dingo optimizer: a nature-inspired metaheuristic approach for engineering problems. Math. Probl. Eng. 2021, 1–12 (2021)

H. Peraza-Vázquez, A.F. Peña-Delgado, G. Echavarría-Castillo, A.B. Morales-Cepeda, J. Velasco-Álvarez, F. Ruiz-Perez, A bio-inspired method for engineering design optimization inspired by dingoes hunting strategies. Math. Probl. Eng. 2021, 1–19 (2021)

A. Varga, H.J. Steeneken, Assessment for automatic speech recognition: II. NOISEX-92: a database and an experiment to study the effect of additive noise on speech recognition systems. Speech Commun. 12(3), 247–251 (1993). https://doi.org/10.1016/0167-6393(93)90095-3

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, T.N.M., Kumar, K.G., Deepak, K.T. et al. Group Attack Dingo Optimizer for enhancing speech recognition in noisy environments. Eur. Phys. J. Plus 138, 1145 (2023). https://doi.org/10.1140/epjp/s13360-023-04775-8

DOI: https://doi.org/10.1140/epjp/s13360-023-04775-8