Abstract

Although entanglement is a basic resource for reaching quantum advantage in many computation and information protocols, we lack a universal recipe for detecting it, with analytical results obtained for low-dimensional systems and few special cases of higher-dimensional systems. In this work, we use a machine learning algorithm, the support vector machine with polynomial kernel, to classify separable and entangled states. We apply it to two-qubit and three-qubit systems, and we show that, after training, the support vector machine is able to recognize if a random state is entangled with an accuracy up to \(92\%\) for the two-qubit system and up to \(98\%\) for the three-qubit system. We also describe why and in what regime the support vector machine algorithm is able to implement the evaluation of an entanglement witness operator applied to many copies of the state, and we describe how we can translate this procedure into a quantum circuit.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum entanglement [1] is one of the main features that distinguish between classical and quantum states and represents one of the basic ingredients for reaching quantum advantage in computation and information protocols [2,3,4]. An N-particle state is entangled if its density operator cannot be written as tensor product or sum of tensor products of N single-particle density operators or, in other words, if the state of the system cannot be entirely described considering its N single components only. Despite the simplicity of such a definition, the problem of identifying, classifying, and quantifying entanglement is mathematically extremely hard. Many analytical and numerical results have been obtained in the study of bipartite entanglement, including the well-known positive partial transpose (PPT) criterion, which establishes that, for two-qubit and qubit-qutrit states, the positivity of the partial transposition of the density operator provides a necessary [5] and sufficient [6] condition for entanglement. In spaces of higher dimension, however, the PPT criterion offers only a necessary condition for separability [5], reflecting the fact that entanglement of an N-particle system (with \(N\ge 3\)) is much richer in comparison with the bipartite case. This is because quantum entanglement is a “monogamous” property that can be shared among the different parts in many non-equivalent ways and not freely, since the degree of entanglement between any two of its parts influences the degree of entanglement that can be shared with a third part [7, 8]. In the last two decades, there has been a great effort to recognize if a state with \(N\ge 3\) is entangled and many analytic results have been found, see the review papers [9,10,11]. However, even in the simplest case of three qubits, a general solution is still missing.

In this paper, we tackle the still open question of finding if a given state is entangled or separable as a classification problem.

Many classification problems can be solved efficiently by machine learning (ML) algorithms [12, 13], whose goal is to find a way to infer, from the available labeled data, the class of an unlabeled data point. Neural network ML algorithms for classification of entangled states have already been adopted in previous works, such as in Ref. [14] for the classification of two-qubit states labeled by means of Bell’s inequalities, or in Ref. [15], where a classifier is trained to recognize separable states of multi-qubit systems.

Another well-known ML algorithm is the support vector machine (SVM) [16], which has been proved to be very solid in solving classification problems in image recognition [17], medicine [18, 19], and biophysics [20] among others. A collection of applications of the SVM algorithm can be found in the review paper [21]. The SVM algorithm has been recently investigated also for classification of entanglement in some specific examples. In Ref. [22], two-qubit states have been analyzed via a bootstrap-aggregating convex-hull approximation, which was benchmarked against a SVM that uses a radial basis function kernel: an error rate of 8.4 was found. In [23], instead, SVM with a linear kernel was used to construct a witness operators for four-qubit states.

In this work, we put forward a new approach to SVM, exploiting the so-called kernel trick [13] with polynomial kernels of variable degree n, in which we train a SVM to classify entangled and separable states with a high predictive power, specializing the analysis first to the case of the two-qubit system, as a benchmark, and then generalizing it to the classification of different classes of entangled states of a three-qubit system. We present an accurate analysis of the performance of the algorithm which, combined with a large sample set, provides a separating hyperplane with accuracy of 92% in the case of n=8. The performance of the algorithm is evaluated through the following metrics: the accuracy a, corresponding to the percentage of true positives, i.e., the (either separable or entangled) states that are correctly classified, the precision p that measures the percentage of predicted entangled states that are really entangled, and the recall r that measures the percentage of entangled states that are correctly classified. The hyperparameters of the algorithm are chosen to get \(p=1\) and the maximum possible value of r on the validation set.

We exploit the geometrical interpretation of SVM to effectively implement an entanglement witness operator, which does not misclassify separable states as entangled while also minimizing the number of entangled states that are misclassified as separable. Such witness operator is explicitly constructed for a linear kernel, obtaining a standard witness functional over the space of density matrices of the system, as well as in the case of a nonlinear polynomial kernel of degree n. In the latter case, which corresponds to the construction of a witness functional over n-copies of the system, the performance of the algorithm increases. Finally, we present a digital quantum circuit, which is able to execute the construction and the evaluation of such entanglement witnesses.

The content of the paper is as follows: In Sect. 2.1, we review the entanglement classification for two-qubit and three-qubit systems, with emphasis on the analytical results that we use to generate the dataset of pure and mixed states [10, 22,23,24,25,26]. In Sect. 2.2, we describe the SVM algorithm and the kernel trick. In Sect. 3.1, we relate the separating hypersurface obtained via the SVM to the evaluation of an entanglement witness operator, providing for that also an operational procedure that can be implemented on a digital quantum computer.

In Sect. 3.2, we train the SVM with two-qubit states for which we have an exact classification, i.e., the PPT criterion, and eventually test our method on a large sample. Section 3.3 is devoted to the extension of our analysis to three-qubit systems. Finally, in Sect. 4 we draw our conclusion and give outlooks for possible future research directions.

2 Methods

2.1 Entanglement classification

In this section, we give a brief overview of the entanglement in two-qubit and three-qubit systems, with emphasis on the different analytical results found in the literature to classify entangled and separable states.

Let us first consider a pure quantum state \(|\psi \rangle \) defined in the Hilbert space \(\mathcal {H}^{\otimes N}\) of N identical particles and described by the density operator \(\hat{\rho }=|\psi \rangle \langle \psi |\).

The state \(\hat{\rho }\) can be written as a product state of \(M\le N\) density operators [27] as

where M is the number of non-product terms with which the state can be written. If \(M=N\), the state is called fully separable, and each \(\hat{\rho }_j\) acts on the one-particle space \(\mathcal {H}\). If \(M<N\), at least one of the density operators \(\hat{\rho }_j\) describes more than one particle. If \(M=1\), the state \(\hat{\rho }\) is called genuinely multipartite entangled (GME).

In the case of a two-qubit state, with \(N=2\) and \(\mathcal {H}=\mathbb {C}^2\), the system can either be separable, with \(M=2\), or entangled, with \(M=1\). In case the system is composed by three qubits, labeled as A,B and C, we have \(N=3\) and \(\mathcal {H}=\mathbb {C}^2\). The state is fully separable (SEP) when \(M=3\), and therefore, Eq.(1) reads as \(\hat{\rho }=\hat{\rho }_A\otimes \hat{\rho }_B\otimes \hat{\rho }_C\). When \(M=2\) , the state is called biseparable, and the density operator can be written as \(\hat{\rho }_A\otimes \hat{\rho }_{BC}\) (A-BC), \(\hat{\rho }_B\otimes \hat{\rho }_{AC}\) (B-AC) or \(\hat{\rho }_C\otimes \hat{\rho }_{AB}\) (C-AB). When \(M=1\) the state is GME. GME states play an important role to devise robust distribution protocols [28].

Mixed states are a statistical mixture of pure states [1] and are described by a density operator of the form

where \(\sum _ip_i=1\) and \(\hat{\rho }_i\) are pure states. The mixed state \(\hat{\rho }\) is fully separable when each pure state \(\hat{\rho }_i\) is fully separable.

For a two-qubit system (as well as for a qubit-qutrit system), the PPT criterion [5, 6] offers a necessary and sufficient condition for labeling a quantum state as entangled: a two-qubit state is separable if and only if the partial transposition of the density matrix returns a density matrix. Since the number of negative eigenvalues of the partially transposed density matrix is at most one [29], the PPT criterion can be simplified to the calculation of an expectation value. In [30], the authors have found an explicit description of the PPT criterion in terms of a witness operator applied on four copies of the state.

In all other cases, the PPT criterion offers only a necessary condition for separability [5].

The set of three-qubit states, called A, B and C, in terms of their level of entanglement. GME is the set of genuinely multipartite entangled states, SEP is the set of fully separable states, and A-BC, B-AC, C-AB represent the three possible factorization of the density operator in the biseparable case. Lines between sets of biseparable states represent the convex hull of biseparable states. The states are represented by circles. The segments joining them are their convex combinations. In the figure are represented convex combination of a state in B-AC with states in GME (black continuous line), in C-AB (green dashed line), and in A-BC (red dotted line). Depending on the weights given to the convex combination, the resulting state can lie in a different subset

When considering the statistical mixture of states of three qubits, it is not straightforward to tell if the state is separable, biseparable, or GME. Figure 1 shows the general classification of three-qubit states. The convex set of fully separable states is represented by the innermost circle, which is surrounded by the three kinds of biseparable states (A-BC,B-AC,C-AB) and finally by the GME states (largest circle). The segment joining two points represents the convex combination of two states. A statistical mixture of states can lie in any of the aforementioned classes. When the state is a statistical mixture of biseparable states, with respect to different partitions, the result can either be fully separable, or biseparable with respect to one of the partitions (green dashed segment in Fig.1), or biseparable with respect to none of the partitions (red dotted segment in Fig.1), thus forming the convex hull of the biseparable states. It is known that all GME states can be obtained by applying SLOCC (stochastic local operations and classical communication) operations [22] either on the GHZ state

or on the W state

To correctly label as entangled or separable the three-qubit states used to construct the dataset for the ML classifier, we will resort to the analytical calculation of several entanglement measures [31]. In the remaining of this section, we are going to introduce these analytical results, and we refer to Appendix B for the description of the states. In general, an entanglement measure \(\mu \) is a real function defined on the set of the states that does not increase on average under the application of a SLOCC transformation [22, 31], for which two states belong to the same class if you can transform one into the other with an invertible local operation, and the application of SLOCC brings a state into a class with lower or equal value of any entanglement measure.

Pure states can be uniquely [22] labeled with the entanglement entropy S, the GME-concurrence \(C_{\text {GME}}\) and the three-tangle \(\tau \). The entanglement entropy \(S_m\) [32] corresponds to the Von-Neumann entropy calculated on the reduced system \(\hat{\rho }_{m}=\text {Tr}_{ABC-m}[\hat{\rho }]\), with \(m=\)A,B,C:

Genuinely multipartite entanglement concurrence [33] is defined as

where \(\mathcal {P}=\{AB,BC,AC\}\) is the set of the possible subsystems. Finally, the three-tangle \(\tau \) [7] accounts for the genuinely multipartite entanglement of the GHZ class. Given the reduced density operator \(\hat{\rho }_{AB}\) of the subsystem of the qubits A and B, we define the matrix \(\tilde{\rho }_{AB} = (\sigma _y\otimes \sigma _y)\hat{\rho }_{AB}(\sigma _y\otimes \sigma _y)\), and we denote as \(\lambda _{AB}^{1},\lambda _{AB}^{2}\) the square root of the first and second eigenvalues of \(\tilde{\rho }_{AB}\hat{\rho }_{AB}\). Then, the three-tangle is

with \(\lambda ^{i}_{AC}\) defined in a similar manner as \(\lambda ^{i}_{AB}\), for \(i=1,2\).

In the case of mixed states, the entanglement measures defined above fail to classify entangled states. One needs to calculate the convex-roof extension (CRE) [34] of an entanglement measure \(\mu \) defined as

where P stands for all the possible decompositions \(\{p_i,\hat{\rho }_i\}\) such that \(\hat{\rho }=\sum _ip_i\hat{\rho }_i\), with \(\sum _ip_i=1\) and \(\hat{\rho }_i\) is a pure state. This is in general difficult to calculate, and numerical solutions are usually very costly. Therefore, we use particular types of mixed states for which the analytic solutions for the CRE of the three-tangle [25, 26, 35] and the CRE of GME-concurrence [10, 23, 24] are available. These are the GHZ-symmetric states, the X-states, and the statistical mixture of GHZ and W states, which are defined in Appendix A, which will be used in the following to construct the three-qubit dataset of the SVM algorithm. Table 1 summarizes how the labels are assigned to these different type of states.

2.2 Support vector machine

In this section, we give a general introduction of the SVM.

Suppose that we have a dataset divided into two classes, composed of m pairs \(\{(\textbf{x}_i,y_i)\}\). The i-th observation \(\textbf{x}_i\in \mathbb {R}^d\) describes the characteristics of the point, while \(y_i=\pm 1\) labels its class. The SVM [13, 16] is a ML classification algorithm based on the assumption that a hyperplane, or more generally a \(d-1\) surface, which separates the two classes in the feature space exists. The hyperplane is defined through a linear decision function \(f(\textbf{x})\), with parameters \(\textbf{w}\in \mathbb {R}^d\), called weights, and \(b\in \mathbb {R}\), called bias, given as

The SVM algorithm aims to find the hyperplane \((\textbf{w},b)\) that maximizes the margin \(1/\Vert \textbf{w}\Vert \) (\(\Vert \cdot \Vert \) being the Euclidean norm) between itself and the closest points of the two classes. Figure 2 shows a two-dimensional dataset made of two classes, the circles and the trangles, that are separated by a hyperplane.

Representation of the separating line between two data classes, the circles and the triangles, in a two-dimensional plane. The two classes are linearly separable by the straight line \(y=wx+b\). The margin, i.e., the distance between the hyperplane and the closest data point of each class, is 1/|w|. The lines \(y-wx-b=\pm 1\) cross the support vectors, here drawn with a filled shape. The slack variables \(\zeta _1\) and \(\zeta _2\) penalize the possible misclassification of the two gray triangle points

Maximizing the margin corresponds to finding

We can assume that the hyperplane defined by the decision function f is not able to perfectly separate the two classes. Thus, we can introduce m slack variables \(\zeta _i\), one for each observation \(\textbf{x}_i\), to account for the constraints of f in Eq. (9) that are not satisfied. Hence, Eq.(10) is refined into

The constants \(\lambda _{y_{i}}\ge 0\) are called regularization parameters. Geometrically, the action of the slack variables \(\zeta _i\) can be seen as a non-smooth deviation of the hyperplane, as shown in Fig.2.

In order to construct a Lagrange function from which we can variationally derive Eq. (11a), we use the so-called Karush–Kuhn–Tucker (KKT) complementary conditions [13]: after introducing dual variables \(\alpha _i\) and \(\lambda _{y_i}\), the constraints can be re-expressed as:

These KKT conditions state that if the variable \(\textbf{x}_i\) lies on the correct side of the hyperplane, with \(y_i(\textbf{w}_i^T\textbf{x}_i+b)>1\), then \(\alpha _i=0\) and \(\zeta _i=0\). On the other hand, if the variable \(\textbf{x}_i\) lies exactly on the margin, with \(y_i(\textbf{w}_i^T\textbf{x}_i+b)=1\), then \(\alpha _i =\lambda _{y_i}\), and \(\zeta _i=0\). Lastly, if the point \(\textbf{x}_i\) lies on the wrong side of the hyperplane, we have \(0<\alpha _i<\lambda _{y_i}\) and \(\zeta _i>0\). In general, a number \(m'<m\) of points \(\textbf{x}_i\) have a corresponding dual variable \(\alpha _i\ne 0\). These points, that lie on the separating hypersurface, are called support vectors.

The KKT conditions allow us to write the Lagrange function of the problem as

where \(\alpha _i,\beta _i\ge 0\) are the Lagrange multipliers introduced for the constraints (11b) and (11c), respectively. The optimality condition is satisfied when the partial derivatives with respect to the primal variables \(\textbf{w},b,\zeta _i\) vanish,

Substituting Eqs. (14a), (14b) and (14c) into Eq. (13) yields the Lagrange function expressed in terms of the multipliers \(\mathbb {\alpha }=(\alpha _1,\dots ,\alpha _m)\)

where K is called kernel matrix, and the component \(K_{ij}\) corresponds to the inner product \(\langle \textbf{x}_i,\textbf{x}_j\rangle \) between the feature vectors.

The matrix K encodes the power of the SVM algorithm, which is able to perform a separation with a higher-degree decision function. In principle, to do so one has to embed the feature vectors into a higher-dimensional space with an embedding function \(\Phi :\mathbb {R}^d\rightarrow \mathbb {R}^D\), with \(D>d\), and find the linear hyperplane that separates the data classes in the embedding space \(\mathbb {R}^D\). As a result, the Lagrange function can be written as in Eq.(15), with \(K_{ij}=\langle \Phi (\textbf{x}_i),\Phi (\textbf{x}_j)\rangle \) being the inner product in the embedding space. The procedure of adopting a specific kernel matrix K in order to move to a higher-dimensional space is known as kernel trick [13].

One common choice is the polynomial kernel of degree n, with \(K(\textbf{x},\textbf{x}')=(\langle \textbf{x},\textbf{x}'\rangle +1)^n\). It corresponds to embedding the d dimensional vectors \(\textbf{x}\) and \(\textbf{x}'\) into a space of dimension [13]

where we can look for a separating \((D-1)\)-surface. The components of \(\Phi (\textbf{x})\) in the D-dimensional space come from the D terms in the multinomial expansion of degree n. The constant 1 plays an important role, as it allows the presence of all the terms up to degree n in the decision function f, while in its absence the decision function has only the terms of degree exactly n. As the value of D is extremely large for real case scenarios, the kernel trick saves us from having to explicitly embed the data in a much larger space and offers a smart way of coping with the embedding process.

The classification of a new data point \(\textbf{x}\) consists simply in calculating the decision function

and the label will be given by the sign of f(x). Note that the sum is made only over the \(m'\) support vectors, as for the other points the Lagrange multipliers are zero.

3 Results and discussion

3.1 SVM-derived entanglement witnesses

In this section, we show how the separating hyperplane provided by the SVM algorithm as a result of the minimization in Eq. (11a) can be translated into a Hermitian operator that we can measure to detect entanglement. We also describe a procedure that can be implemented on a quantum computer in order to measure its mean value. The hyperplane inferred by the SVM has a huge similarity with the concept of entanglement witness. The classification in the SVM is calculated by considering the sign of the decision function f(x). In a similar manner, an entanglement witness operator \(\hat{W}\) is such that if \(\text {Tr}[\hat{W}\hat{\rho }]>0\) then the state \(\hat{\rho }\) is entangled [27], providing a sufficient but not necessary condition for entanglement detection. The convexity and closure of separable states ensure us that we can find such operator [1].

Schematic representation of a classification performed on a convex set. a the separating hyperplane, represented by the dotted line, divides the two classes \(+1\) on the right, and \(-1\) on the left, with a certain amount of true and false positives, \(T_+,F_+\), and true and false negatives \(T_{-},F_{-}\), therefore the precision \(p<1\). b the separating hyperplane has been built to mimic the behavior of an entanglement witness operator \(\hat{W}\). In this case, there are not false positives, and the precision \(p=1\)

Consistently with the choices of Table 1, we assign to the separable states the label \(-1\) and to the entangled states the label \(+1\). In order to evaluate the performance of the classifier, we use the accuracy

where m is the total number of states considered in the dataset. Here \(T_{+}, T_{-}\) are the number of true positives/negatives, respectively, i.e., the number of entangled/separable states correctly classified as entangled/separable. Furthermore, the precision p and the recall r associated with the classification of entangled states are given by:

where \(F_{+}, F_{-}\) represent the number of false positives/negatives, respectively, i.e., the number of separable/entangled states misclassified as entangled/separable. The condition \(p=1\) indicates that we are not misclassifying separable states as entangled. Therefore, the hyperplane \((\textbf{w},b)\) that we find with this condition respects the definition of entanglement witness, see Fig. 3. We can get this condition by fine-tuning the regularization hyperparameters \(\lambda _{+}\) and \(\lambda _{-}\) defined in equation (13). In fact, we assign to each data point the regularization parameter \(\lambda _+\) or \(\lambda _{-}\) depending on whether it belongs to the class of entangled or separable states, respectively. The condition \(\lambda _{+}>\lambda _{-}\) penalizes the misclassification of entangled states. On the other hand, the condition \(\lambda _{-}>\lambda _{+}\) penalizes the misclassification of separable states, favoring thus the classifier to correctly predict \(-1\), leading in turn to a higher precision in classification of entangled states at the cost of a lower recall. In terms of the classification algorithm, the witness operator \(\hat{W}\) is related to a hyperplane with perfect precision of entangled states. When the number of false positive \(F_{+}\) is minimized, we mimic an entanglement witness operator \(\hat{W}\), with the property that \(\text {Tr}[\hat{W}\hat{\rho }]>-b\) if and only if the state is entangled, with b being the bias of the hyperplane, see Eq. (9).

The operation \(\text {Tr}[\hat{W}\hat{\rho }]\) is related to a SVM hyperplane with linear kernel, \(K(\textbf{x}_i,\textbf{x}_j)=\textbf{x}_i^T\textbf{x}_j\). In the following, we will show that a more precise classification of entangled states can be carried out by nonlinear kernels of degree n. The latter correspond to exotic witness operators \(\hat{W}_n\) that work on n copies of the state \(\hat{\rho }\).

With little algebra, one can show that the decision function (17) can be written as:

with the nonlinear witness operator \(\hat{W}_n\) given by

where \(m'\) is the number of support vectors states. Here, \(\mathbbm {1}_2^{(k)}\) indicates the identity matrix in the space of k qubits, with dimension \(2^k\). The operators \(\hat{\omega }_i\) are the density operators of the \(i-th\) support vector state, which can be found, together with the values of \(\alpha _{i,l}\), via a classical training of the SVM classifier.

In this way, the classification of an entangled state has become the evaluation of the mean value of the Hermitian operator \(\hat{W}_n\) on the state \(\hat{\rho }^{\otimes n}\). As far as we know, Eq. (22) is a novel equation that relates a classical SVM hyperplane to a nonlinear witness operator.

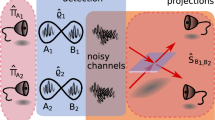

In order to measure the mean value of the Hermitian operator \(\hat{W}_n\) , we can use the procedure described in [36] and shown in Fig. 4, where a controlled unitary operator C-U, with \(U=\exp (-i\hat{W}_n t)\), is applied on a system composed by the n copies of the system \(\hat{\rho }\).

Scheme for measuring the mean value of the operator \(\hat{W}_n\). The control qubit is in the state \(|+\rangle \). A controlled unitary gate with operator \(\exp (-i\hat{W}_nt)\) is applied on the n copies of the system state \(\hat{\rho }\). The measurement is performed on the control qubit after a \(\pi /2\) rotation \(R_x\). Thus, the mean value is obtained as \(\langle \hat{W}_n\rangle =\frac{p_1-p_0}{2t}\), with \(p_0\) and \(p_1\) being the probability that the outcome of the measurement is 0 or 1, respectively

The control qubit is initialized in the state \(|+\rangle \). For small t, we can expand the operator \(U\approx 1 -it\hat{W}_n+\mathcal {O}(t^2)\). Measuring the mean value of the Pauli matrix \(\langle \sigma _y\rangle \) on the control qubit corresponds to measuring \(t\langle \hat{W}_n\rangle \). This can be achieved by transforming the \(\sigma _y\) basis into the computational basis with the operator \(R_x(\pi /2)=\exp (-i\sigma _x\pi /4)\), and measuring the control qubit. The probability of getting as outcome the state \(|0\rangle \) is \(p_0=1/2-t\langle \hat{W}_n\rangle \), whereas the probability of getting the outcome \(|1\rangle \) is \(p_1=1/2+t\langle \hat{W}_n\rangle .\) Calculating \(p_1-p_0\) and dividing by 2t lead to the wanted result. Notice that the operator U is not a local operation, and therefore, the state \(\hat{\rho }\) after the protocol will not in general conserve the level of entanglement. Thus, if the goal of the circuit is to use an entangled state as input of a quantum protocol, we would need \(n+1\) copies of \(\hat{\rho }\).

3.2 Two-qubit system

In this section, we show the results of the SVM classification for a two-qubit system. In order to provide the SVM a suitable dataset, we need to uniformly sample the density matrices. In principle, different measures might be used (see, for example, Ref. [37]), but in order to avoid problems that might emerge with monotonicity and overparametrization [38], we decided to work with the Hilbert–Schmidt (HS) measure [39]. Thus, we generate \(4\times 4\) matrices A with \(\mathbb {C}\)-number elements \(A_{ij}\in \mathcal {N}(0,1)\), \(\mathcal {N}(0,1)\) being the normal distribution with zero mean and unitary variance. The quantum state is obtained from the positive trace-one Hermitian matrix \(\hat{\rho }=A A^\dagger /\text {Tr}[A A^\dagger ]\).

Uniformly generating two-qubit quantum states accordingly to the HS measure leads to an imbalance in the classes, as the ratio between separable and entangled states is approximately 1 to 4 (\(\approx 0.24\)) [40]. We balance our dataset in order to get \(50\%\) of entangled and \(50\%\) of separable states. This inter-class balance allows us to have more control of the hyperparameters. Indeed, in this case, when \(\lambda _-=\lambda _+\) the SVM does not prefer any labeling on a new data point. Hence, we separately generate an equal number of entangled and separable states, labeling them with the PPT criterion [6]. Our dataset consists of \(10^6\) samples for each class, \(98\%\) of which are used in the training set, \(1\%\) are used in the validation set, and \(1\%\) are used in the test set. Each data point lives in the feature space \(\mathbb {R}^{15}\) and is obtained by taking the real and imaginary part of the components of the \(4\times 4\) density matrix, which is Hermitian and trace-one.

Two qubits. Precision p and recall r defined in Eqs. (19) and (20) of the two-qubit entanglement classifier as a function of the regularization parameter \(\lambda _{-}\) (\(\lambda _+=1\)), on a validation set of \(10^4\) samples for each class in the case of polynomial kernel of degree a \(n=2\), b \(n=3\), and c \(n=4\). The vertical dotted line refers to the point \(\lambda _{-}=\lambda _n^*\) for each \(n=2,3,4\), where r is maximum with the condition \(p=1\) satisfied. (d) The accuracy on the validation set for the classifier different degrees \(n=2,3,4\). The empty markers correspond to \(\lambda _-=\lambda _n^*\)

In order to fine-tune the hyperparameters, we look at the validation set. The accuracy (18), the precision and the recall (19),(20) depend on the ratio \(\lambda _{-}/\lambda _+\). Figure 5 shows the obtained precisions and recalls for different degrees n of the kernel, \(n=2\) (a), \(n=3\) (b), \(n=4\) (c), and the resulting accuracies on the validation set, calculated varying the hyperparameters \(\lambda _{\pm }\). Note that using the imbalanced training set, the result would have been analogous, but shifted, and we would have got the same results using a larger value of \(\lambda _+.\) For each degree of the polynomial kernel n, we find with a grid search the value of \(\lambda _{-}/\lambda _+\) s.t. on the validation set \(p=1\) and r is maximum. This point, with \(\lambda _{-}=\lambda _n^*\) and \(\lambda _{+}=1\), is located at the orange vertical line in the plots in Figs. 5a, b and c. Figure 5(d) shows the total accuracy evaluated on the validation set. We see that the classification accuracy increases for higher degree n. Note that the highest accuracy is reached in correspondence of the point \(\lambda _+=\lambda _{-}\), corresponding to a balanced dataset with an equal number of entangled and separable states. In fact, the value \(\lambda _{-}/\lambda _+\) where we reach the highest accuracy changes depending on the population of the two classes in the dataset.

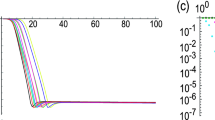

Two qubits. a The accuracy obtained by the n degree polynomial classifier of two-qubit states, with \(n=1,2,\cdots ,8\). The classifier gains accuracy with a higher n. The trend of the curve is the same for the three sets, showing that the classifier is able to generalize. b The accuracy of the classifier on the two classes

Different degrees n of the polynomial kernel lead to different performances of the algorithm. Figure 6a shows the accuracy of the classifier with \(\lambda _{+}=1\) and \(\lambda _{-}=\lambda _n^*\) on training, validation, and test set for \(n=1,2,\cdots ,8\). We see that by increasing the degree n we get a better accuracy in classification on the test set, reaching the value \(a\approx 0.92\) for the polynomial SVM with degree 8, which indicates that the classifier is capable of generalization. From Fig. 6b, we see that accuracy is almost one for separable states, meaning that we selected the hyperparameters such that separable states are almost always correctly classified, while it tends to a value slightly higher than 0.8 for entangled states.

We notice that the SVM is not able to fully recover the PPT criterion, which we know can be obtained by a polynomial function of degree \(n=4\) [29]. We will discuss the reasons why in Appendix B.

3.3 Three-qubit system

In the same spirit as the two-qubit classification, we look for the hyperplane that minimizes the number of misclassified separable states. The goal is to be as close as possible to the border of the convex set of the separable states and to the definition of entanglement witness. However, the three-qubit classification of entangled state has a different inherent nature, as we aim to classify our state into three different classes: the class of fully separable states, the class of biseparable states and the class of GME states. This is achieved by introducing two classifiers. We call SEP-vs-all the classifier that aims at splitting the space into fully separable states and either biseparable or GME states; this classifier defines the operator witness \(\hat{W}_S\). We call GME-vs-all the classifier that aims at splitting the GME states from the set of biseparable and fully separable states; this corresponds to the operator witness \(\hat{W}_E\). Note that the classifiers here defined are able to distinguish only the GME states that belong to the classes that we have used in the dataset, as shown in Table 1. In fact, they are not able to classify states whose classes have not appeared in the training set. This is intrinsic in the nature of ML classifiers, which rely on known data to label new states.

For a three-qubit system, the feature space is composed of 63 real numbers describing the real and imaginary parts of the independent components of the \(8\times 8\) Hermitian and trace-one density matrix. The datasets of the two classifiers are obtained generating \(2\times 10^5\) states, \(90\%\) of which are used in the training set, \(5\%\) are used in the validation set, and \(5\%\) are used in the test set. Pure and mixed fully separable states are easily generated by construction and labeled as \(-1\) for both the datasets of the two classifiers. In the SEP-vs-all classifier, mixed fully separable states are the \(50\%\) of the dataset, whereas they are \(25\%\) of the dataset used for the GME-vs-all classifier.

GME states are labeled as \(+1\) for both SEP-vs-all and GME-vs-all classifiers.

The GME states are labeled according to the three-tangle for generated pure GHZ states [22], defined in Eq. (3), GHZ-symmetric states [35], and statistical mixture of GHZ, W, and \(\bar{W}\) states [25]. In the case of mixed X-state [26], we use the GME-concurrence, Eq. (6). We refer the reader to Appendix B for the definition of these states. Once we have generated these states, a random local unitary transformation was applied on each qubit to increase the number of data and fill the different components of the density operator. Each category of GME states is generated with the same number of samples, and they are \(25\%\) of the SEP-vs-all dataset, and \(50\%\) of the GME-vs-all dataset. The qualitative difference in the datasets of the two classifiers lies in the label of biseparable states, as given in Table 1. For the classifier SEP-vs-all, only pure biseparable states can be considered in the dataset, as a mixture of biseparable state can also be fully separable. These states are generated and labeled as \(+1\). Their category represents the \(25\% \) of this dataset. On the other hand, the dataset of classifier GME-vs-all contains the mixture of biseparable states with respect to the same partition and labeled as \(-1\). This is because the mixture of biseparable states with respect to different partitions can either be biseparable or fully separable, as shown in Fig 1. They are the \(25\%\) of the samples in the GME-vs-all dataset.

Let us examine the accuracy of both classifiers on the different classes of states that we considered.

Figure 7a shows the accuracy on the test set of the classifiers GME-vs-all for different values of the degree n, \(n=2,3,\cdots ,10\). Figure 8a shows the accuracy obtained for the classifier SEP-vs-all on the test set. Figures 7b and 8b show the classification accuracy for each category of entanglement states used in the dataset on the corresponding test set. We notice that GHZ+W states are more difficult to classify for both GME-vs-all and SEP-vs-all classifiers. Instead, GHZ symmetric states are correctly labeled with an accuracy \(\approx 1\) by the GME-vs-all classifier, and with accuracy \(>0.9\) for \(n\ge 3\) by the SEP-vs-all classifier.

Three qubits. The accuracy obtained by the GME-vs-all classifier. a The accuracy on the training, validation and test sets. b The accuracy for different entanglement-type states used in the training set, as a function of the kernel degree n. The results are shown at the fixed ratio \(\lambda _{-}/\lambda _+\) obtained maximizing r on the validation set with the constraint \(p=1\)

Contrary to what happens for the two-qubit classifier, we observe that the GME-vs-all classifier shows overfitting for \(n>4\). This feature is captured by the spread between the accuracies of the training and the validation sets shown in Fig. 7a. The same happens to the SEP-vs-all classifier for \(n>6\).

Three qubits. The accuracy obtained by the SEP-vs-all classifier. a The accuracy on the training, validation and test sets. b The accuracy for different entanglement-type states used in the training set, as a function of the kernel degree n. The results are shown at the fixed ratio \(\lambda _{-}/\lambda _+\) obtained maximizing r on the validation set with the constraint \(p=1\)

4 Conclusions and remarks

In this work, we have investigated the possibility of using a SVM for classification of entangled states, for two-qubit and three-qubit systems.

The SVM algorithm, which is known to be suitable for binary classification of data points belonging to convex sets, learns the best hyperplane that separates the set of separable states from the set of entangled states. This construction has a strong analogy with that of an entanglement witness operator, for which the sign of the mean value calculated over the state is related to the separability of the latter. However, witness operators are usually linear operators. In this paper, we have shown that, exploiting the SVM algorithm and the kernel trick, we can use many copies of the state and construct non-linear witness operators, which show an increasing classification accuracy. We believe that these constructions can help us to gain knowledge about the geometry of the set of entangled states.

In the application of the SVM to the two-qubit system, we have evaluated accuracy, precision, and recall for kernels of degree n up to 8, reaching an increasing accuracy. For three-qubit systems, we have trained two classifiers, named SEP-vs-all, which distinguishes fully separable states to all the others, and GME-vs-all, for detection of GME states, with kernels of degree n up to 10. The SEP-vs-all classifier shows an accuracy on the test set of \(92.5\%\), whereas the GME-vs-all classifier reaches an accuracy of \(98\%\), in the best case corresponding to \(n=4\). Furthermore, we have checked the accuracy among the different types of states that we have used in the dataset. We stress here that the detection of entanglement by the SVM is limited to the classes that belong to the training set, as the SVM is not expected to generalize its results to unseen classes. It is up to the users of the algorithm to feed the SVM with a proper training set that fits their purpose.

Overall, the SVM algorithm has shown a high accuracy in detecting entanglement. We have also discussed how the classifier can be trained to favor higher precision in recognition of entangled states, at the expenses of a lower global accuracy. This feature can be used in quantum computation algorithms, where entanglement plays an important role for quantum advantage. Moreover, if a quantum protocol needs a specific type of entangled state, the SVM algorithm can be trained to recognize it and use it as input.

Although in this paper we obtain specific results for the case of two and three qubits, the SVM protocol provides a good method that can be used for more complicated systems, by training the machine on the specific data of the system. In this respect, it could be interesting to consider a larger number of qubits or even qudits. Indeed, entanglement classification is still an open line of research both for the theoretical and for fundamental aspects; we believe machine learning algorithms can provide a useful tool of analysis.

Data Availability Statement

This manuscript has associated data in a data repository. [Authors’ comment: The data are available upon reasonable request.]

Code availability

The code is available upon reasonable request.

References

R. Horodecki, P. Horodecki, M. Horodecki, K. Horodecki, Quantum entanglement. Rev. Mod. Phys. 81, 865–942 (2009). https://doi.org/10.1103/RevModPhys.81.865

R. Jozsa, N. Linden, On the role of entanglement in quantum-computational speed-up. Proc. R. Soc. London A 459(2036), 2011–2032 (2003). https://doi.org/10.1098/rspa.2002.1097. (Publisher: Royal Society. Accessed 2022-08-02)

W.K. Wootters, W.S. Leng, Quantum entanglement as a quantifiable resource [and discussion]. Phil. Trans R. Soc. A 356(1743), 1717–1731 (1998). (Publisher: The Royal Society. Accessed 2022-08-02)

O. Gühne, G. Tóth, Entanglement detection. Phys. Rep. 474(1), 1–75 (2009). https://doi.org/10.1016/j.physrep.2009.02.004. (Accessed 2022-01-28)

A. Peres, Separability criterion for density matrices. Phys. Rev. Lett. 77, 1413–1415 (1996). https://doi.org/10.1103/PhysRevLett.77.1413

M. Horodecki, P. Horodecki, R. Horodecki, Separability of mixed states: Necessary and sufficient conditions. Phys. Lett. A 223(1), 1–8 https://doi.org/10.1016/S0375-9601(96)00706-2arXiv:quant-ph/9605038. Accessed 2021-12-13

V. Coffman, J. Kundu, W.K. Wootters, Distributed entanglement. Phys. Rev. A 61, 052306 (2000). https://doi.org/10.1103/PhysRevA.61.052306

T.J. Osborne, F. Verstraete, General monogamy inequality for bipartite qubit entanglement. Phys. Rev. Lett. 96, 220503 (2006). https://doi.org/10.1103/PhysRevLett.96.220503

A. Acín, D. Bruß, M. Lewenstein, A. Sanpera, Classification of mixed three-qubit states. Phys. Rev. Lett. 87(4), 040401 (2001). https://doi.org/10.1103/PhysRevLett.87.040401. (Publisher: American Physical Society. Accessed 2023-02-22)

C. Eltschka, J. Siewert, Quantifying entanglement resources. J. Phys. A Math. Theor. 47(42), 424005 https://doi.org/10.1088/1751-8113/47/42/424005 . Accessed 2022-05-29

S. Szalay, Multipartite entanglement measures. Phys. Rev. A 92(4), 042329 https://doi.org/10.1103/PhysRevA.92.042329. Accessed 06-08-2022

S.B. Kotsiantis, I.D. Zaharakis, P.E. Pintelas, machine learning: a review of classification and combining techniques. Artif. Intell. Rev. 26(3), 159–190 https://doi.org/10.1007/s10462-007-9052-3 . Accessed 2022-08-02

B. Schölkopf, A.J. Smola, Learning with Kernels: support vector machines, regularization, optimization, and beyond. Adaptive Computation and Machine Learning. MIT Press

Y.C. Ma, M.H. Yung, Transforming Bell’s inequalities into state classifiers with machine learning. Npj Quantum Inf. 4(1), 1–10 https://doi.org/10.1038/s41534-018-0081-3. Number: 1 Publisher: Nature Publishing Group. Accessed 2022-08-02

C. Harney, S. Pirandola, A. Ferraro, M. Paternostro, Entanglement classification via neural network quantum states. New J. Phys. 22(4), 045001 https://doi.org/10.1088/1367-2630/ab783d. Accessed 2022-08-02

B.E. Boser, I.M. Guyon, V.N. Vapnik, A training algorithm for optimal margin classifiers. In: Proceedings of the fifth annual workshop on computational learning theory. COLT ’92, pp 144–152. Association for Computing Machinery (1992). https://doi.org/10.1145/130385.130401. Accessed 02-08-2022

M.A. Chandra, S.S. Bedi, Survey on SVM and their application in imageclassification. Int. J. Inf. Technol. 13(5), 1–11 (2021). https://doi.org/10.1007/s41870-017-0080-1

A. Hazra, S.K. Mandal, A. Gupta, Study and analysis of breast cancer cell detection using naïve Bayes, SVM and ensemble algorithms. Int. J. Comput. Appl. 145(2), 39–45 (2016). https://doi.org/10.5120/ijca2016910595

V.J. Kadam, S.S. Yadav, S.M. Jadhav, Soft-margin SVM incorporating feature selection using improved elitist GA for arrhythmia classification, in Intelligent Systems Design and Applications. ed. by A. Abraham, A.K. Cherukuri, P. Melin, N. Gandhi (Springer, Berlin, 2020), pp.965–976

Y.D. Cai, X.J. Liu, X.B. Xu, G.P. Zhou, Support vector machines for predicting protein structural class. BMC Bioinf 2(1), 3 (2001). https://doi.org/10.1186/1471-2105-2-3. (Accessed 2022-09-08)

J. Cervantes, F. Garcia-Lamont, L. Rodríguez-Mazahua, A. Lopez, A comprehensive survey on support vector machine classification: applications, challenges and trends. Neurocomputing 408, 189–215 (2020). https://doi.org/10.1016/j.neucom.2019.10.118. (Accessed 2022-09-06)

W. Dür, G. Vidal, J.I. Cirac, Three qubits can be entangled in two inequivalent ways. Phys. Rev. A 62(6), 062314 https://doi.org/10.1103/PhysRevA.62.062314 . Accessed 2022-02-22

C. Eltschka, A. Osterloh, J. Siewert, A. Uhlmann, Three-tangle for mixtures of generalized GHZ and generalized W states. New J. Phys. 10(4), 043014 (2008). https://doi.org/10.1088/1367-2630/10/4/043014

C. Eltschka, J. Siewert, A quantitative witness for Greenberger-Horne-Zeilinger entanglement. Sci. Rep. 2(1), 942 https://doi.org/10.1038/srep00942 . Accessed 2022-05-29

E. Jung, M.R. Hwang, D. Park, J.W. Son, Three-tangle for rank-three mixed states: mixture of Greenberger–Horne–Zeilinger, W, and flipped- W states. Phys. Rev. A 79(2), 024306 (2009). https://doi.org/10.1103/PhysRevA.79.024306. (Accessed 2022-08-01)

S.M.H. Rafsanjani, M. Huber, C.J. Broadbent, J.H. Eberly, Genuinely multipartite concurrence of \(n\)-qubit \(x\) matrices. Phys. Rev. A 86, 062303 (2012). https://doi.org/10.1103/PhysRevA.86.062303

M.A. Nielsen, I.L. Chuang, Quantum Computation and Quantum Information: 10th Anniversary Edition. Cambridge University Press (2010). https://books.google.it/books?id=-s4DEy7o-a0C

T.J.G. Apollaro, C. Sanavio, W.J. Chetcuti, S. Lorenzo, Multipartite entanglement transfer in spin chains. Phys. Lett. A 384(15), 126306 (2020). https://doi.org/10.1016/j.physleta.2020.126306. (Accessed 2022-09-19)

Sanpera, A., Tarrach, R., Vidal, G.: Local description of quantum inseparability. Phys. Rev. A 58(2), 826–830 https://doi.org/10.1103/PhysRevA.58.826. Number: 2 Publisher: American Physical Society. Accessed 2022-08-03

R. Augusiak, M. Demianowicz, P. Horodecki, Universal observable detecting all two-qubit entanglement and determinant-based separability tests 77(3), 030301 (2008). https://doi.org/10.1103/PhysRevA.77.030301. (Accessed 2023-05-06)

M.B. Plenio, S. Virmani, An introduction to entanglement measures. Quantum Inf. Comput. 7(1), 1–51 (2007)

V. Vedral, The role of relative entropy in quantum information theory. Rev. Mod. Phys. 74, 197–234 (2002). https://doi.org/10.1103/RevModPhys.74.197

W.K. Wootters, Entanglement of formation of an arbitrary state of two qubits. Phys. Rev. Lett. 80, 2245–2248 (1998). https://doi.org/10.1103/PhysRevLett.80.2245

A. Uhlmann, Entropy and optimal decompositions of states relative to a maximal commutative subalgebra. Open Syst. Inf. Dyn. 5(3), 209–228. https://doi.org/10.1023/A:1009664331611. Accessed 2022-08-03

R. Lohmayer, A. Osterloh, J. Siewert, A. Uhlmann, Entangled three-qubit states without concurrence and three-tangle. Phys. Rev. Lett. 97, 260502 (2006). https://doi.org/10.1103/PhysRevLett.97.260502

F. Tacchino, A. Chiesa, S. Carretta, D. Gerace, Quantum computers as universal quantum simulators: State-of-the-art and perspectives. Adv. Quantum Technol. 3, 1900052 (2020). https://doi.org/10.1002/qute.201900052

K. Życzkowski, P. Horodecki, A. Sanpera, M. Lewenstein, Volume of the set of separable states 58(2), 883–892 https://doi.org/10.1103/PhysRevA.58.883 . Number: 2. Accessed 2022-01-26

P.B. Slater, A priori probabilities of separable quantum states. J. Phys. A Math. Gen. 32, 5261 (1999). https://doi.org/10.1088/0305-4470/32/28/306

K. Zyczkowski, H.J. Sommers, Induced measures in the space of mixed quantum states. J. Phys. A Math. Theor. 34(35), 7111–7125 (2001). https://doi.org/10.1088/0305-4470/34/35/335

P.B. Slater, Dyson indices and hilbert-schmidt separability functions and probabilities. J. Phys. A Math. Theor. 40(47), 14279–14308 (2007). https://doi.org/10.1088/1751-8113/40/47/017

R.F. Werner, Quantum states with Einstein-Podolsky-Rosen correlations admitting a hidden-variable model. Phys. Rev. A 40, 4277–4281 (1989). https://doi.org/10.1103/PhysRevA.40.4277

S. Imai, N. Wyderka, A. Ketterer, O. Gühne, Bound entanglement from randomized measurements. Phys. Rev. Lett. 126(15), 150501 (2021). https://doi.org/10.1103/PhysRevLett.126.150501. (Publisher: American Physical Society. Accessed 2023-05-17)

Acknowledgements

We thank T. Apollaro, M. Consiglio and D.Vodola for useful discussions.

Funding

Open access funding provided by Alma Mater Studiorum - Università di Bologna within the CRUI-CARE Agreement. This research is funded by the International Foundation Big Data and Artificial Intelligence for Human Development (IFAB) through the project “Quantum Computing for Applications”. E. E. was partially supported by INFN through the project “QUANTUM”, the QuantERA 2020 Project “QuantHEP” and the “National Centre for HPC, Big Data and Quantum Computing”.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Appendices

Appendix A: Three-qubit entangled mixed states

In this appendix, we briefly describe the three-qubit GME states that we generated for the dataset of the SEP-vs-all and GME-vs-all classifiers.

A GHZ symmetric state is characterized by three fundamental properties, i) it is invariant under index permutation of the qubits, ii) it is invariant under simultaneous spin flip of the qubits, and iii) it is invariant under the rotation

This state can be written as a mixture of the two GHZ states, with density operators \(\hat{\rho }_{\text {GHZ}_\pm }\), and of the maximally mixed state with density operator \(\mathbb {1}/8\),

When \(q=0\), we have the so-called Werner state [41], with a GHZ state combined with the maximally mixed state. In Refs. [10, 23, 24], Eltschka et al. gave a complete classification of GHZ symmetric states in terms of the relation between the coefficients p and q. However, we shall note that there exist GHZ symmetric states that are GME, despite having both three-tangle and GME-concurrence null [42].

The X-state owes its name to the shape of the density matrix, which has non-null values on the diagonal and anti-diagonal elements. Thus, the X-state density operator can be written as the sum \(\hat{D}+\hat{A}\), with \(\hat{D}=\text {diag}(a_1,a_2,\dots ,a_n,b_n,\dots ,b_1)\) the \(2n\times 2n\) diagonal matrix, and \(\hat{A}=\text {anti-diag}(z_1,\dots ,z_n,z_n^*,\dots ,z_1^*)\) being an anti-diagonal matrix. The conditions \(Tr[\hat{D}]=1\) and \(\hat{D}\ge \hat{A}\) ensure that the operator \(\hat{D}+\hat{A}\) describes a physical state. The GME-concurrence of the X-state can be analytically calculated [26], and when its value is positive, the X-state belongs to the GME class. Note that the GHZ symmetric states are particular X-states with \(z_i=0\) for \(i= 2,\dots ,n\).

Another class that we have used to train our classifier is the statistical mixture of GHZ, W, and the flipped W state \(\bar{\text {W}} = \frac{1}{\sqrt{3}}(|011\rangle +|101\rangle +|110\rangle )\), that we have labeled as GHZ+W, with density operator

The analytic calculation of the three-tangle measure for those states has been given in [23, 25, 35].

Appendix B: Analysis of the two-qubit classifier

We dedicate this section to the analysis of the classifier for entangled and separable two-qubit states. In this case, it is known that the PPT criterion can be expressed as a degree \(n=4\) equation in the density matrix coefficients [29], which gives an analytical formula to classify all the possible, pure and mixed, two-qubit states. Despite so, the SVM does not provide an accuracy equal to 1 result on either the training, the validation or the test set, because the algorithm looks for the surface that minimizes the distance to support vectors, which in turn are constructed from the data set. Thus, in general, the precision can increase if we use larger data sets but can never reach exactly one.

Also, the precision depends on the distribution of the data set. In order to verify that our algorithm does indeed converge to the correct hypersurface, we analyzed the distribution of the entanglement of formation C [33] of the entangled states belonging to the training set and to the support vectors, as shown in Fig. 9. We notice that increasing the degree of the polynomial kernel, the support vectors of the entangled states class have, on average, a lower level of entanglement of formation. This confirms that, with a higher precision of the classifier, the support vectors are found closer to the boundary of the two classes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sanavio, C., Tignone, E. & Ercolessi, E. Entanglement classification via witness operators generated by support vector machine. Eur. Phys. J. Plus 138, 936 (2023). https://doi.org/10.1140/epjp/s13360-023-04546-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjp/s13360-023-04546-5