Abstract

At the LHC era, the detector systems are operating at the harsh hadronic environment with the unprecedentedly high particle flux. Position-sensitive silicon devices are usually positioned at the innermost regions of the experimental setups and must cope with highly non-uniform radiation fields. At the end of LHC Run 2, fluence in silicon trackers reached 10\(^{15}\) n\(_{\mathrm{eq}}\)/cm\(^2\). Initial simulation studies predict that the maximal fluence for the HL-LHC may be up to two orders of magnitude higher than the one seen in LHC Run 1 and Run 2. In this paper, two general-purpose physics event generators used for simulation of proton–proton collisions for the radiation damage studies at LHC energies: PYTHIA 8.2 and Dpmjet-III are compared. Fluences obtained using these models, with the latest tuning to the LHC data, in detectors situated close to the proton–proton interaction point are determined as well. We also indicate a potential new method for actual fluence estimation using experiment real-time data monitoring system.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and motivation

The Large Hadron Collider (LHC) at CERN was designed to collide bunches of protons with a centre-of-mass energy, \(\sqrt{s}\), up to 14 TeV. To accomplish a rich physics programme, covering high-precision standard model (SM) measurements and searches of new physics, the LHC is able to provide 2808 bunches with more than 10\(^{11}\) protons each and collide them with a frequency of 40 MHz (at the nominal conditions). LHC can also supply ion beams. During the years 2010–2012 (Run 1) and 2015–2018 (Run 2), the proton beams collided with \(\sqrt{s}=6.5-7\) TeV and 13 TeV, respectively. The Run 3 data taking period will start in the year 2022, and the instantaneous luminosity will be doubled approaching 2.2\(\times \)10\(^{34}\) cm\(^{-2}\)s\(^{-1}\). The LHC programme will be continued beyond the year 2035 (during Run 4 called high luminosity LHC, HL-LHC) with the aim to collect 3000 fb\(^{-1}\) of data [1]. Run 4 will be preceded by a significant upgrade of the accelerator together with the substantial change in all main detector trackers due to the radiation damage.

The total cross-section for proton–proton interaction at \(\sqrt{s}=8\) TeV is \(\sigma _{\mathrm{tot}} = (96.07\pm 0.18 \pm 0.31)\) mb [2], out of this 71.5 mb is related to the inelastic processes. Assuming the nominal LHC luminosity to be 10\(^{34}\) cm\(^{-2}\)s\(^{-1}\), in each second of the experiments’ operation, about 96\(\times \)10\(^7\) proton–proton (pp) interactions occur. At the LHC energies, a few hundreds of particles may be produced per one pp bunch-crossing with momenta that range over many orders of magnitude. The planned HL-LHC runs will double this number, making the radiation damage the main concern in any detector system.

Detectors at the LHC experiments are situated around collision points, along the beam line (usually considered as the z axis in the Cartesian coordinate system). The acceptance of ATLAS and CMS tracking detectors covers the pseudorapidity regionFootnote 1\(|\eta |<2.5\) (central part), whereas LHCb is capable to fully reconstruct particles at lower angles with respect to the beam line: 2\(<\eta<\)5 (forward) [3]. The design of both ATLAS pixel inner tracker and CMS tracker for Run 4 assumes the optimal performance covering \(|\eta |<4\) [4, 5].

At the end of LHC Run 2 data taking period, the inner parts of silicon trackers of the LHCb and ATLAS were irradiated with a particle fluence that reached 10\(^{15}\) n\(_{\mathrm{eq}}\)/cm\(^2\) [6]. The increase of the luminosity in Run 3 will cause an increase of the particle fluence as well, reaching annually value close to the fluence produced in both Run 1 and 2. It should be stressed that the current semiconductor technologies cannot operate properly beyond fluences of 10\(^{16}\) n\(_{\mathrm{eq}}\)/cm\(^2\) [7]. The negative impact of the radiation damage effects can be to some extent mitigated by adjusting the operation conditions or replacing the active elements of the detector. It is normal practice, when making such decisions, to take into consideration the results of the simulation of the particle fluence together with the continuous monitoring of the actual state of the detector.

The fluence in the LHC environment is highly non-uniform. It comes from particles emerging from the high-energy pp collisions and the interactions of these particles with the sub-detectors, supports and shielding. The radiation field is composed of charged hadrons and leptons, neutrons and photons. The proportion of the different particle types at a given point of the radiation field depends on the distance and angle with respect to the proton beams and the detector’s material. The only way to estimate such complex radiation filed is through a reliable Monte Carlo (MC) simulation.

Estimation of the particle fluence is necessary both for the monitoring of the current state of a detector and to predict whether all of the components would be able to operate until the end of the planned data taking period. Last but not least, simulation of the fluence is indispensable for the verification of the radiation damage models [7].

In general, the simulation procedure of particle fluence traversing the LHC detectors consists of two components: generation of particles (i.e., the final state system) originating from a proton–proton collision at the LHC energies, and the subsequent simulation of particle transport in the detectors’ material. The former is elaborated in this document.

The analysis of the two event generators, which are most commonly used in radiation damage studies, is done, firstly without any particle selection criteria which could bias the comparison. The models’ parameters are set on values compatible with two main tunes designed for the LHC Run 1 and Run 2 data taking conditions. Distribution of hadron multiplicity in an event and spectrum of kinetic energy are of the main interest in describing potential damage in silicon structure caused by traversing particles. Eventually, a simple and universal detector geometry is designed for fluence estimation as a function of radius and distance from the interaction point along the beamline. This part aims to spot issues that require consideration while planning new high-energy or high-luminosity experiments. If any differences are observed at the generator level, they will be naturally propagated to the particle transport and fluence simulation’s discrepancies in the design of future detectors.

The main innovation of the presented analysis, which motivated this paper, is the inclusion, within the FLUKA processing chain, of other general-purpose event physics generator to be able to compare in a transparent way the fluence based on different models. Although a few attempts were made to perform a similar task, our analysis also aimed at providing a generic and easily repeatable simulation setup using official FLUKA release with the help of the authors of the code [8].

One of the most important results of this analysis is a proposal of a brand new way of evaluating the actual particle fluence using a technique based on the detector’s real-time hit and track reconstruction that can be commissioned for Run 3 and Run 4 data taking periods and beyond. Such analysis’s main idea relies on comparing the flux of charged particles reconstructed in the detector with the simulated oneFootnote 2. This will depend critically on correct estimation of the component describing the neutral primary particles produced in a pp collision, mainly neutronsFootnote 3, to evaluate the total flux. The experiment-specific discrepancies between the number of reconstructed and simulated particles must be carefully studied and quantified. Such differences may come from many sources, including the different physics models used in the event generators and the concrete tracking system’s particular construction features. The simulated data will most probably need to be corrected to match the observed hit distributions by evaluating the appropriate re-weighting function. Simultaneously, the neutral component can be extracted from the generated events and corrected using the same re-weighting function applied for the charged one. Also, the detector-specific acceptance can be added by studying differences between the generated and simulated events. This kind of analysis may be pursed even further, provided that a particular detector is equipped with a high-performance particle identification (PID) system. In that case, the charged fluence component can be divided into a proton, kaon, and pion fluences, respectively, increasing the total fluence estimation precision. Described procedure’s quality can be cross-checked independently by the complementary leakage current analysis using the known dependence of the radiation-induced leakage current on the total particle fluence. The proposed new online fluence estimation will be vitally important for monitoring the high-precision silicon trackers exposed to extremely heavy irradiation during the Run 3 and 4 data taking period.

1.1 Tunes of Monte Carlo event generators

Two generators of the high-energy pp collision used in particle fluence simulation are compared: PYTHIA 8.2 [9] and Dpmjet-III [10]. They are two general-purpose generators commonly used to study the impact of radiation on detectors in nuclear, medical and high-energy physics. In addition, Herwig 3.0 [11] is often used. However, it had not been fully developed to study radiation effects on detectors, and therefore, it is not considered in this analysis.

The history of PYTHIA and Dpmjet lasts more than three decades, and the employed physical models were a few times revised during this period. The optimisation of the model parameters’ settings to reproduce existing experimental data is referred to as tune. The first significant tune was developed according to the Tevatron discoveries and was later revised for better agreement with LHC pp data at \(\sqrt{s}=7-8\) TeV [12].

The next set of tunes (PYTHIA 8 A2, Monash) was applied to improve the data-MC agreement for pp collisions at \(\sqrt{s}\) = 13 TeV at the beginning of the LHC Run 2. Better accordance was achieved but discrepancies were still reported, especially in charged-particle multiplicity distributions for low-\(p_T\) particles. Albeit each consecutive tune improved the data-MC correspondence and major dissimilarities diminished when particles with higher transverse momentum were considered for the analysis [13, 14], none of the generator described the whole phase space very well. In addition, the change in the generator settings resulted in the overestimation of the fiducial inelastic cross-section with respect to the ATLAS measurements and therefore was further updated with the PYTHIA 8 A3 tune [15].

The event generators are mostly tuned on high-momentum particles produced at the central rapidity. Particles produced at a low polar angle with respect to the beamline and particles with low momentum or transverse momentum, which are crucial for radiation damage, are reconstructed with lower efficiency.

2 Physics models for hadronic interactions

The proton is not a fundamental particle but contains quarks and gluons (called partons) interacting via the strong and Coulomb force. The type of interaction and the composition of the final state depend on the proton energy. At the lowest collision energy (\(\sqrt{s}\) below 1.5 GeV), protons are considered as charged point-like objects that scatter elastically without any energy loss. As the energy increases, the inelastic processes prevail, driven by the interaction at the partonic level. With the further rise of available energy, the projectile parton’s resolution reveals the proton’s finer and finer structure as in deep inelastic scattering (DIS). At the LHC energies, the total proton–proton cross-section is dominated by the inelastic proton–proton scattering, and the contribution of the elastic part is about 25%.

Kinematics of pp collision is described by the four-momentum transfer \(Q^2\), that is regarded as a scale at which the parton probes proton, and Mandelstam variable t that describes the momentum transfer between partons. In the scattering experiment, \(Q^2=-t\) and, due to the uncertainty principle, the resolution power of the parton is proportional to \(1/\sqrt{t}\). With the increase in the centre-of-mass energy \(\sqrt{s}\) and the four-momentum transfer \(Q^2\), the finer structure of protons is resolved, and more gluons and pairs of sea quarks take part in the scattering. Each of these partons carries a fraction of the protons’ momentum, denoted as x.

At high collision energy, gluon density grows much faster than the quark density; therefore, LHC is essentially a gluon–gluon collider and provides access to the kinematic region never reached before: high \(Q^2\) and low x.

The interaction between protons involves a wide spectrum of processes highly dependent on the fraction of the proton’s momenta carried by the partons x and the momentum transfer t between partons. Due to the high energy and high density of partons, multiparton scattering at LHC occurs very often. The process is usually regarded as hard if characterised by large momentum component perpendicular to the beam direction \(p_T\). This is equivalent to the parton interactions with a large t, since \(t \simeq p_T^2\). Hard processes are therefore reconstructed in the central part of the detectors. In contrast, most of the interactions with low momentum transfer (so-called soft) result in the final states produced with a larger longitudinal component of momentum in the more forward direction. Each hard interaction between partons from two protons is usually accompanied by the soft interaction (so-called Underlying Event) which comprises multiple-parton interactions, interactions between beam remnants, radiation of gluons in the initial and final state. All mentioned processes give rise to the particles with a low \(p_T\). Therefore, one can assume that pp collisions, even at high energy, are dominated by soft processes.

2.1 Hard and soft regime in proton–proton cross-section

The interactions among hadrons consist of a mix of processes driven by interactions at the partonic level. The hard (or semi-hard) interactions involve parton–parton interactions with large momentum transfer that is sufficient to resolve the structure of a hadron. Processes with \(p_T>\) 2 GeV/c can be described by perturbative QCD (pQCD). Hard scattering results in jets in the final state and particles with high transverse momentum. Soft interactions are characterised by an energy scale of the order of the hadron size (1 fm), which corresponds to the momentum transfer \(|t|\propto 1/R^2 \approx \) 1 (GeV/c)\(^2\). At such large distances, perturbative QCD cannot be applied. In such events, particles in the final state have large longitudinal, but small transverse momentum. Because of the dominant contribution of soft processes, the description of pp interactions involves both perturbative and non-perturbative terms, the latter being the subject of modelling that introduces considerable uncertainties.

The overall physics model of a hadronic collision comprises three components that depend on the interaction’s energy scale: the model of the initial state partons density, the process of interaction (creation of parton showers) and hadronisation (formation of the stable hadrons). From these factors, only hard scattering is derived from the first principles and does not require any tune; the other processes’ description is based on empirical models.

Proton is a composite, and extended object, whose dimension parallel to the beamline is Lorentz-contracted while accelerated to the LHC energies. Therefore, collided protons look like flat disks travelling in a direction perpendicular to the disk surfaces in which, due to the time dilation, quarks and gluons do not interact among themselves (partons are “frozen”). Therefore, there is no interference between the initial state interaction nor the final state interaction between partons in the same hadron. We can assume that the interaction occurs only between partons from different protons and can be considered probing of the proton’s internal structure with the resolution depending on the momentum transfer between scattered partons. The probability of a hard scattering with a large momentum transfer depends on the chance of finding two partons, one from each proton, within the short distance. At the LHC energy, this probability is quite high and, occasionally, even more than one hard scattering may occur in addition to soft interactions. This phenomenon is referred to as double parton-scattering (DPS) and is considered in the current physics models for the particle production at the LHC.

Thanks to the lack of interference during the scattering of partons from different hadrons, the description of the inelastic proton–proton collision is based on the concept of factorisation of the cross-section [16]. It is assumed that the cross-section can be computed as a convolution of perturbative hard scattering cross-section of point-like partons and universal factors called parton density functions (PDFs) that are assumed to be long-distance non-perturbative quantities and are conveniently written in terms of kinematic variables x and \(Q^2\):

where \(\sigma ^k_{ij}\) is the QCD hard-scattering cross-section for the kth process between parton i and j, with a momentum transfer t. Functions \(f_i^{1,2}(x_{1,2},Q^2)\) describe the probability for finding a parton i with a fraction x of the proton momentum (\(0<x \le 1\)) when the proton is probed at the scale of \(Q^2\). The main problem in the total hadron cross-section is that it can be neither calculated not measured for the full range of x and \(Q^2\). One need to know the distribution of partons in a hadron and the partonic cross-section, both for very small and maximal momentum transfers.

Therefore, the realisation of the factorisation idea in event generators depends on the physics model and requires fixing the momentum scale for hard and soft interaction. Since the hard component is described by pQCD, the main concern is how to deal with the low-\(p_T\) component.

Phenomenological models for soft-hadron interaction used in general-purpose event generator like PYTHIA, SHERPA or HERWIG take as a start the perturbative parton–parton interaction with the models of parton showers, hadronisation and multiparton interaction (MPI). This approach enables tuning of the parameters for the better agreement with data and, thanks to the well-known cross-section in the hard regime, provides the predictive power for the LHC physics. It, however, does not describe well the very soft region.

Another approach is undertaken by the PHOJET, EPOS or Dpmjet generators. These tools use Regge theory when partons’ interaction comes from the colour chains that hadronise and produce particles. MPIs are introduced by the exchange of additional objects, like hard pomerons. Higher multiplicities in Run 2 LHC data enforced a general revision of this approach [17].

2.2 Proton-proton cross-section

The total pp cross-section \(\sigma _{\mathrm{tot}}\) includes elastic \(\sigma _{\mathrm{el}}\) and inelastic \(\sigma _{\mathrm{in}}\) processes. The latter contains several components: diffractive \(\sigma _{\mathrm{dif}}\) (single, double diffractive and central production) and non-diffractive \(\sigma _{\mathrm{ND}}\) processes:

In an elastic collision, protons do not change the initial energy and move almost along the beamline after the interaction. Elastic interactions are driven by the long-ranged Coulomb interaction and, much stronger, short-ranged strong interaction. The former is well-known and predictable within the QED; the latter is hard to describe since the perturbative QCD is not applicable in the low-momentum-transfer region, where most of the elastic hadron interactions occur.

Inelastic processes are driven by parton–parton interaction described in Eq. (1) and contain both soft (like diffractive) and hard components. \(\sigma _{\mathrm{tot}}\) is not calculable in the framework of the perturbative QCD. Hence, the Regge theory, which considers exchange of set of particles that belong to the Regge trajectory as a cause of interaction, is used for the prediction of the \(\sigma _{\mathrm{tot}}\) high energy behaviour.

The soft contribution is parametrised by the soft pomeron exchange, which leads to the peripheral production of partons and, eventually, hadrons. In hard interactions, mainly among valence quarks, all intermediate partons are characterised by large virtualities and all scattering amplitudes can be calculated using the pQCD. Sea quarks and gluons share a small fraction of proton’s energy and interact via non-perturbative small momentum transfer reactions that appear as long cascades of partons. Created sea quark-antiquark pairs are connected by soft pomeron and undergo both soft and hard interactions [18]. In pQCD, the pomeron is regarded as a series of gluon ladders.

In single diffractive (SD) processes, one of the protons survives the collision, while the other breaks up and produces some particles in a limited pseudorapidity region. The unbroken proton moves at a very small angle with respect to the proton beamline. The SD events are characterised by a large rapidity gap between the outgoing proton and the produced system. In double diffractive (DD) events, both protons dissociate into the system of particles separated by the rapidity gaps. As a special case of a diffractive reaction, central production (CD) may be considered. In these processes, both protons survive the collision losing an only small amount of energy. A few particles (most often two) are produced centrally, with a rapidity gap from each of the protons. CD process is much less frequent than SD and DD but can provide valuable information on the proton’s structure and dynamics. In non-diffractive events, many particles are produced but, contrary to the diffractive events, no gap in rapidity occurs.

The relative contribution of different terms is established based on physical models with experimental inputs. In general, it can be evaluated that at the LHC energies the elastic cross-section amounts to about 25% of the total cross-section. The non-diffractive part is about 60% and diffractive interactions about 15%. These values vary depending on the applied model and \(\sqrt{s}\).

One can recognise the type of process while looking at the final states of the collision. Two protons with the energy that is equal to the beam energy outgoing almost parallel to the beam are a clear signal of an elastic interaction. In the SD events, one proton emerges from interaction at a very small polar angle, and several particles (mostly pions) are produced with large rapidity gap with respect to the proton. DD processes provide a few dozen particles from protons’ dissociation separated by the rapidity gap, and most of them cannot be detected. On average, 200 hadrons are produced in inelastic ND processes and this fraction predominantly is reconstructed in the detector’s acceptance.

The particles’ energy spectrum and the distribution of the event multiplicities in a different type of proton–proton interactions at \(\sqrt{s}\) = 14 TeV are shown in Fig. 1.

The various components of the total pp cross-section have also a different pseudorapidity distribution, see Fig. 2. These plots show particles produced in half a million pp collisions at \(\sqrt{s}\) = 14 TeV, generated with PYTHIA 8.2 generator.

Distribution of the pseudorapidity of the particles participating in: non-diffractive inelastic interactions (which is shown on the left) and single diffraction (on the right). More than 70% of produced hadrons are pions, 40% of particles are produced in the central region and 25% (35% of total number of protons and 20% of pions) at \(|\eta |>5\) (along the beam line)

It can be noted that most of the particles reconstructed in pp interactions at the LHC energies originate from the inelastic ND processes or are the products of protons dissociation in diffractive events. Since the central collisions are more likely to contain high-\(p_T\) partons, most of the particles with higher transverse momentum are produced in the central region of the detectors.

2.3 PYTHIA 8.2

PYTHIA is a Monte Carlo event generator of hadron–hadron collision based on perturbative QCD which describe properly parton scattering above some \(p_T\) value (\(p_T \gtrsim 2\) GeV/c), extended to the low-\(p_T\) region with models for the soft part. It generates a wide spectrum of processes, starting from both soft- and hard-QCD processes to Higgs production and exotic particle production and covers all components of the total hadronic cross-section. This generator keeps on evolving, and the currently used version PYTHIA 8.2 includes tunes A2 and A3 originating from the latest LHC results from Run 1 and 2 data taking period, respectively [9].

The total proton–proton cross-section is described in frame of Donnachie–Landshoff parametrisation as [19]:

All topologies represented in Eq. (2) are included with the amplitudes within Regge theory with one pomeron (P) and one Reggeon (R) term. The parameters A, B, P and R are obtained from the Regge fits to data and give: \(A=21.70\), \(P=0.0808\), \(B=56.08\), \(R=0.4525\) [9]. The prediction of the \(\sigma _{\mathrm{tot}}\) behaviour as the \(\sqrt{s}\) increases is revised as soon as new experimental results became available. One of the major corrections had to be applied after the observation from the first LHC runs at higher \(\sqrt{s}\), that the parton–parton cross-section became larger than the total proton–proton cross-section. It seems that at high energy each of the incoming protons is viewed as a beam of partons and more than one interaction among them occurred in one pp collision. MPI phenomenon was expected, and therefore, based on experimental evidences, generators had to be corrected for the better agreement.

The elastic cross-section is related to the total cross-section via the optical theorem [20] and is parametrised as an exponentially decreasing function of the Mandelstam invariant t. In PYTHIA, the inelastic component is obtained by the subtraction of the elastic cross-section from the total cross-section: \(\sigma _{\mathrm{in}}^{pp}(s)=\sigma _{\mathrm{tot}}^{pp}(s)-\sigma _{\mathrm{el}}^{pp}(s)\). The split of the inelastic cross-section into SD, DD and CD components is based on the specific generator parametrisations which are available for possible tunes. Eventually, the non-diffractive part of hadronic interaction (often called in experiment minimum bias events) is evaluated by integrating the diffractive components and subtracting them from \(\sigma _{\mathrm{in}}^{pp}\).

Recent results from TOTEM, ALPHA and LHCb updated information on \(\sigma _{\mathrm{tot}}^{pp}(s)\) and \(\sigma _{\mathrm{el}}^{pp}(s)\) at LHC energies \(\sqrt{s}\) = 7–8 TeV and 13 TeV [21]. It resulted in more reliable parametrisation of Eq. (3) and revision of the previous version of the generators. The models for diffractive interactions were populated with hard-pomeron exchange with the additional possibility of MPIs and initial and final state radiation as well [21].

The limitation of Eq. (1) comes from the substantial contribution of the soft, non-perturbative component of the pp interaction. The hard scattering cross-section can be considered above some \(p_{\mathrm{Tmin}}\) value only:

where \(p_T\) is the transverse momentum of the outgoing parton in the parton–parton centre-of-mass frame.

The scale \(Q_{\mathrm{min}}^2=p_{\mathrm{Tmin}}^2\) can be regarded as a point that connects the soft and hard components of the generator. The choice of \(p_{\mathrm{Tmin}}\) is of a crucial meaning—the lower it is chosen the higher average number of MPIs and particles produced in the final state.

The perturbative parton–parton scattering cross-section given by Eq. (1) and 4, dominated by the t-channel gluon exchange, is divergent at low-momentum transfers as \(1/t^2\sim 1/p_T^4\), The divergence in Eq. (4) may be regularised by the introduction of a threshold parameter \(p_{T0}\) as: \(1/p_T^4 \rightarrow 1/(p_T^2+p_{T0}^2)^2\). In such a way, the perturbative limit is reached as \(p_T\rightarrow 0\) with a smooth fall at a scale \(p_{T0}\) [21]. This approach is supported by the fact that at low \(Q^2\) (low \(p_T\)) the resolution of the probing projectile is poor and the partons inside the proton are screened by one another. Therefore, as \(p_T \rightarrow 0\) the cross-section takes small but nonzero value that depends on the matter distribution inside the hadron [22].

A dependency on the proton–proton centre-of-mass energy should be introduced in addition since at higher energies partons are probed at smaller x, where the parton density increases and the distance of colour screening decreases: \(p_{T0}=p_{T0}^{\mathrm{ref}}(\sqrt{s}/\sqrt{s}_0)^\epsilon \). The parameter \(\sqrt{s}_0\) is given at a reference energy and \(p_{T0}^{\mathrm{ref}}\) is \(p_{T0}\) at \(\sqrt{s}_0\). It means that at given \(\sqrt{s}\) the number of MPIs depends on the \(p_{T0}\); smaller values of \(p_{T0}\) result in more MPIs because of the increase of the MPI cross-section and higher value of the average particle multiplicity. In that way, the parameter \(p_{T0}\) is scaled with respect to reference value \(p_{T0}^{\mathrm{ref}}\) that is tuned to the experimental results at given \(\sqrt{s}_0\). The introduction of the \(p_{T0}\) in PYTHIA generator allowed to include the soft scattering regions into hard scattering regime with the price of a special parameter tuning.

The strong couple constant \(\alpha _s\) became also divergent at low \(p_T\) and had to be regulated by the cut-off parameter \(p_{T0}\) as well. It resulted in change of the scale of \(\alpha _s\) since it had to be determined in the scale of \(\alpha _s(p_T^2+p_{T0}^2)\) instead of \(\alpha _s(p_{T}^2)\).

Parton distribution functions (PDFs) recorded in the LHAPDF libraries [23] are available in PYTHIA 8.2. Initially, PDFs included a leading-order contribution only but the recent tunes provided by ATLAS and CMS included next-to-leading-order (NLO) for the better description of LHC data [24].

To summarise, the particle production in proton–proton collisions generated by PYTHIA 8.2 depends on a number of parameters. The most significant are: \(p_{T0}^{\mathrm{ref}}\), \(\epsilon \), \(\alpha _s\). The parameter \(p_{T0}\) separates the perturbative from the non-perturbative regions and depends on the centre-of-mass energy \(\sqrt{s}\). Therefore, the reference value \(p_{T0}^{\mathrm{ref}}\) at chosen reference value \(\sqrt{s_0}\) is set with a power-like dependency on \(\sqrt{s}\) with parameter \(\epsilon \).

The comparison of the hadron (pions, protons, kaons, neutrons) multiplicity generated with different settings of \(p_{T0}\) and \(\epsilon \) is shown in Fig. 3. The values of parameters represent the tunes of PYTHIA 8 provided by the LHC experiments after Run 1 and Run 2 [21]. Parton distribution was taken form LHPDF:CT09MCS distribution [25]. The value \(\alpha _s\) was set to \(\alpha _s\) = 0.130 at \(\sqrt{s}_0\) = 7 TeV according to the experimental tunes. Choice of such values might seem questionable since PYTHIA 8 has plenty of other parameters which are correlated and have to be determined simultaneously by comparison with data [15]. Still, this analysis aims to check whether one can expect essential discrepancies in event generators used to estimate particle fluence. Hence, parameters that govern the event multiplicity tend to be the most important ones.

Events from proton–proton collisions at \(\sqrt{s}\) = 14 TeV were generated with different PYTHIA 8.2 settings. Three pseudorapidity regions, relevant for experiments, were defined: \(|\eta |>\)5—particles are produced very close to the beamline, beyond the acceptance of any experiment, \(2.5<|\eta |<5\) —in the forward direction, within the LHCb acceptance and \(|\eta |<2.5\)—in the central detectors.

Smaller values of \(p_{T0}\) result in a higher number of particles produced in high-multiplicity events. The power \(\epsilon \) has less influence on the multiplicity distributions, albeit higher value increases the \(p_{T0}\) cut-off, thus reducing the number of particles from a collision.

In one proton–proton collision at \(\sqrt{s}\) = 14 TeV about 100 hadrons (charged pions, kaons, protons and neutrons) are produced. The mean multiplicity varies between 81 and 105 for the \(p_{T0}^{\mathrm{ref}}\) = 2.0–2.5 GeV/c. The difference in settings of the \(\epsilon \) parameter in the range \(\epsilon \) = 0.2–0.3 (for the same \(p_{T0}^{\mathrm{ref}}\)) is at the level of 5%.

The much higher multiplicities (above 110) were obtained with \(p_{T0}^{\mathrm{ref}}\) = 1.9 GeV/c and \(\epsilon \) = 0.2. This is displayed for better understanding of the tendency: if the cut-off of the hard scale was set as a lower value, the number of produced particles turned out to increase substantially due to the higher contribution from MPI. The first estimation based on predictions before the LHC era assumed the \(p_{T0}^{\mathrm{ref}}\) to be slightly above 1.2 GeV/c and provided on average more than 150 hadrons in one inelastic proton–proton interaction at \(\sqrt{s}\) = 14 TeV. The results from LHC Run 1 and the first part of Run 2 shifted the parameter towards higher values and simulated events with lower multiplicities.

The proper setting of the generator parameters involves much more sophisticated methods for data-MC comparison and is beyond this document’s scope. The procedure of PYTHIA 8 optimisation can be studied, for example, in [15, 24].

2.4 DPMJET-III

The concept of the Dpmjet event generator is based on two-component dual parton model (DPM) which consists of soft hadronic processes, described by the exchange of pomerons within the Regge theory [20], and hard processes described by perturbative parton scattering [26]. Both non-perturbative and perturbative QCDs describe the hadronic final states combined in one common framework and separated by the parameter \(p_T^{\mathrm{cutoff}}\). Dpmjet inherited the model for the proton–proton interaction from the PHOJET generator [10] what means that each individual scattering is simulated with PHOJET.

The topologically complicated diagrams describe the scattering of protons in DPM with multiple exchanges of pomerons in the t-channel. The dominant contribution includes elementary soft interaction between protons split into coloured systems of valence quark and diquark. Two chains are stretched between quark and diquark from different protons forming a colour-neutral system that eventually moves apart and hadronise. As protons’ energy increases, the diagrams with the topological expansion of the chains between sea partons give rise to multiparton scattering. In hadron–hadron interactions, several pomerons can be exchanged in parallel, what stands for MPIs. This concept comprises an exchange of a single, double, triple or even loop of pomerons, both hard and soft. Any experimentally known process, like single or double diffraction, can be attributed to one of the types of exchanged pomerons or combinations.

Differential elastic proton–proton cross-section is evaluated as a sum of scattering amplitudes expanded as a series of partial waves with different angular momentums. The total cross-section is related to the elastic cross-section via optical theorem; therefore, the inelastic cross-section \(\sigma _{\mathrm{in}}\) in Dpmjet-III is an internal element of the model and includes diffractive processes without any external tunes. The model can be updated as soon as one can obtain the appropriate measurements of \(\sigma _{\mathrm{tot}}\) or \(\sigma _{\mathrm{el}}\).

The Dpmjet-III is the latest version embedded in the FLUKA package [8, 27]. The revision of the program used in this study, bundled with FLUKA and labelled by authors as Dpmjet-III 2016+, was revised and updated to face up with the LHC data at \(\sqrt{s}\) = 7 TeV and differs significantly from the standalone, not updated, version of the program [28]. The main difference lies in a new approach to the perturbative scale given by \(p_T^{\mathrm{cutoff}}\) and its dependency on the \(\sqrt{s}\), what effectively increased number of MPIs and removed the problem of the deficit of high multiplicity events. Pomeron acquired its own partonic structure invoking that way multiple parton interactions. The updated Dpmjet-III generator, which is associated with FLUKA, is called with a modification that enables multiple parton–parton interactions. In addition, particle density functions from CT14LO set were chosen for the revised version PHOJET/Dpmjet-III. However, the model’s principle has been not changed—it includes one soft and hard pomerons together with an “effective” Reggeon. Therefore, the same model is used for soft and hard components, with the binding parameter tuned to data. In this model, all parameters are fixed and cannot be changed individually. This differentiates Dpmjet-III from PYTHIA, where a vast of parameters can be changed according to the experiments’ preferences and different purposes of the analysis. FLUKA with the embedded Dpmjet-III is currently the most commonly used program for the simulation of radiation environment in the LHC [7].

3 Comparison of generators for particle production

Both generators, Dpmjet-III and PYTHIA, use factorisation theorem, which allows hadronic cross-sections to be expressed in terms of parton-level cross-sections convoluted with hadron partonic densities. Dpmjet-III originally contained a description of the soft interaction only, and the hard QCD processes were included at the later stage. PYTHIA, on the contrary, was intentionally dedicated to model the high energy collisions with high transverse momentum transfers, and the size of the soft component was tuned to follow the experimental results. Both generators share the method of hadronisation but with different sets of PDFs from the LHAPDF library [23].

Although physics models employed in the PYTHIA 8.2 and Dpmjet-III generators differ in the approach to the soft and hard components of the total cross-section, both are tuned to the latest experimental data and should not provide substantially different results. But regardless the total pp cross-section is the same, the contribution of diffractive events may be different, leading to different angular distributions. Different approach to MPIs would manifest in different multiplicity and energy distribution.

Radiation damage in the bulk of silicon sensors depends on the number of particles produced in the collision, the angle with respect to the proton beamline and the energy spectrum, especially in the low part, of particles traversing the detector. Therefore, in this study, the comparison of event multiplicities, particles transverse momenta, angular distribution and energy spectra is provided.

3.1 Multiplicity distribution and energy spectra

Depending on the event generator’s release and parameters, the number of particles created in one proton–proton collision may differ significantly. Protons, neutrons, charged pions and kaons, which are regarded as hadrons in this document, are of the crucial meaning for the radiation damage in the bulk of silicon.

The average multiplicity of hadrons produced in both generators is shown in Table 1. This table also contains the composition of hadron flux given with respect to the number of hadrons \(N_h\) produced in one interaction. Elastic proton–proton collisions are excluded from this comparison.

The average number of hadrons produced in one proton–proton collision is slightly higher (5% for \(p_{T0}^{\mathrm{ref}}\) = 2.3 GeV/c) in the case of PYTHIA 8.2 with respect to Dpmjet-III. This effect reflects the origin of generators—the family of PYTHIA generators were created to describe the high-multiplicity high-p\(_T\) events with the extension to the soft processes. Dpmjet-III produces a higher number of pions than PYTHIA 8.2, which is understandable in soft processes, whereas the production of heavier baryons requires more momentum transfer and occurs less often.

Distributions of the event multiplicity for one of PYTHIA settings (\(p_{T0}^{\mathrm{ref}}\) = 2.3 GeV/c, \(\epsilon \) = 0.2) and Dpmjet-III are shown in Fig. 4. Distributions are displayed in three previously defined regions of pseudorapidity: \(|\eta |>5\), \(2.5<|\eta |<5\) and in \(|\eta |<2.5\).

Distribution of multiplicity, i.e. number of hadrons (pions, protons, neutrons, kaons) produced in one proton–proton collision in PYTHIA 8.2 with \(p_{T0}^{\mathrm{ref}}\) = 2.3 GeV/c and Dpmjet-III. Multiplicity is calculated in three pseudorapidity regions: \(|\eta |>\)5, 2.5\(<|\eta |<\)5, and \(|\eta |<\)2.5, respectively

Dpmjet-III generates more low-multiplicity events than PYTHIA 8.2 and very few events with more than 400 hadrons per interaction. The majority of particles are produced in the central region because partons in proton collisions may either propagate as beam remnants moving in the forward direction or populate the central regions with a high number of low-\(p_T\) particles.

The energy spectra plotted separately for each type of particles are shown in Fig. 5. Both generators produce a similar number of pions with comparable spectra shapes. Apparently, the number of protons with the highest energies (from diffractive events) produced by Dpmjet-III slightly exceeds the PYTHIA 8.2 case. It results in a lower number of particles produced by Dpmjet-III in the central region.

Distribution of pseudorapidity for all particles with a subset of particles with the energy above 100 GeV is shown in Fig. 6Footnote 4. The majority of particles are produced in the central and forward region, but protons carry the highest energies in the beam pipe region, i.e. in the not instrumented region. These particles come from the diffractive low-multiplicity events and very rarely can be detected in any LHC silicon tracker. Mean energy of particles is about 250 MeV, see Figs. 6 and 7.

Left: distribution of the pseudorapidity of particles produced in PYTHIA 8.2 and Dpmjet-III generators. Particles with energy greater than 100 GeV have pseudorapidity \(|\eta |>4\); therefore, they are beyond the acceptance of the LHC tracking detectors. Distributions are scaled to one proton–proton collision. Right: dependency of the kinetic energy on pseudorapidity (Dpmjet-III). Since the highest multiplicities are observed in the central part, this region is populated with particles with the lowest energies

3.2 Transverse momentum distribution

Distribution of the particles’ transverse momentum \(p_T\) shows agreement between both generators with the small excess of Dpmjet-III events with higher \(p_T\) which originate from particles with high energy, see the left panel of Fig. 8. More distinctive difference is visible in the distribution of transverse momenta \(p_T\) as a function of pseudorapidity \(\eta \) (right plot in Fig. 8). Dpmjet-III generates relatively more low-\(p_T\) particles at higher pseudorapidity, i.e. in the very forward region. In consequence, one observes a drop in production of such particles in the central region where the majority of PYTHIA 8.2 particles are generated.

The correlation of the mean momentum of the produced particles with the corresponding event multiplicity \(N_h\) is shown in Fig. 9, where the comparison of mean \(p_T\) of events generated by Dpmjet-III and PYTHIA 8.2 is presented. It is noticeable, especially on the projection of these distributions in Fig. 10, that PYTHIA 8.2 events contain more events with higher \(\langle p_T \rangle \).

3.3 Minimum-bias events

Minimum-bias data comprise of the events selected with the minimal experimental impact, i.e. reconstructed within the detector acceptance with minimal requirements by the triggers. This class of events could be considered as an experimental realisation of non-diffractive inelastic interactions and is usually used for data-MC comparison. Although each LHC experiment selects minimum-bias events with different criteria, we would like to find the common part that would explain the possible difference in the fluence simulation. In this analysis, the same experimental selection criteria are applied to compare generators rather than experiments.

We applied the following cuts to select minimum-bias sample: at least one particle in the event must be within detector acceptance \(|\eta |<5\), the kinetic energy must be lower than 100 GeV and the transverse momentum \(p_T\) greater than 250 MeV/c.

The spectrum of kinetic energy and pseudorapidity distribution, as shown in Fig. 11, indicates that minimum-bias cuts have more severe effects in case of the particles produced by Dpmjet-III generator. In the case of PYTHIA 8.2, almost 90% of particles passed these cuts, whereas, in the case of Dpmjet-III, this is the only 70%. After applying the cuts, the maximum of \(\eta \) distribution in case of events generated by PYTHIA 8.2 is higher by approximately 20% with respect to Dpmjet-III, whereas the difference for all generated particles, before the minimum-bias selection criteria, is only 4% (compare Fig. 6).

A more significant rejection factor of particles produced by Dpmjet-III reflects the softer structure of the \(\langle p_T \rangle \) distribution. The most substantial effect pertains to pions in non-diffractive and protons from diffractive events.

The distribution of \(\langle p_T \rangle \) and the dependency of the minimum-bias events multiplicity \(N_h\) on the \(\langle p_T \rangle \), defined with above criteria, are depicted in Fig. 12. The comparison illustrates that the PYTHIA 8.2 generates minimum-bias events that are characterised by the harder structure.

The comparison of selected features of particles produced by the two event generators such as energy, momentum, transverse momentum, and pseudorapidity has been made. The most interesting result shows about 10% higher event multiplicity prediction of PYTHIA 8.2. There are no significant differences in the energy spectrum, but events generated by PYTHIA 8.2 have higher mean transverse momentum and populate more the central region of pseudorapidity. Very generic experimental criteria imposed on generated events show their influence on pseudorapidity and transverse momentum distributions. For both generators, particles produced at the very forward region are not accepted by the minimum-bias cuts, but, also, the number of Dpmjet-III particles is further reduced, mainly from the central region. This result shows the potential impact on the calculation of fluence because the minimum-bias criteria applied at the generator level might reduce the number of reconstructable particles in case of Dpmjet-III. This can also be one reason why the ATLAS experiment observes an excess of fluence simulated by PYTHIA 8.2 and GEANT4 in comparison with fluence determined by leakage current evolution in time. In contrast, in simulation-based on Dpmjet-III fluence agrees with measurement [7].

4 Fluence simulation in the LHC environment

The impact of different physics models used for the generation of LHC events may influence the distributions of particle fluence predicted for the detectors, especially at small distances from the interaction point. The impact of secondary particles created in nuclear reactions with the material present within the detector acceptance or stray radiation becomes more important at higher distances from the IP.

For this study, the simulation tool FLUKA is used [8, 27]. This is a software platform that includes event generator, a package for geometry description and finally, the particle transport code to simulate interaction with matter. The Dpmjet-III generator is embedded in FLUKA and is used as a default generator for proton–proton collisions. It can be replaced with other generators, like PYTHIA 8.2, by modifying a specialised interface routine.

The FLUKA package provides a set of tools for the visualisation and calculation of the variety of dosimetry parameters, like neutron equivalent and particle fluence, total ionising dose, deposited energy and more. Fluence is defined as the number of particles incident on a sphere of given cross-sectional area perpendicular to the direction of each particle. Fluence can also be expressed equivalently, as the sum of track lengths of the particles’ trajectories in a unit of volume [29].

The damage occurring to the device is usually normalised to the fluence of neutrons with the kinetic energy of 1 MeV, which would result in the deposition of the same non-ionising energy causing equivalent damage to the material. Thus, in general, the fluence depends on particle multiplicity in an event, energy and polar angle of their tracks with respect to the beamline (z-axis). Further from the IP, the secondary radiation in the material is an additional component to the fluence. The 1 MeV equivalent neutron fluence \(\phi _{\mathrm{eq}}\) is calculated internally in the FLUKA program and considers the energy spectrum of the damaging particles and the standard tables with the damage functions [30].

4.1 Geometry of a typical LHC experiment

The physics program of any LHC experiment requires accurate information on the position of production and decay vertices and very efficient track reconstruction. For better precision, the first measured point on track should be as close as possible to the interaction point. This is necessary for the lifetime measurements and to resolve multiple primary vertices that are created in collision with more than one proton–proton interaction (so-called pile-up events). Therefore, highly granulated silicon detectors are usually situated in the very close proximity to the IP.

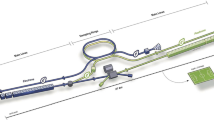

A typical LHC silicon tracker, in a general-purpose detector, comprises the cylindrical barrels with silicon sensors placed parallel to the beamline (along the z axis). For the analysis presented here, we created a hypothetical detector with five of such layers with 300-\(\upmu \)m-thick silicon sensors, see Fig. 13. The radii of the barrels are: 6 cm, 8 cm, 10 cm, 12 cm and 14 cm, respectively. The collisions of protons at the \(\sqrt{s}\) = 14 TeV are provided by the Dpmjet-III and PYTHIA 8.2 generators. In the silicon trackers positioned further downstream with respect to the interaction point and away from the beam pipe, one needs to consider also secondary particles produced in the material of detectors and shielding, but at such small radii, the dominant component of fluence comes from hadrons directly produced in the pp collisions. The particle transport and track reconstruction are performed within FLUKA package. Two-dimensional distribution of pion and proton fluence obtained for this geometry is reported in Fig. 13. Dependency of pions and protons fluence on the distance from IP, for the increasing radius of the detector, is shown in Fig. 14. Fluence is calculated for the amount of data that correspond to 1 fb\(^{-1}\) of luminosity assuming the same value for the inelastic cross-section for both generators, \(\sigma _{pp}\) = 80 mb.

Two-dimensional distribution of pion (left) and proton (right) fluence. Detector consists of five 300-\(\upmu \)m-thick silicon cylindrical barrels of radius 6 cm, 8 cm. 10 cm, 12 cm, and 14 cm, respectively. Simulation is based on a sample with 10\(^5\) events from pp interactions at \(\sqrt{s}\) = 14 TeV generated by Dpmjet-III and reconstructed with FLUKA program. IP is at the \((x, y, z)=(0, 0, 0)\) cm

Pion (left) and proton (right) fluence as a function of the distance from the IP. Events are simulated by Dpmjet-III generator, and tracks are reconstructed in FLUKA. Plots show the fluence at five radii in five layers of cylindrical barrels (geometry from Fig. 13). Values are scaled to 1 fb\(^{-1}\) of luminosity (\(\sigma _{pp}\) = 80 mb)

It is evident that in any detector geometry, the highest fluence is expected at small radial distances from the IP because of the highest density of tracks. However, the z-dependency has more complex behaviour. It is observed that the particle fluence has a minimum at \(z=0\) cm since few particles are produced perpendicular to the proton beam direction. This is especially visible in case of protons, which are more often produced at lower angles, whereas pions are more abundant at higher angles. At the high-z tail the distribution of fluence saturates, see Fig. 14. One can understand it as a purely geometrical effect: when particle traverses consecutive layers at different radii, the track lengths measured in equal detector thickness are the same. The volume element in which tracks’ length is measured, increases with the radius, so the tracks’ length density decreases for higher radius. But when fluence is evaluated further from the IP in z-direction, this effect is compensated by the higher density of particles produced at lower polar angles. Therefore, the particle fluence, calculated as the track-length density, is approximately constant at detectors further from the IP than about \(z>\) 40 cm.

The fluence of pions, protons, neutrons and kaons is usually expressed in the standard neutron equivalence units (\(\phi _{\mathrm{eq}}\)), considering the number of particles, their energy and polar angle. Particle fluence distributions, for respective particle types, are weighted with the energy-dependent displacement damaged functions D(E) and combined [30]. The damage function accounts for both the cross-section for displacing silicon atoms and the energy released in creating displacements. Most of the particles produced in the collision have energy below 3 GeV. Damage functions are experimentally determined curves that weight the damage caused by a different type of particles with respect to a neutron of 1 MeV kinetic energy [30]. In the case of particles with kinetic energies above 1 GeV, this function is constant but for lower energies is higher and depends strongly on energy and varies significantly between different types of particles. Therefore, low energy particles contribute the most significant to the neutron equivalence fluence.

The z-dependency of \(\phi _{\mathrm{eq}}\) for layers of detectors with different radii is presented in Fig. 15. Particles traversing the detector in the central region have lower energies than those at very low polar angles (compare Figs. 6 and 15). Therefore, particles produced at the highest angles, although they constitute less than 25% of the total amount of particles, contribute the most significant to \(\phi _{\mathrm{eq}}\). The neutron equivalence fluence peaks at the \(z=0\) cm (contrary to the particle fluence) and saturates slower at higher distances than distributions of particles fluence. The decrease of \(\phi _{\mathrm{eq}}\) along the z direction is due to particles with higher energy, which are more often produced at very low polar angles and contribute to \(\phi _{\mathrm{eq}}\) with the lower value of the damage function D(E).

4.2 Comparison of particle fluence

Dependency of the neutron equivalent fluence \(\phi _{\mathrm{eq}}\) on the radius and the distance from the IP, simulated with PYTHIA 8.2 and Dpmjet-III, is shown in Fig. 16 (left). At the radius \(R=6\) cm, fluence reaches the value \(\phi _{\mathrm{eq}}\) = 1.7\(\times \)10\(^{12}\) n\(_{\mathrm{eq}}\)/cm\(^2\) for 1 fb\(^{-1}\) of data, whereas the detectors at \(z= 100\) cm are 30% less irradiated. This ratio drops to 16% in case of stations at \(R=12\) cm and decreases as radius increases. Fluence, averaged over the radius between 0.5 cm and 14 cm, is presented in the right panel of Fig. 16. The neutron equivalence fluence distribution is almost uniform for radius greater than 12 cm, but for trackers installed closer to the beamline, the stronger z-dependency of the fluence is visible.

Comparison of Dpmjet-III and PYTHIA 8.2 simulation of neutron equivalence fluence as a function of z (distance from IP along the beamline). Fluence is calculated at radius 6 cm (solid line) and 12 cm (dashed line) (left). Distribution of fluence averaged over from radii 0.5 cm to 14 cm (right). Values are scaled to 1 fb\(^{-1}\) of luminosity (\(\sigma _{pp}\) = 80 mb)

Comparing the Dpmjet-III and PYTHIA 8.2 simulations, one can see agreement in detectors very close to the IP but further from it along the z-axis, small excess (8% at \(R=6\) cm) of PYTHIA 8.2 fluence is noticeable. This can be explained while comparing the number and energy of pions produced in each generator. Although PYTHIA 8.2 generates up to 10% (depending on the tunes) more particles than Dpmjet-III in one pp collision, for the latter the fraction of generated pions with respect to all hadrons is higher. Therefore, the number of pions expected for the amount of data that corresponds to 1 fb\(^{-1}\) of integrated luminosity in case of PYTHIA 8.2 is almost 10% lower than in Dpmjet-III. In the low-z regions (high polar angle \(\theta \)), the highest contribution to \(\phi _{\mathrm{eq}}\) comes from pions; therefore, Dpmjet-III shows small excess of \(\phi _{\mathrm{eq}}\) at \(z=0\) cm in comparison with PYTHIA 8.2. In the case of protons, which populates small polar angles, PYTHIA 8.2 generates about 40% more particles than Dpmjet-III, which gives higher fluence at higher z.

The comparison of particle fluence for all types of hadrons simulated with the two generators is shown in Fig. 17. It is visible that fluence of protons, neutrons, and kaons diverges significantly among generators, but in case of pions, the difference is much smaller. The contribution of pions in the neutron equivalence fluence is the most substantial; therefore, a smaller difference between generators is caused mainly by the smaller difference in the pion component. In the more distant places, protons and neutrons contribute in larger amount and differences are more significant.

Comparison of neutron equivalence fluence simulated by Dpmjet-III and PYTHIA 8.2 at various radius R and z-positions is summarised in Table 2.

The statistical uncertainties in case of Monte Carlo samples are negligible (at the level of 1%). The uncertainty of PYTHIA 8.2 generation connected with parameter settings is 10–12%, which accounts for the change in the generator tunes according to the LHC data taking periods. The most significant contribution to systematic uncertainty comes from the damage functions parametrisation used for the neutron equivalence calculation. According to the latest recommendations, it may reach as much as 30% [7]. In the presented comparison, this effect is negligible since the same damage functions were applied for both generators.

5 Summary

The current and future high-energy experiments require precise reconstruction of tracks and production and decay vertices in events that comprise hundreds of particles. Silicon trackers are usually situated in the harshest radiation environment and under the influence of the mixed radiation field. It has been proved that the currently used silicon structures should operate without compromising the quality of the physics data up to \(\phi _{\mathrm{eq}}=10^{16}\) n\(_{\mathrm{eq}}\)/cm\(^2\) [7]. The fluence in the proximity to the IP, predicted for Run 3 and 4 at the LHC, may reach this limit and will be orders of magnitude higher in the foreseen future experiments like HL-LHC [1] and FCC (future circular collider) [31, 32]. Fluence cannot be measured directly and must be simulated instead. In this paper, we performed, for the first time, a detailed comparison of the most popular tools for the generation of proton–proton events at LHC energies and transport codes used for the simulation of track reconstruction. Also, we focused not only on the total neutron equivalent fluence but analysed respective fluences coming from different particle species since the damaging effects strongly depend on the type of impinging particles.

Extensive studies of the most commonly used generators: PYTHIA 8.2 and Dpmjet-III showed that the former produces about 10% more hadrons in each proton–proton collision. This effect originates from a different physics model used to describe proton–proton interactions. PYTHIA generator uses pQCD for the parton–parton interactions, whilst Dpmjet-III model originates from soft-hadronic interactions dominated by hard pomeron exchange and multiple parton scattering for high-\(p_T\) processes. PYTHIA models are tuned to the LHC data, predominantly obtained from the central detectors [22]. Dpmjet-III was revised after the LHC Run 1 to correct the problem of deficit of the high-multiplicity events [8]. There are no significant differences in the distributions of pseudorapidity, energy, and transverse momentum in the central regions, but Dpmjet-III tends to produce more particles with higher energy in the very forward direction.

The fluence of particles in the inner part of detectors (close to the interaction point and to the beam line) consists almost entirely of the primary particles produced in proton–proton interaction. Since pions constitute about 80% of the total number of hadrons, the distribution of \(\phi _{\mathrm{eq}}\) is the most sensitive to the discrepancy in pions’ production. Dpmjet-III generates fewer particles per event, but with a higher fraction of pions than PYTHIA 8.2; hence, both generators show a reasonable agreement in the distribution of \(\phi _{\mathrm{eq}}\) for the regions close to the IP. A small excess in \(\phi _{\mathrm{eq}}\) in the case of Dpmjet-III is observed as \(z \simeq 0\). The influence of protons and neutron grows up for regions at high-z tails. Therefore, the difference in fluence simulated by PYTHIA 8.2 in a given z-position amounts to 7% over the Dpmjet-III. The z-dependency of the \(\phi _{\mathrm{eq}}\) becomes almost uniform for the more distant radial regions of the detector.

This analysis also showed the significant impact of PYTHIA 8.2 generator parameters settings on the multiplicity distributions and the effect of minimum-bias selection criteria on the number of particles that are further transported through the detector material to perform track reconstruction. Since Dpmjet-III generates more particles in the forward direction, a larger fraction of them is rejected by the selection cuts. This observation is important if \(\phi _{\mathrm{eq}}\) is simulated by methods that depend on the tracks reconstructed by standard algorithms since only tracks reconstructed with good quality and within the detector acceptance are further processed. It should also be considered while comparing the simulation among LHC experiments due to differences in the definition of minimum-bias events. Therefore, based on the performed analysis here, we advocate a new approach for radiation damage studies. At least two models should be used, and detailed analysis of the respective fluences coming from different hadron species should be considered. A careful study of hadron multiplicity as a function of their pseudorapidity and energy spectrum is of the essence. The overall fluence estimation can also be enhanced using partially data-driven approach using the online hit and track reconstruction monitoring to estimate the actual charged component of the produced particle flux. Next, based on the respective models, the neutral component can be added to estimate the total particle fluence.

The sensors’ leakage current increases proportionally to \(\phi _{\mathrm{eq}}\); therefore, this measurement can be regarded as a direct method of comparison between simulated fluence with the independent estimation based on the measurement of the leakage current, while sensors are exposed to radiation. However, this measurement also depends on the model used to describe microscopic changes in the silicon structure (e.g. Hamburg model) and damage functions that were determined for low-energy hadrons and includes about 30% of uncertainty [33]. Such a study is currently performed in all LHC experiments, see, for example, [6, 34], and will be of the most importance as far as projects for the future high-energy and high-luminosity collides are concerned. The comparison of physics models used for the fluence simulation is the first step that may explain observed discrepancies.

The analysis presented in this paper revealed a major weakness of the fluence estimation technique based on the leakage current measurement that relies strongly on the MC models. As shown in the text, the correct prediction of the total fluence is critically affected by the modelling of the charged and neutral hadron energy spectra and spatial distributions. Various analyses performed by both ATLAS and CMS related to their very forward tracker detectors revealed large discrepancies between the predicted and measured quantities. One can track down the problem with reliable predictions of currently used physics event generators tuned to reproduce the events observed in the central part of the LHC general-purpose detectors. To mitigate this problem, we propose a new technique based on real-time hit and track monitoring. This new technique would allow for a very fast estimation of the fluence produced by the charged hadrons. High hit- and track-reconstruction efficiency paired with an excellent particle identification system would even allow for evaluating the fluence produced by the respective hadron species. That also gives a possibility for cross-checking the quality of the MC model using the data. One would still need to use the MC model to get information regarding the neutral component to calculate the total fluence. However, the dependency on the MC model is quite different from that needed in the leakage current-based measurements. Thus, one should consider this new method as a complementary one and potentially much stronger since it allows at the same time to perform validation of the used MC model. Since the new analysis requires a dedicated calibration data stream, we intend to implement the analysis for LHC Run 3 and Run 4 data taking periods.

Notes

Pseudorapidity is defined as: \(\eta = -\mathrm{ln}(\mathrm{tg} ~\theta /2)\) and reflects the distribution of the particle’s polar angle with respect to the beam axis.

Note that by the simulation procedure we mean the full detector simulation—including particle tracking through detector material and emulation of the respective detectors’ response. We distinguish them from the generated events that constitute directly the output of a given physics generator code.

In our analysis, we primarily focus on the detectors close to the interaction point and beamline. Thus, we ignore the neutral particle flux from the secondary sources, such as reflection from far-detector construction structures. For instance, such secondary neutron flux may be very important for calorimeters.

Tracks reconstructed with good quality usually have momentum below 100 GeV/c. Therefore, particles with energy above 100 GeV may not be reconstructed by standard tracking algorithms but still contribute to the fluence and interact with the tracking detectors.

References

G. Apollinari, O. Bruening, T. Nakamoto, L. Rossi, High Luminosity Large Hadron Collider HL-LHC, CERN Yellow Report CERN-2015-005, pp. 1–19

The ATLAS Collaboration, Measurement of the total cross section from elastic scattering in pp collisions at \(\sqrt{s}\)=8 TeV with the ATLAS detector. Phys. Lett. B 761, 158 (2016)

LHCb Collaboration The LHCb Detector at the LHC, JINST 3, S08005 (2008)

ATLAS Experiment, ATLAS Inner Tracker Pixel Detector Technical Design Report ATL-TDR-030, LHCC-2017-021

CMS Collaboration, The Phase-2 Upgrade of the CMS Tracker Technical Design Report CERN-LHCC-2017-009, CMS-TDR-014

K. Akiba et al., (LHCb VELO group) radiation damage effects and operation of the LHCb vertex locator IEEE trans. Nucl. Sci. 65, 1127–1132 (2018)

The RD50 Collaboration - Radiation hard semiconductor devices for very high luminosity colliders, http://rd50.web.cern.ch/rd50/

T.T. Bohlen, F. Cerutti, M.P.W. Chin, A. Fasso, A. Ferrari, P.G. Ortega, A. Mairani, P.R. Sala, G. Smirnov, V. Vlachoudis, The FLUKA code: developments and challenges for high energy and medical applications. Nucl. Data Sheets 120, 211–214 (2014)

T. Sjostrand, An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159–177 (2015)

S. Roesler et al., The Monte Carlo event generator DPMJET-III, hep-ph/0012252 SLAC-PUB-8740

J. Bellm, S. Gieseke, D. Grellscheid et al., Herwig 7.0/Herwig++ 3.0 release note. Eur. Phys. J. C 76, 196 (2016)

LHCb Collaboration, Measurement of charged particle multiplicities and densities in pp collisions at \(\sqrt{s}\)=7 tTeV in the forward region, Eur. Phys. J. C 74, 2888 (2014)

ATLAS Collaboration, Charged-particle distributions at low transverse momentum in \(\sqrt{s}\)=13 TeV pp interactions measured with the ATLAS detector at the LHC Eur. Phys. J. C 76 502 (2016)

ATLAS Collaboration, Charged-particle distributions in pp interactions measured with the ATLAS detector at the LHC Phys. Lett. B 758 67–88 (2016)

ATLAS Collaboration, The Pythia 8 A3 tune description of ATLAS minimum bias and inelastic measurements incorporating the Donnachie-Landshoff diffractive model ATL-PHYS-PUB-2016-017

J.C. Collins, D.E. Soper, G.F. Sterman, Factorization of hard processes in QCD. Adv. Ser. Direct. High Energy Phys. 5, 1–91 (1988)

A. Buckley et al., General-purpose event generators for LHC physics. Phys. Rep. 504, 145–233 (2011)

H.J. Drescher, M. Hladik, S. Ostapchenko, T. Pierog, K. Werner, Parton-based Gribov-Regge theory. Phys. Rep. 350, 93–289 (2001)

A. Donnachie, P. Landshoff, Total cross sections. Phys. Lett. B 296, 227–232 (1992)

P.D.B. Collins, An Introduction to Regge Theory and High-Energy Physics, Cambridge Monographs on Mathematical Physics (Cambridge University Press, Cambridge, 1977)

P. Bartalini et al., Multi-parton interactions at the LHC advanced series on directions in high energy physics, 29, (2018)

A. Moraes, C. Buttar, I. Dawson, Prediction for minimum bias and the underlying event at LHC energies. Eur. Phys. J. C 50, 435 (2007)

J.R. Andersen et al., Les Houches 2013: Physics at TeV Colliders, Standard Model Working Group Report arXiv:1405.1067 [hep-ph]

M. Katzy, QCD Monte-Carlo model tunes for the LHC. Prog. Part. Nucl. Phys. 73, 141–187 (2013)

Tomas Kasemets, Torbjörn Sjöstrand, A comparison of new MC-adapted parton densities. Eur. Phys. J. C 69, 19–29 (2010)

A. Capella et al., Dual parton model. Phys. Rep. 236, 225–329 (1994)

A. Ferrari, P.R. Sala, A. Fasso, and J. Ranft, FLUKA: a multi-particle transport code CERN-2005-10 (2005), INFN/TC05/11, SLAC-R-773

A. Fedynitch, R. Engel, Revision of the high energy hadronic interaction models PHOJET/DPMJET-III. 14th International Conference on Nuclear Reaction Mechanisms, Italy, (2015), CERN-Proceedings-2015-001

ICRU Report No. 85, Fundamental Quantities and Units for Ionizing Radiation (Revised) Journal of the ICRU 11 No 1 (2011), Oxford University Press

A. Vasilescu, G. Lindstroem, Displacement damage in silicon, on-line compilation

A. Abada, M. Abbrescia, S.S. AbdusSalam et al., Future circular collider conceptual design report, vol. 1: FCC physics opportunities. Eur. Phys. J. C 79, 474 (2019)

M. Benedikt, F. Zimmermann, The physics and technology of the future circular collider. Nat. Rev. Phys. 1, 238–240 (2019)

M. Moll, Radiation Damage in Silicon Particle Detectors, PhD Thesis, Universitat Hamburg 1999, DESY-THESIS-1999-040

The ATLAS collaboration, Measurement of Radiation Damage through Leakage Current Monitoring of the ATLAS Pixel Detector, ATL-INDET-PUB-2019-001

Acknowledgements

This work was supported by the National Research Centre, Poland (NCN), Grant No. UMO-2016/21/B/ST2/01083 and MEiN DIR/WK/2017/2020/04-1. The datasets generated and analysed during the current study are available in the https://gitlab.cern.ch/mucha/agh-generators4rd repository.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Oblakowska-Mucha, A., Szumlak, T. Comparison of selected general-purpose event generators for particle fluence simulation in LHC silicon trackers. Eur. Phys. J. Plus 136, 1036 (2021). https://doi.org/10.1140/epjp/s13360-021-02012-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjp/s13360-021-02012-8