Abstract

We investigate the robustness with respect to random stimuli of a dynamical system with a plastic self-organising vector field, previously proposed as a conceptual model of a cognitive system and inspired by the self-organised plasticity of the brain. This novel type of model consists of an ordinary differential equation subject to a time-dependent “sensory” input. The time-evolving solution of this equation is the vector field of a second ordinary differential equation, which governs the observed behaviour of the system (in the brain, neural firings). It is shown that the individual solutions of both differential equations depend continuously on the input signals over finite time intervals. In addition, under suitable uniformity assumptions, it is shown that the non-autonomous pullback attractor and the forward omega limit set of this two-tier system depend upper semi-continuously on the input signal. The analysis holds for both deterministic and noisy input signals, in the latter case in a pathwise sense.



1 Introduction

Noise is ubiquitous in real systems. Models of real systems, including living ones, often come in the form of ordinary differential equations explicitly and deterministically incorporating random time-dependent terms. For practical purposes, it is important to evaluate the robustness of various devices to random perturbations, such as the stability against noise of the frequency of an electronic clock [3]. This is often formulated in terms of the continuous dependence of solutions, and the upper semi-continuous dependence of attractors, on the input signal or a parameter.

However, it is well known that when the system parameters are near a critical state, noise can lead to dramatic changes in the observed behaviour, called noise-induced transitions by Horsthemke & Lefever [14]. Noise-induced phenomena have been observed in many models of real systems, such as a climate model [1]. Perhaps the best-known example, however, is an excitable biological neuron, described by various dynamical models such as [11]. Such a neuron produces a high-amplitude spike in the transmembrane voltage when the applied signal exceeds a certain relatively small threshold, and remains quiescent otherwise [25].

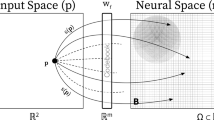

We consider the robustness to noise of a simplified version of a dynamical system proposed by Janson & Marsden [17, 18] as a conceptual phenomenological model of an intelligent system. This simplified model, which has been explicated and analysed mathematically by Janson & Kloeden [16], takes the form of a system of ordinary differential equations (ODEs)

\[ \frac{dx}{dt}=a(x,t), \tag{1} \]

\[ \frac{\partial a(z,t)}{\partial t}=c\left( a(z,t),z,\eta (t),t\right) , \tag{2} \]

where x(t) and a(z, t) take values in \({\mathbb {R}}^d\). In addition, c is a deterministic vector function taking values in \({\mathbb {R}}^d\), and \(\eta (t) \in {\mathbb {R}}^m\), with \(m \le d\), is the sensory stimulus or training data. Note that the solution a(z, t) of (2) depends on \(z \in {\mathbb {R}}^d\) as a fixed parameter in the vector field c. However, when a is substituted into the ODE (1), z becomes the state variable x.
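To make the two-tier structure concrete, here is a minimal numerical sketch. It is not the authors' method. It assumes d = m = 1 and drops the explicit time dependence of c. It also uses a hypothetical hidden field c(a, z, y) = −(a + z) + y, which relaxes a(z, t) towards −z + y. The field a(·, t) is stored on a fixed z-grid with linear interpolation, and both tiers are integrated with the explicit Euler method. The point is the coupling: at every step, (2) updates the whole field a(·, t) for all z, while (1) only reads that field at the current state x.

```python
import numpy as np


def c(a, z, y):
    """Hypothetical hidden vector field: relaxes a(z, t) towards -z + y."""
    return -(a + z) + y


def simulate(eta, x0, t0=0.0, t1=20.0, dt=0.01, z=None):
    """Explicit-Euler sketch of the two-tier system (1)-(2) with d = m = 1.

    The field a(., t) lives on the grid z. Between grid points it is linearly
    interpolated (np.interp holds the end values outside the grid).
    """
    if z is None:
        z = np.linspace(-3.0, 3.0, 61)
    a = -z.copy()  # initial field a_0(z) = -z, which satisfies <a_0(z), z> <= -1 for |z| >= 1
    x = float(x0)
    for k in range(int(round((t1 - t0) / dt))):
        t = t0 + k * dt
        # Tier 1, ODE (1): the state x is driven by the current field a(., t) evaluated at z = x.
        x_next = x + dt * float(np.interp(x, z, a))
        # Tier 2, ODE (2): every grid value a(z, t) evolves, with z acting as a fixed parameter.
        a = a + dt * c(a, z, eta(t))
        x = x_next
    return x, z, a


# With the constant stimulus eta = 0.5, the field tends to a(z) = -z + 0.5, so x tends to 0.5.
x_final, z_grid, a_final = simulate(lambda t: 0.5, x0=2.0)
```

Replacing the stimulus with, say, `lambda t: 0.5 * np.sin(t)` gives a field that keeps adapting to the input. The grid range and step size are illustrative choices only.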

We can interpret (1)–(2) as a system of ODEs with an unconventional structure. The existence and uniqueness of solutions, as well as their continuity in initial data, were established in [16] under typical assumptions. In addition, under a dissipativity condition on the vector field c of equation (2), it was shown that the vector field a of equation (1) also satisfies a dissipativity condition and hence that equation (1) has a pullback attractor and a forward omega limit set.

The above dynamical system was inspired by a unique feature of the brain: its structural plasticity, including that of inter-neuron connections [6] and of the excitability thresholds of individual neurons [28]. Importantly, this plasticity is self-organised, i.e. the respective parameters evolve spontaneously through internal mechanisms rather than being set from outside. Self-organisation of behaviour has traditionally been captured by dynamical systems [12], either autonomous or non-autonomous. However, the brain has two (albeit interconnected) levels of self-organisation, namely of the neuron variables and of the structural parameters, and both have an established link to the brain’s cognitive functions. This gave rise to the hypothesis in [17] that a general cognitive dynamical system, whether biological or artificial, should consist of two tiers. Applied to the brain, this hypothesis suggests that the first tier (1) should describe the potentially observable dynamics x of all neurons. These dynamics are governed by a vector field a that arises from the time-varying brain architecture and hence evolves with time. The second tier (2) should describe the self-organised evolution of a, governed by the non-observable (hidden) vector field c under the influence of sensory stimuli \(\eta (t)\).

The brain operates in the presence of various sources of noise [10], and it is generally important to assess the robustness of cognitive processes to random forces. Here, we analyse how sensitive the system (1)–(2) is to changes in the stimuli \(\eta (t)\). For a noisy input signal \(\eta (t,\omega )\), the system (1)–(2) becomes a system of random ODEs (RODEs), which are interpreted pathwise as ODEs, see [13]. Hence, the results established in [16] also apply pathwise in this case.
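The pathwise interpretation means that once a sample path ω is fixed, the RODE is an ordinary ODE. The sketch below illustrates this under stated assumptions, and it is not the model (1)–(2) itself. It uses a hypothetical bounded noisy stimulus η(t, ω) = sin W_t(ω), where W is a Brownian path sampled on a grid and the random seed plays the role of ω. It then solves the illustrative scalar equation dx/dt = −x + η(t, ω) with the explicit Euler method.

```python
import numpy as np


def noise_path(seed, t_grid):
    """Hypothetical bounded noisy stimulus eta(t, omega) = sin(W_t(omega)).

    W is a Brownian sample path on t_grid; the seed plays the role of omega.
    """
    rng = np.random.default_rng(seed)
    dW = np.sqrt(np.diff(t_grid)) * rng.standard_normal(len(t_grid) - 1)
    W = np.concatenate(([0.0], np.cumsum(dW)))
    return np.sin(W)


def solve_pathwise(eta, t_grid, x0):
    """Explicit Euler for dx/dt = -x + eta(t, omega) along one fixed sample path."""
    x = np.empty_like(t_grid)
    x[0] = x0
    for k in range(1, len(t_grid)):
        dt = t_grid[k] - t_grid[k - 1]
        x[k] = x[k - 1] + dt * (-x[k - 1] + eta[k - 1])
    return x


t = np.linspace(0.0, 10.0, 1001)
eta_1 = noise_path(seed=1, t_grid=t)
x_1 = solve_pathwise(eta_1, t, x0=1.0)
x_1_again = solve_pathwise(noise_path(seed=1, t_grid=t), t, x0=1.0)  # same omega, same ODE
x_2 = solve_pathwise(noise_path(seed=2, t_grid=t), t, x0=1.0)        # different omega
```

For a fixed seed the computation is fully deterministic. The stimulus stays in [−1, 1], so each path is continuous and uniformly bounded.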

In this paper we investigate how the pullback attractor and the forward omega limit set depend on changes in the input signal \(\eta \) for an appropriate class of input signals. The analysis is pathwise, so it applies to both deterministic and noisy input signals. In particular, we show that both sets depend upper semi-continuously on the input signals. The asymptotic behaviour of non-autonomous dynamical systems is briefly recalled in Sect. 2. The basic qualitative theoretical results of [16] for the coupled system (1)–(2), together with the underlying assumptions, are given in Sect. 3. The main contributions of the paper begin in Sect. 4, where we establish the continuity in the input of the vector field a, considered as the solution of the ODE (2), along with the corresponding continuity of the solution x of the ODE (1). In Sect. 5 we show that the pullback attractor and the omega limit set of the ODE (1) are upper semi-continuous in the input signal \(\eta \). These results are presented in the deterministic setting. Their interpretation in the noisy setting is indicated in Sect. 6, and their practical implications are discussed in Sect. 7.

2 Asymptotic behaviour of non-autonomous dynamical systems

Non-autonomous dynamical systems have always been of interest in physics, if not explicitly as such, for example as biological oscillations, see the collection of papers in Stefanovska & McClintock [22]. Some basic results on non-autonomous dynamical systems and their attractors are summarised here. Further details can be found in the monographs Kloeden & Rasmussen [23] and Kloeden & Yang [24].

The solution mapping of a non-autonomous ODE such as (1) generates a non-autonomous dynamical system on the state space \({\mathbb {R}}^d\). This system is expressed in terms of a 2-parameter semi-group, often called a process, which is defined as follows.

Definition 1

A process is a mapping \(\phi :{\mathbb {R}}_{\ge }^2\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\), where \({\mathbb {R}}_{\ge }^2 := \{(t,t_0)\in {\mathbb {R}}^2: t\ge t_0\}\), with the following properties:

(i) initial condition: \(\phi (t_0,t_0,x_0)=x_0\) for all \(x_0\in {\mathbb {R}}^d\) and \(t_0\in {\mathbb {R}}\);

(ii) 2-parameter semi-group property: \(\phi (t_2,t_0,x_0)=\phi (t_2,t_1,\phi (t_1,t_0,x_0))\) for all \(t_0\le t_1 \le t_2\) in \({\mathbb {R}}\) and \(x_0\in {\mathbb {R}}^d\);

(iii) continuity: the mapping \((t,t_0,x_0)\mapsto \phi (t,t_0,x_0)\) is continuous.

2.1 Pullback attractors

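The appropriate concept of a non-autonomous attractor involves a family \({\mathfrak {A}} = \{ A(t): t \in {\mathbb {R}}\}\) of non-empty compact subsets A(t) of \({\mathbb {R}}^d\). This family is invariant in the sense that \(A(t) = \phi (t,t_0,A(t_0))\) for all \(t \ge t_0\). Such an invariant family \({\mathfrak {A}}\) is called a pullback attractor if it is attracting in the pullback sense, i.e.

\[ \lim _{t_0\rightarrow -\infty } \mathrm{dist}_{{\mathbb {R}}^d}\left( \phi (t,t_0,D),A(t)\right) = 0 \quad \text{for all } t\in {\mathbb {R}} \text{ and all bounded sets } D\subset {\mathbb {R}}^d, \]

where \(\mathrm{dist}_{{\mathbb {R}}^d}(X,Y) := \sup _{x\in X}\inf _{y\in Y}\Vert x-y\Vert \) denotes the Hausdorff semi-distance between subsets of \({\mathbb {R}}^d\).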

Figure 1 illustrates pullback convergence to the pullback attractor \({\mathfrak {A}}\) (solid line, blue online). The attractor can be regarded as a geometric object in “phase space–time”, i.e. in (x, t) space, whose cross-section A(t) at time t is a set of points in x space (in Fig. 1, a single point). The definition of pullback convergence involves two times. The first is the fixed “current” time t, at which the cross-section A(t) is considered. The second is the start time \(t_0<t\) in the past, at which the phase trajectories are launched; \(t_0\) is allowed to vary and ultimately tends to \(-\infty \). Pullback convergence to A(t) at a fixed time t means that the phase trajectories x(t) (dashed lines), started from some initial conditions at a past time \(t_0\), approach A(t) at time t. The distance between x(t) and A(t) shrinks to zero as \(t_0 \rightarrow -\infty \); in this sense A(t) is a “fixed target”.
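Pullback convergence can be checked on a scalar equation with a known answer. The sketch below assumes the illustrative ODE dx/dt = −x + sin t, which is not part of the model. Its solutions are φ(t, t0, x0) = x*(t) + (x0 − x*(t0)) e^{−(t − t0)}, with x*(t) = (sin t − cos t)/2, and its pullback attractor is A(t) = {x*(t)}. Keeping the current time t fixed and pushing t0 towards −∞ shrinks the distance to A(t) like e^{−(t − t0)}.

```python
import math


def x_star(t):
    """Pullback attractor A(t) = {x_star(t)} of the illustrative ODE dx/dt = -x + sin t."""
    return 0.5 * (math.sin(t) - math.cos(t))


def phi(t, t0, x0):
    """Exact process phi(t, t0, x0) generated by dx/dt = -x + sin t."""
    return x_star(t) + (x0 - x_star(t0)) * math.exp(-(t - t0))


t = 0.0                                   # fixed "current" time
start_times = (-1.0, -5.0, -10.0, -20.0)  # start times pushed into the past
distances = [abs(phi(t, t0, 3.0) - x_star(t)) for t0 in start_times]
for t0, d in zip(start_times, distances):
    print(f"t0 = {t0:6.1f}: |phi(0, t0, 3) - A(0)| = {d:.2e}")
```

The function `phi` also satisfies the 2-parameter semi-group property (ii) of Definition 1, which is a useful consistency check on any such closed-form process.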

The existence and uniqueness of a global pullback attractor for a non-autonomous dynamical system on \({\mathbb {R}}^d\) are implied by the existence of a positive invariant pullback absorbing set, which often has a geometrically simpler shape, such as a ball.

Definition 2

A non-empty compact set B of \({\mathbb {R}}^d\) is called a pullback absorbing set for a process \(\phi \) if for each \(t \in {\mathbb {R}}\) and every bounded set D there exists a \(T_{t,D} \in {\mathbb {R}}^+\) such that

\[ \phi (t,t_0,D)\subseteq B \quad \text{for all } t_0\le t-T_{t,D}. \]

Such a set B is said to be \(\phi \)-positive invariant if

\[ \phi (t,t_0,B)\subseteq B \quad \text{for all } t\ge t_0. \]

Fig. 1 Illustration of pullback convergence, where the “current” time t is fixed and the start time \(t_0 \rightarrow - \infty \). Different phase trajectories x(t) (dashed lines), which start at different times \(t_0\) in the past (here from the same initial state), approach the pullback attractor (solid line, blue online) at the “current” time t. The further \(t_0\) is from t, the closer x(t) is to the attractor.

Theorem 1

[23, Theorem 3.18] Suppose that a non-autonomous dynamical system \(\phi \) on \({\mathbb {R}}^d\) has a positive invariant pullback absorbing set B. Then it has a unique pullback attractor \({\mathfrak {A}} = \{ A(t): t \in {\mathbb {R}} \}\) with component sets defined by

\[ A(t)=\bigcap _{s\ge 0}\phi (t,t-s,B), \quad t\in {\mathbb {R}}. \]

In the physics literature [26] there exists a concept of a snapshot attractor, which appears to be related to the pullback attractor, but is not as rigorously defined.

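If the invariant family \({\mathfrak {A}}\) is attracting in the forward sense, i.e.

\[ \lim _{t\rightarrow \infty } \mathrm{dist}_{{\mathbb {R}}^d}\left( \phi (t,t_0,D),A(t)\right) = 0 \quad \text{for all } t_0\in {\mathbb {R}} \text{ and all bounded sets } D\subset {\mathbb {R}}^d, \]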

it is called a forward attractor. Figure 2 illustrates forward convergence to the forward attractor \({\mathfrak {A}}\) (solid line, blue online). As before, the attractor can be viewed as an object in “phase space–time”, i.e. in (x, t) space, with cross-sections A(t) in x space at time t (in Fig. 2, a single point). As with pullback convergence, the definition involves two times, but their roles are different. The start time \(t_0\), at which the initial conditions are specified, is fixed, whereas the time \(t \ge t_0\), at which solutions are considered, grows without bound, \(t \rightarrow \infty \). Forward convergence to A(t) means that the phase trajectories x(t) (dashed lines), started from some initial conditions at the fixed time \(t_0\), approach A(t) as \(t \rightarrow \infty \). Here A(t) is essentially a “moving target”.

Fig. 2 Illustration of forward convergence, where the initial time \(t_0\) is fixed and the “current” time \(t \rightarrow \infty \). Different phase trajectories x(t) (dashed lines), which start at time \(t_0\) from different initial states, approach the forward limit set (solid line, blue online) as t increases. The further t is from \(t_0\), the closer x(t) is to the attractor.

These two types of convergence, forward and pullback, coincide in the autonomous case, but otherwise they are usually independent of each other. A pullback attractor is sometimes represented as a “non-autonomous set” [23], i.e. a space-time tube \(\bigcup _{t\in {\mathbb {R}} } A(t) \times \{t\}\). In brief, the pullback attractor depends only on the past vector field of the system. In particular, it does not depend on future values of the input signal, which are unknown at the moment of observation. By contrast, a forward attractor depends on the future vector field, so it cannot be determined at the present moment if the future signal is unknown. To detect the forward attractor, we would have to wait until the input signal, and generally the whole vector field, has been available for a sufficiently long time after the present moment. Therefore, when only the past and present vector field are known, the best way to characterise the system’s asymptotic behaviour is with a pullback attractor.
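For contrast with the pullback limit, forward convergence can be observed by fixing t0 and letting t grow. The sketch uses the illustrative ODE dx/dt = −x + sin t as an assumption, chosen because its solutions are explicit. Solutions started at t0 from several initial states are compared. Their spread decays like e^{−(t − t0)}, so they all converge to the moving target x*(t) = (sin t − cos t)/2. In this simple linear example the forward and pullback attractors coincide; Example 1 shows that this need not be the case.

```python
import math


def phi(t, t0, x0):
    """Exact process of the illustrative ODE dx/dt = -x + sin t."""
    def x_star(s):
        return 0.5 * (math.sin(s) - math.cos(s))
    return x_star(t) + (x0 - x_star(t0)) * math.exp(-(t - t0))


def spread(t, t0=0.0, initial_states=(-2.0, 0.0, 2.5)):
    """Diameter of the set of solutions started at the fixed time t0."""
    values = [phi(t, t0, x0) for x0 in initial_states]
    return max(values) - min(values)


for t in (1.0, 5.0, 10.0, 20.0):  # the "current" time t moves forward
    print(f"t = {t:5.1f}: spread = {spread(t):.2e}")
```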

2.2 Forward limit sets

Forward attractors may seem more natural in applications, but they often do not exist and, when they do exist, they might not be unique, see Kloeden and Yang [24]. Moreover, both forward and pullback attractors assume that the system exists for all time, in particular for all past time. This is obviously not true or necessary in many biological systems, though an artificial “past” can sometimes be usefully invented, e.g. as a fixed autonomous system.

An alternative characterisation of asymptotic behaviour is given by omega limit sets, which do not require the dynamical system to be defined in the distant past. The above definition of a non-autonomous dynamical system can easily be modified to hold only for \((t,t_0) \in {\mathbb {R}}_{\ge }^2(T^*) := \left\{ (t,t_0)\in {\mathbb {R}}\times {\mathbb {R}}: t\ge t_0 \ge T^* \right\} \) for some \(T^* > -\infty \).

When the system has a non-empty positive invariant compact absorbing set B, as in the situation here, the forward omega limit set

\[ \varOmega (t_0)=\bigcap _{\tau \ge t_0}\overline{\bigcup _{t\ge \tau }\phi (t,t_0,B)} \]

exists for each \(t_0 \ge T^*\), where the upper bar denotes the closure of the set under it. The set \(\varOmega (t_0)\) is a non-empty compact subset of the absorbing set B for each \(t_0 \ge T^*\).

Moreover, these sets are increasing in \(t_0\), i.e. \(\varOmega (t_0) \subset \varOmega (t'_0)\) for \(t_0 \le t'_0\), and the closure of their union

\[ \varOmega _B=\overline{\bigcup _{t_0\ge T^*}\varOmega (t_0)} \]

is a compact subset of B, which attracts all of the dynamics of the system in the forward sense, i.e.

\[ \lim _{t\rightarrow \infty }\mathrm{dist}_{{\mathbb {R}}^d}\left( \phi (t,t_0,D),\varOmega _B\right) =0 \]

for all bounded subsets D of \({\mathbb {R}}^d\) and all \(t_0 \ge T^*\). The omega limit set \(\varOmega _B\) is often called the forward attracting set; it need not be invariant, see Kloeden and Yang [24].

To illustrate the difference between a pullback attractor and a forward attracting set, consider Example 1 below, adapted from a discrete-time example in Kloeden and Yang [24, Chapter 10].

Example 1

Consider a non-autonomous ODE

Here, the term \(\lambda (t) := 1+ \frac{0.9t}{1+\left| t\right| }\), which can be regarded as an input signal, is a monotonically increasing function of time t such that \(\lambda (t) < 1\) for \(t < 0\) and \(\lambda (t) > 1\) for \(t > 0\). Moreover, \( \lambda (t) \rightarrow 1.9 =: {\bar{\lambda }}\) as \(t \rightarrow + \infty \). Note that \(\lambda (t)\) never reaches the limit \({\bar{\lambda }}\).
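The stated properties of λ(t) can be checked directly. The sketch below codes only the input signal of Example 1; the name `lam` and the test grid are illustrative choices. It confirms monotonicity on a grid and records the endpoints ±(λ̄ − 1) of the forward attracting set discussed for this example. Its derivative, 0.9/(1 + |t|)², is strictly positive, which explains the monotonicity.

```python
def lam(t):
    """Input signal lambda(t) = 1 + 0.9 t / (1 + |t|) of Example 1."""
    return 1.0 + 0.9 * t / (1.0 + abs(t))


LAMBDA_BAR = 1.9  # limit of lambda(t) as t -> +infinity, never attained

# lambda is strictly increasing on a sample grid covering [-50, 50].
grid = [-50.0 + 0.5 * k for k in range(201)]
is_increasing = all(lam(s) < lam(s_next) for s, s_next in zip(grid, grid[1:]))

# Endpoints of the interval [1 - lambda_bar, lambda_bar - 1].
omega_interval = (1.0 - LAMBDA_BAR, LAMBDA_BAR - 1.0)
```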

To understand the origin of non-autonomous attractors or limit sets, and the behaviour of non-autonomous dynamical systems in general, it can be helpful to relate them to snapshots of the vector field at different moments of time [15]. The vector field f(x, t) of (3) is shown in Fig. 3a for several representative values of t, namely \(t=-2\) (red online), \(t=0\) (black), \(t=2\) (green online) and \(t=50\) (blue online). For \(t \le 0\), f(x, t) has only one zero and an everywhere negative slope, whereas for \(t > 0\), f(x, t) has three zeros and both positive and negative slopes. From Fig. 3a one can predict that, while t is non-positive, all phase trajectories starting at \(t_0<t\) approach \(x=0\), although they do not reach it exactly. In the limit \(t_0 \rightarrow -\infty \), however, all phase trajectories converge to \(x=0\), so \(x=0\) is a pullback attractor for all \(t \le 0\). Moreover, since \(x=0\) is a stationary solution of (3), a solution that is at \(x=0\) at some \(t<0\) remains there for all later times, including positive t. Therefore, \(x=0\) is a pullback attractor of system (3) for all values of t.

However, \(x=0\) is neither a forward attractor, nor a forward limit set of (3), as illustrated in Fig. 3b where solid lines of various colours show several typical solutions starting from various initial conditions at finite start times \(t_0\).

Fig. 3 Illustration of the difference between a pullback attractor and a forward limit set in system (3). a Vector field f(x, t) at four different times t, namely \(t=-2\) (red online), \(t=0\) (black), \(t=2\) (green online) and \(t=50\) (blue online). Filled circles are zeros of f at the respective times. b The pullback attractor is \(x=0\) for all values of t and hence coincides with the t-axis, as explained in the text. Solid lines of various colours represent typical solutions starting from various initial conditions at various finite times \(t_0\). The forward attracting set \(\varOmega _B\) is the interval \([1-{\bar{\lambda }},{\bar{\lambda }}-1]=[-0.9,0.9]\).

Note that for any finite \(t_0\), the phase trajectories converge either to \(x=1-{\bar{\lambda }}=-0.9\) from \(x(t_0) <0\), or to \(x={\bar{\lambda }}-1=0.9\) from \(x(t_0) >0\). These are the zeros of the asymptotic vector field \(\lim _{t\rightarrow \infty }f(x,t)\) for which \(\frac{\partial f(x,t)}{\partial x}<0 \). For \(x>0\) this zero is \(x={\bar{\lambda }}-1=0.9\).

By a similar argument, for \(x<0\) we obtain \(x=1-{\bar{\lambda }}=-0.9\). The solutions starting at \(t_0\) from positive (negative) initial conditions remain positive (negative) for all \(t>t_0\), since they cannot cross the steady state solution \(x=0\) of (3).

Here, the forward attracting set \(\varOmega _B\) is the interval \([1-{\bar{\lambda }},{\bar{\lambda }}-1]\), and the interval \(B = [-2,2]\) is a positive invariant absorbing set. In this example there is no forward attractor of the kind illustrated in Fig. 2.

2.2.1 Asymptotic invariance of forward attracting sets

The forward attracting set \(\varOmega _B\) is generally not invariant. In Fig. 3 it is positive invariant, but this need not hold in other examples. Often \(\varOmega _B\) satisfies one of the following weaker asymptotic forms of invariance under appropriate conditions.

Definition 3

A set A is said to be asymptotically positive invariant for a process \(\phi \) on \({\mathbb {R}}^d\) if for every \(\varepsilon > 0\) there exists a \(T(\varepsilon )\) such that

\[ \phi (t,t_0,A)\subset B_{\varepsilon }\left( A\right) \quad \text{for all } t\ge t_0 \]

for each \(t_0 \ge T(\varepsilon )\), where \(B_{\varepsilon }\left( A\right) := \{ x \in {\mathbb {R}}^d : \mathrm{dist}_{{\mathbb {R}}^d}\left( x,A\right) < \varepsilon \}\).

Definition 4

A set A is said to be asymptotically negative invariant for a process \(\phi \) if for every \(a \in A\), \(\varepsilon > 0\) and \(T > 0\), there exist \(t_\varepsilon \) and \(a_\varepsilon \in A\) such that

\[ \Vert \phi (t_\varepsilon +T,t_\varepsilon ,a_\varepsilon )-a\Vert <\varepsilon . \]

We know from Kloeden and Yang [24, Chapter 12] that \(\varOmega _B\) is both asymptotically positive and negative invariant if the following assumption holds.

Assumption 1

There exists a \(T^* \ge 0\) such that the process \(\phi \) on \({\mathbb {R}}^d\) is Lipschitz continuous in initial conditions in the absorbing set B on finite time intervals \([t_0,t_0+T]\), uniformly in \(t_0 \ge T^*\). That is, there exists a constant \(L_B > 0\) independent of \(t_0 \ge T^*\) such that

\[ \Vert \phi (t,\tau ,x_0)-\phi (t,\tau ,y_0)\Vert \le L_B\Vert x_0-y_0\Vert \]

for all \(x_0\), \(y_0\) \(\in \) B and \(T^*\) \(\le \) \(t_0\) \(\le \) \(\tau \) < t \(\le \) \(\tau +T\).

This assumption essentially requires that the future time variation of the system is not too irregular. See the Appendix for conditions ensuring the validity of this assumption for the system (1)–(2).

3 Qualitative properties of dynamical systems with self-organised vector fields

The following results were established in Janson and Kloeden [16] under the stated assumptions.

3.1 Existence and uniqueness of solutions

Here we regard the stimulus \(\eta (t)\) as a given input in the model. In [16] it was assumed to be continuous. Here we strengthen this by also assuming that these inputs are uniformly bounded, which is not unreasonable biologically. Later we will assume that all of the input signals take values in a common bounded subset \(\varSigma \).

Assumption 2

\(\eta : {\mathbb {R}} \rightarrow {\mathbb {R}}^m\) is continuous and uniformly bounded, i.e. takes values in a bounded subset \(\varSigma \) of \({\mathbb {R}}^m\).

3.1.1 Existence and uniqueness of the observable behaviour x(t) of (1)

We start by requiring continuity of both a and its gradient \(\nabla _x a(x,t)\) with respect to the state vector.

Assumption 3

a : \({\mathbb {R}}^d\times {\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^d\) and \(\nabla _x a\) : \({\mathbb {R}}^d\times {\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^{d\times d}\) are continuous in both variables (x, t) \(\in \) \({\mathbb {R}}^d\times {\mathbb {R}}\).

Assumption 4

a : \({\mathbb {R}}^d\times {\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^d\) satisfies the dissipativity condition \(\left<a(x,t),x\right>\) \(\le \) \(-1\) for \(\Vert x\Vert \) \(\ge \) \(R^*\) for some \(R^*\).

We have the following theorem from [16].

Theorem 2

Suppose that Assumptions 2, 3 and 4 hold. Then for every initial condition \(x(t_0)\) \(=\) \(x_0\), the ODE (1) has a unique solution x(t) \(=\) \(x(t;t_0,x_0)\), which exists for all t \(\ge \) \(t_0\). Moreover, these solutions are continuous in the initial conditions, i.e. the mapping \((t_0,x_0)\) \(\mapsto \) \(x(t;t_0,x_0)\) is continuous.

3.1.2 Existence and uniqueness of the vector field a(x, t) as a solution of (2)

The ODE (2) for the velocity field a(x, t) is independent of the solution \(x(t;t_0,x_0)\) of the ODE (1). We need the following assumptions to ensure the existence and uniqueness of a(x, t) for all future times \(t > t_0\), and to ensure that this solution satisfies Assumptions 3 and 4.

Assumption 5

c : \({\mathbb {R}}^d\times {\mathbb {R}}^d \times {\mathbb {R}}^m\times {\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^d\) and \(\nabla _a c \) : \({\mathbb {R}}^d \times {\mathbb {R}}^d \times {\mathbb {R}}^m\times {\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^{d\times d}\) are continuous in all variables.

Assumption 6

\(\nabla _a c \), \(\nabla _x c : {\mathbb {R}}^d \times {\mathbb {R}}^d \times {\mathbb {R}}^m\times {\mathbb {R}} \rightarrow {\mathbb {R}}^{d\times d}\) are continuous in all variables.

Assumption 7

There exist constants \(\alpha \) and \(\beta \) (which need not be positive) such that \(\left<a, c(a,x,y,t)\right>\) \(\le \) \(\alpha \Vert a\Vert ^2 +\beta \) for all (x, y, t) \(\in \) \({\mathbb {R}}^d \times {\mathbb {R}}^m\times {\mathbb {R}}\).

Assumption 8

There exists \(R^*\) such that

Summarising the above, we have the following theorem from [16].

Theorem 3

Suppose that Assumptions 2, 5, 6, 7 and 8 hold. Further, suppose that \(a_0(x)\) is continuously differentiable and satisfies the dissipativity condition in Assumption 4. Then the non-autonomous ODE (2) has a unique solution a(x, t) for the initial condition \(a(x,t_0)\) \(=\) \(a_0(x)\), which exists for all t \(\ge \) \(t_0\) and satisfies Assumptions 3 and 4.

This theorem gives the existence, uniqueness, continuity and dissipativity of the velocity vector field a governing the behaviour of (1).

3.2 Asymptotic behaviour of the ODE (1)

The proof of the next theorem, taken from [16], is based on results in Kloeden and Rasmussen [23] and Kloeden and Yang [24].

Theorem 4

Suppose that Assumptions 2, 3 and 4 hold. Then the non-autonomous dynamical system generated by the ODE (1) describing the observable behaviour has a global pullback attractor \({\mathfrak {A}}\) \(=\) \(\{ A(t): t \in {\mathbb {R}}\}\), which is contained in the absorbing set B.

Theorem 4 specifies the conditions under which the global pullback attractor exists in the non-autonomous dynamical system generated by the ODE (1) with a plastic velocity vector field evolving according to (2). As shown in [16] these follow from Assumptions 5, 6, 7 and 8 via Theorem 3.

Theorem 5

Suppose that Assumptions 2, 3 and 4 hold. Then the non-autonomous dynamical system generated by the ODE (1) describing the observable behaviour of the system has a forward attracting set \(\varOmega _B\), which is contained in the absorbing set B.

Theorem 5 expresses the conditions under which a forward attracting set exists in the non-autonomous dynamical system generated by the ODE (1) with a plastic velocity vector field evolving according to (2).

The pullback attractor is based on information from the system’s behaviour in the past, which is all that we know at the present time. In contrast, the forward attracting set tells us where the future dynamics ends up. However, in the model (1)–(2) the future sensory signal \(\eta (t)\), and hence the future vector field a(x, t), are not yet known, so there is no way for us to determine the forward attracting set at the moment of observation. Nevertheless, the pullback attractor provides partial information about what may happen in the future. See Kloeden and Yang [24] for a more detailed discussion.

3.3 Grönwall’s inequality

The Grönwall inequality, which is a very basic tool in the theory of differential equations, was used to establish the above results. In the sequel we will use the following version of it.

Lemma 1

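Let \(\alpha \), \(\beta \) and u be continuous real-valued functions defined on an interval \([t_0,T]\), with \(\beta \ge 0\), such that

\[ u(t)\le \alpha (t)+\int _{t_0}^{t}\beta (s)u(s)\,ds, \quad t\in [t_0,T]. \]

Suppose that \(\alpha (t)\) is non-decreasing. Then

\[ u(t)\le \alpha (t)\exp \left( \int _{t_0}^{t}\beta (s)\,ds\right) , \quad t\in [t_0,T]. \]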
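A quick numerical sanity check of Lemma 1 can be made in the extremal case, where the hypothesis holds with equality. The choice α(t) = 1 + t (non-decreasing), β ≡ 2 and t0 = 0 is purely illustrative. Differentiating the integral equation gives u′ = 1 + 2u with u(0) = 1, so u(t) = (3e^{2t} − 1)/2, while Lemma 1 gives the bound (1 + t)e^{2t}. The gap between them is (t − 1/2)e^{2t} + 1/2, which vanishes at t = 0 and increases afterwards.

```python
import math


def alpha(t):
    return 1.0 + t  # non-decreasing, as Lemma 1 requires


BETA = 2.0  # constant, non-negative beta


def u_extremal(t):
    """Solution of u(t) = alpha(t) + int_0^t BETA * u(s) ds, i.e. u' = 1 + 2u, u(0) = 1."""
    return 1.5 * math.exp(2.0 * t) - 0.5


def gronwall_bound(t):
    """Right-hand side of Lemma 1: alpha(t) * exp(int_0^t BETA ds)."""
    return alpha(t) * math.exp(BETA * t)


ts = [0.05 * k for k in range(61)]  # t in [0, 3]
gaps = [gronwall_bound(t) - u_extremal(t) for t in ts]
```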

4 Admissible input signals depending on a parameter

We now consider an admissible class of input signals \(\eta \) that satisfy the continuity Assumption 2 and take values in a common bounded subset \(\varSigma \) of \({\mathbb {R}}^m\).

Assumption 9

\(\eta \) : \({\mathbb {R}}\) \(\rightarrow \) \({\mathbb {R}}^m\) is continuous and uniformly bounded, i.e. takes values in a bounded subset \(\varSigma \) of \({\mathbb {R}}^m\).

We suppose that the stimulus \(\eta \) depends on a parameter \(\nu \in {\mathbb {R}}\), and we now write it as \(\eta ^{(\nu )}\). This parameter may have biological significance, or it may just be a convenient label to distinguish between different inputs. In particular, we assume that \(\eta ^{(\nu )}\) depends continuously on the parameter \(\nu \) in the \(L^1\) norm, uniformly on bounded time intervals.

Assumption 10

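Suppose that \(\nu \rightarrow \nu _0\) and that for every \(\varepsilon > 0\) and \(T > 0\) there exists \(\delta = \delta (T,\varepsilon ) > 0\) such that

\[ \int _{t_0}^{t_0+T}\Vert \eta ^{(\nu )}(t)-\eta ^{(\nu _0)}(t)\Vert \,dt<\varepsilon \quad \text{for all } t_0\in {\mathbb {R}} \text{ whenever } |\nu -\nu _0|<\delta . \]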
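Assumption 10 concerns the windowed L¹ distance ∫_{t0}^{t0+T}‖η^{(ν)}(t) − η^{(ν0)}(t)‖dt. The sketch below approximates this distance with the trapezoidal rule for scalar inputs (m = 1). It uses the hypothetical admissible family η^{(ν)}(t) = sin t + ν, chosen for illustration. For this family the distance equals |ν − ν0|T on every window, so δ(T, ε) = ε/T works uniformly in t0.

```python
import numpy as np


def l1_window_distance(eta_a, eta_b, t0, T, n=2001):
    """Trapezoidal approximation of int_{t0}^{t0+T} |eta_a(t) - eta_b(t)| dt (m = 1)."""
    t = np.linspace(t0, t0 + T, n)
    g = np.abs(eta_a(t) - eta_b(t))
    return float(np.sum(0.5 * (g[1:] + g[:-1]) * np.diff(t)))


def eta_shift(nu):
    """Hypothetical admissible family eta^(nu)(t) = sin t + nu."""
    return lambda t: np.sin(t) + nu


T, nu0, nu = 5.0, 0.0, 0.01
window_distances = [l1_window_distance(eta_shift(nu), eta_shift(nu0), t0, T)
                    for t0 in (0.0, 100.0, 1.0e4)]
```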

To simplify the exposition, we now assume that the vector field c does not depend explicitly on time and replace Assumptions 5 and 6 by

Assumption 11

c : \({\mathbb {R}}^d \times {\mathbb {R}}^d \times {\mathbb {R}}^m\) \(\rightarrow \) \({\mathbb {R}}^d\) is continuously differentiable in all variables.

This implies that the vector field c is Lipschitz continuous on bounded subsets of \({\mathbb {R}}^d \times {\mathbb {R}}^d \times {\mathbb {R}}^m\).

4.1 Continuity of the vector field a in the parameter \(\nu \)

This is a standard result in ODE theory, but we give the details here because we need the estimates later. Let \(a^{(\nu )}(x,t)\) and \(a^{(\nu _0)}(x,t)\) be the unique solutions of the ODE (2) for the input signals \( \eta ^{(\nu )}\) and \(\eta ^{(\nu _0)}\), respectively, with the same initial condition \(a^{(\nu )}(x,t_0) = a^{(\nu _0)}(x,t_0) = a_0(x)\). Thus

\[ a^{(\nu )}(x,t)=a_0(x)+\int _{t_0}^{t}c\left( a^{(\nu )}(x,s),x,\eta ^{(\nu )}(s)\right) ds, \qquad a^{(\nu _0)}(x,t)=a_0(x)+\int _{t_0}^{t}c\left( a^{(\nu _0)}(x,s),x,\eta ^{(\nu _0)}(s)\right) ds. \]

Lemma 2

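Suppose that Assumptions 3, 4, 8, 9, 10 and 11 hold. Then the inequality

\[ \sup _{t_0\le t\le t_0+T}\Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert \le L_c\,e^{L_cT}\int _{t_0}^{t_0+T}\Vert \eta ^{(\nu )}(s)-\eta ^{(\nu _0)}(s)\Vert \,ds, \]

where \(L_c\) is a Lipschitz constant of c in its first and third variables (see Remark 1), holds uniformly in x.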

Proof

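Subtracting the above integral forms of the ODEs for \(a^{(\nu )}(x,t)\) and \(a^{(\nu _0)}(x,t)\) gives

\[ a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)=\int _{t_0}^{t}\left[ c\left( a^{(\nu )}(x,s),x,\eta ^{(\nu )}(s)\right) -c\left( a^{(\nu _0)}(x,s),x,\eta ^{(\nu _0)}(s)\right) \right] ds. \]

Taking the norm and using the Lipschitz property of the vector field c in its first and third variables, with Lipschitz constant \(L_c\), gives

\[ \Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert \le L_c\int _{t_0}^{t}\Vert a^{(\nu )}(x,s)-a^{(\nu _0)}(x,s)\Vert \,ds+L_c\int _{t_0}^{t}\Vert \eta ^{(\nu )}(s)-\eta ^{(\nu _0)}(s)\Vert \,ds. \]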


Remark 1

Note that we have used a global Lipschitz condition here to simplify the exposition although Assumptions 8 and 11 provide only a local one. This is not a problem for the input variable since the admissible inputs take values in a common bounded set. For the other variables an appropriate local Lipschitz condition can be used, since the solutions remain bounded on a finite time interval.

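Hence, by the Grönwall inequality (Lemma 1) with

\[ u(t)=\Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert ,\qquad \alpha (t)=L_c\int _{t_0}^{t}\Vert \eta ^{(\nu )}(s)-\eta ^{(\nu _0)}(s)\Vert \,ds,\qquad \beta (t)\equiv L_c, \]

where \(L_c\) is the Lipschitz constant of c and \(\alpha \) is non-decreasing, we obtain

\[ \Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert \le L_c\,e^{L_c(t-t_0)}\int _{t_0}^{t}\Vert \eta ^{(\nu )}(s)-\eta ^{(\nu _0)}(s)\Vert \,ds. \]

This gives

\[ \sup _{t_0\le t\le t_0+T}\Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert \le L_c\,e^{L_cT}\int _{t_0}^{t_0+T}\Vert \eta ^{(\nu )}(s)-\eta ^{(\nu _0)}(s)\Vert \,ds, \]

and hence, by Assumption 10,

\[ \sup _{t_0\le t\le t_0+T}\Vert a^{(\nu )}(x,t)-a^{(\nu _0)}(x,t)\Vert < L_c\,e^{L_cT}\varepsilon \quad \text{whenever } |\nu -\nu _0|<\delta (T,\varepsilon ). \]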

\(\square \)

4.2 Continuity of the solution \(x^{(\nu )}\) of ODE (1) in the parameter \(\nu \)

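Let \(x^{(\nu )}(t,t_0,x_0)\) and \(x^{(\nu _0)}(t,t_0,x_0)\) be the unique solutions of the ODE (1) for the above vector fields \(a^{(\nu )}(x,t)\) and \(a^{(\nu _0)}(x,t)\), respectively, with the same initial condition \(x^{(\nu )}(t_0,t_0,x_0) = x^{(\nu _0)}(t_0,t_0,x_0) = x_0 \). Thus, in integral form,

\[ x^{(\nu )}(t,t_0,x_0)=x_0+\int _{t_0}^{t}a^{(\nu )}\left( x^{(\nu )}(s,t_0,x_0),s\right) ds, \qquad x^{(\nu _0)}(t,t_0,x_0)=x_0+\int _{t_0}^{t}a^{(\nu _0)}\left( x^{(\nu _0)}(s,t_0,x_0),s\right) ds. \]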

Lemma 3

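Suppose that Assumptions 2, 3, 4, 9 and 10 hold. Then, for every \(T>0\),

\[ \lim _{\nu \rightarrow \nu _0}\ \sup _{t_0\le t\le t_0+T}\Vert x^{(\nu )}(t,t_0,x_0)-x^{(\nu _0)}(t,t_0,x_0)\Vert =0. \tag{7} \]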

Proof

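Subtracting the above integral forms of the ODEs (1) for \(x^{(\nu )}(t,t_0,x_0)\) and \(x^{(\nu _0)}(t,t_0,x_0) \) gives, with the abbreviations \(x^{(\nu )}(s)=x^{(\nu )}(s,t_0,x_0)\) and \(x^{(\nu _0)}(s)=x^{(\nu _0)}(s,t_0,x_0)\),

\[ x^{(\nu )}(t)-x^{(\nu _0)}(t)=\int _{t_0}^{t}\left[ a^{(\nu )}(x^{(\nu )}(s),s)-a^{(\nu _0)}(x^{(\nu )}(s),s)\right] ds+\int _{t_0}^{t}\left[ a^{(\nu _0)}(x^{(\nu )}(s),s)-a^{(\nu _0)}(x^{(\nu _0)}(s),s)\right] ds. \]

Taking the norm and using the Lipschitz property of the vector field \(a^{(\nu _0)}\) in its first variable, with Lipschitz constant \(L_a\), gives

\[ \Vert x^{(\nu )}(t)-x^{(\nu _0)}(t)\Vert \le \int _{t_0}^{t}\Vert a^{(\nu )}(x^{(\nu )}(s),s)-a^{(\nu _0)}(x^{(\nu )}(s),s)\Vert \,ds+L_a\int _{t_0}^{t}\Vert x^{(\nu )}(s)-x^{(\nu _0)}(s)\Vert \,ds. \]

Hence, by the Grönwall inequality with

\[ \alpha (t)=\int _{t_0}^{t}\Vert a^{(\nu )}(x^{(\nu )}(s),s)-a^{(\nu _0)}(x^{(\nu )}(s),s)\Vert \,ds \]

and

\[ \beta (t)\equiv L_a, \]

we have

\[ \Vert x^{(\nu )}(t)-x^{(\nu _0)}(t)\Vert \le e^{L_a(t-t_0)}\int _{t_0}^{t}\Vert a^{(\nu )}(x^{(\nu )}(s),s)-a^{(\nu _0)}(x^{(\nu )}(s),s)\Vert \,ds, \]

which, by Lemma 2, tends to zero as \(\nu \rightarrow \nu _0\), uniformly for \(t\in [t_0,t_0+T]\).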

5 Upper semi-continuity of the pullback attractor and omega limit set of the ODE (1) in the input signal \(\eta \)

Pullback attractors and omega limit sets depend on the behaviour of the dynamics over semi-infinite time intervals. This must be in some sense uniform to ensure that they do not change too much when a parameter is changed.

For example, the required distance \(\delta = \delta (T,\varepsilon ) > 0\) in Assumption 10 between the parameters \(\nu \) and \(\nu _0\) depends only on two quantities. These are the desired distance \(\varepsilon > 0\) between the input signals \( \eta ^{(\nu )}(t)\) and \( \eta ^{(\nu _0)}(t)\), and the length \(T > 0\) of the time interval over which the input signals are compared. It does not depend on the location of the interval itself.
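The uniformity in the location of the window is a real restriction. Consider the hypothetical family η^{(ν)}(t) = sin(νt). The inputs are close on [0, T] when ν is close to ν0 = 1, but the phase error (ν − ν0)t grows with t. On a window starting near t0 = π/|ν − ν0|, the two signals are almost in antiphase, so a δ that works for early windows fails for later ones, and Assumption 10 does not hold for this family. The sketch approximates the windowed L¹ distance with the trapezoidal rule for scalar inputs.

```python
import math

import numpy as np


def l1_window_distance(eta_a, eta_b, t0, T, n=2001):
    """Trapezoidal approximation of int_{t0}^{t0+T} |eta_a(t) - eta_b(t)| dt (m = 1)."""
    t = np.linspace(t0, t0 + T, n)
    g = np.abs(eta_a(t) - eta_b(t))
    return float(np.sum(0.5 * (g[1:] + g[:-1]) * np.diff(t)))


def eta_freq(nu):
    """Hypothetical family eta^(nu)(t) = sin(nu t): continuous and bounded, but not uniform in t0."""
    return lambda t: np.sin(nu * t)


T, nu0, nu = 5.0, 1.0, 1.001
d_early = l1_window_distance(eta_freq(nu), eta_freq(nu0), t0=0.0, T=T)
d_late = l1_window_distance(eta_freq(nu), eta_freq(nu0), t0=math.pi / (nu - nu0), T=T)
```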

5.1 Upper semi-continuity of the pullback attractors of the ODE (1) in the input signal \(\eta \)

Let \({\mathfrak {A}}^{(\nu )}\) \(=\) \(\{ A^{(\nu )}(t): t \in {\mathbb {R}} \}\) be the pullback attractor of the ODE (1) with the noise parameter \(\nu \) and let \({\mathfrak {A}}^{(\nu _0)}\) \(=\) \(\{ A^{(\nu _0)}(t): t \in {\mathbb {R}} \}\) be the pullback attractor of the ODE (1) with the noise parameter \(\nu _0\).

Theorem 6

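Suppose that Assumptions 3, 4, 9, 10 and 11 hold. Then

\[ \lim _{\nu \rightarrow \nu _0}\mathrm{dist}_{{\mathbb {R}}^d}\left( A^{(\nu )}(t),A^{(\nu _0)}(t)\right) =0 \quad \text{for every } t\in {\mathbb {R}}, \]

where \(\mathrm{dist}_{{\mathbb {R}}^d}\) denotes the Hausdorff semi-distance between subsets of \({\mathbb {R}}^d\).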
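Upper semi-continuity is stated in terms of the Hausdorff semi-distance dist(X, Y) = sup_{x∈X} inf_{y∈Y}‖x − y‖, which is not symmetric. The sketch below computes it for finite point sets (rows are points; the function names are illustrative). It shows why upper semi-continuity is weaker than continuity. A sequence of sets can approach A from "inside", with dist(A_n, A) → 0, while dist(A, A_n) stays large, so the perturbed attractors may be much smaller than the unperturbed one.

```python
import numpy as np


def _as_points(P):
    """Return P as an (n_points, dim) float array; a 1-D input is read as n scalar points."""
    P = np.asarray(P, dtype=float)
    return P.reshape(-1, 1) if P.ndim == 1 else P


def semi_distance(X, Y):
    """Hausdorff semi-distance dist(X, Y) = max_{x in X} min_{y in Y} ||x - y||."""
    X, Y = _as_points(X), _as_points(Y)
    pairwise = np.linalg.norm(X[:, None, :] - Y[None, :, :], axis=-1)
    return float(pairwise.min(axis=1).max())


A = [-1.0, 0.0, 1.0]  # "unperturbed" set
for n in (1, 10, 100):
    A_n = [1.0 / n]   # "perturbed" sets collapsing onto the point 0 of A
    print(n, semi_distance(A_n, A), semi_distance(A, A_n))
```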

Proof

We use a proof by contradiction. Suppose that the assertion does not hold at, say, \(t = 0\). Then there exist \(\varepsilon _0 > 0\) and a sequence \(\nu _n \rightarrow \nu _0\) as \(n \rightarrow \infty \) such that

\[ \mathrm{dist}_{{\mathbb {R}}^d}\left( A^{(\nu _n)}(0),A^{(\nu _0)}(0)\right) \ge \varepsilon _0 \quad \text{for all } n. \tag{8} \]

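Since \(A^{(\nu _n)}(0)\) is compact, there exists \(a^{(\nu _n)} \in A^{(\nu _n)}(0)\) such that

\[ \mathrm{dist}_{{\mathbb {R}}^d}\left( a^{(\nu _n)},A^{(\nu _0)}(0)\right) =\mathrm{dist}_{{\mathbb {R}}^d}\left( A^{(\nu _n)}(0),A^{(\nu _0)}(0)\right) \ge \varepsilon _0 . \]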

Let B be the common pullback absorbing ball for the ODE (1) with noise parameter \(\nu \); in particular, it is the same for all \(\nu \). Since \({\mathfrak {A}}^{(\nu _0)}\) pullback attracts B, for the above \(\varepsilon _0 > 0\) there is a \(T_0 = T(\varepsilon _0,\nu _0) > 0\) such that

\[ \mathrm{dist}_{{\mathbb {R}}^d}\left( x^{(\nu _0)}(0,-T_0,B),A^{(\nu _0)}(0)\right) <\frac{\varepsilon _0}{2}. \tag{9} \]

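Since \({\mathfrak {A}}^{(\nu _n)}\) is invariant under \(x^{(\nu _n)}\), there exists \(b^{(\nu _n)} \in A^{(\nu _n)}(-T_0) \subset B\) such that \(x^{(\nu _n)}(0,-T_0,b^{(\nu _n)}) = a^{(\nu _n)}\). Hence, by the error estimate (7),

\[ \Vert a^{(\nu _n)}-x^{(\nu _0)}(0,-T_0,b^{(\nu _n)})\Vert =\Vert x^{(\nu _n)}(0,-T_0,b^{(\nu _n)})-x^{(\nu _0)}(0,-T_0,b^{(\nu _n)})\Vert <\frac{\varepsilon _0}{2} \tag{10} \]

for all n sufficiently large.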

Finally, combining the estimates (9) and (10) we obtain

This contradicts the assumed estimate (8). This completes the proof. \(\square \)
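Upper semi-continuity of attractors is conventionally expressed through the Hausdorff semi-distance \(\operatorname{dist}(A,B) = \sup_{a\in A}\inf_{b\in B}|a-b|\), which is not symmetric. The Python sketch below uses hypothetical point clouds standing in for attractor component sets. It shows that an upper semi-continuity result rules out the perturbed set lying far from the unperturbed one. It still allows the perturbed set to be much smaller. The function name `semi_distance` and both sets are illustrative assumptions.

```python
import numpy as np

def semi_distance(A, B):
    """Hausdorff semi-distance dist(A, B) = max_{a in A} min_{b in B} |a - b| for finite point sets.

    A and B are arrays of shape (n_points, dim); the result is generally not symmetric.
    """
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    pairwise = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)  # shape (len(A), len(B))
    return float(pairwise.min(axis=1).max())

theta = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
A0 = np.column_stack([np.cos(theta), np.sin(theta)])            # hypothetical limiting set: unit circle
upper = theta[theta < np.pi]
A_nu = 1.01 * np.column_stack([np.cos(upper), np.sin(upper)])   # hypothetical perturbed set: upper half, radius 1.01

d_forward = semi_distance(A_nu, A0)   # about 0.01: every point of A_nu is close to A0
d_backward = semi_distance(A0, A_nu)  # about 1.42: the lower half of A0 is far from A_nu
print(d_forward, d_backward)
```

Only the direction measured by `d_forward`, from the perturbed set to the limiting one, enters an upper semi-continuity statement.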

5.2 Upper semi-continuous convergence of the omega limit sets in the input signal \(\eta \)

Here we need the systems to be defined only for \(t \ge T^*\) for some \(T^*\). Denote by \(\varOmega^{(\nu)}_B\) and \(\varOmega^{(\nu_0)}_B\) the omega limit sets of trajectories starting in the absorbing set \(B\). These are taken for the non-autonomous dynamical systems \(x^{(\nu)}(t,t_0,x_0)\) and \(x^{(\nu_0)}(t,t_0,x_0)\), generated by the ODE (1) with parameterised vector fields \(a^{(\nu)}(x,t)\) and \(a^{(\nu_0)}(x,t)\), respectively.

In addition to the earlier assumptions, we assume that \(\varOmega^{(\nu_0)}_B\) is uniformly attracting with respect to the initial time \(t_0\), i.e.

Assumption 12

\(\varOmega^{(\nu_0)}_B\) uniformly attracts the set \(B\), i.e. for every \(\varepsilon > 0\) there exists a \(T(\varepsilon)\), independent of \(t_0 \ge T^*\), such that

This holds, for example, if the vector field \(a^{(\nu _0)}(x,t)\) of the ODE (1) is periodic, almost periodic or recurrent in t in some sense.
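Consider the hypothetical scalar ODE \(\dot x = -x + \sin t\), a stand-in for (1) with a \(2\pi\)-periodic vector field. It gives a hedged illustration of why periodicity yields the uniform attraction of Assumption 12. Its periodic solution is \(x_p(t) = (\sin t - \cos t)/2\). Every solution satisfies \(x(t) - x_p(t) = e^{-(t-t_0)}(x_0 - x_p(t_0))\), so a single \(T(\varepsilon)\) works for all \(t_0\). The Python sketch checks this numerically for a hypothetical \(B = [-R, R]\). It uses the distance to \(x_p\), which bounds from above the distance to any set containing the periodic orbit.

```python
import math

def rk4(f, t0, x0, t1, n=2000):
    """Classical fourth-order Runge-Kutta approximation of x(t1) for x' = f(t, x), x(t0) = x0."""
    h = (t1 - t0) / n
    x = x0
    for k in range(n):
        t = t0 + k * h
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return x

def f(t, x):
    # Hypothetical 2*pi-periodic vector field standing in for a^(nu0)(x, t).
    return -x + math.sin(t)

def x_p(t):
    # Periodic solution of x' = -x + sin(t).
    return 0.5 * (math.sin(t) - math.cos(t))

R, eps = 5.0, 1e-3
# |x0 - x_p(t0)| <= R + 1/sqrt(2) < R + 1 on B = [-R, R]; the factor 2 is a safety margin.
T_eps = math.log(2.0 * (R + 1.0) / eps)

worst = 0.0
for t0 in [50.0 * j for j in range(11)]:   # initial times 0, 50, ..., 500
    for x0 in (-R, 0.0, R):                 # initial points in B
        x_end = rk4(f, t0, x0, t0 + T_eps)
        worst = max(worst, abs(x_end - x_p(t0 + T_eps)))
print(f"T(eps) = {T_eps:.3f}, worst distance after T(eps): {worst:.2e}")
```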

Theorem 7

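Suppose that Assumptions 1, 3, 4, 9, 10, 11 and 12 hold. Then \(\lim_{\nu \rightarrow \nu_0} \operatorname{dist}\big(\varOmega^{(\nu)}_B, \varOmega^{(\nu_0)}_B\big) = 0\).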

Proof

Suppose, for contradiction, that there exists a sequence \(\nu_n \rightarrow \nu_0\) as \(n \rightarrow \infty\) such that the above limit does not hold. Then there exists \(\varepsilon_0 > 0\) such that

Since \(\varOmega^{(\nu_n)}_B\) is compact, there exists \(w_n \in \varOmega^{(\nu_n)}_B\) such that

First, by Assumption 12 on uniform attraction, we can pick \(T_0 = T(\varepsilon_0/4)\) such that for any \(t_0 \ge T^*\)

Fix such a \(\nu_n\). We use the asymptotic negative invariance of \(\varOmega^{(\nu_n)}_B\) with the above \(w_n \in \varOmega^{(\nu_n)}_B \subset B\), \(T_0\) and \(\varepsilon_0\). It yields \(\hat{w}_{T_0,n} \in \varOmega^{(\nu_n)}_B \subset B\) and a \(t^n_{\varepsilon_0} \gg 0\) such that

Moreover,

by the assumption that \(\varOmega ^{(\nu _0)} _B\) uniformly attracts the set B.

By the inequality (7) on intervals of length \(T_0\), we have

when \(|\nu_n - \nu_0|\) is small enough, i.e. for \(n\) large enough. In particular,

Hence, using the above estimates,

which contradicts assumption (11). This completes the proof. \(\square \)

6 Noisy input signals and random dynamical systems

There is an extensive theory of random dynamical systems; see, for example, Arnold [4] and Crauel and Kloeden [7]. Such systems are typically generated by Itô stochastic differential equations or random ODEs and their infinite-dimensional counterparts. In this theory, random dynamical systems have a skew-product flow structure, with the noise represented abstractly by a driving system acting on a canonical probability space.

This is very convenient for theoretical purposes, but it can lead to difficulties in specific applications, in particular when the noise input itself is perturbed; see [21]. This paper considers exactly such cases. There it is much more convenient to use a pathwise two-parameter semigroup, or process, structure as in Definition 1, following Cui & Kloeden [8]. In this set-up, a sample path of the noise input is denoted by \(\eta(t,\omega)\), where \(\omega \in \varOmega\). Here \(\varOmega\) is the sample space of some underlying probability space \((\varOmega, {\mathcal {F}},{\mathbb {P}})\), whose details are not important for the pathwise considerations here.

The results for the model (1)–(2) given in the previous sections then apply pathwise for each given sample path \(\eta (t,\omega )\) of the input signal. All terms there could be labelled by this \(\omega \) to indicate their dependence on this particular input sample path, such as the solutions \(x(t,t_0,x_0;\omega )\), \(a(x,t;\omega )\) and the pullback attractor component sets \(A(t;\omega )\).

Assumption 10 uses the \(L^1\) norm to measure the distance between input signals. This allows the signals to differ considerably on very short time intervals, provided that the integrated difference remains small. This is particularly useful for noisy perturbations of a reference signal, especially a deterministic one.
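The Python sketch below illustrates this point with a hypothetical reference signal \(\sin t\) and a tall but very narrow perturbation. The perturbed signal is far from the reference in the supremum norm. Yet it is close to the reference in the \(L^1\) norm on \([0,10]\), the sense in which Assumption 10 measures closeness. The signal, spike height and spike width are assumptions chosen for illustration.

```python
import numpy as np

s = np.linspace(0.0, 10.0, 100001)              # grid with spacing 1e-4
reference = np.sin(s)                            # hypothetical deterministic reference signal
spike = 5.0 * (np.abs(s - 3.00005) < 1e-3)       # height 5, width about 2e-3
perturbed = reference + spike

diff = np.abs(perturbed - reference)
sup_distance = float(diff.max())
l1_distance = float(np.sum(0.5 * (diff[1:] + diff[:-1]) * np.diff(s)))  # trapezoidal rule
print(f"sup distance = {sup_distance:.3f}, L1 distance = {l1_distance:.4f}")
```

A noise burst of this kind would violate a closeness condition stated in the supremum norm, but only contributes about 0.01 to the \(L^1\) distance.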

7 Practical implications

The model (1)–(2) considered here is based on the slightly more general model introduced in [17]. That model expresses mathematically a hypothesis about the most general principle at work in the brain that enables cognitive functions, a principle that could potentially be implemented in future artificial intelligent devices. This principle assumes the existence of two distinct tiers of self-organisation: one of the observable behaviour, and the other of the rules governing this behaviour. As applied to the brain, the first tier represents self-organisation of the neural activity. The second tier is self-organisation of the vector field, which governs neural activity and thereby ultimately controls the observable manifestations of cognition, namely bodily movements and speech. The second tier arises from the self-organised plasticity of the brain’s physical structure [6, 28]. These tiers are described by Equations (1) and (2), respectively, and the model under study represents a novel dynamical paradigm.

Importantly, neither the system (1)–(2) nor its more complicated version in [17] claims to model brain activity in full. Both are purely phenomenological, i.e. independent of the specific physico-chemical mechanisms or neural parameters giving rise to the brain’s vector field. Nevertheless, they can potentially provide considerable insight into the nature of cognition and intelligence as emergent functions of the brain [2].

In the general conceptual model from [17], the two tiers are coupled mutually: the vector field determines the behaviour, and the behaviour in turn affects the evolution of the vector field. This agrees with how neural activity in the brain affects the connection strengths between neurons and hence the resultant vector field of the brain. However, in [17] a very simple example with uni-directional coupling, as in (1)–(2), displayed cognitive functions typical of artificial neural networks. This suggested that mutual coupling might not be necessary for their emergence. The model (1)–(2) with uni-directional coupling still features the critically important self-organised plasticity of the vector field. It also has the advantage of revealing phenomena that would be blurred or masked under mutual coupling, and it allows a more transparent analysis.

Both models state that external signals can influence the rules of behaviour but do not fully determine them. Namely, the behavioural rules \(a\) evolve according to (2) regardless of whether the stimulus \(\eta(t)\) is zero, because the function \(c\) is generally not identically zero. However, a nonzero \(\eta(t)\) modifies the function \(c\) in real time and hence affects the evolution of \(a\).
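A deliberately simple toy illustrates the distinction between intrinsic and stimulus-driven evolution of the rules. It is not the model of [17]. The vector field is restricted to the hypothetical family \(a(x,t) = -\alpha(t)\,(x - m(t))\), and a hypothetical rule \(c\) sets \(\dot\alpha = 1 - \alpha\) and \(\dot m = -m + \eta(t)\). The Python sketch integrates both tiers with the forward Euler method. The function name `simulate` and all parameter values are illustrative assumptions.

```python
def simulate(eta, t_end=20.0, h=1e-3, alpha0=0.2, m0=0.0, x0=2.0):
    """Forward-Euler simulation of a hypothetical two-tier toy model.

    Tier 2 (rules): the vector field a(x, t) = -alpha(t) * (x - m(t)) is encoded by (alpha, m),
    which evolve by a hypothetical rule c: alpha' = 1 - alpha and m' = -m + eta(t).
    Tier 1 (behaviour): the observed state obeys x' = a(x, t).
    Returns the final values (alpha, m, x).
    """
    alpha, m, x = alpha0, m0, x0
    for k in range(int(round(t_end / h))):
        t = k * h
        a_at_x = -alpha * (x - m)    # current vector field evaluated at the current state
        alpha += h * (1.0 - alpha)   # intrinsic change of the rules, present even if eta == 0
        m += h * (-m + eta(t))       # stimulus-driven change of the rules
        x += h * a_at_x              # behaviour follows the current rules
    return alpha, m, x

no_input = simulate(lambda t: 0.0)
step_input = simulate(lambda t: 1.0)
print("eta = 0:", no_input)
print("eta = 1:", step_input)
```

With \(\eta \equiv 0\), the parameter \(\alpha\) still moves from 0.2 to about 1, so the rules evolve without any stimulus. A constant stimulus additionally shifts \(m\) and hence the state at which \(x\) settles.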

The potential benefit of this conceptual model to artificial intelligence (AI) lies in the possibility of building a device that implements the function \(c\) in (1)–(2), designed to specification for the task at hand. In this way, the vector field \(a\), which controls the observable behaviour, would evolve under the influence of the sensory stimulus \(\eta\) in a transparent manner. This would make the operation of the whole device interpretable, in line with the pressing need for explainable AI [27].

Assume that the model (1)–(2) is feasible, as supported by several demonstrations with simplified special cases, for example in [17]. Then our results could help in assessing the robustness and predictability of cognitive processes and of decision-making in biological or artificial intelligent systems.

Namely, we evaluated how the solutions \(x(t)\) change in response to a slight change in the sensory signal \(\eta(t)\), such as when the same object is seen from a different angle or when one hears a distorted message. Our results suggest that, over finite time intervals, the change in the solution is at most proportional to the change in the input signal. Therefore, we predict that a small change in the signal would not drastically alter the observed behaviour represented by the individual trajectories and approximated by the non-autonomous attractors.
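For a hedged, finite-time illustration of this kind of estimate, take the hypothetical scalar ODE \(\dot x = -x + \eta(t)\). The difference \(e\) of two solutions with the same initial value satisfies \(\dot e = -e + (\eta_1 - \eta_2)\). Hence \(|e(t)| \le \int_{t_0}^{t} |\eta_1(s) - \eta_2(s)|\,ds\), so the change in the solution is bounded by the \(L^1\) change in the input. The Python sketch checks the discrete counterpart of this bound for forward Euler with step \(h < 1\). The model, the perturbation \(s\cos 5t\) and the function names are assumptions for illustration.

```python
import math

T, h = 10.0, 1e-3
N = int(round(T / h))

def solve(eta, x0=0.0):
    """Forward-Euler value at t = T of the hypothetical model x' = -x + eta(t), x(0) = x0."""
    x = x0
    for k in range(N):
        x += h * (-x + eta(k * h))
    return x

def l1_left_sum(d):
    """Left Riemann sum of |d| on the Euler grid: the discrete L1 norm on [0, T]."""
    return h * sum(abs(d(k * h)) for k in range(N))

reference = math.sin
results = []
for size in (1e-1, 1e-2, 1e-3):
    perturbation = lambda t, s=size: s * math.cos(5.0 * t)  # hypothetical input perturbation
    perturbed = lambda t, p=perturbation: reference(t) + p(t)
    change_x = abs(solve(perturbed) - solve(reference))
    change_eta = l1_left_sum(perturbation)
    results.append((change_x, change_eta))
    print(f"size {size:g}: |change in x(T)| = {change_x:.2e} <= L1 change in eta = {change_eta:.2e}")
```

The discrete bound holds exactly because each Euler step gives \(|e_{k+1}| \le (1-h)|e_k| + h|d_k| \le |e_k| + h|d_k|\).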

Importantly, using non-autonomous attractors, as opposed to standard ones, allowed us to work around a known issue: even a tiny amount of noise can drastically modify the system behaviour if the dynamical system is close to a critical point [14]. Standard attractors were originally introduced for autonomous dynamical systems. They were later also used to describe the long-term behaviour of non-autonomous systems subjected to deterministic stationary oscillatory stimuli, such as periodic, quasiperiodic or deterministically chaotic fluctuating signals. In both settings they are static subsets of the phase space. Examples are invariant tori, or periodic orbits on such tori, which often arise in the context of synchronisation [5].

If the applied signal is distorted by the addition of noise, attractors in the classical sense disappear. In this case, if the noise-free system was close to a bifurcation, the observed noise-induced trajectory can depart considerably from its attractor; [1] gives just one of a plethora of examples. Predicting the system’s response to random perturbations therefore requires knowledge of the bifurcation diagram and of the system’s proximity to any bifurcations. Alternatively, it requires knowledge of the structure of the phase space with its manifolds and limit sets. In practical applications, neither might be readily available. In the brain in particular, the number of parameters, such as inter-neuron connections and excitability thresholds of individual neurons, is gigantic. Obtaining its full multi-parameter bifurcation diagram or revealing the manifolds does not seem feasible.

However, moving from classical to non-autonomous attractors allows one to work around this issue. A non-autonomous attractor, either pullback or forward, is a subset of the “phase space–time”. Describing the behaviour of the same non-autonomous system in terms of non-autonomous attractors effectively absorbs the bifurcation into the attractor itself. Namely, for every possible stimulus there is a separate non-autonomous attractor in the form of a single spatio-temporal set approached by individual phase trajectories in the “phase space–time”.
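The pullback construction behind such a spatio-temporal set can be sketched numerically. Take the hypothetical scalar ODE \(\dot x = -x + \eta(t)\) with a quasi-periodic input, and observe the solutions \(x(0,-T,x_0)\) at the fixed time 0 while starting them ever earlier at \(-T\). These values collapse onto a single value, the time-0 component of the pullback attractor, which here is a single trajectory. The input signal, the initial values and the function names are assumptions for illustration.

```python
import math

def eta(t):
    """Hypothetical quasi-periodic input signal (an assumption for illustration)."""
    return math.sin(t) + 0.5 * math.sin(math.sqrt(2.0) * t)

def evolve(x0, t_start, t_end=0.0, h=1e-3):
    """Forward-Euler approximation of x(t_end, t_start, x0) for the toy ODE x' = -x + eta(t)."""
    n = int(round((t_end - t_start) / h))
    x = x0
    for k in range(n):
        x += h * (-x + eta(t_start + k * h))
    return x

spreads = []
for T in (5.0, 10.0, 20.0):
    # Pullback: keep the observation time 0 fixed and move the starting time -T into the past.
    finals = [evolve(x0, -T) for x0 in (-10.0, 0.0, 10.0)]
    spreads.append(max(finals) - min(finals))
    print(f"T = {T:4.1f}: values at time 0 span an interval of width {spreads[-1]:.2e}")
```

For this linear toy the width shrinks like \(20e^{-T}\), independently of the details of \(\eta\).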

Under the uniformity assumptions made above, our results imply that the non-autonomous attractors vary upper semi-continuously in the stimuli, regardless of the proximity of the underlying autonomous system to any critical state.

References

D.V. Alexandrov, I.A. Bashkirtseva, L.B. Ryashko, Stochastically driven transitions between climate attractors. Tellus A Dyn. Meteorol. Oceanogr. 66(1), 23454 (2014)

A.P. Alivisatos, M. Chun, G.M. Church, R.J. Greenspan, M.L. Roukes, R. Yuste, The brain activity map project and the challenge of functional connectomics. Neuron 74(6), 970–974 (2012)

D. Antonio, D. Zanette, D. López, Frequency stabilization in nonlinear micromechanical oscillators. Nat. Commun. 3, 806 (2012)

L. Arnold, Random Dynamical Systems (Springer-Verlag, Berlin, 1998)

A.G. Balanov, N.B. Janson, O.V. Sosnovtseva, D.E. Postnov, Synchronization: From Simple to Complex (Springer, Berlin, Heidelberg, 2009)

A. Citri, R.C. Malenka, Synaptic plasticity: multiple forms, functions, and mechanisms. Neuropsychopharmacology 33(1), 18–41 (2007)

H. Crauel, P.E. Kloeden, Non-autonomous and random attractors. Jahresbericht der Deutschen Mathematiker-Vereinigung 117, 173–206 (2015)

H. Cui, P.E. Kloeden, Invariant forward random attractors of non-autonomous random dynamical systems. J. Differ. Eqns. 265, 6166–6186 (2018)

D.W. Dong, J.J. Hopfield, Dynamic properties of neural networks with adapting synapses. Netw. Comput. Neural Syst. 3(3), 267–283 (1992)

A. Faisal, L. Selen, D. Wolpert, Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303 (2008)

R. FitzHugh, Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1(6), 445–466 (1961)

H. Haken, Synergetics: An Introduction: Nonequilibrium Phase Transitions and Self-Organization in Physics, Chemistry, and Biology (Springer-Verlag, Berlin, Heidelberg, 1977)

X. Han, P.E. Kloeden, Random Ordinary Differential Equations and Their Numerical Solution (Springer Nature, Singapore, 2017)

W. Horsthemke, R. Lefever, Noise-Induced Transitions (Springer, Heidelberg, 1984)

N.B. Janson, Non-linear dynamics of biological systems. Contemp. Phys. 53(2), 137–168 (2012)

N.B. Janson, P.E. Kloeden, Mathematical consistency and long-term behaviour of a dynamical system with a self-organising vector field. J. Dyn. Diff. Eqns. (2020). https://doi.org/10.1007/s10884-020-09834-7

N.B. Janson, C.J. Marsden, Dynamical system with plastic self-organized velocity field as an alternative conceptual model of a cognitive system. Sci. Rep. 7, 17007 (2017)

N.B. Janson, C.J. Marsden, Supplementary note to: dynamical system with plastic self-organized velocity field as an alternative conceptual model of a cognitive system. Sci. Rep. 7, 17007 (2017)

P.E. Kloeden, Pullback attractors of non-autonomous semidynamical systems. Stoch. Dyn. 3, 101–112 (2003)

P.E. Kloeden, Asymptotic invariance and the discretisation of non-autonomous forward attracting sets. J. Comput. Dyn. 3, 179–189 (2016)

P.E. Kloeden, V.S. Kozyakin, The perturbation of attractors of skew-product flows with a shadowing driving system. Discret. Contin. Dyn. Syst. 7, 883–893 (2001)

A. Stefanovska, P.V.E. McClintock (eds.), The Physics of Biological Oscillators - New Insights into Non-Equilibrium & Non-Autonomous Systems (Springer Nature, Switzerland, 2021)

P.E. Kloeden, M. Rasmussen, Non-Autonomous Dynamical Systems (Amer. Math. Soc, Providence, 2011)

P.E. Kloeden, M. Yang, Introduction to Non-Autonomous Dynamical Systems and Their Attractors (World Scientific Publishing Co. Inc, Singapore, 2020)

B. Lindner, J. Garcia-Ojalvo, A. Neiman, L. Schimansky-Geier, Effects of noise in excitable systems. Phys. Rep. 392, 321–424 (2004)

F. Romeiras, C. Grebogi, E. Ott, Multifractal properties of snapshot attractors of random maps. Phys. Rev. A 41, 784–799 (1990)

C. Rudin, Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215 (2019)

D.J. Schulz, Plasticity and stability in neuronal output via changes in intrinsic excitability: it’s what’s inside that counts. J. Exp. Biol. 209, 4821–4827 (2006)

M.I. Vishik, Asymptotic Behaviour of Solutions of Evolutionary Equations (Cambridge University Press, Cambridge, 1992)

W. Walter, Ordinary Differential Equations (Springer-Verlag, New York, 1998)

Additional information

The visit of PEK to Loughborough University was supported by the London Mathematical Society.

Appendix: Validity of Assumption 1 for the solutions of the ODE (1)

The following lemma verifies Assumption 1 for the solution \(x\) of the ODE (1).

Lemma 4

Under Assumptions 3, 4, 9, 10 and 11, there exists a \(T^* \ge 0\) with the following property. The solution \(x\) of the ODE (1) is Lipschitz continuous in the initial conditions in the absorbing set \(B\) on finite time intervals \([t_0,t_0+T]\), uniformly in \(t_0 \ge T^*\). That is, there exists a constant \(L_B > 0\), independent of \(t_0 \ge T^*\), such that

for all \(x_0, y_0 \in B\) and \(T^* \le t_0 \le \tau < t \le \tau + T\).

Proof

Consider the solutions \(x(t,\tau,x_0)\) and \(x(t,\tau,y_0)\) of the ODE (1) in the absorbing set \(B\), which is positively invariant. Then

so by the Grönwall inequality,

for all \(T^* \le t_0 \le \tau \le t \le t_0 + T\), so \(0 \le t - \tau \le T\). Here \(L_B\) is the local Lipschitz constant of the vector field c in the variable a. \(\square\)
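The Grönwall step can be checked numerically on a hypothetical example. The field \(f(t,x) = \sin x + \cos t\) is globally Lipschitz in \(x\) with constant \(L = 1\). The Grönwall inequality therefore gives \(|x(t,\tau,x_0) - x(t,\tau,y_0)| \le e^{L(t-\tau)}|x0 - y0|\) for every initial time \(\tau\). Because the Lipschitz constant is global here, the positively invariant set \(B\) plays no role. The Python sketch compares the observed amplification factor over \(T = 3\) with \(e^{LT}\). The field, the initial data and the function names are assumptions for illustration.

```python
import math

L = 1.0  # Lipschitz constant in x of the hypothetical field f(t, x) = sin(x) + cos(t)

def f(t, x):
    return math.sin(x) + math.cos(t)

def solve(x0, tau, T, n=3000):
    """Classical RK4 approximation of x(tau + T, tau, x0) for x' = f(t, x)."""
    h = T / n
    x = x0
    for k in range(n):
        t = tau + k * h
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return x

T = 3.0
gronwall_factor = math.exp(L * T)  # e^{L T}
worst_ratio = 0.0
for tau in (0.0, 10.0, 100.0):                  # several initial times
    for x0 in (-2.0, -0.5, 0.0, 1.0, 2.0):
        y0 = x0 + 1e-2
        ratio = abs(solve(x0, tau, T) - solve(y0, tau, T)) / abs(y0 - x0)
        worst_ratio = max(worst_ratio, ratio)
print(f"largest amplification {worst_ratio:.3f} <= e^(L T) = {gronwall_factor:.3f}")
```

The same factor \(e^{LT}\) bounds the amplification for every initial time tried. This mirrors the uniformity in \(t_0\) asserted by the lemma.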

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Janson, N.B., Kloeden, P.E. Robustness of a dynamical systems model with a plastic self-organising vector field to noisy input signals. Eur. Phys. J. Plus 136, 720 (2021). https://doi.org/10.1140/epjp/s13360-021-01662-y

DOI: https://doi.org/10.1140/epjp/s13360-021-01662-y