Abstract

The simple story line that ‘Gell-Mann and Zweig invented quarks in 1964 and the quark model was generally accepted after 1968 when deep inelastic electron scattering experiments at SLAC showed that they are real’ contains elements of the truth, but is not true. This paper describes the origins and development of the quark model until it became generally accepted in the mid-1970s, as witnessed by a spectator and some-time participant who joined the field as a graduate student in October 1964. It aims to ensure that the role of Petermann is not overlooked, and Zweig and Bjorken get the recognition they deserve, and to clarify the role of Serber.

1 Introduction

Reactions to the death of Murray Gell-Mann, on 24 May 2019, suggest a need to give proper credit to those involved in the development of the quark model, to describe how it appeared to one of those involved,Footnote 1 and to try to lay to rest a number of myths.

In the July/August 2019 CERN Courier, the late Lars Brink rightly described Murray, who was the undisputed leader of theoretical particle physics through the 1950s and 1960s, as ‘one of the great geniuses of the twentieth century’ [20]. Reports of his death all mentioned his work on quarks, which some highlighted. Physics World, for example, headed its tribute [6] ‘Quark Pioneer Murray Gell-Mann dies’, adding in a subheading that he made ‘a number of breakthroughs including predicting the existence of quarks’, wrongly giving him sole credit for developments which his insistence, initially for good reasons, that quarks are purely ‘mathematical entities’ discouraged.

Gell-Mann created the conditions that made the discovery of quarks possible. But, according to the published record, neither he nor George Zweig, who is usually considered the co-discoverer, and deserves far more credit and recognition than he usually gets, was the first to submit a paper on the entities that Murray dubbed quarks. That honour goes to André Petermann [80] who just pipped Gell-Mann [44] and Zweig [95, 96, 97] at the post, but—although he was a CERN staff member—was not mentioned in the CERN Courier.

Gell-Mann—whose paper on quarks was ‘stimulated’ by Robert Serber—advocated abstracting algebraic relations from the quark model, comparing this [45] to ‘a method sometimes employed in French cuisine: a piece of pheasant meat is cooked between two slices of veal which are then thrown away’. In contrast, Serber treated quarks as real particles, and Petermann and—to a far greater extent—Zweig used what Gell-Mann was soon derisively calling the ‘naïve’ or ‘concrete’ quark model to derive results that went far beyond what could be inferred from the associated ‘current algebra’ as it became known.

Robert Serber—realised that Gell-Mann and Ne’eman’s SU(3) classification of strongly interacting particles can be explained by assuming that they are composed of three constituents, and ‘stimulated’ Gell-Mann to think further about this idea. His unpublished calculation of the magnetic moments of protons and neutrons was the first use of the ‘concrete quark model’. Courtesy AIP Emilio Segrè Visual Archives, Physics Today Collection

Murray Gell-Mann—coined the name quarks, and was the grandfather, but not the sole father, of the quark model. He insisted that quarks are mathematical entities, but later claimed that by this he meant that they are permanently imprisoned inside the observed particles, as is now believed to be the case. Courtesy University of Chicago Photographic Archive, [apf06342], Special Collections Research Center, University of Chicago Library

George Zweig—father of the ‘Concrete Quark Model’. Photo courtesy G Zweig

André Petermann—in a paper (in French) submitted 5 days before Gell-Mann’s, derived mass formulae from a constituent model, noting that the constituents would have non-integral charges. Photo, taken in Manchester in 1954, the year after he and Stueckelberg published the first paper on the renormalization group, courtesy CERN

Richard (Dick) Dalitz—my supervisor, who showed that the quark model accommodated all newly discovered particles. Portrait taken in May 1961 by Gian-Carlo Wick at Brookhaven National Laboratory. Courtesy AIP Emilio Segrè Visual Archives

James (Jim) Bjorken, generally known as bj—the only person to predict that deep inelastic electron scattering from nucleons would be like that from point-like particles, who developed the physical description that became known as the parton model, before and independently of Feynman. Photo, taken in the late 1970s, Courtesy SLAC National Accelerator Laboratory

2 Prehistory and context

The prehistory of the quark model begins in 1949 with Fermi and Yang’s model [35] of the pi meson as a bound state of a nucleon and an antinucleon. They argued that the probability that all the particles that were being discovered were ‘really elementary’, as then assumed, ‘becomes less and less as their number increases’. Sakata [84] suggested extending the model to include the strange lambda baryon as a constituent of strange K mesons, but this proposal ran into trouble as more and more strange and non-strange particles were discovered. Order was imposed on the growing zoo of particles by Gell-Mann and Nishijima’s introduction of the hypercharge label, and Gell-Mann and Ne’eman’s use of the mathematical group SU(3)Footnote 2 to classify the known baryons and mesons as members of families of eight related particles. These steps are analogous to the realisation that chemical elements should be labelled by their atomic numbers and Mendeleev’s invention of the periodic table. The SU(3) scheme made a number of successful predictions, and was generally accepted following the discovery in 1964 of the spin 3/2 Ω− baryon, with the mass predicted by Gell-Mann, who had postulated its existence as the missing member of a tenfold SU(3) family.

No physical particles were initially assigned to the fundamental threefold (triplet) SU(3) family which we now know houses the three lightest quarks. Rajasekaran has reported that when Gell-Mann was lecturing on the ‘eightfold way’, as he called his classification, at a summer school in Bangalore in 1961, Dick Dalitz—who was also speaking in the school—repeatedly asked him why he was ignoring the triplets, but Gell-Mann evaded the question [82]. Two years elapsed before the idea of using the triplet was taken up, and over ten more years then passed before the idea that hadrons are made of quarks and gluons (that hold the quarks together) became generally accepted.Footnote 3 This was because the very idea that hadrons have fundamental constituents had fallen out of favour.

As the number of observed hadrons proliferated in the 1950s and early 1960s, it came to be thought that none enjoys a special status as ‘elementary’, but rather they are made of each other, in a ‘bootstrap model’, in which—it was hoped—their properties would be determined by self-consistency. In 1961, Geoffrey Chew, the leader of this ‘nuclear democracy’ movement, stated [23] that he believed the ‘conventional association of fields with strongly interacting particles to be empty’, and that with respect to strong interactions field theory was not only ‘sterile’ but ‘like an old soldier, is destined not to die but just to fade away’. There was no place for aristocratic quarks in this philosophy, to which many—perhaps most—particle physicists then subscribed.

3 The birth of quarks

At the end of his paper on quarks [44] Murray Gell-Mann wrote ‘These ideas were developed during a visit to Columbia University in March 1963; the author would like to thank Professor Robert Serber for stimulating them’. Gell-Mann and Serber have given differing accounts of what happened.

In his memoirs [87], Serber writes that a couple of weeks earlier, in order to prepare themselves for the colloquium that Gell-Mann was scheduled to deliver, he and his colleagues asked Gian-Carlo Wick to give a talk about the irreducible representations of the SU(3) symmetry group. The next day, it occurred to Serber that he could reproduce Gell-Mann and Ne’eman’s SU(3) families ‘by a low-brow method by considering a particle now called a quark that could exist in three states…The suggestion was immediate: the baryons and mesons were not themselves elementary particles but were made of quarks—the baryons of three quarks, the mesons of quark and anti-quark’. On the day of the talk:

‘Before Murray's colloquium, I took him to lunch at Columbia's Faculty Club and explained this idea to him. He asked what the charges of my particles were, which was something I hadn’t looked at. He got out a pencil and on a paper napkin figured it out in a couple of minutes. The charges would be +2/3 and −1/3 proton charges – an appalling result. During the colloquium Murray mentioned the idea and it was discussed at coffee afterwards…. Bacqui [sic] Beg … says he recalls that … Murray had said that the existence of such a particle will be a strange quirk of nature, and quirk was jokingly transformed into quark’.

Gell-Mann, on the other hand, recalled many years later [48] that:

‘On a visit to Columbia, I was asked by Bob Serber why I didn’t postulate a triplet of what we would now call SU(3) of flavor, making use of my relation 3 × 3 × 3 = 1 + 8 + 8 + 10 to explain baryon octets, decimets, and singlets. I explained to him that I had tried it. I showed him on a napkin (at the Columbia Faculty Club, I believe) that the electric charges would come out +2/3, −1/3, −1/3 for the fundamental objects’.

These accounts can only be reconciled if Gell-Mann’s question to Serber about charges was rhetorical. There seems no reason to doubt that Serber took it to be a question to which Gell-Mann did not already know the answer (although it would hardly have taken him a couple of minutes to figure out). Some years after the event, Gell-Mann told Zweig that ‘Serber hadn’t told him anything he did not already know’ [59], in which case it was generous of him to acknowledge Serber for stimulating his ideas. It would be surprising if Murray had not already thought of using the triplet representation (about which Dalitz quizzed him in 1961), but given his strong support for the bootstrap philosophy and the idea of nuclear democracy, he would presumably have quickly dismissed it.
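The napkin arithmetic can be checked by brute-force enumeration. The following is a minimal sketch, assuming the now-standard labels u, d and s for the three states (names not used in either account) and the charges +2/3, −1/3, −1/3 quoted by Gell-Mann. It confirms that three-quark combinations carry only the integral charges seen for baryons, that quark and anti-quark combinations carry only those seen for mesons, and that the state counting matches 3 × 3 × 3 = 1 + 8 + 8 + 10.

```python
from fractions import Fraction
from itertools import combinations_with_replacement, product

# Charges in units of the proton charge, as quoted in the text.
# The labels u, d, s are the modern names and are an assumption here.
QUARK_CHARGE = {
    "u": Fraction(2, 3),
    "d": Fraction(-1, 3),
    "s": Fraction(-1, 3),
}


def baryon_charges():
    """Set of total charges over all three-quark combinations."""
    return {
        sum(QUARK_CHARGE[q] for q in combo)
        for combo in combinations_with_replacement("uds", 3)
    }


def meson_charges():
    """Set of total charges over all quark plus anti-quark combinations."""
    return {
        QUARK_CHARGE[q] - QUARK_CHARGE[qbar]
        for q, qbar in product("uds", repeat=2)
    }


# 27 ordered three-quark states in total; the unordered triples are the
# fully symmetric combinations, which fill the tenfold family.
n_ordered = len(list(product("uds", repeat=3)))
n_symmetric = len(list(combinations_with_replacement("uds", 3)))

print(sorted(baryon_charges()))
print(sorted(meson_charges()))
print(n_ordered, n_symmetric)
```

Exact fractions are used rather than floating-point numbers so that the integral totals come out exactly.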

In any case, Serber’s memoirs continue:

‘A day or two later, it occurred to me that while the quarks’ fractional charges were strange, the magnetic moments would not be. The magnetic moments depend on the ratio of charge to mass. In the nucleon the quark would have an effective mass one-third of the nucleon mass, so the one thirds would cancel out in the ratio and the quark would have integral nuclear magnetic moments. A simple calculation gave the result that the proton would have three nuclear magnetic moments and the neutron would have minus two, values quite close to the observed ones. That convinced me of the correctness of the quark theory. At that point, I should have published; but I never got round to it. Bacqui Beg suggested to me that the reason was that the idea seemed so obvious to me that I thought it must be familiar to the experts in the field. However, it was news to Murray, and sometime later he told Marvin Goldberger that he had never thought of it.’

This is the first recorded use of the quark model as more than a mnemonic or source from which to abstract algebraic relations. While his results were ‘quite close to the observed ones’, the fact that (without reference to masses) the model predicts that the ratio of the magnetic moments is −1.5, in good agreement with the observed value of −1.46, is perhaps more impressive (this result was later derived from SU(6) symmetry by Beg et al. [8], and then by Becchi and Morpurgo [7] using the quark model, without the need for SU(6)).
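Serber’s numbers can be reproduced in a few lines. This is a minimal sketch, not a reconstruction of his unpublished calculation: it assumes the textbook quark-model combinations μ_p = (4μ_u − μ_d)/3 and μ_n = (4μ_d − μ_u)/3, which follow from the standard spin-flavour wave function of the nucleon, and it takes each quark moment to be its charge divided by its mass in units of the nucleon mass, with the effective mass of one third quoted above. The function and variable names are illustrative.

```python
from fractions import Fraction


def quark_moment(charge, mass_fraction=Fraction(1, 3)):
    """Quark magnetic moment in nuclear magnetons.

    Assumes a moment proportional to charge / mass, with the quark mass
    given as a fraction of the nucleon mass (one third, as in the text).
    """
    return charge / mass_fraction


mu_u = quark_moment(Fraction(2, 3))   # quark of charge +2/3
mu_d = quark_moment(Fraction(-1, 3))  # quark of charge -1/3

# Textbook combinations for the proton (uud) and neutron (udd);
# these are an assumption of this sketch, not taken from the memoir.
mu_p = (4 * mu_u - mu_d) / 3
mu_n = (4 * mu_d - mu_u) / 3

# The effective mass cancels in the ratio, so it depends on charges alone.
ratio = mu_p / mu_n

print(mu_p, mu_n, ratio)
```

With these assumptions the proton comes out at three nuclear magnetons and the neutron at minus two, and the ratio of −3/2 is independent of the value chosen for `mass_fraction`.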

Gell-Mann’s Physics Letter on quarks [44] was received on 4 January 1964. Paul Frampton has informed me that when he was visiting Caltech some years later, Helen Tuck, Murray’s Personal Assistant, told him that Murray first submitted the paper to Physical Review Letters (which would have been his normal practice) but it was rejected—which made him furious. When it was submitted to Physics Letters, Jacques Prentki at CERN was the editor. Torleif Ericson, another CERN physicist, has told me (private communication, December 2022) that one day he observed a crowd spilling out of Prentki’s office into the corridor. They were discussing what Jacques should do with Murray’s paper, which had got thumbs down from referees. Torleif recalls that finally Jacques, ‘in his inimitable English accent’, said ‘Murray is a grown up with a reputation to lose. Everybody knows that with 1/3 charges you can get this. If he wants to make a fool of himself, I will let him. So I accept his article.’ Having known Prentki, I am not surprised that he had already considered using the fundamental representation and knew that it would require non-integral charges, but I doubt more than a handful of others had thought about it.

In his Physics Letter, Gell-Mann proposed that algebraic relations should be abstracted from a ‘formal field theory model’ of quarks and used as a constraint on ‘bootstrap’ models. He only considered the possibility that quarks might be real particles in the concluding paragraph, in which he wrote that ‘It is fun to speculate about the way quarks would behave if they were physical particles of finite mass (instead of purely mathematical entities as they would be in the limit of infinite mass)’, pointed out that one would be stable, and concluded that ‘A search for stable quarks…at the highest energy accelerator would help to reassure us of the non-existence of real quarks’.

André Petermann’s paper [80] ‘Properties of Strangeness and a Mass Formula for Vector Mesons’, written in French, was received by Nuclear Physics on 30 December 1963, but it was not published until March 1965 and went almost unnoticed for fifty-five years. It is based on the idea that hadrons are all composed of three constituents and that, adopting Gell-Mann’s nomenclature, the strange quark is heavier than the non-strange quarks. He used this idea to interpret the Gell-Mann–Okubo SU(3)-based relation [42, 72] between baryon masses, went on to derive a relation between the masses of the vector mesons, and then used the quark mass difference he inferred from baryon masses to calculate mass differences between vector mesons. Towards the end of the paper, he wrote of the constituents he proposed ‘if one wants to keep charge conservation, which is highly desirable, the particles must then have non-integral charges. This is unpleasant, but cannot, after all, be excluded on physical grounds’. This is the quark model.

His formula relating the masses of the φ, K* and ρ mesons had actually been derived earlier by Okubo [73] on the basis of SU(3) symmetry alone, but Petermann (followed closely by Zweig) was the first to publish an interpretation of mass formulae in terms of constituents and relate meson mass differences to baryon mass differences. It might be suspected that publication of his paper was delayed by referees, but there is no evidence of resubmission or of any revision. Another possible explanation is that he had not returned the proofs, which is plausible in the opinions of those who knew him, and is known to be the reason for a seven-year delay between submission and publication of another of his papers (A De Rujula, private communication).
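The logic of a constituent interpretation of mass formulae can be illustrated with a toy additive model in which each strange constituent adds a fixed amount to the mass. The sketch below is not a reconstruction of Petermann’s calculation. The relations are written linearly in the masses, which is an assumption (the text does not say whether masses or squared masses enter), and the numerical values are approximate isospin-averaged masses in MeV supplied here for illustration only. All function names are hypothetical.

```python
# Approximate isospin-averaged masses in MeV. These values are assumptions
# supplied for illustration; they are not taken from the paper discussed.
BARYON = {"N": 938.9, "Lambda": 1115.7, "Sigma": 1193.2, "Xi": 1318.1}
VECTOR = {"rho": 775.3, "Kstar": 893.7, "phi": 1019.5}


def additive_mass(base, n_strange, delta):
    """Toy mass: a common base plus delta for each strange constituent."""
    return base + n_strange * delta


def gmo_residual(m):
    """Fractional mismatch of the relation 2(N + Xi) = 3 Lambda + Sigma."""
    lhs = 2 * (m["N"] + m["Xi"])
    rhs = 3 * m["Lambda"] + m["Sigma"]
    return (lhs - rhs) / rhs


def vector_residual(m):
    """Fractional mismatch of the equal-spacing relation phi + rho = 2 K*."""
    lhs = m["phi"] + m["rho"]
    rhs = 2 * m["Kstar"]
    return (lhs - rhs) / rhs


# In the toy model both relations hold identically, whatever base and delta.
toy_baryons = {
    "N": additive_mass(900, 0, 150),
    "Lambda": additive_mass(900, 1, 150),
    "Sigma": additive_mass(900, 1, 150),
    "Xi": additive_mass(900, 2, 150),
}
toy_vectors = {
    "rho": additive_mass(700, 0, 150),
    "Kstar": additive_mass(700, 1, 150),
    "phi": additive_mass(700, 2, 150),
}

print(gmo_residual(toy_baryons), vector_residual(toy_vectors))
print(gmo_residual(BARYON), vector_residual(VECTOR))
```

With the assumed masses both relations hold to better than one per cent, which is the kind of agreement that makes a common constituent mass difference for baryons and mesons look plausible.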

Petermann (or Peterman as he spelled his name in most of his other publications), who was a recluse, did not follow up his paper, and it seems he only drew attention to it once, when asking Alvaro De Rújula to be his scientific executor.Footnote 4 It was only referenced once, in a 1975 paper on a quark search at the CERN Intersecting Storage Rings, before Alvaro referred to it in 2004 [30], publicised it at the end of a talk by Zweig in the CERN Auditorium in September 2012, and in 2014 published a note about it [31]. This is not surprising: the paper was in French, the title did not reflect its contents, and Zweig’s much more comprehensive treatment had rendered it redundant by the time of its delayed publication.

George Zweig proposed quarks (or aces as he called them), independently of Gell-Mann and of Petermann, while visiting CERN. His work is reported in a 26-page preprint [95] dated 17 January 1964, which was replaced by an 80-page version dated 24 February 1964 [96], and followed up in lectures at Erice [97]. Zweig has written fascinating accounts of his work and its reception [98, 99, 100, 101] by Feynman, Gell-Mann and others, and (as described below) explained why his preprints were never published.Footnote 5

While at CERN, Zweig was supported by grants which paid an overhead to CERN, and provided $1,200 for publication costs. He wanted to publish his work in the Physical Review, but was prevented by the Head of the Theory Division, Leon van Hove (who later, as joint Director General of CERN, played a key role in promoting construction of the proton anti-proton collider). Van Hove told him that outputs from the CERN Theory Division had to be published in European journals, and instructed the theory secretariat not to type any of his papers (this was a real problem for Zweig who could not type and did not have a typewriter, although the late and widely lamented Tanya Fabergé, who for decades ran the Theory Division Secretariat, disobeyed instructions and typed his second preprint). He was scheduled to give a seminar, titled ‘Dealer’s choice: Aces are Wild’, but van Hove took down the announcement and told him ‘You are not allowed to speak at CERN’. To this day, Zweig does not know why he encountered such animus, but suspects it stemmed from his insistence on publishing in the Physical Review, which went counter to van Hove’s efforts to promote European physics.

Zweig was led to discover quarks by the data: in accounts of his work he insists that it was a discovery not an invention. The cornucopia of impressive results in his preprints includes the famous Zweig rule, which provided an explanation for the very surprising fact that φ mesons decay much more frequently into two K mesons than into a ρ and a pi meson. In the quark model, the latter decay involves the annihilation of the constituents of the φ whereas in the former they are rearranged, which Zweig argued would be favoured dynamically. His papers not only laid the foundations of the use of the quark model to describe the properties of hadrons, but foresaw [96] that ‘high momentum transfer experiments may be necessary to detect aces’—which the SLAC deep inelastic scattering experiments later did.

4 Reactions and objections

When Zweig returned from CERN to Caltech, where he had been Feynman’s student, he told Feynman and Gell-Mann about his work. He recalls [101] that Feynman disliked the Zweig rule and espoused the bootstrap view that in the correct theory of strong interactions it would not be possible to say which particles are elementary, while Gell-Mann’s reaction was ‘Oh, the concrete quark model. That’s for blockheads’. Zweig, who is today based at MIT, started to make a transition to neurobiology in 1969: had he remained in the field, he might have received more of the recognition he deserves.

Those who worked on ‘concrete quarks’ in the 1960s thought that they had not been observed because they are very heavy (5 GeV or more), which led to many quark searches, as advocated—in different spirits—by both Gell-Mann and Zweig. I remember Viki Weisskopf, who was then the Director General of CERN, citing the search for quarks as a reason for building the CERN Intersecting Storage Rings in a lecture in Oxford in 1964. In a model with very heavy but tightly bound quarks, the binding energies and hence quark wave functions of (e.g.) a pi meson and the much heavier—but almost equally tightly bound—K meson would be very similar. This resolved the puzzle of how, as required by SU(3) symmetry, particles with such different masses could have otherwise very similar properties. Furthermore, Morpurgo pointed out that, although very tightly bound, quarks could move non-relativistically inside light mesons and baryons, as assumed by quark modellers [69]. This removed one objection that had been raised, but the question of how tightly bound quarks could behave effectively as free particles was not really answered until the advent of QCD.

A far more serious objection was that since quarks must have half integral spins then, according to the fundamental ‘spin-statistics’ theorem, their wave functions should be anti-symmetric under the exchange of all their labels, or in the usual jargon: they should be Fermions, not Bosons. The three quarks that form the ground states of the baryons are symmetric under the interchange of their quark and spin labels, and the theorem therefore required their space wave functions to be anti-symmetric. Anti-symmetry implies spatial variations that lead to large internal kinetic energies, and would normally only be expected for excited states. While not ruled out in principle, an anti-symmetric ground state space wave function would require a bizarre form for the inter-quark force.

In his preprints Zweig treated quarks as bosons, without comment. He has told me that he was aware of the problem, but assumed that—as the model otherwise worked so well—an explanation would eventually be found. The problem was not mentioned by Petermann or Serber, or by Gell-Mann although he was aware of it,Footnote 6 as others must have been, and he told me many years later that it was his main objection to concrete quarks, as he has written [48].

In fact, Greenberg [50] soon pointed out that the ground state space wave function could be symmetric if quarks obey ‘para-statistics of order three’ rather than Fermi statistics. This suggestion, which relied on a very unfamiliar and seemingly abstract idea, was too radical for most people, and neither it nor Han and Nambu’s related but different model [55], in which three quarks are also replaced by nine, with integral charges, found much favour. Greenberg’s proposal is equivalent to endowing quarks with a new three-valued internal label in which the wave functions of baryons are anti-symmetric, allowing the spatial ground state wave functions to be symmetric.Footnote 7 This label is now called colour and this proposal is known to be correct. However, sceptics were not convinced at the time, and for many years few theorists took the quark model seriously.
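In modern notation the argument can be summarised as follows. The symbols and the factorised form are assumptions of this sketch and do not appear in the papers cited: the ground-state baryon wave function is written as a product of space, spin-flavour and colour parts, with the colour part proportional to the totally anti-symmetric symbol on three values.

```latex
\begin{align}
  \Psi_{\text{baryon}}
    &= \psi_{\text{space}} \;\chi_{\text{spin-flavour}} \; C_{abc},
  \qquad
  C_{abc} = \frac{1}{\sqrt{6}}\,\epsilon_{abc},
  \quad a, b, c \in \{1, 2, 3\}, \\
  \epsilon_{bac} &= -\,\epsilon_{abc}
  \;\;\Longrightarrow\;\;
  \psi_{\text{space}} \;\chi_{\text{spin-flavour}}
  \ \text{must be symmetric under the exchange of any two quarks.}
\end{align}
```

Since the spin-flavour part of the ground state is symmetric, the colour factor supplies the overall sign change that Fermi statistics demands and leaves the space wave function free to be symmetric.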

5 Hadron spectroscopy

One person who did take concrete quarks seriously was Dick Dalitz [3], who became the leading proponent of the ‘naïve’ quark model, which he first discussed in his influential 1965 Les Houches summer school lectures [27]. In these lectures he gave the first detailed description of the expected spectrum of baryons in which one of the quarks carries one or two units of orbital angular momentum. This spectrum successfully accommodates the particles that were then known and the many others discovered later.Footnote 8

While it was not possible to ignore what Dalitz in September 1965 called the ‘parallelism … between the data and the simple quark model’ [28], it seemed possible, as he conceded, that it ‘might simply reflect the existence of general relationships which would also hold in a more sophisticated and complicated theory of elementary particle stuff’. This hope was strongly encouraged by the discovery, in 1964, that some of the results of the quark model, including for example the ratio between the magnetic moments of the proton and neutron, could be derived by assuming that the underlying laws are unchanged under the simultaneous exchange of the labels that characterise particles’ spins and their SU(3) labels, which—it was proposed—should be combined in the symmetry group SU(6) [54, 85]. This kick-started a well-publicised race to find a ‘final’ theory that incorporated SU(6) in a larger symmetry group that respects Einstein’s theory of relativity. A symmetry group called U-twiddle-twelve was one of the candidates that was proposed, which led Gell-Mann to describe the whole enterprise as ‘twiddle-twaddle’. And so it proved when it was shown that no symmetry can combine internal and space–time degrees of freedom in a non-trivial wayFootnote 9 (except, as was discovered many years later, supersymmetries, which relate bosons to fermions). As Zweig [102] recently said of SU(6), which cannot be a real symmetry, and SU(3), which is exact in the limit of equal light quark masses if electromagnetic interactions are ignored, ‘they must be outputs, not inputs, of a field theory for aces with different masses and spin dependent forces’.

It was by no means obvious that the quark model would be able to accommodate the plethora of new particles then being discovered. The ‘discovery’, in a missing mass experiment at CERN, that the A2 meson is actually two states could have killed the model, which only has a place for one. The group first reported a narrow dip in the centre of the A2 peak in 1965, and in 1967, following improvements in the apparatus, claimed that it had a statistical significance of six standard deviations. Several other experiments observed a split, albeit with much smaller significance (≤ 3 σ). Eventually the effect died, although it still had some life in it as late as 1970 [86], and the quark model survived this and other potential set-backs. Its successes were much appreciated by experimentalists, but theorists’ reactions were at best lukewarm if not antagonistic.Footnote 10

In his introductory talk at the 1966 Berkeley Conference on High-Energy Physics, Gell-Mann had this to say [46] of ‘three hypothetical and probably fictitious quarks’:

‘It’s hard to see how deeply bound states of such heavy real quarks could look like \(\overline{\mathrm{q} }\) q, say, rather than a terrible mixture of \(\overline{\mathrm{q} }\) q \(\overline{\mathrm{q} }\) q \(\overline{\mathrm{q} }\) q and so on …the idea that mesons and baryons are made primarily of quarks is difficult to believe, since we know that in the sense of dispersion theory, they are mostly, if not entirely, made up of each other. The probability that a meson consists of a real quark and an anti-quark pair rather than two mesons or a baryon and an antibaryon must be quite small. Thus it seems that whether or not real quarks exist, the q and \(\overline{\mathrm{q} }\) we have been talking about are mathematical entities.’ Gell-Mann had clearly not accepted quarks as fundamental degrees of freedom in a field theory that describes all hadrons.

Later in the meeting he walked out when Dalitz, as rapporteur on Strong Interactions and Symmetries, based most of his talk [29] on the quark model with anti-symmetric ground state space wave functions for baryons (which he abandoned the next year). Dalitz noted that we faced ‘an unfamiliar situation, but one which has much qualitative correspondence with the experimental data… The [hadrons] should be regarded as rather analogous to molecules whose constituent atoms are quarks. Such a … model appears especially unfamiliar in terms of the conventional ideas of field theory today … if it works well, then it will be the task of field theory to show how such a model can arise from … some field theory.’

After his talk, Maglić (who had ‘discovered’ the split A2) asked Dalitz ‘Does this model rely on the existence of physical quarks?’. He replied ‘… if there do not exist real particles, this model has no interest’. Whether or not confined quarks are ‘real particles’ can be debated, but a field theory (QCD) was eventually discovered that provides a basis for the naïve quark model.

6 Deep inelastic electron scattering

In the same conference, in the discussion after his talk on Electromagnetic Interactions, Sid Drell (the Deputy Director of SLAC) said [32] that he would ‘very much like to see inelastic electron or muon cross sections measuredFootnote 11…Also there are some sum rules, asymptotic statements derived by Bjorken and others, as to how these inelastic cross sections behave in energy, … which can be checked experimentally’. This wish was fulfilled in September 1968 when Jerry Friedman, speaking on behalf of the Friedman-Kendall-Taylor group, presented the first results of the classic SLAC-MIT ‘deep inelastic’ electron scattering experiments at the International Conference on High-Energy Physics in Vienna (Friedman has given an account of the work of this group [38]). He reported that the scattering cross sections were much larger than generally expected, and that—to first approximation—the dimensionless ‘structure functions’ that characterise the cross-section depend only on the dimensionless variable ν/q² (which is defined below). The group realised that their results were suggestive of scattering from point-like objects, but decided by a vote that, against his wishes, Jerry should not say so (Friedman, private communication 2019). Wolfgang (‘Pief’) Panofsky, the Director of SLAC, was not aware of the vote and, speaking as rapporteur [74], said that ‘theoretical speculations are focused on the possibility that these data might give evidence on the behaviour of point-like, charged structures within the nucleon … The apparent success of the parametrization of the cross sections in the variable ν/q² in addition to the large cross section itself is at least indicative that point-like interactions are becoming involved.’

The SLAC results surprised almost everyone, but there were many further twists and turns before the interpretation suggested by Panofsky was generally accepted and a consensus finally emerged that, together with complementary measurements of neutrino scattering at CERN, the experiments proved the existence of quarks. One person who was not surprised was James (Jim) Bjorken—universally known as bj. Already in 1966 he had inferred, from a sum rule that he ‘derived’ (the reason for the quotes will be explained later) for electron scattering from polarised targets [12, 13], that inelastic scattering must be ‘comparable to scattering off point-like charges’. Bj went on to show, using the same techniques, that at high energy E the total cross section for electron–positron annihilation to hadrons should vary as 1/E², like that for annihilation into a muon and an anti-muon, and that the total cross section for neutrino scattering on protons and neutrons would be proportional to E.

Then in his 1967 Varenna summer school lectures [14] and in his talk at the 1967 SLAC conference Bjorken [15] discussed the sum rule derived by Adler (from Gell-Mann’s algebra of currents) for the difference between neutrino and anti-neutrino scattering on protons and neutrons, noting that ‘This result would also be true were the nucleon a point-like object, because the derivation is a general derivation. Therefore the difference of these two cross sections is a point-like cross section, and it is big.’ He then provided the following physical picture:

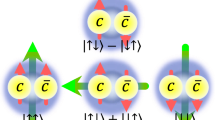

‘We assume that the nucleon is built of some kind of point-like constituents which could be seen if you could really look at it instantaneously in time ... If we go to very large energy and large q²... we can expect that the scattering will be incoherent from these point-like constituents. Suppose ... these point-like constituents had isospin one-half ... what the sum rule says is simply [N ↑] − [N ↓] = 1 for any configuration of constituents in the proton.Footnote 12 This gives a very simple-minded picture of this process which may look a little better if you really look at it, say, in the center-of-mass of the lepton and the incoming photon. In this frame the proton is ... contracted into a very thin pancake and the lepton scatters essentially instantaneously in time from it in the high energy limit. Furthermore the proper motion of any of the constituents inside the hadron is slowed down by time dilation. Provided one doesn’t observe too carefully the final energy of the lepton to avoid trouble with the uncertainty principle, this process looks qualitatively like a good measurement of the instantaneous distribution of matter or charge inside the nucleon’.

This is the physical picture that underlies the parton model, although the name was provided later by Feynman, who developed it independently as a basis for understanding proton-proton scattering [36].

The SLAC experiments measured the total cross section for scattering ‘virtual’ photons from a proton or neutron. If the target is not polarised, the cross section can be expressed in terms of two dimensionless ‘structure’ functions. They depend on the energy and momentum that the virtual photon transfers from the incoming electron to the target, which can be combined to form the relativistic four-component momentum vector, known as q. The structure functions depend on the squared mass of the virtual photon (q²) and a dimensionless variable x = q²/2ν, where ν is the four-dimensional scalar product q·p, p being the four-momentum of the target, in whose rest frame q·p is equal to the energy transfer times the mass of the target. Viewed in a frame of reference in which the target proton or neutron is moving rapidly, x can be interpreted as the fraction of its momentum carried by the quark that is struck by the virtual photon, as pointed out by Feynman.
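The kinematic definitions above can be collected in a short worked form. This is only a sketch: the symbols k and k′ (incoming and outgoing electron four-momenta), E and E′ (their energies in the target rest frame), M (target mass) and Q² are notation assumed here for illustration, and the sign convention Q² = −q² > 0 is adopted so that the scaling variable x is positive for the space-like photon exchanged in electron scattering.

```latex
\begin{align}
  q &= k - k', & Q^2 &\equiv -q^2 > 0, \\
  \nu &\equiv q \cdot p = M\,(E - E') & &\text{(target rest frame)}, \\
  x &= \frac{Q^2}{2\nu} = \frac{Q^2}{2M\,(E - E')}, & 0 &< x \le 1 .
\end{align}
```

With these conventions the upper limit x = 1 corresponds to elastic scattering, in which the final hadronic state is again a single nucleon of mass M.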

In 1968 (published 1969), Bjorken ‘derived’ the result that as q² → ∞ these structure functions become non-vanishing functions of x alone [16]. This result, known as Bjorken scaling, would necessarily be true if no mass scales played a role at large q², as implied by the original ‘naïve’ parton model. ‘Derived’ is in quotes because the methodsFootnote 13 that bj and others employed were soon found to be invalid in perturbation theory [2, 58]. In field theories, the scale (μ) at which the coupling constant is defined plays a role, and scaling is violated by powers of log(q²/μ²), although scaling and other results derived by the methods used by bj were later shown to survive to leading order in QCD.

At the end of his paper on scaling, bj wrote that ‘a more physical interpretation of what is going on is, without question, needed’! Having had partons without explicit scaling, he then had scaling without partons: while surprising with hindsight, this reflected the theoretical uncertainty that then prevailed. The data were soon found to exhibit approximate scaling, as Friedman and Panofsky reported in their Vienna conference talks in September 1968.

Meanwhile, in the words taken from a slide made by Marty Breidenbach [19], who as an MIT graduate student participated in the SLAC deep inelastic experiments:

- Many of us did not understand bj’s current algebra motivation for scaling
- Feynman visited SLAC in August 1968. He had been working on hadron-hadron interactions with point like constituents called partons. We showed him the early data on the weak q² dependence and scaling—and he (after a night in a local dive bar) explained the data with his parton model.
- In an infinite momentum frame, the point like partons were slowed, and the virtual photon is simply absorbed by one parton without interactions with the other partons—the impulse approximation.
- This was a wonderful, understandable model for us.

It seems that bj’s 1967 use of the impulse approximation had not been taken on board by his experimental colleagues, and that it was Feynman who provided the connection between the physical picture they both developed and Bjorken scaling, and the parton interpretation of the scaling variable x as the fraction of the parent nucleon’s momentum that is carried by the parton that is struck by the virtual photon. In his own later account Bjorken [17], referring to Feynman’s visit to SLAC, wrote that the period 1966–71 was divided into ‘BF (Before Feynman) and AF (After Feynman)’, adding disarmingly that ‘the way he (Feynman) described the infinite-momentum constituent picture so familiar now was somewhat foreign, and seemingly naive. Retrospectively, there was nothing naive about it. I was hampered by my own flawed version of the constituent viewpoint, where for half of the argument I would use infinite-momentum thinking, and for the other half retreat to the proton rest frame’.

In 1969, Bjorken and Paschos [10, 11] constructed the first explicit quark-parton model. They wrote that ‘the important feature of this model, as developed by Feynman, is its use of the infinite-momentum frame of reference’ and thanked Feynman for discussions, with no reference to bj’s 1967 papers in which he already employed the infinite-momentum frame! In the same year Callan and Gross [21] showed, using formal methods, which later turned out to be correct to leading order in QCD, that the ratio of deep inelastic cross sections for scattering of longitudinal and transverse virtual photons is zero in models in which currents are built of spin 1/2 objects, such as quarks, and infinity in models in which they are built of bosonic fields. The first measurements, published in September 1970, found a ratio of 0.2 ± 0.2, which was encouraging for supporters of the quark model.
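The Callan–Gross result can be stated compactly in terms of the two structure functions. In the sketch below, F₁ and F₂ are the conventional names for those functions and Q² = −q² (both are notation assumed here, not taken from the text), and the expression for the ratio R holds only in the scaling limit, where corrections of order M²/Q² are dropped.

```latex
\begin{align}
  R \equiv \frac{\sigma_L}{\sigma_T}
    &\;\longrightarrow\; \frac{F_2(x) - 2x\,F_1(x)}{2x\,F_1(x)}
    \qquad (Q^2 \to \infty,\ x \ \text{fixed}), \\
  \text{spin-}\tfrac{1}{2}\ \text{constituents:}\quad
    F_2 &= 2x\,F_1 \;\Rightarrow\; R = 0, \\
  \text{spin-}0\ \text{constituents:}\quad
    F_1 &= 0 \;\Rightarrow\; R = \infty .
\end{align}
```

A measured ratio of 0.2 ± 0.2 is compatible with zero and far from the bosonic expectation, which is why it counted in favour of spin 1/2 constituents.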

7 Neutrino as well as electron scattering

Very soon after the presentation of SLAC’s first deep inelastic electron data, Don Perkins and his colleagues realised that the neutrino data they had obtained in much less precise, lower-energy heavy liquid bubble chamber neutrino experiments at CERN in 1963–67 were consistent with the point-like behaviour observed at SLAC. Don reported this in a conference on Weak Interactions at CERN in 1969 [75], and he and Myatt [70] subsequently published a more detailed analysis of the data.Footnote 14 The more precise results obtained with the much larger Gargamelle bubble chamber, which came into operation in 1972, are discussed later.

Meanwhile, early in 1969, David Gross gave a talk at CERN on theoretical approaches to deep inelastic scattering. I was then working on nuclear effects in neutrino scattering on nuclei with John Bell, of inequality fame, and was interested in neutrinos. To test my understanding of David’s talk, I applied the parton model to deep inelastic neutrino scattering and asked him if my results were right. It turned out nobody had done this (although I believe bj was doing it). Using formal methods, found later to be valid to lowest order in QCD, we found a sum rule that measures the difference between the number of baryons and anti-baryons in the nucleons [52], which clearly provides a critical test for the quark model. We were aware that the methods we used fail in perturbation theory, but blithely remarked that there was no reason to believe that field theory is relevant because, since the SLAC experiments seemed to exhibit Bjorken scaling, it is contradicted by experiment!

In 1970 it was found at SLAC that the structure functions of neutrons and protons differ, in a way that is consistent with the quark model. This ruled out some alternatives, but others were more resilient. For example, I had found [64] that according to the quark-parton model the ratio of electron and neutrino structure functions is 5/18 if the contributions of strange quark-anti-quark pairs are neglected. This looks like, and is, a good way to test the model and measure quark charges, but it was also predicted by ‘generalised vector dominance’ models of deep inelastic scattering, and follows from assuming that the ratio of scattering of isospin 0 and isospin 1 virtual photons is 1/9, a result that was known to be approximately true for real photons. In the same paper, I pointed out that the area under the proton structure function would be 1/3 in a model with just three quarks and greater than 2/9 if a uniform sea of quark-anti-quark pairs was added (as in the Bjorken-Paschos model). This was hard to reconcile with the value 0.18 measured at SLAC, but (I wrote) could ‘easily be reduced by adding a background of neutral constituents (which could be responsible for binding quarks)’. Not long after, I ‘derived’ a sum rule [65, 66] that provided a way to measure the fraction of a nucleon’s momentum that is carried by such neutral gluons, by combining electron and neutrino scattering data, which (according to the limited neutrino data available in 1971) turned out to be greater than or equal to 0.52 ± 0.38.
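The charge counting behind the numbers 5/18, 1/3 and 2/9 quoted above can be checked with exact fractions. The sketch below is illustrative only and rests on assumptions that are spelled out in the comments: the usual quark charges (2/3 for u, −1/3 for d and s), a target averaged over proton and neutron for the electron/neutrino ratio, all of the momentum shared equally among the quarks, and a ‘uniform sea’ read as equal numbers of u, d and s pairs. The function and variable names are hypothetical.

```python
from fractions import Fraction

# Quark charges in units of the proton charge (standard quark-model values).
E_U = Fraction(2, 3)
E_D = Fraction(-1, 3)
E_S = Fraction(-1, 3)


def mean_square_charge(charges):
    """Average of the squared charge over a set of constituents that
    are assumed to share the momentum equally."""
    return sum(e * e for e in charges) / len(charges)


# Electron/neutrino structure-function ratio on an averaged
# proton-neutron target, neglecting strange pairs: the electron probe
# weights u and d by their squared charges, the neutrino probe by 1.
ratio_e_nu = mean_square_charge([E_U, E_D])

# Area under the proton structure function for three quarks (u, u, d),
# each assumed to carry one third of the momentum on average.
area_three_quarks = mean_square_charge([E_U, E_U, E_D])

# Limiting value if the momentum were carried entirely by a uniform
# sea with equal numbers of u, d and s quark-anti-quark pairs.
area_uniform_sea = mean_square_charge([E_U, E_D, E_S])

print(ratio_e_nu, area_three_quarks, area_uniform_sea)
```

Any mixture of three valence quarks and such a sea gives an area between the last two values, so a measured area below 2/9 requires constituents that carry momentum but no charge.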

8 Quarks come out of the closet

Use of the quark model was by then gradually becoming ‘politically correct’. Its foundations were strengthened when it was found in 1969 that, thanks to the so-called chiral anomaly [1, 9], the rate at which the π0 meson decays into two photons can be calculated exactly and that the quark model gives the right result provided quarks have three colours. It was encouraging that Feynman, arriving late at the party, came out in support of the model as a co-author of a 1971 paper [37] that inter alia used it to derive (actually re-derive [25, 26]) results related to photo-production.

Nevertheless, many theorists remained sceptical. Gell-Mann’s later repeated claims (made particularly strongly in 1997 [48]) that when he had referred to quarks as ‘mathematical entities’ he meant that they were confined are hard to reconcile with his statements at this time (and earlier: see the quotations collected by Zweig [98, 99, 100, 101]). In a talk at the 1971 Coral Gables Conference on work done with Fritzsch [47], he stated that the results that had been ‘derived’ for deep inelastic scattering ‘… are easy to accept if we draw our intuition from certain [quark] field theories with naïve manipulation of operators. However, detailed calculations using the renormalized perturbation theory expansions in renormalizable field theories do not reveal any of these sorts of behavior… If we accept the conclusions, therefore, we should probably not think in terms of perturbation expansion, but conclude, so to speak, that Nature reads books on free field theory as far as the Bjorken limit is concerned.’

While the final phrase provides a possible escape route, quarks cannot be real but confined (by what?) in a free field theory. Gell-Mann was not yet ready to entertain the idea that gluons are also needed, as he said explicitly when he spokeFootnote 15 about his work with Fritzsch at SLAC in early 1971.

9 Advent of QCD

By 1972 Gell-Mann’s position had changed. He and Fritzsch [39] discussed abstracting enough information about colour singlet operators from the quark-vector gluon model to describe all the degrees of freedom that are present. They went on to say ‘Now the interesting question has been raised lately whether we should regard the gluons as well as the quarks as being non-singlets with respect to colour (ref: J Wess, private communication to B Zumino). For example, they could form a colour octet of neutral vector fields obeying the Yang-Mills equations’, which is of course the case in QCD.

In fact, already in 1966, Nambu had suggested [71] that the additional SU(3) symmetry in the Han-Nambu three-triplet model should be coupled to eight non-Abelian gauge fields. In the same year, Greenberg and Zwanziger pointed out [51] that in non-relativistic three-triplet and three para-quark models, it would be natural for the lowest lying baryons to contain exactly three triplets. This work was largely forgotten when the three-triplet model and references to para-quarks fell out of fashion, but it was followed up in 1973 by Lipkin, who noted [60] that ‘interactions of the type produced by the exchange of gauge vectors bosons classified in an octet of the SU(3) group, sometimes called color’ would promote coloured states to higher energy than colour singlets in a non-relativistic model. Finally, in 1973, Fritzsch, Gell-Mann and Leutwyler wrote their celebrated paper [40] on QCD, which, together with the discovery that such theories are asymptotically free [53, 81], completed the theoretical foundations of the quark model.

In QCD the powers of log(q²/μ²) that violate Bjorken scaling in all but the lowest order of perturbation theory combine to turn the strong coupling ‘constant’ into a function of q², which vanishes like 1/log(q²/μ²) at large q². This gives rise to (calculable) scaling violations, which were first observed two years later at SLAC. The physical picture (due to Ken Wilson) is that the resolution with which the virtual photon probes the structure of the nucleon increases with q², and what looked like a quark at one scale may be found to consist of a quark plus a gluon, or a quark plus a gluon and a quark-anti-quark pair, etc. as the resolution increases. This shifts the observed quark momentum spectrum to lower x as q² increases and more constituents come into play. At low q², the strong coupling ‘constant’ becomes very (perhaps infinitely) large, and it can be convincingly argued (if not rigorously proved) that only ‘colour singlets’ (in the case of baryons, states that are anti-symmetric in the colour variable) can exist as free particles, while quarks should be forever confined.
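The logarithmic fall of the coupling described above can be illustrated with the standard one-loop (leading-logarithm) formula. This is a minimal sketch and not a fit to data: the reference value of the coupling, the reference scale and the number of flavours used below are arbitrary illustrative inputs, the function name is hypothetical, and q2 stands for the magnitude of the squared momentum transfer.

```python
import math


def alpha_s_one_loop(q2, alpha_mu, mu2, n_f):
    """One-loop running coupling at scale q2, given its value alpha_mu
    at the reference scale mu2 (same units as q2) and n_f light
    flavours. Only meaningful where the denominator is positive."""
    b0 = (33 - 2 * n_f) / (12 * math.pi)
    return alpha_mu / (1 + b0 * alpha_mu * math.log(q2 / mu2))


# Illustrative inputs only (assumptions, not measured values).
ALPHA_MU = 0.3   # coupling at the reference scale
MU2 = 4.0        # reference scale squared, in GeV^2
N_F = 4          # number of light quark flavours

for q2 in (4.0, 40.0, 400.0, 4000.0):
    value = alpha_s_one_loop(q2, ALPHA_MU, MU2, N_F)
    print(f"q2 = {q2:7.1f} GeV^2   alpha_s = {value:.3f}")
```

At very large q2 the constant term in the denominator becomes negligible and the coupling approaches 1/(b0 log(q2/μ²)), which is the 1/log behaviour referred to in the text.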

In parallel, experimental support for the quark-parton picture was growing. In particular, in 1972, preliminary neutrino data from Gargamelle provided ‘an astonishing verification of the Gell-Mann/Zweig quark model of hadrons’, in words used by Perkins in a review talk at the International Conference on High Energy Physics [77]. By the time of the 1973 Hawaii Conference, at which I was one of four lecturers together with Dick Feynman, Don Perkins and Douglas Morrison, anti-neutrino data had become available from Gargamelle. Together with the neutrino data and the SLAC electron data, they provided good evidence that protons and neutrons are composed of point-like spin 1/2 particles with third-integral charges and baryon number one third (i.e. quarks) and neutral gluons [Perkins 1973].

But the ‘discovery’, later shown to be erroneous, of the so-called high y anomaly in one of the National Accelerator Laboratory (now known as Fermilab) neutrino experiments, which suggested that the quark picture might be wrong or that new constituents would have to be invoked, cast a shadow. It turned into an ominous dark cloud in 1973 when measurements of electron–positron annihilation into hadrons at the Cambridge Electron Accelerator [61] at an energy of 4 GeV found a cross section that was much larger than expected. A further measurement [91] at 5 GeV, together with data at other energies with much smaller errors from the SPEAR collider at SLAC (presented for the first time by Burt Richter [Richter, 1974] at the 1974 International Conference on High-Energy Physics), showed that, rather than falling like E⁻² as predicted by Bjorken in 1966, the cross section was constant. This major puzzle attracted huge attention and stimulated a lot of speculation, as discussed by Richter and Ellis [33, 83].

The cloud went away when the J/Ψ particle, discovered at Brookhaven (J) and by SPEAR (Ψ) in November 1974, was correctly interpreted as a bound state of a charm and anti-charm quark. In early 1975 a Brookhaven bubble chamber experiment reported the observation of one event [22] which ‘with the caveat associated with one event’, was ‘strongly indicative of charmed baryon production’, and in 1976 several charmed mesons were found at SPEAR [49, 79]. The ‘constant’ electron–positron annihilation cross-section was understood to be nothing more than a pause in the fall with energy as the threshold for charm particle production was crossed, and the annihilation rate changed from that expected with three flavours of quarks, each with three colours, to that expected with four flavours. From that point onwards, the quark model was finally almost universally accepted.
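The step in the annihilation rate at the charm threshold follows from simple charge counting. In the lowest-order parton picture, the ratio of the hadronic cross section to the muon-pair cross section is the number of colours times the sum of the squared quark charges, so it is constant between thresholds and the cross section itself falls like 1/E². The sketch below assumes the standard quark charges and ignores QCD corrections and threshold effects; the function and variable names are hypothetical.

```python
from fractions import Fraction

N_COLOURS = 3

# Quark charges in units of the proton charge (standard values).
CHARGES = {
    "u": Fraction(2, 3),
    "d": Fraction(-1, 3),
    "s": Fraction(-1, 3),
    "c": Fraction(2, 3),
}


def r_ratio(flavours, n_colours=N_COLOURS):
    """Lowest-order ratio of the e+e- -> hadrons cross section to the
    e+e- -> mu+mu- cross section for the given active flavours."""
    return n_colours * sum(CHARGES[f] ** 2 for f in flavours)


r_three = r_ratio("uds")    # below the charm threshold
r_four = r_ratio("udsc")    # above the charm threshold

print(r_three, r_four)
```

Crossing the threshold therefore raises the ratio from 2 to 10/3, so a cross section that would otherwise fall like 1/E² can look roughly flat over the transition region.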

10 Concluding remarks

In the following years QCD also became accepted as evidence for the expected scaling violations accumulated, the three-jet (quark + anti-quark + gluon) events anticipated [34] in electron–positron annihilation were found in the TASSO experiment [92] at DESY (Söding has reviewed the history of this discovery [89]), providing direct evidence for the existence of gluons, and ‘perturbative QCD’ was successfully applied to many high-energy processes. In parallel, more quarks were discovered, and—ironically—it was realised that the SU(3) symmetry that started it all simply reflects the fact that three quarks are much lighter than the others and has no deep significance.

I have written this history to try to ensure that the role of Petermann is not overlooked, that Zweig and Bjorken get the recognition they deserve, and to attempt to clarify the role of Serber. I also wanted to recall the contemporary atmosphere which led most theorists to reject the quark model in the 1960s, despite the fact that it explained much of the data. What the British call the Whig view of history, which presents the past as an inevitable progression towards a more enlightened future, may be a useful pedagogical device in scientific textbooks, but the history of physics involves misunderstandings and confusion. In science, the judgement of experiment ensures that greater enlightenment eventually emerges, although, as in the case of the quark model, it can be a slow process.

As for Gell-Mann, he had very good reasons for initially insisting that quarks are mathematical entities, although in retrospect it is surprising that he stuck to his guns for so long. In any case, although his opposition to the naïve quark model was unsettling for its proponents, I doubt that it held back its acceptance significantly. If not the sole father, he was certainly the grandfather of the quark model, and as a proposer of QCD was involved in the final theoretical chapter. His many other achievements were stupendous: adapting Shakespeare, he bestrode mid-twentieth century theoretical particle physics like a colossus.

Change history

29 April 2024

A Correction to this paper has been published: https://doi.org/10.1140/epjh/s13129-024-00074-7

Notes

This account is mainly based on my own memory, cross-checked whenever possible with contemporary publications and accounts, although I have mentioned some contributions of which I only recently became aware. In writing it, I have tried to guard against Shakespeare’s warning that while ‘old men forget’ they sometimes ‘remember with advantages’. I have quoted parts of some of the interesting retrospective accounts of the development of the quark model and QCD by major contributors [56].

In discussions of the Fermi Yang model at the 6th Rochester Conference on High-Energy Nuclear Physics [5], there are several references to the nature of the ‘glue’ that would be required to bind nucleons and anti-nucleons to form pi-mesons. Teller mentioned vector bosons as one possibility, but worried that the force would be too strong and lead to nucleon/anti-nucleon annihilation. A vector interaction was first written down explicitly in the context of the Sakata model by Fujii [41]. Gell-Mann coined the word ‘gluon’ [43].

André Petermann is best known for his discovery, with his supervisor Ernst Stueckelberg, of the renormalisation group (which was independently discovered by Bogoliubov and Shirkov and by Gell-Mann and Low: see Shirkov [88] for a historical account). For recollections of Petermann see Alvarez-Gaumé [4] and Zichichi [94].

The following account is based on a private communication from Zweig. I have heard it suggested that the fact that his work was not published made Zweig ineligible for the award of what Murray always called ‘the Swedish Prize’ (for which Feynman nominated both of them in 1977 [99]), but the Nobel Statutes say only that the work must be ‘made public’, leaving it up to each committee to decide what counts as a publication (Per Carlson, private communication).

In 1971 Dick Feynman submitted a paper to Physical Review Letters in which he pointed out that if quarks obeyed Bose statistics, the so-called delta-I equals one-half rule (which is approximately obeyed in the weak decays of strange particles) would be exact. He graciously withdrew the paper when I informed him that I had already noted this, in a footnote [63]. Dick told me that Murray claimed that he already knew this result on the day he discovered quarks, and produced the dated page of his note book as evidence. It did not convince Dick, although it did show that Murray had thought about statistics from the beginning.

The idea was proposed in this form by Struminsky in a JINR Dubna preprint P-1939 (in Russian), dated 9 January 1965, ‘Magnetic Moments of Baryons in the Quark Model’ [90], in a footnote which reads ‘Three identical quarks cannot exist in an antisymmetric S-state. To realise an antisymmetric S-orbital state, it is necessary to assign an additional quantum number to a quark’. JINR D-1968 (also in Russian), dated 23 January 1965, ‘On Questions of Compositeness in the Theory of Elementary Particles’ by Bogoliubov, Struminsky, and Tavkhelidze [18] used this idea, noting that it is effectively equivalent to Greenberg’s proposal (which was published on 16 November 1964). This work was presented by Tavkhelidze in May 1965 in a conference in Trieste [68], but I don’t recall hearing of it. The quark model was much more widely accepted in Russia than in the West in the 1960s, by authors who (in addition to those at Dubna) included Levin, Frankfurt, Okun, Zel’dovich and Sakharov. I was aware of some of this work at the time, but I don’t remember being much influenced by it and I have not attempted to describe it in this personal reminiscence. I spent November 1967-June 1968 at FIAN/the Lebedev Institute in Moscow, but the many distinguished physicists there were not sympathetic to the quark model at that time (as I have described in ‘Evgeni Lvovich Feinberg and FIAN in the late 1960s, as seen by a young foreigner’ [67]), and I was only able to spend half a day at Dubna and a couple of hours at the Ioffe Institute in Leningrad where quarks were taken seriously.

Dalitz initially assumed that baryons would have antisymmetric space wave functions, which he described as ‘strange’ and ‘surprising’ for the ground state, but ‘perfectly possible … despite many remarks to the contrary in the literature’. He conceded that we ‘know of no particularly natural mechanism’ that would lead to an antisymmetric ground state, and in 1967 accepted that the wave functions of baryons must be totally symmetric.

This was known by the time of the 1966 Berkeley Conference (see the review talk by Dalitz cited below), although the most definitive ‘no-go’ theorem came later—Coleman and Mandula [24].

I can vouch for this. I initially followed the ‘hot money’ in disparaging the model, and tried to persuade Dalitz that the so-called Weisskopf-Van Royen paradox was the last nail in its coffin. He said he would agree if I could show him that it survives in a fully relativistic formulation of the model. I eventually showed that it does not survive [62], but meanwhile I became a convert when I found myself saying one day ‘The quark model is stupid but it’s a very good way to remember the data’, paused and thought—what more can you ask of a model? Both at the Lebedev Institute in Moscow and CERN (where I was a Fellow from September 1968 to August 1970, when I moved to SLAC), I found it necessary to spend the first part of talks about my relativistic formulation defending using the quark model at all.

It is worth recalling that at that time proton scattering experiments were thought to be the way to study strong interactions, and that electron, muon and photon scattering could only play a supporting role. This is reflected in the attendance at the first two major conferences that I attended, in 1969 (a year after the first results of the SLAC deep-inelastic scattering experiments were announced). The European Particle Physics Conference, in Lund, attracted 610. The International Symposium on Electron and Photon Interactions at High Energy, in Liverpool, attracted 240.

[N ↑] and [N ↓] are shorthand for the number of constituents with isospin up and down.

These methods involved studying Fourier transforms of nucleon matrix elements of commutators of currents and their time derivatives, taking q₀ to i∞, formally calculating the commutators and then letting the energy of the nucleon go to infinity, often in quark models in which forces were supposed to be transmitted by a vector gluon. The Adler sum rule, which was derived by different methods, is exact.

In retrospect, there were hints of point-like behaviour in the very first CERN bubble chamber experiment, but as Perkins explains in ‘An early neutrino experiment: how we missed quark substructure in 1963’ [78], neither he, nor John Bell and Tini Veltman with whom he discussed the data, picked up these hints. A short anti-neutrino run (with low flux) found an unexpectedly low rate (at that time it was expected that neutrino and antineutrino cross-sections would be equal, whereas the latter is in fact less than half the former). Together with the large number of events in which no muon was observed, this caused confusion, and antineutrino running was abandoned until Gargamelle became available. In retrospect, many of the muon-less events must have been produced by neutral currents, which at the time were not expected. It seems that the simultaneous appearance of two unexpected phenomena may have prevented either being recognised (as shown by Perkins [76], the number of the muon-less events in the early CERN experiments implied a neutral current cross-section in line with that found by Gargamelle).

He began by saying that he was going to abstract all possible results from the ‘light-cone algebra’ abstracted from a model of free, non-interacting quarks. This is equivalent to finding results that hold in all possible quark-parton models without gluons. I had done this the previous year [64] and concluded that the data require the addition of gluons; by the time of Gell-Mann’s talk I had shown [65] that they carry some 50% of the parent proton’s momentum (albeit with large errors). With extreme trepidation, I interrupted Gell-Mann and said that his assumption that quarks are free would lead to results that disagree with experiment. He replied ‘You must be Llewellyn Smith’, which was gratifying as we had not met previously, although he added characteristically ‘You spell your name wrongly’, before saying ‘I am aware of your result, but there are experimental uncertainties, and we should not give up abstracting results from the free quark model until we are really forced to do so’.

References

Adler, S., (1969a). Axial-Vector Vertex in Spinor Electrodynamics. Phys. Rev. 177, 2426–2438. doi:https://doi.org/10.1103/PhysRev.177.2426

Adler, S., and Tung, W-K. (1969b). Breakdown of Asymptotic Sum Rules in Perturbation Theory. Phys. Rev. Lett. 22, 978-981. doi: https://doi.org/10.1103/PhysRevLett.22.978

Aitchison I. J. R., and Llewellyn Smith C. H., (2016), Richard Henry Dalitz, 28 February 1925–13 January 2006, Biogr. Mems Fell. R. Soc. 62, 59–88. doi: https://doi.org/10.1098/rsbm.2016.0019

Alvarez-Gaumé L., et al (2012). André Petermann (1922–2011) https://home.web.cern.ch/news/obituary/cern/andre-petermann-1922-2011 Accessed 28 August 2023.

Ballam J., et al. (eds.), (1969). 6th Rochester Conference on High-Energy Nuclear Physics, Interscience, New York. https://pubs.aip.org/physicstoday/article-abstract/10/1/30/840153/High-Energy-Nuclear-Physics-Proceedings-of-the-6th?redirectedFrom=fulltext Accessed 28 August 2023.

Banks, M., (2019). Quark Pioneer Murray Gell-Mann dies, Phys. World, July 2019, 6–7, doi: https://doi.org/10.1088/2058-7058/32/7/8

Becchi, C. and Morpurgo, G., (1965). Test of the Nonrelativistic Quark Model for "Elementary" Particles: Radiative Decays of Vector Mesons, Phys. Rev. 140, B687–B690. doi: https://doi.org/10.1103/PhysRev.140.B687

Bég M.A.B., et al., (1964). SU(6) and Electromagnetic Interactions, Phys. Rev. Lett. 13, 514-517. doi: https://doi.org/10.1103/PhysRevLett.13.514

Bell J.S., and Jackiw R., (1969). A PCAC puzzle: π0→ γγ in the σ-model, Nuovo Cim. A60 47–61. doi:https://doi.org/10.1007/BF02823296

Bjorken, J.D., and Paschos, E.A., (1969). Inelastic Electron-Proton and γ-Proton Scattering and the Structure of the Nucleon, Phys. Rev. 185,1975-1982. doi: https://doi.org/10.1103/PhysRev.185.1975

Bjorken, J.D., and Paschos, E.A., (1970). High-Energy Inelastic Neutrino-Nucleon Interactions, Phys. Rev. D1, 3151-3160, doi: https://doi.org/10.1103/PhysRevD.1.3151

Bjorken, J.D., (1966a). Applications of the Chiral U(6)⊗U(6) Algebra of Current Densities, Phys. Rev. 148, 1467-1478. doi: https://doi.org/10.1103/PhysRev.148.1467

Bjorken, J.D., (1966b). Inequality for Electron and Muon Scattering from Nucleons, Phys. Rev. Lett. 16, 408. doi: https://doi.org/10.1103/PhysRevLett.16.408

Bjorken, J.D., (1967a). Current algebra at small distances, in Proc. 1967 Varenna Summer School, 55–81, Academic Press, New York, 1968. https://cds.cern.ch/record/111775 Accessed 28 August 2023.

Bjorken, J.D., (1967b) Theoretical ideas on inelastic electron and muon scattering, in Proc. 1967 International Symposium on Electron and Photon Interactions at High Energies,109–127. Reprinted in “In conclusion”, World Scientific, Singapore, 2003, 7–25, doi: https://doi.org/10.1142/9789812775689_0001

Bjorken, J.D., (1969). Asymptotic Sum Rules at Infinite Momentum, Phys. Rev. 179, 1547 -1553. doi: https://doi.org/10.1103/PhysRev.179.1547

Bjorken, J.D., (1997). Deep-Inelastic Scattering: From Current Algebra to Partons. In Hoddeson L., et al., eds. (1997), The Rise of the Standard Model: A History of Particle Physics from 1964 to 1979, Cambridge University Press, Cambridge, pp. 589–599, doi: https://doi.org/10.1017/CBO9780511471094.035

Bogoliubov N. N., Struminsky B. V., and Tavkhelidze A. N., (1965). On Questions of Compositeness in the Theory of Elementary Particles. JINR preprint D-1968 (in Russian).

Breidenbach, M., (2019). Talk at Quark Discovery, 50 years, MIT 25 October 2019. Video available from https://www.youtube.com/watch?v=ho1s4pDJzRo, starts after 1h18’. Accessed 28 August 2023

Brink, L., (2019). Gell-Mann’s Multi-Dimensional Genius, CERN Courier, July/August 2019, 25–27 https://cds.cern.ch/record/2681906 Accessed 28 August 2023

Callan, C.G., and Gross, D., (1969). High-Energy Electroproduction and the Constitution of the Electric Current, Phys. Rev. Lett. 22,156-159. doi: https://doi.org/10.1103/PhysRevLett.22.156

Cazzoli, E.G. et. al., (1975). Evidence for ΔS = − ΔQ Currents or Charmed-Baryon Production by Neutrinos, Phys. Rev. Lett. 34, 1125-1128. doi: https://doi.org/10.1103/PhysRevLett.34.1125

Chew, G.F., (1961) S-Matrix Theory of Strong Interactions, Benjamin, New York, 1961. Electronic preprint version UCRL-9701, https://escholarship.org/uc/item/2v38k2hr Accessed 28 August 2023

Coleman, S., and Mandula J., (1967). All Possible Symmetries of the S Matrix, Phys. Rev. 159, 1251-1256. doi: https://doi.org/10.1103/PhysRev.159.1251

Copley, L.A., Karl, G. and Obryk, E., (1969a). Backward single pion photoproduction and the symmetric quark model, Phys. Lett. B29, 117–120, doi: https://doi.org/10.1016/0370-2693(69)90261-5

Copley, L.A., Karl, G. and Obryk, E., (1969b). Single pion photoproduction in the quark model, Nucl. Phys. B13, 303–319. doi: https://doi.org/10.1016/0550-3213(69)90237-5

Dalitz, R.H., (1965a) Quark models for the “elementary particles”, in High Energy Physics: Proc. Les Houches Summer School, 1965 (Eds. C. DeWitt and M. Jacob), Gordon and Breach, New York, pp. 251–323.

Dalitz R.H., (1965b) Resonant states and strong interactions, in Proc. Oxford Int’l Conf. Elementary Particles, 1965, 157–181. Eds. Moorhouse, R.G., Taylor A.E. and Walsh T.R., Rutherford High Energy Laboratory, Chilton. https://cds.cern.ch/record/99127 Accessed 28 August 2023.

Dalitz, R.H., (1966) Symmetries and strong interactions, in Proc. XIII Int’l Conf. High Energy Physics, Berkeley 1966, 215–236. Ed. Alston-Garnjost, M,. University of California Press, Berkeley and Los Angeles. doi: https://libserv.aip.org/ipac20/ipac.jsp?uri=full=3100001~!29730!0&profile=rev-aipnbl Accessed 28 August 2023.

De Rújula, A., (2005). Fifty years of Yang–Mills theories: a phenomenological point of view, in 50 Years of Yang-Mills Theory, 401–429. Ed 't Hooft, G., World Scientific, Singapore, 2005, doi: https://doi.org/10.1142/9789812567147_0017

De Rújula, A., (2014) https://home.cern/news/opinion/physics/who-invented-quarks Accessed 28 August 2023.

Drell, S.D., (1966). Electrodynamic interactions, in Proc. XIII Int’l Conf. High Energy Physics, Berkeley 1966, 85–102. Ed. Alston-Garnjost, M., University of California Press, Berkeley and Los Angeles. doi: https://libserv.aip.org/ipac20/ipac.jsp?uri=full=3100001~!29730!0&profile=rev-aipnbl Accessed 28 August 2023

Ellis, J., (1974). Theoretical ideas about e−e+ → hadrons at high energies, Proc. 17th International Conference on High-Energy Physics IV, 20–35. Rutherford Lab., Chilton. doi: https://cds.cern.ch/record/103104/ Accessed 28 August 2023.

Ellis, J., Gaillard, M.K. and Ross, G., (1976). Search for gluons in e+e− annihilation, Nucl. Phys. B111, 253-271. doi: https://doi.org/10.1016/0550-3213(76)90542-3

Fermi, E. and Yang, C.N., (1949). Are Mesons Elementary Particles?, Phys. Rev. 76, 1739-1743. doi: https://doi.org/10.1103/PhysRev.76.1739

Feynman, R.P., (1969). The Behavior of Hadron Collisions at Extreme Energies, in Proc. 3rd International Conference on High-energy Collisions, Stony Brook, 1969, 237–249. Gordon and Breach, New York. https://inspirehep.net/conferences/979937 Accessed 28 August 2023.

Feynman, R.P., Kislinger, M. and Ravndal, F., (1971). Matrix elements from a relativistic quark model. Phys. Rev. D3, 2706–2732. doi: https://doi.org/10.1103/PhysRevD.3.2706

Friedman, J., (1997). Deep-Inelastic Scattering and the Discovery of Quarks, in The Rise of the Standard Model: A History of Particle Physics from 1964 to 1979. Eds. Hoddeson, L., Brown, L., Riordan, M., and Dresden, M., Cambridge University Press, Cambridge, 1997, pp. 566–588. doi: https://doi.org/10.1017/CBO9780511471094.034

Fritzsch, H. and Gell-Mann, M., (1972). Current algebra: quarks and what else?, in Proc. XVI Int. Conf. on High Energy Physics, 6–13 Sep. 1972, Batavia, Ill., vol. 2, 135–165, eds. Jackson, J.D. and Roberts, A., National Accelerator Laboratory, Batavia. doi: https://inspirehep.net/literature/76469 Accessed 28 August 2023

Fritzsch, H., Gell-Mann, M. and Leutwyler, H., (1973). Advantages of the color octet gluon picture, Phys. Lett. B47, 365–368. doi: https://doi.org/10.1016/0370-2693(73)90625-4

Fujii, Y., (1959). On the Analogy between Strong Interaction and Electromagnetic Interaction. Prog Theor. Phys. 21, 232-240. doi: https://doi.org/10.1143/PTP.21.232

Gell-Mann, M., (1961). The Eightfold Way: A Theory of Strong Interaction Symmetry. Synchrotron Laboratory Report CTSL-20. California Institute of Technology. doi: https://doi.org/10.2172/4008239. Reprinted in The Eightfold Way, Gell-Mann, M., and Ne’eman, Y., Benjamin, 1964.

Gell-Mann, M., (1962). Symmetries of Baryons and Mesons. Phys. Rev., 125, 1067-1084. doi: https://doi.org/10.1103/PhysRev.125.1067

Gell-Mann, M., (1964a) A schematic model of baryons and mesons Phys. Lett. 8, 214–215. doi: https://doi.org/10.1016/S0031-9163(64)92001-3

Gell-Mann, M., (1964b). The symmetry group of vector and axial vector currents, Phys. 1, 63–75. doi: https://doi.org/10.1103/PhysicsPhysiqueFizika.1.63

Gell-Mann, M., (1966). Current Topics in Particle Physics, in Proc. XIII Int’l Conf. High Energy Physics, Berkeley 1966, 215–236. Ed. Alston-Garnjost, M., University of California Press, Berkeley and Los Angeles. https://libserv.aip.org/ipac20/ipac.jsp?uri=full=3100001~!29730!0&profile=rev-aipnbl Accessed 28 August 2023

Gell-Mann, M. and Fritzsch, H., (1971) in Broken Scale Invariance and the Light Cone, Eds. Dal, C., Iverson, G.J., Perlmutter, A., and Gell-Mann M., Gordon and Breach, New York

Gell-Mann, M., (1997). Quarks, Color and QCD, in Hoddeson, L., Brown, L., Riordan, M., and Dresden, M. (eds.), The Rise of the Standard Model: A History of Particle Physics from 1964 to 1979, Cambridge University Press, Cambridge, 1997, pp. 625–633. doi: https://doi.org/10.1017/CBO9780511471094.037

Goldhaber, G., et al., (1976). Observation in e+e− Annihilation of a Narrow State at 1865 MeV/c2 Decaying to Kπ and Kπππ, Phys. Rev. Lett. 37, 255-259. doi: https://doi.org/10.1103/PhysRevLett.37.255

Greenberg, O.W., (1964). Spin and Unitary-Spin Independence in a Paraquark Model of Baryons and Mesons, Phys. Rev. Lett. 13, 598-602. doi: https://doi.org/10.1103/PhysRevLett.13.598

Greenberg, O.W. and Zwanziger, D., (1966). Saturation in Triplet Models of Hadrons. Phys. Rev. 150, 1177-1180. doi: https://doi.org/10.1103/PhysRev.150.1177

Gross, D. and Llewellyn Smith, C.H., (1969). High Energy Neutrino-Nucleon Scattering, Current Algebra and Partons. Nucl. Phys. B14, 337-347. doi: https://doi.org/10.1016/0550-3213(69)90213-2

Gross, D.J., and Wilczek, F., (1973). Ultraviolet behavior of non-abelian gauge theories, Phys. Rev. Lett. 30, 1343–1346. doi: https://doi.org/10.1103/PhysRevLett.30.1343

Gürsey, F. and Radicati, L.A., (1964). Spin and Unitary Spin Independence of Strong Interactions, Phys. Rev. Lett. 13, 173-175. doi: https://doi.org/10.1103/PhysRevLett.13.173

Han, M.Y., and Nambu, Y., (1965). Three-triplet model with double SU(3) symmetry, Phys. Rev. 139B, 1006-1010. doi: https://doi.org/10.1103/PhysRev.139.B1006

Hoddeson, L., Brown, L., Riordan, M., and Dresden, M., eds., (1997). The Rise of the Standard Model, Cambridge University Press. doi: https://doi.org/10.1017/CBO9780511471094.016

Ikeda, M., Ogawa, S., Ohnuki, Y., (1959). Prog. Theor. Phys. 22, 715-724. doi: https://doi.org/10.1143/PTP.22.715

Jackiw, R. and Preparata, G., (1969). Probes for the Constituents of the Electromagnetic Current and Anomalous Commutators, Phys. Rev. Lett. 22, 975-977. doi: https://doi.org/10.1103/PhysRevLett.22.975

Johnson, G., (1999). Strange Beauty: Murray Gell-Mann and the Revolution in Twentieth-century Physics. Alfred A Knopf, New York.

Lipkin, H.J., (1973). Triality, exotics and the dynamical basis of the quark model. Phys. Lett. B45, 267–271. doi: https://doi.org/10.1016/0370-2693(73)90200-1

Litke, A., et al., (1973). Hadron Production by Electron-Positron Annihilation at 4-GeV Center-of-Mass Energy, Phys. Rev. Lett. 30, 1189-1192. doi: https://doi.org/10.1103/PhysRevLett.30.1189

Llewellyn Smith, C. H. (1968). A Relativistic Quark Model for Mesons and the "Weisskopf-Van Royen Paradox", Physics Letters 28B, 335-338. doi: https://doi.org/10.1016/0370-2693(68)90125-1

Llewellyn Smith, C. H. (1969). A Relativistic Formulation of the Quark Model for Mesons, Annals Phys. 53, 521 – 588. doi: https://doi.org/10.1016/0003-4916(69)90035-9

Llewellyn Smith, C.H., (1970). Current Algebra Sum Rules Suggested by the Parton Model, Nucl. Phys. B17, 277. doi: https://doi.org/10.1016/0550-3213(70)90165-3

Llewellyn Smith, C.H., (1971). Inelastic Lepton Scattering in Gluon Models, Phys. Rev. D4, 2392-2397. doi: https://doi.org/10.1103/PhysRevD.4.2392

Llewellyn Smith, C.H., (1972). Neutrino Reactions at Accelerator Energies, Phys. Rep. 3C, 261–379. doi: https://doi.org/10.1016/0370-1573(72)90010-5. Reprinted in Gauge Theories and Neutrino Physics, 175–294. Ed. Jacob, M. North Holland.

Llewellyn Smith, C.H., (2008). Evgeni Lvovich Feinberg and FIAN in the late 1960s, as seen by a young foreigner, in Evgeni Lvovich Feinberg – A Personality through the Prism of Memory, ed. Ginzburg, V.L., 261–263, Fizmatlit, ISBN 978-5-94502-165-5.

Matveev, V.A. and Tavkhelidze, A.N., (2006). Color Quantum Number, Colored Quarks, and QCD, Phys. Part. Nuclei. 307–316. doi: https://doi.org/10.1134/S1063779606030014 Accessed 28 August 2023

Morpurgo, G., (1965). Is a non-relativistic approximation possible for the internal dynamics of "elementary" particles?, Phys. 2, 95-105. doi: https://doi.org/10.1103/PhysicsPhysiqueFizika.2.95

Myatt, G., Perkins, D.H., (1971). Further observations on scaling in neutrino interactions. Phys. Lett. B34, 542–546. doi: https://doi.org/10.1016/0370-2693(71)90676-9

Nambu, Y., (1966). A systematics of hadrons in subnuclear physics, in Preludes in Theoretical Physics: in honor of V.F. Weisskopf. Eds. de-Shalit, A., Feshbach, H., and van Hove, L., 133–142. North Holland, Amsterdam. https://archive.org/details/preludesintheore0000shal Accessed 28 August 2023

Okubo, S., (1962). Note on Unitary Symmetry in Strong Interactions, Prog. Theor. Phys. 27, 949-966. doi: https://doi.org/10.1143/PTP.27.949

Okubo, S., (1963). Some consequences of unitary symmetry model, Phys. Lett. 4, 14-16. doi: https://doi.org/10.1016/0031-9163(63)90565-1

Panofsky, W.K.H., (1969). Low q electrodynamics, elastic and inelastic electron (and muon) scattering, in Proc. XIV International Conference on High Energy Physics, eds. Prentki, J. and Steinberger, J., CERN, Geneva, 1968, 23–42. doi: https://cds.cern.ch/record/99375 Accessed 28 August 2023

Perkins, D. H., (1969). Accelerator Neutrino Experiments. In Topical Conference on Weak Interactions, ed. Bell, J.S. CERN-69–07, CERN, Geneva. doi: https://doi.org/10.5170/CERN-1969-007

Perkins, D. H., (1974). Experimental Aspects of Neutrino Physics. In Proc. Fifth Hawaii Topical Conference in Particle Physics (1973), Dobson, P.N., Peterson, V.Z., and Tuan, S.F., eds., 501–710. University of Hawaii Press.

Perkins, D.H., (1972). Neutrino interactions, in Proc. XVI Int. Conf. on High Energy Physics, 6–13 Sep., Batavia, Ill., eds. Jackson, J.D. and Roberts, A., National Accelerator Laboratory, Batavia, vol. 4, 189–247. https://inspirehep.net/literature/76469 Accessed 28 August 2023.

Perkins, D. H. (2013). An early neutrino experiment: how we missed quark substructure in 1963. Eur. Phys. J. H 38, 713–726. doi: https://doi.org/10.1140/epjh/e2013-40024-3

Peruzzi, I., et al., (1976). Observation of a Narrow Charged State at 1876 MeV/c2 Decaying to an Exotic Combination of Kππ, Phys. Rev. Lett. 37, 569-571. doi: https://doi.org/10.1103/PhysRevLett.37.569

Petermann, A., (1965). Propriétés de l’étrangeté et une formule de masse pour les mésons vectoriels. Nucl. Phys. 63, 349–352. doi: https://doi.org/10.1016/0029-5582(65)90348-2

Politzer, H.D., (1973) Reliable perturbative results for strong interactions? Phys. Rev. Lett. 30, 1346–1349. doi: https://doi.org/10.1103/PhysRevLett.30.1346

Rajasekaran, G., (2006). From atoms to quarks and beyond: A historical perspective. arXiv:physics/0602131v1 [physics.hist-ph] Accessed 28 August 2023

Richter, B., (1974). Plenary report on e+e− → hadrons, in Proc. 17th International Conference on High-Energy Physics, Rutherford Lab., Chilton, 1974, pp. IV. 37–55. doi: https://cds.cern.ch/record/103104/ Accessed 28 August 2023.

Sakata, S., (1956). On a Composite Model for the New Particles. Prog. Theor. Phys. 16, 686-688. doi: https://doi.org/10.1143/PTP.16.686

Sakita, B., (1964). Supermultiplets of Elementary Particles, Phys. Rev. 136B, 1756-1760. doi: https://doi.org/10.1103/PhysRev.136.B1756

Schübelin, P., (1970). The puzzle of the A2 meson, Phys. Today 23, 32-38. doi: https://doi.org/10.1063/1.3021827

Serber, R., with Crease, R.P., (1998). Peace and War – Reminiscences of a Life on the Frontiers of Science, Columbia University Press, New York, 1998.

Shirkov, D. V., (1997) in The Rise of the Standard Model. Eds. Hoddeson, L., Brown, L., Riordan, M., and Dresden, M., Cambridge University Press. doi: https://doi.org/10.1017/CBO9780511471094.016

Söding, P., (2010). On the discovery of the gluon, Eur. Phys. J. H. 35, 3–28. doi: https://doi.org/10.1140/epjh/e2010-00002-5

Struminsky, B. V., (1965). Magnetic Moments of Baryons in the Quark Model, JINR Dubna preprint P-1939 (in Russian)

Tarnopolsky, G. et al., (1974). Hadron Production by Electron-Positron Annihilation at 5 GeV Center-of-Mass Energy. Phys. Rev. Lett. 32, 432-435. doi: https://doi.org/10.1103/PhysRevLett.32.432

TASSO Collaboration (1979). Evidence for planar events in e+e− annihilation at high energies, Phys. Lett. 86B, 243. doi: https://doi.org/10.1016/0370-2693(79)90830-X

Wess, J., (1960). Investigation of the invariance group in the three fundamental fields model, Nuovo Cim. 15, 52-75. doi: https://doi.org/10.1007/BF02860331

Zichichi, A. (2012). Interactions with André Petermann. CERN Courier 3, April 2012, 24–25. doi: https://cds.cern.ch/record/1734779 Accessed 28 August 2023

Zweig, G., (1964a). An SU(3) model for strong interaction symmetry and its breaking. CERN preprint 8182/TH 401, doi: https://doi.org/10.17181/CERN-TH-401

Zweig, G., (1964b). An SU(3) model for strong interaction symmetry and its breaking II. CERN preprint 8419/TH 412. doi: https://doi.org/10.17181/CERN-TH-412

Zweig, G., (1965). Fractionally charged particles and SU6. In Symmetries in Elementary Particle Physics, Ed. A. Zichichi, Academic Press, New York, 192–234. doi: https://doi.org/10.1016/B978-1-4832-5648-1.50011-7

Zweig, G., (1980). Origins of the Quark Model. In Proc. Fourth International Conference on Baryon Resonances, ed. Isgur, N., University of Toronto, 439–479. https://cds.cern.ch/record/100706 Accessed 28 August 2023

Zweig, G., (2010a). Memories of Murray and the Quark Model, Int. J. Mod. Phys. A25 (2010) 3863–3877, doi: https://doi.org/10.1142/S0217751X10050494

Zweig, G., (2010b) Memories of Murray and the Quark Model, Proc. Conference in Honor of Murray Gell-Mann’s 80th birthday, World Scientific, 1–17 doi: https://doi.org/10.1142/S0217751X10050494

Zweig, G., (2015). Concrete quarks. Int. J. Mod. Phys. A30. 1430073, 1-28. doi: https://doi.org/10.1142/S0217751X14300737

Zweig, G., (2019). Talk at Quark Discovery, 50 years, MIT, 25 October 2019, video available from https://www.youtube.com/watch?v=ho1s4pDJzRo, starts after 7′41″. Accessed 28 August 2023

Acknowledgements