Abstract

We present an English translation of Erwin Schrödinger’s paper on “On the Reversal of the Laws of Nature‘’. In this paper, Schrödinger analyses the idea of time reversal of a diffusion process. Schrödinger’s paper acted as a prominent source of inspiration for the works of Bernstein on reciprocal processes and of Kolmogorov on time reversal properties of Markov processes and detailed balance. The ideas outlined by Schrödinger also inspired the development of probabilistic interpretations of quantum mechanics by Fényes, Nelson and others as well as the notion of “Euclidean Quantum Mechanics” as probabilistic analogue of quantization. In the second part of the paper, Schrödinger discusses the relation between time reversal and statistical laws of physics. We emphasize in our commentary the relevance of Schrödinger’s intuitions for contemporary developments in statistical nano-physics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Erwin Schrödinger had the rare privilege to be elected to the Prussian Academy of Science in February 1929, about 1 year and a half after his appointment to the chair of theoretical physics at the University of Berlin [23]. At the moment of his election, at the age of forty-two, Schrödinger was the youngest member of the Academy. Among physicists, other members of the Academy were Max Planck, who proposed Schrödinger’s membership, Max von Laue, Walther Nernst and Albert Einstein who held a special Academy professorship. During their common years in Berlin, Einstein and Schrödinger became good personal friends [23].

Schrödinger presented “On the Reversal of the Laws of Nature” to the Academy in March 1931. The intellectual context of the paper was marked by the intense debate on the interpretation of quantum mechanics [36]. As Schrödinger himself stated in § 3 of the paper, his “current concern” was to point out the existence of a classical probabilistic structure such that the probability density is given by “the product of a certain solution of” [the forward diffusion equation] “and a certain solution of” [the backward diffusion equation] and thus presenting “a striking analogy with quantum mechanics” (§ 4).

The observation of this analogy initiated parallel, and somewhat intertwined, lines of research aiming at either finding classical probabilistic analogues of quantum mechanics or more directly a classical probabilistic interpretation of quantum mechanics. Already in 1933, Reinhold Fürth showed [12] (see [31] for a translation) what in current mathematical language could be phrased as the existence of uncertainty relations satisfied by the variance of a martingale of a diffusion process times the variance of the martingale’s “current velocity” [27]. After the second world war, Fürth’s work became the starting point of the “stochastic mechanics” program proposed by Imre Fényes [11] and Edward Nelson [26, 27] as a probabilistic interpretation of quantum mechanics. A similar program was also pursued by Masao Nagasawa [24, 25] and Robert Aebi [1]. The collection [10] offers a recent appraisal of the state of the art focusing on Nelson’s contributions.

In a spirit perhaps closer to Schrödinger’s original idea, “Euclidean Quantum Mechanics” [37] applies modern developments of stochastic calculus of variations and optimal control theory (see [20, 38] for recent surveys) to study how “relations between quantum physics and classical probability theory” may “lead to new theorems in regular quantum mechanics” [37].

From the mathematical angle, and in particular the theory of Markov processes, the importance of the ideas put forward by Schrödinger was immediately realized. Sergei Bernstein discussed the contents of Schrödinger’s paper in his address to the International Conference of Mathematicians held in Zürich in 1932 [3]. Andreǐ Kolmogorov starts his 1936 “On the Theory of Markov Chains” paper [14] discussing the interest of studying time reversal of Markov processes for the “analysis of the reversibility of the statistical laws of nature” explicitly referring to Schrödinger’s paper. Kolmogorov’s 1937 paper on detailed balance [15] has the title “On the Reversibility of the Statistical Laws of Nature” thus clearly resonating the title of Schrödinger’s paper.

Our interest in presenting an English translation, so far missing to the best of our knowledge, of “On the Reversal of the Laws of Nature” is more directly motivated by the second part of the paper, especially § 6, where Schrödinger discusses fluctuations and time reversal in classical statistical physics.

The advent of nano-manipulations has made possible accurate laboratory observations of systems, natural and artificial, operating in contact with highly fluctuating environments. Fundamental questions concerning non-equilibrium statistical laws can be now posed in well-controlled experimental setups [32]. Theoretical analysis then requires a careful overhaul of the meaning of time reversal and dissipation in systems whose evolution laws can only be defined in a statistical sense see, e.g. [7, 21].

In particular, we want to draw the attention of readers to the analogy between the probabilistic mass transport problem discovered by Schrödinger and the optimal control problem associated with the derivation of Landauer’s bound in non-equilibrium statistical nano-physics [19]. In the remarkable paper [17] Rolf Landauer introduced his conjecture that only logically irreversible information processes are fundamentally linked to irreversible, i.e. finite dissipation, thermodynamic processes. Landauer’s conjecture has been the object of many refinements and criticisms see [18, 19] for overviews. The existence of a finite lower bound to the average cost of erasing a bit of memory has been ascertained in several recent experiments [4, 8, 16].

To delve a bit deeper into the analogy, we devote our commentary after the translation to first explaining, drawing also from [1, 2], how the problem considered by Schrödinger can be rephrased as an optimal control problem. Next, we try to give a glimpse into the modern, infinite dimensional, counter-part of Schrödinger’s optimal control problem [5, 6, 9, 22, 28,29,30, 33,34,35]. Finally, we turn to the analogy with the mathematical problem of proving the existence in the average sense of Landauer’s bound to the energy dissipated in a non-equilibrium transformation between target states. We refer to the lecture notes [13] for a masterly introduction to this subject.

We strove to write the commentary in a self-contained way. Our aim is to give readers a concise overview of Schrödinger’s paper from the standpoint of current developments in non-equilibrium statistical mechanics.

We conclude this introduction with a very much needed apology to all authors whose work we were not able to give proper visibility in our necessarily limited set of references.

2 On the reversal of the laws of nature

2.1 Introduction

If the probability to be in the interval \((x;x+dx)\) at time \(t_0\)

for a particle diffusing or performing a Brownian motion is given,

then it is precisely the solution w(x, t) for \( t\,>\,t_{{\mathfrak {0}}} \) of the diffusion equation

that becomes equal at \(t=t_{{\mathfrak {0}}}\) to the given function \(w_{{\mathfrak {0}}}(x)\). There is an extensive literature on problems of this kind, including many possible variations and complications suggested by special experimental arrangements and observation methods whereby the system in question does not need to be a diffusing particle at all but, for example, the electromechanical meter needle in the experimental setup devised by K. W. F. Kohlrausch to measure Schweidler oscillations, and equation (1) is replaced by its generalization, the so-called Fokker[-Planck] partial differential equation for the relevant system subject to some random influences [44, 46].

Such systems also give rise to a class of problems in probability theory which has been hitherto neglected or has received little attention, and which is already of interest from the purely mathematical side since the answer is not specified by a single solution of a Fokker[-Planck] equation but rather, as we will show, by the product of the solutions of two adjoint equations, and with time boundary conditions imposed not on an individual solution but on the product.

From the physics side, there is a close relation to the class of problems that von Smoluchowski [47,48,49,50,51,52,53] has uncovered in his latest beautiful works on the waiting and return times of very unlikely configurations in systems of diffusing particles. The conclusions, which we draw in § 6, can already be read off from the results of Smoluchowski, but occasion once more a sense of surprise in their sharp paradoxes. Furthermore, (§ 4) there are remarkable analogies with quantum mechanics that seem to me worth considering.

2.2 § 1

The simple example that I want to deal with here is the following. Let the probability to find the particle in a certain position be assigned not only at time \(t_{o}\) but also at a second time instant \(t_{{\mathfrak {1}}}> t_{{\mathfrak {0}}}\):

What is the probability for intermediate times, i.e. for any t such that

Obviously, w(x, t) is not solution of (1) since any solution of (1) is already fully specified at any later time by its initial value. Nor is w solution of the adjoint equation

as this solution, in turn, would be fully specified at any prior time by its final value \(w_1(x)\). Is the question somehow ill posed? This is certainly not the case. One recognizes this by considering a special case which we want to present in first place. Let us suppose that we have detected the particle at time \(t_{{\mathfrak {0}}}\) in \(x_{{\mathfrak {0}}}\) and at time \(t_{{\mathfrak {1}}}\) in \(x_{{\mathfrak {1}}}\) (\(w_{{\mathfrak {0}}}\) and \(w_{{\mathfrak {1}}}\) are then “Spitzenfunktionen”Footnote 1, respectively, sharply peaked at \(x=x_{{\mathfrak {0}}}\) and \(x=x_{{\mathfrak {1}}}\)). An auxiliary observer has observed the position of the particle at time t without, however, reporting us the result. The question is then: which probabilistic inferences can we draw from our two observations for the intervening observations of our assistant?

The answer is simple. I introduce the notation g(x, t) for the well-known fundamental solution of (1):

This is the probability density at position x and time \(t>0\) if the particle starts from \(x=0\) at time \(t=0\). Now I let the particle start many times, say N-times, from \(x=x_{{\mathfrak {0}}}\). Of such N experiments, I single out the ones for which the particle is in \((x_{{\mathfrak {1}}},x_{{\mathfrak {1}}}+\mathrm {d}x)\) at time \(t_{{\mathfrak {1}}}\). Their number is

Of these, I single out again the ones for which 1. the particle is in \((x, x+\mathrm {d}x)\) at time t and then 2. the particle is in \((x_{{\mathfrak {1}}},x_{{\mathfrak {1}}}+\mathrm {d}x_{{\mathfrak {1}}})\) at time \(t_{{\mathfrak {1}}}\). The number of these experiments is

The probability we are after is clearly the ratio \(n/n_{{\mathfrak {1}}}\), i.e.

This is the solution for the special case when at time \(t_{{\mathfrak {0}}}\) and time \(t_{{\mathfrak {1}}}\) the position of the particle is known with certainty.

2.3 § 2

We now consider the general case. The experimental setup is as follows. We let a large number N of particles start at time \(t_{{\mathfrak {0}}}\), namely

from the interval \((x_{{\mathfrak {0}}},x_{{\mathfrak {0}}}+\mathrm {d}x_{{\mathfrak {0}}})\). We observe that at time \(t_{{\mathfrak {1}}}\)

arrived in the interval \((x_{{\mathfrak {1}}},x_{{\mathfrak {1}}}+\mathrm {d}x_{{\mathfrak {1}}})\). (Incidental remark: this observation may be more or less surprising, and as such renders the outcome of our series of experiments more or less exceptional. The reason is that instead of (6) one would expect:

This is not, however, our concern here. We assume that the distributions (5) and (6) are actually realized and we have to draw conclusions based on this fact.)

The solution of this more general problem is considerably more difficult than in the special case previously considered. If we knew how many of the particles (5) contribute to (6), then we would have to multiply this number by (4) and then to integrate \(x_{{\mathfrak {0}}}\) and \(x_{{\mathfrak {1}}}\) from \(-\infty \) to \(+\infty \). Determining the aforementioned number is the main task.

We divide the x-axis in cells of equal size which, for simplicity’s sake, we take of unit length. We call \(a_{k}\) the number (5) which at time \(t_{{\mathfrak {0}}}\) starts from the kth cell, \(b_{l}\) the number (6) which at time \(t_{{\mathfrak {1}}}\) lands in the lth cell. Let \(g_{k l}\) be the a priori probability for a particle starting from the kth cell to arrive to the lth cell, i.e. \(g_{k \,l}\) is an appropriate notation for \(g(x_{{\mathfrak {1}}}-x_{{\mathfrak {0}}},t_{{\mathfrak {1}}}-t_{{\mathfrak {0}}})\) in the present case and satisfies \(g_{l k}=g_{k l}\). Finally, let \(c_{k l}\) be the number of particles which arrive into the lth from the kth cell. The following equations therefore apply

Between Eq. (7), there is one and only one identity which stems from

The matrix \(c_{kl}\) is clearly not given. The actually observed particle migration can come into being according to any of the \(c_{k\, l}\)-matrices compatible with (7). In the limit \(N=\infty \) (which is of course always meant), it will be, however, correct to assume that the actual migration will be realized with complete certainty by that \(c_{k\, l}\)-matrix which attributes the largest probability to the migration. Even for fixed \(c_{k\,l}\), the actually observed particle migration can be realized in very many different ways. One way is that one knew in which cell each individual particle landed. This possible realization yields for the observed outcome the probability

As mentioned above, there are, however, very many such equally probable possible realizations, specifically

The product of (9) and (10) results in the total probability yielded for the observed outcome by a fixed choice of \(c_{k l}\)

Now, as usual, we look for that \(c_{k l}\) which maximizes (11) under the constraints (7). One easily finds

The \(\psi _{k}\)’s and \(\phi _{l}\)’s are Lagrangian multipliers. They are determined by the constraints

Now, we have to translate (12) and (13) back to the language of the continuum. \(a_{k}\) and \(b_{l}\) are specified by (5) and (6). \(\psi _{k}\) and \(\phi _{l}\) are functions of x, namely we shall set

Furthermore, \(g_{k l}=g(x_{{\mathfrak {1}}}-x_{{\mathfrak {0}}},t_{{\mathfrak {1}}}-t_{{\mathfrak {0}}})\) holds. Hence,

and

is the desired number of particles which diffuse from \((x_{{\mathfrak {0}}},x_{{\mathfrak {0}}}+\mathrm {d}x_{{\mathfrak {0}}})\) to \((x_{{\mathfrak {1}}},x_{{\mathfrak {1}}}+\mathrm {d}x_{{\mathfrak {1}}})\). If we multiply (12’) by (4) and integrate over \(x_{{\mathfrak {0}}}\) and \(x_{{\mathfrak {1}}}\), we then obtain (after dividing by N) the probability density at x and time t:

This is the solution of the problem expressed in terms of the solution of the integral system (13’).

2.4 § 3

The discussion of this pair of equations would be certainly interesting but probably not simple because it is nonlinear. The existence and uniqueness of the solution (except perhaps for very tricky choices of \(w_{{\mathfrak {0}}}\) and \(w_{{\mathfrak {1}}}\)) I take for granted because of the reasonable question which in an unambiguous and sharp manner leads to these equations. Our current concern is less how to actually construct \(\psi \) and \(\phi \) from given \(w_{{\mathfrak {0}}}\) and \(w_{{\mathfrak {1}}}\) than the general form of w(x, t). The latter is in fact extremely transparent: the product of an arbitrary solution of (1) and an arbitrary solution of (2). Namely the first factor in (14) is nothing else than an arbitrary solution of (1) distinguished by \(\psi (x_{{\mathfrak {0}}})\), its value distribution at time \(t_{{\mathfrak {0}}}\). The same applies to the second factor in (14) with respect to Eq. (2). Furthermore, it is a simple consequence of (1) and (2) that the product of two solutions has a time independent \(\int _{-\infty }^{\infty }\mathrm {d}x\ldots \) preserving normalization to unity if it was normalized to unity at some time. (This restriction must be obviously imposed: one must only use 2 solutions whose product has a finite value of \(\int _{-\infty }^{\infty } \mathrm {d} x \ldots \), so that it can be normalized to 1). And then within the time interval in which the product of the solutions remains regular one may choose arbitrarily any two times \(t_{\mathfrak {0}}\) and \(t_{\mathfrak {1}}\) as the ones for which the probability density has been observed (of course observed to be precisely as given by the values in the product). Then, the product yields the probability density for intermediate times.

2.5 § 4

The most interesting thing about result today is the striking analogy with quantum mechanics. The existence of a certain relationship between the fundamental equation of wave mechanics and the Fokker[-Planck] equation, as between the statistical concepts arising from both of them, has probably impressed anyone familiar enough with both circles of ideas. And yet, a closer inspection reveals two very deep discrepancies. The first is that in the classical theory of random systems the probability density itself obeys a linear differential equation, whereas in wave mechanics this is the case for the so-called probability amplitudes, from which all probabilities are formed bilinearly. The second discrepancy resides in the following fact: whilst in both cases the differential equation is of first order in time, the presence of a factor \(\sqrt{-1}\) confers to the wave equation a hyperbolic or, physically stated, reversible character at variance with the parabolic-irreversible character of the Fokker[-Planck] equation.

In both these points, the example considered above shows a much closer analogy with wave mechanics although it concerns a classical, originally irreversible system. As in wave mechanics, the probability density is given not by the solution of a single Fokker[-Planck] equation but by the product of two equations differing only in the sign of the time variable. Thus, the solution does not privilege any time direction either. If one exchanges \(w_{{\mathfrak {0}}}(x)\) with \(w_{{\mathfrak {1}}}(x)\), one obtains precisely the reverse evolution of w(x, t) between \(t_{{\mathfrak {0}}}\) and \(t_{{\mathfrak {1}}}\). (In a certain sense, however, this fact also holds true for the simpler problem with just a single time boundary condition: if only the probability density at time \(t_{\mathfrak {0}}\) is given and nothing more, then the solution takes the same value at time \(t_{{\mathfrak {0}}}+t\) and \(t_{{\mathfrak {0}}}-t\).)

Whether this analogy will prove useful to clarify notions in quantum mechanics I cannot foresee, yet. The aforementioned \(\sqrt{-1}\) obviously constitutes, despite everything, a very far reaching difference. I cannot restrain myself from quoting here some words of A. S. Eddington on the interpretation of quantum mechanics—obscure as they may be—which can be found on page 216f of his Gifford lectures [43]

The whole interpretation is very obscure, but it seems to depend on whether you are considering the probability after you know what has happened or the probability for the purposes of prediction. The \(\psi \psi ^*\) is obtained by introducing two symmetrical systems of \(\psi \) waves traveling in opposite directions in time; one of these must presumably correspond to probable inference from what is known (or is stated) to have been the condition at a later time.

2.6 § 5

We wish now to write (14) in the form

where we assume that \(\Psi \) is a solution of (1), \(\Phi \) is a solution of (2) and the product \(\Psi \,\Phi \) is normalized to unity:

Upon multiplying the first equation by \(x\,\Phi \), the second by \(-x\,\Psi \), and adding them, one gets

Now, compute \(\int _{-\infty }^{\infty }\ldots \) and integrate by parts:

On the left hand side, there is the velocity with which the barycenter of the probability density moves. The integral on the right hand side, however, is constant because:

The centre of mass thus moves with constant velocity from its initial to its final position.

In the special case when the initial and the final position of the particle are known sharply, Eq. (4), one can furthermore state that the maximum of the probability moves uniformly from the initial to the final position. This is because (4) is at any time a Gaussian distribution, hence at any time the maximum and the mean value coincide.

2.7 § 6

In a special case, it is possible to specify immediately the solution of the pair of integral equations (13’). Namely, when the density \(w_{{\mathfrak {1}}}\) prescribed at the end of the time interval is precisely the one to which the initial distribution \(w_{{\mathfrak {0}}}\) evolves according to the free action of the diffusion equation (1), i.e. if

Then obviously, one has to set

w(x, t) satisfies then (1) in the entire time interval. If one imagines it as the diffusion process of many particles, then this is a thermodynamically completely normal diffusion process.

But also, conversely, when the initial distribution \(w_{{\mathfrak {0}}}\) is precisely the one into which the final distribution \(w_{{\mathfrak {1}}}\) would evolve during the time \(t_{{\mathfrak {1}}}-t_{{\mathfrak {0}}}\) according to the free action of the normal(!) diffusion equation (1); or in other words: when the final distribution is prescribed in such a way that it arises from the initial distribution following the reversed diffusion equation (2) in the time \(t_{{\mathfrak {1}}}-t_{{\mathfrak {0}}}\); also in this case the solution of (13’) is equally simple. In fact, the assumption then reads

and the solutions of (13’) are

w(x, t) then satisfies in the whole time interval the “reversed” equation (2), the corresponding diffusion process is thermodynamically as abnormal as possible. This, of course, occurs because of the odd boundary conditions, but renders possible a very interesting application to reality, namely to the way extremely unlikely exceptional states, that are occasionally, even if extremely rarely, to be expected, occur in a system in thermodynamic equilibrium.

Indeed, let us assume that we have observed the usual uniform distribution in a system of diffusing particles at time \(t_{{\mathfrak {0}}}\) and a substantial deviation therefrom at a later time \(t_{{\mathfrak {1}}}\), yet not so substantial not to noticeably return to the uniform distribution after following the diffusion law for a time \(t_{{\mathfrak {1}}}-t_{{\mathfrak {0}}}\). In addition, we assume to know with certainty that for intermediate times the system is left to itself in unperturbed thermodynamic equilibrium or, in other words, that the observed abnormal distribution is truly a spontaneous thermodynamic fluctuation phenomenon. If we were asked our opinion about the previous history that the observed strongly abnormal distribution could have probably had, then we would have to reply that its first signs probably date back as long as it will take for its last traces to disappear; that from these first signs an unfathomable swelling of the anomaly would have been occasioned by diffusion currents that almost always almost exactly flowed in the direction of the concentration gradient (upward and not downward slope) but, beside this sign difference, corresponded to the material [diffusion] constant D: in brief that the anomaly was probably caused by a precise time reversal of a normal diffusion process. Admittedly, this statement about the likely previous history would be only a probabilistic judgment, nevertheless it should, in my opinion, be granted the same degree of “almost certainty” as the corresponding statement about the likely future evolution, i.e. about the normal diffusion process to be expected for \(t > t_{{\mathfrak {1}}}\).

This statement, of course, must not lead to the misconception that a diffusion current in the direction of the gradient and with magnitude precisely corresponding to the diffusion constant D would be in itself much less unlikely than any other biased current of arbitrary magnitude. Our probabilistic conclusion is based not only on the diffusion mechanism but in an essential manner on the knowledge of the strongly anomalous final state, which we assume to have been actually observed. It turns out that it can always be attained in an infinitely simpler manner and with an exceedingly larger probability by a precise time reversal of the diffusion equation than by any other less radical means.

All the above may be without effort applied to arbitrary thermodynamic fluctuation phenomena as soon as they substantially exceed the range of normal fluctuations. The so-called irreversible laws of nature, if one interprets them statistically, do actually not privilege any time direction. This is because what they say in the particular case depends only upon the time boundary conditions at two “cross sections” (\(t_{{\mathfrak {0}}}\) and \(t_{{\mathfrak {1}}}\)) and is completely symmetric with respect to these cross sections without any special consequence associated with their time ordering. This fact is only somewhat concealed inasmuch we in general consider only one of the two “cross sections” as really observed whilst for the other the reliable rule holds that if it is removed sufficiently far in time, then one may assume that the state of maximum disorder or of maximum entropy applies there. That this rule is correct, is actually very peculiar and, in my opinion, not logically deducible. But in any case also this rule does not privilege any arrow of time inasmuch it applies equally in either of the two time directions the second cross section is removed provided it is at sufficient time separation from the first.

Incidentally, all of this was quite certainly already the explicit opinion of Boltzmann. In no other way can one understand for instance when he states the following at the end of his paper “Über die sogenannte H-Kurve” [42] the followingFootnote 2:

There is no doubt that we might as well conceive a world in which all natural processes can occur in reversed order. And yet a human being living in such a world would not have a different perception from us. He would just refer to as future what we refer to as past.

To those who regard as trivial and needless the extensive substantiation of this old thesis by means of the diffusion processes which were so exhaustively studied in this context already by Smoluchowski, I apologize. I will gladly subscribe to their opinion. But during discussions about these matters, I occasionally encountered considerable objections which made me unsure. It was suggested that the laws governing the emergence through fluctuations of a strongly anomalous state from a normal one are not nearly as strict as those governing its disappearance; rather that a certain anomalous state, if one accumulates enough record of its rare occurrences through appropriately long observation times, is relatively frequently attained through a completely disordered process, which does not correspond to the time reversal image of a normal process.

2.8 § 7

The considerations of the first three paragraphs can be applied with minor changes also to much more complicated cases: several spatial coordinates, variable diffusion coefficients, external forces which are arbitrary functions of position. One always gets a probability density in the form of the product of solutions of two adjoint equations which in general not only differ in the sign of the time variable but also in other terms. One finds that fundamental solutions (see Eq. (3) above) of the adjoint equations enjoy the simple (and certainly not new) property that they are obtained from one another by exchanging the coordinates of of the boundary conditions [des Aufpunkts und des Singularitaetspunkts] and by inverting the sign of time. But I do not wish to analyse these points more closely before time tells if they can really lead to a better understanding of quantum mechanics.

3 Commentary

The scope of these notes is first of all to explain the relation of the particle migration model of Sect. § 2 of Schrödinger’s paper with the modern theory of large deviations [68, 74, 75, 81, 116]. Ahead of his time, Schrödinger solves the particle migration model in the large deviation limit. In doing so, he identifies a quantifier of the divergence of the probability of a migration when the sample space is restricted to migrations between pre-assigned initial and final particle distributions from the probability of a particle migration when only the initial particle distribution is assigned, whereas the final distribution is arbitrary. The quantifier turns out to be a relative entropy, the Kullback–Leibler divergence [94], between a process connecting the two assigned probability densities and a reference process. Schrödinger uses the Kullback–Leibler divergence to formulate an optimal mass transport problem in the continuum limit [117]. Namely, equations (13’) of Schrödinger’s paper specify the minimizer of the Kullback–Leibler divergence between a reference Markov process and a second process whose transition probability evolves an initial assigned probability density into a target one, equally pre-assigned. Schrödinger explicitly constructs a probability density continuously interpolating between the assigned boundary conditions at the end of a time interval. The interpolating density admits a product decomposition reminiscent of Born’s law in Quantum Mechanics. Schrödinger derives this result without explicitly introducing microscopic dynamics in the continuum limit. Mainly drawing from [9], we show that the interpolating density can itself be directly regarded as the solution of a stochastic optimal control problem stemming from a microscopic formulation in terms of stochastic differential equations.

Next, we turn our attention to the notion of time reversal for Markov process introduced by Kolmogorov in [14]. Kolmogorov considered his results “in spite of their simplicity, to be new and not without interest for certain physical applications, in particular for the analysis of the reversibility of the statistical laws of nature, which Mr. Schrödinger has carried out in the case of a special example” [14].

Finally, we briefly discuss the analogy between Schrödinger’s optimal control problem and the mathematical derivation of Landauer’s bound (in the mean value sense) for diffusion processes.

Overall, the scope of this commentary is to offer the reader a first brief overview, admittedly incomplete in spite of our effort, of the significance of Schrödinger’s paper for current research from a non-equilibrium statistical physics perspective.

4 From particle migration model to optimal control

Our description of the particle migration model draws from [1, 2] which also provide further mathematical details and references.

4.1 Formulation of Schrödinger’s particle migration model

We suppose that

-

two sets \( \left\{ A_{i} \right\} _{i={\mathfrak {1}}}^{{\mathfrak {n}}} \) and \( \left\{ B_{i} \right\} _{i={\mathfrak {1}}}^{{\mathfrak {n}}} \) of \({\mathfrak {n}}\) boxes

-

N particles initially randomly located in the set \( \left\{ A_{i} \right\} _{i={\mathfrak {1}}}^{{\mathfrak {n}}} \)

are given. We want to move all the particles from a given distribution in the first set of boxes to an assigned distribution in the second set. We suppose that each particle migration is an independent event which occurs with probability (Fig. 1)

Particles can move in many ways. In other words, there can be multiple realizations of the particle migration process. We can put each realization in correspondence with the realization of a random variable \( {\mathscr {C}}=\left\{ {\mathscr {c}}_{i \,j} \right\} _{i,j=1}^{{\mathfrak {n}}}\). The ensemble \(\Gamma \) of the admissible values of \( {\mathscr {C}} \) consists of \({\mathfrak {n}}\,\times \,{\mathfrak {n}}\) square matrices \(C =\{c_{i\,j}\}_{i,j=1}^{{\mathfrak {n}}}\) with integer elements satisfying the constraint

The interpretation of the matrix elements is

The probability to sample the matrix C from the ensemble \( \Gamma \) is then specified by the multinomial distribution, i.e.

Furthermore, we may suppose that in each box \( A_{i} \) there are exactly \( a_{i} \) particles:

with the obvious constraint \(\sum _{i=1}^{{\mathfrak {n}}}a_{i}=N\). It is expedient to store the information about the marginal particle distribution in the boxes \( \left\{ A_{i} \right\} _{i=1}^{{\mathfrak {n}}} \) in a vector-valued random variable \( \widetilde{{\mathscr {C}}} \) whose components equal the sum over columns of \( {\mathscr {C}} \):

The events fixing the marginal

also obey a multinomial distribution

We, therefore, recover Schrödinger’s equation (11) in the form

The matrix elements \( c_{i\,j} \)’s on the right-hand side are now subject to the constraints (18), whereas

4.2 Large deviation and relative entropy

For large N, we can estimate multinomial probabilities by means of Stirling’s formula. To this effect, we introduce the initial probability distribution \( \left\{ w_{{\mathfrak {0}}}(i) \right\} _{i=1}^{{\mathfrak {n}}} \) and relate it to the initial particle distribution via

Similarly, we associate with each realization of \( {\mathscr {C}} \) an empirical probability distribution \( \left\{ k_{i\,j} \right\} _{i,j=1}^{{\mathfrak {n}}} \) by setting

and, correspondingly, an empirical transition probability

Upon retaining only leading order contributions in the large N-limit, after some straightforward algebra we get the large deviation asymptotics of the probability (19)

The symbol \( \asymp \) emphasizes that in (21) we are neglecting sub-exponential corrections. This is the gist of any large deviation estimate. Indeed, an arbitrary random quantity \( \alpha _{N} \) depending upon a positive definite parameter N is said to satisfy a large deviation principle if its probability distribution is amenable to the form

for N tending to infinity. The positive-definite function I is usually referred to as “rate function” or “Cramér function”. A large deviation estimate implies an exponential decay of the probability distribution except when \( \alpha _{N} \) attains its typical value \( a_{\star } \) such that

In the particular case of (21), the rate function coincides with the relative entropy or Kullback–Leibler divergence [94] (see also [68]) between the empirical (\( {\mathsf {K}}=\left\{ k(j|i) \right\} _{i,j=1}^{{\mathfrak {n}}} \)) and the a priori (\({\mathsf {G}}=\left\{ g(j|i) \right\} _{i,j=1}^{{\mathfrak {n}}} \)) transition probabilities averaged with respect to the initial particle distribution

The Kullback–Leibler is a positive definite quantity measuring how one probability distribution is different from a second, reference probability distribution. Namely, upon applying the elementary inequality

we immediately obtain

From these considerations, it immediately follows that for (21) the typical value of the rate function corresponds to the case when the two conditional probabilities coincide.

Previous to Schrödinger, Ludwig Boltzmann used large deviation type estimates in his pioneering work [63] connecting thermodynamics with the probability calculus. The rigorous theory of large deviations started perhaps 7 years after Schrödinger’s paper in 1938 with the work of Harald Cramér [69] motivated by the ruin problem in insurance mathematics. In the modern literature, an estimate like (21) is usually referred to as a “level 2” large deviation whose precise mathematical formulation goes under the name of Sanov’s lemma [109].

We refer to [116] or to chapter 6 of [32] for an overview of large deviation theory aimed at a physics readership. More mathematically oriented references on modern large deviation theory are, e.g. [74, 81].

4.3 Optimization problem in the continuum: “static” Schrödinger’s problem

We are now ready to reformulate the problem in a formal continuum limit. As our aim is to emphasize the connection with Landauer’s bound and related contemporary problems in statistical physics (see Sect. 4 below), we consider a straightforward generalization of the continuum limit by replacing the sum over indices in (22) with integrals over \({\mathbb {R}}^{d} \). The counterpart of the normalization condition (17) is then

The continuum limit conditional probability is

The probability density \( w_{{\mathfrak {0}}} \) is the generalization over \({\mathbb {R}}^{d} \) of the same quantity for \( d=1 \) considered by Schrödinger.

Next, we fix a reference transition probability density \({\mathscr {g}}\). The counterpart of the problem posed by Schrödinger reads as follows: finding the transition probability \({\mathscr {k}}\) minimizing its Kullback–Leibler divergence from \({\mathscr {g}}\) under the constraint that \({\mathscr {k}}\) evolves an initial density \(w_{{\mathfrak {0}}}\) into a final density \(w_{{\mathfrak {1}}}\). Mathematically, this is equivalent to find \( {\mathscr {k}} \) as the minimizer of the functional

for \( w_{i} \), \( i={\mathfrak {0}},{\mathfrak {1}} \) and \( {\mathscr {g}} \) given. Of the integrals appearing in \( {\mathscr {A}}\)

-

the integral appearing in the first line of (24) is the Kullback–Leibler divergence between \( {\mathscr {k}} \) and \( {\mathscr {g}} \) averaged with respect to the initial density \( w_{{\mathfrak {0}}} \). When dealing with the Kullback–Leibler divergence between transition probabilities of two Markov processes, we always imply here also averaging with respect to the initial density.

-

The integral having as a prefactor the Lagrange multiplier \(\lambda _{{\mathfrak {1}}}\) enforces \({\mathscr {k}}\) to map the assigned initial density \(w_{{\mathfrak {0}}}\) into \(w_{{\mathfrak {1}}}\);

-

the integral associated with the Lagrange multiplier \(\lambda _{{\mathfrak {0}}}\) enforces \({\mathscr {k}}\) to preserve probability.

In what follows, we denote by \( {\mathscr {k}}^{\prime } \) an arbitrary variation of \( {\mathscr {k}} \) satisfying the constraint

The stationary variation

yields the condition

with solution \({\mathscr {k}}(\varvec{x}_{{\mathfrak {1}}}|\varvec{x}_{{\mathfrak {0}}})= {\mathscr {g}}(\varvec{x}_{{\mathfrak {1}}}|\varvec{x}_{{\mathfrak {0}}}) \,e^{\lambda _{{\mathfrak {1}}}(\varvec{x}_{{\mathfrak {1}}})+\lambda _{{\mathfrak {0}}}(\varvec{x}_{{\mathfrak {0}}})}\). Similarly, arbitrary variations of \( {\mathscr {A}} \) with respect to the Lagrange multipliers yield the self-consistency conditions

and

Finally, upon setting \(\varphi _{{\mathfrak {1}}}(\varvec{x}_{{\mathfrak {1}}})=e^{\lambda _{{\mathfrak {1}}}(\varvec{x}_{{\mathfrak {1}}})}\) and \(w_{{\mathfrak {0}}}(\varvec{x}_{{\mathfrak {0}}})=\varphi _{{\mathfrak {0}}}(\varvec{x}_{{\mathfrak {0}}})\,e^{-\lambda _{{\mathfrak {0}}}(\varvec{x}_{{\mathfrak {0}}})}\) we impose the boundary conditions in the form of Schrödinger’s mass transport equations (13’)

The existence and uniqueness of the pair \( \varphi _{{\mathfrak {0}}}\), \(\varphi _{{\mathfrak {1}}} \) solving (27) was later proven by Robert Fortet [84] and under weaker hypotheses by Arne Beurling [58] and Benton Jamison [89]. Further notable extensions and refinements have then been considered in [25, 54, 55, 83, 104].

4.4 Probability at intermediate times

We now start making explicit use of the Markov property. We identify the reference transition probability density \( {\mathscr {g}} \) with the value at \( t=t_{{\mathfrak {1}}} \), \( s=t_{{\mathfrak {0}}} \) of a two-parameter family of Markov transition probabilities \( {\mathscr {g}}_{t\,s} \). For any t belonging to the closed interval \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \), we then construct a probability density \( w_{t}\) according to the formula (Eq. (14) of Schrodinger)

with

The probability density (28) satisfies the conditions (27) as a consequence of the fact that the transition probability of a Markov process reduces in the limit \( |t-s|\downarrow 0 \) to the kernel of the identity operator

Overall, (28) is the source of what Schrödinger calls “remarkable analogies with quantum mechanics that seem to me worth considering”. Namely, (28) bears a formal resemblance with Born’s rule prescribing that probabilities in Quantum Mechanics must be computed as the modulus squared of a probability amplitude. Furthermore, in Quantum Mechanics, the complex conjugation operation is interpreted as time reversal. Schrödinger’s quote of Eddington’s Gifford lecture remark in § 4 of the paper refers to this fact. In the case of (28), time reversal is encoded in the definitions (29a), (29b) respectively, stating that \( {\bar{h}}_{t}\) evolves as a particular solution of Kolmogorov’s forward equation admitting \( {\mathscr {g}}_{t\,t_{{\mathfrak {0}}}} \) as fundamental solution and that \( h_{t} \) is a harmonic function with respect to \({\mathscr {g}}_{t_{{\mathfrak {1}}\,t}}\): a particular solution of Kolmogorov’s backward equation also admitting \({\mathscr {g}}_{t_{{\mathfrak {1}}}\,t}\) as fundamental solution; see, e.g. [1, 25, 107] for further details.

We now want to show how to directly obtain (28) as the solution of a dynamical optimal control problem [9].

5 Stochastic optimal control problem

5.1 Relation with the pathwise Kullback–Leibler entropy minimization: “dynamic” Schrödinger diffusion problem

We recall that the transition probability of a Markov process \( {\mathscr {k}}_{t\,s} \) obeys for any \(s\,\le \,u\,\le \,t\) the Chapman–Kolmogorov equation (see, e.g. [107]):

The Kullback–Leibler between transition probability \( {\mathscr {k}} \) and reference transition probability \( {\mathscr {g}} \) is

We notice that the Chapman–Kolmogorov equation allows us to pick an arbitrary \( s_{{\mathfrak {0}}} \) such that \( t_{{\mathfrak {0}}}\,\le \,s_{{\mathfrak {0}}} \,\le \,t_{{\mathfrak {1}}}\), and couch the Kullback–Leibler divergence into the form

where

The observation is useful if we then apply the inequality (23) to the first integral on the right-hand side of (31). After straightforward algebra, we obtain

where now

If we repeat the same steps over an arbitrary partition in \( n+2\,\ge \,3 \) sub-intervals of the time interval \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \), we obtain

Passing to the limit \( n\uparrow \infty \), we may formally write

The right-hand side, if it exists, is the Kullback–Leibler divergence between the probability measure \( \mathrm {P}_{{\mathscr {k}}} \) generated by the Markov process with transition probability \( {\mathscr {k}} \) and initial density \(w_{{\mathfrak {0}}}\) and the probability measure \( \mathrm {P}_{{\mathscr {g}}} \) of the reference process with transition probability \( {\mathscr {g}} \) and initial density \(w_{{\mathfrak {0}}}\). We call \( \mathrm {D}_{\scriptscriptstyle {KL}}(\mathrm {P}_{{\mathscr {k}}}\Vert \mathrm {P}_{{\mathscr {g}}})\) the pathwise Kullback–Leibler divergence.

From the physics side, the inequality (34) has a simple interpretation. The Kullback–Leibler divergence is a relative entropy. If we construe entropy as a quantity counting the relevant number of degrees of freedom, physical intuition suggests that its value increases when measuring the divergence at each instant of time rather than once over the full time interval of the evolution. Most importantly, \( \mathrm {D}_{\scriptscriptstyle {KL}}(\mathrm {P}_{{\mathscr {k}}}\Vert \mathrm {P}_{{\mathscr {g}}})\) admits a direct expression in terms of quantities characterizing the microscopic state of the Markov process as we turn to show in the following section.

5.2 Explicit expression of the pathwise Kullback–Leibler divergence from a microscopic dynamics

From now on, we set the focus on the pathwise Kullback–Leibler divergence \( \mathrm {D}_{\scriptscriptstyle {KL}}(\mathrm {P}_{{\mathscr {k}}}\Vert \mathrm {P}_{{\mathscr {g}}})\). Working with pathwise Kullback–Leibler divergence is natural for non-equilibrium statistical mechanics ([9] and, e.g. [13, 25, 32, 96, 113]) and control theory (see, e.g. [33, 59, 66, 71, 78]) applications precisely because of the existence of a direct link with the microscopic dynamics. The naturalness of this concept is the reason why \( \mathrm {D}_{\scriptscriptstyle {KL}}(\mathrm {P}_{{\mathscr {k}}}\Vert \mathrm {P}_{{\mathscr {g}}})\) is often referred to in the statistical physics literature without the further specification of “pathwise”.

In order to determine the explicit expression of the limit of (33) as the mesh of the partition of \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \) goes to zero, we note that the probability measures of \( \mathrm {P}_{{\mathscr {k}}} \) and \( \mathrm {P}_{{\mathscr {g}}} \) coincide with the path measures of Itô stochastic differential equations of the form

where \( \left\{ \varvec{\omega }_{t} \right\} _{t\,\in \,[t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}]} \) is a Wiener process. The drift in (35a) is the sum of two vector fields \(\varvec{b}\,,\varvec{u}:[t_{{\mathfrak {0}}},t_{{\mathfrak {1}}}]\,\times \,{\mathbb {R}}^{d}\mapsto {\mathbb {R}}^{d}\), the second of which, \( \varvec{u} \), called the control, we take to be identically vanishing in the reference case. The diffusion amplitude \({\mathsf {a}}\) is a position-dependent strictly positive definite matrix \({\mathsf {a}}_{t}:[t_{{\mathfrak {0}}},t_{{\mathfrak {1}}}]\,\times \,{\mathbb {R}}^{d}\mapsto {\mathbb {R}}^{d^{2}}\) related to the diffusion matrix by \({\text {A}}_{t}(\varvec{x})=({\mathsf {a}}_{t} {\mathsf {a}}_{t}^{\top })(\varvec{x}).\) The Itô differential equation (35) representation of the dynamics provides an explicit expression of the transition probability in any infinitesimal interval belonging to a partition of \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \) (see, e.g. chapter 5 of [110]):

Here, we use the notation \(\Vert \varvec{v}\Vert _{{\mathsf {A}}^{-1}}^{2}=\langle \varvec{v}\,,{\mathsf {A}}^{-1}\varvec{v}\rangle _{{\mathbb {R}}^{d}}\) relating the squared norm with metric \( {\text {A}}^{-1} \) of a vector to the inner product in \( {\mathbb {R}}^{d} \), and \(\tau _{i}=t_{i+1}-t_{i}\). The short-time expression of the transition probability immediately implies

On each sub-interval, the integral over the variable \(\varvec{x}_{i+1}\) is Gaussian and after an obvious change of variables becomes

Passing to the limit, we finally arrive at

with \( w_{t}\left( \varvec{x}\right) \) evolving according to (32).

Remark

A further generalization is obtained if we take as reference process the system of Itô stochastic differential equations

where \(V:{\mathbb {R}}^{d}\times [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}]\mapsto {\mathbb {R}}_{+}\). The reference pseudo-transition probability density admits the short-time representation

In such a case, we obtain the functional

We refer to the stochastic mechanics literature (see, e.g. [1, 24, 25, 38] and references therein) for a rigorous derivation and physical interpretation of this result.

5.3 Infinite dimensional optimal control problem

The optimal control problem associated with (37) is: once the probability densities \( w_{{\mathfrak {0}}} \) and \( w_{{\mathfrak {1}}} \) are assigned at the boundaries of the control interval \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \), find the vector field \( \varvec{u} \) in (35a) such that \( w_{{\mathfrak {0}}} \) evolves into \( w_{{\mathfrak {1}}} \) whilst minimizing the Kullback–Leibler divergence (37). To the best of our knowledge, this formulation of Schrödinger’s problem is due to Hans Föllmer [83].

One way to derive the optimal control equations in analogy with what is done in the finite dimensional case (24) is to apply the method of the so-called adjoint equation, well known in statistical hydrodynamics [114, 115]. The idea is to reformulate optimal control as a variational problem for an action functional whereby the dynamics are imposed by means of Lagrange multipliers. We may conceptualize the adjoint equation method as an extension of Pontryagin’s principle (see, e.g. [100]) to stochastic dynamics in analogy with Jean-Michel Bismut’s treatment of stochastic variational calculus ([60] see also [92]). We refer to [9] for a mathematically rigorous treatment of the optimal control problem, whereas [57] provides an overview on optimal control in general and targeted at the physics audience.

5.3.1 The adjoint equation formulation of optimal control

In order to illustrate the adjoint equation method, we recall that the mean forward derivative of a test scalar function f along the paths of (35) is

The mean forward derivative is thus specified by the action of a differential operator \( {\text {L}}\), called the generator, on test scalar functions [110]. The expression of mean forward derivative along the paths of the reference process is readily seen by setting \( \varvec{u}_{t}=0 \) in the foregoing definition (39). The adjoint equation method consists of determining the optimal control equations by imposing that the functional

be stationary with respect to the fields J, w and \( \varvec{u} \). To justify (40), we observe that the field J plays the role of a Lagrange multiplier enforcing the evolution law that the probability density \( w_{t} \) must obey in \( [t_{{\mathfrak {0}}}\,t_{{\mathfrak {1}}}] \). Namely, an integration by parts over position variables defines the adjoint \( {\text {L}}^{\dagger } \) of the generator \( {\text {L}} \)

Similarly, an integration by parts with respect to the time variable brings about the identity

which allows us to couch (40) into the equivalent form

The role of J as Lagrange multiplier thus becomes manifest. At variance with the generator \( {\text {L}} \), the adjoint \( {\text {L}}^{\dagger } \) is not in general a differential operator as it instead depends upon the boundary conditions imposed on the stochastic process (see, e.g. [110]). This is ultimately the general reason for preferring (40) over (41) in the formulation of the adjoint equation method. Here, however, we always consider probabilities decaying sufficiently rapidly at infinity in the Euclidean space \( {\mathbb {R}}^{d} \). As a consequence, we are entitled to identify \({\text {L}}^{\dagger } \) with the differential operator specifying the Fokker–Planck equation governing the evolution of \( w_{t} \).

5.3.2 Optimal control equations

The action functional (40) is stationary if the fields satisfy

We recognize that (42a) is the Fokker–Planck equation and (42b) the dynamic programming equation of control theory. The stationary condition occasions the identification of the Lagrange multiplier with the value function of optimal control theory [100]. Finally, equation (42c) relates the stationary value of the so far unknown vector field \(\varvec{u}_{t}\) to the solution of the dynamic programming equation (42b).

5.4 Solution of the optimal control equations

Once we insert (42c) into (42b), we obtain the Hamilton–Jacobi–Bellman equation

The logarithmic transform

then maps the Hamilton–Jacobi–Bellman (43) into a backward Kolmogorov equation with respect to the reference process [9]:

In other words, the function \( h_{t}\) is \( {\mathscr {g}} \)-harmonic. The knowledge of the \( {\mathscr {g}} \)-harmonic function \( h_{t} \) allows us to determine via (42c) (44) the value of the optimal control:

Next, a direct calculation proves that the transition probabilities of the optimal control and reference process are linked by Doob’s transform of the transition probability [77], which yields

The solution of the optimal control problem is thus fully specified if we determine the boundary conditions for \( h_{t} \) from the solution of Schrödinger’s mass transport problem (27), where we now write

The definition of transition probability density implies that the probability density of the optimal control process evolves from \( t_{{\mathfrak {0}}} \) as

Upon inserting (46) in the above expression, we obtain

We thus recover the Born-like representation (28) of the probability density. In particular, the function \( {\bar{h}}_{t} \) defined in (29a) satisfies by construction the forward Kolmogorov equation with respect to the reference process

In summary, we have shown that the problem posed and, modulo technical refinements, solved by Schrödinger is the optimal control problem of finding the diffusion process interpolating between two target states whilst minimizing the Kullback–Leibler divergence from a reference uncontrolled process. We refer to [9] see also [1, 5, 22, 97,98,99] for further mathematical details.

5.5 Connection with the Schrödinger equation

The factorization of the interpolating probability (47) admits a suggestive rewriting which further exhibits formal analogies with quantum mechanics. Namely, if we introduce the complex “wave function”

then Born’s rule takes the expression familiar in quantum mechanics:

We emphasize that in (49) amplitude and phase factors are well defined as \( h_{t} \) and \( {\bar{h}}_{t} \) are positive definite. Furthermore, the result of a tedious but conceptually straightforward calculation using Kolmogorov’s forward (48a) and backward (45) equations and \(A_{t}(\varvec{x})=\frac{\hbar }{m}\) shows that the wave function (49) satisfies

where

We thus recognize that the equation governing the wave function evolution is a nonlinear Schrödinger equation for a particle in an electromagnetic field. Furthermore, had we taken as starting point the optimal control problem (38) then the linear potential term \( V_{t}(\varvec{x}) \) would appear on the right-hand side of (50). This latter observation is meant to emphasize that we can adapt the formulation of Schrödinger’s mass transport to recover all terms entering the most general form of Schrödinger’s equation in Quantum Mechanics. The deep discrepancies between Schrödinger’s mass transport problem and Quantum Mechanics are encapsulated in the nonlinear “Madelung-de Broglie” nonlinear potential (51) [73, 101]. A further discrepancy with ordinary Quantum Mechanics is the existence of a kinematic equation for the position process which we can straightforwardly derive by inserting the optimal value of the control into (35a):

Most of the physics literature inspired by Schrödinger’s paper have investigated the analogies between classical stochastic processes and quantum mechanics. It is impossible to give a fair account of this literature within this short commentary. We therefore restrict ourselves to a few observations that, we hope, might serve as an invitation to the existing excellent literature.

Whilst Schrödinger and, as mentioned in the introduction, Fürth [12] (see also [31]) uncover classical probabilistic analogues of quantum mechanics, the perspective of Fényes, [11] and Nelson [26, 27] is somewhat reversed as they try to reformulate quantum mechanics as a classical probabilistic theory. The aim is to show, in the words of Fényes [11], that “wave mechanics processes are special Markov processes” and that “the problem of the ‘hidden parameters’ can also be solved in quantum mechanics using the principle of causality” thus arriving at a “statistical derivation of the Schrödinger equation”. We refer to [86] for criticism (see [62] for a reply) and to [10] (see also [1, 25, 61]) for a state-of-the-art overview of this ambitious and controversial program.

Rich in applications, especially in numerical simulations of field theories, are imaginary-time models of finite and infinite dimensional quantum mechanics, which can be also traced back to the ideas put forward by Schrödinger and Fürth. In addition to the applications in optimal stochastic control mentioned in the introduction, it is worth mentioning the stochastic quantization proposed by Giorgi Parisi and Yongshi Wu in [106]. Stochastic quantization regards Euclidean quantum field theory as the equilibrium limit in an extra fictitious time variable of a statistical system coupled to a thermal reservoir. We refer to [72] for a self-contained presentation.

6 Relation with Kolmogorov’s time reversal

We now turn to discuss the implications for Schrödinger’s mass transport equations (27) of the time reversal relations for Markov processes described by Kolmogorov in [14, 15]. Schrödinger’s and Kolmogorov’s work originated a rich literature investigating properties of Markov processes under time reversal (see, e.g. [24, 67, 87]) and consequences for irreversibility in statistical and quantum physics (see, e.g. [7, 91, 96] and [88] for a pedagogic introduction). Without any pretense of completeness, we only highlight here some elementary facts.

6.1 Time reversal for Markov transition probability densities

We start by assuming that we are given

-

the transition probability density \( {\mathscr {g}}_{t\,s} \) of a Markov process for any \( s\,\le \,t\,\in \,[t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \);

-

a particular expression of the probability density of the Markov process \( p_{t} \) evolving from, e.g. \( p_{t_{{\mathfrak {0}}}} \) at time \( t_{{\mathfrak {0}}}\) and strictly positive for all \( t\,\in \,[t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \).

This information allows us to write the joint probability density of the Markov process at any times s and t

Drawing from [14], we then use the joint probability to define a time reversed transition probability associated with the density \( g_{t} \)

or, equivalently,

The time reversed transition probability density \( {\mathscr {g}}_{s\,t}^{(r)}(\varvec{y}|\varvec{x}) \) has the interpretation of specifying the probability density of the event that the process visits the state \( \varvec{y} \) at a previous time s conditional upon the fact that we know that the process is in \( \varvec{x} \) at a subsequent time t. A direct calculation (see, e.g. [25] and also [65, 67, 79, 80]) shows that if \( {\mathscr {g}}_{t\,s} \) and \( p_{s} \) obey the same microscopic dynamics (35a), then \( {\mathscr {g}}_{s\,t}^{(r)} \) satisfies a pair of adjoint Kolmogorov equations

where it now evolves with respect to s backwards in time, i.e. for values of s decreasing from t and

Alternatively, we may resort to the time reversal transformation

so that

in order to associate with \( {\mathscr {g}}_{s\,t}^{(r)} \) a forward process with transition probability density \( \tilde{{\mathscr {g}}}_{s^{\prime }\,t^{\prime }}\) specified by the identity

holding for all \( \varvec{x} \), \( \varvec{y} \). It is then readily verified that inserting \( \tilde{{\mathscr {g}}}_{s^{\prime }\,t^{\prime }} \) in (53) maps the “backward” Kolmogorov pair into the standard pair consisting of a forward Fokker–Planck equation and its adjoint.

6.2 Consequences for Schrödinger’s mass transport—general case

It is instructive to rewrite Schrödinger’s mass transport equations (27) in terms of \( {\mathscr {k}}_{s\,t}^{(r)} \). Some straightforward substitutions yield

where we introduced

Correspondingly, the equations for the \( {\mathscr {g}} \)-harmonic function and its adjoint, Eq. (29), become

We see that the harmonic function and its adjoint exchange roles if we rephrase Schrödinger’s mass transport equations in terms of the reversed transition probability density \(\smash {{\mathscr {g}}_{t\,t_{{\mathfrak {1}}}}^{(r)} }\). The time reversal operation (54) brings about a perhaps more interesting interpretation: we obtain forward dynamics with respect to the transition probability \( \tilde{{\mathscr {g}}}_{s^{\prime }\,t^{\prime }} \), whereas the boundary conditions \( w_{{\mathfrak {0}}} \), \( w_{{\mathfrak {1}}} \) exchange their roles.

6.3 Consequences for Schrödinger’s mass transport—detailed balance

Following § 4 of [14], we now make two further assumptions:

-

the transition probability density of the forward process is invariant under time translations

$$\begin{aligned} {\mathscr {g}}_{t\,s}(\varvec{x}|\varvec{y})= {\mathscr {g}}_{t-s}(\varvec{x}|\varvec{y}) \end{aligned}$$for all \( \varvec{x} \),\( \varvec{y} \);

-

the transition probability density of the forward process admits an invariant density \( p_{\star } \).

The hypotheses imply that the reversed transition probability specified by the invariant measure is then invariant under time translations. This property is inherited by the transition probability encoding the equivalent forward description

As a consequence (52) becomes

A stronger assumption is the detailed balance condition

or, equivalently,

The presence of detailed balance condition translates into an invariance property under time reversal of the solution of Schrödinger’s mass transport equations. More explicitly (55) becomes

Upon contrasting the above pair of equations with the time autonomous version of (27):

we arrive at the conclusion that under the detailed balance hypothesis (58) exchanging the boundary conditions \(w_{{\mathfrak {0}}}\longleftrightarrow w_{{\mathfrak {1}}}\) maps a solution of the Schrödinger mass transport equation (27) into a solution according to the transformation law

Under the same detailed balance hypothesis, we verify that (56) becomes

If we now compare (59) against the time autonomous limit of (29):

we see that the time reversal operation (54), respectively, relates (59a) to (60b) and (59b) to (60a). The interpretation is that exchanging the boundary conditions \(w_{{\mathfrak {0}}}\longleftrightarrow w_{{\mathfrak {1}}}\) occasions the transformation

so that the interpolating density (28) also transforms as

for any \( t\,\in \,[t_{{\mathfrak {0}}},t_{{\mathfrak {1}}}] \). This is an important consequence of the property that Schrödinger calls reversibility. In Schrödinger’s words, “thus the solution does not indicate any time direction. If one exchanges \(w_{0}\) with \(w_{1}\), one obtains precisely the reverse evolution of \(w_{t}(\varvec{x})\) ”. This idea is further highlighted if we couch the forward Kolmogorov equation associated with the solution of the optimal control problem in the form of the mass transport equation

driven by the current velocity [26]

Under time reversal, the current velocity transforms as

This means that the probability density is transported, in Schrödinger’s words, by a “diffusion current, which almost always almost exactly flowed in the direction of the concentration gradient (upward and not downward slope)”. It is thus tempting to interpret the discussion of § 6 in Schrödinger’s paper as a precursor of the optimal fluctuation theory later devised by Lars Onsager and Stefan Machlup [105].

7 Schrödinger’s mass transport and Landauer’s bound

Thermodynamic processes at the micro-scale and below occur in highly fluctuating environments [32, 111, 113]. The recognition of this fact has driven the effort to extend thermodynamics to encompass closed and open systems evolving far from thermal equilibrium. The investigation of fluctuation relations originating with the numerical observations of Denis Evans, Ezechiel Godert David Cohen and Gary Morriss [82] and their theoretical explanation by Giovanni Gallavotti and Ezechiel Godert David Cohen [85] played a pivotal role in this direction. Fluctuation relations are robust identities governing the statistics of thermodynamic quantifiers of the state of physical systems in and out thermal equilibrium. A major implication of the existence of fluctuation relations is the extension of the Second Law of thermodynamics in order to properly take into account positive as well as negative fluctuations of the entropy production [70, 90]. This extension can be completely achieved when open systems are modelled by means of finite-dimensional Langevin dynamics [21, 91, 95, 96]. In this latter context, a unified derivation of known fluctuation relations follows from comparing in a mathematically rigorous manner how different choices of time-reversal transformations affect given forward stochastic dynamics [7].

7.1 Elementary Langevin stochastic thermodynamics

For instance, suppose that the physical system is described by a Markov process \( \left\{ \varvec{\chi }_{t} \right\} _{t\,\ge \,0} \) taking values in a Euclidean space of dimension \(2\,d\) and solution of

Here, \(H_{t}\) is a scalar, possibly time dependent, function called the Hamiltonian, and \( {\mathsf {J}} \) and \( {\mathsf {S}} \) are an antisymmetric and a symmetric positive definite matrix, respectively. The drift in (62) is the sum of \( {\mathsf {J}}\partial _{\varvec{x}}H_{t} \), an incompressible component, and a gradient \( -{\mathsf {S}}\partial _{\varvec{x}}H_{t} \). If the Hamiltonian is time independent \( H_{t}\,\equiv \,H \), the incompressible component preserves H and for this reason it is referred to as the “conservative” component also in the general case. Similarly, the gradient component is referred to as the “dissipative” component as in the time autonomous case it drives the solution towards the minimum of H (if it exists). The Wiener differential \(\mathrm {d}\varvec{w}_{t}\) in (62) models thermal exchanges between the system and an infinite environment at temperature \(\beta ^{-1}\).

The kinematics of (62) are chosen such to satisfy the Einstein relation [7]. This means that under additional hypotheses on the Hamiltonian (e.g. time independent, confining) the probability measure generated by (62) converges for large times towards a unique Boltzmann equilibrium:

More generally for any finite time interval \( [t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \), we may interpret the Stratonovich stochastic differential (see, e.g. [110])

as a stochastic embodiment of the first law of thermodynamics [112]. In particular, we identify

as the heat released by an individual realization of the system evolution for \( t\,\in \,[t_{{\mathfrak {0}}}\,,t_{{\mathfrak {1}}}] \). A straightforward application of stochastic calculus then shows that the expectation value of the heat can always be couched into the form (see, e.g. [13, 56])

where \( p_{t} \) is the probability density of states of the system at time t. Of the two terms on the right-hand side, the first one is a non-sign-definite time-boundary term. It coincides with minus the variation of the Gibbs–Shannon entropy

From the thermodynamic point of view, we interpret it as measuring the system entropy variation in consequence of the transition. The second term on the right-hand side is positive definite and vanishes identically only at equilibrium. Already these elementary phenomenological considerations suggest the interpretation of the second term as the average entropy production during the thermodynamic transition. The analysis of the fluctuation relation between the probability measure of the process (62) and that of the process constructed by treating the dissipative and conservative components of the drift as, respectively, even and odd under the physically natural choice of time-reversal transformation ultimately validates the identification. The result of the analysis [7, 21] is the identity

proving that the positive definite component in the average heat coincides with Kullback–Leibler between the measure of forward process \( \mathrm {P} \) generated by (62) and the image-measure \( \mathrm {P}^{(r)}\circ R \) by path reversal R [7, 64] of the time-reversed process \( \mathrm {P}^{(r)}\). Combining (64) with the observation that the change of entropy of environment is related to the average heat release, we arrive at the expression of the average value of the Second Law in the framework of Langevin thermodynamics:

The total entropy production during an arbitrary transition can only increase. Conversely, only transitions governed by a probability measure invariant under time-reversal do not occasion an increase in the total entropy.

7.2 Landauer’s principle in the context of Langevin dynamics

Once expressed in the form (65), the Second Law of stochastic thermodynamics is closely related to the Landauer’s principle [17]. The principle states that the erasure of one bit of information performed in a thermal environment produces on average a heat release no smaller than \( \beta ^{-1}\,\ln 2 \). The bound is obtained in the quasi-static limit. The existence of a strictly positive lower bound to the heat release during erasure indicates a fundamental minimum cost that must be paid to run any computing process. It is, however, worth emphasizing here that thermodynamic processes in fluctuating thermal environments are described by stochastic quantities. Hence, a probabilistic formulation of Landauer’s principle other than existence on average may play an important role to determine the actual fundamental cost of computing [76]. Nevertheless, a natural question to ask concerns corrections to the \( \beta ^{-1}\,\ln 2 \) heat release estimate occasioned by a finite-time transition [56]. In the context of Langevin thermodynamics, the corresponding mathematical problem is pictorially described in Fig. 2. A stored bit of information is modelled by a probability density at time \( t_{{\mathfrak {0}}}\) having a single sharp maximum for instance to the right of the origin. Finite-time erasure consists of steering the probability density so that at time \( t_{{\mathfrak {1}}}\) it acquires two symmetric maxima around the origin. The physically relevant cost to minimize with respect to the drift is the Kullback–Leibler divergence on the right-hand side of (65). Conceptually, the resulting optimal control problem is very close to the one leading to Schrödinger’s mass transport. The important difference resides in the definition of the cost. In Schrödinger’s case, the cost of a transition depends upon the, in principle arbitrary, choice of the reference process. This arbitrariness is not present in the stochastic thermodynamics formulation of Landauer’s principle. The Kullback–Leibler divergence appearing in the Second Law of thermodynamics (64) is fully specified in terms of the drift and diffusion of the control process. Furthermore, it has the interpretation of total entropy production during a thermodynamic transition and it only vanishes at equilibrium. This fact becomes especially evident in the absence of a conservative component in the drift of (62) (\( {\mathsf {J}}=0 \)) and if \( {\mathsf {S}} \) is strictly positive definite. In such a case, the Kullback–Leibler divergence (64) admits the simple expression

where as before \( w_{t} \) is the probability density of the forward process and the vector field \( \varvec{v}_{t}\) is the current velocity (61)

At equilibrium, the current velocity vanishes and no steering between distinct states is possible. We can instead naturally rephrase the optimization problem using the current velocity as control to minimize the erasure cost. As a consequence [56], the optimal control equations for the mean average dissipation in a thermodynamic transition coincide with those of a classical optimal mass transport [117]. Furthermore, Schrödinger’s mass transport problem with reference process a free diffusion (\( \varvec{b}_{t}=0 \) in (43)) coincides with a viscous regularization of the classical mass transport [102]. Hence, the conceptual proximity between the two optimal control process becomes in this special case a quantitative relation. More generally, the same quantitative relation holds for micro-scale processes described by the Langevin–Smoluchowski, overdamped, approximation of (62) [56].

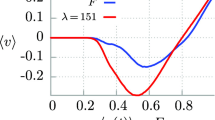

Pictorial description of a Schrödinger diffusion problem corresponding to the erasure of one bit of memory. According to Landauer’s principle, only logically irreversible operations are in principle thermodynamically irreversible, i.e. correspond to dissipative processes. Since other logical operations can be implemented reversibly, erasure would therefore be the only irreversible operation in the thermodynamics of computation. The controlled Markovian dynamics embodying a physically meaningful realization of the principle corresponds then to the minimizer of an adapted thermodynamic quantity. We discuss in Sect. 4 below the relation between the Kullback–Leibler divergence considered by Schrödinger and that entering the currently accepted formulation of the principle

The general interest of a quantitative relation between the optimal control problems associated with Schrödinger’s mass transport and Landauer’s principle is motivated by the following considerations. Ongoing experiments, e.g. [8], are pushing towards a better understanding of the cost of information processing by nano-scale machines, natural or artificial. At the nano-scale, inertial interactions cannot be neglected. In such a case, even highly stylized models of the dynamics neglecting quantum effects require the use of the full-fledged Langevin–Kramers dynamics [93, 118]. A direct quantitative correspondence with Schrödinger’s stochastic optimal control problem is no longer immediately evident. Nevertheless, the Langevin–Smoluchowski limit remains a stepping stone for analytical investigations based on multiscale perturbation theory [103]. In addition, the solution of Landauer’s optimal control problem in the Langevin–Smoluchowski limit is also the basis for the analysis of erasure when the bit of information is conceptualized as a macro-state specified by coarse graining the measure of a physical system with microscopic dynamics governed by (62) [108]. Thus, in a broad sense, Schrödinger’s vision of an optimal stochastic mass transport problem between target states offers a powerful theoretical framework for conceptualizing transitions in stochastic thermodynamics.

8 Conclusion

Schrödinger’s 1931 paper “On the Reversal of the Laws of Nature” appeared amid the debate on the interpretation of quantum mechanics. The paper triggered manifold developments of the theory of stochastic processes, directly or indirectly related to the still ongoing effort to shed light on the connections and the physical origins of the differences between classical statistical physics and probability theory on one side and quantum mechanics on the other. The advent of micro- and nano-scale technology in the last decades has made urgent the need for a deeper understanding of thermodynamic processes which occur in finite time and involve physical quantities described by inherently fluctuating quantities. Forging a theoretical framework adapted to match this challenge is a task that a significant part of the community working in theoretical and mathematical physics has endeavored to undertake. In our attempt to contribute to this collective effort, we found inspiration and guidance in reading, possibly from a slightly novel perspective, Schrödinger’s “On the Reversal of the Laws of Nature”. We therefore decided to offer our translation and commentary of “On the Reversal of the Laws of Nature” with the hope that, as it was for us, other colleagues may find in it a source of ideas to open new paths both in their research and teaching activity.

References

R. Aebi. Schrödinger Diffusion Processes. Probability and its applications. Birkhäuser, 1996.

R. Aebi. Schrödinger’s time-reversal of natural laws. The Mathematical Intelligencer, 18:62–67, 1996.

S. N. Bernstein. Sur les liaisons entre les grandeurs aléatoires. Verhandlungen des Internationalen Mathematiker-Kongresses Zürich, 1:288–309, 1932.

A. Bérut, A. Arakelyan, A. Petrosyan, S. Ciliberto, R. Dillenschneider, and E. Lutz. Experimental verification of Landauer’s principle linking information and thermodynamics. Nature, 483:187–189, 2012.

A. Blaquière. Controllability of a Fokker-Planck equation, the Schrödinger system, and a related stochastic optimal control (revised version). Dynamics and Control, 2(3):235–253, 1992.

Y. Chen, T. T. Georgiou, and M. Pavon. On the Relation Between Optimal Transport and Schrödinger Bridges: A Stochastic Control Viewpoint. Journal of Optimization Theory and Applications, 169(2):671–691, 2016.

R. Chetrite and K. Gawȩdzki. Fluctuation relations for diffusion processes. Communications in Mathematical Physics, 282(2):469–518, 2008.

S. Dago, J. Pereda, N. Barros, S. Ciliberto, and L. Bellon. Information and thermodynamics: fast and precise approach to Landauer’s bound in an underdamped micro-mechanical oscillator. Physical Review Letters, 126:170601, 2021.

P. Dai Pra. A stochastic control approach to reciprocal diffusion processes. Applied Mathematics and Optimization, 23(1):313–329, 1991.

W. G. Faris, L. Gross, B. Simon, D. C. Brydges, E. Carlen, C. Villani, G. F. Lawler, S. R. Buss, J. Hook, and E. Nelson. Diffusion, Quantum Theory, and Radically Elementary Mathematics, volume 47 of Mathematical Notes. Princeton University Press, 2006.

I. Fényes. Eine wahrscheinlichkeitstheoretische Begründung und Interpretation der Quantenmechanik. Zeitschrift für Physik, 132(1):81–106, 1952.

R. Fürth. Über einige Beziehungen zwischen klassischer Statistik und Quantenmechanik. Zeitschrift für Physik, 81(3-4):143–162, 1933.

K. Gawȩdzki. Fluctuation Relations in Stochastic Thermodynamics. Lecture notes, arXiv:1308.1518, 2013.

A. N. Kolmogorov. Zur Theorie der Markoffschen Ketten. Mathematische Annalen, 112(1):155–160, 1936.

A. N. Kolmogorov. Zur Umkehrbarkeit der statistischen Naturgesetze. Mathematische Annalen, 113:766–772, 1937.

J. V. Koski, T. Sagawa, O.-P. Saira, Y. Yoon, A. Kutvonen, P. Solinas, M. Möttönen, T. Ala-Nissila, and J. P. Pekola. Distribution of entropy production in a single-electron box. Nature Physics, 9(10):644–648, 2013.

R. Landauer. Irreversibility and heat generation in the computing process. IBM Journal of Research and Development, 5(3):183–191, 1961.

H. S. Leff and A. F. Rex. Maxwell’s demon 2: entropy, classical and quantum information, computing. Institute of Physics Publishing, 2 edition, 2003.